Mastering Kafka: From Basics to Advanced Concepts

Atif Raza


Introduction to Apache Kafka: A Distributed Event Streaming Platform

In today’s fast-paced digital world, businesses generate vast amounts of data that need to be processed, stored, and analyzed in real time. Apache Kafka has emerged as a powerful solution for handling data streams efficiently. In this blog, we will explore what Kafka is, its key components, and why it has become the go-to choice for event-driven architectures.

Kafka is an open-source event streaming platform used to handle real-time data. It helps applications send, receive, store, and process large amounts of messages efficiently. Think of it like a messaging system where data flows smoothly between different systems.

It has three main parts:

Producers (send data)

Brokers (store and distribute data)

Consumers (receive and process data)

Kafka is widely used in banking, e-commerce, and big data systems for real-time analytics, logging, and event-driven applications. 🚀

What is Apache Kafka?

Apache Kafka is an open-source distributed event streaming platform designed to handle real-time data feeds. Originally developed by LinkedIn and later donated to the Apache Software Foundation, Kafka is used for building high-performance, scalable, and fault-tolerant messaging systems.

Kafka enables applications to publish, subscribe to, store, and process event streams in real time. It acts as a distributed message broker that allows different systems to communicate asynchronously with high throughput and low latency.

Core Components of Kafka

Producer: Producers publish (send) messages to Kafka topics.

Broker: Brokers are Kafka servers that store and manage message logs.

Consumer: Consumers read messages from Kafka topics.

Topic: Topics categorize messages and act as logical channels.

Partition: Each topic is divided into partitions for scalability and parallel processing.

Consumer Group: A group of consumers that work together to consume messages from a topic.

ZooKeeper (replaced by KRaft in newer versions): Manages Kafka’s metadata and cluster coordination.

1. Producer

Sends (publishes) messages to Kafka topics.

Pushes data to Kafka brokers asynchronously.

Can write messages to multiple topics at once.

Uses partitioning to distribute messages across multiple brokers.
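To make key-based partitioning concrete, here is a minimal Python sketch of how a producer might map a message key to a partition with a hash-then-modulo scheme. It is an illustration under stated assumptions: Kafka's Java client hashes key bytes with murmur2, while this sketch uses `zlib.crc32` as a stand-in, and `choose_partition` is a hypothetical helper name.

```python
import zlib


def choose_partition(key: str, num_partitions: int) -> int:
    """Map a message key to a partition index (hypothetical helper).

    Kafka's Java client uses murmur2 here; crc32 is only a stand-in.
    The key property is the same: equal keys always land on the same
    partition, which keeps messages for one key in order.
    """
    if num_partitions <= 0:
        raise ValueError("num_partitions must be positive")
    key_hash = zlib.crc32(key.encode("utf-8"))  # deterministic, non-negative
    return key_hash % num_partitions


if __name__ == "__main__":
    for user in ["user-1", "user-2", "user-1", "user-3"]:
        # "user-1" is printed with the same partition both times.
        print(user, "->", choose_partition(user, 3))
```

Because the mapping depends on `num_partitions`, adding partitions to a topic later sends some keys to different partitions than before.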

2. Broker

Kafka server that stores and manages messages.

Handles data from producers and delivers it to consumers.

Kafka clusters typically have multiple brokers for fault tolerance and scalability.

Each broker has a unique ID.

3. Topic

Logical category/feed where messages are published.

Similar to a "table" in a database but for streaming data.

Topics are partitioned to enable parallel processing.

4. Partition

A subset of a topic that helps distribute data across brokers.

Enables horizontal scaling and parallel processing.

Each message in a partition gets a sequential ID, called an offset, that is used to track it.

Example: If a topic has 3 partitions, up to 3 consumers in the same consumer group can read from it in parallel.
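A partition behaves like an append-only log: each new message receives the next offset in that partition, and offsets in different partitions are independent of each other. The `Topic` class below is a hypothetical in-memory model meant only to show this numbering; it is not how Kafka actually stores data on disk.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Topic:
    """Toy in-memory topic: one append-only log per partition."""

    name: str
    num_partitions: int
    partitions: List[List[str]] = field(init=False)

    def __post_init__(self) -> None:
        # Start with one empty log for each partition.
        self.partitions = [[] for _ in range(self.num_partitions)]

    def append(self, partition: int, value: str) -> int:
        """Append a message to a partition and return its offset."""
        log = self.partitions[partition]
        log.append(value)
        return len(log) - 1  # offsets start at 0 within each partition

    def read(self, partition: int, offset: int) -> str:
        """Return the message stored at a given offset."""
        return self.partitions[partition][offset]


if __name__ == "__main__":
    payments = Topic("payments", num_partitions=3)
    print(payments.append(0, "pay-A"))  # 0
    print(payments.append(0, "pay-B"))  # 1
    print(payments.append(1, "pay-C"))  # 0: partition 1 numbers its own messages
```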

5. Consumer

Subscribes to topics and reads messages from them.

Works in consumer groups to distribute the load.

Uses offsets to track which messages have been read.

Can read in real-time or batch mode.

6. Consumer Group

A group of consumers working together to process data.

Within a group, each partition is read by only one consumer, so the consumers split the topic's partitions between them.

Ensures fault tolerance and parallelism.

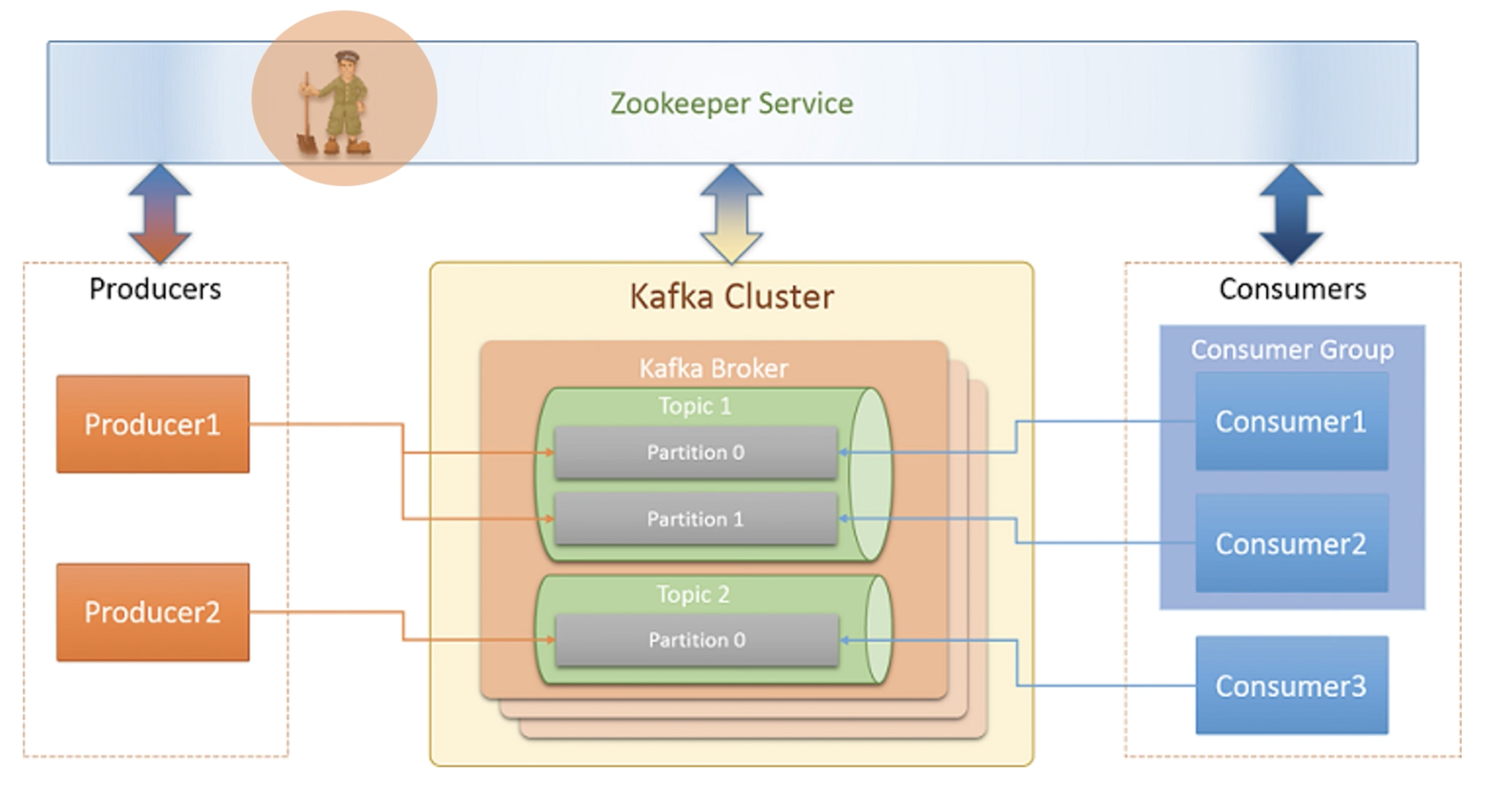

7. ZooKeeper (Used for Kafka Cluster Management)

Manages Kafka brokers and leader elections.

Keeps track of broker metadata (which broker is active).

Stores consumer offsets (in older versions of Kafka).

Starting with version 2.8, Kafka can run without ZooKeeper in KRaft mode (early access at first, production-ready since 3.3); Kafka 4.0 removes ZooKeeper support entirely.

8. Kafka Connect

A tool for integrating Kafka with external systems (databases, cloud, etc.).

Provides pre-built connectors for databases, message queues, and cloud storage.

Example: Connecting Kafka with MySQL, MongoDB, Elasticsearch, AWS S3.

9. Kafka Streams

A stream processing API for real-time data transformation.

Enables processing of Kafka messages as they arrive.

Used for building real-time analytics, ETL pipelines, and event-driven apps.
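Kafka Streams itself is a Java library, so the snippet below does not use its API. It is a plain-Python sketch of the same idea: each record is processed as it arrives, passing through a filter step and a running per-key aggregation. The record shape (`(user, amount)` tuples) and the function name `running_totals` are assumptions made for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, Tuple


def running_totals(
    records: Iterable[Tuple[str, float]], min_amount: float = 0.0
) -> Iterator[Tuple[str, float]]:
    """Emit an updated total per user each time a qualifying payment arrives.

    Mimics a filter -> aggregate topology. The per-key state lives in a
    local dict here; Kafka Streams keeps such state in fault-tolerant
    state stores instead.
    """
    totals: Dict[str, float] = defaultdict(float)
    for user, amount in records:
        if amount < min_amount:  # filter step: drop small payments
            continue
        totals[user] += amount  # aggregate step: update running state
        yield user, totals[user]  # emit the new result downstream


if __name__ == "__main__":
    stream = [("alice", 50.0), ("bob", 5.0), ("alice", 20.0)]
    for update in running_totals(stream, min_amount=10.0):
        print(update)  # ('alice', 50.0), then ('alice', 70.0)
```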

10. Schema Registry (Optional, but Useful)

Stores schemas for messages in Kafka topics.

Ensures data compatibility between producers and consumers.

Works with Apache Avro, Protobuf, or JSON Schema.
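To illustrate what "ensures data compatibility" can mean, here is a deliberately simplified check in the spirit of Avro's backward-compatibility rule: a consumer using a new schema can read data written with the old schema as long as every field the new schema adds has a default value. Real Schema Registry checks also cover type changes, nested records, and other compatibility modes; representing schemas as plain lists of field dicts is an assumption of this sketch, and `is_backward_compatible` is a hypothetical name.

```python
from typing import Any, Dict, List

# Toy schemas shaped like Avro record fields: each field is a dict with a
# "name", a "type", and optionally a "default".
Field = Dict[str, Any]


def is_backward_compatible(old_fields: List[Field], new_fields: List[Field]) -> bool:
    """Simplified check: can a reader using new_fields read old data?

    Fields dropped from the new schema are simply ignored when reading,
    but any field added in the new schema needs a default, because old
    messages do not contain it. Type changes are NOT checked here.
    """
    old_names = {f["name"] for f in old_fields}
    for f in new_fields:
        if f["name"] not in old_names and "default" not in f:
            return False
    return True


if __name__ == "__main__":
    v1 = [{"name": "id", "type": "string"}, {"name": "amount", "type": "double"}]
    v2_ok = v1 + [{"name": "currency", "type": "string", "default": "INR"}]
    v2_bad = v1 + [{"name": "currency", "type": "string"}]
    print(is_backward_compatible(v1, v2_ok))   # True
    print(is_backward_compatible(v1, v2_bad))  # False
```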

How Kafka Works

Producers send messages to specific topics in Kafka.

Kafka brokers store the messages in partitions, ensuring distributed storage.

Consumers read messages from topics either individually or as part of a consumer group.

Kafka ensures message durability by persisting messages and replicating data across multiple brokers.

Why Use Apache Kafka?

Kafka is widely adopted for several reasons:

Scalability: Kafka’s distributed architecture allows it to scale horizontally across multiple nodes.

High Throughput: It can handle millions of messages per second with low latency.

Fault Tolerance: Kafka replicates data across multiple brokers, ensuring durability and reliability.

Decoupling of Services: Kafka enables microservices and event-driven architectures by allowing producers and consumers to communicate asynchronously.

Persistent Storage: Messages are stored durably on disk and can be replayed when needed.

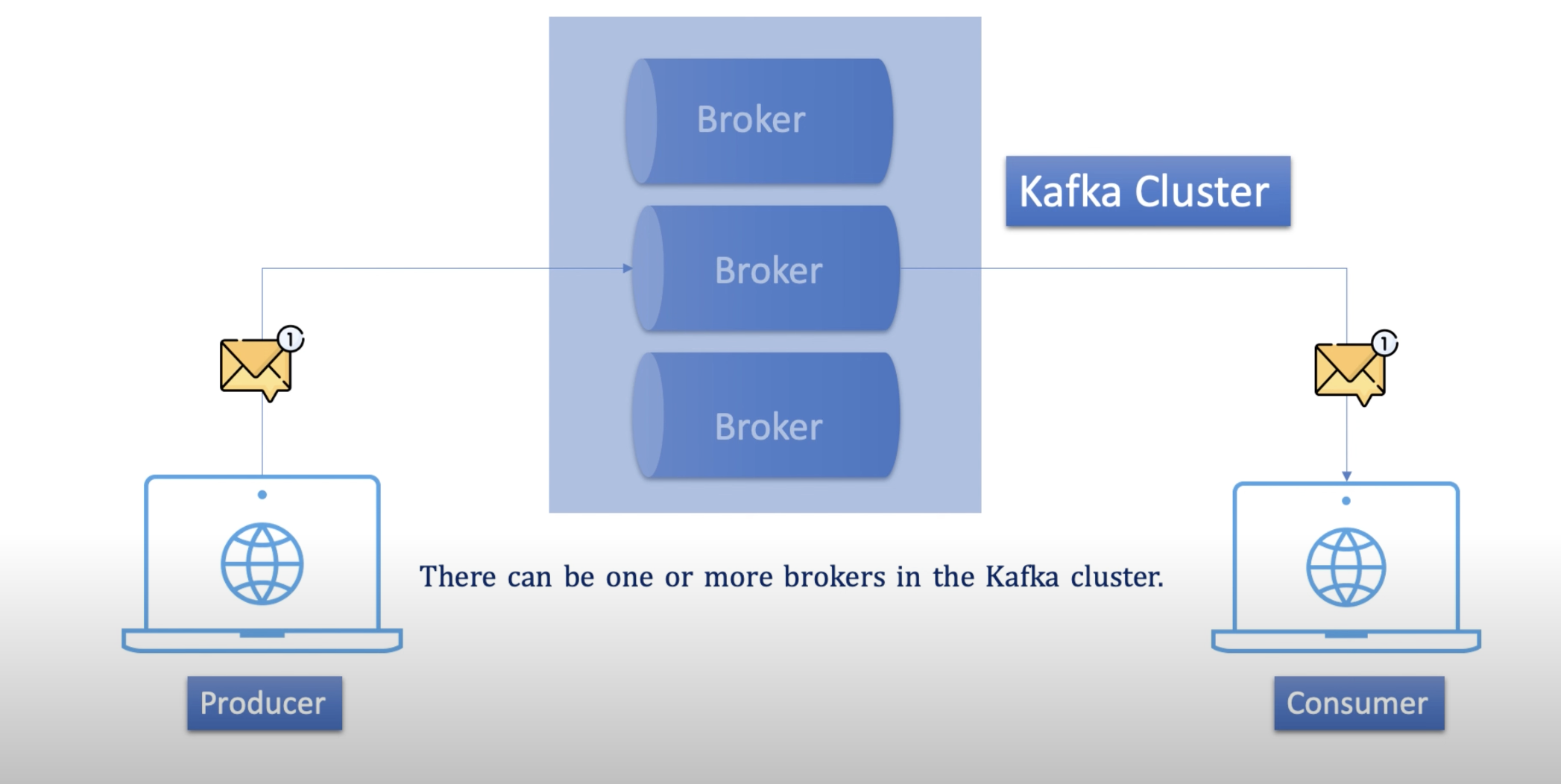

Kafka Cluster

A Kafka Cluster consists of multiple brokers (servers) working together to handle large-scale real-time data streaming. The cluster ensures high availability, fault tolerance, and scalability by distributing data across multiple brokers and partitions.

Kafka Cluster Architecture

A typical Kafka cluster consists of the following components:

ZooKeeper (or KRaft in newer versions): Manages broker metadata, leader election, and cluster coordination.

Kafka Brokers: Servers that store and manage data, handling producer and consumer requests.

Producers: Applications that send data (events/messages) to Kafka topics.

Partitions: Each topic is divided into partitions to enable parallel processing.

Consumers & Consumer Groups: Applications that read and process messages from topics.

Kafka Cluster Workflow

Producers send data to Kafka topics.

Kafka brokers store the messages in partitions, ensuring distributed storage.

ZooKeeper (or KRaft mode) manages cluster health, broker information, and leader elections.

Consumers pull data from partitions, ensuring high throughput and load balancing.

Data replication ensures fault tolerance: if a broker fails, a replica on another broker takes over as the partition leader.
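The failover step can be sketched in a few lines. This hypothetical model tracks one partition's replicas and its in-sync replica (ISR) set; when the leader's broker fails, a surviving in-sync replica is promoted. In a real cluster the controller (ZooKeeper-based or KRaft) performs this election; the class and method names here are illustrative assumptions.

```python
from typing import List


class PartitionReplicas:
    """Toy model of one partition replicated across several brokers."""

    def __init__(self, replicas: List[int]) -> None:
        if not replicas:
            raise ValueError("a partition needs at least one replica")
        self.replicas = list(replicas)  # broker IDs holding a copy
        self.isr = list(replicas)       # replicas currently in sync
        self.leader = replicas[0]       # first replica starts as leader

    def broker_failed(self, broker_id: int) -> None:
        """Drop a failed broker from the ISR and elect a new leader if needed."""
        if broker_id in self.isr:
            self.isr.remove(broker_id)
        if self.leader == broker_id:
            if not self.isr:
                raise RuntimeError("no in-sync replica left to become leader")
            self.leader = self.isr[0]  # promote a surviving in-sync replica


if __name__ == "__main__":
    p = PartitionReplicas([1, 2, 3])  # replication factor 3
    p.broker_failed(1)
    print(p.leader, p.isr)  # 2 [2, 3]
```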

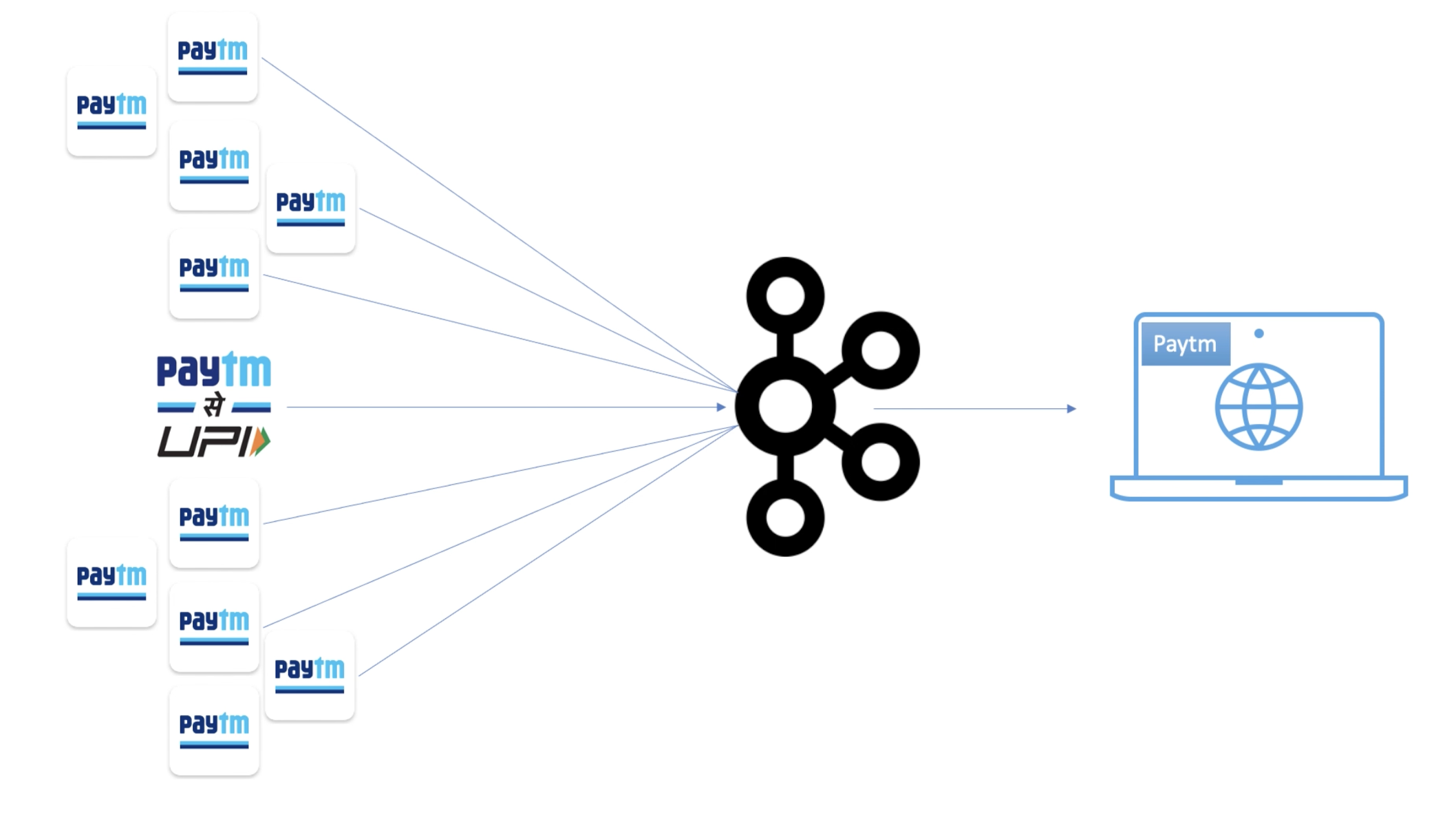

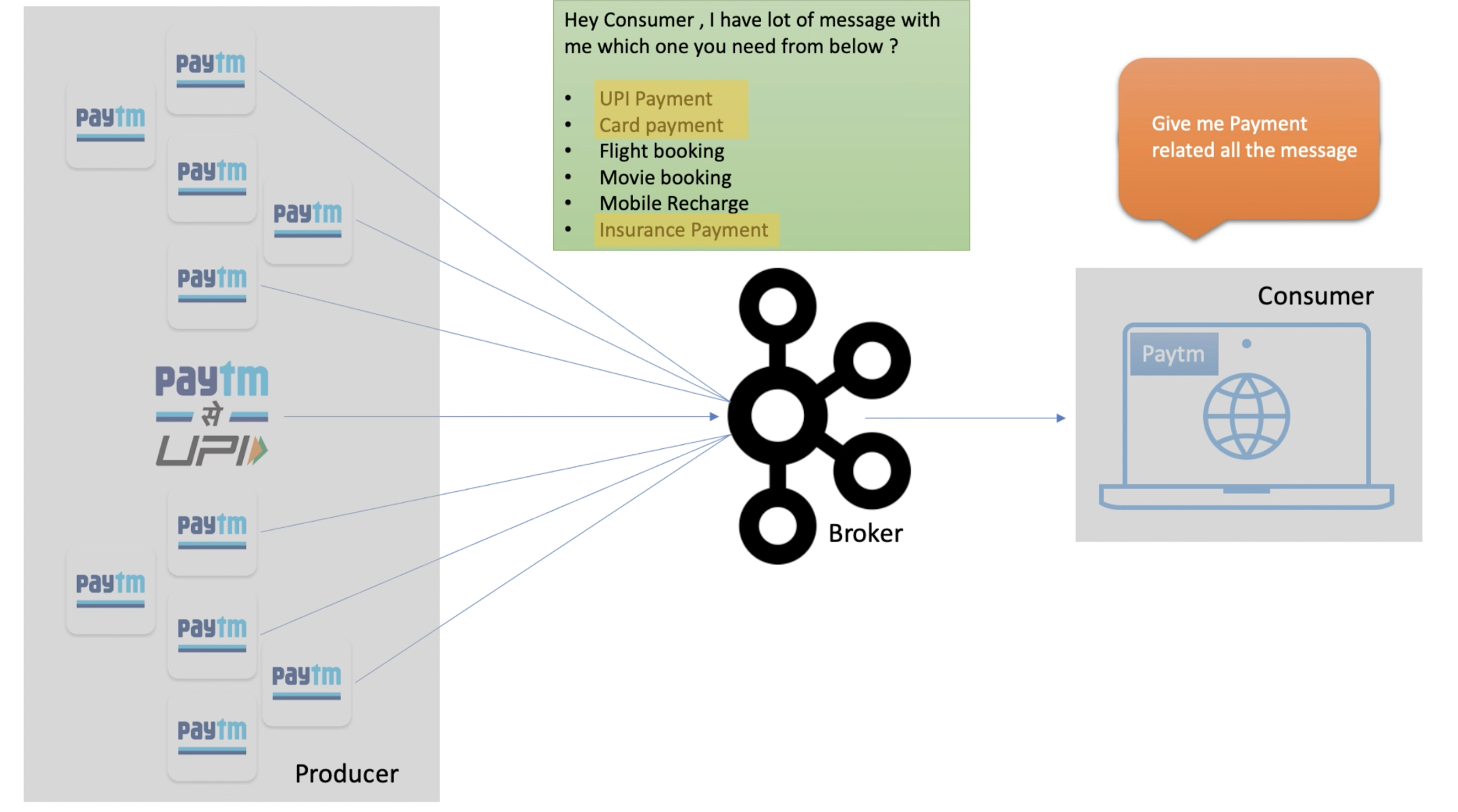

Suppose the consumer needs to read all the messages to process them.

So, the consumer asks the broker:

"Hey broker, give me all the messages you have received."

The broker then checks its storage and replies:

"Hey consumer, I have a lot of messages. Which one do you need?"

Now suppose the consumer needs only payment-specific messages.

So, the consumer asks the broker:

"Hey broker, give me all the payment-specific messages."

The broker responds with a counter-question:

"Hey buddy, I have different types of payment messages. Which one do you need?"

Now, the consumer is stuck because of this back-and-forth questioning. This can be frustrating, right?

So, how can we avoid this issue?

This is where topics come into play. Topics help categorize different types of messages, making it easier for consumers to get only what they need.

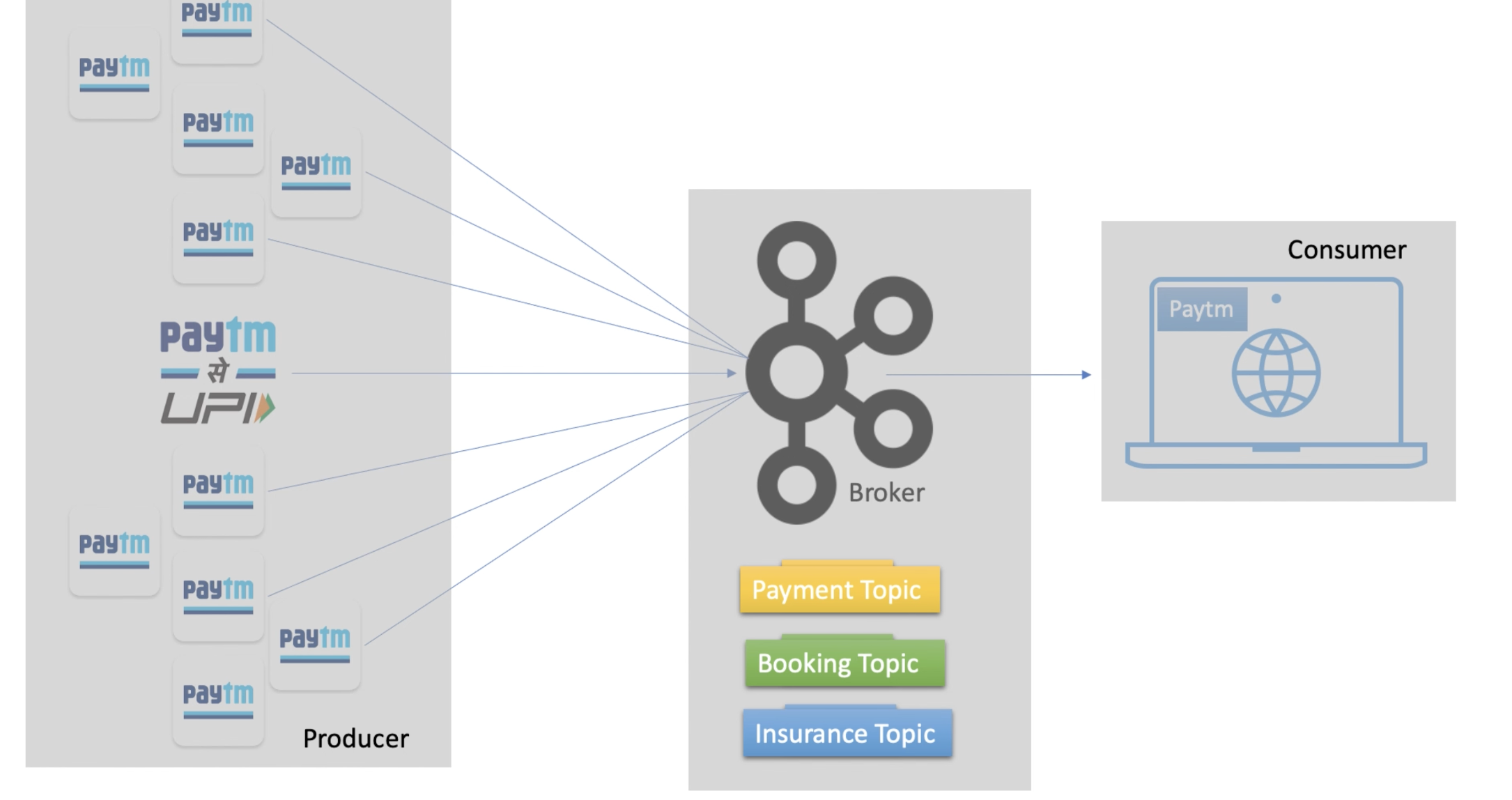

How can topics help?

We can create multiple topics to store different types of messages. For example, we can have:

Payment Topic

Insurance Topic

Now, if a producer is sending payment-specific messages, they will be posted directly to the Payment Topic. This way, consumers can simply subscribe to the Payment Topic and get only payment-related messages—without any unnecessary back-and-forth communication.
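In code, the idea is that the producer picks the topic from the event type, and each consumer reads only the topic it subscribed to. The in-memory `broker` mapping and the `publish`/`consume` helpers below are hypothetical stand-ins for a real Kafka client, used only to show the routing.

```python
from collections import defaultdict
from typing import DefaultDict, List

# Hypothetical in-memory broker: topic name -> list of messages.
Broker = DefaultDict[str, List[dict]]


def publish(broker: Broker, event: dict) -> None:
    """Producer side: route each event to the topic named after its type."""
    topic = event["type"]  # e.g. "payment" or "insurance"
    broker[topic].append(event)


def consume(broker: Broker, topic: str) -> List[dict]:
    """Consumer side: read every message from one subscribed topic."""
    return list(broker.get(topic, []))  # unknown topic -> no messages


if __name__ == "__main__":
    broker: Broker = defaultdict(list)
    publish(broker, {"type": "payment", "amount": 499})
    publish(broker, {"type": "insurance", "policy": "P-17"})
    publish(broker, {"type": "payment", "amount": 120})
    # The payment consumer sees only payment events, with no back-and-forth.
    print(consume(broker, "payment"))
```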

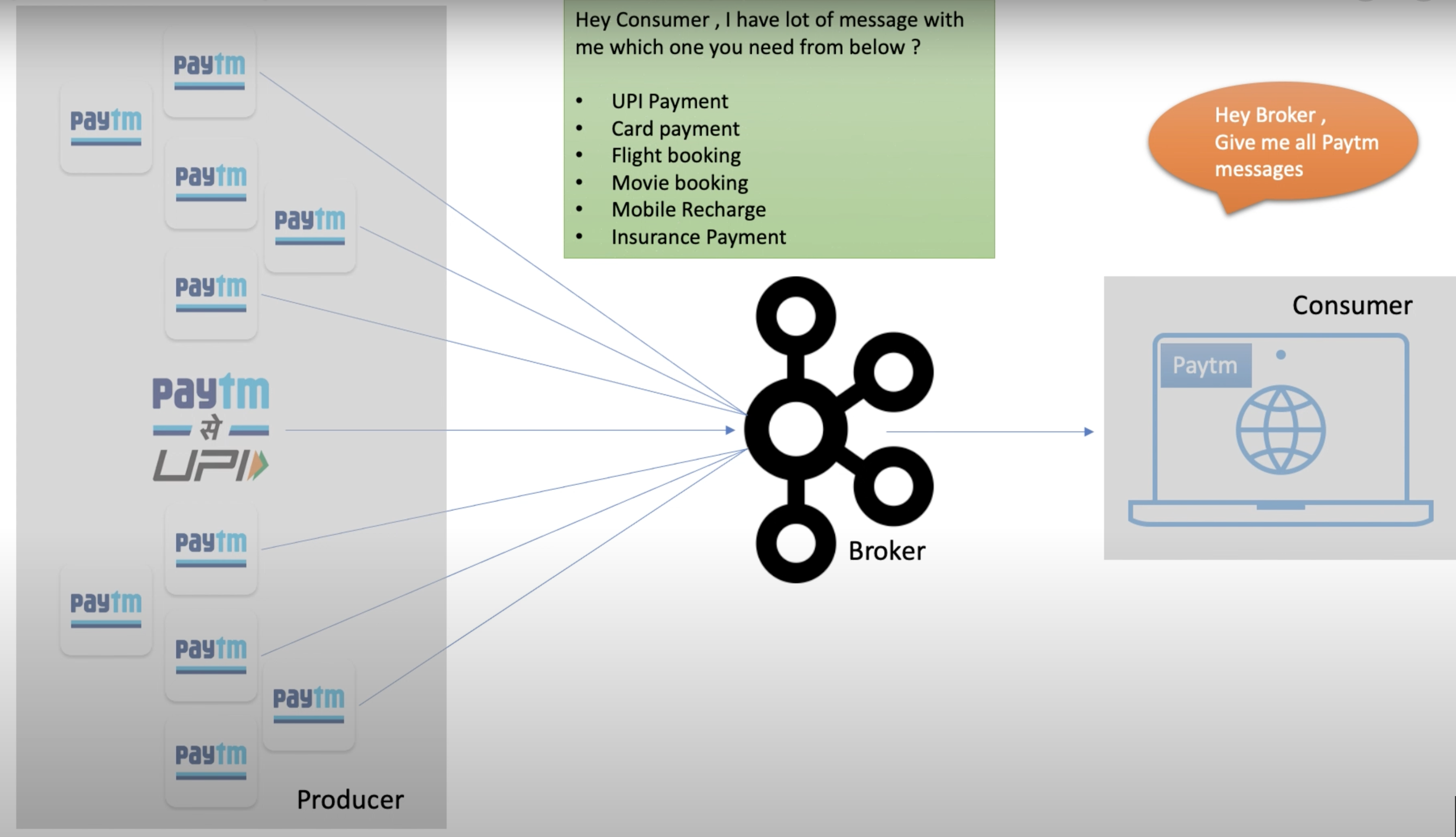

Segregating Different Event Types into Separate Topics in Kafka

In Kafka, you can organize different types of events into separate topics. This allows consumers to subscribe only to the topics relevant to them without needing back-and-forth communication with brokers.

For example, if a consumer only needs payment-related messages, it can subscribe directly to the payment topic instead of asking the broker for payment-specific data. The consumer simply listens to the payment topic and gets all the required messages.

The diagram represents a Kafka-based event streaming architecture where a producer (Paytm) sends different types of events (Payments, Booking, and Insurance) to a Kafka broker, which organizes them into respective topics. A consumer then processes these messages.

Handling Payment Cards and Transaction-Related Data in Kafka

To keep payment and transaction data organized in Kafka, start by giving each type of event its own topic:

Define Kafka Topics for Payments and Transactions

Payment Topic: Handles payment-related events such as card transactions, UPI payments, and wallet transactions.

Booking Topic: Stores booking-related information (e.g., travel or movie tickets).

Insurance Topic: Captures insurance-related data.

Similarly, you can think of a Kafka topic like a database table.

In a database, we store employee information in the employee table and payment information in the payment table.

In Kafka, we follow the same approach. If a producer sends employee-related messages, they go to the employee topic. If it publishes payment-related messages, they go to the payment topic.

With this structure, multiple consumers can efficiently retrieve the data they need:

Consumer 1, who wants employee data, can subscribe to the employee topic instead of asking the broker for employee messages.

Consumer 2, who needs payment information, can subscribe to the payment topic.

This approach ensures better organization, efficient message retrieval, and scalability in the Kafka ecosystem.

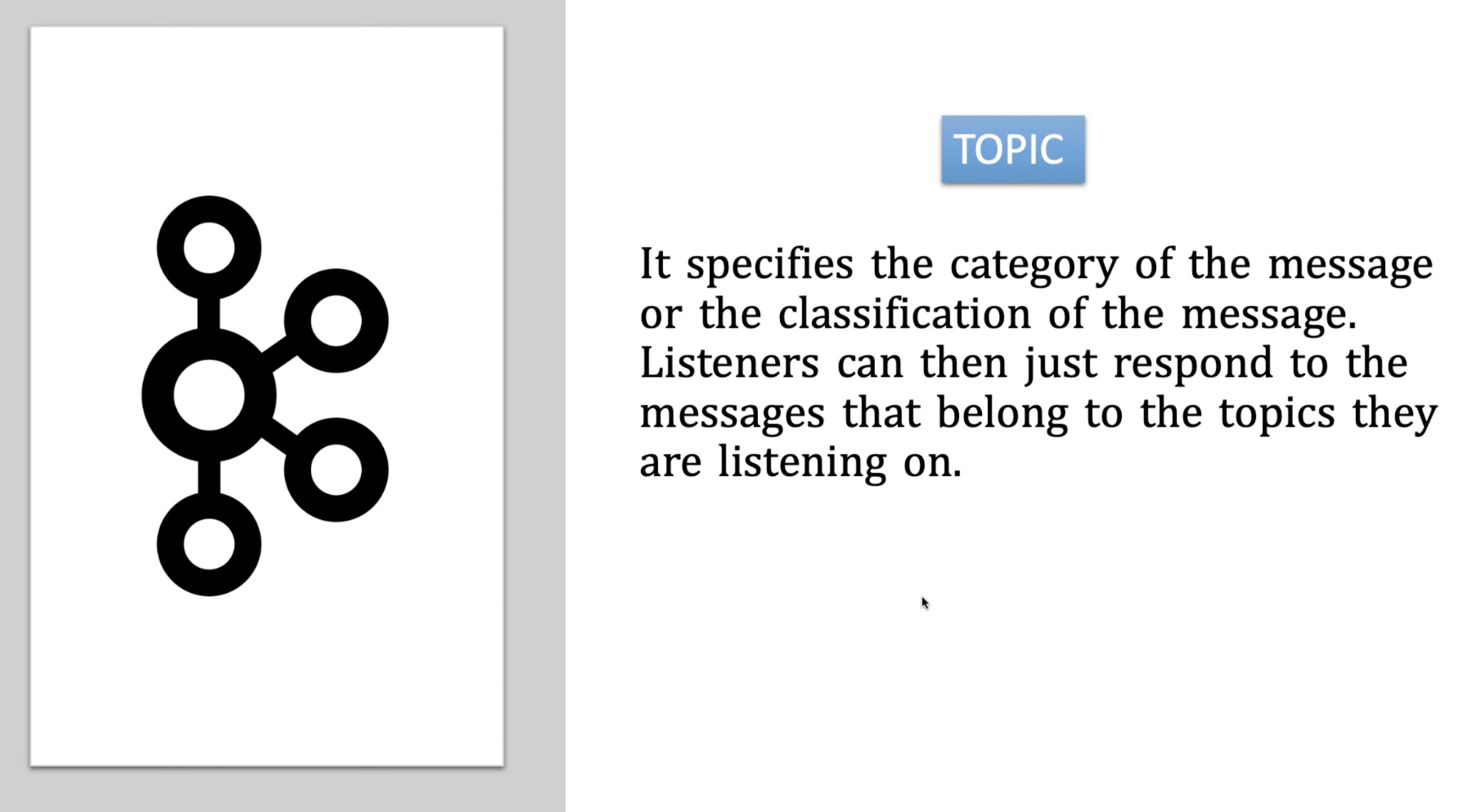

Definition of a Topic in Kafka

A topic in Kafka is used to categorize or classify messages. Consumers (listeners) subscribe to specific topics to receive only the messages relevant to them.

For example, if Consumer 1 needs payment-related information, it can subscribe to the payment topic and receive only payment-related messages.

Topics play a crucial role in the Kafka ecosystem by organizing data efficiently.

Now, let's move on to the next key component: partition.

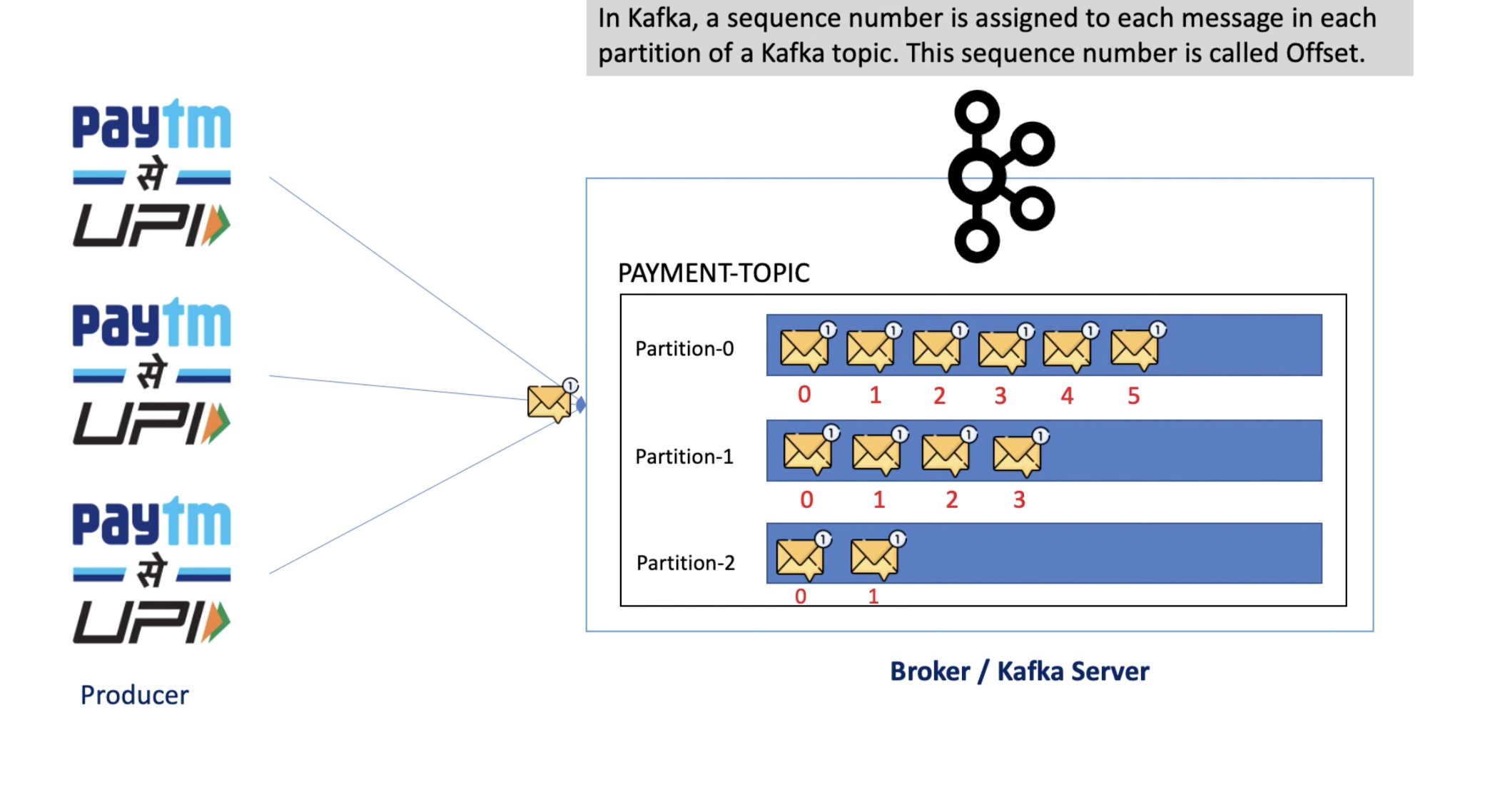

Understanding Kafka Partitioning in Simple Terms

Imagine Paytm is sending a massive amount of data, millions of messages every second. The Kafka broker receives these messages and stores them inside a topic.

Can a single topic, stored on one machine, handle so much data?

No! If all messages are stored in one place (one machine), it can cause storage problems and slow down processing.

What’s the solution?

Since Kafka is a distributed system, we can split the topic into multiple parts and store them across different machines. This process is called topic partitioning, and each part is called a partition.

Why is partitioning important?

Better Performance 🚀 – Messages are stored in multiple partitions, allowing Kafka to handle large amounts of data faster.

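High Availability 🔄 – Partitions are spread across brokers, so if one broker fails, partitions on the other brokers keep working; with replication, even the failed broker's partitions stay available, preventing system downtime.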

Parallel Processing ⚡ – Different partitions can accept messages at the same time, making the system more efficient.

How does it work?

When a producer (like Paytm) sends bulk messages, they get divided across multiple partitions. This improves speed and reliability.

Can we control the number of partitions?

Yes! We set the number of partitions when creating a Kafka topic; it can be increased later but never decreased. More partitions allow more parallelism, but each one adds some overhead, so more is not automatically better.
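For messages without a key, older Kafka producers spread records round-robin across partitions (newer clients use a "sticky" strategy that fills a batch for one partition before switching). The sketch below shows plain round-robin with a hypothetical `RoundRobinPartitioner`; it also shows that the partition count fixes how many "lanes" the load can be spread across.

```python
from collections import Counter
from itertools import cycle
from typing import Iterable, List


class RoundRobinPartitioner:
    """Hypothetical helper: assign keyless messages to partitions in turn."""

    def __init__(self, num_partitions: int) -> None:
        if num_partitions <= 0:
            raise ValueError("num_partitions must be positive")
        self._next = cycle(range(num_partitions))

    def partition(self) -> int:
        return next(self._next)


def spread(messages: Iterable[str], num_partitions: int) -> List[int]:
    """Return the partition chosen for each message, in order."""
    partitioner = RoundRobinPartitioner(num_partitions)
    return [partitioner.partition() for _ in messages]


if __name__ == "__main__":
    assignments = spread([f"msg-{i}" for i in range(9)], num_partitions=3)
    print(assignments)           # [0, 1, 2, 0, 1, 2, 0, 1, 2]
    print(Counter(assignments))  # three messages per partition
```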

Now that we understand partitioning, let’s move to the next concept: offsets! 🔥

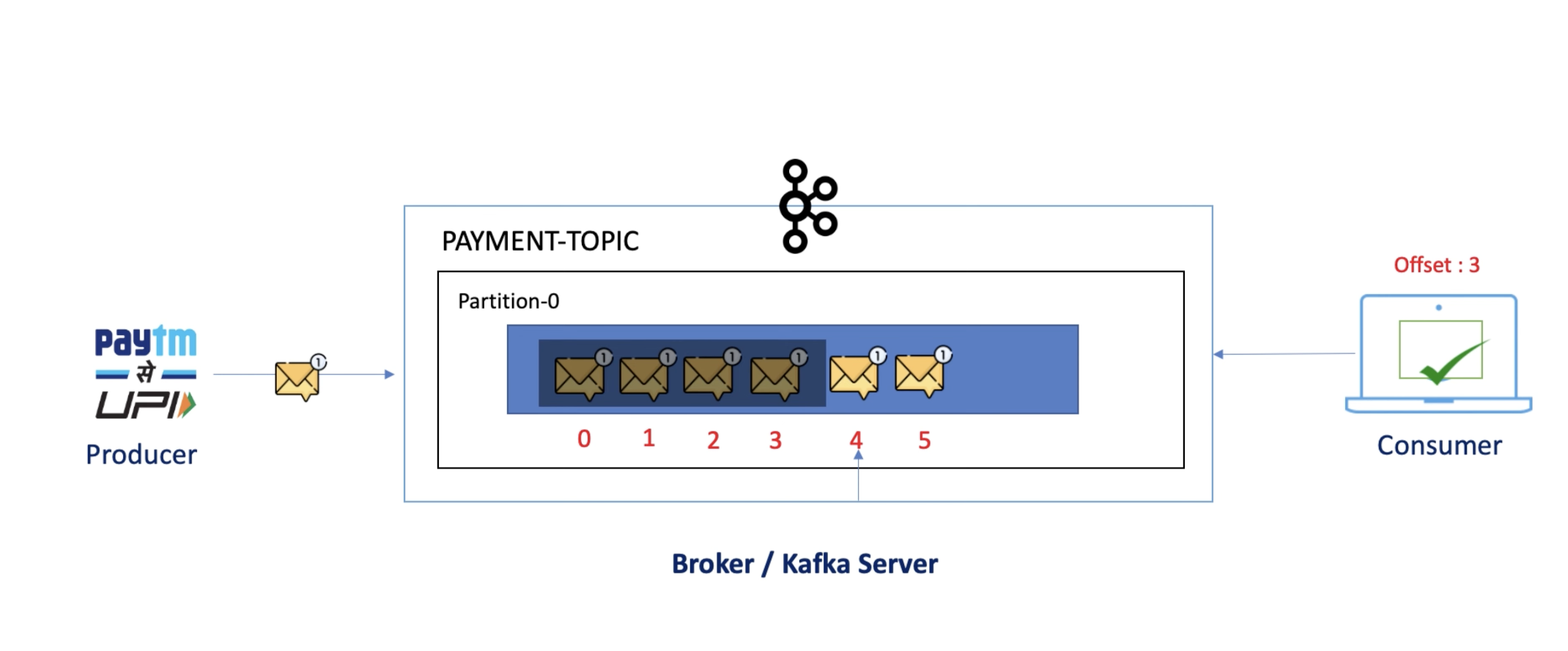

Offset

Kafka Offset (Tracking Messages)

Every message in a Kafka partition gets a unique number called an offset (e.g., 0, 1, 2, 3...). This number marks the message's position in the partition and lets consumers track which messages they have already read.

If a consumer (the system that reads messages) stops working and comes back later, it can continue reading from where it left off instead of starting over. This prevents data loss and ensures smooth processing.

For example, if a consumer has processed messages up to offset 3 and later resumes, it won’t start from 0 again. Instead, it will continue from offset 4, picking up where it left off.
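Resuming works because the consumer stores (commits) the offset of the next message it should read. Below is a minimal sketch, assuming a Python list as the partition and a dict as the committed-offset store; in real Kafka, committed offsets live in the internal `__consumer_offsets` topic, and `poll_and_commit` is a hypothetical helper name.

```python
from typing import Dict, List


def poll_and_commit(log: List[str], committed: Dict[str, int], group: str,
                    max_records: int) -> List[str]:
    """Process up to max_records from the group's position, then commit.

    The committed value is the offset of the *next* message to read, so
    after processing offset 3 the group commits 4.
    """
    start = committed.get(group, 0)  # no commit yet: start at offset 0
    batch = log[start:start + max_records]
    # ... process the batch here ...
    committed[group] = start + len(batch)
    return batch


if __name__ == "__main__":
    log = [f"payment-{i}" for i in range(7)]  # offsets 0..6
    offsets: Dict[str, int] = {}
    print(poll_and_commit(log, offsets, "billing", 4))  # offsets 0-3
    print(offsets["billing"])                           # 4
    # Simulate a restart: the consumer resumes at offset 4, not 0.
    print(poll_and_commit(log, offsets, "billing", 4))  # offsets 4-6
```

Committing after processing, as here, means that a crash in the middle of a batch causes those messages to be processed again on restart (at-least-once delivery).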

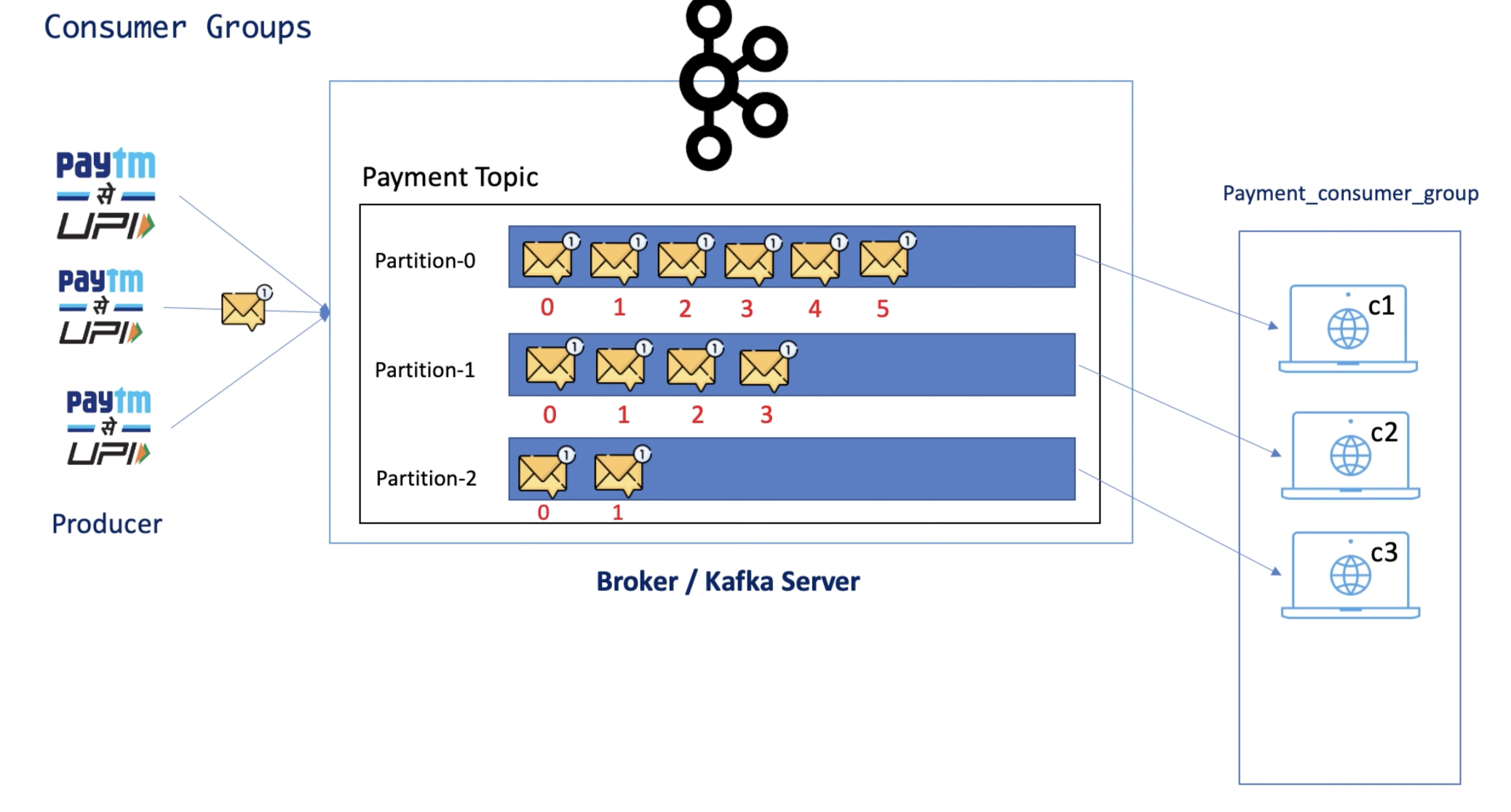

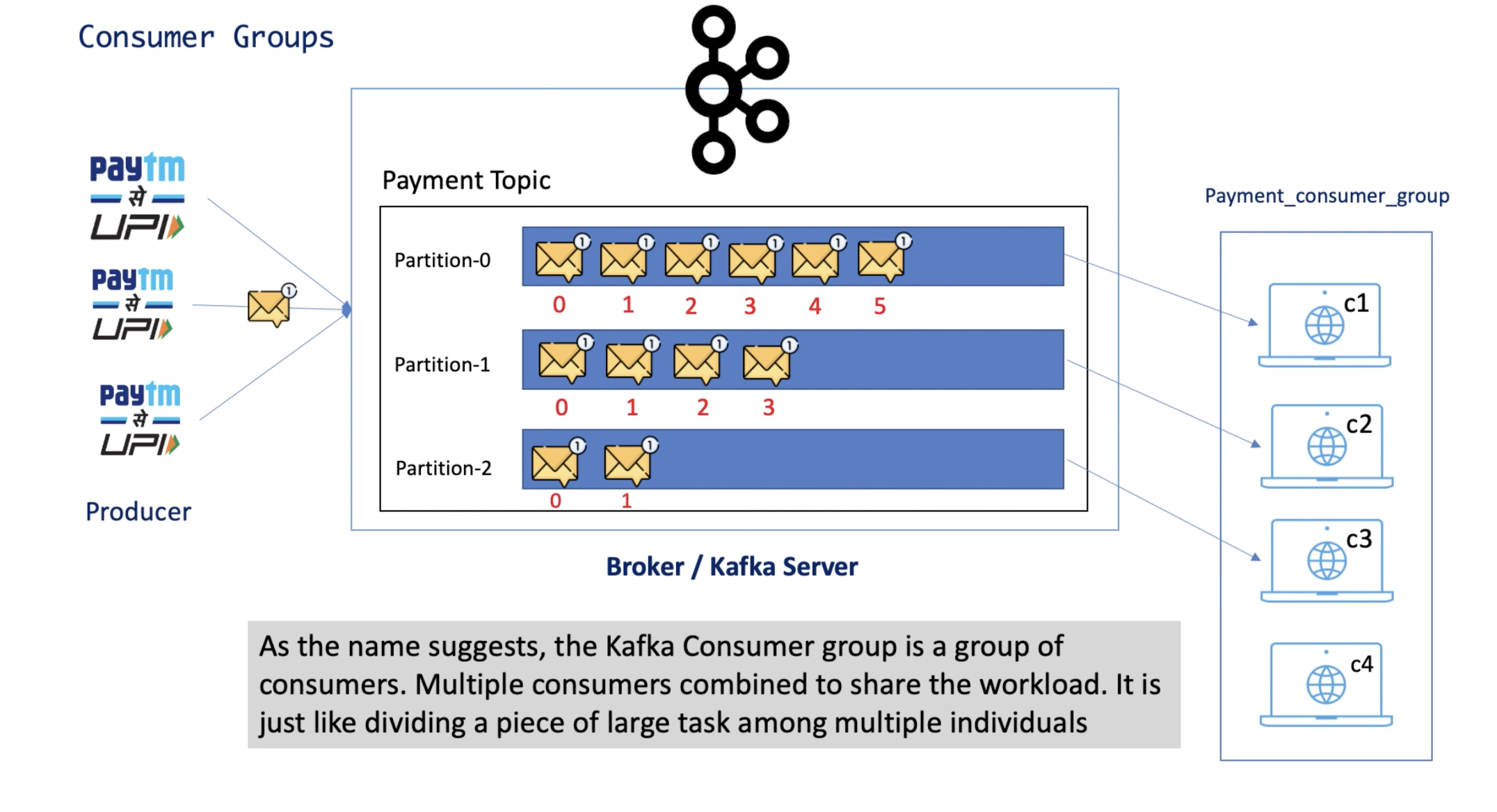

Kafka Consumer Group (Sharing the Work)

A consumer reads messages from Kafka partitions, but one consumer alone might be slow. To speed things up, Kafka allows multiple consumers to form a Consumer Group. Each consumer in the group reads from different partitions at the same time, improving performance.

If there are 3 partitions and 3 consumers in the group, each consumer gets one partition to read from.

If a 4th consumer joins but there are only 3 partitions, it remains idle until another consumer leaves or fails. Then, it takes over that consumer's partition.

If a consumer fails, Kafka reassigns partitions to available consumers. This is called consumer rebalancing.
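A simplified round-robin assignment makes the idle-consumer and rebalancing behaviour easy to see. Kafka ships several assignment strategies (range, round-robin, sticky, cooperative-sticky); the hypothetical `assign` function below only mimics the round-robin idea for a single topic.

```python
from typing import Dict, List


def assign(partitions: List[int], consumers: List[str]) -> Dict[str, List[int]]:
    """Deal partitions out to consumers in turn; extra consumers get none."""
    assignment: Dict[str, List[int]] = {c: [] for c in consumers}
    if not consumers:
        return assignment
    for i, p in enumerate(sorted(partitions)):
        assignment[consumers[i % len(consumers)]].append(p)
    return assignment


if __name__ == "__main__":
    partitions = [0, 1, 2]
    group = ["c1", "c2", "c3", "c4"]
    print(assign(partitions, group))
    # {'c1': [0], 'c2': [1], 'c3': [2], 'c4': []}  <- c4 is idle

    # c2 fails: a rebalance recomputes the assignment for the survivors.
    group.remove("c2")
    print(assign(partitions, group))
    # {'c1': [0], 'c3': [1], 'c4': [2]}
```

Notice that this naive recomputation also moves partition 2 from c3 to c4. Kafka's sticky assignors exist to avoid such unnecessary moves during a rebalance.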

Kafka ZooKeeper (The Coordinator)

In ZooKeeper-based deployments, Kafka uses ZooKeeper to manage and keep track of cluster components. It acts as a manager that:

Keeps records of brokers, topics, and partitions (older Kafka versions also stored consumer offsets and group membership here).

Helps in managing failures and reassigning partitions when needed.

ZooKeeper keeps Kafka running smoothly in a distributed system. In KRaft mode, Kafka handles this coordination itself, without ZooKeeper.

Advantages of a Kafka Cluster

Scalability – Easily add brokers to handle more data.

Fault Tolerance – Data replication prevents loss in case of failure.

High Throughput – Processes millions of messages per second.

Parallel Processing – Multiple partitions allow concurrent data processing.

Decoupling Services – Producers and consumers are independent.

Use Cases of Kafka

Log Aggregation: Collecting and analyzing logs from different applications.

Real-Time Analytics: Streaming data for analytics dashboards.

Event-Driven Architectures: Decoupling microservices using event-driven messaging.

IoT Data Processing: Handling sensor data streams from IoT devices.

Fraud Detection: Streaming and analyzing transactions in real time.

Conclusion

Apache Kafka is a game-changer in data streaming and event-driven architecture. Its ability to process real-time data efficiently makes it an essential tool for modern applications. Whether you're dealing with logs, analytics, or microservices, Kafka can help you build scalable, reliable, and high-performance systems.

If you're new to Kafka, start by setting up a local Kafka cluster and experimenting with producers and consumers. As you gain experience, you can explore advanced features such as Kafka Streams and Connect for building robust data pipelines.

Are you using Kafka in your projects? Share your experience in the comments.
