AWS Cloud Cost Optimization Using Lambda Function Project Boto3

Ankita Lunawat

Let's break down the AWS Cloud Cost Optimization project using Lambda and Boto3, going into detail about its components and how they work together.

Project Overview:

This project aims to automate AWS cost optimization by creating a Lambda function that performs several resource cleanup tasks. It leverages Boto3 (the AWS SDK for Python) to interact with various AWS services. The primary focus is on identifying and removing idle or unnecessary resources, thus reducing cloud spending.

Key Components:

AWS Lambda Function (Python with Boto3):

Core Logic: The Lambda function contains the Python code that executes the cost optimization tasks. It uses Boto3 to interact with EC2, CloudWatch, EBS, Snapshots, and SNS.

Resource Identification: It identifies resources based on specific criteria (e.g., CPU utilization, volume status, snapshot age) and tags.

Resource Management: It performs actions like stopping EC2 instances, deleting EBS volumes, and deleting snapshots.

Notifications: It sends notifications via SNS to inform users about the actions taken.

Configuration: The Lambda function uses variables to define thresholds, SNS topic ARN, and tagging strategies, making it configurable.
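To illustrate the configuration idea, here is a minimal sketch that reads the same settings from Lambda environment variables instead of hard-coding them. The environment variable names (`CPU_THRESHOLD`, `SNS_TOPIC_ARN`, and so on) and the `Settings` / `load_settings` names are assumptions made for this example; the defaults mirror the values used in the function code later in this post.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class Settings:
    cpu_threshold: float         # percent
    network_threshold: float     # bytes
    idle_period: int             # seconds
    snapshot_age_threshold: int  # days
    sns_topic_arn: str
    tag_name: str
    tag_value: str


def load_settings(env=os.environ):
    """Build Settings from a mapping, falling back to this post's defaults."""
    return Settings(
        cpu_threshold=float(env.get("CPU_THRESHOLD", "5.0")),
        network_threshold=float(env.get("NETWORK_THRESHOLD", "1000000")),
        idle_period=int(env.get("IDLE_PERIOD", "300")),
        snapshot_age_threshold=int(env.get("SNAPSHOT_AGE_THRESHOLD", "30")),
        sns_topic_arn=env.get("SNS_TOPIC_ARN", "YOUR_SNS_TOPIC_ARN"),
        tag_name=env.get("TAG_NAME", "CostOptimization"),
        tag_value=env.get("TAG_VALUE", "true"),
    )
```

Calling `load_settings()` inside the handler would let you tune thresholds from the Lambda console without redeploying the code.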

Boto3 (AWS SDK for Python):

Service Interaction: Boto3 is the library that allows the Python code to communicate with AWS services.

API Calls: It provides methods to make API calls to EC2, CloudWatch, EBS, Snapshots, and SNS.

Data Retrieval: It retrieves resource information and metrics from AWS services.

Action Execution: It executes actions like stopping instances and deleting resources.

AWS Services:

EC2 (Elastic Compute Cloud):

The Lambda function identifies and stops idle EC2 instances based on CPU and network utilization metrics from CloudWatch.

It uses tags to filter instances.

CloudWatch:

CloudWatch is used to retrieve CPU and network utilization metrics for EC2 instances.

The Lambda function analyzes these metrics to determine if an instance is idle.
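The idle check itself is just arithmetic on the datapoints CloudWatch returns. As a rough sketch, assuming the same thresholds as the function code in this post (5% CPU, 1,000,000 bytes of network traffic), it could be isolated like this. The helper names `average` and `is_idle` are made up for the example.

```python
def average(values, default):
    """Mean of values, or default when CloudWatch returned no datapoints."""
    return sum(values) / len(values) if values else default


def is_idle(cpu_values, network_values,
            cpu_threshold=5.0, network_threshold=1_000_000):
    # Missing data is treated as "busy" so an instance is never
    # stopped when there is no evidence that it is idle.
    cpu_avg = average(cpu_values, default=100.0)
    net_avg = average(network_values, default=float("inf"))
    return cpu_avg < cpu_threshold and net_avg < network_threshold
```

Keeping this logic separate from the API calls makes it easy to test locally before letting the function stop anything.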

EBS (Elastic Block Storage):

The Lambda function identifies and deletes unattached EBS volumes.

This helps eliminate unnecessary storage costs.
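One possible way to structure the volume cleanup is sketched below. It differs from the function code in this post in two ways that are my own additions: it uses a Boto3 paginator so that large accounts are fully covered, and it has a hypothetical `dry_run` switch that only reports what would be deleted. The `ec2` argument is expected to be a `boto3.client("ec2")` object.

```python
def find_unattached_volumes(ec2, tag_name="CostOptimization", tag_value="true"):
    """Return IDs of tagged volumes in the 'available' (unattached) state."""
    filters = [
        {"Name": "status", "Values": ["available"]},
        {"Name": f"tag:{tag_name}", "Values": [tag_value]},
    ]
    volume_ids = []
    # The paginator follows NextToken, so every page of results is read.
    for page in ec2.get_paginator("describe_volumes").paginate(Filters=filters):
        volume_ids.extend(v["VolumeId"] for v in page["Volumes"])
    return volume_ids


def delete_volumes(ec2, volume_ids, dry_run=True):
    """Delete the volumes; with dry_run=True nothing is deleted."""
    processed = []
    for volume_id in volume_ids:
        if not dry_run:
            ec2.delete_volume(VolumeId=volume_id)
        processed.append(volume_id)
    return processed
```

Running with `dry_run=True` first is a cheap way to review the list before any volume is actually removed.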

Snapshots:

The Lambda function identifies and deletes old snapshots based on their age.

This helps manage storage costs associated with snapshots.
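The age check can be sketched as a small pure function. It assumes each snapshot is a dictionary shaped like the entries returned by `describe_snapshots`, with a timezone-aware `StartTime`; the function name and the `now` parameter (handy for testing) are my own.

```python
from datetime import datetime, timedelta, timezone


def snapshots_older_than(snapshots, max_age_days=30, now=None):
    """Return SnapshotIds whose StartTime is more than max_age_days before now."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=max_age_days)
    return [s["SnapshotId"] for s in snapshots if s["StartTime"] < cutoff]
```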

SNS (Simple Notification Service):

SNS is used to send notifications about the actions taken by the Lambda function.

This provides visibility and transparency into the cost optimization process.
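The function code in this post publishes one SNS message per action. If that gets noisy, one alternative (not what the post's code does) is to collect the actions and publish a single summary per run. A minimal sketch, where `publish_summary` is a hypothetical helper and `sns` is a `boto3.client("sns")` object:

```python
def publish_summary(sns, topic_arn, actions):
    """Publish one SNS message summarizing all actions; skip if nothing happened."""
    if not actions:
        return None
    body = "\n".join(f"- {action}" for action in actions)
    return sns.publish(
        TopicArn=topic_arn,
        Subject=f"Cost optimization: {len(actions)} action(s) taken",
        Message=body,
    )
```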

IAM (Identity and Access Management):

IAM roles and policies are used to grant the Lambda function the necessary permissions to interact with AWS services.

This ensures that the function operates with the principle of least privilege.

CloudWatch Events (or Schedule):

CloudWatch Events (or a schedule) is used to trigger the Lambda function at regular intervals.

This automates the cost optimization process.

Prerequisites:
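This post sets up the function through the console, but the schedule could also be created with Boto3. The sketch below is one way to do it, assuming an `events` client (`boto3.client("events")`) and a Lambda client (`boto3.client("lambda")`). The rule name and the daily `rate(1 day)` schedule are illustrative choices, not values from this project.

```python
def schedule_lambda(events, lambda_client, function_arn,
                    rule_name="cost-optimization-daily",
                    schedule="rate(1 day)"):
    """Create a scheduled rule and point it at the Lambda function."""
    rule_arn = events.put_rule(
        Name=rule_name,
        ScheduleExpression=schedule,
        State="ENABLED",
    )["RuleArn"]
    # Allow the rule to invoke the function.
    lambda_client.add_permission(
        FunctionName=function_arn,
        StatementId=f"{rule_name}-invoke",
        Action="lambda:InvokeFunction",
        Principal="events.amazonaws.com",
        SourceArn=rule_arn,
    )
    # Register the function as the rule's target.
    events.put_targets(Rule=rule_name, Targets=[{"Id": "1", "Arn": function_arn}])
    return rule_arn
```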

AWS account with EC2 instances, EBS volumes, and snapshots.

Advanced Python and Boto3 knowledge.

Familiarity with AWS Lambda and IAM.

IAM role with appropriate permissions.

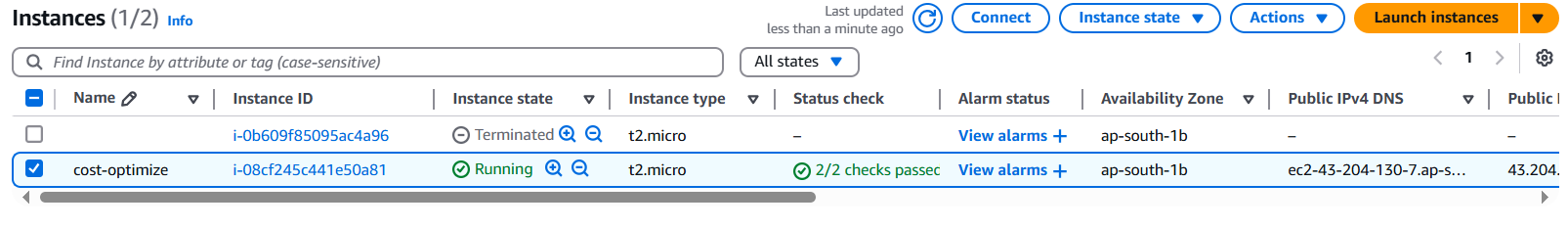

1. Create an EC2 Instance

Launch an EC2 instance. Once it is running, we will take a snapshot of its attached volume. Think of this snapshot as a point-in-time backup of the volume attached to the instance.

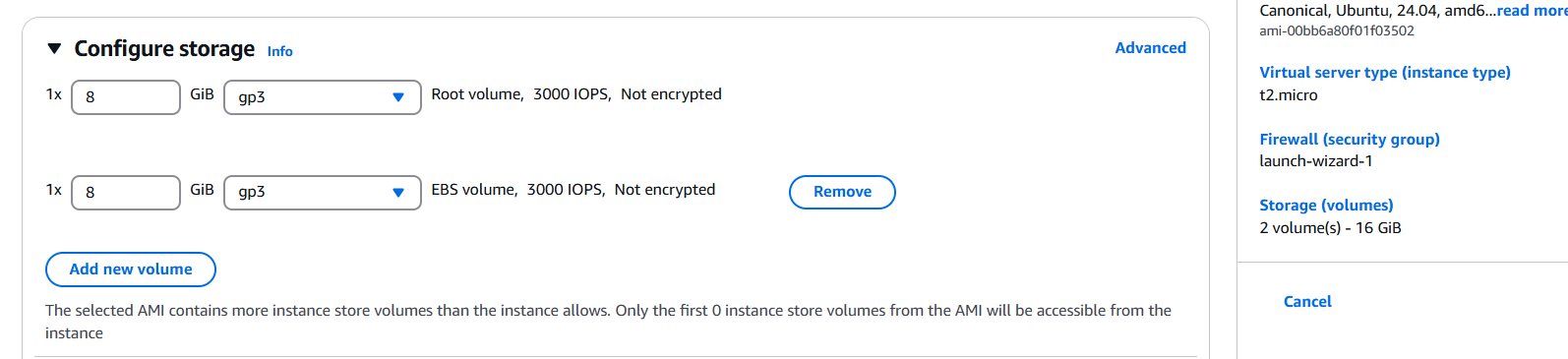

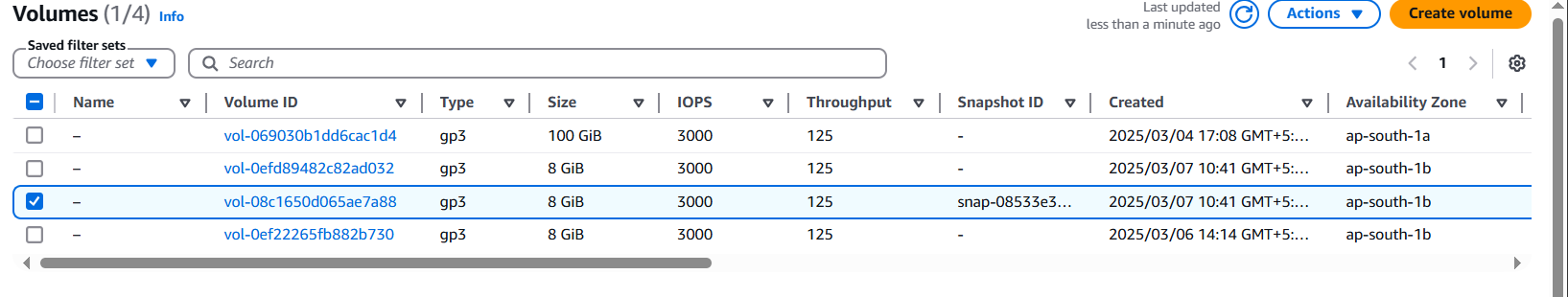

Our instance is created. To check its volume, open Elastic Block Store > Volumes in the left navigation pane.

The attached volume was created along with the EC2 instance.

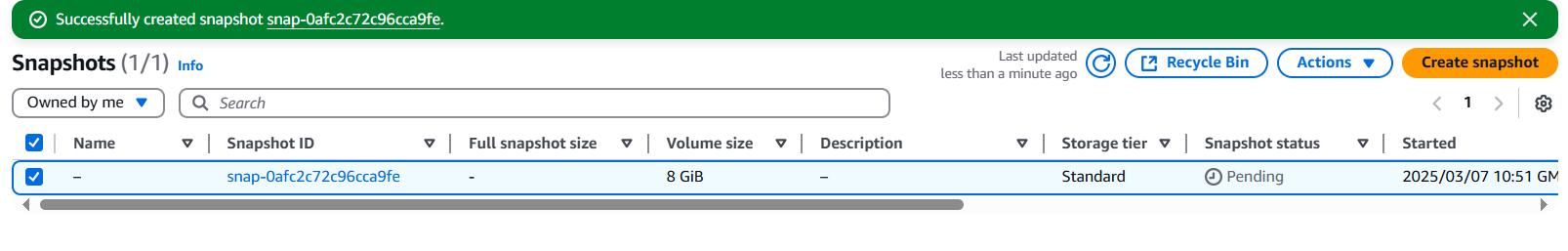

2. Create a Snapshot

Go to your EC2 dashboard > Snapshots and create a snapshot of the volume.

Now imagine our developer wants to delete the EC2 instance, its volume, and the snapshot. The volume is deleted automatically along with the EC2 instance, but the developer forgets to delete the snapshot, which keeps incurring storage charges.
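The scenario above (a snapshot left behind after its volume is gone) can be detected by comparing each snapshot's `VolumeId` with the volumes that still exist. The sketch below is a hypothetical helper, not part of the function code in this post; it takes plain lists so it can be tried without touching AWS.

```python
def find_orphaned_snapshots(snapshots, existing_volume_ids):
    """Return IDs of snapshots whose source volume is no longer present."""
    existing = set(existing_volume_ids)
    return [
        s["SnapshotId"]
        for s in snapshots
        if s.get("VolumeId") not in existing
    ]
```

In a Lambda function, `snapshots` would come from `describe_snapshots(OwnerIds=['self'])` and `existing_volume_ids` from `describe_volumes`. Treat the result as candidates for review rather than a delete list, since a copied snapshot may carry a volume ID that does not match any real volume.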

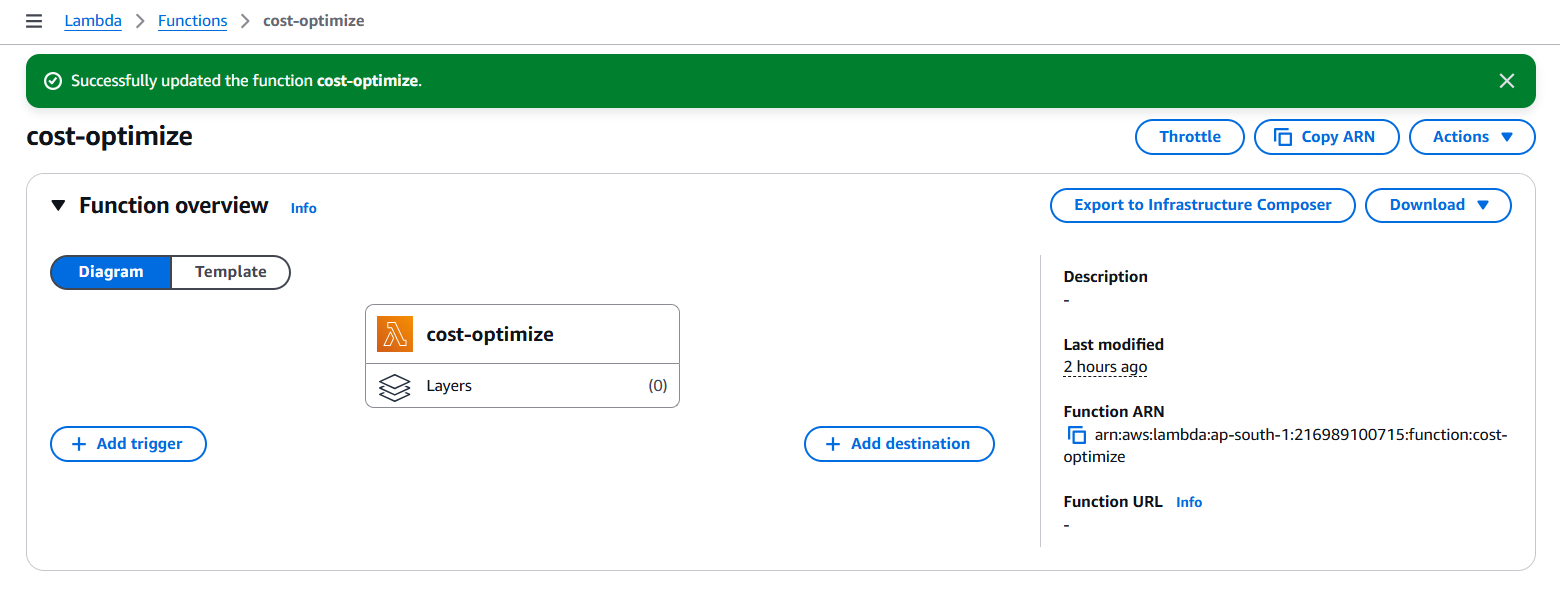

3. Lambda Function for Cost Optimization

Set up a Lambda function to automate cleaning up old snapshots.

Log in to the AWS Console

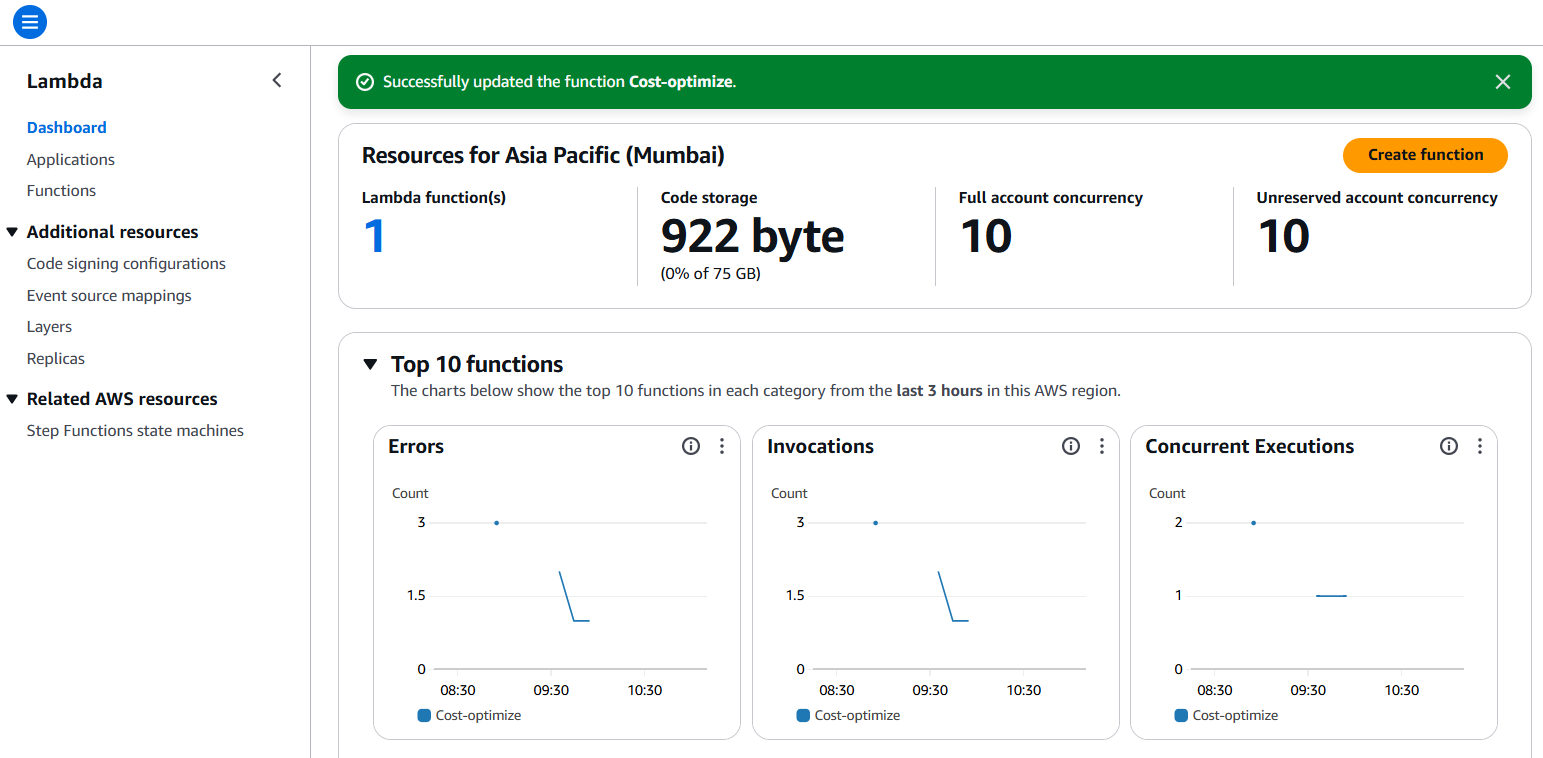

- Go to the AWS Management Console, navigate to Lambda in the Services menu, click Create function, select Author from scratch, name your function cost-optimization-ebs-snapshot, choose Python as the runtime, and leave the default execution role for now. We will update its permissions later.

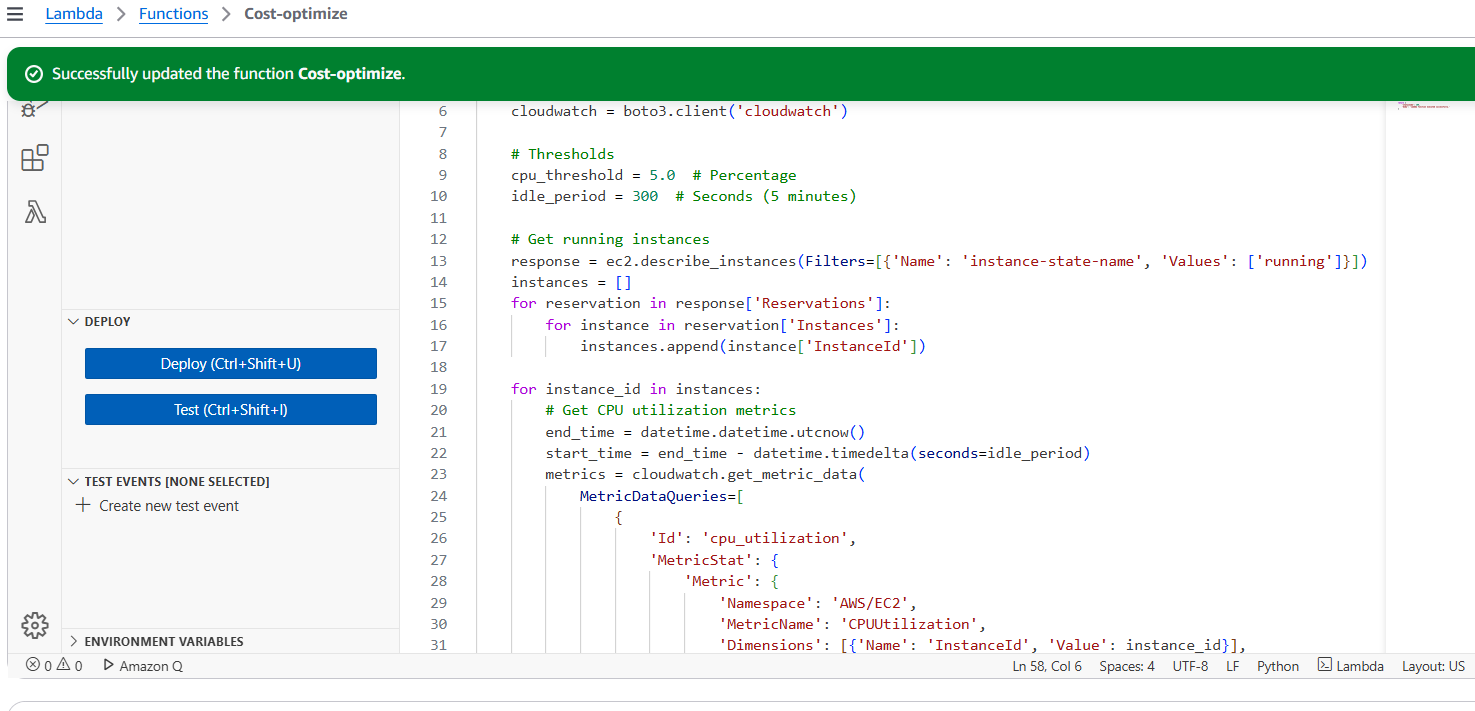

- Deploy Lambda Function

Once the function is created, scroll to the Function code section, paste your code in the editor, and click Deploy to deploy the Lambda function.

    import datetime

    import boto3

    ec2 = boto3.client('ec2')
    cloudwatch = boto3.client('cloudwatch')
    sns = boto3.client('sns')

    # Configuration
    cpu_threshold = 5.0            # Percent
    network_threshold = 1000000    # Bytes
    idle_period = 300              # Seconds (5 minutes)
    snapshot_age_threshold = 30    # Days
    sns_topic_arn = 'YOUR_SNS_TOPIC_ARN'
    tag_name = "CostOptimization"
    tag_value = "true"


    def lambda_handler(event, context):
        stop_idle_instances()
        delete_unattached_ebs_volumes()
        delete_old_snapshots()
        return {'statusCode': 200, 'body': 'Optimization completed.'}


    def stop_idle_instances():
        # Only running instances that carry the opt-in tag are considered.
        response = ec2.describe_instances(Filters=[
            {'Name': 'instance-state-name', 'Values': ['running']},
            {'Name': f'tag:{tag_name}', 'Values': [tag_value]}
        ])
        for reservation in response['Reservations']:
            for instance in reservation['Instances']:
                instance_id = instance['InstanceId']
                if is_instance_idle(instance_id):
                    ec2.stop_instances(InstanceIds=[instance_id])
                    send_notification(f"Stopped idle instance: {instance_id}")


    def get_metric_values(instance_id, query_id, metric_name, start_time, end_time):
        # Fetch per-minute averages of one AWS/EC2 metric for the instance.
        response = cloudwatch.get_metric_data(
            MetricDataQueries=[{
                'Id': query_id,
                'MetricStat': {
                    'Metric': {
                        'Namespace': 'AWS/EC2',
                        'MetricName': metric_name,
                        'Dimensions': [{'Name': 'InstanceId', 'Value': instance_id}]
                    },
                    'Period': 60,
                    'Stat': 'Average'
                },
                'ReturnData': True
            }],
            StartTime=start_time,
            EndTime=end_time
        )
        return response['MetricDataResults'][0]['Values']


    def is_instance_idle(instance_id):
        end_time = datetime.datetime.now(tz=datetime.timezone.utc)
        start_time = end_time - datetime.timedelta(seconds=idle_period)
        cpu_values = get_metric_values(instance_id, 'cpu', 'CPUUtilization', start_time, end_time)
        # 'NetworkOut' reports the bytes sent by the instance.
        network_values = get_metric_values(instance_id, 'network', 'NetworkOut', start_time, end_time)
        # With no datapoints, fall back to high values so the instance counts as busy.
        cpu_average = sum(cpu_values) / len(cpu_values) if cpu_values else 100
        network_average = sum(network_values) / len(network_values) if network_values else 1000000000
        return cpu_average < cpu_threshold and network_average < network_threshold


    def delete_unattached_ebs_volumes():
        # 'available' means the volume is not attached to any instance.
        response = ec2.describe_volumes(Filters=[
            {'Name': 'status', 'Values': ['available']},
            {'Name': f'tag:{tag_name}', 'Values': [tag_value]}
        ])
        for volume in response['Volumes']:
            volume_id = volume['VolumeId']
            ec2.delete_volume(VolumeId=volume_id)
            send_notification(f"Deleted unattached EBS volume: {volume_id}")


    def delete_old_snapshots():
        response = ec2.describe_snapshots(OwnerIds=['self'], Filters=[
            {'Name': f'tag:{tag_name}', 'Values': [tag_value]}
        ])
        now = datetime.datetime.now(tz=datetime.timezone.utc)
        for snapshot in response['Snapshots']:
            # StartTime is timezone-aware, so it is compared with an aware "now".
            age_days = (now - snapshot['StartTime']).days
            if age_days > snapshot_age_threshold:
                snapshot_id = snapshot['SnapshotId']
                ec2.delete_snapshot(SnapshotId=snapshot_id)
                send_notification(f"Deleted old snapshot: {snapshot_id}")


    def send_notification(message):
        sns.publish(TopicArn=sns_topic_arn, Message=message)

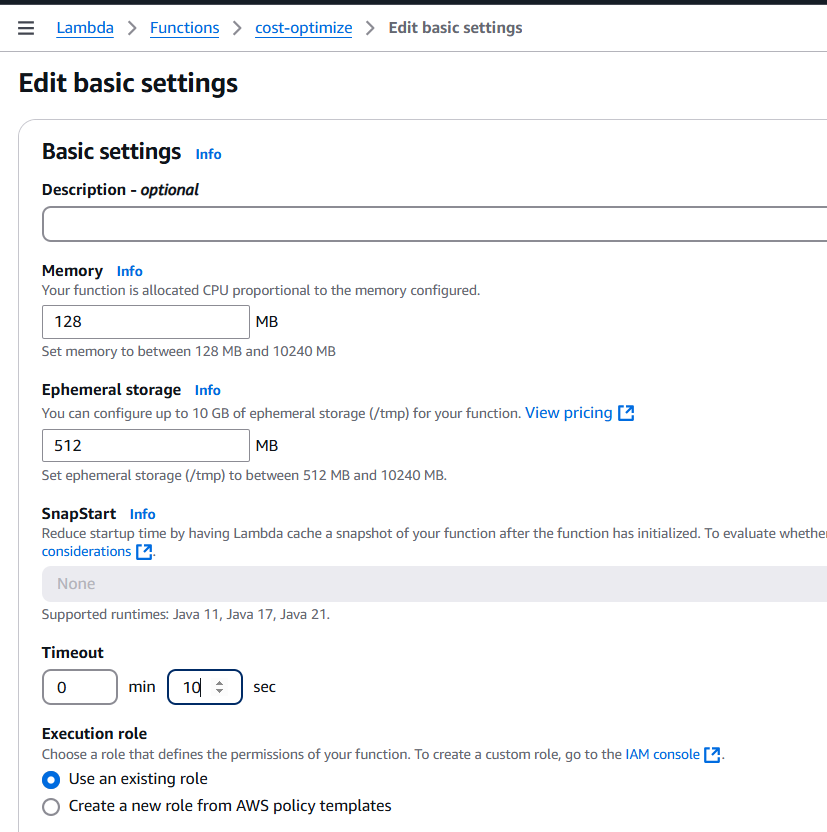

4. Change the default execution timeout from 3 seconds to 10 seconds.

Keep the timeout as low as practical: AWS Lambda charges based on execution time, so a short timeout caps the cost of a run that hangs.
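The same timeout change can be made from code instead of the console. A minimal sketch, assuming `lambda_client` is a `boto3.client("lambda")` object; the `set_timeout` helper name is my own.

```python
def set_timeout(lambda_client, function_name, seconds=10):
    """Set the function timeout (in seconds) and return the value Lambda reports back."""
    response = lambda_client.update_function_configuration(
        FunctionName=function_name,
        Timeout=seconds,
    )
    return response["Timeout"]
```

For this project the call would look like `set_timeout(boto3.client("lambda"), "cost-optimization-ebs-snapshot")`.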

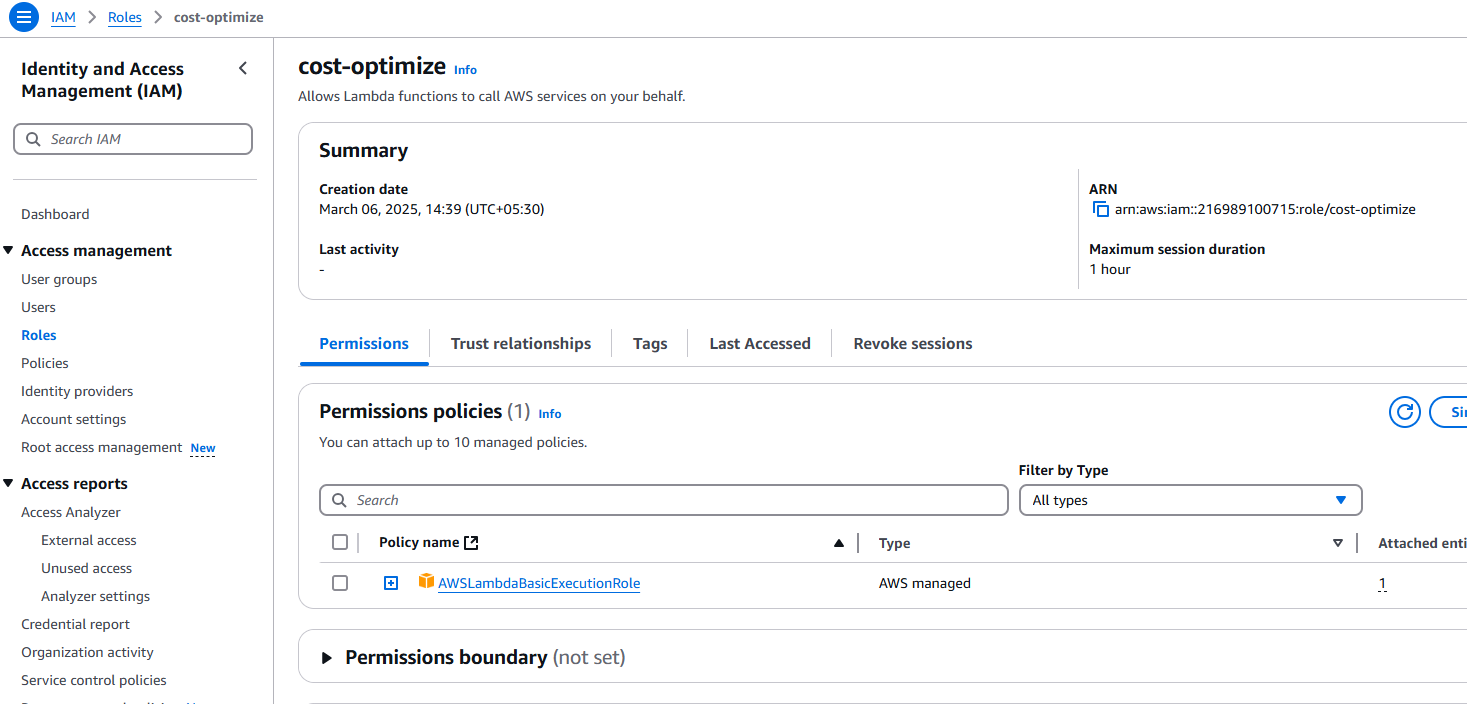

Initially, the Lambda function will fail because the role attached to it lacks the necessary permissions to describe snapshots, volumes, or EC2 instances.

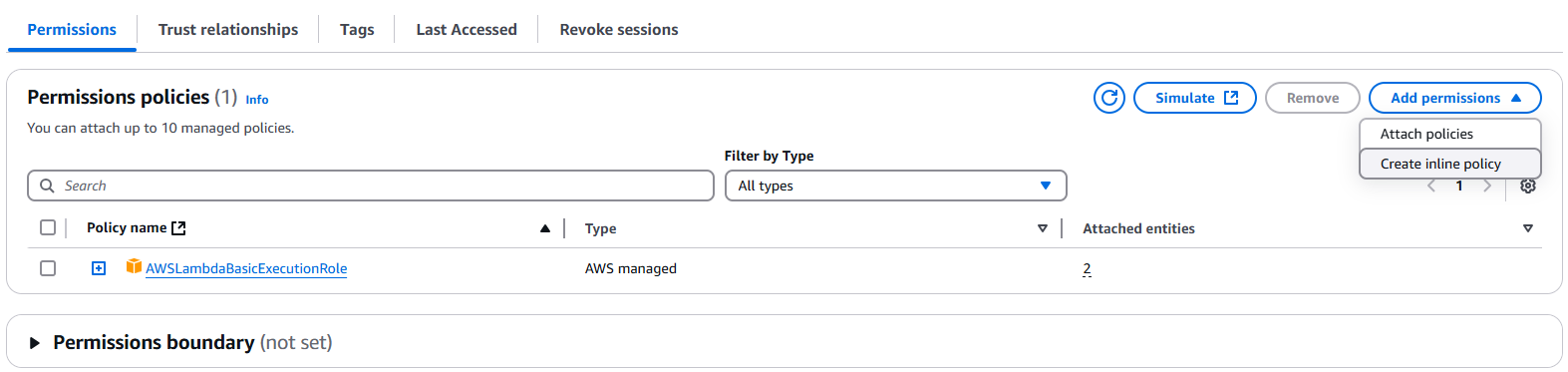

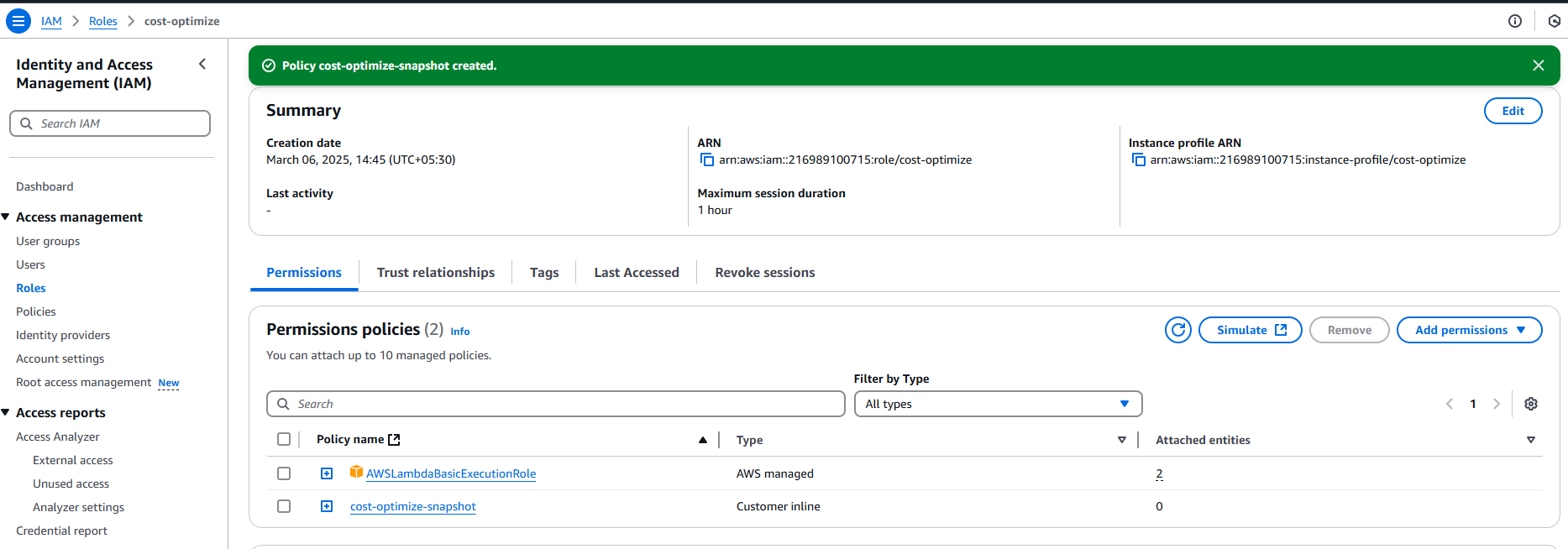

5. Grant Permissions to Lambda Role

- Navigate to the Lambda Permissions: In the Lambda function's configuration, locate the Execution role, which is used by Lambda to interact with other AWS services, and click on it to go to IAM.

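Granting permissions:

Permissions → Add permissions → Attach policies

You need to grant the Lambda role permissions to interact with EC2 and EBS. The full function code shown earlier also calls CloudWatch and SNS, so the role needs access to those services as well.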

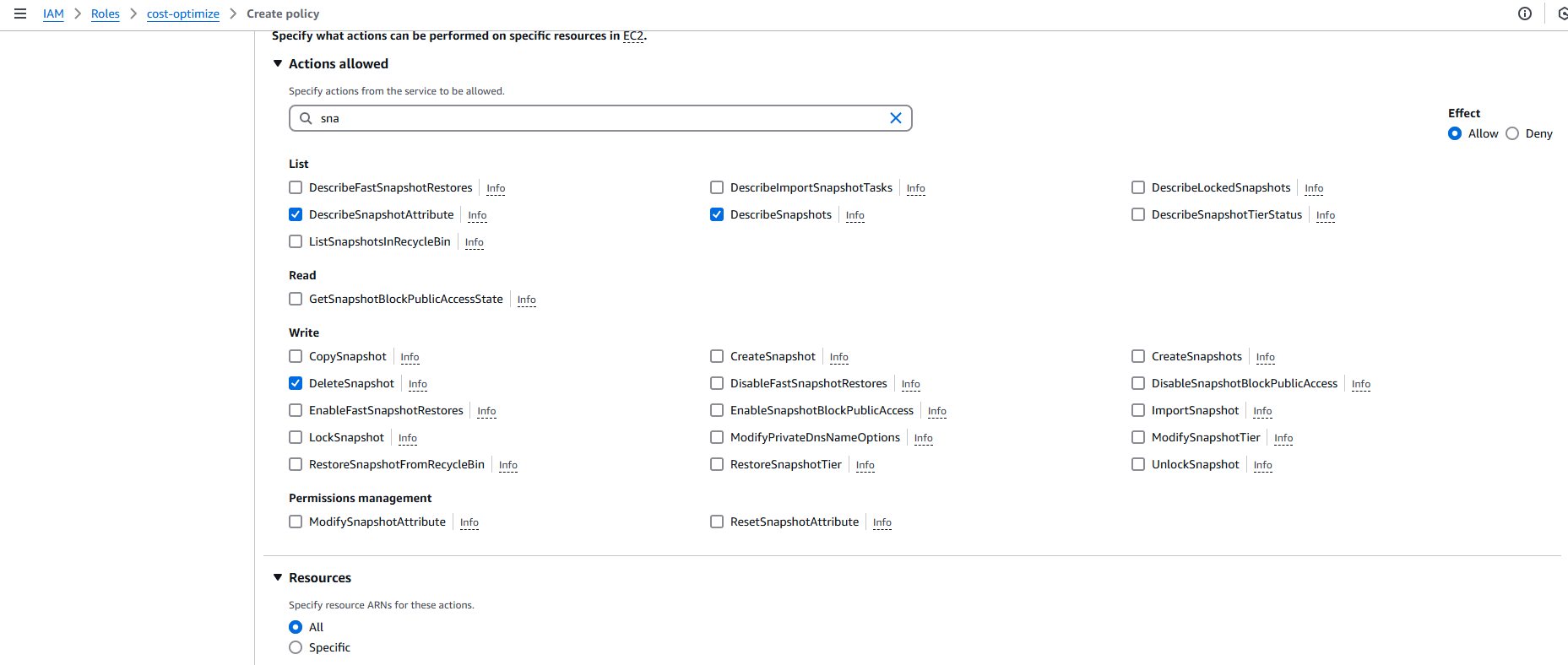

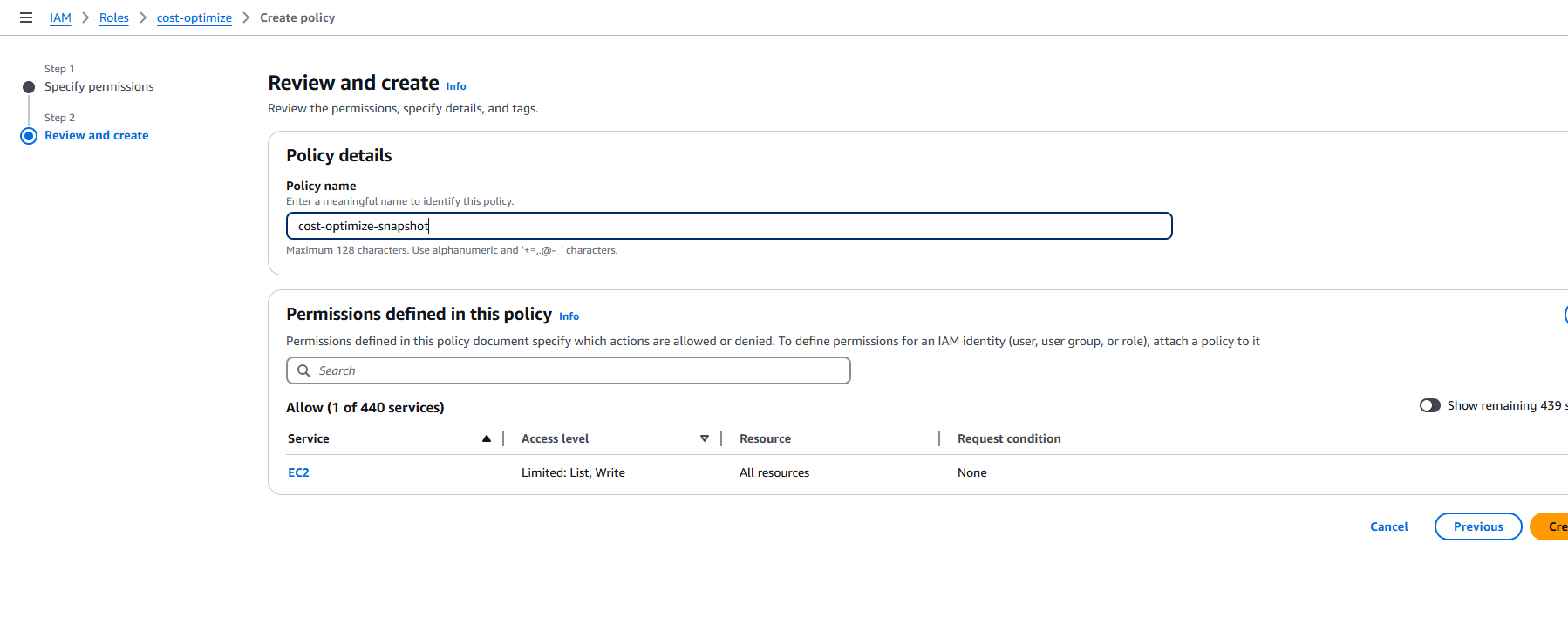

On the role's Permissions tab, choose Add permissions → Create inline policy and select EC2 as the service. Create a custom policy that allows actions on EC2 snapshots and volumes. Use the following actions:

Describe snapshots (for listing snapshots)

Delete snapshots (for deleting snapshots)

Describe instances (to find if an EC2 instance is associated with a volume)

Describe volumes (for volume information)

Save the policy and attach it to the Lambda execution role.
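For reference, here is a sketch of what the resulting policy document could look like, built as a Python dictionary and printed as JSON for the IAM policy editor. The first list maps the four actions above to their IAM action names. The second list is my assumption about what the full function code additionally needs, since it also stops instances, deletes volumes, reads CloudWatch metrics, and publishes to SNS. `Resource` is left as `"*"` for brevity; in practice you would narrow it where the service allows, for example to your SNS topic ARN.

```python
import json

# Actions listed in the steps above.
SNAPSHOT_ACTIONS = [
    "ec2:DescribeSnapshots",
    "ec2:DeleteSnapshot",
    "ec2:DescribeInstances",
    "ec2:DescribeVolumes",
]

# Assumption: extra actions required by the full function code.
EXTRA_ACTIONS = [
    "ec2:StopInstances",
    "ec2:DeleteVolume",
    "cloudwatch:GetMetricData",
    "sns:Publish",
]


def build_policy(actions, resource="*"):
    """Return an IAM policy document allowing the given actions."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow", "Action": list(actions), "Resource": resource}
        ],
    }


policy_json = json.dumps(build_policy(SNAPSHOT_ACTIONS + EXTRA_ACTIONS), indent=2)
print(policy_json)
```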

Note: To recap, we increased the Lambda function's execution timeout, granted its role permissions to describe EC2 instances and volumes and to describe and delete snapshots, and then created and ran a test event to invoke the function manually, without CloudWatch or S3 triggers.

You have now successfully deployed the AWS Cloud Cost Optimization project using a Lambda function with Boto3!
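The manual test can also be run from code rather than the console's Test button. A minimal sketch, assuming `lambda_client` is a `boto3.client("lambda")` object and the function name used in this post; `run_test_event` is a hypothetical helper.

```python
import json


def run_test_event(lambda_client,
                   function_name="cost-optimization-ebs-snapshot",
                   event=None):
    """Invoke the function synchronously and return its decoded JSON result."""
    response = lambda_client.invoke(
        FunctionName=function_name,
        InvocationType="RequestResponse",
        Payload=json.dumps(event or {}).encode("utf-8"),
    )
    # Payload is a stream, so it has to be read before decoding.
    return json.loads(response["Payload"].read())
```

Keep in mind that this runs the real function, so with the full code deployed it will stop, delete, and notify just like a scheduled run.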

Happy Learning…!
