Mistral OCR vs JigsawStack vOCR

Yoeven D Khemlani

Yoeven D Khemlani

Since Mistral claims to be the world's best OCR, let’s put that to the challenge against JigsawStack vOCR.

Mistral recently launched their OCR model showing benchmarks against Azure OCR, GCP, and AWS with support for multilingual use cases. So we’ll put it through a real-world test with a range of languages and content types.

What is OCR? OCR stands for Optical Character Recognition which is a model that’s great at extracting text from a document or image. Some basic features that almost all OCR models should have:

Multilingual support

Handwriting/Printed support

Bounding boxes (position of the text on the document/image)

Now what makes a great OCR model, especially in the world of AI?

High accuracy of text extraction and formatting

Support for a wide range of languages

Retrieval of specific values from a document

Consistently structured data

Understanding the context of the document

We’ll be comparing Mistral OCR on the above standards to JigsawStack OCR with real-world examples and use cases.

Sneak peek if you can’t wait 👇

| Mistral OCR 2503 | JigsawStack vOCR | |

| 🌐 Multilingual support | Limited. Works only if the image or documents are in a specific format. 12 benchmarked languages. ❌ | 70+ languages including less popular languages like Telugu compared to Hindi and Chinese ✅ |

| 📝 Handwriting/Printed support | Only worked on specific printed images. Failed in most situations, including handwriting. ❌ | Worked with the most distorted images including handwriting, printed, and text on walls. ✅ |

| ⊞ Bounding boxes | No support was found on their API docs. ❌ | Bounding boxes and positions data on PDFs and images. ✅ |

| 📁 Native structured output and retrieval | No support for native structured output or retrieval of values. Required to switch to Mistrals LLM which provides an ok result. Would need a lot more prompt engineering to get a close enough output. ❌ | Native support for structured output and highly accurate and concise retrieval. ✅ |

| 📕 Markdown support | Fast and good for PDFs. Doesn’t work well with images. ◐ ✅ | Full support for markdown on PDFs and images ✅ |

| 🧠 Native context understanding | Has context understanding as part of the LLM but not natively in the OCR model which I assume is a tool call to the OCR model. ◐✅ | Native context understanding merged with OCR ✅ |

| 👯♀️ Team Size | ~206 people | 3 |

Multilingual support

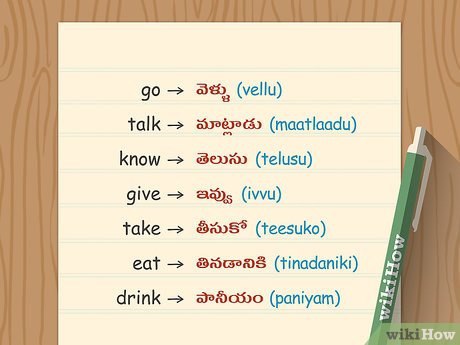

English & Telugu Clear Printed Example

We were recently training a new image-to-image translation model and we’ve been using the above image to experiment with it. Now let’s try extracting all text from the above image which contains both English and Telugu.

Mistral OCR

JigsawStack OCR

Mistral wasn’t able to detect the image with text. JigsawStack extracted all the text with 100% accurately.

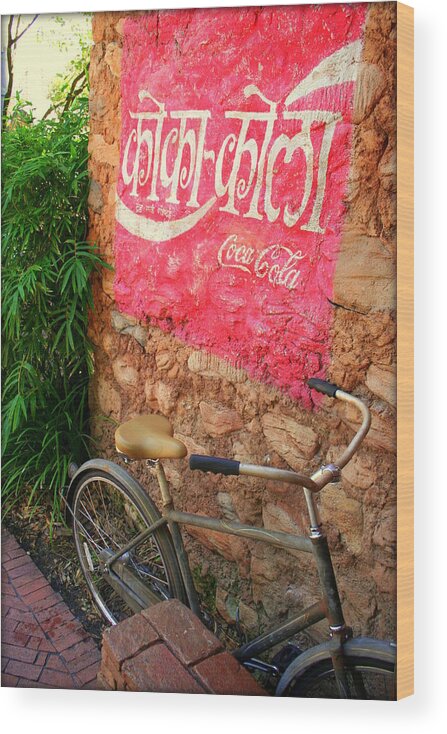

Hindi & English Hard-to-Read Example

Mistral OCR

JigsawStack OCR

In another real-world multilingual example, Mistral isn’t able to detect the text in the image while JigsawStack OCR has accurately extracted and mapped the full result.

Handwriting & Printed text detection support

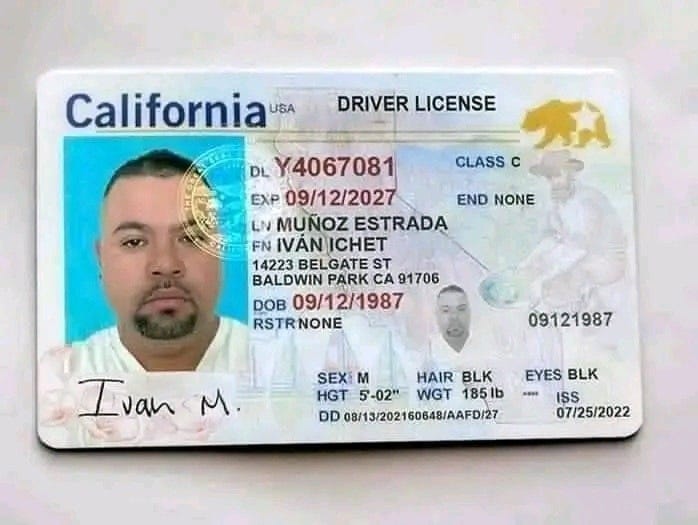

Drivers License Example

Mistral OCR

JigsawStack OCR

Mistral isn’t able to detect most of the text on the image while JigsawStack OCR can extract all text in a structured format and can differentiate between printed and written text.

Bounding boxes and positions

Example doc from Mistral blog (https://mistral.ai/news/mistral-ocr)

Mistral OCR

Full response

{

"pages": [

{

"index": 0,

"markdown": "# Mistral 7B \n\nAlbert Q. Jiang, Alexandre Sablayrolles, Arthur Mensch, Chris Bamford, Devendra Singh Chaplot, Diego de las Casas, Florian Bressand, Gianna Lengyel, Guillaume Lample, Lucile Saulnier, Lélio Renard Lavaud, Marie-Anne Lachaux, Pierre Stock, Teven Le Scao, Thibaut Lavril, Thomas Wang, Timothée Lacroix, William El Sayed\n\n\n\n## Abstract\n\nWe introduce Mistral 7B, a 7-billion-parameter language model engineered for superior performance and efficiency. Mistral 7B outperforms the best open 13B model (Llama 2) across all evaluated benchmarks, and the best released 34B model (Llama 1) in reasoning, mathematics, and code generation. Our model leverages grouped-query attention (GQA) for faster inference, coupled with sliding window attention (SWA) to effectively handle sequences of arbitrary length with a reduced inference cost. We also provide a model fine-tuned to follow instructions, Mistral 7B - Instruct, that surpasses Llama 2 13B - chat model both on human and automated benchmarks. Our models are released under the Apache 2.0 license. Code: https://github.com/mistralai/mistral-src\nWebpage: https://mistral.ai/news/announcing-mistral-7b/\n\n## 1 Introduction\n\nIn the rapidly evolving domain of Natural Language Processing (NLP), the race towards higher model performance often necessitates an escalation in model size. However, this scaling tends to increase computational costs and inference latency, thereby raising barriers to deployment in practical, real-world scenarios. In this context, the search for balanced models delivering both high-level performance and efficiency becomes critically essential. Our model, Mistral 7B, demonstrates that a carefully designed language model can deliver high performance while maintaining an efficient inference. Mistral 7B outperforms the previous best 13B model (Llama 2, [26]) across all tested benchmarks, and surpasses the best 34B model (LLaMa 34B, [25]) in mathematics and code generation. Furthermore, Mistral 7B approaches the coding performance of Code-Llama 7B [20], without sacrificing performance on non-code related benchmarks.\nMistral 7B leverages grouped-query attention (GQA) [1], and sliding window attention (SWA) [6, 3]. GQA significantly accelerates the inference speed, and also reduces the memory requirement during decoding, allowing for higher batch sizes hence higher throughput, a crucial factor for real-time applications. In addition, SWA is designed to handle longer sequences more effectively at a reduced computational cost, thereby alleviating a common limitation in LLMs. These attention mechanisms collectively contribute to the enhanced performance and efficiency of Mistral 7B.",

"images": [

{

"id": "img-0.jpeg",

"top_left_x": 313,

"top_left_y": 669,

"bottom_right_x": 1162,

"bottom_right_y": 901,

"image_base64": null

}

],

"dimensions": {

"dpi": 200,

"height": 1999,

"width": 1500

}

}

],

"model": "mistral-ocr-2503-completion",

"usage_info": {

"pages_processed": 1,

"doc_size_bytes": 274769

}

}

JigsawStack OCR

Full response: https://gist.github.com/yoeven/a6bccae45a7d133130a6e1486e0df4ac Response is too large to display here.

Mistral OCR currently has no bounding boxes or position data in the response while JigsawStack OCR contains both sentence and word-level positions.

Retrieval and structured output

Receipt Retrieval Example

Mistral OCR

JigsawStack OCR

Mistral does a good job pulling out all the text but doesn’t natively support structured output JSON or queries for the OCR which requires a different model or an LLM to post-process. JigsawStack provides structured output for exactly the fields requested along with the body of text and bounding boxes for each value.

15-Page Arxiv PDF Retrieval Example

Full document: https://arxiv.org/pdf/2406.04692

Mistral OCR

JigsawStack OCR

Both Mistral and JigsawStack get relevant results, however, there are a few hoops to jump through using Mistral. You will need to switch the API to use Mistrals LLM rather than the native OCR product, which I assume is doing some tool calling under the hood. Structured data is going to be a challenge as consistency of the data output in JSON is going to take a lot of work and prompt engineering. While on JigsawStack you get structured JSON exactly with the keys provided and only the answer required.

JigsawStack allows you to describe an array of fields that will always return consistent results mapped to those exact values.

Markdown Support Example

We’ll use the same PDF document as above.

Mistral OCR

JigsawStack OCR

For this comparison, we had to switch back from Mistral LLM to the OCR model. Both Mistral and JigsawStack OCR do a great job of extracting markdowns consistently. The downside here is that JigsawStack supports up to 10 pages of PDF per API call. This is soon changing in the coming weeks with 100-page support.

Conclusion

In all of the above, we used JigsawStack’s vOCR where we merged a vLLM with a fine-tuned OCR model to provide high-quality results while supporting over 70+ languages.

For the above tests, we used Mistrals OCR Rest API which can be found here, and JigsawStacks vOCR Rest API which can be found here.

Overall in terms of language, structured data output context understanding, and providing the basics of OCR like bounding boxes, JigsawStack OCR does take the lead.

| Mistral OCR 2503 | JigsawStack vOCR | |

| 🌐 Multilingual support | Limited. Works only if the image or documents are in a specific format. 12 benchmarked languages. ❌ | 70+ languages including less popular languages like Telugu compared to Hindi and Chinese ✅ |

| 📝 Handwriting/Printed support | Only worked on specific printed images. Failed in most situations, including handwriting. ❌ | Worked with the most distorted images including handwriting, printed, and text on walls. ✅ |

| ⊞ Bounding boxes | No support was found on their API docs. ❌ | Bounding boxes and positions data on PDFs and images. ✅ |

| 📁 Native structured output and retrieval | No support for native structured output or retrieval of values. Required to switch to Mistrals LLM which provides an ok result. Would need a lot more prompt engineering to get a close enough output. ❌ | Native support for structured output and highly accurate and concise retrieval. ✅ |

| 📕 Markdown support | Fast and good for PDFs. Doesn’t work well with images. ◐ ✅ | Full support for markdown on PDFs and images ✅ |

| 🧠 Native context understanding | Has context understanding as part of the LLM but not natively in the OCR model which I assume is a tool call to the OCR model. ◐✅ | Native context understanding merged with OCR ✅ |

| 👯♀️ Team Size | ~206 people | 3 |

How to get started with JigsawStack vOCR?

The goal at JigsawStack is to build small focused models that are good at doing one thing very well. Then we pack all the models into a single DX-friendly SDK.

Typescript/Javascript example

import { JigsawStack } from "jigsawstack";

const jigsawstack = JigsawStack({

apiKey: "your-api-key",

});

const result = await jigsaw.vision.vocr({

prompt: ["total_price", "tax"],

url: "https://media.snopes.com/2021/08/239918331_10228097135359041_3825446756894757753_n.jpg",

});

Python

from jigsawstack import JigsawStack

jigsawstack = JigsawStack(api_key="your-api-key")

result = jigsawstack.vision.vocr({"url": "https://media.snopes.com/2021/08/239918331_10228097135359041_3825446756894757753_n.jpg", "prompt" : ["total_price", "tax"]})

Subscribe to my newsletter

Read articles from Yoeven D Khemlani directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by