Installing Kafka, Zookeeper, and Debezium for MySQL: A Change Data Capture Guide

Olamigoke Oyeneyin


Brief Introduction

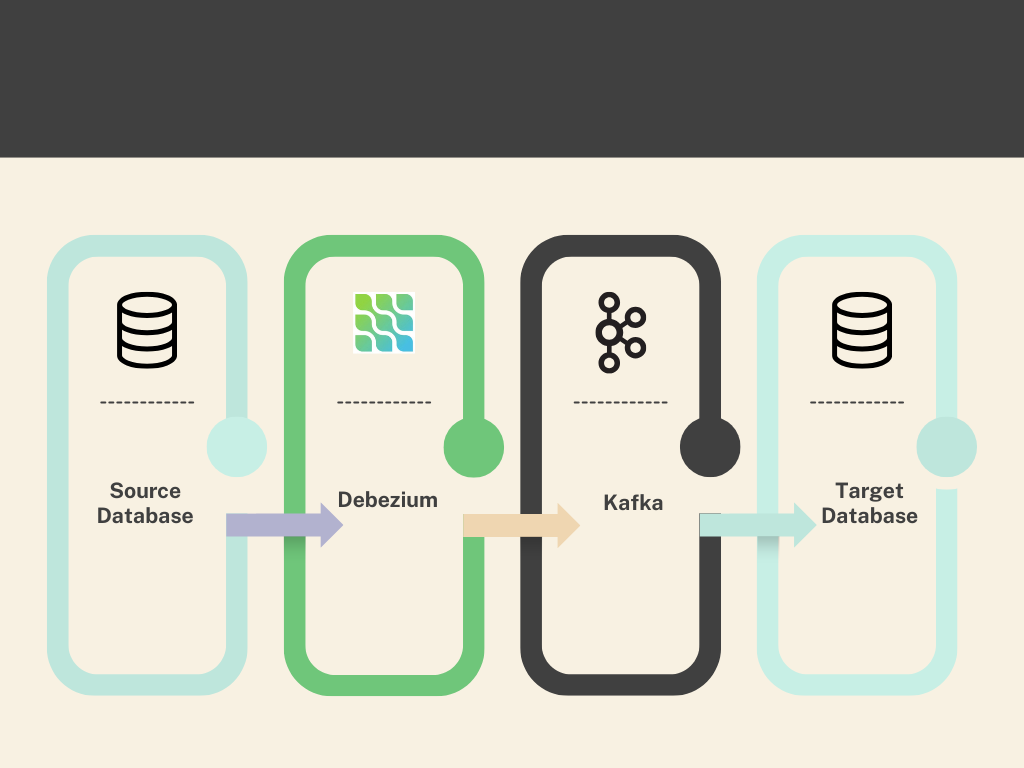

Kafka, Debezium, and ZooKeeper work together to move and manage data in real time. Kafka is the storage hub: it collects and holds events (data changes) from a source database (in our case, a MySQL database). Debezium reads those changes from the database’s transaction log (the MySQL binlog) and turns them into events that Kafka can store. ZooKeeper is the coordinator that keeps Kafka running smoothly by managing its operations. Together, they make changes in one system available to other systems efficiently.

This guide walks you through setting up Kafka, Zookeeper, and Debezium to capture MySQL changes and stream them to Kafka.

I’ll break it down into simple steps, include the download commands, and cover the real challenges I faced (and fixed). I am assuming you already have MySQL set up. Please note that this is for a development environment. A production setup needs proper planning for scalability.

Let’s get started!

What You’ll Need

Operating System: Linux (I used RHEL 9.4 on ARM, aarch64)

Tools: Terminal access, root privileges

Internet: To download files

Setup: MySQL on 192.168.64.6, Kafka on 192.168.64.10

Step-by-Step Process

Step 1: Set Up MySQL replication user

Debezium needs a MySQL user and binlog enabled.

Log into MySQL

- Assuming MySQL is on 192.168.64.6:

mysql -u root -p -h 192.168.64.6


Create Debezium User

Run:

CREATE USER 'debezium'@'%' IDENTIFIED BY 'xxxx!';
ALTER USER 'debezium'@'%' IDENTIFIED WITH 'mysql_native_password' BY 'xxxx!';
GRANT SELECT, RELOAD, SHOW DATABASES, REPLICATION SLAVE, REPLICATION CLIENT ON *.* TO 'debezium'@'%';
FLUSH PRIVILEGES;

Enable Binlog

Check whether binlog is already enabled on the MySQL instance.

In MySQL:

SHOW VARIABLES LIKE 'log_bin';
SHOW VARIABLES LIKE 'binlog_format';

Expect: log_bin=ON, binlog_format=ROW.

If not, edit the MySQL config file (/etc/mysql/my.cnf or /etc/my.cnf):

sudo vi /etc/mysql/my.cnf

Add under [mysqld]:

log-bin=mysql-bin
binlog_format=ROW

Giving log-bin a base name is what switches binary logging on. The log_bin variable itself is read-only and reports ON afterwards.

Restart MySQL:

systemctl restart mysqld

Verify Binlog

In MySQL:

SHOW VARIABLES LIKE 'log_bin';
SHOW VARIABLES LIKE 'binlog_format';

Expect: log_bin=ON, binlog_format=ROW.
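The same check can be scripted so it is easy to repeat after a restart. This is only a sketch: `binlog_ready` is a hypothetical helper name, and it assumes the `mysql` client is run with `-N -B` (no column names, tab-separated output) so each row arrives as a name and a value.

```shell
#!/bin/sh
# binlog_ready: hypothetical helper. Reads "name<TAB>value" rows on stdin
# (the shape produced by `mysql -N -B -e "SHOW VARIABLES ..."`) and
# succeeds only if log_bin is ON and binlog_format is ROW.
binlog_ready() {
    awk -F '\t' '
        $1 == "log_bin"       && $2 == "ON"  { lb = 1 }
        $1 == "binlog_format" && $2 == "ROW" { bf = 1 }
        END { exit !(lb && bf) }
    '
}

# Demo with canned input. Against a live server you would pipe in:
#   mysql -N -B -u root -p -h 192.168.64.6 \
#     -e "SHOW VARIABLES WHERE Variable_name IN ('log_bin','binlog_format')"
if printf 'log_bin\tON\nbinlog_format\tROW\n' | binlog_ready; then
    echo "binlog is ready for Debezium"
fi
```

A non-zero exit status means at least one of the two settings is not what Debezium expects.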

Step 2: Install Java (JDK)

Kafka and Zookeeper need Java to run, so let’s install JDK 8.

Download JDK

I downloaded the RPM package for my OS architecture, which is Linux aarch64. Select the one that matches yours.

https://www.oracle.com/ng/java/technologies/downloads/#java8

After the download, go to the download folder. Mine is:

cd /home/sandbox/Downloads; ls
jdk-8u441-linux-aarch64.rpm

Install Java

[root@localhost Downloads]# rpm -ivf jdk-8u441-linux-aarch64.rpm
warning: jdk-8u441-linux-aarch64.rpm: Header V3 RSA/SHA256 Signature, key ID ad986da3: NOKEY
Verifying packages...
Preparing packages...
jdk-1.8-2000:1.8.0_441-7.aarch64
[root@localhost Downloads]# java -version
java version "1.8.0_441"
Java(TM) SE Runtime Environment (build 1.8.0_441-b07)
Java HotSpot(TM) 64-Bit Server VM (build 25.441-b07, mixed mode)

Step 3: Download and Extract Zookeeper

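Kafka 2.6 relies on Zookeeper for coordination. The Kafka download bundles a Zookeeper server (Step 7 starts that one), but I also grabbed the standalone release.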

Download Zookeeper from Apache

Run the wget command below to download Zookeeper:

[root@localhost Downloads]# wget https://archive.apache.org/dist/zookeeper/zookeeper-3.6.2/apache-zookeeper-3.6.2.tar.gz
--2025-03-02 21:59:08--  https://archive.apache.org/dist/zookeeper/zookeeper-3.6.2/apache-zookeeper-3.6.2.tar.gz
Resolving archive.apache.org (archive.apache.org)... 65.108.204.189, 2a01:4f9:1a:a084::2
Connecting to archive.apache.org (archive.apache.org)|65.108.204.189|:443... connected.
HTTP request sent, awaiting response... 200 OK
Length: 3372391 (3.2M) [application/x-gzip]
Saving to: ‘apache-zookeeper-3.6.2.tar.gz’
apache-zookeeper-3. 100%[===================>] 3.22M 892KB/s in 3.8s
2025-03-02 21:59:15 (858 KB/s) - ‘apache-zookeeper-3.6.2.tar.gz’ saved [3372391/3372391]
[root@localhost Downloads]# ls
apache-zookeeper-3.6.2.tar.gz jdk-8u441-linux-aarch64.rpm

Extract Zookeeper

Go to the download folder:

cd /home/sandbox/Downloads

Extract:

[root@localhost Downloads]# tar -xvf apache-zookeeper-3.6.2.tar.gz

Move Zookeeper

Move it to a working directory:

[root@localhost Downloads]# mv apache-zookeeper-3.6.2 /home/zookeeper

Step 4: Download and Extract Kafka

Download Kafka

Run:

[root@localhost Downloads]# wget https://archive.apache.org/dist/kafka/2.6.3/kafka_2.12-2.6.3.tgz
--2025-03-02 22:02:26--  https://archive.apache.org/dist/kafka/2.6.3/kafka_2.12-2.6.3.tgz
Resolving archive.apache.org (archive.apache.org)... 65.108.204.189, 2a01:4f9:1a:a084::2
Connecting to archive.apache.org (archive.apache.org)|65.108.204.189|:443... connected.
HTTP request sent, awaiting response... 200 OK
Length: 65826045 (63M) [application/x-gzip]
Saving to: ‘kafka_2.12-2.6.3.tgz’
kafka_2.12-2.6.3.tg 100%[===================>] 62.78M 1.11MB/s in 61s
2025-03-02 22:03:28 (1.02 MB/s) - ‘kafka_2.12-2.6.3.tgz’ saved [65826045/65826045]

Extract Kafka

Go to the download folder:

cd /home/sandbox/Downloads

Extract:

tar -xvf kafka_2.12-2.6.3.tgz

Move Kafka

- Move it to a working directory:

mv kafka_2.12-2.6.3 /home/kafka


Step 5: Setup Debezium for MySQL connector

Download Debezium version 1.8 from maven.org

[root@localhost kafka]# wget https://repo1.maven.org/maven2/io/debezium/debezium-connector-mysql/1.8.0.Final/debezium-connector-mysql-1.8.0.Final-plugin.tar.gz
--2025-03-02 22:05:48--  https://repo1.maven.org/maven2/io/debezium/debezium-connector-mysql/1.8.0.Final/debezium-connector-mysql-1.8.0.Final-plugin.tar.gz
Resolving repo1.maven.org (repo1.maven.org)... 199.232.52.209, 2a04:4e42:4a::209
Connecting to repo1.maven.org (repo1.maven.org)|199.232.52.209|:443... connected.
HTTP request sent, awaiting response... 200 OK
Length: 9187406 (8.8M) [application/x-gzip]
Saving to: ‘debezium-connector-mysql-1.8.0.Final-plugin.tar.gz’
debezium-connector- 100%[===================>] 8.76M 2.04MB/s in 5.2s
2025-03-02 22:05:56 (1.68 MB/s) - ‘debezium-connector-mysql-1.8.0.Final-plugin.tar.gz’ saved [9187406/9187406]

Extract Debezium

Extract:

[root@localhost kafka]# tar -xvzf debezium-connector-mysql-1.8.0.Final-plugin.tar.gz

Move Debezium

- Move the extracted directory to a working directory (the archive should unpack to debezium-connector-mysql; adjust the name if yours differs):

mv debezium-connector-mysql /home/kafka/plugins
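Kafka Connect loads connectors from the directories listed under plugin.path in the worker config (here /home/kafka/config/connect-standalone.properties, the file passed to connect-standalone.sh in Step 10). The steps in this guide do not show that setting, so treat the following as a sketch under the assumption that it still needs to point at /home/kafka/plugins. `set_plugin_path` is a hypothetical helper name.

```shell
#!/bin/sh
# set_plugin_path FILE DIR: hypothetical helper that makes sure FILE ends
# up with exactly one active "plugin.path=DIR" line. Any existing
# plugin.path line (commented out or not) is dropped first.
set_plugin_path() {
    file=$1
    dir=$2
    tmp="$file.tmp.$$"
    # grep -v exits non-zero when nothing is left over, hence "|| true".
    grep -v -E '^[[:space:]]*#?[[:space:]]*plugin\.path=' "$file" > "$tmp" || true
    printf 'plugin.path=%s\n' "$dir" >> "$tmp"
    # Copy the content back instead of mv so the file keeps its permissions.
    cat "$tmp" > "$file"
    rm -f "$tmp"
}

# Demo on a scratch file so nothing real is modified.
demo=$(mktemp)
printf 'bootstrap.servers=localhost:9092\n#plugin.path=\n' > "$demo"
set_plugin_path "$demo" /home/kafka/plugins
cat "$demo"
rm -f "$demo"
```

On the real system the call would be `set_plugin_path /home/kafka/config/connect-standalone.properties /home/kafka/plugins`, ideally after taking a backup of the file.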


Step 6: Configure Debezium

Debezium connects MySQL to Kafka.

Create Config File

Command:

nano /home/kafka/config/connect-debezium-mysql.properties

Add:

name=mysql-connector-02
connector.class=io.debezium.connector.mysql.MySqlConnector
tasks.max=1
database.hostname=192.168.64.6
database.port=3306
database.user=debezium
database.password=xxxx
database.server.id=223344
database.history.kafka.topic=msql.history
database.server.name=mysql-connector-02
database.include.list=classicmodels
database.history.kafka.bootstrap.servers=192.168.64.10:9092
database.jdbc.url=jdbc:mysql://192.168.64.6:3306/classicmodels?useSSL=false

Use the password you set for the debezium user in Step 1. Save and exit (Ctrl+O, then Ctrl+X in nano).
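A typo in a key name only shows up once Kafka Connect starts, so a quick pre-flight check can save a round trip. The sketch below confirms that chosen keys are present with a non-empty value. `missing_keys` is a hypothetical helper, and the keys passed to it are simply the ones used in this guide, not an exhaustive list of what Debezium requires.

```shell
#!/bin/sh
# missing_keys FILE KEY...: hypothetical helper. Prints every KEY that has
# no "KEY=value" line (with a non-empty value) in FILE and returns
# non-zero if any key is missing.
missing_keys() {
    file=$1
    shift
    status=0
    for key in "$@"; do
        if ! awk -F '=' -v k="$key" \
            '$1 == k && $2 != "" { found = 1 } END { exit !found }' "$file"
        then
            echo "missing: $key"
            status=1
        fi
    done
    return $status
}

# Demo against a deliberately incomplete scratch file.
demo=$(mktemp)
printf 'name=mysql-connector-02\ndatabase.hostname=192.168.64.6\n' > "$demo"
missing_keys "$demo" name database.hostname database.server.name \
    database.history.kafka.topic || echo "fix the file before starting Connect"
rm -f "$demo"
```

For the real file, pass /home/kafka/config/connect-debezium-mysql.properties as the first argument.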

Step 7: Start Zookeeper

Zookeeper manages Kafka’s coordination.

Navigate to Kafka Directory

- Run:

cd /home/kafka


Start Zookeeper

Command:

./bin/zookeeper-server-start.sh /home/kafka/config/zookeeper.properties &

This runs Zookeeper in the background.

Challenge: JVM Option Error

Error Faced:

Error: VM option 'UseG1GC' is experimental and must be enabled via -XX:+UnlockExperimentalVMOptions.
Error: Could not create the Java Virtual Machine.

Why: The JDK 8 build in use treats UseG1GC as an experimental option, so the JVM refuses to start until experimental options are unlocked.

Fix: Edit the kafka-run-class script:

vi /home/kafka/bin/kafka-run-class.sh

Find the line that sets -XX:+UseG1GC (e.g., JVMFLAGS="-Xmx512M -XX:+UseG1GC"; in a stock Kafka script the flag normally sits in the KAFKA_JVM_PERFORMANCE_OPTS default).

Either:

Remove -XX:+UseG1GC, leaving JVMFLAGS="-Xmx512M".

Or add -XX:+UnlockExperimentalVMOptions before it: JVMFLAGS="-Xmx512M -XX:+UnlockExperimentalVMOptions -XX:+UseG1GC".

Save and start:

./bin/zookeeper-server-start.sh /home/kafka/config/zookeeper.properties &
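The second option (adding the unlock flag) can also be applied without opening an editor. This is a minimal sketch, assuming the flag appears literally as -XX:+UseG1GC in the script. `unlock_g1` is a hypothetical helper name, and a backup of the script is a good idea before running it on the real file.

```shell
#!/bin/sh
# unlock_g1 FILE: hypothetical helper. Inserts
# -XX:+UnlockExperimentalVMOptions in front of every -XX:+UseG1GC in FILE,
# unless the file already mentions the unlock flag.
unlock_g1() {
    file=$1
    if grep -q -e '-XX:+UnlockExperimentalVMOptions' "$file"; then
        return 0    # already patched, nothing to do
    fi
    tmp="$file.tmp.$$"
    # Write to a temp file, then copy the content back so the script
    # keeps its executable bit.
    sed 's/-XX:+UseG1GC/-XX:+UnlockExperimentalVMOptions -XX:+UseG1GC/g' \
        "$file" > "$tmp" && cat "$tmp" > "$file"
    rm -f "$tmp"
}

# Demo on a scratch file that mimics the line described above.
demo=$(mktemp)
echo 'JVMFLAGS="-Xmx512M -XX:+UseG1GC"' > "$demo"
unlock_g1 "$demo"
cat "$demo"
rm -f "$demo"
```

Running it twice is harmless, because the helper returns early once the unlock flag is present.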

Verify

- Check the logs (in the terminal or under /home/kafka/logs/) for startup errors.

Step 8: Start Kafka Broker

Kafka handles message streaming.

Start Kafka

- Command:

./bin/kafka-server-start.sh /home/kafka/config/server.properties &
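Because the broker is started in the background with &, the next command can run before Kafka is actually listening. A small retry loop is one way to wait for it. This is a sketch: `retry` is a hypothetical helper, and the probe shown in the comment reuses the kafka-topics.sh list command from this guide.

```shell
#!/bin/sh
# retry ATTEMPTS DELAY CMD...: hypothetical helper. Runs CMD until it
# succeeds, sleeping DELAY seconds between tries, at most ATTEMPTS times.
# Returns 0 on the first success and 1 if every attempt failed.
retry() {
    attempts=$1
    delay=$2
    shift 2
    n=1
    while [ "$n" -le "$attempts" ]; do
        if "$@"; then
            return 0
        fi
        n=$((n + 1))
        # Only sleep if another attempt is coming.
        [ "$n" -le "$attempts" ] && sleep "$delay"
    done
    return 1
}

# Against a live broker the probe could be:
#   retry 10 3 ./bin/kafka-topics.sh --list --bootstrap-server 192.168.64.10:9092
# Here a trivial command stands in for the probe.
retry 3 0 true && echo "probe succeeded"
```

The attempt count and delay are arbitrary starting points. Tune them to how long the broker takes to come up on your machine.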


Step 9: Create Kafka Topic

Debezium needs a history topic.

Create Topic

- Run:

./bin/kafka-topics.sh --create --bootstrap-server 192.168.64.10:9092 --replication-factor 1 --partitions 1 --topic msql.history


Verify

Check:

./bin/kafka-topics.sh --list --bootstrap-server 192.168.64.10:9092

Output: msql.history.

Step 10: Start Kafka Connect with Debezium

This ties everything together.

Run Kafka Connect

- Command:

./bin/connect-standalone.sh /home/kafka/config/connect-standalone.properties /home/kafka/config/connect-debezium-mysql.properties


Step 11: Verify Replication

Ensure data flows from MySQL to Kafka.

Check Topics

Run:

./bin/kafka-topics.sh --list --bootstrap-server 192.168.64.10:9092

Expect: msql.history, mysql-connector-02.classicmodels.<table> (e.g., mysql-connector-02.classicmodels.mytab).
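The expected topic names follow the pattern <database.server.name>.<database>.<table>, which is how the Debezium MySQL connector names a table's change topic in this version. The sketch below builds that name and checks for it in a topic list. Both `topic_for` and `has_topic` are hypothetical helper names.

```shell
#!/bin/sh
# topic_for SERVER DB TABLE: hypothetical helper that builds the change
# topic name for a table: <database.server.name>.<database>.<table>
topic_for() {
    printf '%s.%s.%s\n' "$1" "$2" "$3"
}

# has_topic NAME: hypothetical helper. Reads a topic list on stdin (one
# topic per line) and succeeds if NAME is in it as an exact, whole line.
has_topic() {
    grep -q -x -F -- "$1"
}

topic_for mysql-connector-02 classicmodels mytab
# prints: mysql-connector-02.classicmodels.mytab

# Against a live broker the list would come from kafka-topics.sh:
#   ./bin/kafka-topics.sh --list --bootstrap-server 192.168.64.10:9092 \
#     | has_topic "$(topic_for mysql-connector-02 classicmodels mytab)"
```

The exact-match flags matter here, since a plain grep would treat the dots in the topic name as wildcards.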

Consume Table Data

Test a table (e.g., mytab):

./bin/kafka-console-consumer.sh --topic mysql-connector-02.classicmodels.mytab --bootstrap-server 192.168.64.10:9092 --from-beginning

Insert data in MySQL:

INSERT INTO classicmodels.mytab VALUES ('test');

See JSON events in the terminal.

Check Schema Changes

Consume history topic:

./bin/kafka-console-consumer.sh --topic msql.history --bootstrap-server 192.168.64.10:9092 --from-beginning

Expect DDL like CREATE TABLE.

Challenges Faced and Resolutions

Challenge 1: Public Key Retrieval Not Allowed

Error: java.sql.SQLNonTransientConnectionException: Public Key Retrieval is not allowed.

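Why: MySQL 8 authenticates new users with caching_sha2_password by default. Over a non-SSL connection that plugin needs the client to fetch the server’s public key, which the JDBC driver refuses to do unless told otherwise.

Fix: Used mysql_native_password (already set when creating the user in Step 1). If you have already created the user, you can switch it with:

ALTER USER 'debezium'@'%' IDENTIFIED WITH 'mysql_native_password' BY 'xxxx!';

Challenge 2: Unrecognized Time Zone 'WAT'

Error: The server time zone value 'WAT' is unrecognized.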

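Why: MySQL reported its time zone as the abbreviation WAT, which the JDBC driver could not map to a known zone.

Fix: Set the time zone in MySQL:

SET GLOBAL time_zone = 'Africa/Lagos';
FLUSH PRIVILEGES;

mysql> select @@global.time_zone,@@session.time_zone;
+--------------------+---------------------+
| @@global.time_zone | @@session.time_zone |
+--------------------+---------------------+
| Africa/Lagos       | Africa/Lagos        |
+--------------------+---------------------+
1 row in set (0.01 sec)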

Challenge 3: Kafka Host Mismatch

Error: No history topic was available, because of a wrong Kafka IP.

Why: I used the MySQL server’s IP as the Kafka IP. The database.history.kafka.bootstrap.servers parameter was set to 192.168.64.6:9092 in the connect-debezium-mysql.properties file.

Fix: Updated it to 192.168.64.10:9092. 192.168.64.10 is the Kafka server, while 192.168.64.6 is the MySQL server.
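This mix-up is easy to catch before starting Kafka Connect. The sketch below assumes, as in this guide, that MySQL and Kafka run on different hosts, and it only looks at the first server in the bootstrap list. `prop` and `history_host_ok` are hypothetical helper names.

```shell
#!/bin/sh
# prop FILE KEY: print the value of KEY from a Java-style properties file
# (first match only, everything after the first "=").
prop() {
    awk -F '=' -v k="$2" '$1 == k { sub(/^[^=]*=/, ""); print; exit }' "$1"
}

# history_host_ok FILE: hypothetical check. Fails when the host part of
# database.history.kafka.bootstrap.servers is empty or equal to
# database.hostname, which is the mix-up described in Challenge 3.
# Assumption: MySQL and Kafka live on different hosts.
history_host_ok() {
    db_host=$(prop "$1" database.hostname)
    kafka_host=$(prop "$1" database.history.kafka.bootstrap.servers)
    kafka_host=${kafka_host%%:*}    # strip ":port" and any further servers
    [ -n "$kafka_host" ] && [ "$kafka_host" != "$db_host" ]
}

# Demo with the faulty values from Challenge 3.
demo=$(mktemp)
printf 'database.hostname=192.168.64.6\n' > "$demo"
printf 'database.history.kafka.bootstrap.servers=192.168.64.6:9092\n' >> "$demo"
history_host_ok "$demo" || echo "history bootstrap server points at the MySQL host"
rm -f "$demo"
```

If Kafka and MySQL share a host in your setup, this check does not apply.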

I hope this breakdown of the steps has been helpful. Next, I will use another MySQL server as a secondary database to consume the changes published to Kafka. This will complete the CDC (Change Data Capture) series.

