The Pulse of the Cloud: Unleashing Event-Driven Power ⚡☁️

Koushal Akash RM

Introduction to Event-Driven Architecture in the Cloud

In the fast-paced digital era, applications need to be responsive, scalable, and real-time to meet the demands of modern users. This is where Event-Driven Architecture (EDA) becomes the backbone of many cloud-native applications, enabling them to function seamlessly and efficiently.

At its core, Event-Driven Architecture revolves around the concept of events—discrete occurrences such as a user clicking a button, a payment being processed, or a sensor detecting a temperature change. Instead of relying on traditional request-response interactions, EDA allows applications to react asynchronously to these events in a decoupled manner.
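To make the decoupling idea concrete, here is a minimal sketch of a publish/subscribe bus in plain Python. The `EventBus` class and the `file.uploaded` event name are made up for illustration, and delivery here is synchronous and in-process, whereas cloud event routers deliver asynchronously across services.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List

Handler = Callable[[Dict[str, Any]], None]


class EventBus:
    """Tiny in-process stand-in for a cloud event router (illustrative only)."""

    def __init__(self) -> None:
        self._handlers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._handlers[event_type].append(handler)

    def publish(self, event_type: str, payload: Dict[str, Any]) -> int:
        """Deliver the payload to every subscriber and return how many ran."""
        handlers = self._handlers.get(event_type, [])
        for handler in handlers:
            handler(payload)
        return len(handlers)


bus = EventBus()
audit_log: List[str] = []

# Two independent consumers react to the same event without knowing each other.
bus.subscribe("file.uploaded", lambda e: audit_log.append("indexed " + e["key"]))
bus.subscribe("file.uploaded", lambda e: audit_log.append("notified " + e["key"]))

delivered = bus.publish("file.uploaded", {"key": "invoice.pdf"})
```

The publisher never calls the consumers directly; it only announces that something happened, which is what lets producers and consumers evolve separately.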

Why Event-Driven Architecture in the Cloud?

Cloud platforms like AWS, Azure, and Google Cloud provide a robust ecosystem for implementing EDA using services such as AWS Lambda, Amazon S3 Event Notifications, Amazon EventBridge, Azure Event Grid, and Google Cloud Pub/Sub, alongside managed offerings of Apache Kafka. By leveraging these services, applications can:

✅ Seamlessly handle high volumes of events without overloading the system.

✅ Scale dynamically based on workload demands.

✅ Reduce latency by enabling real-time processing.

✅ Enhance resilience with loosely coupled microservices.

✅ Improve cost efficiency by eliminating unnecessary compute resources.

Powering Modern Applications

From financial transactions and e-commerce order processing to IoT automation and DevOps workflows, Event-Driven Architecture is the foundation that makes many modern applications feel instantaneous. Whether it's an online retailer processing millions of customer orders without delays, a ride-sharing app dynamically updating driver locations in real time, or an AI-driven chatbot responding to user queries instantly, EDA ensures that these complex operations happen effortlessly.

By embracing Event-Driven Architecture in the Cloud, organizations can build highly responsive, scalable, and efficient systems that cater to today's digital-first world. 🚀

Automating Document Organization with Event-Driven Magic: AWS Lambda + S3⚡✨

Imagine a fast-growing firm where hundreds of documents are uploaded daily into an Amazon S3 bucket—contracts, invoices, reports, you name it! But here’s the challenge: keeping them organized by upload date without any manual effort.

Manually sorting these files into date-based folders? Tedious and time-consuming. Wouldn't it be great if the system could magically organize everything the moment a document is uploaded?

Enter Event-Driven Architecture, where Amazon S3 and AWS Lambda work together like clockwork. When a file lands in the bucket, S3 emits an event, and AWS Lambda moves the file into the appropriate date-based folder. No manual sorting, no waiting for someone to tidy up, just automation!

With this setup, the firm achieves effortless document management, improved organization, and a scalable solution that adapts as their business grows. This is the power of Event-Driven Architecture in the cloud—making things happen instantly, without anyone lifting a finger! 🚀

Want to see how this works under the hood? Let’s dive in! ⬇️

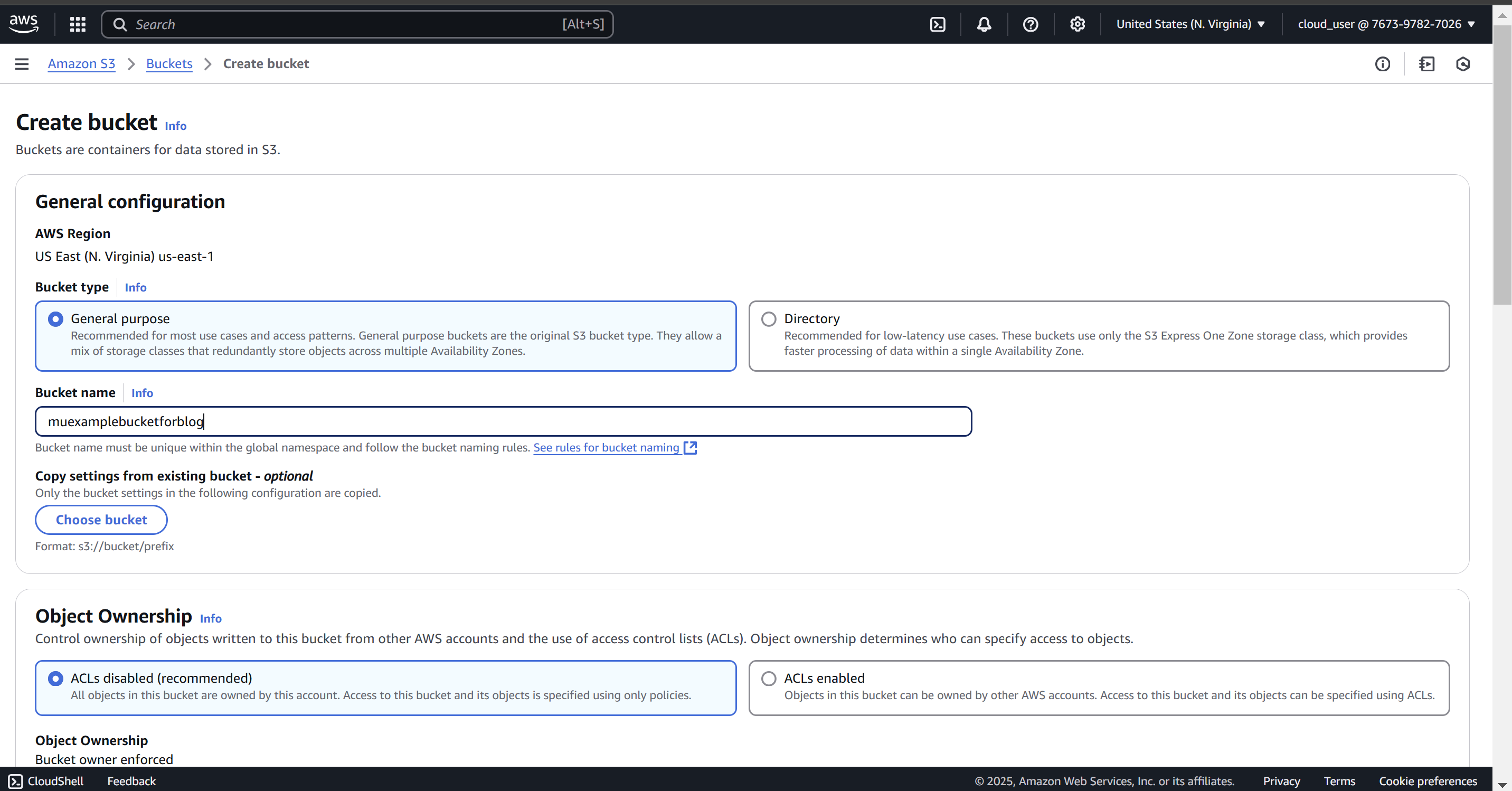

Step 1 : Create an S3 bucket

This bucket is where the documents will be uploaded.
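The screenshots use the console, but the same step can be scripted. Below is a minimal sketch, assuming a Boto3 S3 client is passed in; the helper name `create_bucket` and its defaults are my own choices, not something from the console walkthrough.

```python
def create_bucket(s3_client, bucket_name, region="us-east-1"):
    """Create an S3 bucket; `s3_client` is a boto3 S3 client (or a test double)."""
    params = {"Bucket": bucket_name}
    # us-east-1 is the default location and must not be sent as a
    # LocationConstraint; every other region has to be spelled out.
    if region != "us-east-1":
        params["CreateBucketConfiguration"] = {"LocationConstraint": region}
    return s3_client.create_bucket(**params)


# Usage (needs AWS credentials, so it is left as a comment):
#   import boto3
#   create_bucket(boto3.client("s3", region_name="us-east-1"), "muexamplebucketforblog")
```

Bucket names are globally unique, so the call fails if someone else already owns the name.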

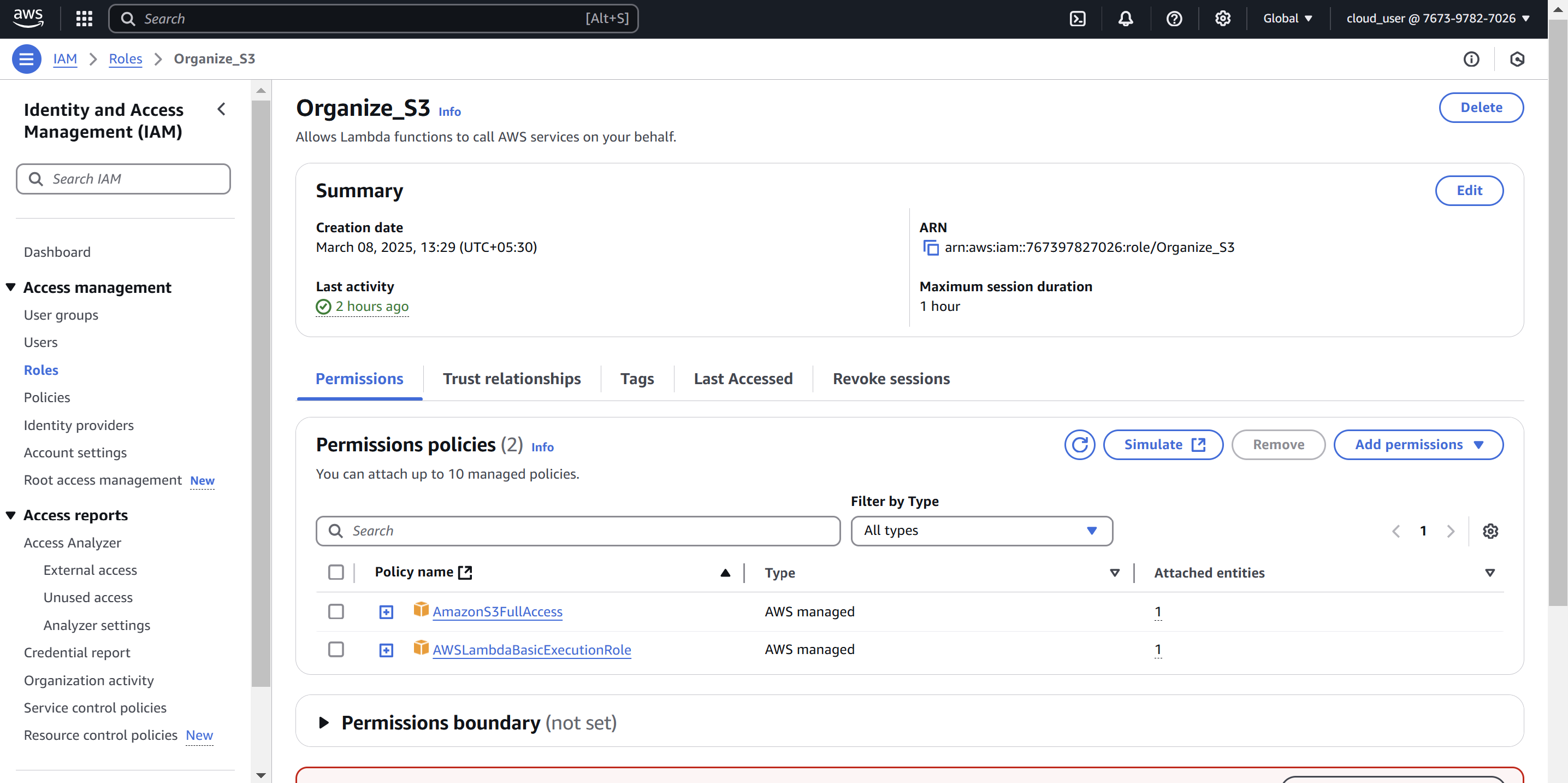

Step 2 : Create an IAM role for your Lambda

Here I have attached two policies. One is AmazonS3FullAccess, which gives full access to Amazon S3 buckets; the other gives Lambda the basic permissions it needs to execute.
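AmazonS3FullAccess is convenient for a demo but grants far more than this automation uses. As a hedged alternative, the sketch below builds a narrower policy document covering only the calls made later in this post. The helper name `build_s3_policy` is hypothetical, and the action list is my reading of what the code needs, so verify it against your own setup (object tags or encryption may require extra actions).

```python
import json


def build_s3_policy(bucket_name):
    """Return an IAM policy document scoped to a single bucket."""
    bucket_arn = "arn:aws:s3:::" + bucket_name
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Needed by list_objects_v2
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": bucket_arn,
            },
            {
                # Needed by put_object, copy_object and delete_object
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"],
                "Resource": bucket_arn + "/*",
            },
        ],
    }


policy_json = json.dumps(build_s3_policy("muexamplebucketforblog"), indent=2)
```

The resulting JSON can be pasted into the IAM console as an inline policy on the Lambda role.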

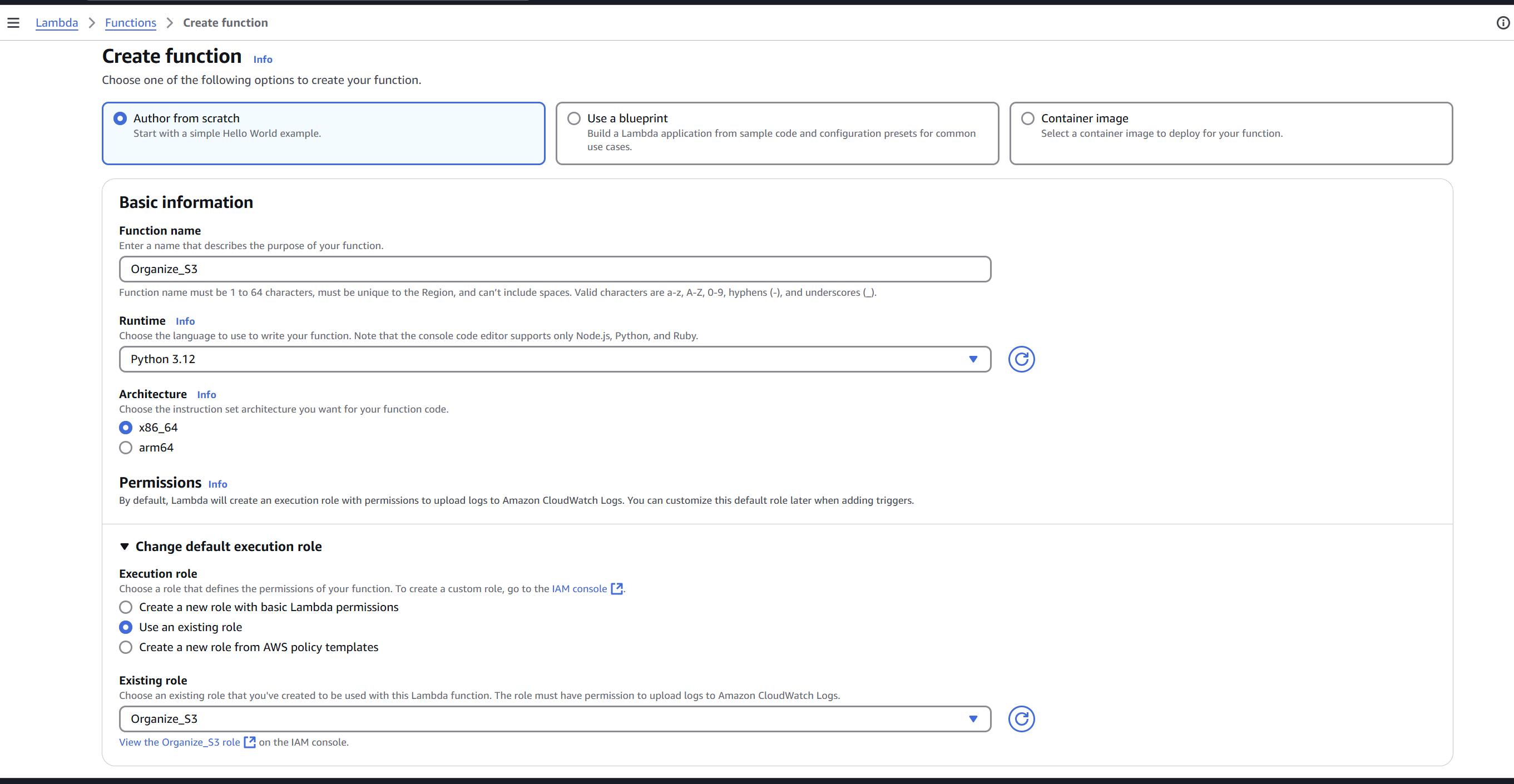

Step 3 : Create your Lambda Function

Before creating the Lambda function, here is a short overview of AWS Lambda.

AWS Lambda is a serverless compute service that allows you to run code in response to events without provisioning or managing servers. One of its biggest advantages is its support for multiple programming languages, giving developers flexibility in choosing the right tool for the job. Some of the popular languages supported by AWS Lambda include:

✅ Python – Great for automation, scripting, and quick execution times.

✅ Node.js – Ideal for event-driven applications and serverless APIs.

✅ Java – A solid choice for enterprise applications and large-scale workloads.

✅ Go – Known for its efficiency and performance.

✅ C# (.NET) – Preferred by developers working in the Microsoft ecosystem.

✅ Ruby – Useful for rapid development and scripting.

✅ Custom Runtimes – AWS also allows you to bring your own runtime, such as Rust or PHP.

Why Python for This Automation?

For our S3 document organization automation, I’ll be using Python, mainly because:

It's lightweight and executes quickly in AWS Lambda.

It has a rich ecosystem of AWS SDKs, making integrations seamless.

It’s widely used for automation and cloud scripting.

Overview of Boto3 – The AWS SDK for Python

To interact with AWS services, we’ll use Boto3, the official AWS SDK for Python. It allows Python applications to:

✅ Upload and manage objects in S3

✅ Invoke Lambda functions and configure S3 event notifications

✅ Modify and organize files programmatically

✅ Automate infrastructure tasks like creating S3 buckets

In our automation, Boto3 will be the key tool for moving uploaded documents into date-based folders within an S3 bucket. It provides an easy-to-use interface to access AWS services, making our Lambda function efficient and powerful.

Ready to see how we put this into action? Let's dive into the implementation! 🚀

Breaking Down the Automation: Organizing S3 Files with AWS Lambda & Boto3

This AWS Lambda function organizes uploaded documents into date-based folders within an Amazon S3 bucket. Let’s break it down into key segments and understand how each part works.

1️⃣ Setting Up the Environment

    import boto3
    from datetime import datetime

    today = datetime.today()
    today_date = today.strftime("%Y%m%d")
    bucket_name = "muexamplebucketforblog"
    dir_name = today_date + "/"
    S3Client = boto3.client('s3', region_name="us-east-1")

🔹 What’s happening here?

We import Boto3 (AWS SDK for Python) and datetime.

We get the current date in YYYYMMDD format to create a folder name.

Define the S3 bucket name where files are stored.

Create an S3 client using Boto3 to interact with AWS S3.
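One detail worth a small sketch: Boto3 returns each object's LastModified as a timezone-aware UTC datetime, while datetime.today() is naive local time. Inside Lambda the default timezone is UTC, so the two line up, but on a laptop they may not. The helper below (the name `date_prefix` is my own) pins the folder name to UTC explicitly.

```python
from datetime import datetime, timedelta, timezone


def date_prefix(moment=None):
    """Return a folder-style prefix such as '20240105/' for the given moment in UTC."""
    if moment is None:
        moment = datetime.now(timezone.utc)
    return moment.astimezone(timezone.utc).strftime("%Y%m%d") + "/"


# The same instant expressed in two time zones maps to the same folder.
late_evening_utc = datetime(2024, 1, 5, 23, 30, tzinfo=timezone.utc)
same_instant_plus2 = late_evening_utc.astimezone(timezone(timedelta(hours=2)))

prefix_a = date_prefix(late_evening_utc)
prefix_b = date_prefix(same_instant_plus2)
```

Using one helper for both "today" and each object's timestamp keeps the comparison consistent wherever the script runs.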

2️⃣ Fetching the List of Objects in the S3 Bucket

    list_object_response = S3Client.list_objects_v2(Bucket=bucket_name)
    # "Contents" is missing when the bucket is empty, so default to an empty list
    get_contents = list_object_response.get("Contents", [])
    all_object_list = []
    for content in get_contents:
        all_object_list.append(content["Key"])

🔹 What’s happening here?

We use list_objects_v2() to fetch the objects in the S3 bucket (a single call returns at most 1,000 keys).

Extract the file names (keys) from the response.

Store them in a list (all_object_list) for easy checking.
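Since a single list_objects_v2() call is capped at 1,000 keys, a busy bucket needs pagination. Here is a minimal sketch using Boto3's built-in paginator; the helper name `list_all_keys` is an assumption for illustration.

```python
def list_all_keys(s3_client, bucket_name):
    """Return every object key in the bucket, following pagination."""
    keys = []
    paginator = s3_client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket_name):
        # "Contents" is absent from a page when nothing matches.
        for obj in page.get("Contents", []):
            keys.append(obj["Key"])
    return keys


# Usage (needs AWS credentials, so it is left as a comment):
#   import boto3
#   keys = list_all_keys(boto3.client("s3"), "muexamplebucketforblog")
```

For a bucket with only a handful of files the single call in the post behaves the same; the paginator only matters as the bucket grows.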

3️⃣ Creating the Date-Based Folder (If Not Exists)

    if dir_name not in all_object_list:
        S3Client.put_object(Bucket=bucket_name, Key=dir_name)

🔹 What’s happening here?

Before moving files, we check if a folder for today’s date exists in S3.

If not, we create it using put_object().

In S3, folders don’t technically exist, but adding an object with a / at the end simulates a directory.
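Because folders are just key prefixes, checking for one does not require listing the whole bucket. The sketch below asks S3 for at most one key under the prefix; `prefix_has_objects` is a hypothetical helper name. Note that the marker object is optional: copying a file to a key like 20240105/report.pdf makes the "folder" show up in the console on its own.

```python
def prefix_has_objects(s3_client, bucket_name, prefix):
    """Return True if at least one key starts with `prefix`."""
    response = s3_client.list_objects_v2(
        Bucket=bucket_name,
        Prefix=prefix,
        MaxKeys=1,  # one match is enough to answer the question
    )
    return response.get("KeyCount", 0) > 0


# Usage (needs AWS credentials, so it is left as a comment):
#   import boto3
#   prefix_has_objects(boto3.client("s3"), "muexamplebucketforblog", "20240105/")
```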

4️⃣ Moving Eligible Files to the Date Folder

    for item in get_contents:
        object_creation_date = item.get("LastModified").strftime("%Y%m%d") + "/"
        object_name = item.get("Key")
        if object_creation_date == dir_name and "/" not in object_name:
            S3Client.copy_object(Bucket=bucket_name,
                                 CopySource=bucket_name + "/" + object_name,
                                 Key=dir_name + object_name)
            S3Client.delete_object(Bucket=bucket_name, Key=object_name)

🔹 What’s happening here?

We loop through each file and check its LastModified date.

If the file matches today’s date and is not already inside a folder, we move it.

Moving in S3 means:

Copy the file to the correct folder using copy_object().

Delete the original file using delete_object().
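The copy-then-delete pair can be wrapped in one small function. This is a sketch under my own naming (`move_object`); it uses the dictionary form of CopySource, which Boto3 accepts as an alternative to the "bucket/key" string and which avoids hand-building that string.

```python
def move_object(s3_client, bucket_name, source_key, dest_key):
    """Move an object within a bucket by copying it, then deleting the original."""
    s3_client.copy_object(
        Bucket=bucket_name,
        CopySource={"Bucket": bucket_name, "Key": source_key},
        Key=dest_key,
    )
    # Only reached if the copy did not raise, so a failed copy never loses data.
    s3_client.delete_object(Bucket=bucket_name, Key=source_key)


# Usage (needs AWS credentials, so it is left as a comment):
#   import boto3
#   move_object(boto3.client("s3"), "muexamplebucketforblog",
#               "report.pdf", "20240105/report.pdf")
```

Keep in mind that copy_object handles objects up to 5 GB in a single call; larger files need a multipart copy.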

The Complete Code Logic🪄

    import boto3
    from datetime import datetime

    bucket_name = "muexamplebucketforblog"
    S3Client = boto3.client('s3', region_name="us-east-1")

    # Lambda calls this handler on each invocation (lambda_handler is the console's default name)
    def lambda_handler(event, context):
        # Computed per invocation so the date stays current on warm starts
        today = datetime.today()
        today_date = today.strftime("%Y%m%d")
        dir_name = today_date + "/"

        list_object_response = S3Client.list_objects_v2(Bucket=bucket_name)
        # "Contents" is missing when the bucket is empty, so default to an empty list
        get_contents = list_object_response.get("Contents", [])
        all_object_list = []
        for content in get_contents:
            all_object_list.append(content["Key"])

        # Check whether the bucket already has today's directory
        if dir_name not in all_object_list:
            S3Client.put_object(Bucket=bucket_name, Key=dir_name)

        # Move every root-level object uploaded today into today's folder
        for item in get_contents:
            object_creation_date = item.get("LastModified").strftime("%Y%m%d") + "/"
            object_name = item.get("Key")
            if object_creation_date == dir_name and "/" not in object_name:
                S3Client.copy_object(Bucket=bucket_name, CopySource=bucket_name + "/" + object_name, Key=dir_name + object_name)
                S3Client.delete_object(Bucket=bucket_name, Key=object_name)
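The code above rescans the bucket on every run. When the function is wired to an S3 upload trigger, the event payload already names the uploaded object, so a variant can act on just that key. The sketch below is one possible shape, not the code used in this post: the helper names are mine, and it assumes the standard S3 notification layout (Records, s3.bucket.name, s3.object.key, eventTime), where keys arrive URL-encoded.

```python
from urllib.parse import unquote_plus


def uploaded_objects(event):
    """Yield (bucket, key, event_time) for each record in an S3 notification."""
    for record in event.get("Records", []):
        s3_info = record["s3"]
        key = unquote_plus(s3_info["object"]["key"])  # keys arrive URL-encoded
        yield s3_info["bucket"]["name"], key, record["eventTime"]


def destination_key(key, event_time):
    """Map ('report.pdf', '2024-01-05T10:15:00.000Z') to '20240105/report.pdf'.

    Returns None for keys that already sit inside a folder, which also stops
    the function from reacting to its own copies.
    """
    if "/" in key:
        return None
    return event_time[:10].replace("-", "") + "/" + key


def handle(event, s3_client):
    """Move each freshly uploaded root-level object into its date folder."""
    moved = []
    for bucket, key, event_time in uploaded_objects(event):
        dest = destination_key(key, event_time)
        if dest is None:
            continue
        s3_client.copy_object(
            Bucket=bucket, CopySource={"Bucket": bucket, "Key": key}, Key=dest
        )
        s3_client.delete_object(Bucket=bucket, Key=key)
        moved.append(dest)
    return moved


def lambda_handler(event, context):
    # Imported here so the helpers above can be tested without boto3 installed;
    # in a real function the client is usually created once at module level.
    import boto3
    return {"moved": handle(event, boto3.client("s3"))}
```

The "/" check matters because the copy itself creates a new object in the same bucket, which fires the trigger again; skipping keys that already contain a slash makes that second invocation a no-op.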

📌 Flow Breakdown

1️⃣ Lambda Triggered → The function runs, either manually or as part of an event-driven workflow.

2️⃣ Fetch Object List → Retrieves all files from the S3 bucket.

3️⃣ Check If Today’s Folder Exists → If not, create a folder named with today’s date.

4️⃣ Loop Through Each File in the Bucket

Extract the file's LastModified date.

If the file belongs to today’s date and is not in a folder, move it.

5️⃣ Move File to Date-Based Folder

Copy the file to the correct folder.

Delete the original file to avoid duplicates.

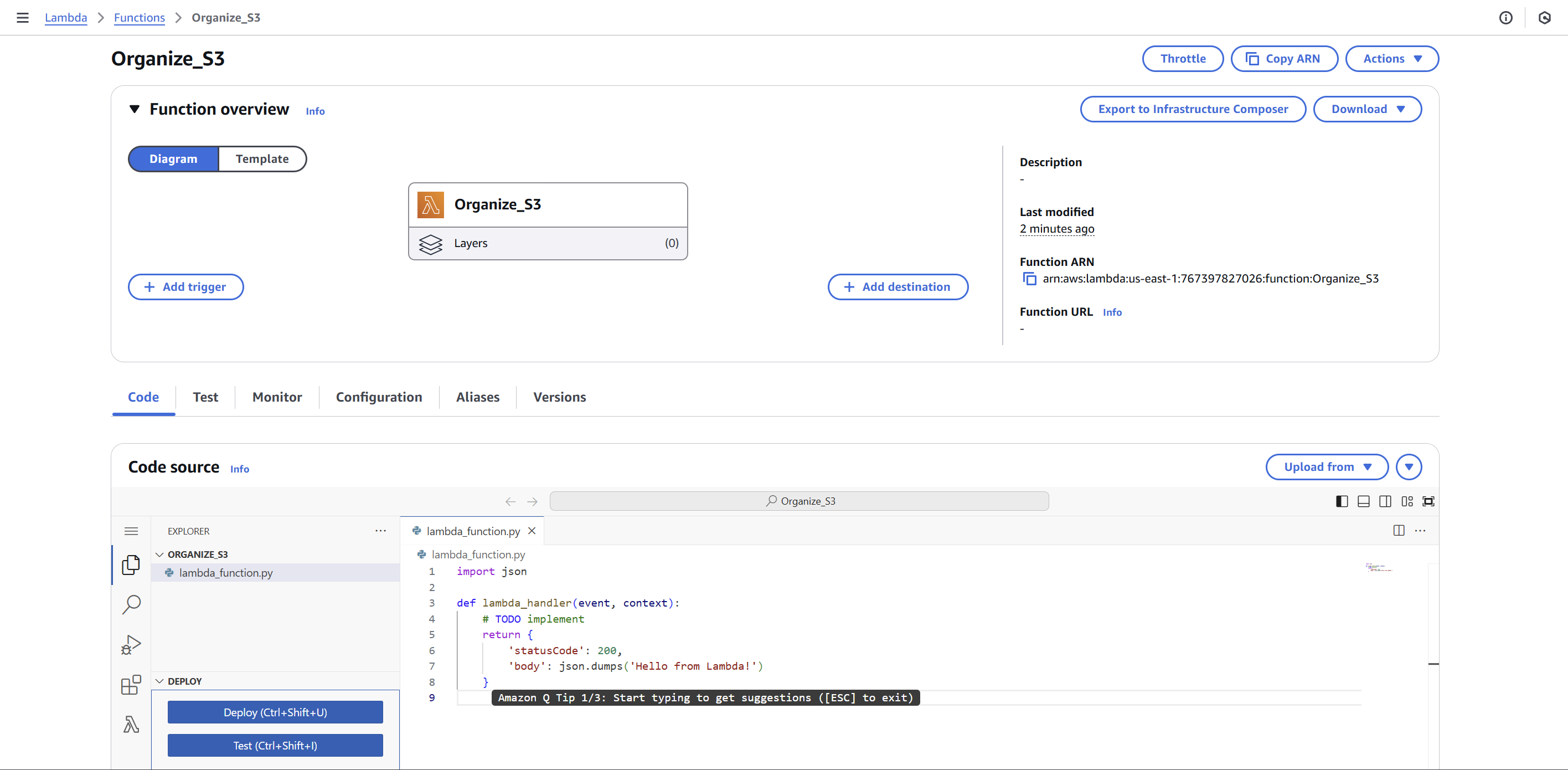

Go to your AWS console, select the Lambda service, and create your function.

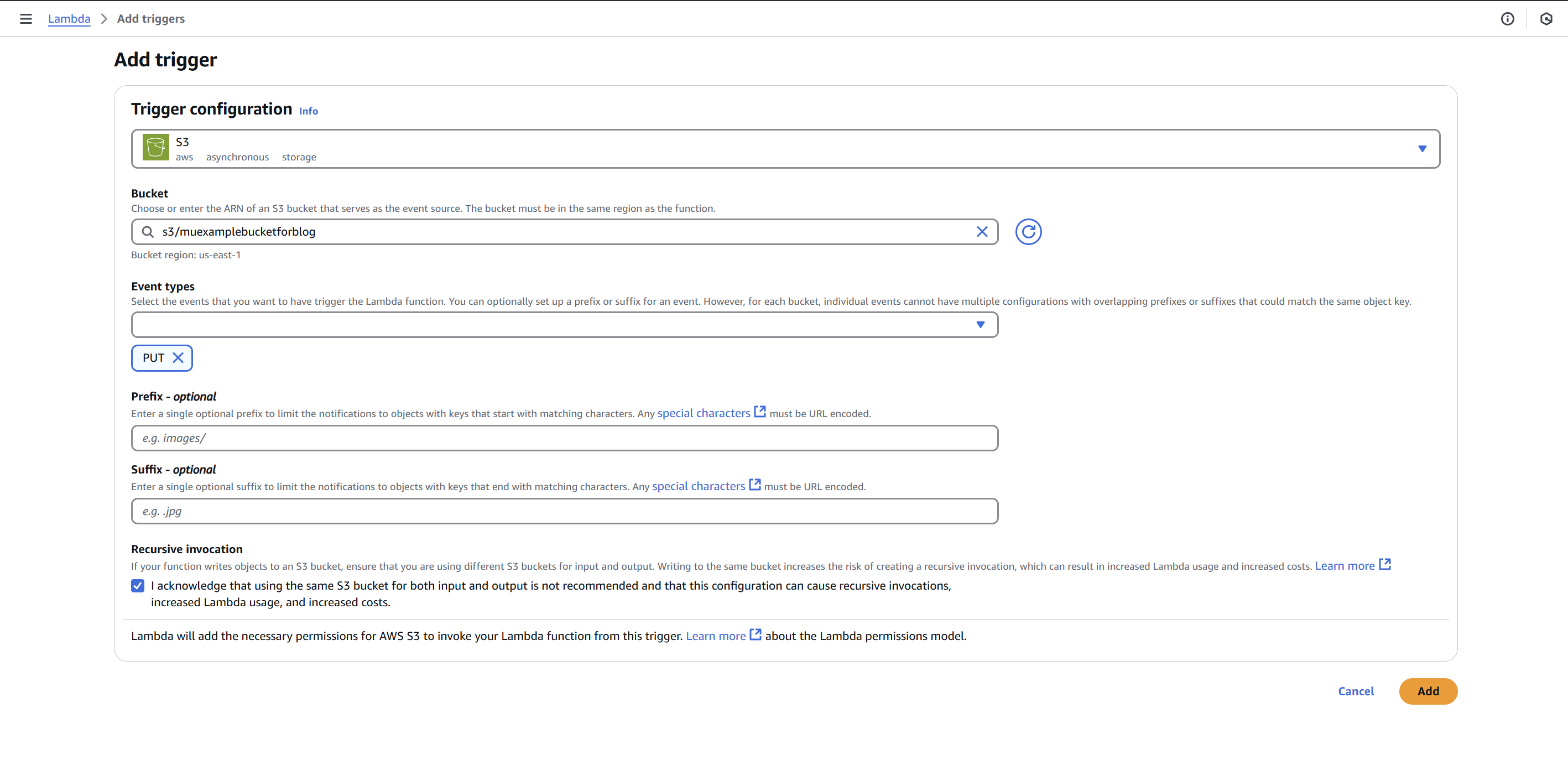

Add Trigger

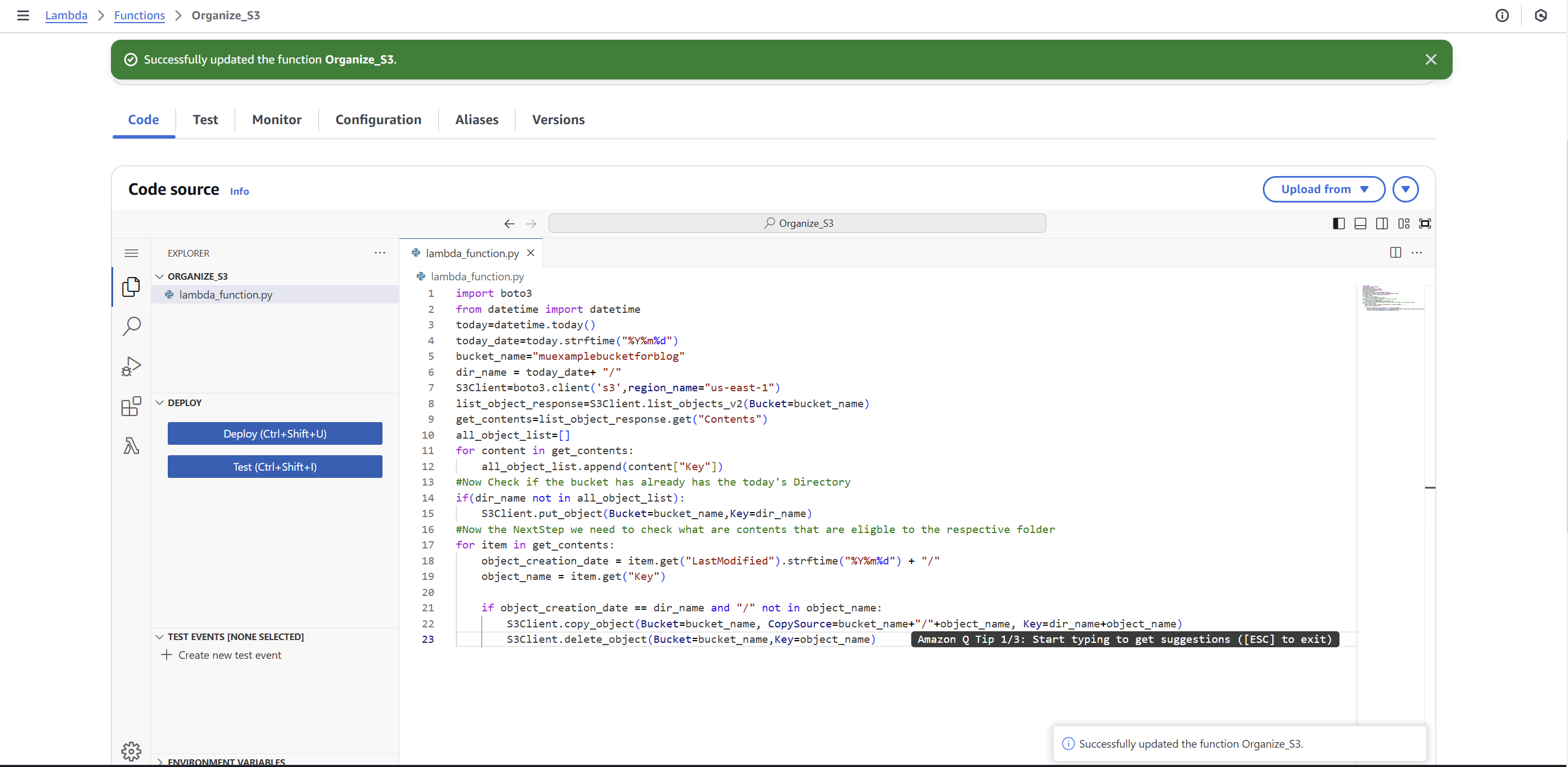
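For readers who prefer scripting over the console, the trigger can also be attached with Boto3. This is a sketch assuming the trigger in the screenshot is an S3 upload notification; the helper name `attach_upload_trigger` and the example ARN are placeholders of mine.

```python
def attach_upload_trigger(s3_client, bucket_name, function_arn):
    """Ask S3 to invoke the Lambda function whenever an object is created."""
    config = {
        "LambdaFunctionConfigurations": [
            {
                "LambdaFunctionArn": function_arn,
                "Events": ["s3:ObjectCreated:*"],
            }
        ]
    }
    # This call replaces the bucket's whole notification configuration,
    # so merge in any existing entries before using it on a shared bucket.
    s3_client.put_bucket_notification_configuration(
        Bucket=bucket_name, NotificationConfiguration=config
    )
    return config


# Usage (needs AWS credentials; the ARN below is a made-up placeholder):
#   import boto3
#   attach_upload_trigger(
#       boto3.client("s3"),
#       "muexamplebucketforblog",
#       "arn:aws:lambda:us-east-1:123456789012:function:organize-docs",
#   )
```

The console also grants S3 permission to invoke the function when you add the trigger there; when scripting, that permission has to be added to the Lambda function separately or S3 will reject the configuration.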

Paste the above-mentioned code and deploy.

Now that everything is in place, let’s test it out 🤞

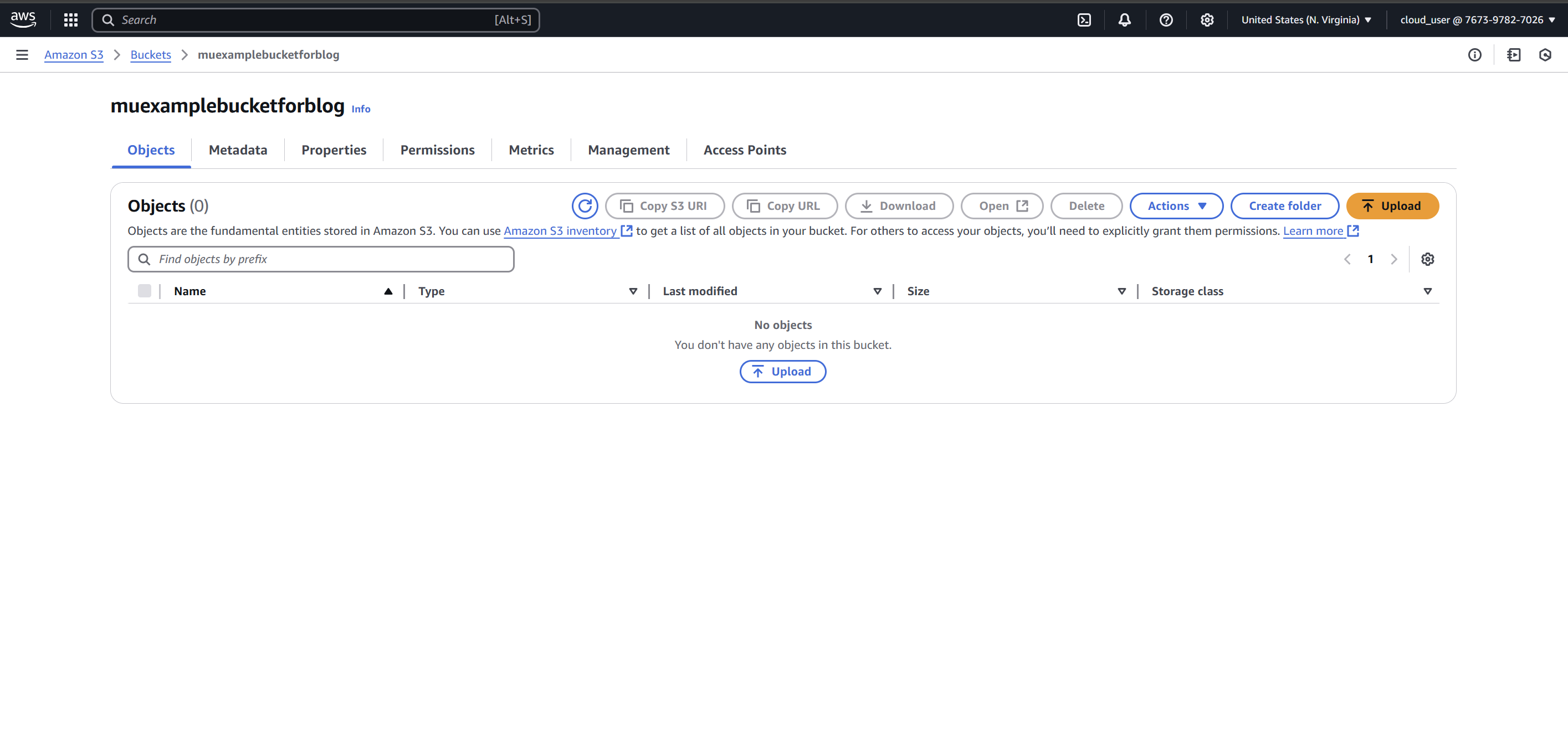

The Bucket is Empty:

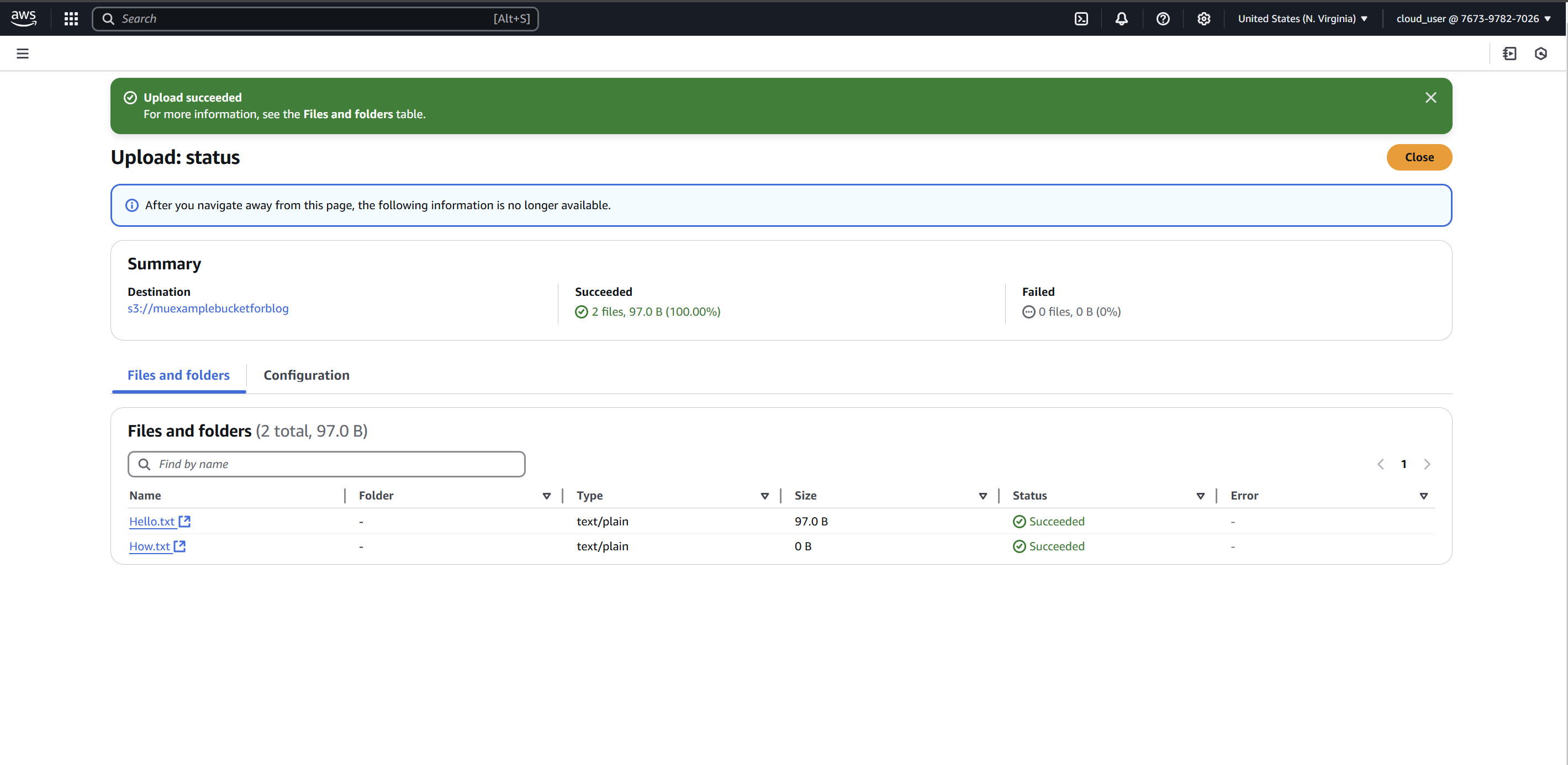

Let’s upload some files to it and see the Result 🤞🤞

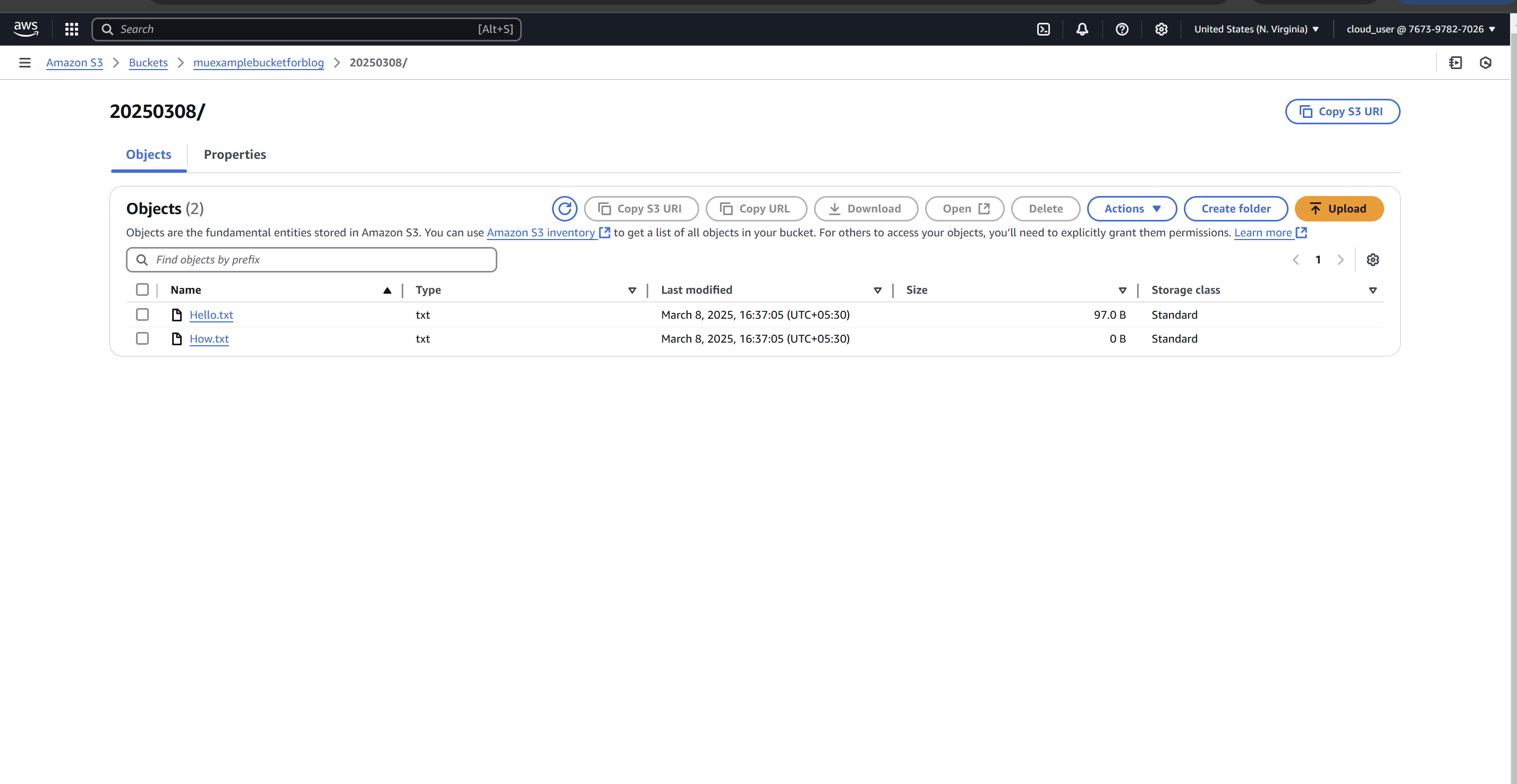

Voilà! 🪄 It works perfectly! ✨ Kudos ✌️ 😎 for making it this far. That’s it for this blog. Stay curious and happy building 💪⚡
