Curated resources: AI Product x How to eval?

Dhaval Singh

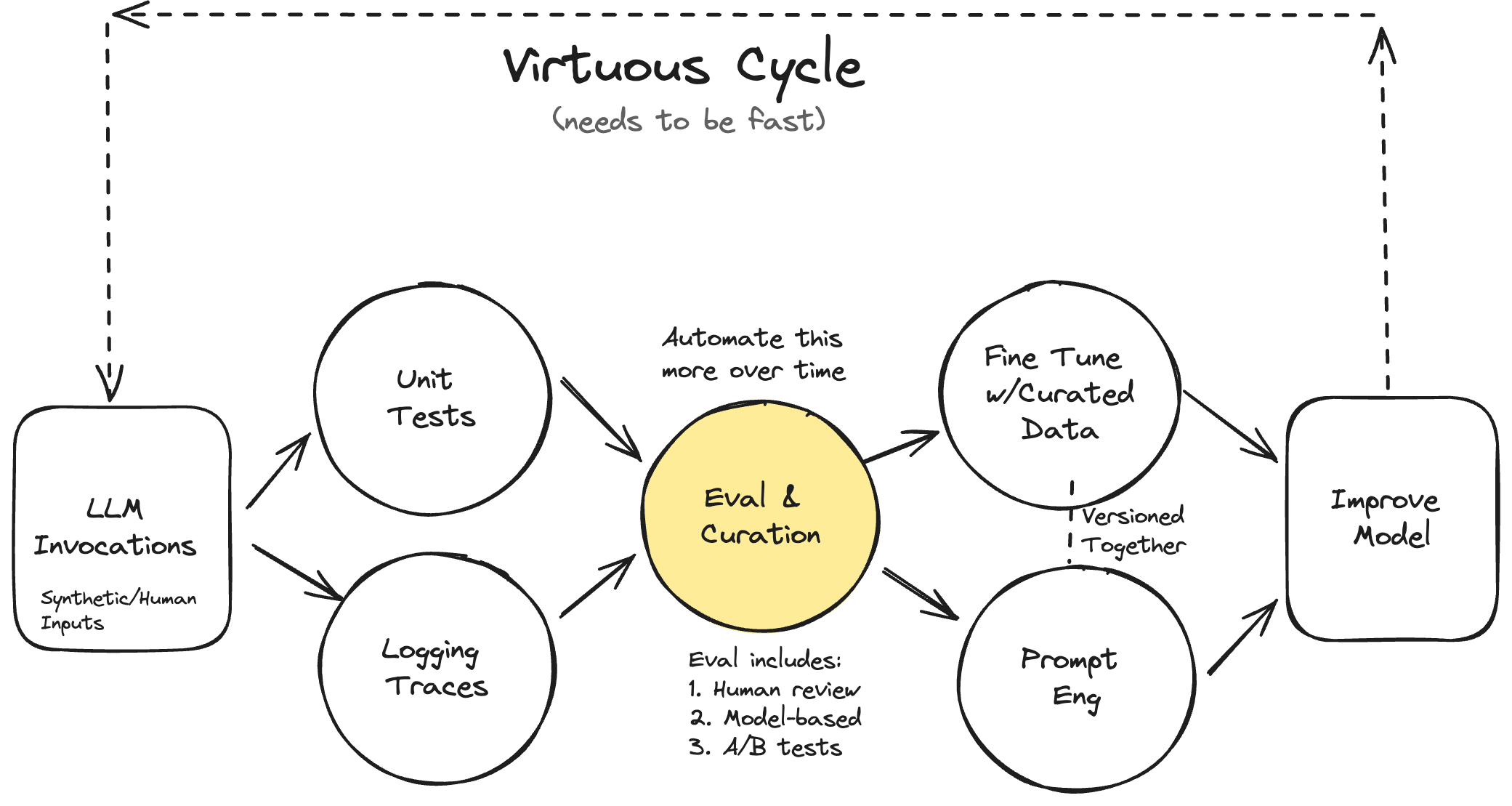

We at Seezo have been trying our best to make evals a first-class citizen in the SDLC of our LLM-backed product. The end goal is a robust pipeline that integrates seamlessly into the normal development cycle. This is even more difficult if you have a non-chatbot use case, since most of the ready-to-use OSS and paid tools cater to chatbots and are pretty hard to customize for your needs.

From annotating to dataset management to prompt versioning to analyzing eval results over time, there are a lot of moving parts, and it can be confusing to figure out how to think about it, which tools to use, how to integrate them, and so on. So I read about evals on a regular basis. There are a lot of articles and guides out there, but very few of them are useful and not AI slop.
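To make those moving parts a bit more concrete, here is a minimal sketch of how an annotated dataset, a prompt version, and eval results over time might fit together for a non-chatbot use case. Everything in it is hypothetical and only for illustration: the `EvalCase` and `EvalResult` types, the `run_eval` helper, the `stub_predict` function standing in for a real LLM call, and the tiny dataset. It is not how Seezo or any particular tool does it.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class EvalCase:
    """One annotated example: an input plus the label a human assigned."""
    case_id: str
    input_text: str
    expected_label: str


@dataclass
class EvalResult:
    """Aggregate outcome of running one prompt version over a dataset."""
    prompt_version: str
    passed: int
    total: int

    @property
    def pass_rate(self) -> float:
        return self.passed / self.total if self.total else 0.0


def run_eval(
    dataset: List[EvalCase],
    prompt_version: str,
    predict: Callable[[str, str], str],
) -> EvalResult:
    """Score exact-match accuracy of `predict` against the annotations."""
    passed = sum(
        1
        for case in dataset
        if predict(prompt_version, case.input_text) == case.expected_label
    )
    return EvalResult(prompt_version, passed, len(dataset))


def stub_predict(prompt_version: str, input_text: str) -> str:
    """Hypothetical stand-in for an LLM call; replace with a real client."""
    text = input_text.lower()
    if "sql" in text:
        return "risky"
    # Assumption for the demo: only prompt "v2" catches password handling.
    if prompt_version == "v2" and "password" in text:
        return "risky"
    return "safe"


# Hypothetical hand-annotated dataset.
DATASET = [
    EvalCase("c1", "Build SQL query from user input", "risky"),
    EvalCase("c2", "Store password in plain text", "risky"),
    EvalCase("c3", "Render a static help page", "safe"),
]

# Run every prompt version on the same dataset so results are comparable.
history = [run_eval(DATASET, version, stub_predict) for version in ("v1", "v2")]

for result in history:
    print(f"{result.prompt_version}: {result.passed}/{result.total} passed")
```

The point of the sketch is the shape: a fixed, annotated dataset, a version tag on every run, and results kept in a list so you can see whether a prompt change made things better or worse. Exact-match scoring is the simplest possible metric and is used here only as a placeholder.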

So here is a curated list of resources you should focus on if you are learning about evals or trying to build them for your own use case :)

PS: This is mostly focused on evals for products that use LLMs, not on evaluating LLMs themselves on different tasks.

Basics

-

- Video podcast on which the above blog is based. They share their screen and show how they do everything.

Implementation & Metrics

Datasets, annotations and more…

Case Studies

Developing GitLab Duo: How we validate and test AI models at scale

How Dosu Used LangSmith to Achieve a 30% Accuracy Improvement with No Prompt Engineering

Asana's LLM testing playbook: our analysis of Claude 3.5 Sonnet

List of eval tools:

Note: Blog cover image taken from https://hamel.dev/blog/posts/evals/
