Persistent Storage in GKE: From RWO to RWX with NFS, Filestore, and GCS Fuse

Aniket Dubey

In Kubernetes, managing persistent storage is crucial for stateful applications. Kubernetes offers different access modes for Persistent Volumes (PVs), primarily ReadWriteOnce (RWO), ReadOnlyMany (ROX), and ReadWriteMany (RWX). Understanding these modes and their implications is essential for designing robust storage solutions in your Kubernetes clusters.

ReadWriteOnce (RWO)

The ReadWriteOnce (RWO) access mode allows a volume to be mounted as read-write by a single node. This is suitable for applications where only one instance needs write access to the storage at any given time. However, in scenarios requiring multiple pods across different nodes to read and write to the same volume, RWO becomes a limitation.

Challenges with RWO:

A common issue with RWO volumes is the “Multi-Attach error,” which occurs when pods scheduled on different nodes attempt to mount the same RWO volume at the same time. The error indicates that the volume is already attached to one node and cannot be attached to another, which leaves the affected pod unable to start. Pods on the same node can share an RWO volume, so the error typically appears when the pods that should use the volume land on different nodes, for example after scaling out a Deployment.
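One way to confirm this failure mode is to look for attach-failure events in the affected namespace. The helper below is a minimal sketch: the function name `multi_attach_events` is hypothetical, and it assumes kubectl is installed and configured for the cluster.

```shell
# Hypothetical helper: list volume-attach failures in a namespace.
# Assumes kubectl is installed and pointed at the target cluster.
multi_attach_events() {
  namespace="${1:-default}"
  # Multi-Attach errors are reported as events with reason FailedAttachVolume.
  kubectl get events \
    --namespace "$namespace" \
    --field-selector reason=FailedAttachVolume
}

# Usage: multi_attach_events my-namespace
```

If the output contains a "Multi-Attach error for volume" message, the pod named in the event is waiting on a disk that is still attached to another node.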

Solutions for Shared Write Access: ReadWriteMany (RWX)

To enable multiple pods to read and write to the same storage, Kubernetes offers the ReadWriteMany (RWX) access mode. Implementing RWX can be achieved through various approaches:

NFS Server (Self-managed solution)

Google Filestore (Managed NFS service)

GCS Fuse (Mount Google Cloud Storage as a filesystem)

1. Network File System (NFS) Server

Deploying an NFS server within your Kubernetes cluster allows multiple pods to mount shared storage. This method involves setting up a dedicated NFS server that exports a shared directory, which pods can then mount as a volume. This approach is cost-effective and flexible but requires managing the NFS server’s availability and performance.

Step 1: Create a Compute Disk for NFS

Before setting up NFS, create a Google Compute Engine (GCE) Persistent Disk:

gcloud compute disks create gce-nfs-disk --size=10Gi --type=pd-balanced --zone=<zone-name> --project=YOUR_PROJECT_ID

Step 2: Deploy an NFS Server and Expose it as a Service

The NFS server will export a shared directory for other pods to use.

nfs-server.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-server
spec:
  replicas: 1
  selector:
    matchLabels:
      role: nfs-server
  template:
    metadata:
      labels:
        role: nfs-server
    spec:
      containers:
        - name: nfs-server
          image: gcr.io/google_containers/volume-nfs:0.8
          ports:
            - name: nfs
              containerPort: 2049
            - name: mountd
              containerPort: 20048
            - name: rpcbind
              containerPort: 111
          securityContext:
            privileged: true
          volumeMounts:
            - mountPath: /exports
              name: nfs-storage
      volumes:
        - name: nfs-storage
          gcePersistentDisk:
            pdName: gce-nfs-disk
            fsType: ext4
---
apiVersion: v1
kind: Service
metadata:
  name: nfs-server
spec:
  ports:
    - name: nfs
      port: 2049
    - name: mountd
      port: 20048
    - name: rpcbind
      port: 111
  selector:
    role: nfs-server

Step 3: Create a Persistent Volume (PV) and Persistent Volume Claim (PVC)

Define a PV that points to the NFS server and a PVC to request storage.

pv-pvc.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-pv
spec:
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteMany
  nfs:
    path: /exports
    server: nfs-server.default.svc.cluster.local
  persistentVolumeReclaimPolicy: Retain
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-pvc
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: ""
  resources:
    requests:
      storage: 1Gi

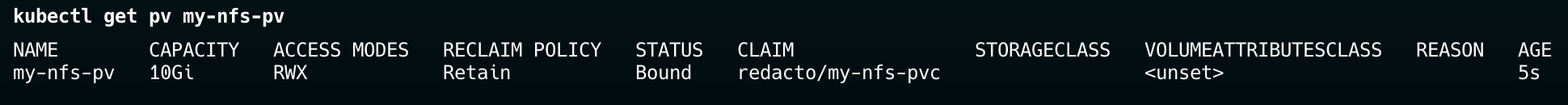

You should see that the PVC status is Bound and the volume it is bound to is nfs-pv. There is a 1-to-1 mapping between a PV and PVC. Once a PV is bound to a PVC, that PV can't be used to serve any other claim.
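The binding described above can be checked from the command line. The following is a minimal sketch, not the author's exact procedure: the function name `apply_and_wait_bound` is hypothetical, the defaults reuse the `pv-pvc.yaml` and `nfs-pvc` names from this step, and `kubectl wait --for=jsonpath` assumes kubectl v1.23 or newer.

```shell
# Hypothetical helper: apply a manifest and wait for a PVC to reach the Bound phase.
# Assumes kubectl v1.23+ (needed for --for=jsonpath) and a configured cluster context.
apply_and_wait_bound() {
  manifest="${1:-pv-pvc.yaml}"
  claim="${2:-nfs-pvc}"
  kubectl apply -f "$manifest" || return 1
  # Block until .status.phase is Bound, or give up after 60 seconds.
  kubectl wait --for=jsonpath='{.status.phase}'=Bound "pvc/$claim" --timeout=60s || return 1
  # Show which PV the claim is bound to.
  kubectl get pvc "$claim"
}

# Usage: apply_and_wait_bound pv-pvc.yaml nfs-pvc
```

The VOLUME column of the final command should show nfs-pv once the claim is bound.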

Step 4: Deploy a Sample Application

Create a Deployment that mounts the RWX volume from NFS.

app-deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: sample-app
spec:
  replicas: 2
  selector:
    matchLabels:
      app: sample-app
  template:
    metadata:
      labels:
        app: sample-app
    spec:
      containers:
        - name: app-container
          image: busybox
          command: ["sh", "-c", "while true; do echo 'Writing to shared storage' >> /mnt/shared/data.txt; sleep 5; done"]
          volumeMounts:
            - name: shared-storage
              # Must match the directory the command above writes to
              mountPath: /mnt/shared
      volumes:
        - name: shared-storage
          persistentVolumeClaim:
            # Must match the PVC name defined in pv-pvc.yaml
            claimName: nfs-pvc

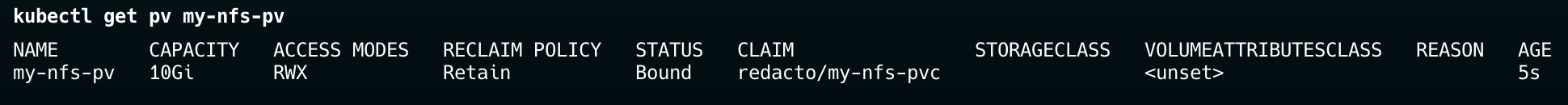

Verify that the pods are correctly using the RWX volume:

kubectl describe pod <pod-name>

You should see the NFS volume mounted under the Volumes section of the pod description.
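To go one step further and check that the replicas really share the same data, you can read the file from every pod. This is a rough sketch under a few assumptions: the function name `count_shared_lines` is hypothetical, the label `app=sample-app` comes from the Deployment above, and the default path is the file the container command appends to.

```shell
# Hypothetical helper: print the line count of the shared file from every replica.
# Assumes kubectl is configured and the pods carry the given label.
count_shared_lines() {
  selector="${1:-app=sample-app}"
  file="${2:-/mnt/shared/data.txt}"
  for pod in $(kubectl get pods -l "$selector" -o name); do
    echo "== $pod"
    # Every replica appends to the same file, so the counts should be close to each other.
    kubectl exec "$pod" -- wc -l "$file"
  done
}

# Usage: count_shared_lines app=sample-app /mnt/shared/data.txt
```

If the volume is shared, each replica reports roughly the same, steadily growing line count.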

2. Google Filestore (Managed NFS Solution)

Google Filestore provides a fully managed NFS storage solution that can be used for RWX workloads.

Step 1: Enable the Filestore API

Enable Filestore in your Google Cloud project:

gcloud services enable file.googleapis.com --project=<PROJECT_ID>

Verify that the API is enabled:

gcloud services list --enabled --project=<PROJECT_ID> | grep file.googleapis.com

Step 2: Enable the Google Filestore CSI Driver

gcloud container clusters update <CLUSTER_NAME> \
  --update-addons=GcpFilestoreCsiDriver=ENABLED \
  --region=<GCP_REGION>

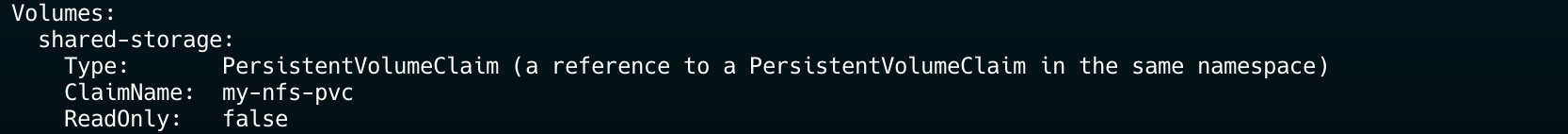

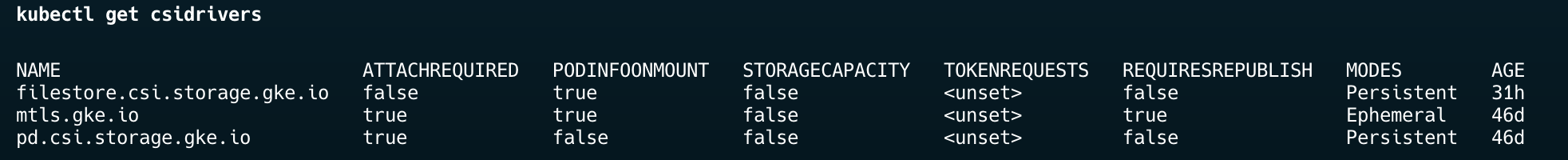

Verify that the driver is enabled:
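The command for this check is not shown here. One plausible way, mirroring the GCS Fuse check later in this article, is to look for the driver name among the registered CSI drivers. The function name `filestore_driver_enabled` is hypothetical, and the sketch assumes kubectl is configured for the cluster.

```shell
# Hypothetical helper: succeed only if the Filestore CSI driver is registered.
# Assumes kubectl is installed and pointed at the target cluster.
filestore_driver_enabled() {
  # -o name prints one resource name per line, e.g. csidriver.storage.k8s.io/<driver>.
  kubectl get csidrivers -o name | grep -q 'filestore.csi.storage.gke.io'
}

# Usage: filestore_driver_enabled && echo "Filestore CSI driver is enabled"
```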

Step 3: Verify Available Storage Classes

Enabling the Filestore CSI driver automatically creates multiple storage classes in the cluster.

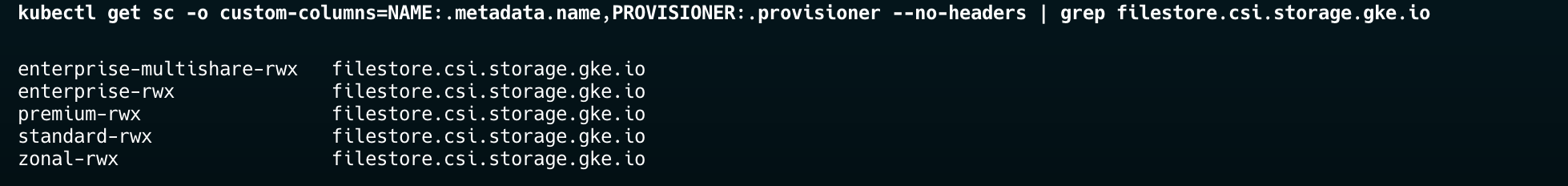

kubectl get sc -o custom-columns=NAME:.metadata.name,PROVISIONER:.provisioner --no-headers | grep filestore.csi.storage.gke.io

You should see storage classes like:

standard-rwx (basic tier)

premium-rwx (premium tier)

enterprise-rwx (enterprise tier)

Step 4: Create a Persistent Volume Claim (PVC)

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: filestore-pvc
  namespace: default # change this
spec:
  accessModes: ["ReadWriteMany"]
  resources:
    requests:
      storage: 1Gi
  storageClassName: standard-rwx

This automatically provisions a Filestore instance and creates a corresponding PV.

Step 5: Deploy an Application Using Filestore

Use the same application deployment YAML as in the NFS setup, but reference the Filestore PVC.

      volumes:
        - name: shared-storage
          persistentVolumeClaim:
            claimName: filestore-pvc # Use the Filestore PVC

3. GCS Fuse (Mount Google Cloud Storage as a Filesystem)

Google Cloud Storage FUSE allows you to mount GCS buckets as filesystems within your Kubernetes pods. This is particularly useful for workloads that need to share large amounts of data.

Step 1: Enable the GCS Fuse CSI driver in your GKE cluster

gcloud container clusters update <CLUSTER_NAME> \
  --update-addons=GcsFuseCsiDriver=ENABLED \
  --region=<GCP_REGION>

Verify that the driver is enabled:

kubectl get csidrivers | grep gcsfuse.csi.storage.gke.io

Step 2: Create a Storage Bucket

If you don’t have a GCS bucket, create one:

gsutil mb -p <PROJECT_ID> -l <GCP_REGION> gs://<BUCKET_NAME>/

Step 3: Create the Necessary Service Account and IAM Bindings

Create a Google Service Account (GSA):

#GSA

gcloud iam service-accounts create gcs-fuse-sa \
  --description="Service account for GCS Fuse access" \
  --display-name="GCS Fuse Service Account"

gcloud projects add-iam-policy-binding <PROJECT_ID> \
  --member "serviceAccount:gcs-fuse-sa@<PROJECT_ID>.iam.gserviceaccount.com" \
  --role "roles/storage.objectAdmin"

Create a Kubernetes Service Account (KSA) and link it to the GSA:

kubectl create serviceaccount gcs-fuse-ksa -n <NAMESPACE>

kubectl annotate serviceaccount gcs-fuse-ksa -n <NAMESPACE> \
  iam.gke.io/gcp-service-account=gcs-fuse-sa@<PROJECT_ID>.iam.gserviceaccount.com

gcloud iam service-accounts add-iam-policy-binding gcs-fuse-sa@<PROJECT_ID>.iam.gserviceaccount.com \
  --role roles/iam.workloadIdentityUser \
  --member "serviceAccount:<PROJECT_ID>.svc.id.goog[<NAMESPACE>/gcs-fuse-ksa]"

Verify the binding:

gcloud iam service-accounts get-iam-policy gcs-fuse-sa@<PROJECT_ID>.iam.gserviceaccount.com

Enable GKE Metadata for your node pool:

gcloud container node-pools update <NODE_POOL_NAME> \
  --cluster <CLUSTER_NAME> \
  --location <GCP_REGION> \
  --workload-metadata=GKE_METADATA

Why we're doing this: This command configures the node pool to run the GKE metadata server, which Workload Identity requires (Workload Identity must also be enabled on the cluster itself). The metadata server lets pods obtain credentials based on their Kubernetes service account, so they can authenticate to Google Cloud services without explicit credentials.
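To confirm that the node pool setting took effect, you can read the metadata mode back from the node pool description. This is a sketch under stated assumptions: the function name `node_pool_uses_gke_metadata` is hypothetical, and the `config.workloadMetadataConfig.mode` format key reflects the node pool resource as I understand it, so double-check it against your gcloud version.

```shell
# Hypothetical helper: succeed only if the node pool runs the GKE metadata server.
# Assumes gcloud is installed and authenticated for the project that owns the cluster.
node_pool_uses_gke_metadata() {
  pool="$1"
  cluster="$2"
  location="$3"
  mode="$(gcloud container node-pools describe "$pool" \
    --cluster "$cluster" \
    --location "$location" \
    --format='value(config.workloadMetadataConfig.mode)')"
  [ "$mode" = "GKE_METADATA" ]
}

# Usage: node_pool_uses_gke_metadata <NODE_POOL_NAME> <CLUSTER_NAME> <GCP_REGION> && echo "ok"
```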

Step 4: Create a PersistentVolume and PersistentVolumeClaim

pv-pvc.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: gcs-fuse-pv
spec:
  accessModes:
    - ReadWriteMany
  capacity:
    storage: 5Gi
  storageClassName: ""
  mountOptions:
    - implicit-dirs
    - uid=0
    - gid=0
  csi:
    driver: gcsfuse.csi.storage.gke.io
    volumeHandle: <BUCKET_NAME>
    # The sample app in Step 5 writes to the bucket, so the volume is not marked read-only
    readOnly: false
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: gcs-fuse-pvc
  namespace: ecorp # must match the namespace of the Deployment in Step 5
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 5Gi
  volumeName: gcs-fuse-pv
  storageClassName: ""

Step 5: Deploy an Application Using GCS Fuse

apiVersion: apps/v1
kind: Deployment
metadata:
  name: gcs-fuse-example
  namespace: ecorp
spec:
  replicas: 2
  selector:
    matchLabels:
      app: gcs-fuse-example
  template:
    metadata:
      labels:
        app: gcs-fuse-example
      annotations:
        gke-gcsfuse/volumes: "true"
        gke-gcsfuse/ephemeral-storage-limit: "2Gi"
    spec:
      containers:
        - image: busybox
          name: busybox
          # command: ["sleep"]
          # args: ["infinity"]
          command: ["sh", "-c", "while true; do echo 'Writing to GCS Fuse storage' >> /data/ecorp.txt; sleep 5; done"]
          volumeMounts:
            - name: gcs-fuse-csi-static
              mountPath: /data
      serviceAccountName: gcs-fuse-ksa
      volumes:
        - name: gcs-fuse-csi-static
          persistentVolumeClaim:
            claimName: gcs-fuse-pvc

This deployment:

Creates 2 replicas of a simple busybox container

Includes the special gke-gcsfuse/volumes: "true" annotation that tells GKE this pod uses GCS FUSE

Sets gke-gcsfuse/ephemeral-storage-limit: "2Gi" to allocate node storage for the FUSE cache

Specifies the KSA we created earlier via serviceAccountName: gcs-fuse-ksa

Mounts our GCS FUSE PVC at /data in the container

Writes to a file in the shared GCS bucket every 5 seconds

The use of our KSA is crucial here, as it provides the necessary permissions to access the GCS bucket via Workload Identity.
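Because the annotation causes GKE to inject a sidecar container that performs the actual mount, a quick sanity check is to look for that sidecar in a running pod. The sketch below assumes the injected container is named `gke-gcsfuse-sidecar` and checks both regular and init containers, since the placement can differ between GKE versions. The function name `has_gcsfuse_sidecar` is hypothetical.

```shell
# Hypothetical helper: succeed only if a pod contains the GCS FUSE sidecar container.
# Assumes kubectl is configured and the sidecar is named gke-gcsfuse-sidecar.
has_gcsfuse_sidecar() {
  pod="$1"
  namespace="${2:-ecorp}"
  # Collect container and init container names, one per line, then match exactly.
  kubectl get pod "$pod" -n "$namespace" \
    -o jsonpath='{.spec.containers[*].name} {.spec.initContainers[*].name}' |
    tr ' ' '\n' | grep -qx 'gke-gcsfuse-sidecar'
}

# Usage: has_gcsfuse_sidecar <POD_NAME> ecorp && echo "sidecar injected"
```

If the check fails, it is worth confirming that the annotation sits on the pod template rather than on the Deployment's own metadata.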

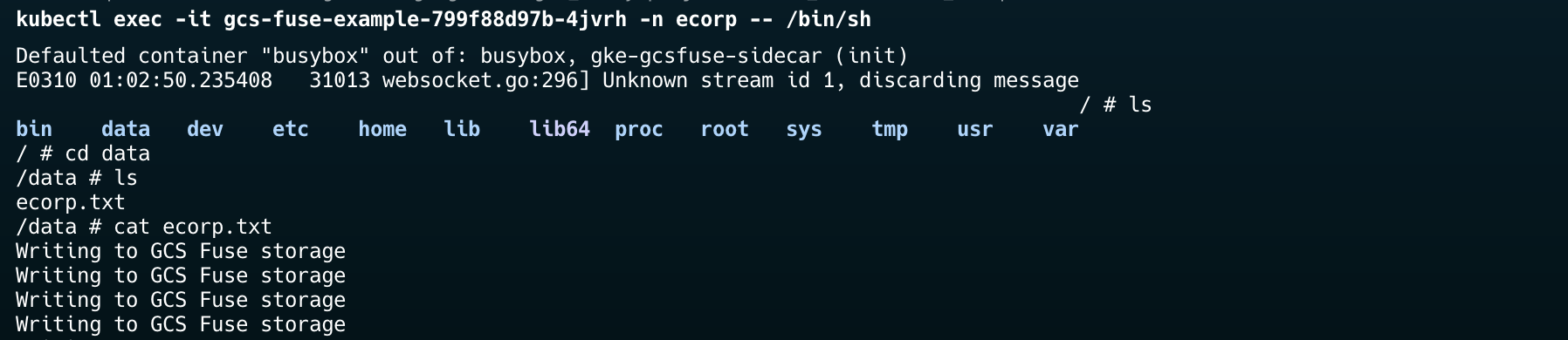

Step 6: Verify GCS Fuse Integration

Exec into the container to confirm the file exists and view its contents:

kubectl exec -it <POD_NAME> -n ecorp -- /bin/sh

Once inside the container:

cat /data/ecorp.txt

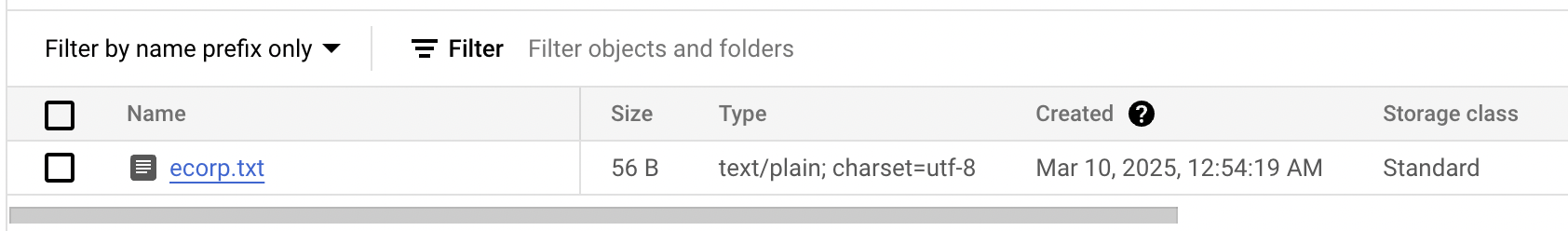

Now, verify that the file appears in your GCS bucket:
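The command for this step is not shown here. A minimal sketch using gsutil, which this guide already uses to create the bucket, could look like the following. The function name `show_bucket_file` is hypothetical, and the default object name assumes the pods write to /data/ecorp.txt at the root of the mounted bucket.

```shell
# Hypothetical helper: list a bucket and print the file written by the sample pods.
# Assumes gsutil is installed and authenticated with access to the bucket.
show_bucket_file() {
  bucket="$1"
  object="${2:-ecorp.txt}"
  # List the top level of the bucket to confirm the object exists.
  gsutil ls "gs://$bucket/" || return 1
  # Print the object's contents; it should match what the pods see under /data.
  gsutil cat "gs://$bucket/$object"
}

# Usage: show_bucket_file <BUCKET_NAME>
```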

References and Resources

https://cloud.google.com/kubernetes-engine/docs/how-to/cloud-storage-fuse-csi-driver-pv

https://github.com/GoogleCloudPlatform/gcs-fuse-csi-driver/issues/142

Conclusion

Understanding Kubernetes persistent storage access modes and implementing the right solution for your workload is critical for building reliable, scalable applications. Whether you choose a self-managed NFS server, a managed solution like Google Filestore, or an object storage approach with GCS Fuse, each option provides ReadWriteMany capabilities with different tradeoffs.

By following the steps outlined in this guide, you can implement robust shared storage solutions for your Kubernetes applications, enabling stateful workloads to run reliably at scale.
