AI Agent Orchestration with OutSystems Developer Cloud

Stefan Weber


AI is everywhere. In every discussion I've had in recent months, AI capabilities and development with AI models have come up. I often notice that when people talk about implementation, they quickly turn to custom code AI frameworks, especially for AI agent orchestration; crewAI is a typical example. For some reason, OutSystems Developer Cloud doesn't even cross their minds in this area, even though they use ODC for many other projects. From my perspective, that's unfortunate. It's true that custom code frameworks like crewAI offer a lot for agentic AI, including many built-in utilities, tools, and safeguards, but in the end, they are just code.

In this lab, I want to show that OutSystems Developer Cloud is more than capable of building agentic AI solutions. The initial effort might be higher than with a custom code AI framework, but on the other hand, you save the effort of setting up a deployment pipeline (… and Python 😎).

Demo Application

This lab includes a component on Forge called “Agentic AI Lab”.

Dependencies:

AWSBedrockRuntime - External Logic Connector library for Amazon Bedrock.

LiquidFluid - External Logic utility library. It merges text templates containing placeholders with data, using the Shopify Liquid templating language.

The Lab uses Amazon Bedrock models. You will need AWS credentials with access to the following models in the us-east-1 region.

amazon.nova-micro-v1:0

us.anthropic.claude-3-5-sonnet-20241022-v2:0 (cross-region inference)

After installing the Lab into your ODC development environment, set the application settings for the AWS Access Key and AWS Secret Access Key.

Introduction

Let's begin by introducing some important terms and definitions related to agents and agent orchestration.

Model and Prompt

Let's start with AI models and prompts. Sometimes, I notice misunderstandings about how AI models work, so let's clear a few things up.

AI models are stateless, meaning they do not retain anything between requests. Everything the model needs as input must be included in the prompt, which is then sent to the model to produce a result.

The prompt sent to the model is plain text. The API, such as the Chat Completion API from OpenAI, is an abstraction on top of the model that makes it easier to create a prompt with the necessary model-specific instruction tokens.

The OpenAI Chat Completion API has become a de facto standard in the market. Most major vendors offer an equivalent or similar API to interact with their models.

At a glance, the API allows you to easily build a prompt for the model with:

System Prompt - A system prompt is a set of predefined instructions and guidelines given to an AI model to shape its responses and behavior. It defines the context, tone, and boundaries within which the AI operates.

Message Conversation - An array of messages between a user and the model (assistant) that form a conversation history.

Tools - (not applicable to all models) A tool definition includes a tool name, a description, and a JSON schema. The model examines the entire context to determine, based on the description, whether a tool should be used. If necessary, the tool parameters defined in the JSON schema are extracted from the context. Once all required tool parameters are extracted, the model returns a "Tool Use" result with the tool name and its extracted parameters. It is up to your application to execute the actual tool and add the results back to the Message Conversation.

Configurations - Additional model settings. Here, you can usually specify the maximum number of tokens (words or parts of words) that the model should generate, and how creative the output should be (usually controlled by the temperature setting).
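To make these four parts concrete, here is a minimal Python sketch that assembles a request in the shape of the Amazon Bedrock Converse API (`system`, `messages`, `toolConfig`, `inferenceConfig`). It only builds the request dictionary; sending it would be something like `boto3.client("bedrock-runtime").converse(**request)`, which is an assumption about a Python setup and not what the lab does (the lab uses the AWSBedrockRuntime connector). The helper name `build_converse_request` and the sample values are hypothetical.

```python
from typing import Any, Dict, List, Optional, Tuple


def build_converse_request(
    model_id: str,
    system_prompt: str,
    conversation: List[Tuple[str, str]],
    tools: Optional[List[Dict[str, Any]]] = None,
    max_tokens: int = 512,
    temperature: float = 0.5,
) -> Dict[str, Any]:
    """Assemble a Converse-style request: system prompt, messages, tools, config."""
    request: Dict[str, Any] = {
        "modelId": model_id,
        # System prompt: instructions that shape the model's behavior.
        "system": [{"text": system_prompt}],
        # Message conversation: alternating user/assistant turns.
        "messages": [
            {"role": role, "content": [{"text": text}]} for role, text in conversation
        ],
        # Configuration: output length and "creativity" (temperature).
        "inferenceConfig": {"maxTokens": max_tokens, "temperature": temperature},
    }
    if tools:
        # Tools: name, description and JSON schema for each tool.
        request["toolConfig"] = {
            "tools": [
                {
                    "toolSpec": {
                        "name": t["name"],
                        "description": t["description"],
                        "inputSchema": {"json": t["schema"]},
                    }
                }
                for t in tools
            ]
        }
    return request


request = build_converse_request(
    model_id="amazon.nova-micro-v1:0",
    system_prompt="You are a product manager.",
    conversation=[("user", "Create a product called Widget for 9.99.")],
)
```

Because the model is stateless, every call repeats the full system prompt and conversation; only the last user message is new.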

Agents

An AI agent is like a role in a company, with specific tasks and decision-making abilities, and access to tools for completing tasks or gathering information to make informed decisions. Employees in a role also bring their own knowledge and experience, which helps them handle tasks and interact with other employees and roles in the company.

When defining an AI agent, you consider the same questions as when defining a company role and its responsibilities (tasks). However, there is one difference: while company roles often cover a wide range of topics, AI agents are designed to focus on a specific topic and related tasks.

Purpose - The purpose of the agent and a description of what it can do.

Goals - A description of the goals and objectives the agent aims to achieve, along with a definition of the concrete results the agent produces.

Instructions - Guidelines on how the agent should operate and interact with its environment (e.g., a user) to achieve its goals.

Tools - A definition of the tools it must or can use to produce the results.

Constraints - Additional safeguards and constraints that must be considered when the agent performs its tasks. (These exist for human roles too, though they might not always be written down).

From a technical perspective, an agent consists of:

Model - An AI model that powers the agent. The Purpose, Goals, Instructions, and Constraints serve as general guidelines for its behavior. The AI model you choose to power an agent can depend on several criteria, such as how well it reasons about a specific topic, the maximum context window, or its proficiency in a particular language.

Tools - One or more tool definitions. Remember, a model cannot run a tool on its own. It can only decide if a tool should be used and identify the necessary input parameters from the context, leaving the actual tool execution to your application. A provided tool is simply a combination of a description and the required input parameter definitions. Tools supply on-demand data to the context.

Knowledge - Additional information added to give the agent more context, helping it perform tasks better. This is like the extra knowledge and experience a human agent might have.

Agent Orchestration

Agent Orchestration involves managing and coordinating multiple AI agents to complete complex tasks and goals. An orchestrator is an agent designed to choose the best agents from a group to perform tasks. Different development frameworks for agentic AI solutions use various names for this agent, such as orchestrator, supervisor, or dispatcher. Besides selecting and activating agents, the orchestrator handles the overall context information and ensures that an agent receives the necessary context information when needed.

The main idea of agent orchestration with highly capable agents is that, given minimal input data, the entire process can run independently. Agents collaborate to gather information, make decisions, and produce a result, such as placing an order with a vendor. However, fully automated orchestrations are still uncommon today. Orchestrations linked to a chat conversation with a human in the loop are more typical:

1. A user sends a message to a chat, which is added to the orchestration context.

2. The orchestrator reviews the context and, if needed, selects an agent to handle the user's message. It also provides the full context, or the relevant parts of it, to the agent.

3. The agent processes the message and produces a result, which could be the final outcome or a request for clarification or more information.

4. The orchestrator adds the agent's output to the overall context and displays it to the user in the chat.

5. Repeat from step 1.
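The loop above can be sketched in a few lines of Python. This illustrates the control flow only and is not the lab's ODC implementation: `classify` stands in for the orchestrator's agent selection, each agent is a plain function that receives the shared context, and the user inputs come from a list instead of a chat UI. All names are hypothetical.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]                    # {"role": "user" | "assistant", "text": ...}
Agent = Callable[[List[Message]], str]      # receives the context, returns a reply


def run_conversation(
    user_inputs: List[str],
    classify: Callable[[List[Message]], str],
    agents: Dict[str, Agent],
) -> List[Message]:
    """Human-in-the-loop orchestration: one agent call per user message."""
    context: List[Message] = []
    for text in user_inputs:  # step 5: repeat for every new user message
        # Step 1: the user's message joins the orchestration context.
        context.append({"role": "user", "text": text})
        # Step 2: the orchestrator picks an agent and hands it the context.
        agent = agents.get(classify(context))
        # Step 3: the agent answers or asks for clarification.
        reply = agent(list(context)) if agent else "Sorry, none of my agents can help with that."
        # Step 4: the reply joins the context (and would be shown in the chat).
        context.append({"role": "assistant", "text": reply})
    return context
```

Passing a copy of the context (`list(context)`) keeps an agent from mutating the orchestrator's history; a real orchestrator could also pass only a relevant slice.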

Why you should care

An orchestrated collection of AI agents with data and tools is excellent for automation and offers many advantages over using a single model and single agent.

Automation - You can begin with an agentic human-in-the-loop orchestration and gradually move towards more automation by sending one agent's output directly to another agent. As your agents become more advanced, you'll need a human less often to check or manually adjust results.

Model - You can choose the best model for each agent's tasks from thousands of available foundation and fine-tuned models, including special-purpose models optimized for tasks like data extraction.

Cost - Whether in your data center or in the cloud, using models incurs costs. By choosing smaller and therefore less expensive models for simpler tasks instead of relying on a single, large, and costly general-purpose model, you can significantly reduce your overall costs.

Performance - Smaller models typically run inference faster than large models, resulting in shorter response times.

Hallucination - Using focused agents with an orchestrator lets you carefully manage the context for a model's use, generally keeping the model's context smaller and reducing the risk of model hallucinations.

Tool Overlap - Instead of defining many tools in a single model invocation, you attach tools to individual agents. This approach reduces the chance of the model choosing the wrong tool due to ambiguous tool descriptions.

Lab Outline

In this lab, we are building a human-in-the-loop agentic solution. We are going to implement two agents:

Product Agent - This agent acts as a product manager in our company. In our implementation, this agent is only responsible for adding new products to our company product catalog, meaning it creates a new product record in an entity of our application.

LinkedIn Agent - This agent is a social media expert and drafts LinkedIn posts. In our implementation it is just an AI model with some instructions.

And, of course, an Orchestrator that evaluates which agent is best suited to handle a request from a conversation.

Agent and Orchestrator Implementation

Let's walk through the important steps to implement an agent and an orchestrator. Open the Agent AI Lab app in ODC Studio.

Agent

Both agents are defined in a static entity AgentSettings under Data - Entities - Database. Each agent record includes the following properties:

Name - A short name that also serves as the record ID to identify the agent.

Model - The Amazon Bedrock model the agent uses. Note that the Product Agent uses Amazon Nova Micro, a very small and fast model, while the LinkedIn Agent uses Anthropic Claude 3.5 Sonnet.

Role - The agent's role title.

Description - A description of the agent's capabilities.

Goal - The outcome the agent aims to achieve.

Instruction - Additional instructions for the agent.
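Outside of ODC, the AgentSettings static entity can be pictured as a small table keyed by name. The Python sketch below mirrors the six properties. The short names and all text values are hypothetical placeholders (the real records live in the lab app); only the two model IDs come from the lab's prerequisites.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class AgentSettings:
    name: str          # short name, also the record identifier
    model: str         # Amazon Bedrock model ID
    role: str
    description: str
    goal: str
    instruction: str


# Hypothetical records mirroring the static entity; only the model IDs come from the lab.
AGENT_SETTINGS: Dict[str, AgentSettings] = {
    s.name: s
    for s in [
        AgentSettings(
            name="product",
            model="amazon.nova-micro-v1:0",
            role="product manager",
            description="Creates new products in the product catalog.",
            goal="A new product record with name, description, and price.",
            instruction="Ask for any missing product details.",
        ),
        AgentSettings(
            name="linkedin",
            model="us.anthropic.claude-3-5-sonnet-20241022-v2:0",
            role="social media expert",
            description="Drafts LinkedIn posts.",
            goal="A ready-to-publish LinkedIn post draft.",
            instruction="Keep posts concise and professional.",
        ),
    ]
}
```

Keeping the model ID per record is what lets each agent run on a differently sized (and priced) model.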

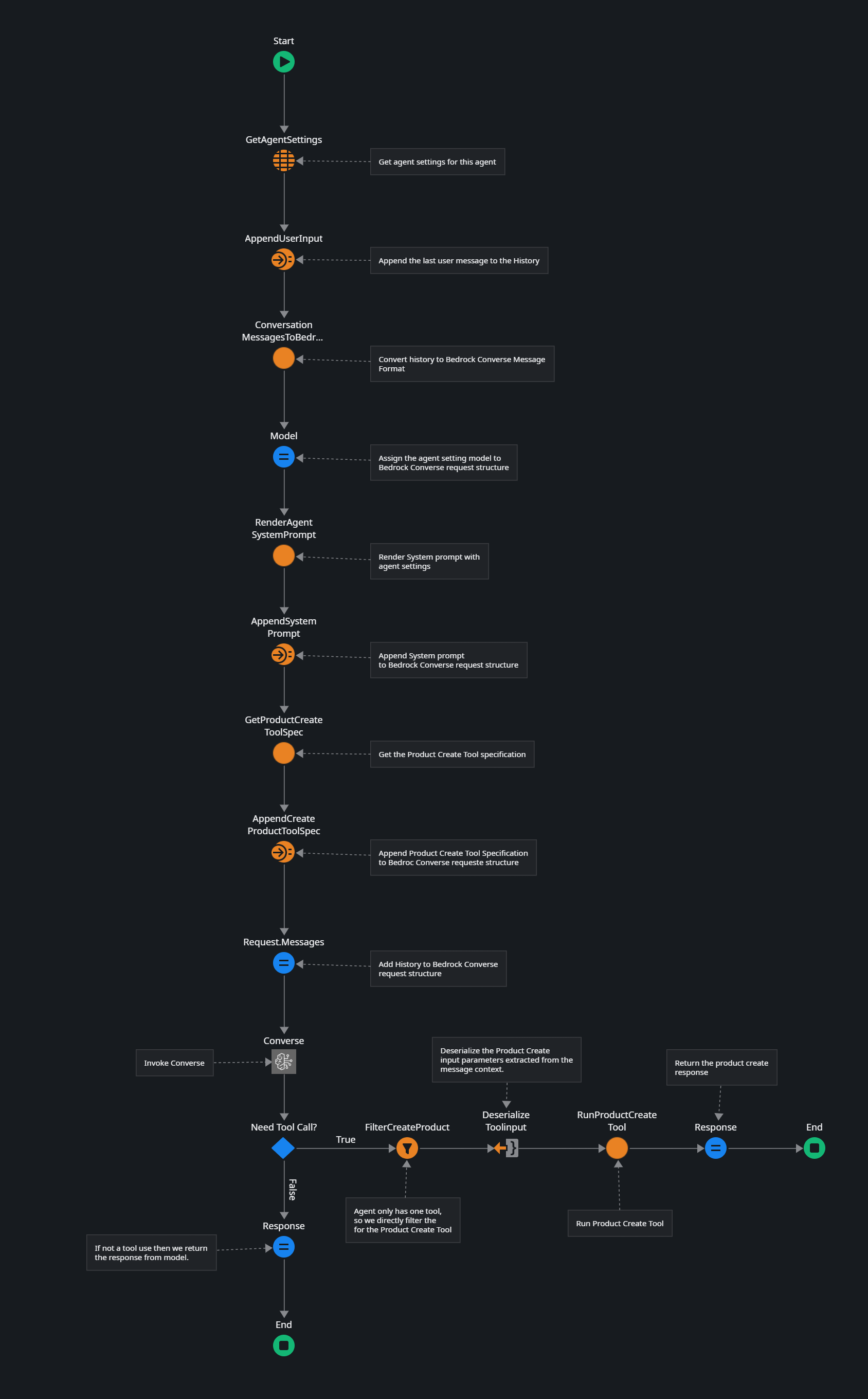

Open the server action Agent_ProductManager in Logic - Server Actions - Agents. Unlike the LinkedIn agent, the Product Manager agent has an attached tool. Let's go through this one to explore the implementation details.

In general, the action flow of an agent consists of the following steps:

Retrieve agent settings from the AgentSettings static entity

Build the system prompt for the agent

Add tool specification(s)

Invoke the model

Handle tool execution (if requested by the model)

Return the response
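The six steps can be expressed as a single Python function. This sketches the flow, not the ODC action itself: the model call and the tools are injected as callables (`invoke_model` and `tools` are hypothetical), and the model response is simplified to a dictionary that contains either `text` or a `tool_use` entry with `name` and `input`.

```python
from typing import Any, Callable, Dict, List

ModelResponse = Dict[str, Any]  # {"text": ...} or {"tool_use": {"name": ..., "input": {...}}}
ToolFn = Callable[[Dict[str, Any]], str]


def run_agent(
    settings: Dict[str, str],                     # 1. agent settings (e.g. one AgentSettings record)
    render_prompt: Callable[[Dict[str, str]], str],
    tool_specs: List[Dict[str, Any]],
    tools: Dict[str, ToolFn],
    invoke_model: Callable[..., ModelResponse],
    conversation: List[Dict[str, str]],
) -> str:
    system_prompt = render_prompt(settings)       # 2. build the system prompt
    # 3. + 4. attach the tool specifications and invoke the model
    response = invoke_model(settings["model"], system_prompt, tool_specs, conversation)
    tool_use = response.get("tool_use")
    if tool_use is None:
        return response["text"]                   # 6. plain answer, e.g. a follow-up question
    run_tool = tools[tool_use["name"]]            # 5. the model only requested the tool; we run it
    return run_tool(tool_use["input"])            # 6. the tool's response text is the agent's reply
```

Injecting the model call keeps the flow testable with a fake model, which is roughly what stepping through the action in the ODC debugger gives you.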

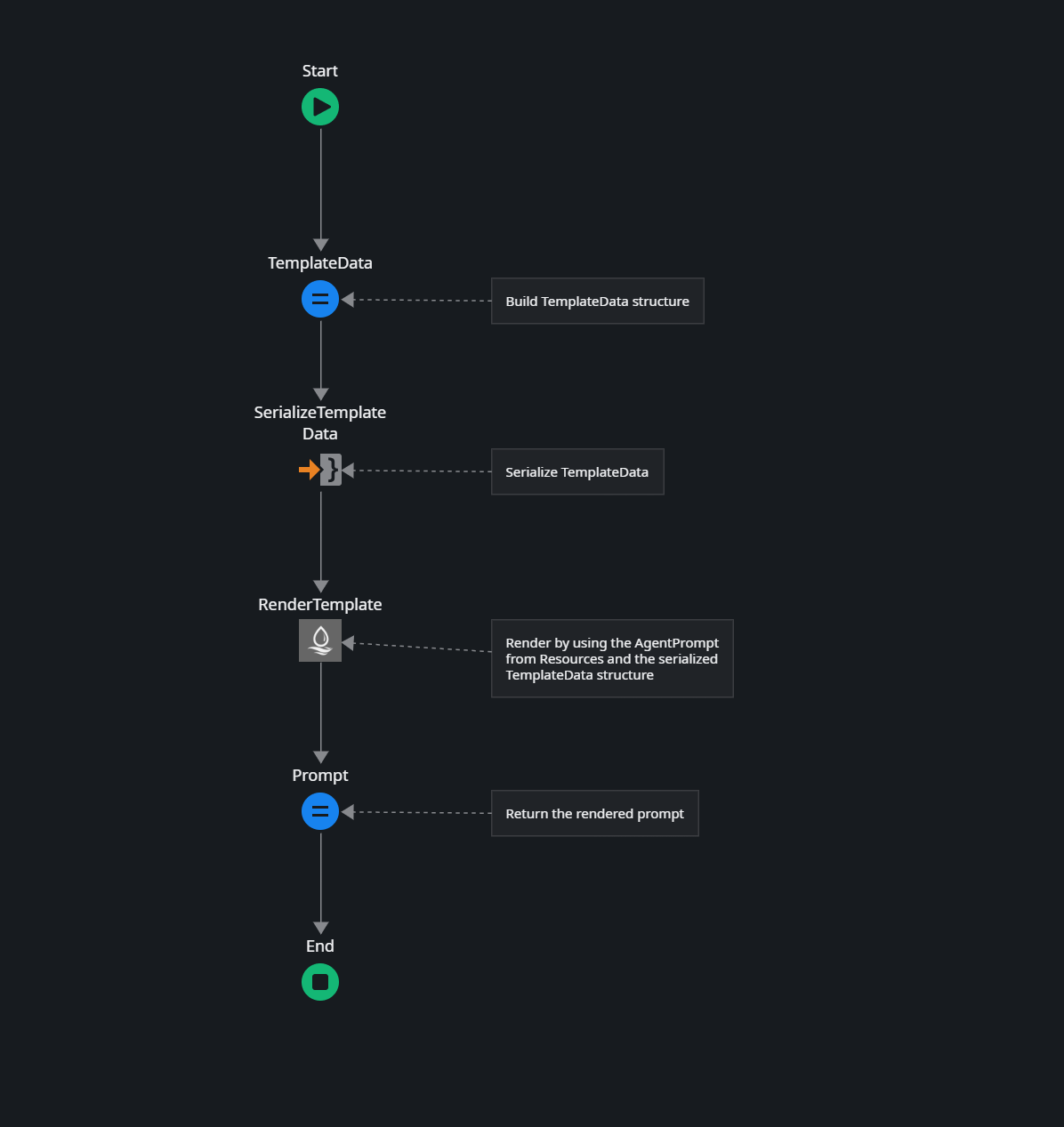

Let's take a closer look at RenderAgentSystemPrompt.

RenderAgentSystemPrompt Action

This action uses the RenderTemplate action from the LiquidFluid external logic Forge component. The template, AgentPrompt, is located in Data - Resources - Agents. LiquidFluid allows you to merge JSON data with a text template that contains double-curly-brace ({{ }}) placeholders, based on the Shopify Liquid templating language.

The template for an agent looks like this:

```liquid
You are an {{ role }}. You will engage in an open-ended conversation, providing helpful and accurate information.

**Scope**

Your scope of providing assistance is limited to the following:

{{ description }}.

**Instructions**

{{ instructions }}

**Goal and expected result**

{{ goal }}

**Additional Instructions**

- Provide well-reasoned responses that directly address the user's intent.
- Provide additional explanations where appropriate.
- Ask for clarification if parts of the question are ambiguous.
- Ask for additional information if the context does not provide sufficient information.
- Do not assist with user questions or intents that are outside of the given scope. Decline such requests and respond respectfully.
```

TemplateData - Here we assign the parameters to a local structure variable that matches the placeholders in the template.

SerializeTemplateData - Generates the stringified JSON document that is required by the LiquidFluid external logic.

RenderTemplate - Merges the data with the agent template from Data - Resources - Agents.

Prompt - Assigns the rendered prompt to the output.
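To show what the merge step does, here is a deliberately simplified Python stand-in for RenderTemplate. It only replaces `{{ name }}` placeholders with values from the serialized JSON data, rendering unknown names as empty strings (as Liquid does for undefined variables by default). It is not Liquid: there are no filters, no dotted names, and no `{% for %}` tags. `render_placeholders` is a hypothetical helper; a real Python project would use a full Liquid implementation.

```python
import json
import re

# Matches {{ name }} with optional whitespace inside the braces.
PLACEHOLDER = re.compile(r"\{\{\s*([A-Za-z_][A-Za-z0-9_]*)\s*\}\}")


def render_placeholders(template: str, template_data_json: str) -> str:
    """Merge a JSON document into {{ name }} placeholders; unknown names become empty strings."""
    data = json.loads(template_data_json)  # the stringified JSON from SerializeTemplateData
    return PLACEHOLDER.sub(lambda m: str(data.get(m.group(1), "")), template)


template = "You are a {{ role }}.\n**Goal and expected result**\n{{ goal }}"
data = json.dumps({"role": "social media expert", "goal": "A LinkedIn post draft."})
prompt = render_placeholders(template, data)
```

Serializing to JSON first mirrors the lab's design: the template engine never sees ODC structures, only a JSON document whose keys match the placeholder names.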

GetProductCreateToolSpec Action

Switch to the GetProductCreateToolSpec server action in Logic - Server Actions - Tools.

A tool specification consists of the following:

Name - A tool descriptor that identifies a tool.

Description - A detailed explanation of the tool and when it should be used.

Schema - A JSON schema with required and optional input parameters.

Our Product Create Tool includes the following:

Name - create_product

Description - Creates a new product in the product catalog. This tool requires a user to specify a name, description, and price.

The parameter schema is loaded from Data - Resources - Tools and looks like this:

```json
{
  "type": "object",
  "properties": {
    "name": {
      "type": "string",
      "description": "Name of the product"
    },
    "description": {
      "type": "string",
      "description": "Short description of the product"
    },
    "price": {
      "type": "number",
      "description": "Price of the product."
    }
  },
  "required": ["name", "description", "price"]
}
```

The schema defines three required properties: name, description, and price.
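The model extracts these parameters, but nothing guarantees that what comes back actually matches the schema. As an optional safeguard that is not part of the lab, a Python check like the following compares the tool input against the schema's `required` list and the simple `string`/`number` types before a record is created. It only handles this flat schema and is not a general JSON Schema validator; `validate_tool_input` is a hypothetical name.

```python
from typing import Any, Dict, List

# The Product Create schema from above, as a Python dictionary.
PRODUCT_SCHEMA: Dict[str, Any] = {
    "type": "object",
    "properties": {
        "name": {"type": "string", "description": "Name of the product"},
        "description": {"type": "string", "description": "Short description of the product"},
        "price": {"type": "number", "description": "Price of the product."},
    },
    "required": ["name", "description", "price"],
}


def validate_tool_input(tool_input: Dict[str, Any], schema: Dict[str, Any]) -> List[str]:
    """Return a list of problems; an empty list means the input looks usable."""
    problems = [f"missing '{key}'" for key in schema.get("required", []) if key not in tool_input]
    for key, spec in schema.get("properties", {}).items():
        if key not in tool_input:
            continue
        value = tool_input[key]
        if spec.get("type") == "string" and not isinstance(value, str):
            problems.append(f"'{key}' should be a string")
        # bool is a subclass of int in Python, so exclude it explicitly.
        if spec.get("type") == "number" and (isinstance(value, bool) or not isinstance(value, (int, float))):
            problems.append(f"'{key}' should be a number")
    return problems
```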

Handling the Model Response

The most interesting part is handling the model response.

After running the Converse action, the model can respond with either a text message or a tool use request. The IF condition after the model call checks for this.

If it's a regular response, we simply return the text response generated by the model. This might happen if the user didn't provide enough information for the Product Create tool.

If it's a tool request (tool_use), we know it can only be the Product Create tool since we have only one tool attached. If you have multiple tools assigned, you would need to adjust the flow to identify which tool is requested and take the appropriate action.

FilterCreateProduct - Here, we filter the list of messages from the Converse action for ToolUse.Name = "create_product".

DeserializeToolInput - The FilterCreateProduct.FilteredList.Current.ToolUse.Input property contains the extracted and serialized tool parameters, which we deserialize into a Product_Create structure.

RunProductCreateTool - This action creates a new record in our Product entity and returns a response text.

Finally, we return the response text from the RunProductCreateTool to the orchestrator.
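The same branching can be sketched in Python against the response shape of the Bedrock Converse API, where `stopReason` is `"tool_use"` when the model requests a tool (for example `"end_turn"` for a normal answer) and the output message's `content` list holds `text` and/or `toolUse` blocks. In this sketch `toolUse.input` is already a dictionary, as boto3 returns it, whereas the lab deserializes a JSON string into the Product_Create structure. `ProductCreate`, `handle_response`, and `create_product_record` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict


@dataclass
class ProductCreate:
    name: str
    description: str
    price: float


def handle_response(response: Dict[str, Any],
                    create_product_record: Callable[[ProductCreate], str]) -> str:
    content = response["output"]["message"]["content"]
    if response.get("stopReason") != "tool_use":
        # Regular answer, e.g. the model asking for a missing price.
        return "".join(block.get("text", "") for block in content)
    # FilterCreateProduct: keep only the toolUse block for our single tool.
    tool_use = next(block["toolUse"] for block in content
                    if "toolUse" in block and block["toolUse"]["name"] == "create_product")
    # DeserializeToolInput: map the extracted parameters onto a typed structure.
    product = ProductCreate(**tool_use["input"])
    # RunProductCreateTool: persist the product and return a response text.
    return create_product_record(product)
```

With several tools attached, the `next(...)` lookup would become a dispatch on `toolUse["name"]`, which is the flow change the lab mentions for multiple tools.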

Take a moment to look at the LinkedIn agent too. You'll notice that this agent is much simpler because it doesn't have an attached tool.

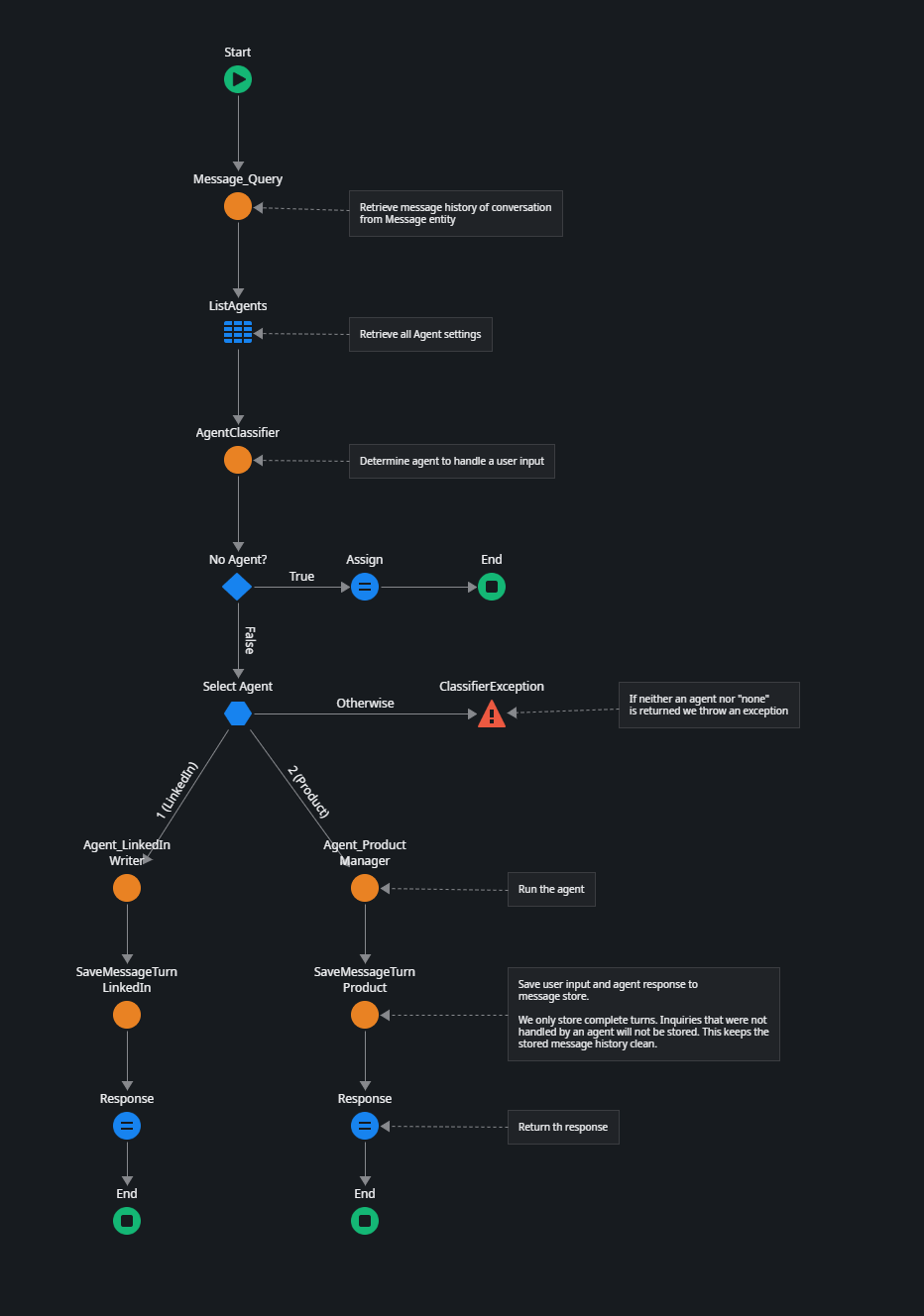

Orchestrator

The Orchestrator is the "heart" of our implementation. It manages and stores the conversation history, decides which agent should handle a user's input, and calls the agent implementation.

Open the Orchestrator server action in Logic - Server Actions - Agents.

The Orchestrator takes the last user input message as a parameter and then performs the following steps:

Message_Query - Retrieves the message history from the Message entity.

ListAgents - Retrieves the agent settings from the AgentSettings static entity.

AgentClassifier - Determines the most suitable agent to respond to the user's input message based on the message history. See details below.

Run Agent - Executes the selected agent.

SaveMessageTurn - Saves both the user input message and the model response to the Message entity.

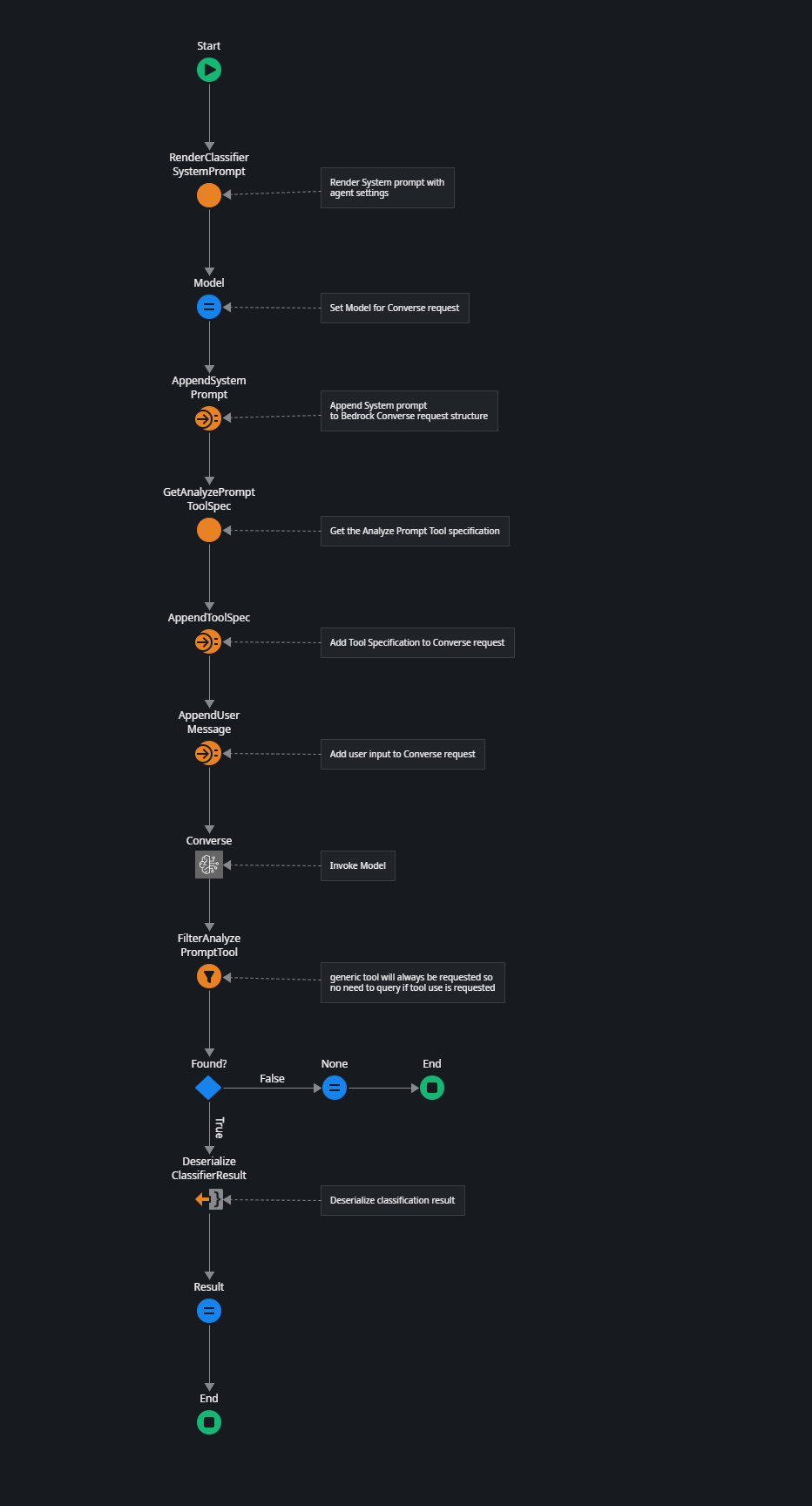

Agent Classifier

The AgentClassifier server action in Logic - Server Actions - Agents is responsible for selecting one of our agents based on user input and the message context. You'll notice that its implementation resembles a typical agent implementation, and that's exactly what it is.

There are only two differences between the Agent Classifier and a regular agent: the prompt and the tool specification.

The system prompt for the classifier looks like this.

```liquid
You are an agent dispatcher, an intelligent assistant who analyzes and evaluates requests and then forwards these requests to the most suitable agent. Your job is to understand a request, identify key entities and intentions, and determine which of the available agents is best suited to handle a request.

**Important**: A request can also be a continuation of a previous request. The conversation history together with the short name of the last agent used is provided. If a request looks as if it is a continuation of a previous request, then use the same agent as before.

Analyze the request and determine exactly one of the following available agents.

**Available Agents**

Here is the list of available agents.

<agents>
{% for agent in agents %}
<agent>
<shortName>{{ agent.shortName }}</shortName>
<description>{{ agent.description }}</description>
</agent>
{% endfor %}
</agents>

**Instructions for Agent classification**

- Agent Shortname: Choose the most appropriate agent shortname based on the nature of the request. For follow-up requests, use the same agent shortname as in the previous interaction.
- Confidence: Indicate how confident you are in the classification on a scale from 0.0 (not confident) to 1.0 (very confident).
- If you are unable to select an agent, use "none".

Handle variations in user input, including different phrasing, synonyms, and potential spelling errors. For short requests like "yes", "ok", "I want to know more", or numerical answers, treat them as follow-ups and maintain the previous agent selection.

**Conversation History**

Here is the conversation history that you need to take into account before answering. The last message of a user has the highest relevance when determining an agent.

A message entry consists of a role and the message. The role can be "user" or "assistant". "user" messages are requests from a user and "assistant" messages are responses from an agent. Assistant messages have the agent shortname in brackets before the message.

<history>
{% for message in history %}
<message>
<role>{{ message.role }}</role>
<message>{{ message.text }}</message>
</message>
{% endfor %}
</history>

**Additional Instructions**

Skip any preamble and provide only the response in the specified format.
```

In short, this prompt provides the model with information about the available agents and the message history it should consider.
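The two `{% for %}` loops expand the agent list and the conversation history into XML-like blocks. A Python sketch of that expansion, assuming each agent has a `shortName` and `description` and each history entry has a `role` and `text` (the field names used in the template). The `[shortName]` prefix for assistant messages, the `agent` key on history entries, and the function names are assumptions; the lab's exact formatting may differ.

```python
from typing import Dict, List


def render_agents(agents: List[Dict[str, str]]) -> str:
    """Expand the agent list like the <agents> loop in the classifier template."""
    items = [
        f"<agent>\n<shortName>{a['shortName']}</shortName>\n"
        f"<description>{a['description']}</description>\n</agent>"
        for a in agents
    ]
    return "<agents>\n" + "\n".join(items) + "\n</agents>"


def render_history(history: List[Dict[str, str]]) -> str:
    """Expand the history like the <history> loop; no XML escaping, as in the template."""
    items = []
    for m in history:
        text = m["text"]
        if m["role"] == "assistant":
            text = f"[{m['agent']}] {text}"  # agent short name in brackets before the message
        items.append(f"<message>\n<role>{m['role']}</role>\n<message>{text}</message>\n</message>")
    return "<history>\n" + "\n".join(items) + "\n</history>"
```

The bracketed short name is what lets the classifier keep follow-ups like "yes" or "ok" with the agent that handled the previous turn.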

The real highlight is the attached tool specification, which looks like this:

Name - analyze_prompt

Description - Inspect a prompt and provide structured output

Schema

```json
{
  "type": "object",
  "properties": {
    "userinput": {
      "type": "string",
      "description": "The original user input"
    },
    "selected_agent": {
      "type": "string",
      "description": "The short name of the selected agent"
    },
    "confidence": {
      "type": "number",
      "description": "The confidence level between 0.0 and 1.0"
    }
  },
  "required": ["userinput", "selected_agent", "confidence"]
}
```

Unlike the Product Create tool of the Product agent, the model requests this tool on every call, because it can always derive the parameters from the given prompt. This allows us to deserialize the tool input into a ClassifierResult structure every time and use its SelectedAgent property to execute an agent.
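Since the classifier's answer arrives as tool input, turning it into a dispatch decision comes down to deserializing and checking it. The Python sketch below maps the `analyze_prompt` input onto a `ClassifierResult` structure and falls back to `"none"` when the model names an agent that does not exist. The confidence threshold is a hypothetical addition, not something the lab does; tune or drop it.

```python
from dataclasses import dataclass
from typing import Any, Dict, Iterable


@dataclass
class ClassifierResult:
    user_input: str
    selected_agent: str
    confidence: float


def parse_classifier_result(tool_input: Dict[str, Any],
                            known_agents: Iterable[str],
                            min_confidence: float = 0.5) -> ClassifierResult:
    result = ClassifierResult(
        user_input=tool_input["userinput"],
        selected_agent=tool_input["selected_agent"],
        confidence=float(tool_input["confidence"]),
    )
    # Guard against made-up agent names and low-confidence picks (hypothetical policy).
    if result.selected_agent not in set(known_agents) or result.confidence < min_confidence:
        result.selected_agent = "none"
    return result
```

Treating an unknown name like "none" means the orchestrator only ever has to handle the agents it knows plus one fallback branch.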

Try and Debug

Now that we've explored the basics of orchestrator and agent implementation, I suggest you take some time to debug the implementation.

If something isn't working as expected, you may need to modify the prompts with additional instructions or constraints.

AI Agent Builder

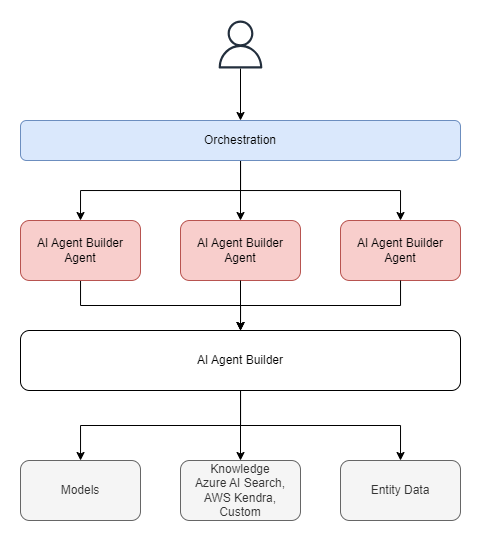

Before we conclude the lab, let's quickly discuss where AI Agent Builder fits in.

AI Agent Builder allows you to build a single agent with access to knowledge, data from entities, and tools. It fits nicely into an orchestration like the one we just explored. You would build the orchestration that handles the message context, and then execute your AI Agent Builder agents through their service actions, the same way as we did with our custom agents above.

Summary

This concludes our lab on Agentic AI with OutSystems Developer Cloud. In this lab, we explored the basics of building agent orchestration. It shows that ODC is a good alternative to existing agentic frameworks, although it currently doesn't offer as many features. However, you don't have to worry about custom coding or deploying a custom code agentic solution. OutSystems Developer Cloud, along with a few Forge components, provides everything you need to build one. The only limitation is that ODC cannot call agents in parallel, but in a human-in-the-loop scenario, this might not be necessary. ODC is rapidly adding new features, and I hope we will see parallel action calling in the platform soon.

Nevertheless, thoroughly evaluate your use-case scenarios before making a decision. The good thing is that you can always mix and match.

I hope you enjoyed it. Please leave a comment with your feedback.


Written by

Stefan Weber


Throughout my diverse career, I've accumulated a wealth of experience in various capacities, both technically and personally. The constant desire to create innovative software solutions led me to the world of Low-Code and the OutSystems platform. I remain captivated by how closely OutSystems aligns with traditional software development, offering a seamless experience devoid of limitations. While my managerial responsibilities primarily revolve around leading and inspiring my teams, my passion for solution development with OutSystems remains unwavering. My personal focus extends to integrating our solutions with leading technologies such as Amazon Web Services, Microsoft 365, Azure, and more. In 2023, I earned recognition as an OutSystems Most Valuable Professional, one of only 80 worldwide, and concurrently became an AWS Community Builder.