Cutting costs on developing RAG chatbots

Teogenes Moura


TL;DR

I'm weighing engineering effort against hosting cost by using a local and a hosted copy of the same model for different tasks;

The good news is that it works: the application behaves as expected while using both;

Maintaining the infrastructure might prove to be a dealbreaker soon, though.

One of the challenges of developing anything AI-related without relying on OpenAI infrastructure (models or libraries) is figuring out what infrastructure is worth building or paying for. While building Endobot, I've been experimenting with pricing vs. engineering effort. Today, I want to share a few observations on using a local and hosted version of the same LLM for different purposes.

The basic flow of the app is as follows:

1 - Insert new specialized content into a database. Since LLMs work with numeric vectors rather than raw text, we first convert the text content into embeddings;

2 - Get a WhatsApp message from the user;

3 - Transform the message into embeddings and compare it to the ones we have in the database;

4 - If we find matches, we enrich the standard LLM response and send it back to the user;
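Steps 3 and 4 amount to a similarity search. As a rough illustration, assuming small in-memory vectors instead of the pgvector query the app would actually run, the comparison could look something like the sketch below. The names `cosineSimilarity` and `findMatches`, the `0.75` threshold, and the sample rows are all hypothetical.

```javascript
// Cosine similarity between two equal-length numeric vectors.
function cosineSimilarity(a, b) {
  if (a.length !== b.length) {
    throw new Error(`Dimension mismatch: ${a.length} vs ${b.length}`);
  }
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) return 0; // zero vector: treat as no similarity
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Return stored rows whose embedding is close enough to the query, best first.
// The threshold and limit defaults are illustrative assumptions.
function findMatches(queryEmbedding, rows, threshold = 0.75, limit = 3) {
  return rows
    .map((row) => ({
      content: row.content,
      score: cosineSimilarity(queryEmbedding, row.embedding),
    }))
    .filter((match) => match.score >= threshold)
    .sort((x, y) => y.score - x.score)
    .slice(0, limit);
}

// Toy 3-dimensional vectors; real bge-large-en-v1.5 embeddings have 1024 dimensions.
const rows = [
  { content: "chunk about symptoms", embedding: [1, 0, 0] },
  { content: "chunk about treatment", embedding: [0.9, 0.1, 0] },
  { content: "unrelated chunk", embedding: [0, 0, 1] },
];
const matches = findMatches([1, 0, 0], rows);
console.log(matches.map((m) => m.content));
```

In the real app the database does this ranking, so a helper like this is mostly useful for reasoning about thresholds or for quick local experiments.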

Inserting embeddings into the database

Today, let's take a look at how to insert embeddings into our vector database, a PostgreSQL instance hosted by Supabase with the pgvector extension enabled.

First, we need to tell the script where our model lives since we’re relying on local storage to host our model:

    import { AutoTokenizer, AutoModel } from '@huggingface/transformers';

    // Set correct ONNX model path - AutoTokenizer will search for a 'onnx' directory automatically
    const modelPath = "/root/.cache/huggingface/hub/BAAI_bge-large-en-v1.5/";

    // Load the tokenizer from the ONNX directory
    const tokenizer = await AutoTokenizer.from_pretrained(modelPath, {
      local_files_only: true,
      use_fast: false
    });

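We break down the content loaded from a file into context-aware chunks using LangChain's RecursiveCharacterTextSplitter:

    // Assumes the @langchain/textsplitters package; older LangChain releases
    // export this class from 'langchain/text_splitter'
    import { RecursiveCharacterTextSplitter } from '@langchain/textsplitters';

    const splitter = new RecursiveCharacterTextSplitter({
      chunkSize: 1024, // Maximum chunk size, measured in characters
      chunkOverlap: 100, // Characters shared between neighboring chunks
      separators: ["\n\n", "\n", " "], // Prefer paragraph, then line, then word boundaries
    });

    // `text` is the content loaded from the file
    const initialChunks = await splitter.createDocuments([text]);
    console.log(`🔹 LangChain created ${initialChunks.length} character chunks`);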
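To make the idea of trying separators in order more concrete, here is a simplified standalone sketch. It is not LangChain's implementation: it ignores `chunkOverlap`, and the function name `recursiveSplit` is made up for illustration. It only shows the general strategy of splitting on the coarsest separator first and falling back to finer ones when a piece is still too long.

```javascript
// Simplified illustration of recursive character splitting (no overlap handling).
function recursiveSplit(text, chunkSize, separators) {
  if (text.length <= chunkSize) return text.length > 0 ? [text] : [];

  const [separator, ...rest] = separators;
  if (separator === undefined) {
    // No separators left: fall back to fixed-size cuts
    const pieces = [];
    for (let i = 0; i < text.length; i += chunkSize) {
      pieces.push(text.slice(i, i + chunkSize));
    }
    return pieces;
  }

  const chunks = [];
  let current = "";
  for (const part of text.split(separator)) {
    // Try to grow the current chunk with the next part
    const candidate = current ? current + separator + part : part;
    if (candidate.length <= chunkSize) {
      current = candidate;
      continue;
    }
    if (current) chunks.push(current);
    if (part.length > chunkSize) {
      // The part alone is too long: retry with the finer separators
      chunks.push(...recursiveSplit(part, chunkSize, rest));
      current = "";
    } else {
      current = part;
    }
  }
  if (current) chunks.push(current);
  return chunks;
}

const sample = "aaaa bbbb\n\ncccc dddd eeee";
const sampleChunks = recursiveSplit(sample, 10, ["\n\n", "\n", " "]);
console.log(sampleChunks);
```

With a 10-character limit, the first paragraph fits as a single chunk, while the second paragraph is too long and gets split again on spaces.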

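Then we use AutoTokenizer from the '@huggingface/transformers' library to re-split those character-based chunks by token count, so that each chunk's size is measured in the same units the embedding model uses:

    // Note: bge-large-en-v1.5 accepts at most 512 tokens per input, so the
    // default maxTokens of 1000 may need lowering to avoid truncated chunks
    async function chunkTextWithTokens(text, maxTokens = 1000, overlapTokens = 50) {
      // Guard against a non-positive step, which would loop forever
      if (overlapTokens >= maxTokens) {
        throw new Error("overlapTokens must be smaller than maxTokens");
      }

      // Flatten newlines, then tokenize into an array of token IDs
      const tokens = tokenizer.encode(text.replace(/\n/g, " "), { add_special_tokens: true });
      console.log("🔢 Tokenized IDs:", tokens);

      if (tokens.length === 0) {
        console.error("❌ Tokenizer produced 0 tokens. Possible issue with encoding.");
        return [];
      }

      const chunks = [];
      let start = 0;

      while (start < tokens.length) {
        const end = Math.min(start + maxTokens, tokens.length);
        const chunkTokens = tokens.slice(start, end);

        // Convert tokens back to text
        const chunkText = tokenizer.decode(chunkTokens, { skip_special_tokens: true });
        chunks.push(chunkText);

        if (end === tokens.length) break; // Avoid a trailing chunk made only of overlap
        start += maxTokens - overlapTokens; // Move forward with overlap
      }

      return chunks;
    }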
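The windowing arithmetic is easier to follow on a tiny input. The sketch below isolates just that part, using plain integers in place of real token IDs. The helper name `windowTokens` is hypothetical, and it adds two small safeguards as assumptions of mine: it rejects an overlap that is not smaller than the window, and it stops once a window reaches the end so no trailing chunk consists only of overlap.

```javascript
// Split an array of token IDs into overlapping windows.
function windowTokens(tokens, maxTokens, overlapTokens) {
  if (overlapTokens >= maxTokens) {
    throw new Error("overlapTokens must be smaller than maxTokens");
  }
  const windows = [];
  const step = maxTokens - overlapTokens; // how far each window advances
  for (let start = 0; start < tokens.length; start += step) {
    const end = Math.min(start + maxTokens, tokens.length);
    windows.push(tokens.slice(start, end));
    if (end === tokens.length) break; // last window reached the end
  }
  return windows;
}

const ids = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9];
const windows = windowTokens(ids, 4, 1);
console.log(windows);
// [ [ 0, 1, 2, 3 ], [ 3, 4, 5, 6 ], [ 6, 7, 8, 9 ] ]
```

Each window starts `maxTokens - overlapTokens` positions after the previous one, so neighboring chunks share exactly `overlapTokens` tokens.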

At this point, the token IDs logged for a chunk look like the following:

    🔢 Tokenized IDs: [
      101, 1001, 6583, 9626, 28282, 23809, 27431, 1001, 2203,
      8462, 18886, 9232, 1001, 2203, 8462, 18886, 12650, 1001,
      3756, 7849, 2818, 1001, 8879, 8067, 16570, 2080, 8879,
      23204, 18349, 18178, 3995, 2226, 4012, 10722, 3527, 999,
      9686, 3366, 2033, 2015, 20216, 2590, 2063, 10975, 2050,
      16839, 9765, 2050, 2272, 3597, 2226, 18178, 3695, 2139,
      22981, 3619, 1041, 2724, 2891, 2590, 2229, 1012, 29542,
      3207, 3597, 14163, 9956, 1051, 28667, 2239, 5369, 6895,
      23065, 2139, 29536, 9623, 17235, 22981, 3619, 4078, 3366,
      17540, 2890, 9686, 3366, 2033, 2015, 100, 9353, 4747,
      5886, 1010, 9706, 25463, 2099, 1041, 14262, 13338, 2063,
      4012,
      ... 214 more items
    ]

Now we’re ready to generate the actual embeddings and insert them into Supabase:

    // Step 3: Insert each chunk into Supabase
    for (const chunk of finalChunks) {
      const embedding = await generateEmbedding(chunk);

      // bge-large-en-v1.5 embeddings have 1024 dimensions
      if (!embedding || embedding.length !== 1024) {
        console.error(`Skipping chunk - Invalid embedding length: ${embedding?.length}`);
        continue;
      }

      const { statusText, error } = await supabase
        .from('documents')
        .insert([{ content: chunk, embedding }]);

      if (error) console.error("❌ Error inserting chunk:", error);
      else console.log("✅ Inserted chunk! API response:", statusText);
    }
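The validation step can also be pulled out into a small helper, which makes it easy to test without touching the database. This is only a sketch under my own assumptions: `isValidEmbedding` and `buildRows` are hypothetical names, and the extra check for finite numbers goes beyond the length check used in the loop above.

```javascript
const EXPECTED_DIMENSIONS = 1024; // same length the insert loop checks for

// True when the value is an array of finite numbers with the expected length.
function isValidEmbedding(embedding, dimensions = EXPECTED_DIMENSIONS) {
  return (
    Array.isArray(embedding) &&
    embedding.length === dimensions &&
    embedding.every((value) => Number.isFinite(value))
  );
}

// Pair chunks with embeddings and separate insertable rows from skipped chunks.
function buildRows(chunks, embeddings, dimensions = EXPECTED_DIMENSIONS) {
  const rows = [];
  const skipped = [];
  chunks.forEach((chunk, index) => {
    const embedding = embeddings[index];
    if (isValidEmbedding(embedding, dimensions)) {
      rows.push({ content: chunk, embedding });
    } else {
      skipped.push(chunk);
    }
  });
  return { rows, skipped };
}

// Toy example with 3 dimensions instead of 1024.
const { rows, skipped } = buildRows(
  ["first chunk", "second chunk", "third chunk"],
  [[0.1, 0.2, 0.3], null, [0.4, NaN, 0.6]],
  3
);
console.log(rows.length, skipped);
```

Since the Supabase client's `insert` accepts an array of rows, the resulting `rows` could in principle be sent in a single call instead of one request per chunk, at the cost of coarser error reporting.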

A hybrid approach

In this context, generateEmbedding is a function that could use either a local model or a hosted one. I'm testing out a hybrid approach: an offline copy of the model, stored locally, lets me tokenize text quickly and without incurring extra costs, which is what you see here:

    const modelPath = "/root/.cache/huggingface/hub/BAAI_bge-large-en-v1.5/";

    // Load the tokenizer from the ONNX directory
    const tokenizer = await AutoTokenizer.from_pretrained(modelPath, {
      local_files_only: true,
      use_fast: false
    });

But there’s also a call to a hosted version of the same model on TogetherAI that we use when generating actual embeddings:

    import { togetherAiClient, EMBEDDING_MODEL_URL } from '../config/togetherAi.js';

    const buildEmbeddingQueryPayload = (text) => {
      return {
        input: text,
        model: "BAAI/bge-large-en-v1.5",
      };
    };

    export async function generateEmbedding(text) {
      try {
        const payload = buildEmbeddingQueryPayload(text);
        const response = await togetherAiClient.post(EMBEDDING_MODEL_URL, payload);
        return response?.data?.data?.[0]?.embedding ?? null; // Expecting an array of numbers
      } catch (error) {
        console.error('Error generating embedding:', error);
        throw error;
      }
    }
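The config module imported at the top is not shown in this post, so here is a hypothetical stand-in to give an idea of what it might provide. It assumes TogetherAI's OpenAI-compatible embeddings endpoint, a Bearer API key, and a client whose `post()` resolves to an object with a `data` property holding the parsed response body, which is the shape `generateEmbedding` reads from. The factory name `createTogetherAiClient` and the injectable `fetchImpl` parameter are my own inventions so the sketch can run offline.

```javascript
// Hypothetical stand-in for ../config/togetherAi.js (the real file is not shown).
const EMBEDDING_MODEL_URL = "https://api.together.xyz/v1/embeddings";

// Build a tiny client whose post() resolves to { data: <parsed JSON body> },
// matching the response.data.data[0].embedding access in generateEmbedding.
function createTogetherAiClient({ apiKey, fetchImpl = globalThis.fetch }) {
  return {
    async post(url, payload) {
      const res = await fetchImpl(url, {
        method: "POST",
        headers: {
          Authorization: `Bearer ${apiKey}`,
          "Content-Type": "application/json",
        },
        body: JSON.stringify(payload),
      });
      if (!res.ok) {
        throw new Error(`Embedding request failed with status ${res.status}`);
      }
      return { data: await res.json() };
    },
  };
}

// Offline demo: a fake fetch that returns a 1024-dimensional vector of zeros.
const fakeFetch = async () => ({
  ok: true,
  status: 200,
  json: async () => ({ data: [{ embedding: new Array(1024).fill(0) }] }),
});

const demoClient = createTogetherAiClient({ apiKey: "test-key", fetchImpl: fakeFetch });
const demoEmbedding = demoClient
  .post(EMBEDDING_MODEL_URL, { input: "hello", model: "BAAI/bge-large-en-v1.5" })
  .then((response) => response.data.data[0].embedding);
demoEmbedding.then((embedding) => console.log(embedding.length));
```

Injecting the fetch function keeps the client testable without network access or API credits; in production the default global `fetch` (available in recent Node.js versions) would be used with a real key.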

This is an unconventional approach, so now is a good time to weigh the pros and cons. Here are a few things I've observed while trying it out:

Pros

We can run the project on a very tight budget. Right now we're hosted on the most basic DigitalOcean droplet (1 GB RAM + 25 GB SSD), and the model's cost on TogetherAI is reasonably low ($0.02). We're not yet getting high-volume traffic, though, so this might change.

I can run a resource-intensive part of the project on my own machine. Since I can run the logic that inserts embeddings into our database from my computer, we don't need to upgrade the DigitalOcean droplet to perform this task.

Cons

I'm running into the downside of doing everything myself: I had a major issue today because the @huggingface/transformers library could not load a local model that isn't in the ONNX format, so I had to spend quite some time writing a script to convert the version of the model available in Hugging Face's repository into ONNX. There's a chance I'll write another blog post about it later, but for now, you can check it out here.

There's a decent chance I'm over-engineering this, so I may switch to relying solely on TogetherAI's endpoint. The aforementioned ONNX issue led me to modify the project's Dockerfile significantly and include a conditional to run the insert-embedding logic only locally. While this isn't an issue per se, I'd prefer to keep things as lean as possible in the long term: I want to stay flexible about AI model hosting vendors, but I also don't want to spend too much time on MLOps.

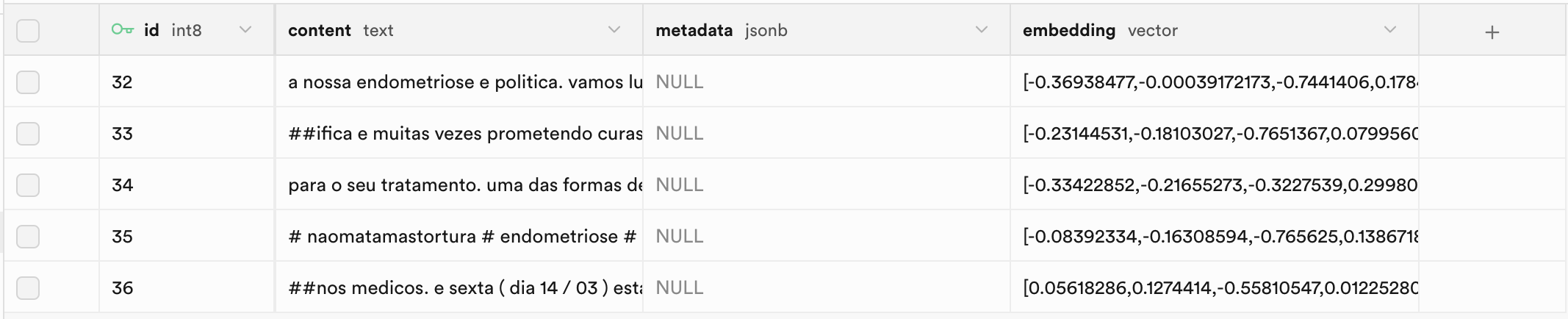

Anyway, after running our code this is the result:

As we can see from Supabase, we're now storing chunks of text in our “content” column for future reference and generating an embedding for each of them so we can enrich LLM responses.

Conclusion

While this has been an interesting experiment, I'm still not sure this approach is viable long term. Right now everything is running smoothly and we’re able to insert custom content into our vector database. At some point, though, the local version of the model could drift out of sync with the one living on TogetherAI's servers, and that worries me. I double-checked that the two are the same version of the same model, but the hosted side is still a bit of a black box that could lead to issues in the future.

If this proves unsustainable, I'll probably generate tokens without relying on a local model and leave that responsibility to a third party. However, if this path proves successful, we'll have ensured the high quality of our embeddings by using token-based chunks while learning a bit about hosting models.

Whatever the way forward is, I'm happy to share this journey with you.

Thank you for reading.
