System Design: Replication vs. Redundancy

Omolere Asama


When discussing system design, it is common to come across closely related terms that are easily muddled up or conflated. Two such terms are Replication and Redundancy.

In good system design, a system should be highly operational and accessible, and also able to run without unexpected failures or faults. These characteristics of a well-designed system are known as Availability and Reliability respectively. Replication and Redundancy both help achieve these goals, but they serve different purposes.

Replication focuses on duplicating data or services across multiple locations to improve availability, durability and performance. Redundancy, however, ensures backup components are available to prevent system failures. While both strategies contribute to fault tolerance, i.e. the ability of a system to withstand unexpected failures, they are not interchangeable. Understanding their distinctions and use cases is key to building resilient and scalable systems.

Replication

Replication is essentially the duplication of content across multiple locations or systems. Think of having your pictures, videos and important files both on your device and in the cloud via some cloud service. You do this because you recognize the danger of keeping all your data in one place: if you lose access to that source (in this case, your phone or laptop), all of your data is gone. In system design, this scenario is known as a Single Point of Failure (SPOF), i.e. a single weak spot in a system that brings everything down if it fails. Think of that one Jenga block whose removal sends the entire tower crumbling.

Types of Replication

Replication comes in two types, depending on when data is copied relative to the user's action (a write): either as part of the write itself, before it is acknowledged, or after it. These two types are Synchronous and Asynchronous Replication.

Synchronous Replication: If you have ever used a document editing service that saves every change you make to the cloud as you type, you may have noticed that it stops you from making further changes whenever your internet connection fails and it cannot sync your work. This can come across as pretty annoying, but what you see at play is synchronous replication. In this approach, an action is only reported as complete to the client once the data has been copied to all replicas (or to a configured set of them). This guarantees strong consistency across those nodes, but it can keep users waiting, and annoyed. This added delay, known as latency, is especially noticeable in geographically distributed systems, where a write may have to wait for replicas on other continents. PostgreSQL, for example, supports this approach through its synchronous replication and synchronous commit settings.
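To make the blocking behaviour concrete, here is a minimal in-memory sketch of synchronous replication. It assumes a single primary and a hypothetical `Replica` class (not any real database's API). The primary acknowledges a write only after every replica holds it, and refuses the write if any replica is unreachable, much like the editor that blocks changes while you are offline.

```python
class Replica:
    """Hypothetical in-memory replica holding key/value pairs."""

    def __init__(self, name: str):
        self.name = name
        self.data = {}
        self.online = True

    def apply(self, key: str, value: str) -> None:
        self.data[key] = value


class SyncPrimary:
    """Hypothetical primary that only acknowledges writes once every replica has them."""

    def __init__(self, replicas: list[Replica]):
        self.data = {}
        self.replicas = replicas

    def write(self, key: str, value: str) -> str:
        unreachable = [r.name for r in self.replicas if not r.online]
        if unreachable:
            # Reject the write instead of letting the copies diverge.
            raise ConnectionError(f"cannot reach {unreachable}; write rejected")
        self.data[key] = value
        for replica in self.replicas:
            replica.apply(key, value)
        return "ack"  # sent only after every replica holds the new value


replicas = [Replica("eu-west"), Replica("us-east")]
primary = SyncPrimary(replicas)
primary.write("doc:1", "draft v1")

replicas[1].online = False  # simulate losing the connection to one replica
try:
    primary.write("doc:1", "draft v2")
except ConnectionError as err:
    print(err)  # the client is told the save failed
```

Because the second write is rejected rather than half-applied, every node still holds "draft v1". Real systems use more careful commit protocols, but the trade-off is the same: consistency is paid for with waiting, or with refusing work.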

Asynchronous Replication: Some services mark a user action as complete right away so users can move on, while the replication process is still running in the background. This is asynchronous replication. The primary node processes the user's write operation and then propagates the changes to replicas in the background. The client receives confirmation as soon as the primary completes the write, without waiting for replicas to update. This reduces latency but opens a window during which replicas hold outdated data. The delay between a write landing on the primary and reaching a replica is called Replication Lag, and it grows if replication is slowed down or interrupted. Replication lag can lead to Stale Reads, i.e. replicas serving outdated data back to users. MySQL's built-in replication, for example, is asynchronous by default, while databases such as MongoDB and Apache Cassandra let you tune how many replicas must acknowledge a write before it is confirmed.
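The sketch below models asynchronous replication under the same simplifying assumptions: a single primary, in-memory dictionaries, and a hypothetical `AsyncPrimary` class. Writes are acknowledged immediately and queued; replicas only see them when the background step `replicate()` runs, so a read in between is a stale read.

```python
from collections import deque
from typing import Optional


class AsyncPrimary:
    """Hypothetical primary that acknowledges first and replicates later."""

    def __init__(self, replica_names: list[str]):
        self.data = {}
        self.replicas = {name: {} for name in replica_names}
        self.backlog = deque()  # (key, value) changes not yet shipped to replicas

    def write(self, key: str, value: str) -> str:
        self.data[key] = value
        self.backlog.append((key, value))
        return "ack"  # returned before any replica has the change

    def replicate(self) -> None:
        # In a real system this runs continuously in the background.
        while self.backlog:
            key, value = self.backlog.popleft()
            for store in self.replicas.values():
                store[key] = value

    def read_from(self, replica_name: str, key: str) -> Optional[str]:
        return self.replicas[replica_name].get(key)


primary = AsyncPrimary(["eu-west", "us-east"])
primary.write("avatar:42", "new.jpg")
stale = primary.read_from("us-east", "avatar:42")  # replication lag: not there yet
primary.replicate()
fresh = primary.read_from("us-east", "avatar:42")  # replica has caught up
```

The gap between `write()` and `replicate()` is the replication lag; if the primary crashed inside that gap, the acknowledged change would never reach the replicas.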

Which to choose?

Brewer's Theorem (the CAP Theorem) describes the trade-off between consistency and availability during a network partition; its extension, PACELC, adds that even without a partition, replication forces a fundamental trade-off between consistency and latency. With asynchronous replication, we reduce latency at the cost of temporarily serving inconsistent data, while with synchronous replication, we accept higher latency to give users consistently up-to-date data.
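A rough back-of-the-envelope model shows why this trade-off bites hardest in geographically distributed systems. Assuming replicas are contacted in parallel and ignoring retries and processing time, a synchronous write cannot be acknowledged until the slowest replica answers, whereas an asynchronous write is acknowledged right after the local write. The round-trip times below are made-up illustrative numbers, not measurements.

```python
def ack_latency_ms(local_write_ms: float, replica_rtts_ms: list[float], synchronous: bool) -> float:
    """Time until the client sees its write confirmed (simplified model)."""
    if synchronous:
        # Replicas are contacted in parallel, so the slowest round trip sets the pace.
        return local_write_ms + max(replica_rtts_ms)
    # Asynchronous: confirm after the local write; replicas catch up later.
    return local_write_ms


# Hypothetical round trips: same data centre, same continent, another continent.
rtts = [1.0, 30.0, 150.0]
sync_ms = ack_latency_ms(2.0, rtts, synchronous=True)    # 2 + 150 = 152 ms
async_ms = ack_latency_ms(2.0, rtts, synchronous=False)  # 2 ms
```

A single far-away replica dominates the synchronous case, which is why some systems wait only for a majority of replicas rather than for all of them.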

With this in mind, the use case determines which of these approaches to opt for. For example, banking systems processing money transfers need immediate consistency across all nodes. When a bank processes a transaction, it must ensure the debit and credit are recorded consistently across all systems to prevent issues like double-spending; asynchronous replication here could eventually lead to disaster. In contrast, an application like Instagram uses asynchronous replication for storing and distributing photos and comments. It's acceptable if your new profile picture takes a few seconds to appear on servers worldwide, but it would absolutely suck if you had to wait five minutes for a simple photo upload.
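The double-spending risk can be shown with a deliberately simplified, hypothetical model: an account holding 100 units, balance checks served by a replica, and debits written to the primary. Two 100-unit withdrawals arrive back to back. With synchronous replication the replica sees the first debit before the second check; with asynchronous replication it may not.

```python
def total_withdrawn(synchronous: bool) -> int:
    primary = replica = 100  # account balance as seen by each node
    withdrawn = 0
    for _ in range(2):  # two 100-unit withdrawals arrive back to back
        if replica >= 100:  # the balance check is served by the replica
            primary -= 100  # the debit is written on the primary
            withdrawn += 100
            if synchronous:
                replica = primary  # replica updated before the debit is confirmed
            # Asynchronous: the replica still shows the old balance.
    return withdrawn


print(total_withdrawn(synchronous=True))   # 100: the second withdrawal is declined
print(total_withdrawn(synchronous=False))  # 200: the account is overdrawn
```

In practice such checks are made against the authoritative copy inside a transaction; the sketch only shows why a stale balance is dangerous.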

Redundancy

Redundancy is the provision of backup components to ensure reliability and availability. Think of having a solar unit or an alternative power source in case of a blackout: your desktop computer stays on during the blackout because the solar power automatically kicks in to keep your electronic devices running. In system design, this may look like having multiple servers such that when one server fails or is overloaded, the remaining servers share the load or traffic among themselves and keep the system operational. With only a single server, that server is a Single Point of Failure: if it fails, the system goes down.
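The benefit of removing a single point of failure can be put into numbers. Assuming each server is independently available a fraction `a` of the time (a strong simplification, since real failures are often correlated), a pool of `n` interchangeable servers is down only when all of them are down at once, giving an availability of 1 - (1 - a)^n.

```python
HOURS_PER_YEAR = 365 * 24


def pool_availability(single: float, servers: int) -> float:
    """Availability of n interchangeable servers, assuming independent failures."""
    return 1 - (1 - single) ** servers


def downtime_hours_per_year(availability: float) -> float:
    return (1 - availability) * HOURS_PER_YEAR


for n in (1, 2, 3):
    a = pool_availability(0.99, n)
    print(f"{n} server(s): availability {a:.6f}, ~{downtime_hours_per_year(a):.2f} h/year down")
```

Under these assumptions, one 99%-available server means roughly 87.6 hours of downtime a year, while two redundant ones bring it under an hour.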

Types of Redundancy

Redundancy may either be active or passive depending on whether the backups are actively being used to support the system or if they’re only used in cases of failure or faults.

Active Redundancy: Active redundancy involves multiple components running simultaneously, each performing the same function in parallel. If one component fails, the others continue operating without interruption. For example, in an active-active web server configuration, multiple servers handle requests simultaneously. Each server processes a portion of the traffic, and if one fails, the load balancers simply redirect traffic to the remaining servers without downtime.
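A minimal sketch of the active-active idea, assuming a simple round-robin load balancer and hypothetical server names: every healthy server takes a share of requests, and when one is marked down, its share moves to the others without any switchover step.

```python
import itertools


class RoundRobinBalancer:
    """Hypothetical round-robin load balancer over active-active servers."""

    def __init__(self, servers: list[str]):
        self.servers = servers
        self.healthy = set(servers)
        self._rotation = itertools.cycle(servers)

    def mark_down(self, server: str) -> None:
        """Called when a health check fails."""
        self.healthy.discard(server)

    def route(self) -> str:
        # Walk the rotation, skipping servers that failed their health checks.
        for _ in range(len(self.servers)):
            server = next(self._rotation)
            if server in self.healthy:
                return server
        raise RuntimeError("no healthy servers left")


lb = RoundRobinBalancer(["web-1", "web-2", "web-3"])
before = [lb.route() for _ in range(3)]  # every server takes a share
lb.mark_down("web-2")
after = [lb.route() for _ in range(4)]   # web-2's share moves to the survivors
```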

Passive Redundancy: Passive redundancy involves backup components that remain idle until needed. When a primary component fails, a mechanism detects the failure and activates the backup component. For example, a standby generator for a data centre remains dormant until the main power supply fails, at which point an automatic transfer switch activates the generator to provide backup power.
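Passive redundancy needs a way to detect the failure. A common pattern, sketched here with hypothetical names and a made-up 3-second timeout, is a heartbeat: the primary reports in regularly, and if it stays silent for longer than the timeout, the idle standby is promoted. Time is passed in explicitly so the example runs instantly.

```python
class FailoverPair:
    """Hypothetical primary/standby pair with heartbeat-based failure detection."""

    def __init__(self, primary: str, standby: str, timeout_s: float = 3.0):
        self.active = primary
        self.standby = standby  # set to None once it has been promoted
        self.timeout_s = timeout_s
        self.last_heartbeat = 0.0

    def heartbeat(self, now: float) -> None:
        """Called by the active node to say it is alive."""
        self.last_heartbeat = now

    def check(self, now: float) -> str:
        """Run by a monitor; promotes the idle standby if the active node went silent."""
        silent_for = now - self.last_heartbeat
        if silent_for > self.timeout_s and self.standby is not None:
            self.active, self.standby = self.standby, None
            self.last_heartbeat = now  # give the promoted node a fresh window
        return self.active


pair = FailoverPair("db-primary", "db-standby")
pair.heartbeat(now=0.0)
serving_at_2s = pair.check(now=2.0)  # primary still within its timeout
serving_at_5s = pair.check(now=5.0)  # 5 s of silence > 3 s timeout: standby promoted
```

Choosing the timeout is the hard part: too short and a slow but healthy primary is replaced needlessly, too long and users sit through more downtime.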

Redundancy Configurations

Depending on the number of components and how they are set up, redundancy configurations can be grouped into three main categories:

N+1 Redundancy: This configuration includes the minimum required components (N) plus one additional component for backup. This provides protection against a single-component failure.

Consider a data centre with four air conditioning units needed to maintain temperature (N=4) plus one additional unit (N+1=5). If one unit fails, the system continues to function normally.

2N Redundancy: This configuration duplicates the entire system, effectively providing two complete, independent systems. This allows for maintenance on one system while the other remains operational. For example, two independent power distribution paths in a data centre, each capable of carrying the full load. One path can be taken offline for maintenance while the other continues to power all equipment.

2N+1 Redundancy: This combines the 2N approach with one additional backup component, protecting against a further failure even during maintenance. For example, two independent UPS systems, each capable of carrying the full load, plus one extra UPS unit. One system can be taken offline for maintenance while the other still has N+1 redundancy.
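The three configurations can be compared with a little arithmetic. This sketch assumes, for simplicity, that units are interchangeable (any N working units can carry the load), which real 2N designs with separate, independent paths do not always satisfy. With N = 4, as in the air-conditioning example, it counts installed units and checks whether the system survives one failure, and one full side down for maintenance (4 units) plus one further failure.

```python
CONFIGS = {
    "N+1": lambda n: n + 1,
    "2N": lambda n: 2 * n,
    "2N+1": lambda n: 2 * n + 1,
}


def installed_units(n: int, config: str) -> int:
    return CONFIGS[config](n)


def still_running(n: int, config: str, units_down: int) -> bool:
    # Simplification: the load is carried while at least n units still work.
    return installed_units(n, config) - units_down >= n


N = 4  # units needed to carry the full load
for config in CONFIGS:
    print(
        config,
        "installed:", installed_units(N, config),
        "| survives 1 failure:", still_running(N, config, 1),
        "| survives maintenance + 1 failure:", still_running(N, config, N + 1),
    )
```

Only 2N+1 keeps running when a whole side is out for maintenance and another unit fails, which is exactly the extra protection it pays for.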

Cost Implications of Redundancy

Redundancy is not without its costs, whether in terms of purchase, space or maintenance. These include:

Capital Expenditure: Redundant systems require additional equipment, significantly increasing upfront costs. A 2N configuration, for example, essentially doubles hardware costs.

Operational Costs: More equipment means higher energy consumption, maintenance requirements, and potential licensing costs. A fully redundant data centre might consume nearly twice the power of a non-redundant one.

Space Requirements: Redundant systems require more physical space, which increases real estate costs, especially in premium locations.

Complexity Management: More complex redundant systems require specialized skill sets and sophisticated management tools, increasing labour costs.

Efficiency Trade-offs: Some redundant configurations operate less efficiently when not at full capacity. For example, UPS systems often operate at lower efficiency when lightly loaded.

Content Delivery in Streaming Services: A Case Study

Streaming services are excellent real-world examples of how redundancy and replication work together at massive scale.

Think of your favourite streaming platforms like Amazon Prime, Netflix, Spotify or Disney+. These platforms serve users in many countries across several continents. To stay in business, they must deliver consistent, high-quality experiences to millions of concurrent users worldwide while managing petabytes of data. They manage this largely through Content Delivery Networks (CDNs).

What is a CDN?

A Content Delivery Network (CDN) is a geographically distributed network of servers designed to deliver content to users with high availability and performance. Rather than serving all content from a single origin server, CDNs place copies of content (replication) on many servers, called edge servers, housed in Points of Presence (PoPs) located close to end users around the world (redundancy).

How CDNs Work

When content is uploaded to a CDN, it gets distributed and stored on multiple edge servers worldwide. When a user requests content (like a video or webpage):

The request is directed to the nearest edge server based on factors like geographic proximity and server load

This routing typically happens through DNS resolution, which maps the user to the optimal server

The edge server then delivers the requested content directly to the user, significantly reducing latency compared with fetching it from a distant origin server. Edge servers also act as caches, which further reduces latency and the number of requests that reach the origin. Because edge storage is limited, CDNs decide what content to keep on which edge servers based on criteria such as popularity, freshness and custom rules, making the best use of space, bandwidth and time.

Netflix, for example, keeps popular titles and fresh releases on edge servers close to users, since these are likely to generate the most traffic and engagement. When a user requests content that is not on the edge server (a "cache miss"), typically a less popular or older title, it is retrieved from the origin server on demand, delivered to the user, and usually cached for future requests.
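Routing a user to an edge and then serving from its cache, or falling back to the origin, can be sketched together. Everything here is an illustrative assumption: the 2-D coordinates stand in for geography, the load penalty weight is arbitrary, and the cache uses a simple least-recently-used (LRU) eviction policy, a swapped-in textbook policy rather than how any particular CDN (including Netflix's Open Connect) decides what to keep.

```python
import math
from collections import OrderedDict


class EdgeServer:
    """Hypothetical edge server with a small LRU cache in front of the origin."""

    def __init__(self, name: str, location: tuple[float, float], load: float, capacity: int = 2):
        self.name = name
        self.location = location  # stand-in for geographic position
        self.load = load          # 0.0 (idle) to 1.0 (saturated)
        self.capacity = capacity  # number of titles the cache can hold
        self.cache = OrderedDict()
        self.origin_fetches = 0

    def serve(self, title: str, origin: dict[str, str]) -> str:
        if title in self.cache:
            self.cache.move_to_end(title)   # cache hit: mark as recently used
            return self.cache[title]
        self.origin_fetches += 1            # cache miss: fetch from the origin
        content = origin[title]
        self.cache[title] = content
        if len(self.cache) > self.capacity:
            self.cache.popitem(last=False)  # evict the least recently used title
        return content


def pick_edge(user_location: tuple[float, float], edges: list[EdgeServer], load_penalty: float = 50.0) -> EdgeServer:
    """Nearest edge, penalised by load (lower score wins; weights are illustrative)."""
    return min(edges, key=lambda e: math.dist(user_location, e.location) + load_penalty * e.load)


origin = {"new-release": "<video A>", "older-title": "<video B>"}
edges = [
    EdgeServer("edge-near", location=(0.0, 0.0), load=0.9),  # closest, but busy
    EdgeServer("edge-far", location=(4.0, 0.0), load=0.1),
]
edge = pick_edge((1.0, 0.0), edges)
edge.serve("new-release", origin)  # cache miss: fetched from the origin
edge.serve("new-release", origin)  # cache hit: served from the edge
```

Here the slightly more distant but lightly loaded edge wins, and only the first request for the title travels back to the origin.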

Major CDN providers include Akamai, Cloudflare, Fastly, Amazon CloudFront, and Google Cloud CDN.

Replication in Streaming Services

Streaming services replicate the same content across multiple CDN providers simultaneously. For example, Disney+ might distribute the same video files to Akamai, Fastly, and Amazon CloudFront such that when a user presses play, intelligent switching algorithms determine which CDN should serve that particular session based on:

Current CDN performance in the user's location

Available capacity on each CDN

Cost considerations

CDN health status

Advanced implementations can even switch CDNs mid-stream if performance degrades, often without the viewer noticing.
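A session-level CDN choice along those criteria might look like the sketch below. The CDN names, metrics and weights are hypothetical, and the scoring rule (throughput minus a cost penalty, among healthy CDNs with spare capacity) is an illustrative assumption rather than any provider's actual algorithm. The second function models mid-stream switching: keep the current CDN while it performs, otherwise move to the best alternative.

```python
from dataclasses import dataclass


@dataclass
class CdnStatus:
    name: str
    healthy: bool           # result of the latest health check
    throughput_mbps: float  # measured performance for the user's location
    spare_capacity: float   # 0.0 (full) to 1.0 (plenty of headroom)
    cost_per_gb: float


def choose_cdn(cdns: list[CdnStatus], min_mbps: float = 5.0, cost_weight: float = 100.0) -> CdnStatus:
    candidates = [
        c for c in cdns
        if c.healthy and c.spare_capacity > 0 and c.throughput_mbps >= min_mbps
    ]
    if not candidates:
        raise RuntimeError("no CDN can serve this session")
    # Higher throughput is better, higher cost is worse (illustrative weighting).
    return max(candidates, key=lambda c: c.throughput_mbps - cost_weight * c.cost_per_gb)


def next_segment_cdn(current: CdnStatus, cdns: list[CdnStatus], observed_mbps: float,
                     min_mbps: float = 5.0) -> CdnStatus:
    if current.healthy and observed_mbps >= min_mbps:
        return current  # stick with the current CDN while it performs well
    alternatives = [c for c in cdns if c.name != current.name]
    return choose_cdn(alternatives, min_mbps)


cdns = [
    CdnStatus("cdn-a", healthy=True, throughput_mbps=40, spare_capacity=0.5, cost_per_gb=0.02),
    CdnStatus("cdn-b", healthy=True, throughput_mbps=35, spare_capacity=0.7, cost_per_gb=0.01),
    CdnStatus("cdn-c", healthy=False, throughput_mbps=60, spare_capacity=0.9, cost_per_gb=0.01),
]
session_cdn = choose_cdn(cdns)                                      # cdn-a
after_dip = next_segment_cdn(session_cdn, cdns, observed_mbps=2.0)  # switches to cdn-b
```

Because every CDN already holds a replica of the same files, switching only changes where the next video segment is fetched from.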

Media Asset Replication

Streaming services replicate media assets (videos, audio, subtitles) across numerous storage systems:

Master Copy Storage: The original, highest-quality version of content is stored in multiple redundant storage systems. Netflix, for example, stores original masters in Amazon S3 with cross-region replication.

Transcoding Outputs: From each master, multiple versions are transcoded, i.e. re-encoded at different quality levels and formats, generating dozens of files for a single title. Disney+ creates roughly 20 or more variants of each video to accommodate different devices and connection speeds.

CDN Distribution: These files are then replicated to hundreds or thousands of edge locations worldwide. Netflix's Open Connect Appliances (OCAs) are placed within ISP networks globally, each containing copies of popular content.
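To see why one title turns into dozens of files, here is a sketch with a hypothetical encoding ladder (five resolutions times three codecs gives 15 variants) and a deliberately simple throughput-based rule for picking which variant to stream: the highest bitrate that fits within 80% of the measured bandwidth. Real services use their own ladders and more sophisticated adaptive-bitrate logic.

```python
# Hypothetical ladder of (vertical resolution, video bitrate in kbps).
LADDER = [(240, 400), (360, 800), (480, 1400), (720, 3000), (1080, 5800)]
CODECS = ["h264", "hevc", "av1"]  # different devices support different codecs


def build_variants(title: str) -> list[str]:
    """One output file per codec and ladder rung."""
    return [f"{title}_{codec}_{height}p_{kbps}k" for codec in CODECS for height, kbps in LADDER]


def pick_rung(bandwidth_kbps: float, safety: float = 0.8) -> tuple[int, int]:
    """Highest-bitrate rung that fits the bandwidth budget, else the lowest rung."""
    budget = bandwidth_kbps * safety
    affordable = [rung for rung in LADDER if rung[1] <= budget]
    return affordable[-1] if affordable else LADDER[0]


variants = build_variants("some-title")
print(len(variants))    # 15
print(pick_rung(4000))  # (720, 3000) on a ~4 Mbps connection
print(pick_rung(300))   # (240, 400): nothing fits, so fall back to the lowest rung
```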

Metadata Replication

Beyond the actual media files, streaming services must replicate metadata such as:

Catalogue Information: Titles, synopses, cover art and categorization data are replicated across multiple database instances.

User State: Watch history, preferences, and account information are replicated to ensure consistent experiences when users switch devices.

Netflix, for example, employs Apache Cassandra, a NoSQL database, for distributed metadata storage, replicating this data across multiple regions to ensure availability even during regional outages.
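Replicating metadata across regions raises the consistency questions from earlier. In Apache Cassandra, the number of replicas that must respond is set per request by the consistency level: QUORUM requires floor(sum of replication factors / 2) + 1 replicas across all data centres, and LOCAL_QUORUM a majority within the local one. The sketch below does that arithmetic for a hypothetical keyspace with a replication factor of 3 in each of two regions (not Netflix's actual configuration), plus the usual overlap rule that reads see the latest write when R + W > RF.

```python
def quorum(replication_factors: list[int]) -> int:
    """Cassandra QUORUM: a majority of all replicas across data centres."""
    return sum(replication_factors) // 2 + 1


def local_quorum(local_rf: int) -> int:
    """Cassandra LOCAL_QUORUM: a majority of replicas in the local data centre."""
    return local_rf // 2 + 1


def reads_see_latest_write(read_replicas: int, write_replicas: int, rf: int) -> bool:
    # If R + W > RF, every read set overlaps every write set in at least one replica.
    return read_replicas + write_replicas > rf


rf_per_region = [3, 3]  # hypothetical: 3 copies in each of two regions
print(quorum(rf_per_region))            # 4 of the 6 replicas
print(local_quorum(3))                  # 2 of the 3 local replicas
print(local_quorum(3) <= 3 - 1)         # True: a region can lose one node and still answer
print(reads_see_latest_write(2, 2, 3))  # True with LOCAL_QUORUM reads and writes
print(reads_see_latest_write(1, 1, 3))  # False with ONE/ONE: stale reads are possible
```

Note that LOCAL_QUORUM on both sides only guarantees overlap within one region; a reader in another region can still see stale data until cross-region replication catches up.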

Redundancy in Streaming Services

Streaming services implement several layers of redundancy to ensure continuous service availability. These strategies keep content accessible even during major infrastructure disruptions, delivering the uninterrupted viewing and listening experience that users expect.

Multi-CDN Strategy

The most common form of redundancy in streaming platforms is the multi-CDN approach, where streaming services distribute the same content across multiple CDN providers simultaneously.

Services typically diversify their providers by partnering with 2-4 major CDNs, sometimes alongside an in-house CDN such as Netflix's Open Connect. During normal operations, traffic might be distributed across these providers based on:

Cost optimization (routing to the most cost-effective CDN)

Performance (directing users to the fastest CDN for their location)

Contractual commitments (meeting minimum traffic guarantees)

Advanced implementations use real-time performance metrics to route users dynamically to the optimal CDN, often switching providers mid-session if performance degrades. CDN points of presence (PoPs) are distributed across multiple regions so that content remains available during regional outages: if an entire region becomes unavailable, traffic is automatically routed to the next closest region (cross-region failover). Services with their own CDNs (like Netflix) build redundancy into that infrastructure too, yet still maintain relationships with commercial CDNs as a backup.

Aside from CDN redundancy, other forms of redundancy exist within streaming services to ensure system resilience. They include:

Server Redundancy

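Stateless API Servers: Services like Disney+ and HBO Max deploy redundant API servers that handle user authentication, content browsing, and playback initiation. Because these servers keep no session state locally, any server can handle any request, so a failed one can simply be taken out of rotation.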

Encoding Farms: Netflix maintains redundant encoding servers to continuously process new content and re-encode existing content as encoding technologies improve.

Network Redundancy

Multiple Internet Providers: Major streaming providers connect to multiple Tier 1 internet providers in each region to ensure network path diversity.

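Redundant Routing: BGP (Border Gateway Protocol) automatically reroutes traffic over alternative paths when a primary route fails, and network operators can adjust BGP policies to steer traffic away from congested routes.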

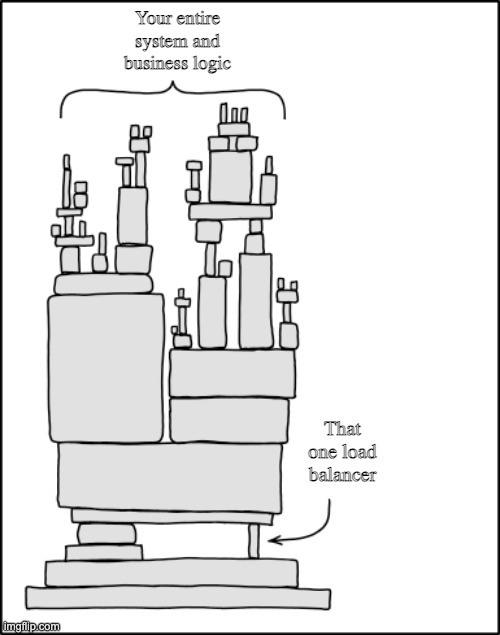

Load Balancers: Redundant load balancers distribute traffic and can redirect users if individual components become unavailable.

DNS Redundancy: Multiple DNS servers ensure users can always resolve streaming service domain names to working IP addresses.

Other forms include redundant power systems and multiple data centres.

Conclusion

Replication and redundancy are not merely distinct but also complementary strategies when building resilient systems. Replication without redundancy leaves you with multiple copies of data but no guarantee of access. Redundancy without replication ensures system availability but potentially without the necessary data.

What makes these approaches successful is recognizing that each addresses different aspects of system reliability. Most important is determining where and how to apply each for maximum benefit while managing the inevitable trade-offs in cost, complexity, and performance.

Have questions? Please leave them below or email me at temitopeasama@gmail.com!


Written by

Omolere Asama


I'm a Frontend Engineer and Technical Writer from Nigeria. Concrete design systems, accessibility and building scalable products are my passion.