Automating Data Cleaning (Part 1): Setting Up AWS Chalice with S3

Abhishek Soni


If you're new to AWS Chalice, I’ve written an article on Deploying Serverless Apps with Chalice: A Beginner’s Guide. I recommend giving it a read for a step-by-step guide on setting up and deploying your first serverless app with Chalice.

Prerequisites

Before we start, make sure you have Python 3, the AWS CLI (configured with your credentials), and Chalice installed and set up.

Creating the Chalice app

Now that we have all the prerequisites, let's create our Chalice app using the following command:

chalice new-project data-cleaning-app

Navigate to the project directory using the command:

cd data-cleaning-app

Project Structure

data-cleaning-app

├── .chalice/

├── .gitignore

├── app.py

├── requirements.txt

Creating & Configuring the S3 Bucket

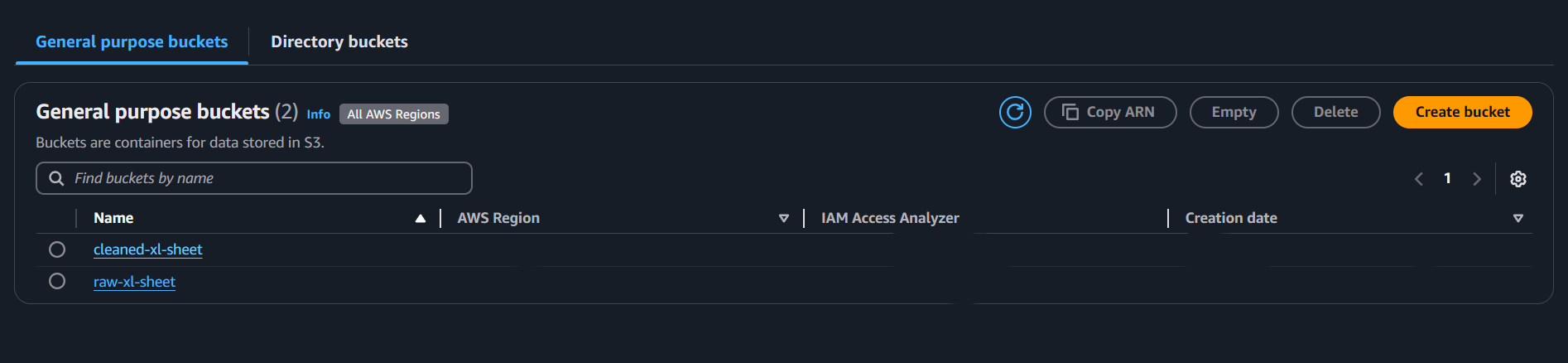

We will create two S3 buckets: one for the raw data and another for the cleaned data. You can easily create an S3 bucket using the AWS CLI. The following command will create S3 buckets named raw-xl-sheet and cleaned-xl-sheet:

aws s3 mb s3://raw-xl-sheet ; aws s3 mb s3://cleaned-xl-sheet

The mb command stands for make bucket and creates a new bucket in your default region. You should be able to see the bucket in the AWS console as shown below:
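If you end up choosing your own bucket names, a quick local check can save a failed mb call. The sketch below covers only a simplified subset of the S3 naming rules (length, allowed characters, first and last character, no adjacent dots). The helper name is_plausible_bucket_name is my own, and the check cannot tell you whether a name is already taken.

```python
import re

# Simplified subset of the S3 bucket naming rules (an assumption, not the
# full rule set): 3-63 characters, lowercase letters, digits, dots and
# hyphens only, starting and ending with a letter or digit.
_BUCKET_NAME = re.compile(r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]")


def is_plausible_bucket_name(name: str) -> bool:
    """Return True if `name` passes the simplified checks above."""
    if _BUCKET_NAME.fullmatch(name) is None:
        return False
    # Two dots in a row are not allowed.
    return ".." not in name


for candidate in ("raw-xl-sheet", "cleaned-xl-sheet", "Raw_XL_Sheet"):
    print(candidate, is_plausible_bucket_name(candidate))
```

The two names used in this article pass, while the third is rejected because of its uppercase letters and underscores.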

Implementing the S3 Event Handler

Now that the S3 buckets are created, we can move on to implementing the S3 event handler. To do this, replace the existing index function in your app.py with the following code:

# ----- rest of the code remains the same -----

@app.on_s3_event(bucket='raw-xl-sheet', events=["s3:ObjectCreated:*"])

def index(event):

    print(f"New object created: {event.key}")

# ----- rest of the code remains the same -----
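To get a feel for where event.key comes from, here is a minimal standalone sketch that pulls the bucket name and object key out of a raw S3 notification payload. As far as I know this is roughly what Chalice's event object does for you, but treat it as an illustration rather than Chalice's actual code. The sample payload is trimmed down and the helper name extract_bucket_and_key is hypothetical.

```python
from urllib.parse import unquote_plus

# Trimmed-down, made-up S3 notification payload; real events carry many
# more fields per record.
sample_event = {
    "Records": [
        {
            "eventName": "ObjectCreated:Put",
            "s3": {
                "bucket": {"name": "raw-xl-sheet"},
                "object": {"key": "reports/sales+2024.csv"},
            },
        }
    ]
}


def extract_bucket_and_key(event_dict: dict) -> tuple[str, str]:
    """Return the bucket name and decoded object key of the first record."""
    s3 = event_dict["Records"][0]["s3"]
    # Keys are URL-encoded in notifications (a space arrives as "+"),
    # so decode them before using them as object keys.
    return s3["bucket"]["name"], unquote_plus(s3["object"]["key"])


bucket, key = extract_bucket_and_key(sample_event)
print(f"New object created: {key} (bucket: {bucket})")
```

The decoding step matters once file names contain spaces or special characters, which is common for spreadsheets uploaded by hand.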

Deploy the Chalice App

So far so good! You can now deploy the Chalice app by using the following command:

chalice deploy

You should see the following output as shown below:

Creating deployment package.

Updating policy for IAM role: data-cleaning-app-dev

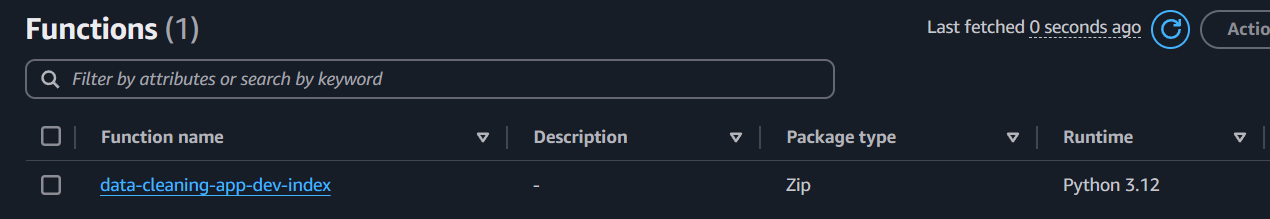

Creating lambda function: data-cleaning-app-dev-index

Configuring S3 events in bucket raw-xl-sheet to function data-cleaning-app-dev

-index

Resources deployed:

- Lambda ARN: arn:aws:lambda:XX:XXXXXX:function:data-cleaning-app-dev-index

To verify the deployment, go to the AWS Management Console for your region (e.g., us-east-1) and navigate to the Lambda service. You should see that the function has been successfully deployed.
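Judging by the deploy output, the Lambda function name appears to follow the pattern app name, stage, handler name. Here is a tiny sketch of that pattern. The helper lambda_function_name is hypothetical and the default stage of dev is an assumption based on the output above.

```python
def lambda_function_name(app_name: str, handler: str, stage: str = "dev") -> str:
    """Build a name in the <app>-<stage>-<handler> shape seen in the deploy output."""
    return f"{app_name}-{stage}-{handler}"


print(lambda_function_name("data-cleaning-app", "index"))
```

You can pass the resulting name to aws lambda get-function --function-name to confirm the function exists without opening the console.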

Testing the Chalice App with S3 File Upload

Now the most exciting part has arrived! Let's test the Chalice app with an S3 file upload! You can use the AWS CLI or the AWS Management Console to do this. Follow the steps below and let the fun begin!

Navigate to the project directory using the command:

cd data-cleaning-app; md raw_filesDownload this sample.csv file and put it in your

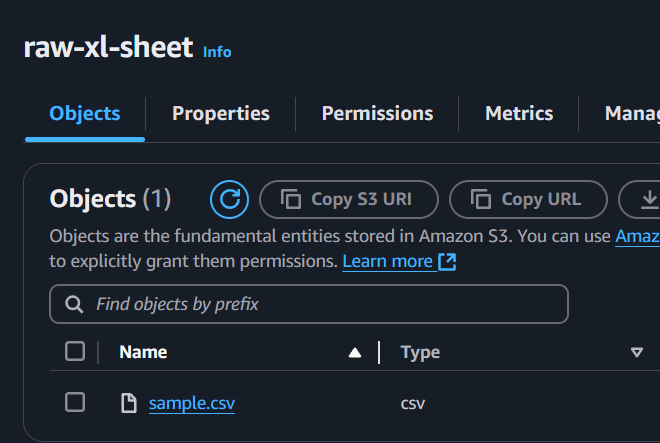

raw_filesfolder.Run the following command to test the Chalice app with an S3 file upload:

aws s3 cp raw_files/sample.csv s3://raw-xl-sheet/sample.csvYou can check if the file was uploaded:

You can to CloudWatch > Log groups to see the logs. The logs should be similar to:

XXXXXXXXXXXXX INIT_START Runtime Version: python:3.12.v56 Runtime Version ARN: arn:aws:lambda:XX::runtime:XXX

XXXXXXXXXXXXX START RequestId: XXXX Version: $LATEST

XXXXXXXXXXXXX New object created: sample.csv

XXXXXXXXXXXXX END RequestId: XXXX

XXXXXXXXXXXXX REPORT RequestId: XXXX Duration: 1.71 ms Billed Duration: 2 ms Memory Size: 128 MB Max Memory Used: 37 MB Init Duration: 123.07 ms
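The REPORT line is worth a second look, since it tells you how long the function ran and how much memory it used. Below is a small sketch that pulls those numbers out of the line with a regular expression. The pattern is my own and is only fitted to the sample line above, so it may need adjusting for other log formats.

```python
import re

# REPORT line copied from the sample log above (timestamp omitted).
report_line = (
    "REPORT RequestId: XXXX Duration: 1.71 ms Billed Duration: 2 ms "
    "Memory Size: 128 MB Max Memory Used: 37 MB Init Duration: 123.07 ms"
)

# Match "<Label>: <number> <unit>" pairs such as "Billed Duration: 2 ms".
# "RequestId: XXXX" is skipped because its value is not a number.
METRIC = re.compile(r"((?:[A-Z][a-z]+ ?)+): ([\d.]+) (ms|MB)")


def parse_report(line: str) -> dict[str, tuple[float, str]]:
    """Map each metric label to a (value, unit) pair."""
    return {
        label: (float(value), unit)
        for label, value, unit in METRIC.findall(line)
    }


for label, (value, unit) in parse_report(report_line).items():
    print(f"{label}: {value} {unit}")
```

Keeping an eye on Max Memory Used and Duration will be handy once larger files start flowing through the function.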

Wrapping Up

Well, the end of one chapter is just the beginning of another! We've made it halfway through building our data cleaning app. In Part 2, we'll use Pandas, automate data cleaning, and save the cleaned data back to another S3 bucket. Stay tuned!
