Quick note on an Agent based on GenAI Playbook and a Tool on CloudRun

HU, Pili

Files and snippets

CloudRun main body: main.py

from flask import Flask, request, jsonify

app = Flask(__name__)


@app.route('/special_function', methods=['POST'])
def special_function():
    try:
        # Expect a JSON body such as {"x": 10}
        data = request.get_json()
        x = float(data.get('x'))
        # The "special function" is simply the square of x
        result = x ** 2
        return jsonify({'result': result}), 200
    except (ValueError, TypeError, AttributeError) as e:
        # Missing, null, or non-numeric 'x'
        return jsonify({'error': f'Invalid input: {e}'}), 400


if __name__ == "__main__":
    # Cloud Run routes traffic to port 8080 by default
    app.run(host='0.0.0.0', port=8080)
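To check the handler's behaviour without deploying, or even installing Flask, the same logic can be sketched as a plain function. This is a minimal sketch and not part of the deployed service: `handle_payload` is a hypothetical name, and it returns a (body, status) pair that mirrors what the route above sends back.

```python
def handle_payload(data):
    """Mirror the route's logic: square data['x'] or report bad input.

    `handle_payload` is a hypothetical helper for illustration only.
    """
    try:
        x = float(data.get('x'))
        return {'result': x ** 2}, 200
    except (ValueError, TypeError, AttributeError) as e:
        return {'error': f'Invalid input: {e}'}, 400


if __name__ == "__main__":
    print(handle_payload({'x': 10}))     # valid input
    print(handle_payload({'x': 'abc'}))  # 'x' is not a number
    print(handle_payload(None))          # no JSON object at all
```

The three calls cover the success path and two of the exception types the route catches (ValueError and AttributeError); a missing 'x' key would hit the third, TypeError.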

Dockerfile for CloudBuild and CloudRun:

FROM python:3.9-slim
WORKDIR /app
COPY . /app
RUN pip install --no-cache-dir Flask
EXPOSE 8080
CMD ["python", "main.py"]

The deploy.sh turn-key script:

#!/bin/bash
PROJECT_ID="PLEASE INPUT HERE"

gcloud builds submit --tag "gcr.io/$PROJECT_ID/x-squared"
gcloud run deploy x-squared --image "gcr.io/$PROJECT_ID/x-squared" --platform managed --region us-central1 --allow-unauthenticated

OpenAPI configuration YAML (for Tool configuration):

openapi: 3.0.0
info:
  title: Special Function Tool
  description: A tool that takes a single float input 'x' and returns the result of a special function as a float.
  version: 1.0.0
servers:
  - url: 'SERVICE_URL' # Placeholder: replace with your Cloud Run Service URL
paths:
  /special_function:
    post:
      summary: Calculate the special function value
      operationId: calculateSpecialFunction
      requestBody:
        required: true
        content:
          application/json:
            schema:
              type: object
              properties:
                x:
                  type: number
                  format: float
                  description: The float input value for the special function.
      responses:
        '200':
          description: Special function calculation successful
          content:
            application/json:
              schema:
                type: object
                properties:
                  result:
                    type: number
                    format: float
                    description: The float result of the special function.

Process

CloudRun end point

Set PROJECT_ID in deploy.sh, then run ./deploy.sh.

The final output should look something like:

Service URL: https://YOUR-CLOUD-RUN-URL.us-central1.run.app

Take note of this URL and plug it into the YAML in place of SERVICE_URL. You will also need it for the curl test below.

We can test the deployed function from a local terminal using curl:

curl -X POST -H "Content-Type: application/json" -d '{"x": 10}' SERVICE_URL/special_function
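The same request can be sent from Python using only the standard library. This is a minimal sketch: `build_request` and `call_special_function` are hypothetical helper names, and the URL is the placeholder from the deploy output, so the actual call only succeeds once you substitute your own Service URL.

```python
import json
import urllib.request


def build_request(service_url, x):
    """Build the POST request that the curl command above sends."""
    body = json.dumps({"x": x}).encode("utf-8")
    return urllib.request.Request(
        url=service_url.rstrip("/") + "/special_function",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def call_special_function(service_url, x, timeout=10):
    """Send the request and return the 'result' field of the JSON reply."""
    request = build_request(service_url, x)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))["result"]


if __name__ == "__main__":
    # Placeholder URL (assumption): replace with your own Service URL.
    req = build_request("https://YOUR-CLOUD-RUN-URL.us-central1.run.app", 10)
    # Only inspect the request here; no network call is made.
    print(req.get_method(), req.full_url, req.data)
```

Once the real URL is in place, `call_special_function(url, 10)` should return the same value that the curl command prints in its `result` field.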

Then go to Conversational Agents and create your own custom agent of the "GenAI Playbook" type.

Add a tool called Special Function and paste the YAML above as its OpenAPI 3.0 schema.

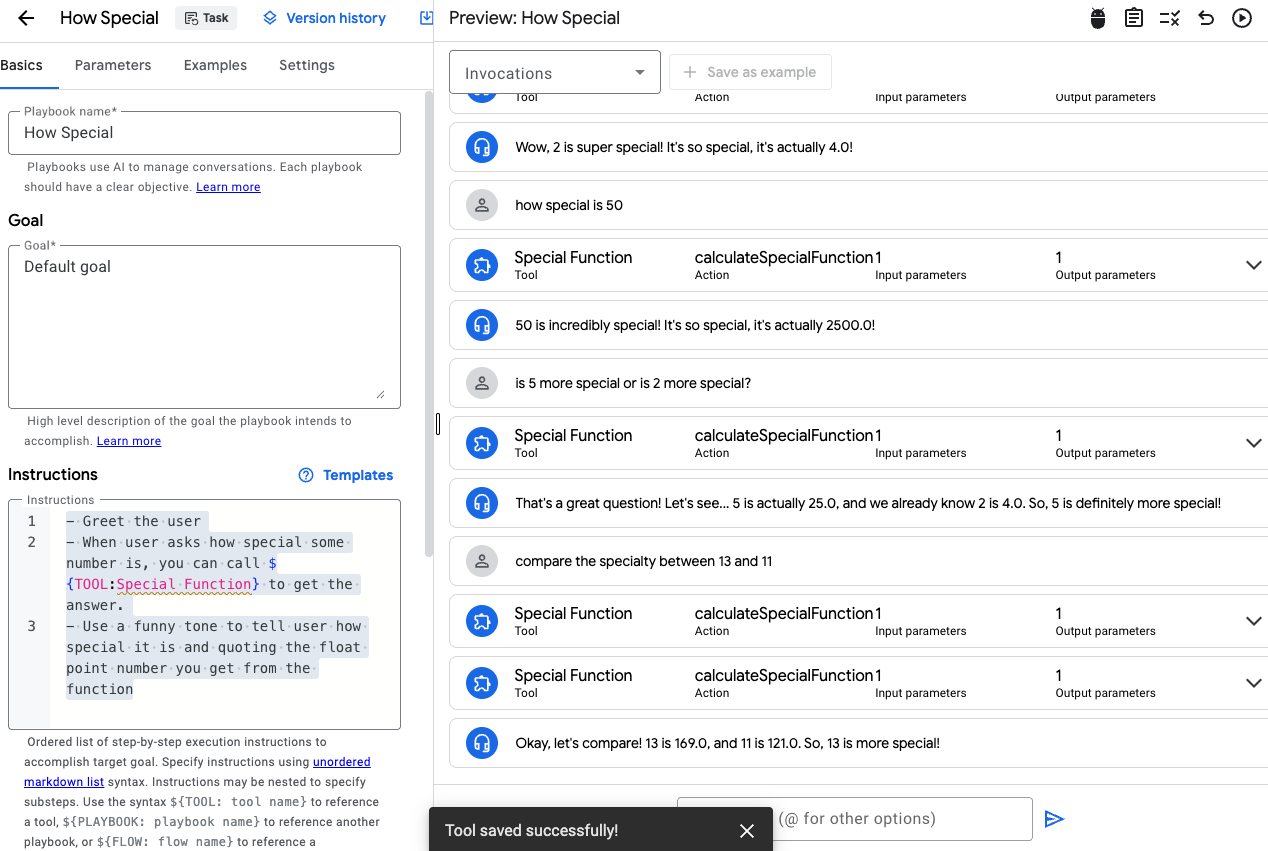

Go to the default playbook and write a simple instruction:

- Greet the user

- When user asks how special some number is, you can call ${TOOL:Special Function} to get the answer.

- Use a funny tone to tell user how special it is and quoting the float point number you get from the function

Here you go!

Merits

How is an agent different from manually calling llm() and then calling tool()?

Advantage 1 is language comprehension and autonomous decisions about (multiple) tool calls. You can see this behaviour after the question "compare the specialty between 13 and 11": the agent decides by itself to make two tool calls. This even generalizes to more numbers, as in "ok, I got a few numbers, 21, 15, 39, 44. Can you sort them by specialty?".

Advantage 2 is conversation handling. The agent can leverage the previous chat log in a multi-turn conversation. You can see this behaviour after the question "is 5 more special or is 2 more special?": there is only one tool call, because 2's specialty was already calculated earlier in the conversation.

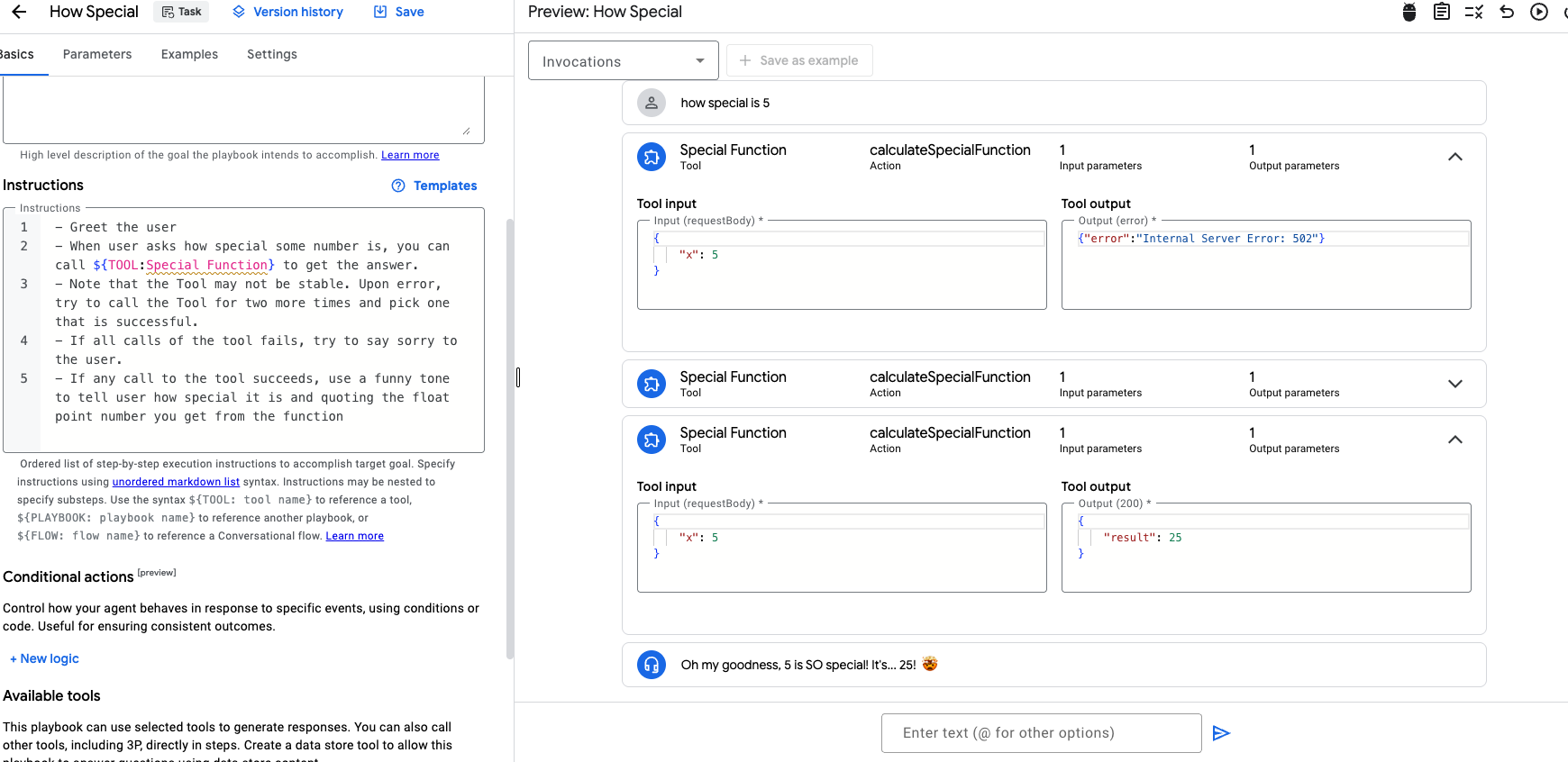
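For contrast, here is roughly what you would have to write by hand to reproduce these two behaviours without an agent. It is a minimal sketch under stated assumptions: `special_function` is a local stand-in for the Cloud Run endpoint, `ManualOrchestrator` is a hypothetical class, and a regular expression stands in for the language comprehension that the playbook model provides.

```python
import re


def special_function(x):
    """Local stand-in (assumption) for the deployed endpoint: x squared."""
    return float(x) ** 2


class ManualOrchestrator:
    """Hand-written replacement for what the agent decides on its own."""

    def __init__(self, tool):
        self.tool = tool
        self.cache = {}      # results remembered across turns
        self.tool_calls = 0  # how many times the tool was really called

    def specialty(self, x):
        """Call the tool only if this number has not been seen before."""
        if x not in self.cache:
            self.cache[x] = self.tool(x)
            self.tool_calls += 1
        return self.cache[x]

    def handle_turn(self, text):
        """Extract every number in the message and look up its specialty."""
        numbers = [float(n) for n in re.findall(r"-?\d+(?:\.\d+)?", text)]
        return {n: self.specialty(n) for n in numbers}


if __name__ == "__main__":
    bot = ManualOrchestrator(special_function)
    # First turn: two numbers, so two tool calls.
    print(bot.handle_turn("compare the specialty between 13 and 11"))
    # Second turn: 13 is already cached, so only 5 triggers a tool call.
    print(bot.handle_turn("is 13 more special or is 5 more special?"))
    print("tool calls:", bot.tool_calls)
```

Even this toy version assumes that every number in a message is meant as a tool input, and it cannot phrase an answer. The agent covers both through the model, with no orchestration code.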

Advantage 3 is error handling. We mock the function so that it returns an error with 50% probability, then instruct the agent in the playbook specification to try up to three times:

- Note that the Tool may not be stable. Upon error, try to call the Tool for two more times and pick one that is successful.

- If all calls of the tool fails, try to say sorry to the user.
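Written by hand, the retry policy in these two instructions corresponds to the loop below. This is a sketch, not the author's mock: `flaky_square` and `call_with_retries` are hypothetical names, and the 50% failure is simulated locally with `random` rather than inside the Cloud Run service. If the failures are independent, all three attempts fail with probability 0.5 ** 3 = 0.125, so the apology path is still reached in roughly one request in eight.

```python
import random


class ToolError(Exception):
    """Raised by the simulated tool when a call fails."""


def flaky_square(x, rng=random):
    """Simulated tool (assumption): fails about half the time, else x squared."""
    if rng.random() < 0.5:
        raise ToolError("simulated failure")
    return float(x) ** 2


def call_with_retries(tool, x, attempts=3):
    """Call the tool up to `attempts` times; return the first success or None."""
    for _ in range(attempts):
        try:
            return tool(x)
        except ToolError:
            continue  # try again until the attempts run out
    return None


if __name__ == "__main__":
    result = call_with_retries(flaky_square, 7)
    if result is None:
        print("Sorry, the tool is unavailable right now.")
    else:
        print("Result:", result)
```

In the playbook, the same policy is expressed in two plain-language bullet points, and the model decides when a response counts as an error.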

We can see the error handling in action:

One additional advantage of the Conversational Agents platform is serverless deployment. You can get an HTML code snippet from its UI and integrate it into your website directly.

This is very convenient for internal projects, where you only serve a small set of trusted users within a certain network perimeter.

Caveats

One caveat is that the model may be clever enough to learn the pattern from the past chat log. In our special function evaluation, the model actually learned the square function from past chats. When new requests come in after we modify the special function to return other values, the agent might still derive the result from the chat history even though the function call returns a different result.
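One way to notice this drift during testing is to compare the numbers quoted in the agent's reply with what the tool actually returned. The helper below is a hypothetical sketch (`reply_quotes_tool_result` is not part of any platform API) and assumes you have both the reply text and the tool's returned value at hand.

```python
import math
import re


def reply_quotes_tool_result(reply_text, tool_result, rel_tol=1e-6):
    """Return True if some number in the reply matches the tool's result."""
    quoted = [float(n) for n in re.findall(r"-?\d+(?:\.\d+)?", reply_text)]
    return any(math.isclose(q, tool_result, rel_tol=rel_tol) for q in quoted)


if __name__ == "__main__":
    # Hypothetical scenario: the special function now returns x cubed, so the
    # tool returns 125.0 for 5, but the model answers from the old square pattern.
    print(reply_quotes_tool_result("5 is a dazzling 25.0 special!", 125.0))
    # Here the reply quotes what the tool really returned.
    print(reply_quotes_tool_result("5 is a whopping 125.0 special!", 125.0))
```

A mismatch does not prove the model ignored the tool, since it may simply have rounded or rephrased the value, but it is a cheap signal that a conversation deserves a closer look.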
