Data Pre-processing: Essential Techniques for Machine Learning

techGyan : smart tech study


In Machine Learning and Artificial Intelligence, raw data is often unstructured and unsuitable for direct use. To make it meaningful and usable for training models, data preprocessing is essential. This process cleans, organizes, and optimizes data, improving the accuracy and efficiency of machine learning models.

In this article, presented by TechGyan, we will explore key preprocessing techniques, including Mean Removal, Scaling, Normalization, Binarization, One-Hot Encoding, and Label Encoding. These methods help transform raw data into a structured format, making it ready for effective model training.

Data preprocessing is the process of preparing raw data for analysis by cleaning and transforming it into a usable format. In data mining, it refers to cleaning, transforming, and organizing raw data into a format suitable for mining algorithms.

Its goal is to improve the quality of the data.

It covers tasks such as handling missing values, removing duplicates, and normalizing data.

It helps ensure the accuracy and consistency of the dataset.
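To make the cleaning tasks above concrete, here is a minimal pandas sketch. The table, its column names (`age`, `salary`) and its values are hypothetical, and filling gaps with the column mean is just one common imputation strategy among several.

```python
import numpy as np
import pandas as pd

# Hypothetical raw data: row 3 duplicates row 1, and two values are missing
raw = pd.DataFrame({
    "age": [25, 32, np.nan, 32, 40],
    "salary": [50000, 64000, 58000, 64000, np.nan],
})

# Remove exact duplicate rows (keeps the first occurrence)
clean = raw.drop_duplicates()

# Fill each remaining missing value with the mean of its column
clean = clean.fillna(clean.mean())

print(clean)
```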

1. Mean Removal

Mean removal is a technique used to center data by subtracting the mean value from each feature. This ensures that the dataset has a zero mean, which helps improve the performance of algorithms like Principal Component Analysis (PCA) and Gradient Descent.

Example: Suppose we have a dataset with a feature column:

[10, 15, 20, 25, 30]

Mean = (10 + 15 + 20 + 25 + 30) / 5 = 20

After mean removal (each value minus 20):

[-10, -5, 0, 5, 10]
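The same arithmetic in NumPy, as a small sketch: subtracting the mean from the example column gives the centered values shown above. For a 2-D dataset you would use `mean(axis=0)` so that each column is centered separately.

```python
import numpy as np

feature = np.array([10, 15, 20, 25, 30], dtype=float)

# Subtract the column mean (20.0) from every value
centered = feature - feature.mean()

print(centered.tolist())  # [-10.0, -5.0, 0.0, 5.0, 10.0]
```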

In practice, mean removal is usually combined with scaling so that no feature affects the model unfairly just because of its offset or spread. This combination is called standardization, and it rescales each feature to have:

Mean (average) = 0

Standard deviation (spread of data) = 1

How to Do It in Python

We use the sklearn library to process data. First, let's import it:

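import numpy as np

from sklearn import preprocessing

# Assumed sample data (3 samples, 4 features); it reproduces the example output below
data = np.array([[3, -1.5, 2, -5.4], [0, 4, -0.3, 2.1], [1, 3.3, -1.9, -4.3]])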

sklearn is a popular machine learning library in Python that includes many useful tools for processing data.

Checking the Mean and Standard Deviation

Before standardizing, let's check the mean and standard deviation of our dataset:

print("Mean: ", data.mean(axis=0))

print("Standard Deviation: ", data.std(axis=0))

Here:

mean() calculates the average of each column.

std() calculates how much the values vary from the mean.

axis=0 means the statistics are computed column-wise, i.e. once per feature.

Example Output:

Mean: [ 1.33 1.93 -0.07 -2.53]

Standard Deviation: [1.25 2.44 1.60 3.31]

Standardizing the Data

Now, we apply standardization:

data_standardized = preprocessing.scale(data)

This function rescales each column so that its mean is 0 and its standard deviation is 1.

Checking Again

print("Mean standardized data: ", data_standardized.mean(axis=0))

print("Standard Deviation standardized data: ", data_standardized.std(axis=0))

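Example Output (rounded; the means may print as tiny numbers close to 0, such as 1e-17, because of floating-point rounding):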

Mean standardized data: [ 0. 0. 0. 0.]

Standard Deviation standardized data: [1. 1. 1. 1.]
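The standardization steps above can be combined into one script. This sketch assumes a small 3×4 sample array (our own choice; it happens to reproduce the example means and standard deviations printed earlier) and checks that `preprocessing.scale` matches the formula (X - mean) / std applied column by column. Comparisons use `np.allclose` because floating-point results are rarely exactly 0 or 1.

```python
import numpy as np
from sklearn import preprocessing

# Assumed sample data (3 samples x 4 features)
data = np.array([[3, -1.5, 2, -5.4],
                 [0, 4, -0.3, 2.1],
                 [1, 3.3, -1.9, -4.3]])

# Standardization by hand: subtract each column's mean, divide by its std
manual = (data - data.mean(axis=0)) / data.std(axis=0)

# scikit-learn's helper computes the same thing
scaled = preprocessing.scale(data)

print(np.allclose(manual, scaled))            # True
print(np.allclose(scaled.mean(axis=0), 0.0))  # True
print(np.allclose(scaled.std(axis=0), 1.0))   # True
```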

Now, the data is properly scaled and ready for use in machine learning models! 🚀

2. Scaling

When working with different types of data, some features may have large values (e.g., salary in thousands) while others have small values (e.g., age in years). Models that rely on distances or gradient descent can then be dominated by the large-valued features. Scaling brings all features to a similar range, which usually improves model performance.

There are two common scaling techniques:

Min-Max Scaling (Normalization)

Standard Scaling (Standardization - already explained)

(i). Min-Max Scaling (Normalization)

Min-Max Scaling is a simple way to resize data so that all values fall within a specific range, usually 0 to 1. This helps ensure that no single feature dominates the model due to larger values.

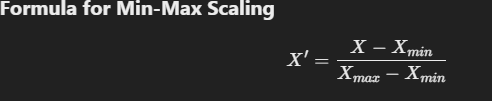

Formula for Min-Max Scaling:

$$X' = \frac{X - X_{min}}{X_{max} - X_{min}}$$

Where:

X = Original value

Xmin = Smallest value of the feature (column)

Xmax = Largest value of the feature (column)

X' = Scaled value (between 0 and 1)

Example: Scaling Data Using MinMaxScaler

Step 1: Import the Required Library

from sklearn.preprocessing import MinMaxScaler

import numpy as np

Step 2: Create Sample Data

data = np.array([[200], [400], [800], [1000], [1500]])

Step 3: Apply Min-Max Scaling

scaler = MinMaxScaler(feature_range=(0, 1)) # Scale between 0 and 1

data_scaled = scaler.fit_transform(data)

Step 4: Print the Scaled Data

print("Scaled Data:\n", data_scaled)

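Example Output (rounded to three decimals):

Scaled Data:
[[0.   ]
 [0.154]
 [0.462]
 [0.615]
 [1.   ]]

Each value is (X − 200) / (1500 − 200); for example, 400 becomes 200 / 1300 ≈ 0.154.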

Now, all values are between 0 and 1, making it easier for machine learning models to process.
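To see where the scaled values come from, this sketch applies the Min-Max formula by hand with NumPy and compares the result with `MinMaxScaler` on the same five values.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

data = np.array([[200], [400], [800], [1000], [1500]], dtype=float)

# Min-Max formula applied column-wise: (X - Xmin) / (Xmax - Xmin)
col_min = data.min(axis=0)
col_max = data.max(axis=0)
manual = (data - col_min) / (col_max - col_min)

sk = MinMaxScaler().fit_transform(data)

print(np.round(manual.ravel(), 3).tolist())  # [0.0, 0.154, 0.462, 0.615, 1.0]
print(np.allclose(manual, sk))               # True
```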

(ii). Standard Scaling (Standardization - Quick Recap)

Instead of scaling between 0 and 1, this technique transforms data to have:

Mean = 0

Standard Deviation = 1

Formula:

$$X' = \frac{X - \mu}{\sigma}$$

Where:

μ = Mean of the feature

σ = Standard deviation of the feature

Example: Scaling Data Using StandardScaler

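from sklearn.preprocessing import StandardScaler

# data is the same one-column array ([200], [400], [800], [1000], [1500]) used in the Min-Max example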

scaler = StandardScaler()

data_standardized = scaler.fit_transform(data)

print("Standardized Data:\n", data_standardized)

Example Output (rounded to two decimals; the mean of this data is 780 and its standard deviation is about 457.8):

Standardized Data:
[[-1.27]
 [-0.83]
 [ 0.04]
 [ 0.48]
 [ 1.57]]

Now, the values are centered around 0 with unit variance.
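As a quick check of the formula, this sketch computes the z-scores by hand for the same five values. NumPy's `std` defaults to the population standard deviation (`ddof=0`), which is also what `StandardScaler` uses; computing with `ddof=1` would give slightly different numbers.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

data = np.array([[200], [400], [800], [1000], [1500]], dtype=float)

mu = data.mean(axis=0)     # 780.0
sigma = data.std(axis=0)   # population std (ddof=0), about 457.82
manual = (data - mu) / sigma

sk = StandardScaler().fit_transform(data)

print(np.round(manual.ravel(), 2).tolist())  # [-1.27, -0.83, 0.04, 0.48, 1.57]
print(np.allclose(manual, sk))               # True
```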

Key Differences: Min-Max Scaling vs. Standard Scaling

| Feature | Min-Max Scaling (Normalization) | Standard Scaling (Standardization) |
| --- | --- | --- |
| Range | Scales between 0 and 1 (or a custom range) | Mean = 0, Standard Deviation = 1 |
| Effect on Outliers | Sensitive to outliers | Less sensitive to outliers |
| Use Cases | Recommended for deep learning, image processing | Best for algorithms like SVM, k-means |

When to Use Which?

✔ Use Min-Max Scaling when you need values between 0 and 1, like when working with deep learning.

✔ Use Standard Scaling when features roughly follow a normal distribution (bell curve), and for algorithms like SVM, K-Means, and Linear Regression.

Both techniques put features on a consistent scale, which often helps models train faster and perform better! 🚀

3. Normalization

Normalization is another technique to adjust data for machine learning. Unlike standardization, which makes the mean 0 and standard deviation 1, normalization scales all values between 0 and 1 (or sometimes between -1 and 1).

This is useful when our data has different ranges, ensuring that no single feature dominates the model.

How to Do Normalization in Python

First, let's import the required library:

from sklearn import preprocessing

Applying Normalization

We use the MinMaxScaler class from sklearn.preprocessing to scale values between 0 and 1.

scaler = preprocessing.MinMaxScaler()

data_normalized = scaler.fit_transform(data)

MinMaxScaler() transforms data so that all values fall within a specified range (default: 0 to 1).

fit_transform(data) learns each column's minimum and maximum and applies the transformation to our dataset.

Checking the Result

Now, let's print the normalized data:

print("Normalized Data:\n", data_normalized)

Example Output

If our original data was:

[[10, 200, 30],
 [20, 400, 40],
 [15, 300, 35]]

After normalization, it would look like:

[[0.0, 0.0, 0.0],
 [1.0, 1.0, 1.0],
 [0.5, 0.5, 0.5]]
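Here is the normalization example above as a runnable sketch, using the 3×3 array from the text. Each column is scaled independently with its own minimum and maximum, which is why the middle row becomes 0.5 in every column.

```python
import numpy as np
from sklearn import preprocessing

data = np.array([[10, 200, 30],
                 [20, 400, 40],
                 [15, 300, 35]], dtype=float)

# Each column: (X - column min) / (column max - column min)
scaler = preprocessing.MinMaxScaler()
data_normalized = scaler.fit_transform(data)

print(np.round(data_normalized, 2).tolist())
# [[0.0, 0.0, 0.0], [1.0, 1.0, 1.0], [0.5, 0.5, 0.5]]
```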

Key Difference Between Standardization and Normalization

| Technique | Transformation | When to Use |
| --- | --- | --- |
| Standardization | Mean = 0, Std Dev = 1 | When data follows a normal (bell-shaped) distribution. |
| Normalization | Values between 0 and 1 | When data has different scales and needs to be compared fairly. |

Now, our data is properly scaled and ready for machine learning models! 🚀

4. Binarization

Binarization is a simple data preprocessing technique that converts numerical values into 0s and 1s based on a threshold: values greater than the threshold become 1, and values less than or equal to it become 0. This is useful in Machine Learning when we need to turn continuous data into a binary format, making it easier for models to process.

Why Use Binarization?

✅ Helps in simplifying complex data

✅ Useful for image processing, text classification, and ML models

✅ Makes data more structured and easier to analyze

How to Do Binarization in Python?

We use sklearn.preprocessing.Binarizer() to convert values into 0s and 1s.

📌 Step 1: Import the Required Library

from sklearn.preprocessing import Binarizer

import numpy as np

📌 Step 2: Create Sample Data

data = np.array([[2], [8], [5], [10], [3]])

📌 Step 3: Apply Binarization with a Threshold of 5

binarizer = Binarizer(threshold=5)

data_binarized = binarizer.transform(data)

📌 Step 4: Print the Results

print("Original Data:\n", data)

print("Binarized Data:\n", data_binarized)

💡 Example Output:

Original Data:
[[ 2]
 [ 8]
 [ 5]
 [10]
 [ 3]]

Binarized Data:
[[0]
 [1]
 [0]
 [1]
 [0]]
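The threshold rule can also be written directly in NumPy. This sketch compares a plain `data > threshold` mask with `Binarizer`; note that the value 5, which equals the threshold, maps to 0.

```python
import numpy as np
from sklearn.preprocessing import Binarizer

data = np.array([[2], [8], [5], [10], [3]])
threshold = 5

# Strictly greater than the threshold -> 1, otherwise (including equal) -> 0
manual = (data > threshold).astype(int)

sk = Binarizer(threshold=threshold).fit_transform(data)

print(manual.ravel().tolist())     # [0, 1, 0, 1, 0]
print(np.array_equal(manual, sk))  # True
```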

Where is Binarization Used?

📌 Image Processing – Converting grayscale images into black and white

📌 Text Processing – Transforming word frequencies into binary values

📌 Machine Learning Models – Turning numeric features into yes/no indicators for classification

By using Binarization, you can optimize your machine learning models and make data processing more efficient. Try it today with TechGyan! 🚀💡

5. One-Hot Encoding

In machine learning, computers don’t understand words; they only understand numbers. But what if we have categorical data like colors, cities, or product types? That’s where One-Hot Encoding (OHE) comes in!

One-Hot Encoding is a technique that converts categorical data into a numerical format without giving any category more importance than another. This helps machine learning models understand and process categorical variables correctly.

Why is One-Hot Encoding Important?

Imagine we have a dataset with different colors:

🚗 Red

🚙 Blue

🚕 Green

If we assign numbers directly (e.g., Red = 1, Blue = 2, Green = 3), the model might think Green (3) is greater than Red (1), which is wrong!

Solution? 👉 One-Hot Encoding ✅

Easy Example of One-Hot Encoding

Let’s take an example of car colors:

| Car Color | One-Hot Encoding |
| --- | --- |
| Red | [1, 0, 0] |
| Blue | [0, 1, 0] |
| Green | [0, 0, 1] |

Each color gets a unique binary vector, meaning no ranking, just representation. 🚀

How to Apply One-Hot Encoding in Python?

Step 1: Import Required Libraries

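from sklearn.preprocessing import OneHotEncoder

import pandas as pd

Step 2: Create a Sample Dataset

data = pd.DataFrame({'Color': ['Red', 'Blue', 'Green', 'Red', 'Green']})

Step 3: Apply One-Hot Encoding

# sparse_output=False returns a dense NumPy array (scikit-learn versions before 1.2 used sparse=False instead)
encoder = OneHotEncoder(sparse_output=False)

encoded_data = encoder.fit_transform(data[['Color']])

Step 4: Display the Encoded Data

print(encoded_data)

Output:

[[0. 0. 1.]
 [1. 0. 0.]
 [0. 1. 0.]
 [0. 0. 1.]
 [0. 1. 0.]]

✅ Now, each color is represented as a separate column of 1s and 0s, making it easier for ML models to process! Note that OneHotEncoder sorts the categories alphabetically, so the columns here are Blue, Green, Red (you can check this with encoder.categories_), not the Red, Blue, Green order used in the table above.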
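To show what the encoder is doing, here is a from-scratch sketch in NumPy (not OneHotEncoder's actual implementation). It builds one column per unique color, sorted alphabetically, which is the column order OneHotEncoder uses by default, so each row has a single 1 in the Blue, Green, or Red column.

```python
import numpy as np

colors = np.array(['Red', 'Blue', 'Green', 'Red', 'Green'])

# Unique categories, sorted alphabetically
categories = np.unique(colors)

# Compare every value with every category: 1.0 where they match, else 0.0
one_hot = (colors[:, None] == categories[None, :]).astype(float)

print(categories.tolist())  # ['Blue', 'Green', 'Red']
print(one_hot.tolist())
# [[0.0, 0.0, 1.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [0.0, 1.0, 0.0]]
```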

When to Use One-Hot Encoding?

🔹 When your data has categorical values (like gender, cities, colors).

🔹 When there is no order or ranking in categories.

🔹 When you are working with machine learning algorithms that don’t understand text data.

Conclusion

One-Hot Encoding is a powerful technique for handling categorical data in machine learning. It prevents models from misinterpreting categories as ordered values, which can improve accuracy.

💡 Want to learn more about data preprocessing? Stay tuned to TechGyan for more easy-to-understand machine learning tutorials! 🚀✨

6. Label Encoding

What is Label Encoding?

In machine learning, models only understand numbers, not words. But real-world data often contains text labels like "Male/Female" or "Red/Blue/Green."

Label Encoding is a simple technique that converts these text labels into numeric values, making them machine-readable.

Why is Label Encoding Important?

Makes text data usable for machine learning models.

Speeds up computations by replacing words with numbers.

Helps algorithms understand categorical data like names, colors, and categories.

How to Perform Label Encoding in Python?

We use scikit-learn (sklearn) to apply Label Encoding easily.

Step 1: Import the Required Library

from sklearn.preprocessing import LabelEncoder

Step 2: Create Sample Data

Let's say we have a dataset with different colors:

colors = ['Red', 'Green', 'Blue', 'Green', 'Red', 'Blue']

Step 3: Apply Label Encoding

encoder = LabelEncoder()

encoded_colors = encoder.fit_transform(colors)

Step 4: Display the Encoded Values

print("Original Labels:", colors)

print("Encoded Labels:", encoded_colors)

Output:

Original Labels: ['Red', 'Green', 'Blue', 'Green', 'Red', 'Blue']

Encoded Labels: [2 1 0 1 2 0]

Here, the labels are converted into numbers. LabelEncoder assigns the codes in sorted (alphabetical) order:

Red → 2

Green → 1

Blue → 0
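LabelEncoder also remembers the mapping it learned. This sketch reads it from the `classes_` attribute (the position of each label is its code) and uses `inverse_transform` to turn the codes back into the original labels.

```python
from sklearn.preprocessing import LabelEncoder

colors = ['Red', 'Green', 'Blue', 'Green', 'Red', 'Blue']

encoder = LabelEncoder()
encoded_colors = encoder.fit_transform(colors)

# classes_ holds the sorted labels; a label's index is its numeric code
mapping = {str(label): code for code, label in enumerate(encoder.classes_)}
print(mapping)  # {'Blue': 0, 'Green': 1, 'Red': 2}

# inverse_transform maps the codes back to the original text labels
decoded = encoder.inverse_transform(encoded_colors)
print(decoded.tolist())  # ['Red', 'Green', 'Blue', 'Green', 'Red', 'Blue']
```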

Limitations of Label Encoding

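If a model treats these numbers as rankings (like 2 > 1 > 0), it may learn an order that does not exist. To avoid this, use One-Hot Encoding, covered in the previous section. Also note that scikit-learn intends LabelEncoder for target labels (y); for input features, OneHotEncoder or OrdinalEncoder is the usual choice.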

Conclusion

Label Encoding is a must-know technique for data preprocessing in Machine Learning. It converts text labels into numbers, making data ready for training models.

🔥 Stay tuned to TechGyan for more Machine Learning tutorials! 🚀


Written by

techGyan : smart tech study


TechGyan is a YouTube channel dedicated to providing high-quality technical and coding-related content. The channel mainly focuses on Android development, along with other programming tutorials and tech insights to help learners enhance their skills.

What TechGyan Offers?

✅ Android Development Tutorials 📱

✅ Programming & Coding Lessons 💻

✅ Tech Guides & Tips 🛠️

✅ Problem-Solving & Debugging Help 🔍

✅ Latest Trends in Technology 🚀

TechGyan aims to educate and inspire developers by delivering clear, well-structured, and practical coding knowledge for beginners and advanced learners.