Understanding Chunking, Embeddings, and Reconstruction in Data Processing

karthik nadar

Introduction to Data Processing Techniques

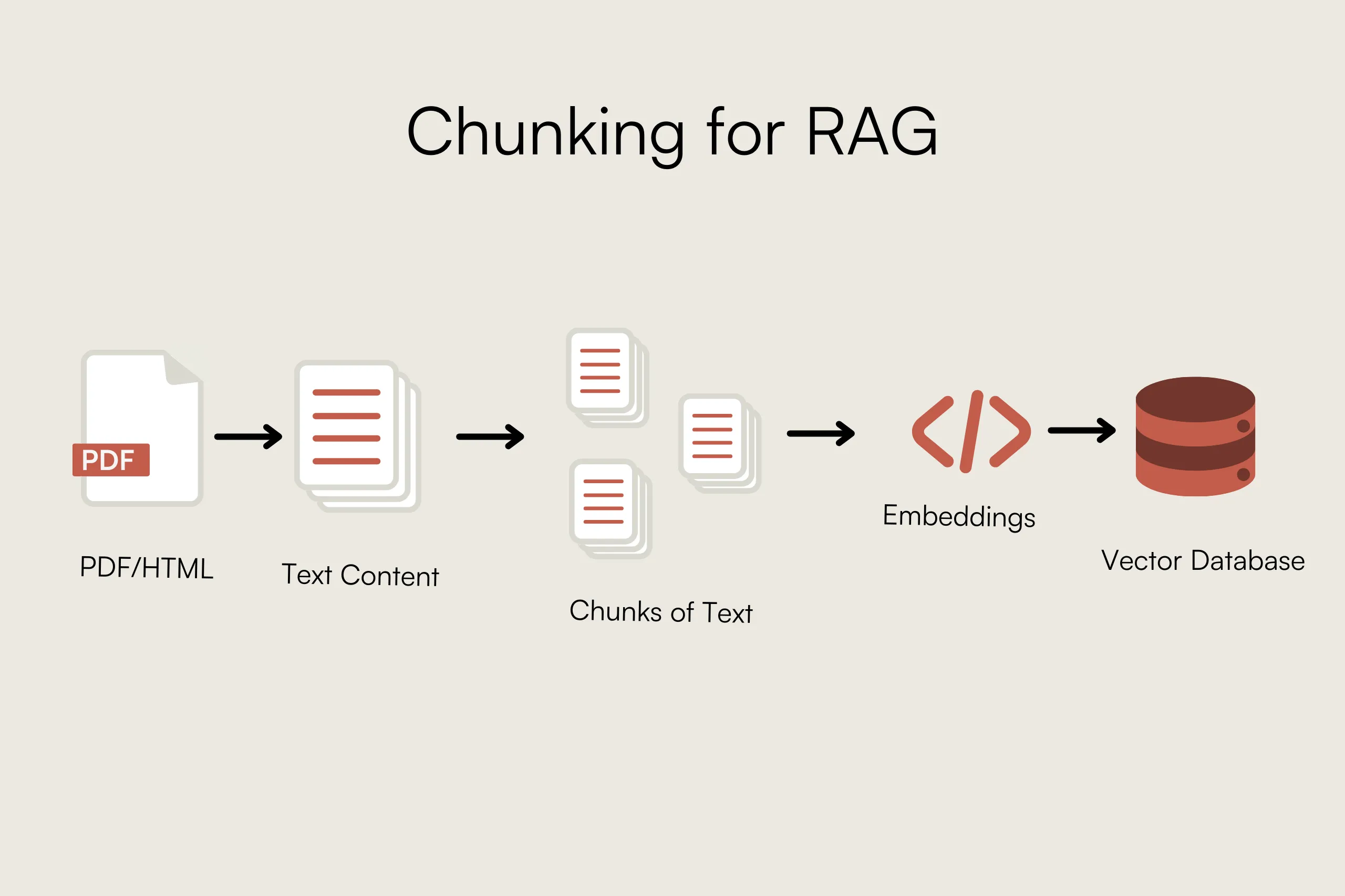

If you've ever worked with large structured datasets, you know the struggle of indexing them efficiently while still retrieving meaningful, context-aware results. When it comes to unstructured data, like PDFs or text documents, the solution is relatively straightforward. Tools like LangChain’s RecursiveCharacterTextSplitter offer out-of-the-box chunking that maintains overlapping context across chunks. This works well because natural language follows a sequential flow, and breaking it down intelligently preserves meaning.

But what about structured tabular data? I quickly discovered that the same principles don’t apply. Unlike text, tables lack a natural order or flow, and splitting them arbitrarily can destroy the relationships between rows and columns. The usual method of dumping everything into a vector database at once didn’t work either—it led to context loss, inefficient querying, and an inability to retrieve relevant information correctly.

Initially, I tried several approaches, but they all fell short:

Byte-wise chunking for entire rows failed for large databases because individual rows could exceed chunk limits.

Naïve row-based chunking destroyed relationships between columns, leading to incomplete queries.

Storing full tables as single embeddings made retrieval useless, as we couldn’t fetch granular data efficiently.

I needed a way to break down large tables into smaller pieces while keeping context intact—similar to how RecursiveCharacterTextSplitter works for text, but tailored for tabular data. That’s when I developed an approach that chunks structured data by:

Identifying primary keys to maintain row context.

Splitting attributes while preserving their relationships.

Ensuring chunks remain queryable while staying under size constraints.

In this post, I’ll walk you through how I solved this problem, step by step, with worked examples of how structured data can be stored, chunked, and retrieved efficiently while maintaining context in a vector database.

Let’s get started!

Chunking Data for Efficient Processing

Chunking is the process of breaking down large data into smaller, structured units to improve performance and avoid memory constraints.

Sample Dataset

Imagine we have the following user data:

    [
      { "id": 1, "name": "Alice", "age": 25, "email": "alice@example.com" },
      { "id": 2, "name": "Bob", "age": 30, "email": "bob@example.com" },
      { "id": 3, "name": "Charlie", "age": 35, "email": "charlie@example.com" }
    ]

id is the primary key.

name, age, and email are attributes.

Step 1: Identifying Primary Keys

Before chunking, we need to determine the primary key (PK).

const commonPkNames = ['id', 'ID', '_id', 'uuid', 'key'];

→ We check if any column names match common primary key names.

const columnCounts = {};

const uniqueValueColumns = [];

→ We track unique values for each column.

Loop Through Each Column

    // columnCounts after the loop: each column maps to the set of values seen in it
    // {
    //   id:    { 1, 2, 3 },                      // all unique ✅
    //   name:  { "Alice", "Bob", "Charlie" },
    //   age:   { 25, 30, 35 },
    //   email: { "alice@example.com", "bob@example.com", "charlie@example.com" }
    // }

→ Every column happens to be unique in this small sample, so uniqueness alone cannot decide. We select id because it is unique and also matches a common primary key name.
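
A minimal sketch of how this detection could look end to end. The function name findPrimaryKey is hypothetical, and falling back to the first unique column when no conventional name matches is an assumption of this sketch, not something the steps above prescribe.

```javascript
// Conventional key names, taken from the snippet above.
const commonPkNames = ['id', 'ID', '_id', 'uuid', 'key'];

// Hypothetical helper: picks a primary-key column from an array of row objects.
function findPrimaryKey(rows) {
  if (rows.length === 0) return null;

  // Collect the set of distinct (serialized) values seen in each column.
  const columnValues = {};
  for (const row of rows) {
    for (const [column, value] of Object.entries(row)) {
      if (!columnValues[column]) columnValues[column] = new Set();
      columnValues[column].add(JSON.stringify(value));
    }
  }

  // A column qualifies only if every row carries a distinct value for it.
  const uniqueValueColumns = Object.keys(columnValues).filter(
    (column) => columnValues[column].size === rows.length
  );

  // Prefer a unique column with a conventional key name.
  // Assumption: otherwise fall back to the first unique column, or null.
  const named = uniqueValueColumns.find((column) => commonPkNames.includes(column));
  return named ?? uniqueValueColumns[0] ?? null;
}

const users = [
  { id: 1, name: 'Alice', age: 25, email: 'alice@example.com' },
  { id: 2, name: 'Bob', age: 30, email: 'bob@example.com' },
  { id: 3, name: 'Charlie', age: 35, email: 'charlie@example.com' },
];
console.log(findPrimaryKey(users)); // id
```

Returning null when nothing qualifies gives the caller a clear signal to switch to the row-based fallback.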

Step 2: Splitting Data Into Chunks

Next, we split the dataset into chunks of at most 4000 bytes each.

Each row is transformed into a PK-attribute format:

    [
      { "pk": { "id": 1 }, "attribute": { "name": "Alice" } },
      { "pk": { "id": 1 }, "attribute": { "age": 25 } },
      { "pk": { "id": 1 }, "attribute": { "email": "alice@example.com" } },
      { "pk": { "id": 2 }, "attribute": { "name": "Bob" } }
    ]

→ Each attribute is stored separately while preserving the primary key.
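
The transformation itself is small. Here is one way it could be written, assuming the primary key column is already known; toPkAttributeEntries is a hypothetical name used only for this illustration.

```javascript
// Hypothetical helper: explodes each row into one entry per non-key attribute.
function toPkAttributeEntries(rows, pkColumn) {
  const entries = [];
  for (const row of rows) {
    for (const [column, value] of Object.entries(row)) {
      if (column === pkColumn) continue; // the key travels with every entry instead
      entries.push({
        pk: { [pkColumn]: row[pkColumn] },
        attribute: { [column]: value },
      });
    }
  }
  return entries;
}

const entries = toPkAttributeEntries([{ id: 1, name: 'Alice', age: 25 }], 'id');
console.log(JSON.stringify(entries));
// [{"pk":{"id":1},"attribute":{"name":"Alice"}},{"pk":{"id":1},"attribute":{"age":25}}]
```

Because every entry repeats the key, any single entry can be traced back to its row even when its siblings land in a different chunk.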

Step 3: Handling Large Data Entries

    if (entrySize > CHUNK_SIZE) {
      console.warn(`Oversized entry (${entrySize} bytes) detected`);
      chunks.push([entry]); // Store it as its own chunk
      continue;
    }

→ An entry larger than CHUNK_SIZE cannot be split any further, so we log a warning and store it as its own chunk.
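
To show where that check sits, here is a sketch of a full packing loop around it. The greedy strategy, the packEntries and byteSize names, and measuring size as UTF-8 JSON bytes are all assumptions of this sketch; only the 4000-byte limit and the oversized-entry branch come from the steps above.

```javascript
const CHUNK_SIZE = 4000; // byte limit quoted in the text

// Size of a value once serialized as UTF-8 JSON.
const byteSize = (value) => new TextEncoder().encode(JSON.stringify(value)).length;

// Hypothetical packer: greedily fills chunks without crossing the limit.
// It sums entry sizes and ignores the few bytes of array punctuation.
function packEntries(entries, chunkSize = CHUNK_SIZE) {
  const chunks = [];
  let current = [];
  let currentSize = 0;

  for (const entry of entries) {
    const entrySize = byteSize(entry);

    if (entrySize > chunkSize) {
      console.warn(`Oversized entry (${entrySize} bytes) detected`);
      chunks.push([entry]); // Store it as its own chunk
      continue;
    }

    // Close the current chunk when this entry would push it over the limit.
    if (currentSize + entrySize > chunkSize && current.length > 0) {
      chunks.push(current);
      current = [];
      currentSize = 0;
    }
    current.push(entry);
    currentSize += entrySize;
  }

  if (current.length > 0) chunks.push(current);
  return chunks;
}

const sample = [
  { pk: { id: 1 }, attribute: { name: 'Alice' } },
  { pk: { id: 1 }, attribute: { age: 25 } },
  { pk: { id: 1 }, attribute: { email: 'alice@example.com' } },
];
console.log(packEntries(sample).length); // 1, since all three entries fit well under 4000 bytes
```

Note that an oversized entry is emitted as soon as it is seen, so chunk order may differ slightly from entry order.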

Step 4: Fallback Chunking (If No PK Found)

If no primary key is found, we use row-based chunking:

    [
      [{ "id": 1, "name": "Alice", "age": 25, "email": "alice@example.com" }],
      [{ "id": 2, "name": "Bob", "age": 30, "email": "bob@example.com" }]
    ]

→ This groups full rows into chunks.

Understanding Query Embeddings & Reconstruction

Now, let's look at how we process embeddings and reconstruct data.

Step 1: Generating the Embedding

We convert a query into a vector representation.

    const questionEmbedding = await openai.embeddings.create({
      model: "text-embedding-ada-002",
      input: message
    });

→ Transforms the query into numerical values.

Example

message = "Show me all users above 30";

The model outputs a vector representation (1,536 numbers for text-embedding-ada-002):

[0.123, -0.456, 0.789, ...]

Step 2: Querying the Vector Database (Pinecone)

    const queryResult = await index.query({
      vector: questionEmbedding.data[0].embedding,
      filter: { connectionId: String(connectionId) },
      topK: 15,
      includeMetadata: true
    });

→ This returns the 15 stored embeddings most similar to the query vector, restricted to records whose connectionId matches the filter.
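
Conceptually, a filtered top-K query does something like the brute-force sketch below. This is an in-memory stand-in for illustration only, not how Pinecone works internally. The bruteForceQuery name and the record shape are hypothetical, and cosine similarity is an assumption, since the metric actually used depends on how the index was configured.

```javascript
// Cosine similarity between two equal-length, non-zero numeric vectors.
function cosineSimilarity(a, b) {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Hypothetical in-memory stand-in for a filtered top-K query.
function bruteForceQuery(records, { vector, filter, topK }) {
  return records
    // Keep only records whose metadata matches every filter field exactly.
    .filter((r) => Object.entries(filter).every(([k, v]) => r.metadata[k] === v))
    // Score each remaining record against the query vector.
    .map((r) => ({ id: r.id, score: cosineSimilarity(vector, r.values), metadata: r.metadata }))
    // Highest similarity first, then keep the best topK.
    .sort((x, y) => y.score - x.score)
    .slice(0, topK);
}

const records = [
  { id: 'a', values: [1, 0], metadata: { connectionId: '7' } },
  { id: 'b', values: [0, 1], metadata: { connectionId: '7' } },
  { id: 'c', values: [1, 0], metadata: { connectionId: '8' } },
];
const result = bruteForceQuery(records, {
  vector: [1, 0],
  filter: { connectionId: '7' },
  topK: 1,
});
console.log(result.map((m) => m.id)); // [ 'a' ]
```

Record c points in the same direction as the query but is excluded by the filter, which is the role connectionId plays in the real query.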

Step 3: Processing Retrieved Results

We extract relevant matches:

    {
      "matches": [
        {
          "id": "123",
          "score": 0.98,
          "metadata": {
            "type": "data",
            "data": "{ \"id\": 2, \"name\": \"Bob\", \"age\": 30, \"email\": \"bob@example.com\" }"
          }
        }
      ]
    }

→ A higher score means a better match.
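
Since metadata.data arrives as a JSON string, it has to be parsed before use. A small sketch of that extraction follows; extractRows and its minScore threshold are hypothetical additions for illustration and are not part of the pipeline described here.

```javascript
// Hypothetical helper: keeps sufficiently similar matches and parses their payloads.
function extractRows(queryResult, minScore = 0) {
  const rows = [];
  for (const match of queryResult.matches ?? []) {
    if (match.score < minScore) continue; // drop weak matches
    try {
      rows.push(JSON.parse(match.metadata.data));
    } catch {
      // Skip matches whose payload is missing or is not valid JSON.
    }
  }
  return rows;
}

const queryResult = {
  matches: [
    {
      id: '123',
      score: 0.98,
      metadata: {
        type: 'data',
        data: '{ "id": 2, "name": "Bob", "age": 30, "email": "bob@example.com" }',
      },
    },
  ],
};
console.log(extractRows(queryResult, 0.5));
```

Skipping bad payloads instead of throwing keeps one malformed record from failing the whole lookup; whether that is the right trade-off depends on how strict the application needs to be.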

Step 4: Reconstructing Data

We merge primary keys and attributes back into rows.

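    // rowsByTable[tableName] must already be initialized to {} before this loop
    chunk.entries.forEach(entry => {
      // Serialize the PK so it can serve as a lookup key for its row
      const pkKey = JSON.stringify(entry.pk);
      // First time we see this PK: start the row with the key columns
      rowsByTable[tableName][pkKey] = rowsByTable[tableName][pkKey] || { ...entry.pk };
      // Copy this entry's attribute onto the row
      Object.assign(rowsByTable[tableName][pkKey], entry.attribute);
    });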

→ Restores full rows from PK-attribute format.

Before Merging

    [
      { "pk": { "id": 2 }, "attribute": { "name": "Bob" } },
      { "pk": { "id": 2 }, "attribute": { "age": 30 } },
      { "pk": { "id": 2 }, "attribute": { "email": "bob@example.com" } }
    ]

After Merging

    [
      { "id": 2, "name": "Bob", "age": 30, "email": "bob@example.com" }
    ]

→ The original row is reconstructed successfully.
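
For completeness, here is the merge loop wrapped into a runnable function. The reconstructRows name and the { tableName, entries } chunk shape are assumptions made for this sketch; the merge logic itself is the same as in the snippet above.

```javascript
// Hypothetical wrapper around the merge loop from Step 4.
function reconstructRows(chunks) {
  const rowsByTable = {};

  for (const chunk of chunks) {
    const tableName = chunk.tableName;
    rowsByTable[tableName] = rowsByTable[tableName] || {};

    chunk.entries.forEach((entry) => {
      const pkKey = JSON.stringify(entry.pk);
      rowsByTable[tableName][pkKey] = rowsByTable[tableName][pkKey] || { ...entry.pk };
      Object.assign(rowsByTable[tableName][pkKey], entry.attribute);
    });
  }

  // Drop the serialized lookup keys and return a plain array of rows per table.
  return Object.fromEntries(
    Object.entries(rowsByTable).map(([table, rows]) => [table, Object.values(rows)])
  );
}

// Bob's attributes are deliberately spread across two chunks.
const retrieved = [
  {
    tableName: 'users',
    entries: [
      { pk: { id: 2 }, attribute: { name: 'Bob' } },
      { pk: { id: 2 }, attribute: { age: 30 } },
    ],
  },
  {
    tableName: 'users',
    entries: [{ pk: { id: 2 }, attribute: { email: 'bob@example.com' } }],
  },
];
console.log(JSON.stringify(reconstructRows(retrieved)));
// {"users":[{"id":2,"name":"Bob","age":30,"email":"bob@example.com"}]}
```

Keying on the serialized PK is what lets attributes from different chunks find their way back to the same row.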

Step 5: Returning Final Data

The final result:

    {
      "schema": [{ "column": "id", "type": "integer" }, { "column": "name", "type": "string" }],
      "sampleData": [
        {
          "tableName": "users",
          "sampleData": [
            { "id": 2, "name": "Bob", "age": 30, "email": "bob@example.com" }
          ]
        }
      ]
    }

→ Schema and sample data are returned in a structured format.

Conclusion

Effectively handling both structured and unstructured data is crucial for efficient querying and retrieval. By employing techniques like chunking, embedding, and reconstruction, we can maintain context and relationships within datasets, so that information remains accessible and meaningful. Whether dealing with natural language or tabular data, understanding these processes allows us to optimize data storage and retrieval, ultimately improving performance and accuracy. As data continues to grow in complexity and volume, mastering these techniques will be essential for anyone working in data-driven fields.
