Caching Explained: A Comprehensive Guide

Chinu Anand

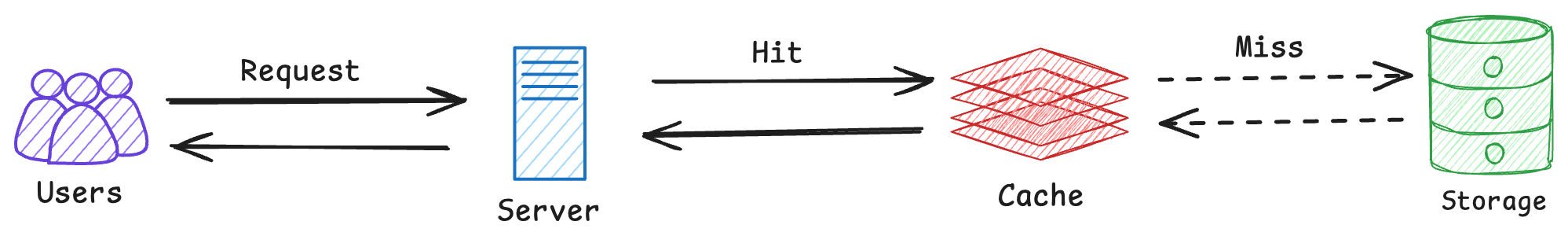

Caching is the process of storing copies of frequently accessed data in a temporary storage layer so that future requests can be served faster. It improves performance, reduces latency, and helps systems scale efficiently. By keeping frequently accessed data close at hand, caching minimizes redundant network round trips and database queries, leading to faster response times and reduced server load.

Why Use Caching?

Reduces Latency: serves data quickly instead of fetching it from the database.

Reduces Load on the Backend: fewer requests reach the database and application servers.

Improves Scalability: helps handle high traffic efficiently.

Enhances User Experience: faster page loads and responses.

Types of Caching

Application-Level Caching

Stores frequently accessed computations and objects in memory.

Example: Local in-memory caching in a web server (e.g., caching API responses).

Tools: Redis, Memcached.
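As a minimal sketch of in-process application-level caching, Python's standard `functools.lru_cache` can memoize an expensive function. The function `slow_square` and its call counter are hypothetical stand-ins for a costly computation or API call; a shared store such as Redis or Memcached would take this role when several processes or servers need the same cache.

```python
from functools import lru_cache

# Counts how many times the "expensive" body actually runs (hypothetical, for illustration).
calls = {"count": 0}


@lru_cache(maxsize=128)  # keep up to 128 results in this process's memory
def slow_square(n: int) -> int:
    """Stand-in for an expensive computation or remote API call."""
    calls["count"] += 1
    return n * n


first = slow_square(12)   # cache miss: the body runs
second = slow_square(12)  # cache hit: the body is skipped
print(first, second, calls["count"])  # 144 144 1
print(slow_square.cache_info())
```

`cache_info()` reports hits, misses, and current size, which is a cheap way to check whether the cache is actually being used.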

Database Caching

Caches frequently executed database queries and table rows to avoid redundant queries.

Example: MySQL Query Cache (deprecated in MySQL 5.7 and removed in MySQL 8.0), Read Replicas.

Content Caching

Stores static content like images, CSS, and JavaScript files.

Example: CDN Caching via Cloudflare, Akamai.
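CDNs such as Cloudflare and Akamai generally decide how long to keep a file based on the `Cache-Control` response header sent by the origin server. The helper below is a hypothetical sketch of how an origin might choose that header per file type; the extension list and the max-age values are assumptions for illustration, not recommendations from any CDN.

```python
import os

# Hypothetical policy: long-lived caching for fingerprinted static assets,
# short caching for HTML so content updates propagate quickly.
ONE_YEAR = 365 * 24 * 60 * 60
STATIC_EXTENSIONS = {".css", ".js", ".png", ".jpg", ".jpeg", ".gif", ".svg", ".woff2"}


def cache_control_for(path: str) -> str:
    """Return a Cache-Control header value for a requested file path."""
    ext = os.path.splitext(path)[1].lower()
    if ext in STATIC_EXTENSIONS:
        # "public" lets shared caches (CDNs) store the file; "immutable" says it never
        # changes at this URL (assumes file names contain a content hash).
        return f"public, max-age={ONE_YEAR}, immutable"
    if ext == ".html":
        # Let the CDN cache briefly, then revalidate with the origin.
        return "public, max-age=60, must-revalidate"
    # Assumption: everything else (e.g., API responses) is not cached by default.
    return "no-store"


print(cache_control_for("/static/app.3f9c1a.js"))  # public, max-age=31536000, immutable
print(cache_control_for("/index.html"))            # public, max-age=60, must-revalidate
```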

Distributed Caching

Spreads cache across multiple servers for high availability and fault tolerance.

Example: Distributed Redis Cluster for large-scale applications.

Cache Invalidation Strategies

Since cached data can become stale, the strategies below define how reads and writes keep the cache consistent with the database.

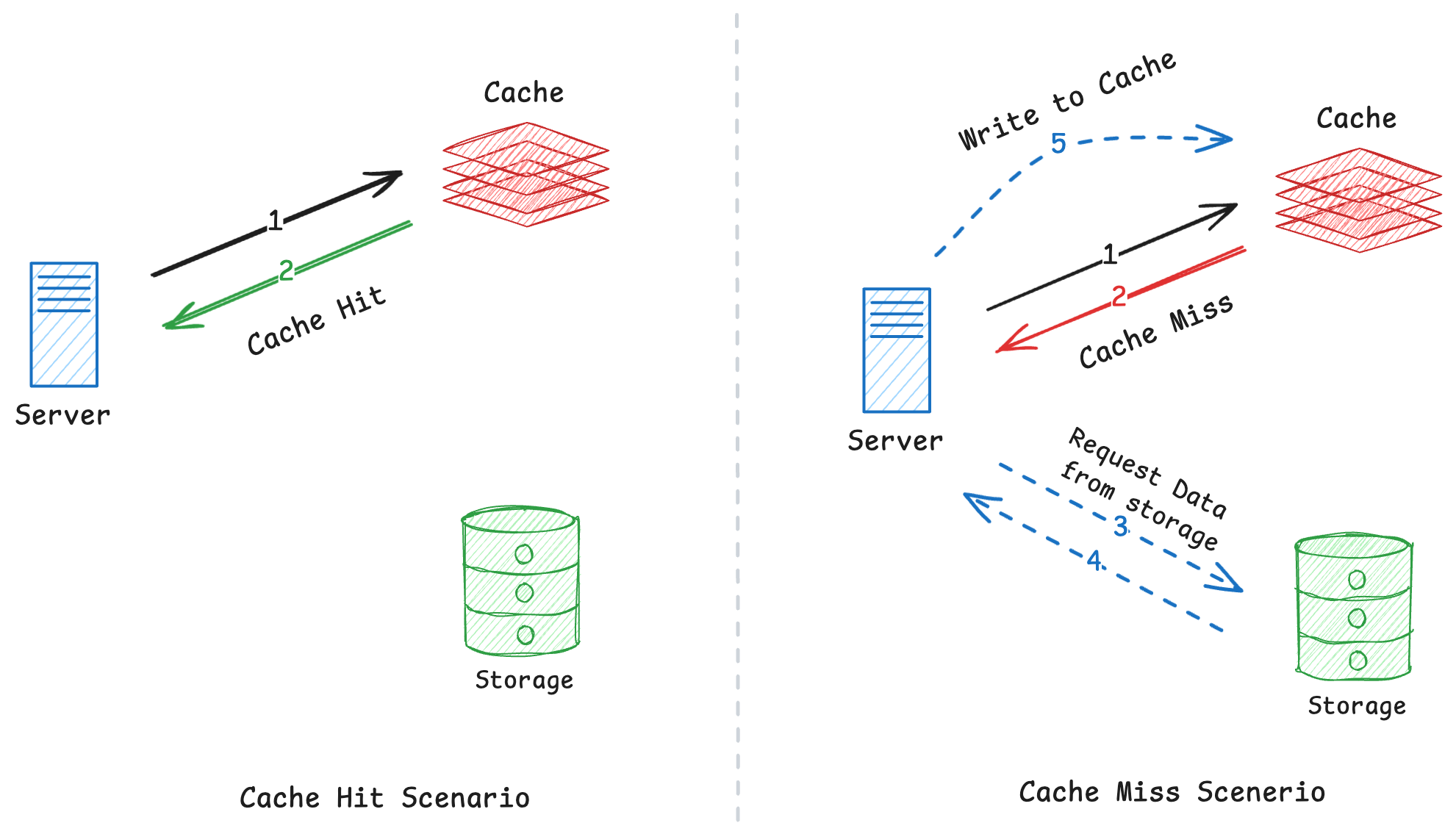

Cache-Aside (Lazy Loading) [Most Common]

The application checks the cache before querying the database.

If the data is not in the cache, the application fetches it from the database → stores it in the cache for future use.

Example: Redis used with MySQL in an e-commerce platform.
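A minimal cache-aside sketch, assuming a plain Python dictionary stands in for Redis and a hypothetical `FakeDatabase` class stands in for MySQL; `get_product` and the product data are also illustrative.

```python
class FakeDatabase:
    """Hypothetical stand-in for a slow database such as MySQL."""

    def __init__(self, rows):
        self.rows = dict(rows)
        self.queries = 0  # how often the database is actually hit

    def fetch(self, key):
        self.queries += 1
        return self.rows.get(key)


cache = {}  # stand-in for Redis
db = FakeDatabase({"sku-1": {"name": "Keyboard", "price": 49}})


def get_product(key):
    # 1. Check the cache first.
    if key in cache:
        return cache[key]
    # 2. On a miss, load from the database...
    value = db.fetch(key)
    # 3. ...and populate the cache for future reads (missing rows are not cached).
    if value is not None:
        cache[key] = value
    return value


get_product("sku-1")  # miss: queries the database
get_product("sku-1")  # hit: served from the cache
print(db.queries)     # 1
```

When data changes, a cache-aside application typically updates the database and then deletes the cached key, so the next read reloads a fresh copy.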

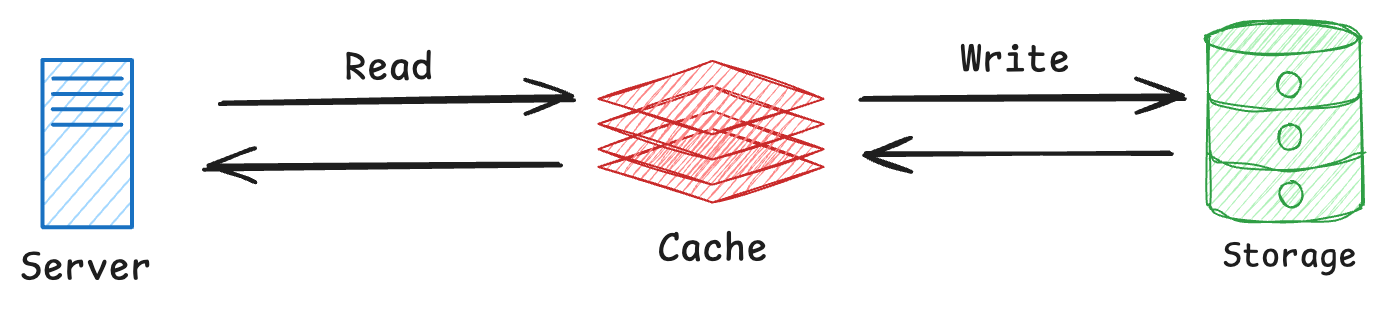

Write-through Caching

Data is written to both cache and database at the same time.

Ensures consistency between the cache and the database but increases write latency.

Example: User profile data caching.
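A minimal write-through sketch, assuming plain dictionaries stand in for both the cache and the database; `WriteThroughCache` and the profile data are hypothetical. Each write updates the database and the cache before returning, which is where the extra write latency comes from.

```python
class WriteThroughCache:
    """Hypothetical write-through cache: every write goes to the database and the cache."""

    def __init__(self, database):
        self.database = database  # stand-in for the persistent store
        self.cache = {}

    def write(self, key, value):
        # Persist first so the cache never holds data the database lacks.
        self.database[key] = value
        self.cache[key] = value

    def read(self, key):
        if key in self.cache:
            return self.cache[key]
        value = self.database.get(key)
        if value is not None:
            self.cache[key] = value
        return value


db = {}
profiles = WriteThroughCache(db)
profiles.write("user:42", {"name": "Asha", "city": "Pune"})
print(db["user:42"] == profiles.cache["user:42"])  # True
```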

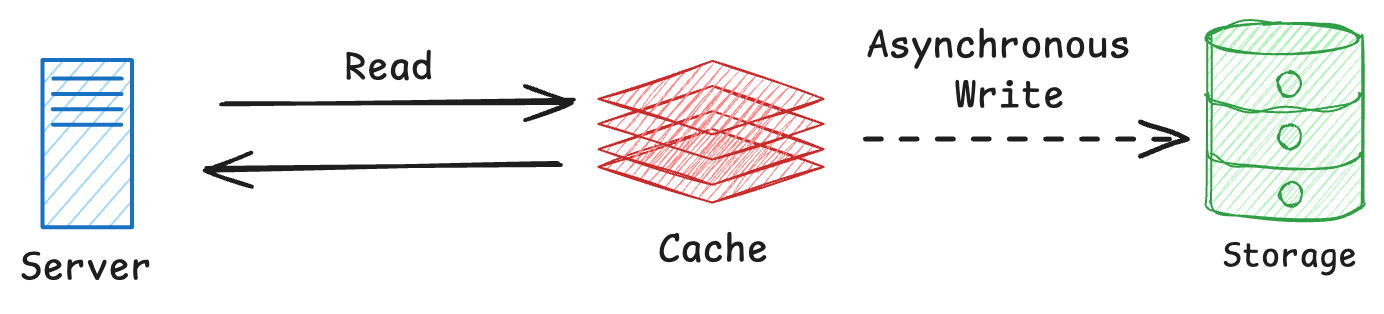

Write-back Caching

Data is first written to the cache and then asynchronously updated in the database.

Improves write performance but may lead to data loss if the cache crashes before pending writes reach the database.
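A minimal write-back sketch, assuming plain dictionaries stand in for the cache and the database; `WriteBackCache` and `flush` are hypothetical names. Writes only touch the cache and mark the key as dirty, and a later `flush` (which a real system would run asynchronously, e.g., on a timer) persists the dirty keys. Anything still dirty when the cache process dies is lost.

```python
class WriteBackCache:
    """Hypothetical write-back cache: writes land in the cache and are persisted later."""

    def __init__(self, database):
        self.database = database
        self.cache = {}
        self.dirty = set()  # keys written to the cache but not yet persisted

    def write(self, key, value):
        self.cache[key] = value
        self.dirty.add(key)  # fast path: no database round trip

    def read(self, key):
        if key in self.cache:
            return self.cache[key]
        return self.database.get(key)

    def flush(self):
        # In practice this would run on a timer or in a background worker.
        for key in list(self.dirty):
            self.database[key] = self.cache[key]
        self.dirty.clear()


db = {}
counters = WriteBackCache(db)
counters.write("views:home", 1)
counters.write("views:home", 2)
print("views:home" in db)  # False: not persisted yet
counters.flush()
print(db["views:home"])    # 2
```

The two writes to `views:home` reached the database as a single write, which is one reason write-back suits write-heavy data such as counters.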

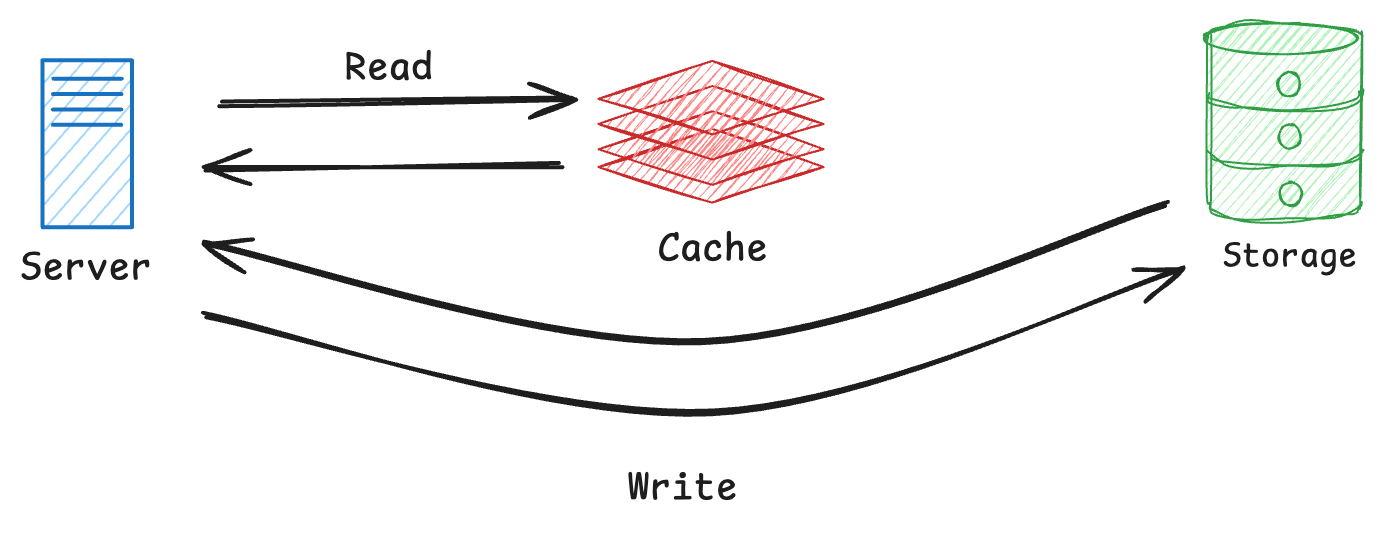

Write-around Caching

Data is written only to the database, bypassing the cache.

Useful when newly written data is rarely read soon afterward, so caching it would only push out more useful entries.
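A minimal write-around sketch, assuming plain dictionaries stand in for the cache and the database; `WriteAroundCache` is hypothetical. Writes skip the cache; as an added assumption, this sketch also deletes any existing cached copy of the key so readers do not keep seeing the old value, and the next read repopulates the cache.

```python
class WriteAroundCache:
    """Hypothetical write-around cache: writes go straight to the database."""

    def __init__(self, database):
        self.database = database
        self.cache = {}

    def write(self, key, value):
        self.database[key] = value
        # Do not cache the new value; drop any stale copy instead.
        self.cache.pop(key, None)

    def read(self, key):
        if key in self.cache:
            return self.cache[key]
        value = self.database.get(key)
        if value is not None:
            self.cache[key] = value  # cached only once someone actually reads it
        return value


db = {}
reports = WriteAroundCache(db)
reports.write("report:2024", "v1")
print("report:2024" in reports.cache)  # False
print(reports.read("report:2024"))     # v1 (now cached)
reports.write("report:2024", "v2")
print(reports.read("report:2024"))     # v2
```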

Cache Eviction Policies

Since caches have limited space, they need eviction policies to decide which entries to remove when the cache is full.

LRU (Least Recently Used) [Most Popular]

Removes the least recently accessed data.

A good default for most applications, where recently used data is likely to be needed again.
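A minimal LRU sketch using Python's `collections.OrderedDict`, which keeps keys in order and can move a key to the end in O(1). `LRUCache` is a hypothetical class for illustration, not a library API.

```python
from collections import OrderedDict


class LRUCache:
    """Fixed-capacity cache that evicts the least recently used entry."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self.items = OrderedDict()  # ordered from least to most recently used

    def get(self, key):
        if key not in self.items:
            return None
        self.items.move_to_end(key)  # mark as most recently used
        return self.items[key]

    def put(self, key, value):
        if key in self.items:
            self.items.move_to_end(key)
        self.items[key] = value
        if len(self.items) > self.capacity:
            self.items.popitem(last=False)  # evict the least recently used


lru = LRUCache(2)
lru.put("a", 1)
lru.put("b", 2)
lru.get("a")     # "a" is now more recent than "b"
lru.put("c", 3)  # evicts "b"
print(list(lru.items))  # ['a', 'c']
```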

LFU (Least Frequently Used)

Removes data that is accessed least often.

Useful when certain data is accessed much more frequently than others.
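A minimal LFU sketch; `LFUCache` is hypothetical. To keep it short, eviction scans every key (O(n)), and ties between equally used keys go to the key inserted earliest. Production LFU implementations use constant-time frequency lists and often age the counts so old popularity fades.

```python
from collections import Counter


class LFUCache:
    """Fixed-capacity cache that evicts the least frequently used entry.

    Simplified sketch (assumes capacity >= 1): eviction scans all keys, and ties
    are broken in favor of evicting the key that was inserted earliest.
    """

    def __init__(self, capacity: int):
        self.capacity = capacity
        self.items = {}
        self.uses = Counter()  # access count per key

    def get(self, key):
        if key not in self.items:
            return None
        self.uses[key] += 1
        return self.items[key]

    def put(self, key, value):
        if key not in self.items and len(self.items) >= self.capacity:
            # min() returns the first minimal key in dict insertion order.
            victim = min(self.items, key=lambda k: self.uses[k])
            del self.items[victim]
            del self.uses[victim]
        self.items[key] = value
        self.uses[key] += 1  # count the write as one use


lfu = LFUCache(2)
lfu.put("home", "<html>home</html>")
lfu.put("about", "<html>about</html>")
lfu.get("home")
lfu.get("home")
lfu.put("faq", "<html>faq</html>")  # evicts "about" (1 use), not "home" (3 uses)
print(sorted(lfu.items))  # ['faq', 'home']
```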

FIFO (First In, First Out)

Removes the oldest entry first (by insertion time), regardless of how often or how recently it was accessed.

Useful in circular buffer implementations.
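A minimal FIFO sketch; `FIFOCache` is hypothetical, and an `OrderedDict` plays the role of the queue (a fixed-size circular buffer would behave similarly). Unlike LRU, reads never change the eviction order.

```python
from collections import OrderedDict


class FIFOCache:
    """Fixed-capacity cache that evicts entries in insertion order."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self.items = OrderedDict()  # oldest insertion first

    def get(self, key):
        # Reads do not change the eviction order (unlike LRU).
        return self.items.get(key)

    def put(self, key, value):
        if key not in self.items and len(self.items) >= self.capacity:
            self.items.popitem(last=False)  # evict the oldest insertion
        self.items[key] = value  # updating an existing key keeps its position


fifo = FIFOCache(2)
fifo.put("a", 1)
fifo.put("b", 2)
fifo.get("a")     # access does not protect "a"
fifo.put("c", 3)  # evicts "a", the first one in
print(list(fifo.items))  # ['b', 'c']
```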

Random Replacement

Removes a randomly chosen cache entry.

Used in scenarios where no clear usage pattern exists.
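A minimal random-replacement sketch; `RandomCache` and the optional `seed` parameter are hypothetical, with the seed there only to make runs reproducible. It needs no bookkeeping about access order or frequency, which is its main appeal.

```python
import random


class RandomCache:
    """Fixed-capacity cache that evicts a randomly chosen entry when full."""

    def __init__(self, capacity: int, seed=None):
        self.capacity = capacity
        self.items = {}
        self.rng = random.Random(seed)  # seedable for reproducible runs

    def get(self, key):
        return self.items.get(key)

    def put(self, key, value):
        if key not in self.items and len(self.items) >= self.capacity:
            victim = self.rng.choice(list(self.items))  # O(n) copy; fine for a sketch
            del self.items[victim]
        self.items[key] = value


rc = RandomCache(3, seed=7)
for i in range(10):
    rc.put(f"k{i}", i)
print(len(rc.items))  # 3
```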

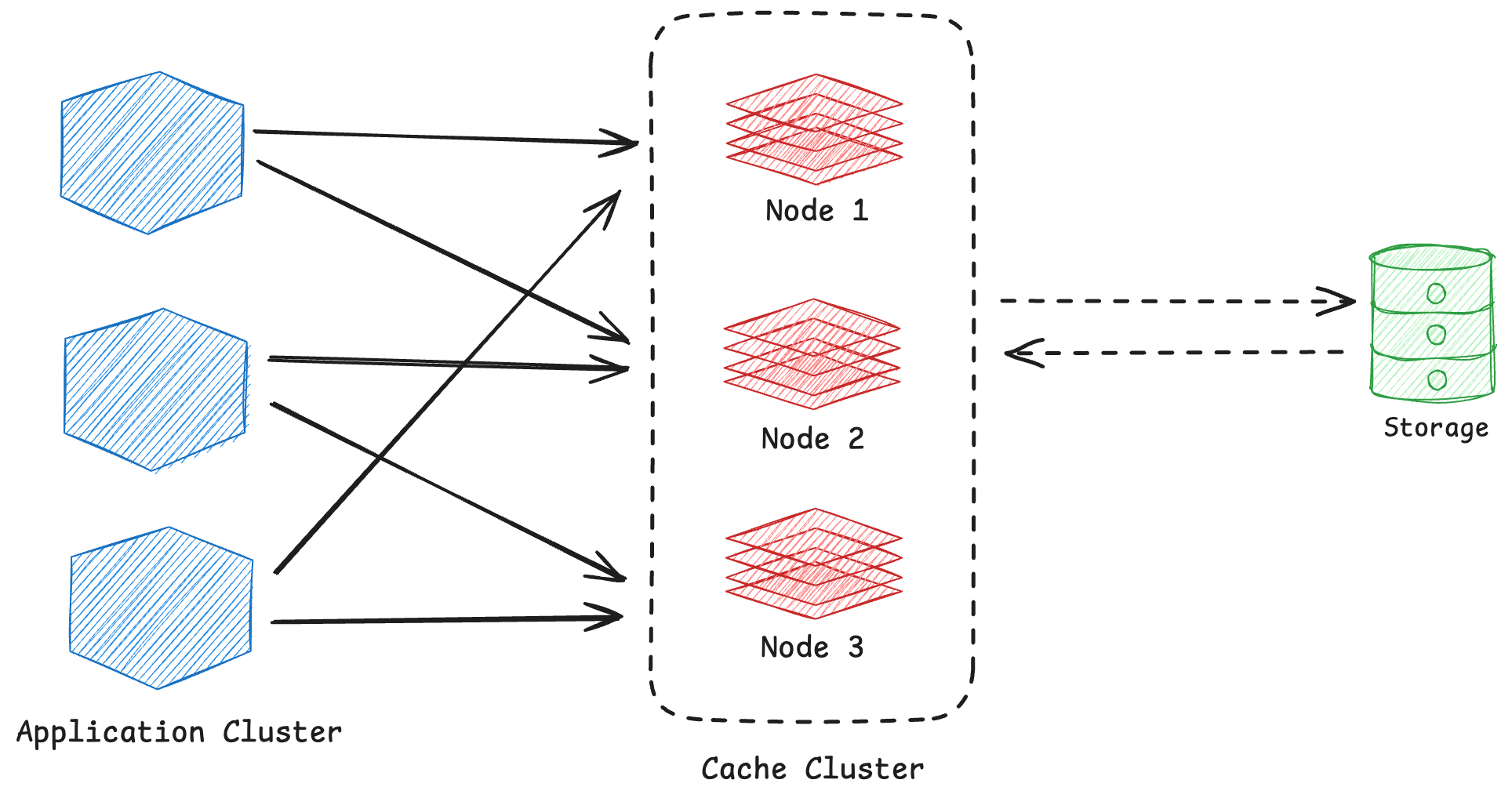

Caching in Distributed Systems

As an application grows, a single cache instance becomes a bottleneck. Distributed caching spreads the cache across multiple servers or nodes, providing high availability, fault tolerance, and faster response times.

Challenges in Distributed Caching

Cache Consistency

Issue: Ensuring data consistency across multiple cache nodes is complex.

Solution: Apply a consistent strategy such as cache-aside, write-through, or write-back.

Data Partitioning

Issue: How to distribute cached data across nodes.

Solution: Use consistent hashing to spread keys evenly and to minimize how many keys move to a different node when nodes are added or removed.
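A minimal consistent-hash ring sketch with virtual nodes. The node names, the default of 100 virtual nodes per server, and SHA-256 as the ring hash are assumptions for illustration. Real clients differ in the details; Redis Cluster, for example, uses a fixed set of 16384 hash slots rather than a ring.

```python
import bisect
import hashlib


def _hash(value: str) -> int:
    # Stable across processes (Python's built-in hash() is randomized per run).
    return int(hashlib.sha256(value.encode("utf-8")).hexdigest(), 16)


class ConsistentHashRing:
    """Maps keys to nodes; adding or removing a node only remaps nearby keys."""

    def __init__(self, nodes=(), vnodes: int = 100):
        self.vnodes = vnodes  # virtual nodes per server smooth out the distribution
        self._ring = []       # sorted list of (hash, node)
        for node in nodes:
            self.add_node(node)

    def add_node(self, node: str):
        for i in range(self.vnodes):
            bisect.insort(self._ring, (_hash(f"{node}#{i}"), node))

    def remove_node(self, node: str):
        self._ring = [(h, n) for h, n in self._ring if n != node]

    def get_node(self, key: str) -> str:
        if not self._ring:
            raise ValueError("ring is empty")
        # First virtual node clockwise from the key's hash, wrapping around.
        idx = bisect.bisect(self._ring, (_hash(key), ""))
        return self._ring[idx % len(self._ring)][1]


ring = ConsistentHashRing(["cache-a", "cache-b", "cache-c"])
keys = [f"user:{i}" for i in range(1000)]
before = {k: ring.get_node(k) for k in keys}
ring.add_node("cache-d")
moved = [k for k in keys if ring.get_node(k) != before[k]]
print(len(moved))  # roughly a quarter of the keys; the exact count depends on the hashes
```

Every key that moved now lives on the new node `cache-d`; keys on the other nodes stay put, which is what keeps a topology change from invalidating most of the cache.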

Node Failures

Issue: If a cache node goes down, data might be lost.

Solution: Implement replication and failover mechanisms.

Cache Stampede (Thundering Herd Problem)

Issue: Many requests for the same key arrive at once (often right after it expires), all miss the cache, and all hit the database at the same time, overwhelming it.

Solution: Use locking mechanisms, request coalescing, or stale-while-revalidate techniques.
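A minimal sketch of request coalescing (sometimes called "single flight"): concurrent cache misses for the same key wait for one in-flight load instead of each querying the database. `SingleFlight`, `query_database`, and the 0.1-second delay are hypothetical; this version coordinates threads inside one process, while coalescing across several servers would need a distributed lock or similar.

```python
import threading
import time


class SingleFlight:
    """Coalesces concurrent loads of the same key into a single call."""

    def __init__(self):
        self._lock = threading.Lock()
        self._inflight = {}  # key -> (Event, result holder)

    def do(self, key, loader):
        with self._lock:
            entry = self._inflight.get(key)
            leader = entry is None
            if leader:
                entry = (threading.Event(), {})
                self._inflight[key] = entry
        done, holder = entry
        if leader:
            try:
                holder["value"] = loader()
            except Exception as exc:
                holder["error"] = exc
            finally:
                with self._lock:
                    del self._inflight[key]
                done.set()  # wake up the waiting callers
        else:
            done.wait()
        if "error" in holder:
            raise holder["error"]
        return holder["value"]


cache = {}
db_calls = 0
flight = SingleFlight()


def query_database(key):
    """Hypothetical slow query; counts how often the database is hit."""
    global db_calls
    db_calls += 1
    time.sleep(0.1)  # simulate a slow query
    return f"value-for-{key}"


def get(key):
    if key in cache:  # fast path: cache hit
        return cache[key]

    def load():
        # Re-check: an earlier leader may already have filled the cache.
        if key in cache:
            return cache[key]
        value = query_database(key)
        cache[key] = value  # populate before waiting callers are released
        return value

    return flight.do(key, load)


results = []
threads = [threading.Thread(target=lambda: results.append(get("hot-key"))) for _ in range(20)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(db_calls, len(results))  # 1 20
```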

When Not to Use Caching

Highly Dynamic Data (Real-time Systems)

Like a stock trading platform where stock prices change multiple times per second.

Issue - Cached data becomes stale almost immediately, so the cost of keeping the cache updated outweighs its benefits.

Alternative Solution - Use real-time event streaming instead, e.g., Apache Kafka to distribute price updates and WebSockets to push them to clients.

Low Read Traffic (Write-Heavy Workloads)

Like a log processing system where data is continuously written but rarely read.

Issue - Frequent writes keep invalidating cache entries, and because the data is processed and stored rather than queried, cached entries are seldom reused.

Alternative Solution - Use write-optimized, high-throughput systems such as Apache Cassandra for storage or Apache Kafka for ingesting the log stream.

Strict Data Consistency Requirements

Like a banking application handling real-time account balances and transactions.

Issue - Even a slightly outdated cache entry leads to data inconsistency, such as showing or acting on a stale balance.

Alternative Solution - Use a strongly consistent database with ACID transactions, like PostgreSQL or MySQL.

Large Data Sets with Limited Memory

Like a data analytics dashboard processing terabytes of logs and metrics.

Issue - The dataset is too large to fit in memory-based caches like Redis or Memcached; entries are evicted constantly, the hit ratio stays low, and performance suffers.

Alternative Solution - Use columnar databases optimized for analytical queries, like Apache Druid or ClickHouse.

Best Practices

Use Consistent Hashing to distribute load evenly.

Implement Replication to ensure high availability.

Use Cache Eviction Policies (LRU, LFU, TTL-based expiration).

Handle Cache Failures Gracefully, falling back to the database (with retries) when the cache is unavailable.

Avoid Cache Stampedes, using request coalescing or stale-while-revalidate.

Monitor Cache Performance (hit/miss ratios, eviction rates).

Use Proper Expiry Policies, setting TTLs that match how quickly the underlying data changes to limit stale data.

Encrypt Sensitive Data, never storing unencrypted sensitive information in caches.
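Two of the practices above, TTL-based expiry and hit/miss monitoring, can be sketched together in a small in-process cache. `TTLCache`, the 30-second TTL, and the injectable clock are hypothetical choices for illustration; Redis provides expiry natively (e.g., the `EXPIRE` command), and its `INFO` output reports keyspace hits and misses.

```python
import time


class TTLCache:
    """Cache whose entries expire after ttl seconds; tracks hit/miss counts."""

    def __init__(self, ttl: float, clock=time.monotonic):
        self.ttl = ttl
        self.clock = clock  # injectable so expiry can be tested without sleeping
        self.items = {}     # key -> (value, expires_at)
        self.hits = 0
        self.misses = 0

    def get(self, key):
        entry = self.items.get(key)
        if entry is not None and entry[1] > self.clock():
            self.hits += 1
            return entry[0]
        self.items.pop(key, None)  # drop expired entries lazily
        self.misses += 1
        return None

    def set(self, key, value):
        self.items[key] = (value, self.clock() + self.ttl)

    def hit_ratio(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


now = [0.0]  # fake clock for the example
cache = TTLCache(ttl=30, clock=lambda: now[0])
cache.set("price:sku-1", 49)
cache.get("price:sku-1")  # hit
now[0] = 31               # 31 seconds later
cache.get("price:sku-1")  # miss: the entry has expired
print(cache.hits, cache.misses, cache.hit_ratio())  # 1 1 0.5
```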

Summary

Caching enhances system performance by storing frequently accessed data in temporary storage, reducing latency and server load while improving scalability and user experience. It includes application-level, database, content, and distributed caching, each catering to different needs. Strategies like cache-aside, write-through, and write-back help maintain data freshness, while eviction policies like LRU and LFU manage limited cache space. Distributed caching offers high availability and fault tolerance but faces challenges like maintaining consistency and handling node failures. Caching is not ideal for highly dynamic data, infrequent read operations, strict data consistency needs, or large data sets with limited memory. Following best practices helps ensure an effective caching implementation.


Written by

Chinu Anand


OpenSource crusader, Full Stack Web Developer by day, DevOps enthusiast by night. Saving the world one commit at a time.