K8s Multi-Node Setup

Sai Praneeth


We will see the steps to set up a multi-node Kubernetes cluster. We could set this up on AWS or Azure, but they offer their own managed services, EKS and AKS, where the cluster comes prebuilt and we don’t have to manage it ourselves. There are also many other tools on the market, such as Minikube and kOps.

First of all, we need to understand all the components, and then we will get to the practical part.

Let’s say we have an application and are doing a containerized deployment. We have the container image, and to run this image, we need a Container Engine (CE) such as Docker, Podman, CRIO, or Rocket.

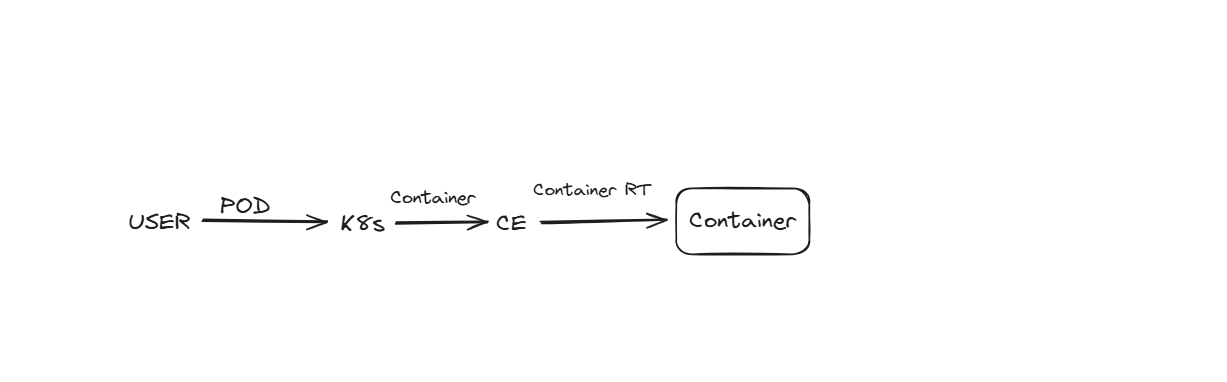

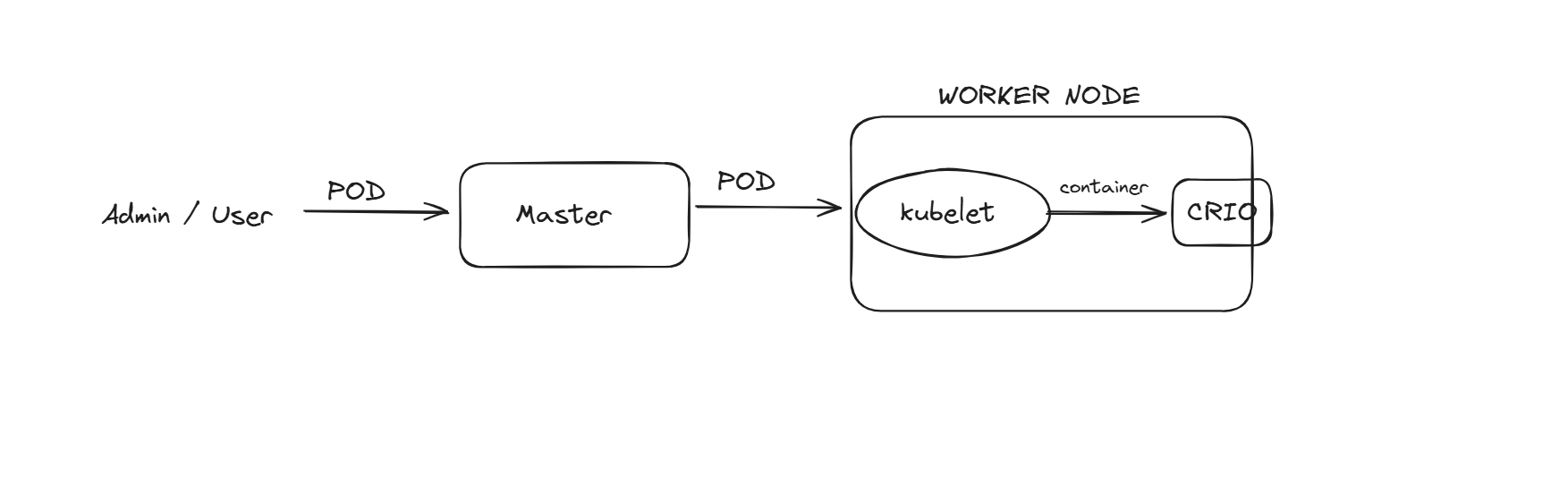

On top of the CE, we use a container orchestration tool such as K8s, which handles management and orchestration. As users, we talk to K8s to launch a pod. K8s doesn’t have the capability to create a container itself, so it talks to the CE, and the CE creates the container for us.

CRIO vs Docker as CE :

CRIO is a popular Container Engine choice for K8s: it is lightweight, fast, and was designed specifically for Kubernetes. Since we set up the cluster using kubeadm, the choice of Container Engine is ours.

As users, we ask K8s to launch a pod; the Container Engine then talks to the Container Runtime (CRT), and the CRT launches the container.

This is what the flow looks like. Each CE has its own CRT; with CRIO, we can use CRTs such as runc and Kata Containers.

Minikube Challenges :

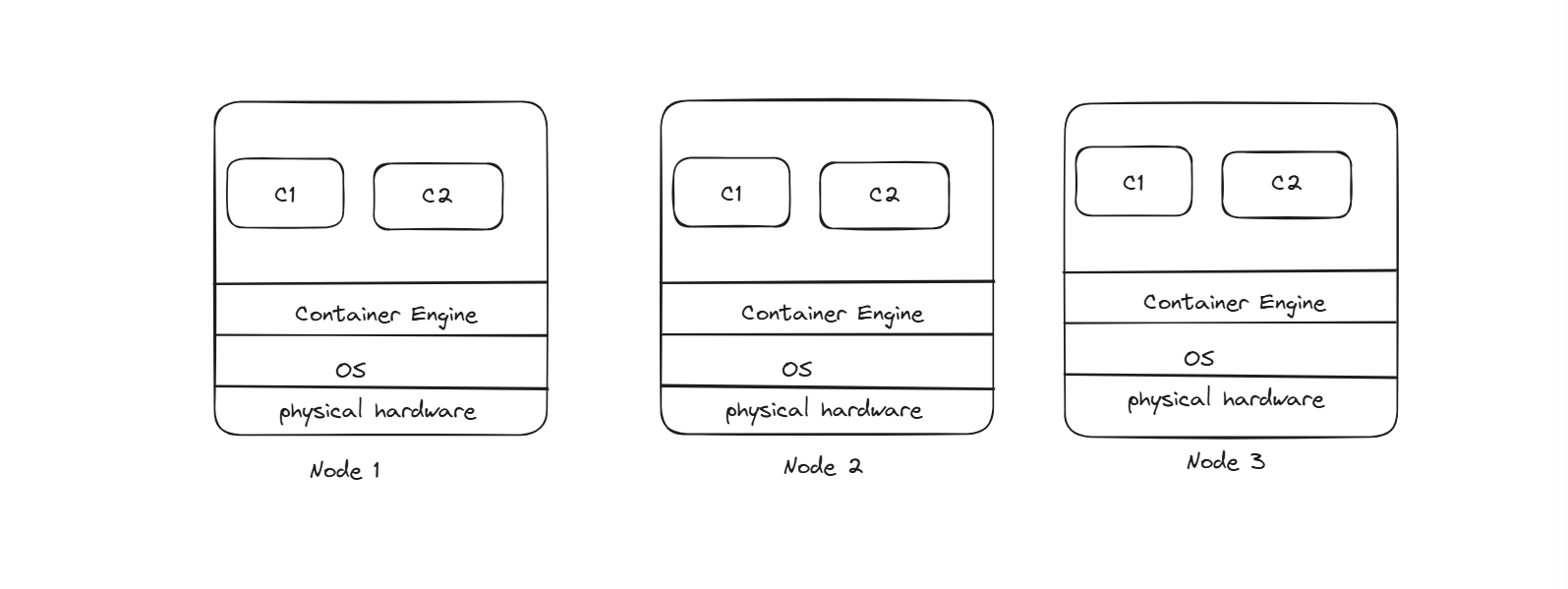

As Minikube is a single-node cluster, it is a single-point-of-failure architecture: if this node fails, all our pods stop, and the application stops running. We need multiple nodes, and each node has its own hardware, OS, CE, and K8s components. This setup is repeated on every node.

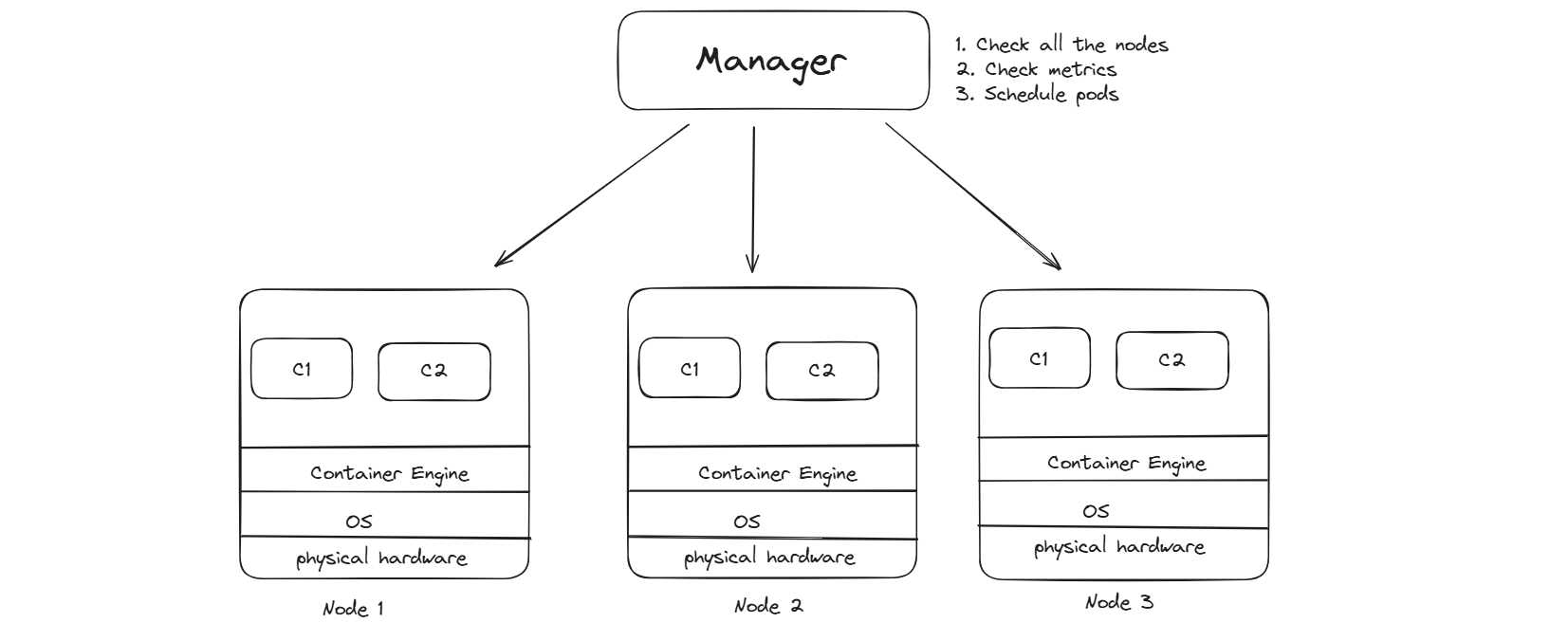

This is what the setup looks like: rather than 1 node, we have 3 nodes, so it’s not a SPOF (Single Point of Failure). But to manage these 3 nodes, we need a manager. This manager has 3 tasks:

Schedule the pods

Check resource availability

Check whether nodes are alive
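The third task, checking whether nodes are alive, is usually done with heartbeats. In real Kubernetes, the kubelet on each node periodically renews a heartbeat (a Lease object), and a controller in the control plane marks a node NotReady once heartbeats stop for longer than a grace period. The following is only a toy model of that idea; `NodeStatus`, `GRACE_PERIOD_S`, and the 40-second value are illustrative assumptions, not Kubernetes code or configuration.

```python
from dataclasses import dataclass

# Illustrative grace period in seconds (an assumption; the real value is a control-plane setting).
GRACE_PERIOD_S = 40.0


@dataclass
class NodeStatus:
    name: str
    last_heartbeat: float  # time of the node's last heartbeat, in seconds


def node_is_alive(node: NodeStatus, now: float, grace: float = GRACE_PERIOD_S) -> bool:
    """A node counts as alive if it sent a heartbeat within the grace period."""
    return now - node.last_heartbeat <= grace


def alive_nodes(nodes: list[NodeStatus], now: float) -> list[str]:
    """Names of the nodes the manager may still schedule pods onto."""
    return [n.name for n in nodes if node_is_alive(n, now)]


nodes = [NodeStatus("node1", 100.0), NodeStatus("node2", 95.0), NodeStatus("node3", 30.0)]
print(alive_nodes(nodes, now=110.0))  # ['node1', 'node2']  (node3 has been silent for 80 s)
```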

The user first talks to the manager, and the manager schedules the pod. It also stores state information in the etcd DB. If we need to launch a pod with 3 GB of RAM, the manager checks which node has enough free memory and assigns the pod to that node. If the 3 nodes are not enough, we can add a new node as well.
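The resource check in that example can be sketched as "filter, then pick". The real kube-scheduler also works in filtering and scoring phases, but it compares each pod’s resource requests against node capacity and runs many plugins; the `Node` class, the `schedule` function, and the "most free memory wins" rule here are simplifying assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    free_memory_gb: float
    ready: bool = True  # False if the node stopped sending heartbeats


def schedule(pod_memory_gb: float, nodes: list[Node]) -> Optional[str]:
    """Pick a node for a pod that needs pod_memory_gb of RAM, or None if none fits."""
    # Filter: only live nodes with enough free memory are candidates.
    candidates = [n for n in nodes if n.ready and n.free_memory_gb >= pod_memory_gb]
    if not candidates:
        return None  # the pod waits (Pending) until capacity is added, e.g. a new node
    # Pick: prefer the node with the most free memory, which spreads pods out.
    best = max(candidates, key=lambda n: n.free_memory_gb)
    best.free_memory_gb -= pod_memory_gb  # reserve the memory on the chosen node
    return best.name


cluster = [Node("node1", 2.0), Node("node2", 6.0), Node("node3", 4.0)]
print([schedule(3.0, cluster) for _ in range(4)])  # ['node2', 'node3', 'node2', None]
```

The fourth 3 GB pod gets `None` because no node has 3 GB left, which is exactly the point where adding a new node helps.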

The manager has a few components:

etcd: a key-value store used to maintain the cluster’s state information.

kube-scheduler: schedules the pods onto nodes.

kube-apiserver: the API the user talks to for pod creation.

Another important component here is the kubelet, which runs on every worker node. How does CRIO know that somebody wants to launch a container? We don’t run docker/crio commands ourselves; instead, K8s manages the containers. When a user asks the master to launch a pod, the master reaches the chosen worker node through its kubelet, and the kubelet asks the CE to launch the pod.

CRIO pulls the image from the registry and then launches the container. This means K8s itself never downloads the image; the CE does.
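The chain from kubelet to CE to runtime, and the fact that the CE (not K8s) downloads the image, can be modeled with three small classes. This is a toy sketch: the class names are hypothetical and the "download" is just a counter. In the real CRI, the kubelet sends a separate image-pull request before creating the container, but the download itself is still performed by the engine.

```python
class Runtime:
    """Low-level runtime role (e.g. runc): actually starts the container process."""

    def __init__(self, name: str):
        self.name = name

    def start(self, image: str) -> str:
        return f"{image} running via {self.name}"


class ContainerEngine:
    """CE role (e.g. CRIO): pulls images and hands containers to a runtime."""

    def __init__(self, runtime: Runtime):
        self.runtime = runtime
        self.local_images: set[str] = set()
        self.pulls = 0  # how many times we "downloaded" from the registry

    def pull_image(self, image: str) -> None:
        if image not in self.local_images:  # only download if not cached on this node
            self.pulls += 1                 # stand-in for fetching from a registry
            self.local_images.add(image)

    def run(self, image: str) -> str:
        self.pull_image(image)
        return self.runtime.start(image)


class Kubelet:
    """Node agent: never downloads images itself, it only asks the CE."""

    def __init__(self, engine: ContainerEngine):
        self.engine = engine

    def launch_pod(self, image: str) -> str:
        return self.engine.run(image)


kubelet = Kubelet(ContainerEngine(Runtime("runc")))
print(kubelet.launch_pod("nginx:1.25"))  # nginx:1.25 running via runc
kubelet.launch_pod("nginx:1.25")         # second launch reuses the cached image
print(kubelet.engine.pulls)              # 1
```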

We have various Container Engine technologies, such as Podman, CRIO, Docker, and Rocket, and all of them follow a common set of rules called the Open Container Initiative (OCI) standards. Because every CE follows the same rules, K8s is not tied to any single CE.

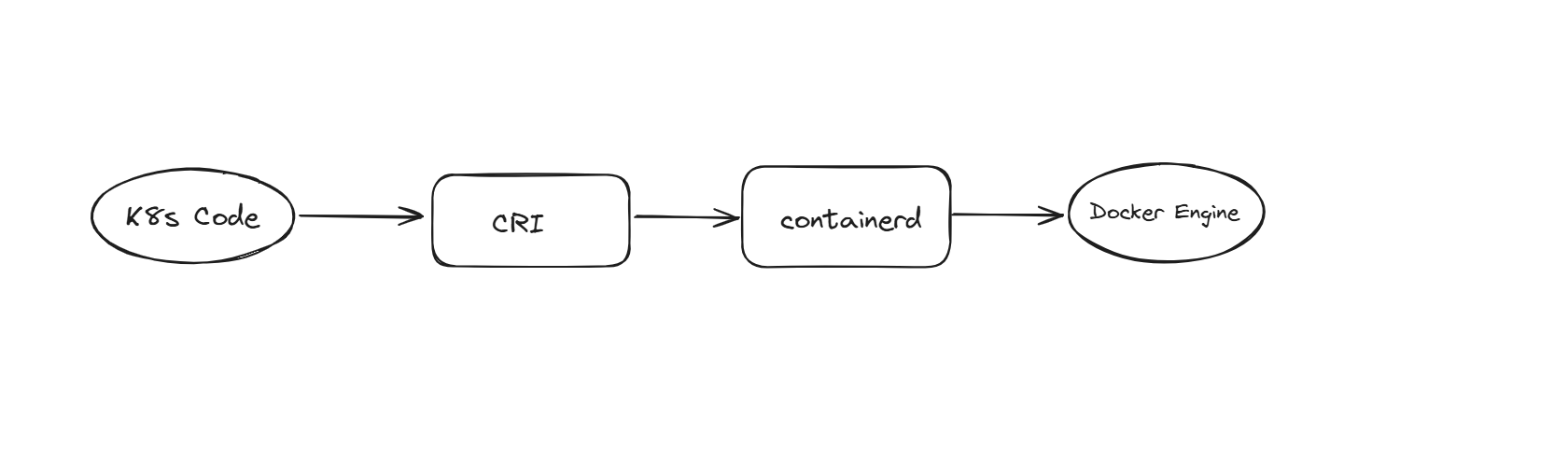

OCI is a set of standards covering container images and runtimes. On the K8s side, we have the Container Runtime Interface (CRI): a common interface, so anything that wants to use a CE only has to talk to this one layer. The kubelet talks to the CE through the CRI, and the CE runs OCI-compliant containers.
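The value of the CRI is that the kubelet codes against one interface, and any compliant engine can sit behind it. The real CRI is a gRPC API with separate runtime and image services; the abstract class here only mimics the idea, and its method name is hypothetical. containerd, used as the second engine, is another widely used CRI implementation.

```python
from abc import ABC, abstractmethod


class ContainerRuntimeInterface(ABC):
    """Toy CRI: the single contract every container engine must implement."""

    @abstractmethod
    def run_container(self, image: str) -> str: ...


class CrioEngine(ContainerRuntimeInterface):
    def run_container(self, image: str) -> str:
        return f"CRIO started {image}"


class ContainerdEngine(ContainerRuntimeInterface):
    def run_container(self, image: str) -> str:
        return f"containerd started {image}"


def kubelet_start(engine: ContainerRuntimeInterface, image: str) -> str:
    # The kubelet depends only on the interface, never on a concrete engine.
    return engine.run_container(image)


for engine in (CrioEngine(), ContainerdEngine()):
    print(kubelet_start(engine, "nginx"))
```

Swapping the engine changes nothing in `kubelet_start`, which is the property that lets us pick CRIO during a kubeadm setup.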

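K8s deprecated its built-in Docker support (dockershim) in v1.20 and removed it in v1.24, because Docker Engine does not implement the CRI and needed an extra shim layer to work with K8s. Images built with Docker are OCI-compliant, so they still run on CRI-based engines such as CRIO or containerd.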

Need for Overlay Network :

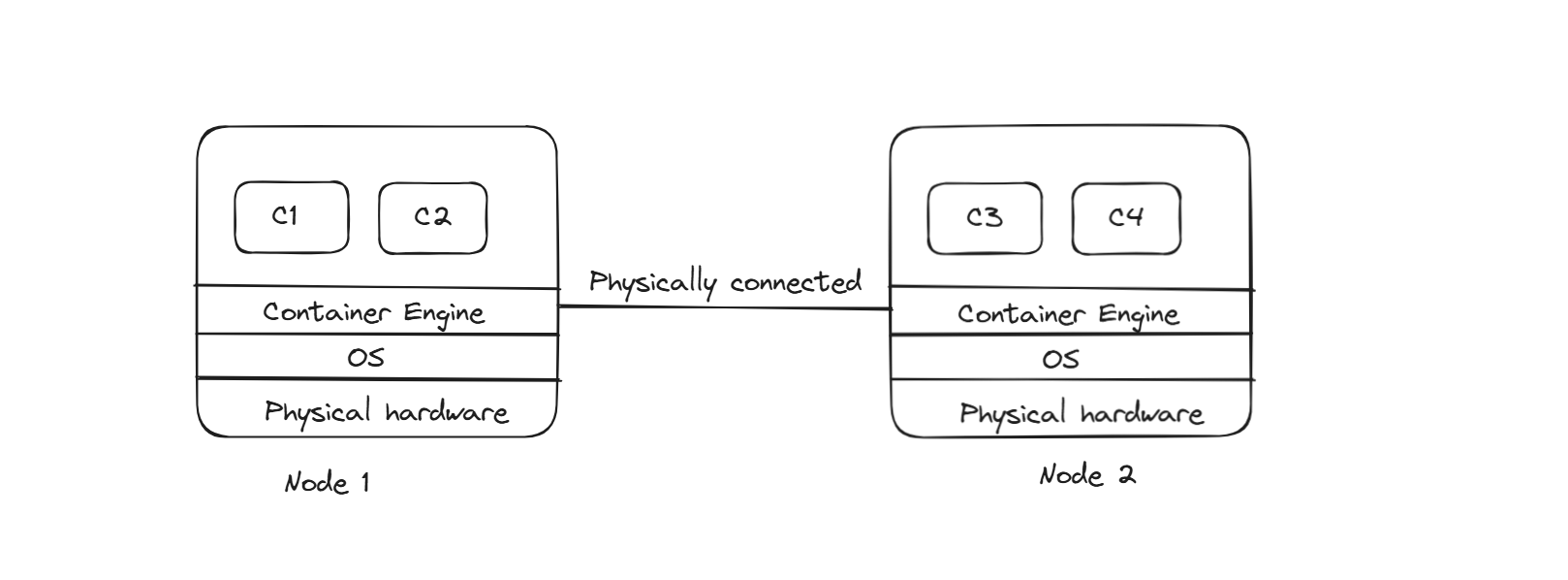

One more very important component in this setup is the overlay network. We know that CRIO launches containers on each node. Let’s say we have 2 nodes, and we launch 2 containers on the 1st node and 2 containers on the 2nd node. Containers on the same node can talk to each other, but containers on the other node cannot reach them.

What if we run frontend pods on 1 node and DB pods on other nodes? Here, the pods need to communicate, but by default a container engine like Docker isolates containers, so other machines cannot talk to them.

Even if the nodes are physically connected with wires and can talk to each other, the containers are still isolated, so communication will not happen.

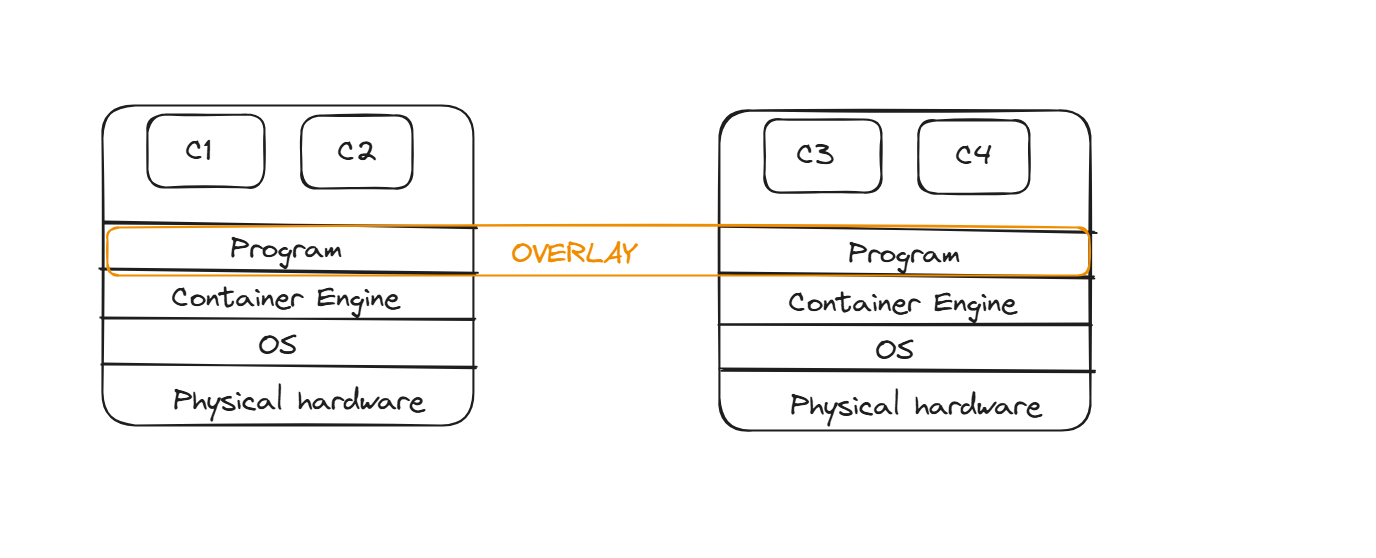

For container communication to happen across nodes, we need to create an overlay network. This overlay network can be provided by a tool such as Calico. Calico creates an extended LAN that treats all the nodes as if they were one node, so containers can communicate.
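One way to picture "all nodes as one node" is that every node owns a block of pod IPs, and the network layer knows which node owns which block. This sketch assumes hypothetical addresses (pod blocks 10.244.1.0/24 and 10.244.2.0/24, node IPs 192.168.0.11 and 192.168.0.12); it only shows the kind of lookup a CNI plugin such as Calico must effectively perform, not Calico’s actual implementation.

```python
import ipaddress

# Hypothetical addressing: each node owns one block of pod IPs (overlay)
# and has its own real address on the physical network (underlay).
POD_BLOCKS = {
    "node1": (ipaddress.ip_network("10.244.1.0/24"), "192.168.0.11"),
    "node2": (ipaddress.ip_network("10.244.2.0/24"), "192.168.0.12"),
}


def next_hop(dst_pod_ip: str) -> str:
    """Return the underlay IP of the node whose pod block contains dst_pod_ip."""
    ip = ipaddress.ip_address(dst_pod_ip)
    for block, node_ip in POD_BLOCKS.values():
        if ip in block:
            return node_ip
    raise LookupError(f"no node owns {dst_pod_ip}")


# A container on node1 sending to a pod on node2 must go via node2's real IP.
print(next_hop("10.244.2.7"))  # 192.168.0.12
```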

Calico is a Software-Defined Networking (SDN) tool: it runs as a program on every node, on top of the container engine. This software is meant for networking purposes; it understands IPs, routers, switches, DNS servers, firewalls, etc. Using Calico, we can control the network the way we need.

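It creates a virtual LAN, and the entire pod network is managed through this LAN, so all containers across nodes can talk to each other. One common way to build such an overlay is VXLAN, which wraps container traffic inside packets sent between the nodes. C1 can ping C3 and vice versa.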

Virtual Network → Overlay

Real Network → Underlay
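The overlay/underlay split can be shown at the packet level with VXLAN, one of the encapsulation modes Calico can use: the container’s original frame (overlay) is wrapped in an 8-byte VXLAN header and then carried in ordinary UDP between node IPs (underlay). This sketch builds only the VXLAN header as laid out in RFC 7348; the outer UDP/IP headers are left to the kernel, and the VNI value 4096 is an arbitrary example.

```python
import struct

VXLAN_PORT = 4789    # IANA-assigned UDP port for VXLAN (outer header, not built here)
VXLAN_FLAG_I = 0x08  # "I" flag: the VNI field is valid


def vxlan_encapsulate(inner_frame: bytes, vni: int) -> bytes:
    """Prepend the 8-byte VXLAN header to an inner Ethernet frame."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Layout: flags (1 byte) + reserved (3) + VNI (3) + reserved (1), big-endian.
    header = struct.pack("!B3xI", VXLAN_FLAG_I, vni << 8)
    return header + inner_frame


def vxlan_decapsulate(packet: bytes) -> tuple[int, bytes]:
    """Strip the VXLAN header; return (VNI, inner frame)."""
    flags, word = struct.unpack("!B3xI", packet[:8])
    if not flags & VXLAN_FLAG_I:
        raise ValueError("VNI flag not set")
    return word >> 8, packet[8:]


pkt = vxlan_encapsulate(b"inner-frame", vni=4096)
print(pkt[:8].hex())           # 0800000000100000
print(vxlan_decapsulate(pkt))  # (4096, b'inner-frame')
```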

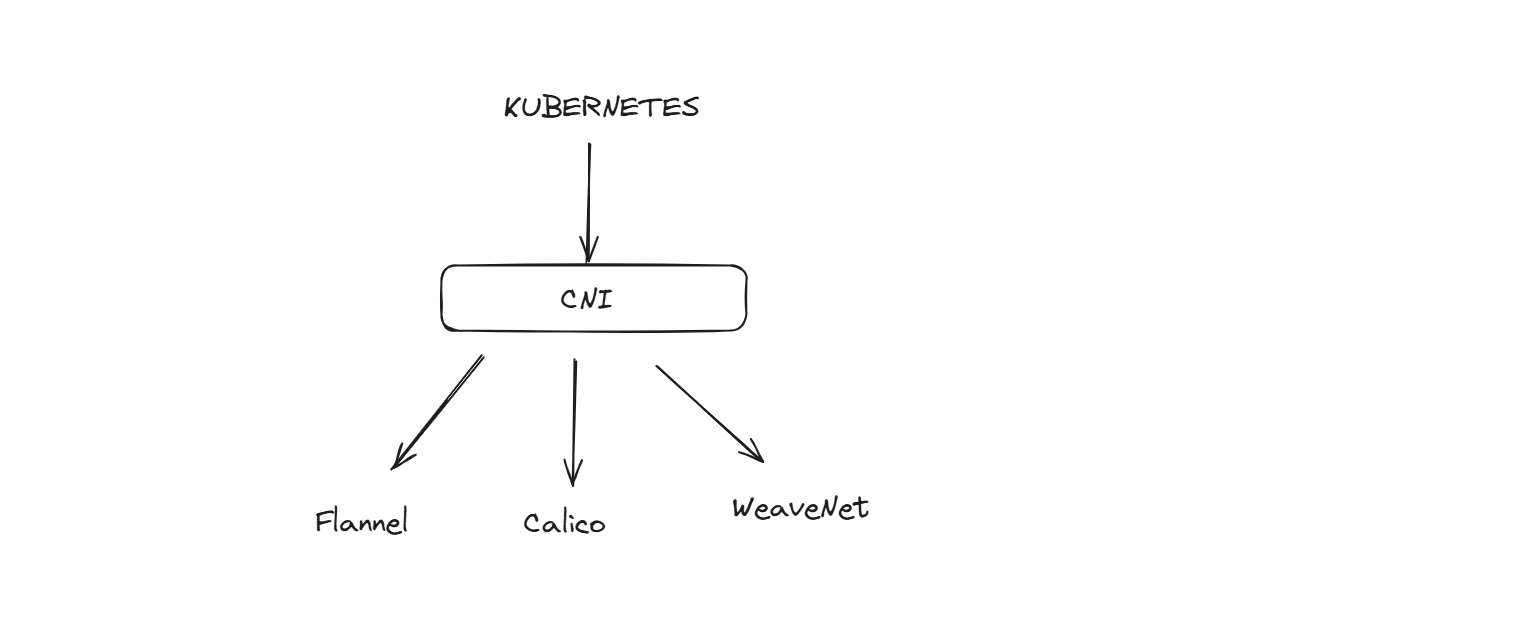

In the market, we have various tools for this, such as Calico, Flannel, and Weave Net, and every tool has its own features. K8s only talks to the CNI (Container Network Interface) layer, so we can swap CNI plugins as long as they follow the standard.

So, similar to the CRI (Container Runtime Interface), here we have the CNI (Container Network Interface).
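The CNI contract is small: the container runtime executes a plugin binary named by the config’s `type` field, passes details in environment variables such as `CNI_COMMAND` and `CNI_NETNS`, and writes the network config JSON to the plugin’s stdin. The helper here only prepares that invocation and does not execute anything; the network name, container ID, and namespace path are made-up examples, `/opt/cni/bin` is the conventional plugin directory, and a real Calico config carries more plugin-specific fields.

```python
import json


def build_cni_add(conf: dict, container_id: str, netns: str,
                  cni_path: str = "/opt/cni/bin", ifname: str = "eth0"):
    """Return (plugin binary, env vars, stdin) a runtime would use for a CNI ADD."""
    # The plugin is chosen purely by the "type" field, so swapping plugins
    # means editing the config, not changing Kubernetes.
    binary = f"{cni_path.rstrip('/')}/{conf['type']}"
    env = {
        "CNI_COMMAND": "ADD",            # attach this container to the network
        "CNI_CONTAINERID": container_id,
        "CNI_NETNS": netns,              # path to the container's network namespace
        "CNI_IFNAME": ifname,            # interface name to create inside it
        "CNI_PATH": cni_path,            # where plugin binaries live
    }
    return binary, env, json.dumps(conf)


base = {"cniVersion": "1.0.0", "name": "demo-net", "type": "bridge"}
for conf in (base, {**base, "type": "calico"}):
    binary, env, stdin = build_cni_add(conf, "abc123", "/var/run/netns/abc123")
    print(binary)  # /opt/cni/bin/bridge, then /opt/cni/bin/calico
```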

Kube-proxy :

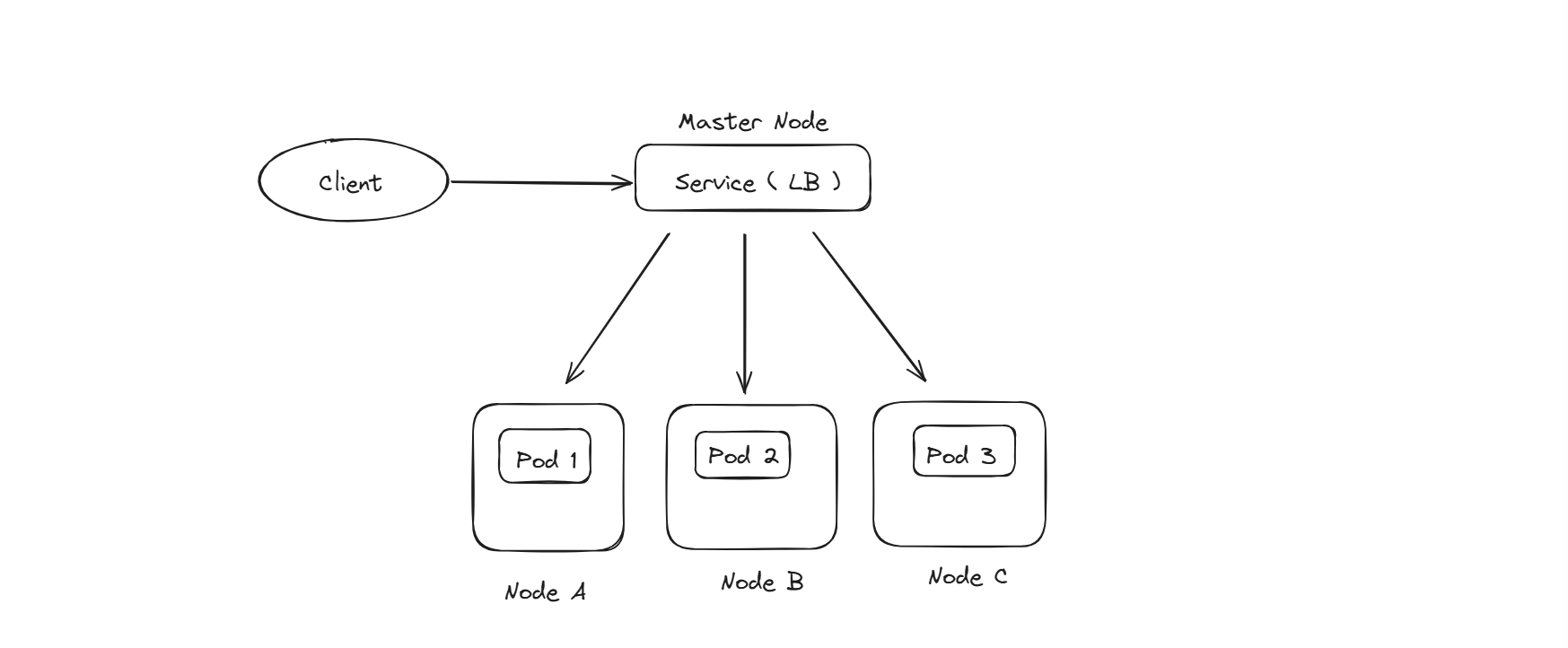

If we have 3 nodes and run 5 pods, these 5 pods are spread across all the nodes. We generally put a Service in front of the pods for load balancing, and because the pods are distributed, we achieve fault tolerance: even if a node goes down, our pods on the other nodes keep running, so the application stays accessible to end users.

How does the Service connect to the pods across the nodes?

This Service-to-pod connectivity is handled by kube-proxy, a critical component in the architecture. kube-proxy needs to run on every node, where it sets up the forwarding rules (for example, iptables or IPVS rules) that send Service traffic to the pods.
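A toy model of what happens to Service traffic: each request goes to one of the Service’s pod endpoints, and pods on a failed node drop out of the list. In a real cluster, the control plane updates the Service’s endpoints (EndpointSlices) and kube-proxy on each node turns them into iptables or IPVS rules; the random choice here loosely mirrors iptables mode, and the IPs and node names are hypothetical.

```python
import random

# Hypothetical Service with 5 pod endpoints spread across 3 nodes: (pod IP, node).
endpoints = [
    ("10.244.1.5", "node1"), ("10.244.1.6", "node1"),
    ("10.244.2.5", "node2"), ("10.244.2.6", "node2"),
    ("10.244.3.5", "node3"),
]


def healthy_endpoints(endpoints, down_nodes):
    """Pod IPs that are still reachable, i.e. not on a failed node."""
    return [ip for ip, node in endpoints if node not in down_nodes]


def pick_backend(endpoints, down_nodes=frozenset(), rng=random):
    """kube-proxy-like choice: forward a Service request to one live pod at random."""
    live = healthy_endpoints(endpoints, down_nodes)
    if not live:
        raise RuntimeError("service has no ready endpoints")
    return rng.choice(live)


rng = random.Random(0)
print(pick_backend(endpoints, down_nodes={"node2"}, rng=rng))  # some pod on node1 or node3
```

With node2 down, 3 of the 5 pods still serve traffic, which is the fault tolerance described above.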

These are all the components involved in a multi-node setup.
