Unlocking the Power of Redis: Cache, Database, and Message Queue

Renjitha K

Table of contents

- What is Redis?

- What Makes Redis Unique?

- How Is Redis Different from Traditional Databases?

- Redis Is Single-Threaded – But Don't Be Fooled!

- Non-Blocking I/O and Multiplexing – The Secret Sauce

- Basic Setup: Running Redis

- Redis as a Cache

- Redis Persistence – Keeping Your Data Safe

- Redis as a Message Queue

- Conclusion

In today's world of high-performance applications, every millisecond counts. Whether you're serving millions of requests per second or processing real-time data streams, Redis is a game changer. And here's a fun thought—have you noticed how people talk about using Redis as a cache, then later claim it's their go-to message queue, or even say it's their primary database? How can one tool do it all? Well, that's exactly what makes Redis so unique—it wears multiple hats.

In this article, we'll cover some key concepts you need to know before diving into Redis:

How Redis Differs from Traditional Databases: Learn why storing data in RAM makes Redis ultra-fast compared to disk-based systems.

Key Features of Redis: Understand the core features and capabilities that set Redis apart.

Using Redis as a Cache, Database, and Message Queue: We'll explore practical examples and use cases.

Let's get started on this journey to mastering Redis!

What is Redis?

Imagine having a tool that delivers lightning-fast read and write operations. That’s Redis for you. It’s an in-memory data structure store, which means it keeps your data in RAM instead of on a disk. This makes it incredibly fast—ideal for scenarios where every microsecond matters. Plus, Redis isn't limited to simple key-value pairs; it supports various data structures like strings, hashes, lists, sets, and sorted sets, which makes it versatile for a wide range of applications.

What Makes Redis Unique?

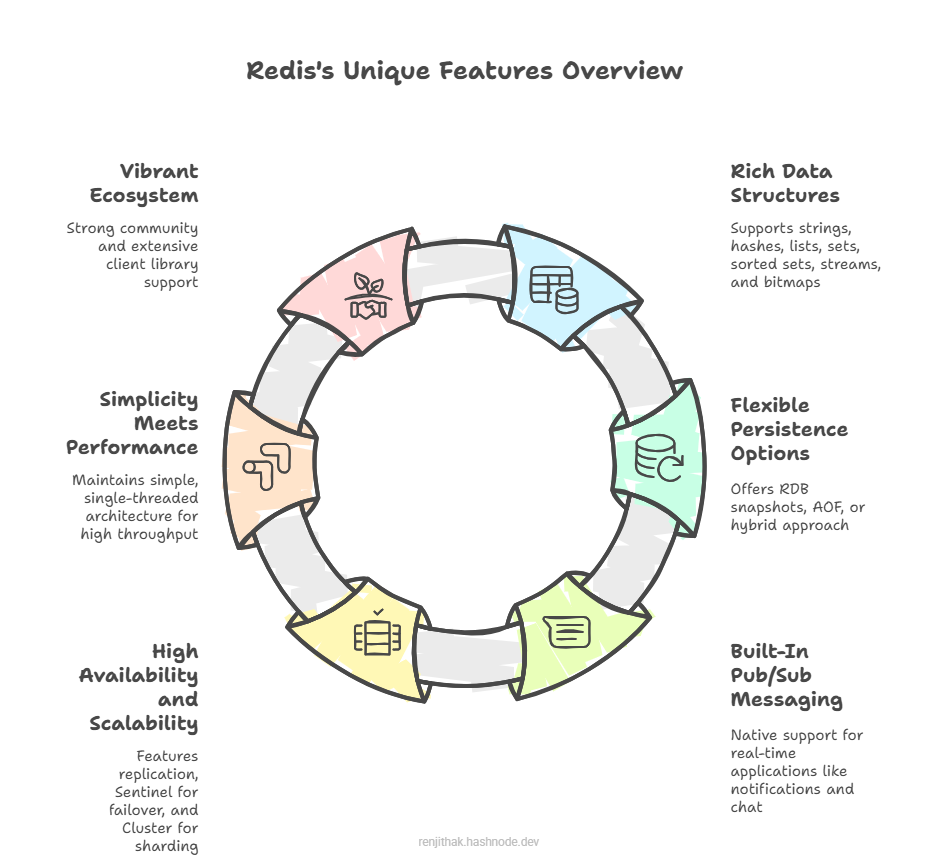

You might wonder, "There are many in-memory databases out there—what makes Redis stand out?" Well, here are some key points:

How Is Redis Different from Traditional Databases?

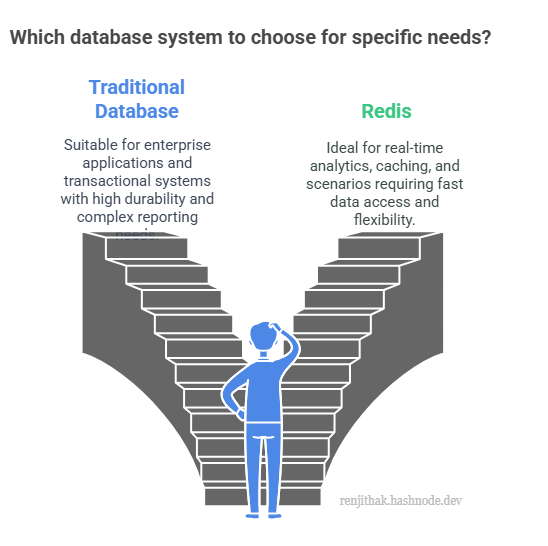

You might ask, "If Redis is so fast, why not just use it instead of MySQL or PostgreSQL?" The key difference lies in where data is stored.

Traditional databases, such as MySQL or PostgreSQL, store data on disk. Reads and writes can therefore involve disk access (although frequently used pages are cached in memory), which can slow things down. However, they provide strong ACID compliance, support complex queries, and are excellent for handling structured data with multiple relationships.

Redis, on the other hand, keeps all data in RAM. This results in blazing-fast operations, making it especially well suited to read-heavy workloads and scenarios requiring low-latency responses, such as caching, real-time analytics, and session management. But this speed comes at the cost of higher memory usage and a different approach to durability (managed via persistence options like RDB and AOF).

Redis Is Single-Threaded – But Don't Be Fooled!

At first glance, you might think, "Wait, Redis is single-threaded? How can that be fast?" Let me explain.

Why Single-Threaded?

Redis operates in memory, so the major bottleneck you see in disk-based systems is gone. By avoiding the overhead of locking, thread coordination, and context switching, Redis delivers simple, predictable performance. Every command is processed one after the other, which might sound limiting, but in practice it's a huge advantage: each command runs atomically, with no races between clients.

What Does This Mean for You?

High Throughput: Thanks to its efficient event loop and non-blocking I/O, Redis can handle hundreds of thousands of operations per second.

Concurrency with Multiplexing: Even though it uses a single thread, Redis can manage thousands of simultaneous client connections using an event loop. This is like juggling multiple tasks without dropping the ball.

Non-Blocking I/O and Multiplexing – The Secret Sauce

You might wonder, "How does Redis handle so many connections if it’s only using one thread?" Here’s the secret: non-blocking I/O and multiplexing.

Breaking It Down

Non-Blocking I/O: Instead of blocking while it waits for one client's socket to send or receive data, Redis only touches connections that are ready. A slow client or network link therefore doesn't hold up the others. Commands themselves still run one at a time, so a long-running command (for example, KEYS on a large dataset) does delay everyone queued behind it.

Multiplexing: Redis uses an event loop to keep an eye on all active connections. It listens for new data, processes requests quickly, and then moves on. This is made possible by system calls like epoll (on Linux) and kqueue (on macOS/BSD), with select as a fallback on other systems.

In simpler terms, Redis efficiently manages many socket connections (it uses TCP sockets on port 6379 by default) without getting bogged down. The result? Efficient resource usage, high concurrency, and ultra-fast responses.
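To make the event-loop idea concrete, here's a minimal Python sketch of the same pattern. It is not Redis's actual implementation, just an illustration using the standard library's selectors module. The function names handle_ready and demo, and the tiny PING/PONG protocol, are assumptions made up for this example.

```python
import selectors
import socket


def handle_ready(sel):
    """One pass of the event loop: serve every socket that has data waiting."""
    handled = 0
    for key, _events in sel.select(timeout=1):
        conn = key.fileobj
        request = conn.recv(1024)  # the socket is ready, so this returns at once
        if request.strip().upper() == b"PING":
            conn.sendall(b"+PONG\r\n")
        else:
            conn.sendall(b"-ERR unknown command\r\n")
        handled += 1
    return handled


def demo(num_clients=3):
    """Serve several client connections from a single thread."""
    sel = selectors.DefaultSelector()  # picks epoll, kqueue, or select for you
    pairs = []
    for _ in range(num_clients):
        server_side, client_side = socket.socketpair()
        server_side.setblocking(False)
        sel.register(server_side, selectors.EVENT_READ)
        pairs.append((server_side, client_side))

    # Every client sends a request before the server looks at any of them.
    for _, client_side in pairs:
        client_side.sendall(b"PING\r\n")

    handled = 0
    while handled < num_clients:
        handled += handle_ready(sel)

    replies = [client_side.recv(1024) for _, client_side in pairs]
    for server_side, client_side in pairs:
        sel.unregister(server_side)
        server_side.close()
        client_side.close()
    sel.close()
    return replies
```

Calling demo() serves all the connections from one thread: the selector reports which sockets are ready, and the loop handles them one by one, which is the essence of multiplexing.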

Basic Setup: Running Redis

For installation instructions, please refer to the official Redis documentation: Install Redis.

Once Redis is running, you can test it by running redis-cli:

    redis-cli

Test the connection with the ping command:

    127.0.0.1:6379> ping
    PONG

Redis as a Cache

Setting up Redis as a cache is really straightforward. Think of it like this: connect to your Redis server, then use a simple caching strategy. If data is already in Redis, you use it (a cache hit). If not, you fetch it from your main data source, store it in Redis with a Time-to-Live (TTL), and then serve it.

Here’s a quick Python example:

    import redis

    # Connect to Redis
    r = redis.Redis(host='localhost', port=6379, db=0)

    def get_data(key):
        data = r.get(key)
        if data is not None:
            return data  # Cache hit! (returned as bytes by default)
        # Cache miss: fetch from the primary source, then cache the result
        data = fetch_from_db(key)  # Replace this with your actual data fetching logic
        r.setex(key, 3600, data)  # Cache for 1 hour
        return data

And that’s it—your Redis cache is ready to go!
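Redis stores strings and bytes, so if your cached values are dictionaries or lists you'll need to serialize them first. Here's a small sketch of the same cache-aside pattern with JSON. The helper name get_json and the loader argument are hypothetical, and r is assumed to be a redis-py client (or anything with compatible get and setex methods).

```python
import json


def get_json(r, key, loader, ttl=3600):
    """Cache-aside lookup for JSON-serializable values.

    `r` is assumed to be a redis.Redis client; `loader` is a hypothetical
    callable that fetches the value from your primary data source.
    """
    cached = r.get(key)
    if cached is not None:
        return json.loads(cached)  # cache hit
    value = loader(key)  # cache miss: go to the source of truth
    r.setex(key, ttl, json.dumps(value))  # store with a TTL in seconds
    return value


# Usage against a real server (assumes the redis-py package is installed):
#   import redis
#   r = redis.Redis(host="localhost", port=6379, db=0)
#   user = get_json(r, "user:42", load_user_from_db)
```

Passing the client in as an argument keeps the helper easy to test, since you can hand it a stub instead of a live connection.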

Redis Persistence – Keeping Your Data Safe

Since Redis stores data in memory, you need to set up persistence to avoid losing your data on a restart. Redis offers two main persistence options:

1. RDB (Redis Database File)

RDB takes periodic snapshots of your dataset and stores them as a compressed binary file. It’s great for backups but can result in some data loss if a crash happens between snapshots.

Pros: Fast startup and minimal disk I/O.

Cons: Data may be lost between snapshots.

    # Snapshot if at least 1 key changed within 900 seconds
    save 900 1
    # Snapshot if at least 10 keys changed within 300 seconds
    save 300 10
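If the interplay between several save rules feels abstract, this little Python model may help. It's a simplified sketch of the rule logic as described above, not Redis's own code. The names should_snapshot and RULES are made up for the example.

```python
# Each rule is (seconds, changes), mirroring "save <seconds> <changes>".
RULES = [(900, 1), (300, 10)]


def should_snapshot(rules, seconds_since_last_save, changes_since_last_save):
    """Return True if any rule has both its time and change thresholds met."""
    return any(
        seconds_since_last_save >= seconds and changes_since_last_save >= changes
        for seconds, changes in rules
    )
```

With these rules, 10 changes after 300 seconds triggers a snapshot, while 9 changes after 300 seconds has to wait until the 900-second rule kicks in.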

2. AOF (Append-Only File)

AOF logs every write operation, so you can rebuild your dataset by replaying these logs. It’s more durable than RDB but can be slower due to constant disk writes.

    appendonly yes
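The "replay the log to rebuild the dataset" idea can be shown with a toy model. To be clear, this is not Redis's AOF format or code; it's a sketch that logs each write as a JSON line and rebuilds a plain dictionary from that log. All names here (apply_command, log_and_apply, replay, demo, toy.aof) are hypothetical.

```python
import json
import os
import tempfile


def apply_command(store, command):
    """Apply a single write command to an in-memory dict."""
    op, key, *args = command
    if op == "SET":
        store[key] = args[0]
    elif op == "DEL":
        store.pop(key, None)


def log_and_apply(store, log_path, command):
    """Append the command to the log first, then apply it in memory."""
    with open(log_path, "a", encoding="utf-8") as log:
        log.write(json.dumps(command) + "\n")
    apply_command(store, command)


def replay(log_path):
    """Rebuild the dataset from scratch by replaying every logged command."""
    store = {}
    with open(log_path, encoding="utf-8") as log:
        for line in log:
            apply_command(store, json.loads(line))
    return store


def demo():
    with tempfile.TemporaryDirectory() as tmp:
        log_path = os.path.join(tmp, "toy.aof")
        store = {}
        for command in (["SET", "a", "1"], ["SET", "b", "2"], ["DEL", "a"]):
            log_and_apply(store, log_path, command)
        rebuilt = replay(log_path)  # simulates what happens after a restart
    return store, rebuilt
```

After the simulated restart, the rebuilt dictionary matches the live one, which is exactly the guarantee an append-only log is meant to give.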

3. Hybrid Approach

Using both RDB and AOF offers a balance between durability and performance. With RDB, you get efficient snapshots that speed up startup, while AOF ensures minimal data loss by logging every operation. This way, you get the best of both worlds: fast recovery and high durability without overwhelming your disk with writes.

    redis-server --appendonly yes --dbfilename dump.rdb

Redis as a Message Queue

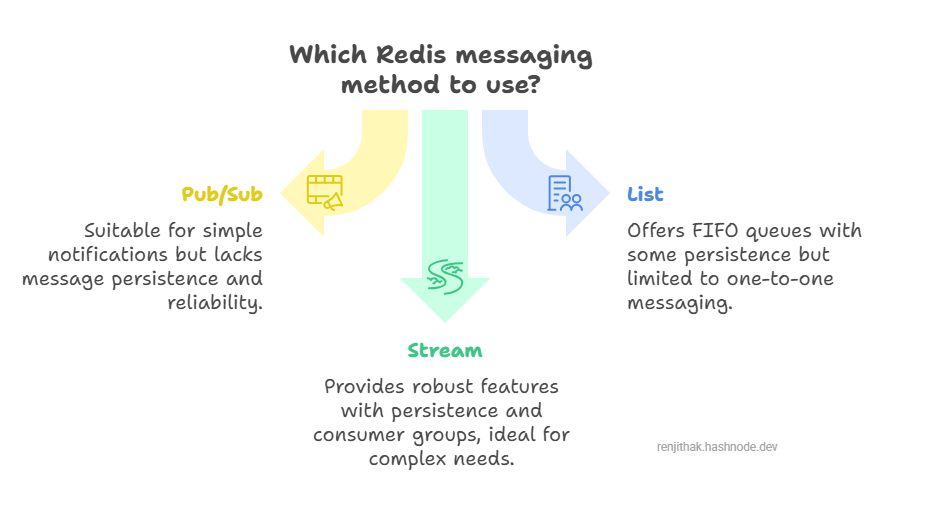

Redis isn’t just for caching and data storage. It can also serve as a lightweight message queue, and it offers three different messaging mechanisms, each suited to different scenarios.

1. Pub/Sub

Best for: Simple, real-time notifications.

How It Works: A publisher sends messages to a channel, and any subscribers listening to that channel immediately receive the messages.

Pros: Very easy to implement; great for broadcasting events (e.g., live notifications, chat rooms).

Cons: No persistence—if a subscriber is offline, they miss the messages.
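Here's a rough sketch of what Pub/Sub looks like from Python. It assumes r is a redis-py client and pubsub is the object returned by r.pubsub() after calling subscribe on a channel. The helper names publish_event and read_events are made up for illustration.

```python
import json


def publish_event(r, channel, event):
    """Publish a dict as JSON; returns how many subscribers received it."""
    return r.publish(channel, json.dumps(event))


def read_events(pubsub, timeout=1.0):
    """Collect the messages currently waiting on a subscribed PubSub object."""
    events = []
    while True:
        message = pubsub.get_message(timeout=timeout)
        if message is None:  # nothing arrived within the timeout
            break
        if message["type"] == "message":  # skip subscribe confirmations
            events.append(json.loads(message["data"]))
    return events


# Usage against a real server (assumes the redis-py package is installed):
#   import redis
#   r = redis.Redis(host="localhost", port=6379)
#   p = r.pubsub()
#   p.subscribe("notifications")
#   publish_event(r, "notifications", {"text": "hello"})
#   print(read_events(p))
```

Note that publish returns the number of subscribers that received the message. If that number is 0, nobody was listening and the message is gone, which is the "no persistence" drawback in action.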

2. List-Based Queues

Best for: Simple FIFO queues and one-to-one messaging.

How It Works: Producers push tasks onto a list (e.g., using LPUSH), and consumers retrieve tasks from the other end (using BRPOP).

Pros: Straightforward FIFO (first-in, first-out) approach; easy to code for background job queues.

Cons: Limited to basic queueing; lacks advanced features like consumer groups or message replay.
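A list-based job queue can be sketched in a few lines of Python. As before, r is assumed to be a redis-py client, and the helper names enqueue and dequeue plus the JSON job format are assumptions for this example.

```python
import json


def enqueue(r, queue, job):
    """Producer: push a job onto the head of the list with LPUSH."""
    r.lpush(queue, json.dumps(job))


def dequeue(r, queue, timeout=5):
    """Consumer: wait up to `timeout` seconds for a job from the tail (BRPOP)."""
    item = r.brpop(queue, timeout=timeout)
    if item is None:  # timed out, the queue stayed empty
        return None
    _key, payload = item  # BRPOP returns the list name and the popped value
    return json.loads(payload)


# Usage against a real server (assumes the redis-py package is installed):
#   import redis
#   r = redis.Redis(host="localhost", port=6379)
#   enqueue(r, "jobs", {"task": "send_email", "to": "user@example.com"})
#   job = dequeue(r, "jobs")
```

Because producers push on one end and consumers pop from the other, jobs come out in the order they went in.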

3. Redis Streams

Best for: Robust messaging needs with persistence, consumer groups, and complex workflows.

How It Works: Streams store messages in a persistent log, each tagged with a unique ID. Consumers can form groups to share the workload and acknowledge messages, ensuring more reliable processing.

Pros: Built-in persistence, consumer groups, and message replay; ideal for event-driven architectures.

Cons: More complex to set up and manage than Pub/Sub or Lists.
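To give a feel for consumer groups, here's a minimal sketch of a worker that reads from a stream and acknowledges each entry. It assumes r is a redis-py client using the library's default response format. The helper names ensure_group and process_batch, and the handler callback, are hypothetical.

```python
def ensure_group(r, stream, group):
    """Create the consumer group (and the stream) if it doesn't exist yet."""
    try:
        r.xgroup_create(stream, group, id="0", mkstream=True)
    except Exception as exc:
        # Redis answers BUSYGROUP when the group already exists; anything
        # else is a real error and is re-raised.
        if "BUSYGROUP" not in str(exc):
            raise


def process_batch(r, stream, group, consumer, handler, count=10, block_ms=1000):
    """Read new entries for this consumer, handle them, then acknowledge."""
    batches = r.xreadgroup(group, consumer, {stream: ">"}, count=count, block=block_ms)
    processed = 0
    for _stream_name, entries in batches or []:
        for entry_id, fields in entries:
            handler(fields)
            r.xack(stream, group, entry_id)  # ack only after handling succeeds
            processed += 1
    return processed


# Usage against a real server (assumes the redis-py package is installed):
#   import redis
#   r = redis.Redis(host="localhost", port=6379, decode_responses=True)
#   ensure_group(r, "orders", "billing")
#   r.xadd("orders", {"order_id": "1001"})
#   process_batch(r, "orders", "billing", "worker-1", print)
```

Acknowledging only after the handler returns means an entry that crashes the worker stays pending, so it can be retried later instead of silently disappearing.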

Conclusion

Redis is much more than just a caching tool. It’s a versatile, high-performance system that can serve as a database, message queue, and more—all while delivering blazing-fast speed. With features like replication, sharding, and high availability options such as Sentinel, Redis is well-equipped to meet the demands of modern applications.

Stay tuned for the next part, where I'll dive deeper into scaling Redis and making it highly available using Redis Cluster and Sentinel.

How do you use Redis? Share your experiences in the comments!
