K8s-Ingress

Md Nur Mohammad

We saw how we can access our deployed containerized application from the external world via Services. Among the ServiceTypes, NodePort and LoadBalancer are the most commonly used. For the LoadBalancer ServiceType, we need support from the underlying infrastructure. Even when that support is available, we may not want to use it for every Service, as LoadBalancer resources are limited and can increase costs significantly. Managing the NodePort ServiceType can also be tricky at times, as we need to keep updating our proxy settings and keep track of the assigned ports.

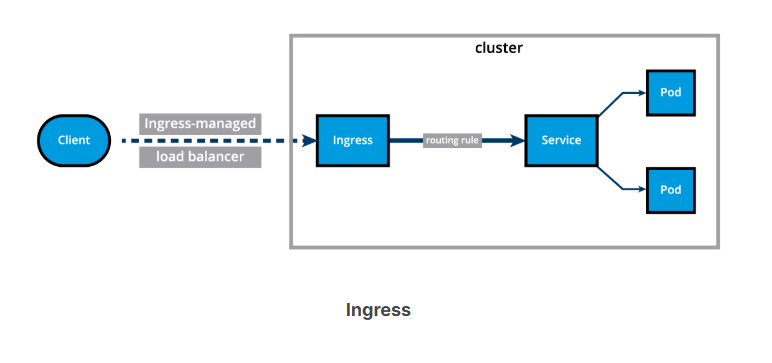

In this chapter, we will explore the Ingress API resource, which represents another layer of abstraction, deployed in front of the Service API resources, offering a unified method of managing access to our applications from the external world.

Ingress (1)

With Services, routing rules are associated with a given Service. They exist for as long as the Service exists, and there are many rules because there are many Services in the cluster. If we can somehow decouple the routing rules from the application and centralize the rules management, we can then update our application without worrying about its external access. This can be done using the Ingress resource, a collection of rules that manage inbound connections to cluster Services.

To allow the inbound connection to reach the cluster Services, Ingress configures a Layer 7 HTTP/HTTPS load balancer for Services and provides the following:

- TLS (Transport Layer Security)
- Name-based virtual hosting
- Fanout routing
- Load balancing
- Custom rules
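As a rough illustration of the TLS item, here is a small bash helper that prints an Ingress manifest with a spec.tls section, which lists the hosts to terminate TLS for and the Secret holding the certificate and key. It is only a sketch: the Secret name blue-example-tls and the generated Ingress name are hypothetical, and it assumes a Secret of type kubernetes.io/tls with that name already exists in the default namespace and that the Nginx Ingress Controller is in use.

```shell
# Sketch: print an Ingress manifest that terminates TLS for a single host.
# Assumptions (hypothetical): a kubernetes.io/tls Secret named "$2" exists in
# the default namespace, and "$3" is an existing Service listening on port 80.
tls_ingress_manifest() {
  local host="$1" secret="$2" service="$3"
  cat <<EOF
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ${service}-tls-ingress
  namespace: default
spec:
  ingressClassName: nginx
  tls:
  - hosts:
    - ${host}
    secretName: ${secret}
  rules:
  - host: ${host}
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: ${service}
            port:
              number: 80
EOF
}

# Usage (needs a running cluster; the certificate is self-signed, for testing only):
#   openssl req -x509 -nodes -days 365 -newkey rsa:2048 \
#     -keyout tls.key -out tls.crt -subj "/CN=blue.example.com"
#   kubectl create secret tls blue-example-tls --cert=tls.crt --key=tls.key
#   tls_ingress_manifest blue.example.com blue-example-tls webserver-blue-svc | kubectl apply -f -
```

Generating the manifest from a function keeps the host name in one place, so the tls.hosts entry and the rule host cannot drift apart.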

Ingress (2)

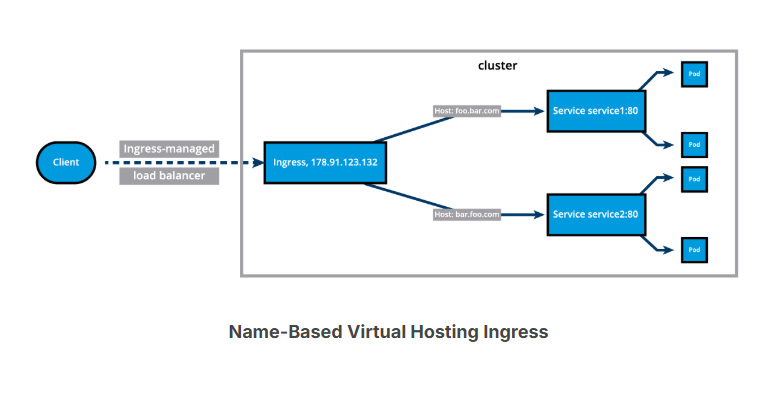

With Ingress, users do not connect directly to a Service. Users reach the Ingress endpoint, and, from there, the request is forwarded to the desired Service. You can see an example of a Name-Based Virtual Hosting Ingress definition below:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    nginx.ingress.kubernetes.io/service-upstream: "true"
  name: virtual-host-ingress
  namespace: default
spec:
  ingressClassName: nginx
  rules:
  - host: blue.example.com
    http:
      paths:
      - backend:
          service:
            name: webserver-blue-svc
            port:
              number: 80
        path: /
        pathType: ImplementationSpecific
  - host: green.example.com
    http:
      paths:
      - backend:
          service:
            name: webserver-green-svc
            port:
              number: 80
        path: /
        pathType: ImplementationSpecific
```

In the example above, user requests to both blue.example.com and green.example.com go to the same Ingress endpoint and, from there, are forwarded to webserver-blue-svc and webserver-green-svc, respectively.
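Name-based virtual hosting works because the controller selects a rule by the HTTP Host header of each request. A quick way to exercise each rule, before touching any hosts file, is to send the Host header explicitly. The helper below is a sketch; its second argument stands for the address of the Ingress endpoint, which on Minikube is typically the output of minikube ip.

```shell
# Sketch: send a request to the Ingress endpoint with an explicit Host header,
# so the controller applies the rule defined for that host.
request_virtual_host() {
  local host="$1" ingress_ip="$2"
  curl --silent --show-error --header "Host: ${host}" "http://${ingress_ip}/"
}

# Usage (needs a running cluster with virtual-host-ingress applied):
#   request_virtual_host blue.example.com "$(minikube ip)"   # routed to webserver-blue-svc
#   request_virtual_host green.example.com "$(minikube ip)"  # routed to webserver-green-svc
```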

This diagram presents a Name-Based Virtual Hosting Ingress rule:

Ingress (3)

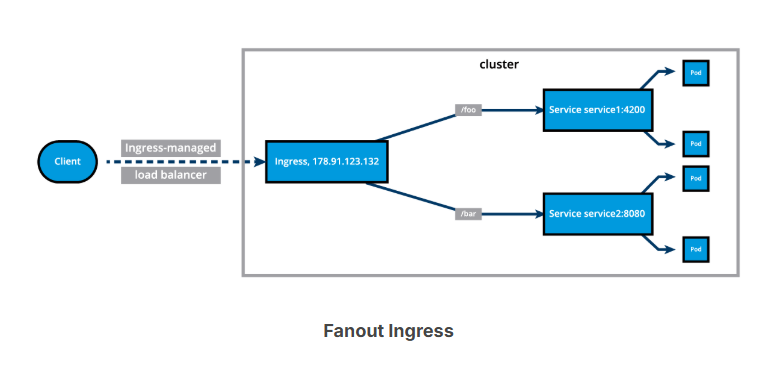

We can also define Fanout Ingress rules, presented in the example definition below and the accompanying diagram, where requests to example.com/blue and example.com/green are forwarded to webserver-blue-svc and webserver-green-svc, respectively:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    nginx.ingress.kubernetes.io/service-upstream: "true"
  name: fan-out-ingress
  namespace: default
spec:
  ingressClassName: nginx
  rules:
  - host: example.com
    http:
      paths:
      - path: /blue
        backend:
          service:
            name: webserver-blue-svc
            port:
              number: 80
        pathType: ImplementationSpecific
      - path: /green
        backend:
          service:
            name: webserver-green-svc
            port:
              number: 80
        pathType: ImplementationSpecific
```
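To check the fanout rules, the same technique applies with a fixed Host header and different paths. The sketch below prints the HTTP status code for /blue and /green; the ingress IP argument is an assumption, as before. Keep in mind that, by default, the Nginx controller forwards the original path, so the backends receive /blue and /green rather than /. A backend that only serves / may therefore answer 404 unless the path is rewritten, which is controller-specific (ingress-nginx offers a rewrite-target annotation for this).

```shell
# Sketch: print the HTTP status returned for each fanout path of example.com.
check_fanout_paths() {
  local ingress_ip="$1" path
  for path in /blue /green; do
    printf '%s -> ' "$path"
    curl --silent --output /dev/null --write-out '%{http_code}\n' \
      --header "Host: example.com" "http://${ingress_ip}${path}"
  done
}

# Usage (needs a running cluster with fan-out-ingress applied):
#   check_fanout_paths "$(minikube ip)"
```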

The Ingress resource does not do any request forwarding by itself; it merely accepts the definitions of traffic routing rules. The ingress is fulfilled by an Ingress Controller, which is a reverse proxy responsible for traffic routing based on the rules defined in the Ingress resource.

Ingress Controller

An Ingress Controller is an application that watches the Control Plane Node's API server for changes in the Ingress resources and updates the Layer 7 load balancer accordingly. Ingress Controllers are also known as Controllers, Ingress Proxies, Service Proxies, Reverse Proxies, etc. Kubernetes supports an array of Ingress Controllers, and, if needed, we can also build our own. The GCE L7 Load Balancer Controller, AWS Load Balancer Controller, and Nginx Ingress Controller are commonly used Ingress Controllers; other controllers include Contour, HAProxy Ingress, Istio Ingress, Kong, and Traefik. To ensure that an Ingress Controller watches its corresponding Ingress resources, the Ingress resource definition manifest needs to include an ingress class name, such as spec.ingressClassName: nginx (unless the cluster defines a default IngressClass), and optionally one or more annotations specific to the desired controller, such as nginx.ingress.kubernetes.io/service-upstream: "true" (for an Nginx Ingress Controller).
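The ingress class name refers to a cluster-scoped IngressClass object, which in turn names the controller responsible for it. A minimal sketch for listing them follows; with the Minikube addon one would typically expect a class called nginx handled by k8s.io/ingress-nginx, though the exact output depends on the installation. An IngressClass annotated with ingressclass.kubernetes.io/is-default-class: "true" is used for Ingresses that omit ingressClassName.

```shell
# Sketch: list IngressClasses with the controller that implements each one.
# The spec.ingressClassName of an Ingress should match one of the NAMEs shown.
list_ingress_classes() {
  kubectl get ingressclass \
    --output 'custom-columns=NAME:.metadata.name,CONTROLLER:.spec.controller'
}

# Usage (needs a running cluster):
#   list_ingress_classes
```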

Starting the Ingress Controller in Minikube is extremely simple. Minikube ships with the Nginx Ingress Controller set up as an addon, disabled by default. It can be easily enabled by running the following command:

```shell
$ minikube addons enable ingress
```
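Enabling the addon does not necessarily mean the controller is already serving traffic. The sketch below waits for the controller Pod, mirroring the kubectl wait invocation shown in the ingress-nginx documentation. The namespace is an assumption: recent Minikube versions deploy the addon into ingress-nginx, while older releases used kube-system, so it is taken as an optional argument. It also assumes the addon keeps the upstream controller label.

```shell
# Sketch: block until the ingress controller Pod reports Ready (up to 120s).
# Assumes the upstream label app.kubernetes.io/component=controller is present.
wait_for_ingress_controller() {
  local namespace="${1:-ingress-nginx}"
  kubectl wait --namespace "$namespace" \
    --for=condition=ready pod \
    --selector=app.kubernetes.io/component=controller \
    --timeout=120s
}

# Usage (needs a running Minikube cluster):
#   minikube addons enable ingress
#   wait_for_ingress_controller              # or: wait_for_ingress_controller kube-system
```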

Deploy an Ingress Resource

Once the Ingress Controller is deployed, we can create an Ingress resource using the kubectl create command. For example, if we create a virtual-host-ingress.yaml file with the Name-Based Virtual Hosting Ingress rule definition that we saw in the Ingress (2) section, then we use the following command to create an Ingress resource:

```shell
$ kubectl create -f virtual-host-ingress.yaml
```
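Before trying to reach the Services, it can help to check that the controller has published an address for the new Ingress; the ADDRESS column of kubectl get ingress may stay empty for a short while. This sketch reads that address directly from the Ingress status. It assumes the controller reports an IP; some environments report a hostname in .status.loadBalancer.ingress[0].hostname instead.

```shell
# Sketch: print the IP address published in an Ingress status
# (typically empty until the controller has assigned one).
ingress_address() {
  local name="$1" namespace="${2:-default}"
  kubectl get ingress "$name" --namespace "$namespace" \
    --output 'jsonpath={.status.loadBalancer.ingress[0].ip}'
}

# Usage (needs a running cluster):
#   ingress_address virtual-host-ingress
```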

Access Services Using Ingress

With the Ingress resource we just created, we should now be able to access the webserver-blue-svc or webserver-green-svc Services using the blue.example.com and green.example.com URLs. As our current setup is on Minikube, we need to update the host configuration file on our workstation (/etc/hosts on Mac and Linux) so that those hostnames map to the Minikube IP. Do not remove any existing entries from the /etc/hosts file; only add a line with the Minikube IP followed by the hostnames for the blue and green services. After the update, the file should look similar to:

```shell
$ sudo vim /etc/hosts
```

```text
127.0.0.1 localhost
::1 localhost
192.168.99.100 blue.example.com green.example.com
```

Now we can open blue.example.com and green.example.com in the browser and access each application.
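If the browser does not reach the expected application, it can help to check what the workstation's resolver actually returns for the new names. The sketch below assumes a Linux workstation, where getent is available (macOS does not ship it). It prints the first address returned for a hostname, which should match the Minikube IP.

```shell
# Sketch: print the first address the system resolver returns for a hostname.
# Relies on getent (glibc-based Linux); not available on macOS.
resolved_address() {
  getent hosts "$1" | awk '{ print $1; exit }'
}

# Usage (needs the /etc/hosts entries and a Minikube cluster):
#   [ "$(resolved_address blue.example.com)" = "$(minikube ip)" ] && echo "blue.example.com OK"
```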

Demo: Using Ingress Rules to Access Applications

We demonstrate how to use the Ingress resource to capture traffic from outside the cluster and route it internally to services and applications running inside the Kubernetes cluster.

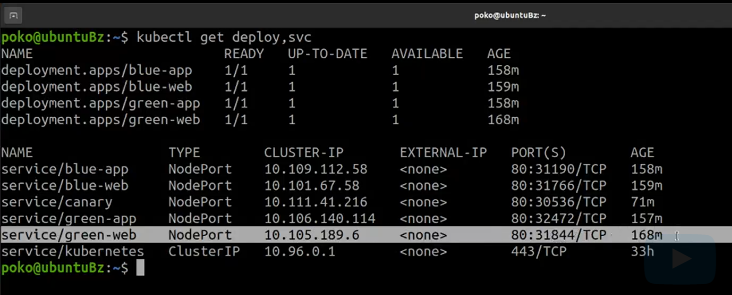

So let's see what deployments and what services we have in our cluster.

We're reminding ourselves that we have the 'blue-web' and 'green-web' deployments. The 'green-web' deployment was demonstrated earlier, while 'blue-web' was assigned as homework. Then there are their associated services: the 'green-web' service, a NodePort type service, and the 'blue-web' service, which was also part of the homework assignment.
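The listing itself appears only in the screenshot; it can be reproduced with kubectl get deployments,services. To see the high port that NodePort access relies on, the helper below prints the nodePort Kubernetes assigned to a Service. It is a sketch that assumes the Service is of type NodePort and that the relevant port is its first one.

```shell
# Sketch: print the nodePort assigned to the first port of a NodePort Service.
service_node_port() {
  kubectl get service "$1" --output 'jsonpath={.spec.ports[0].nodePort}'
}

# Usage (needs a running cluster with the demo Services):
#   kubectl get deployments,services
#   curl "http://$(minikube ip):$(service_node_port green-web)/"
```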

Both services are NodePort type services. Having clients access them through a high port is probably undesirable; clients expect to reach web services on the well-known port 80.

In that scenario, we need to use an Ingress resource in conjunction with an Ingress Controller.

So the first thing we need to do is make sure that we have a controller, or Ingress proxy, in our Minikube cluster.

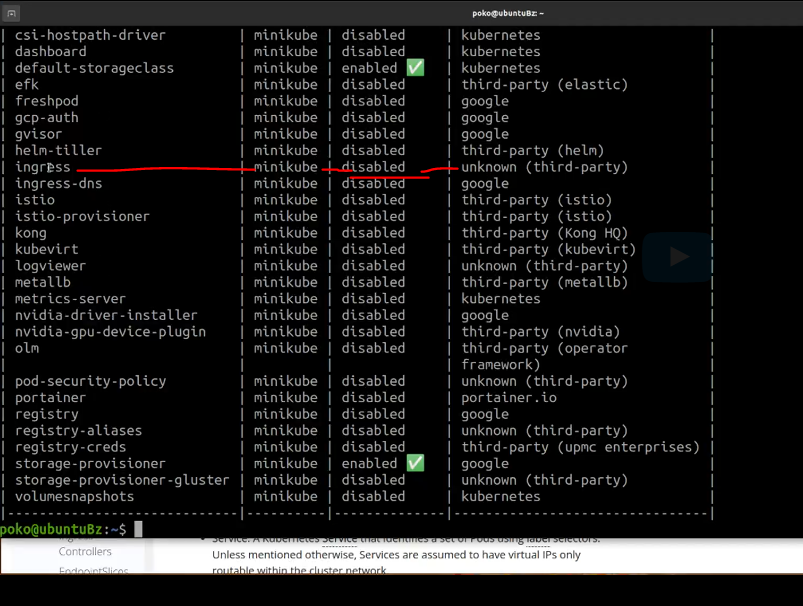

Minikube comes with a pre-installed Nginx Ingress add-on. So let's first list the Minikube add-ons and check the status of the Ingress add-on. By default, it is disabled.

```shell
minikube addons list
```

So the next thing we need to do is enable the Ingress add-on.

```shell
minikube addons enable ingress
```

Now that we have the Ingress proxy, let's take a look at a definition file for the Kubernetes Ingress resource.

```shell
vim ingress-demo.yaml
```

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-demo
  namespace: default
spec:
  rules:
  - host: blue.io
    #host: stable-color.io
    http:
      paths:
      - pathType: ImplementationSpecific
        path: "/"
        backend:
          service:
            name: blue-web
            port:
              number: 80
  - host: green.io
    #host: tested-color.io
    http:
      paths:
      - pathType: ImplementationSpecific
        path: "/"
        backend:
          service:
            name: green-web
            port:
              number: 80
```

This is a very simple definition with two rules. We use two separate hosts, each defining a rule for a different service: the 'blue-web' service serves requests for blue.io, while the 'green-web' service serves requests for green.io. Now that the YAML definition file is created, all we need to do is create the Ingress resource.

```shell
kubectl apply -f ingress-demo.yaml
```

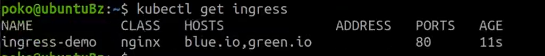

Let's run 'kubectl get ingress'.

```shell
kubectl get ingress
```

So the 'ingress-demo' is listed here with the two hosts, 'blue.io' and 'green.io'.

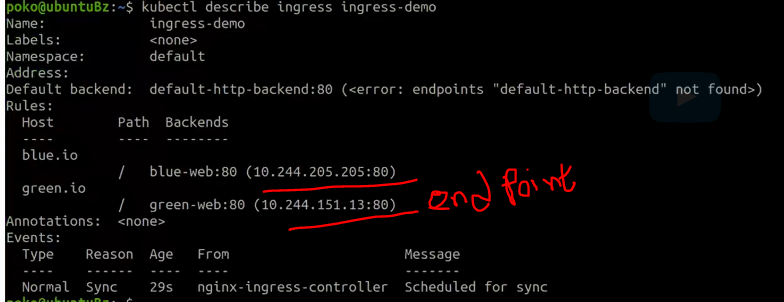

And we can also run the 'kubectl describe ingress ingress-demo'.

```shell
kubectl describe ingress ingress-demo
```

The 'describe' output shows the two hosts, their paths, their backend services, and the endpoints of those services.

In order to take full advantage of the Ingress resource from a local browser, we would need to make sure that our /etc/hosts file includes the IP address of the Minikube control plane node and also the hosts that we are planning to use in the browser. So we will run a command that will update the /etc/hosts file.

Basically, we'll extract the Minikube IP and map the 'blue.io' and 'green.io' hostnames to it.

```shell
sudo bash -c "echo $(minikube ip) blue.io green.io >> /etc/hosts"
```

With the hosts file updated, all we need to do is go to the browser and open 'blue.io', and then 'green.io'. This is one way of using the Ingress Controller.

Another way to use the Ingress is for blue-green deployment strategies. Consider a stable application, typically the blue one, while the green one is not yet stable or production-ready.

When green becomes production-ready and stable, I want to use an Ingress to shift traffic away from the service that sends traffic to the stable blue application and toward the new, stable green application. This is typically done in enterprise settings.

So let's use the same two applications, blue and green, and the same two services, but for a different purpose. For that, we go back to our terminal and edit the 'ingress-demo' YAML definition file.

```shell
vim ingress-demo.yaml
```

So first we will edit this and then we will replace the Ingress resource.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-demo
  namespace: default
spec:
  rules:
  #host: blue.io
  - host: stable-color.io
    http:
      paths:
      - pathType: ImplementationSpecific
        path: "/"
        backend:
          service:
            name: blue-web
            port:
              number: 80
  #host: green.io
  - host: tested-color.io
    http:
      paths:
      - pathType: ImplementationSpecific
        path: "/"
        backend:
          service:
            name: green-web
            port:
              number: 80
```

So now, instead of the two hosts, 'blue.io' and 'green.io', I want to use the 'stable-color.io' as the primary, and then I want to use the 'tested-color.io' for the secondary service.

Let's make the necessary changes, starting with 'stable-color.io', and comment out the 'blue.io' host. Then we do the same for the 'green.io' host, replacing it with 'tested-color.io'.

Now my stable website, 'stable-color.io', will be served by the blue service. In the future, when the green service becomes stable, we'll make the shift right here: 'stable-color.io' will point to green, and 'tested-color.io' will possibly point to a different service. Let's save the file and replace the Ingress resource.

We run the 'kubectl replace' command with the --force flag, which deletes the existing Ingress and recreates it from the file.

```shell
kubectl replace --force -f ingress-demo.yaml
```

The 'ingress-demo' Ingress is deleted and then recreated.
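kubectl replace --force deletes the Ingress and creates it again, so for a brief moment no rules exist. Since the Ingress was originally created with kubectl apply, an alternative worth considering is to preview the change with kubectl diff and update the object in place with kubectl apply. The sketch below relies on kubectl diff exiting with 0 when there is no difference, 1 when differences are found, and a higher code on errors.

```shell
# Sketch: show pending changes for a manifest, then apply them in place.
apply_if_changed() {
  local file="$1" status=0
  kubectl diff -f "$file" || status=$?
  case "$status" in
    0) echo "no changes" ;;
    1) kubectl apply -f "$file" ;;
    *) return "$status" ;;
  esac
}

# Usage (needs a running cluster):
#   apply_if_changed ingress-demo.yaml
```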

But now with these changes, we also want to make sure that they are reflected in our /etc/hosts file.

```shell
sudo bash -c "echo $(minikube ip) stable-color.io tested-color.io >> /etc/hosts"
```

Again, for local name resolution, we run the 'sudo bash' command to echo the Minikube IP, this time followed by the 'stable-color.io' and 'tested-color.io' hostnames. For now, 'stable-color.io' should serve our blue application.
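Each run of the echo ... >> /etc/hosts command appends another line, so entries from earlier runs pile up, and with the usual files lookup the first matching line generally wins. If the Minikube IP changes, a stale line can therefore shadow the new one. A possible alternative, sketched below, prints a copy of the hosts file in which every non-comment line listing one of the given names is dropped and a single fresh line is appended. Because it drops whole lines, it should only be used for names whose lines you own; the temporary path in the usage line is an assumption.

```shell
# Sketch: print the contents of a hosts file with one up-to-date line for NAMES.
# Any non-comment line that lists one of the NAMES is dropped entirely.
hosts_with_entry() {
  local hosts_file="$1" ip="$2"
  shift 2
  awk -v ip="$ip" -v names="$*" '
    BEGIN { n = split(names, wanted, " ") }
    /^[ \t]*#/ { print; next }
    {
      for (i = 2; i <= NF; i++)
        for (j = 1; j <= n; j++)
          if ($i == wanted[j]) next
      print
    }
    END { print ip, names }
  ' "$hosts_file"
}

# Usage (/etc/hosts is overwritten only after the new content is fully generated):
#   hosts_with_entry /etc/hosts "$(minikube ip)" stable-color.io tested-color.io > /tmp/hosts.new \
#     && sudo cp /tmp/hosts.new /etc/hosts
```

Writing to a temporary file first matters: piping the output straight into sudo tee /etc/hosts could truncate the file before awk has finished reading it.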

Back in the browser, we open a new tab and type 'stable-color.io'. When we hit Enter, the page should be blue.

No matter how many times we send the request, it should consistently respond with blue. Then we open 'tested-color.io', and here it is green. I want my users to stay on 'stable-color.io'.

Once I decide that the green app is stable and can replace the blue application in production, all I need to do is go back to my Ingress definition file:

```shell
vim ingress-demo.yaml
```

For the 'stable-color.io' rule, the stable rule, we update the service name from 'blue-web' to 'green-web':

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-demo
  namespace: default
spec:
  rules:
  #host: blue.io
  - host: stable-color.io
    http:
      paths:
      - pathType: ImplementationSpecific
        path: "/"
        backend:
          service:
            name: green-web
            port:
              number: 80
  #host: green.io
  - host: tested-color.io
    http:
      paths:
      - pathType: ImplementationSpecific
        path: "/"
        backend:
          service:
            name: blue-web
            port:
              number: 80
```

We don't want the same service in both rules, so the 'tested-color.io' rule now points to 'blue-web'. The main point, however, is that green becomes the stable application. We save the file, and now we just need to replace the Ingress.

```shell
kubectl replace --force -f ingress-demo.yaml
```

Once the Ingress is replaced, we go back to the 'stable-color.io' page and hit refresh; it should now be green. We did not change the URL, which is still 'stable-color.io', but we changed the service behind the Ingress. We have shifted traffic from one service to another with the help of the Ingress Controller. This is how you can use an Ingress together with two services and deployments for traffic shifting in a blue-green deployment strategy.
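Editing the file and force-replacing the Ingress works, but the same switch can also be made in place with a JSON patch. The sketch below is an alternative under explicit assumptions: it addresses rules by position, so it relies on rule 0 being stable-color.io and rule 1 being tested-color.io, as in ingress-demo.yaml, and on each rule having a single path.

```shell
# Sketch: point the first path of one Ingress rule (0-based index) at another Service.
set_rule_backend() {
  local ingress="$1" rule_index="$2" service="$3"
  kubectl patch ingress "$ingress" --type=json --patch \
    "[{\"op\":\"replace\",\"path\":\"/spec/rules/${rule_index}/http/paths/0/backend/service/name\",\"value\":\"${service}\"}]"
}

# Usage (needs a running cluster with ingress-demo applied):
#   set_rule_backend ingress-demo 0 green-web   # stable-color.io -> green-web
#   set_rule_backend ingress-demo 1 blue-web    # tested-color.io -> blue-web
```

After patching, the live object no longer matches ingress-demo.yaml, so the file should be updated as well; otherwise the next replace or apply would switch traffic back.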


Written by

Md Nur Mohammad


I am pursuing a Master's in Communication Systems and Networks at the Cologne University of Applied Sciences, Germany.