Automating Data Cleaning (Part 2): Using pandas in our AWS Chalice app

Abhishek Soni

Abhishek Soni

In our Part 1: Setting Up AWS Chalice with S3. We set up AWS Chalice with S3 events and deployed it. In this part, We will dive into data cleaning using pandas.

Prerequisites

Install Dependencies

We need to first install the pandas and boto3 . Run the following command:

pip install boto3 pandas

Add the packages name to your /requirements.txt :

boto3

pandas

Download from S3

Using boto3, we can download the sample.csv file that we uploaded to S3 in Part 1.

In your app.py file, create S3 client and download the file by using get_object method.

from chalice import Chalice

import boto3

import pandas as pd

import io

import os

app = Chalice(app_name="data-cleaning-app")

# Step 1: Create a S3 client

s3_client = boto3.client("s3")

@app.on_s3_event(bucket="raw-xl-sheet", events=["s3:ObjectCreated:*"])

def index(event):

source_bucket = event.bucket

input_file_key = event.key

# Step 2: Download the CSV file from the source S3 bucket

response = s3_client. get_object(Bucket=source_bucket, Key=input_file_key)

Now that we have downloaded the file, we will read the csv using pandas using the following code:

df = pd.read_csv(io.BytesIO(response["Body"].read()))

We will perform cleaning on this Data Frame (df). The read_csv reads the csv file from a given location. In this code it is reading directly from memory. Here, response["Body"].read() returns a byte object. io.BytesIO() creates a in-memory binary stream from the bytes object. This allows Pandas to treat it like a file.

Cleaning the data

So downloading and reading the file was piece of a cake. The real excitement is cleaning the data! We will cover the following edge cases.

Remove duplicates

Strip and capitalize the headers

Normalize numbers - For example, 9,000 will be normalized to 9000

Format any date in YYYY-MM-DD format

Blank values will be replaced by NA

We will write small functions to keep the code clean.

Clean the Values

Add the function in your app.py :

# Step 3: Writing this function to clean the values in the Data Frame

def clean_values(x):

if isinstance(x, str):

x = x.strip().lower()

# Normalize numbers (e.g., "9,000" -> 9000)

if x.replace(",", "").isdigit():

return int(x.replace(",", ""))

try:

x = pd.to_datetime(x, errors="coerce", dayfirst=True).strftime("%Y-%m-%d")

except ValueError:

pass

return x if pd.notna(x) else "NA"

Let’s understand the function more deeply:

x.strip().lower()trims the extra space and transform the text to lower case.x.replace(",", "").isdigit()detects if the text is a number with comma (,).pd.to_datetime(x, errors="coerce", dayfirst=True).strftime("%Y-%m-%d")formats any kind to date toYYYY-MM-DDx if pd.notna(x) else "NA"returnsNAif the value is blank otherwise simply return that value.

Clean the Data Frame

The following function will clean the entire Data Frame.

# Step 4: Clean the dataframe. This function removes duplicates, transforms headers

def clean_dataframe(df):

df.columns = [col.strip().replace(' ', '_').upper() for col in df.columns]

df = df.drop_duplicates()

df = df.apply(lambda col: col.map(clean_values))

return df

Let’s understand the function more deeply:

col.strip().replace(' ', '_').upper()transforms the headers of your csv. For example, ‘joining Date‘ will be transformed to JOINING_DATE.drop_duplicatesfunction of pandas, remove all the exact duplicate rows.df.apply(…)applies the function to each column.col.map(clean_values)uses clean_values function to fix the each value of every column

So far our code looks like:

from chalice import Chalice

import boto3

import pandas as pd

import io

import os

app = Chalice(app_name="data-cleaning-app")

# Step 1: Create a S3 client

s3_client = boto3.client("s3")

# Step 3: Writing this function to clean the values in the Data Frame

def clean_values(x):

if isinstance(x, str):

x = x.strip().lower()

# Normalize numbers (e.g., "9,000" -> 9000)

if x.replace(",", "").isdigit():

return int(x.replace(",", ""))

try:

x = pd.to_datetime(x, errors="coerce", dayfirst=True).strftime("%Y-%m-%d")

except ValueError:

pass

return x if pd.notna(x) else "NA"

# Step 4: Clean the dataframe. This function removes duplicates, transforms headers

def clean_dataframe(df):

df.columns = [col.strip().replace(' ', '_').upper() for col in df.columns]

df = df.drop_duplicates()

df = df.apply(lambda col: col.map(clean_values))

return df

@app.on_s3_event(bucket="raw-xl-sheet", events=["s3:ObjectCreated:*"])

def index(event):

source_bucket = event.bucket

input_file_key = event.key

# Step 2: Download the CSV file from the source S3 bucket

response = s3_client. get_object(Bucket=source_bucket, Key=input_file_key)

df = pd.read_csv(io.BytesIO(response["Body"].read()))

df = clean_dataframe(df)

Saving the output

We will save the output the same way we stored the downloaded csv. The following code will give us the cleaned csv ready to be uploaded to the S3 Bucket

output_buffer = io.BytesIO()

df.to_csv(output_buffer, index=False)

output_buffer.seek(0)

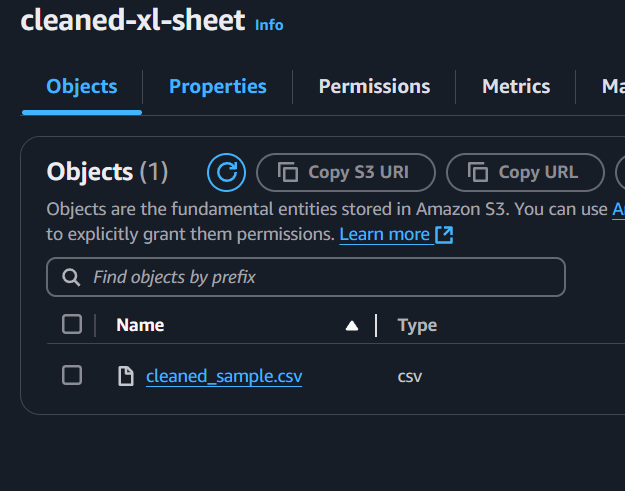

Uploading to S3 bucket

Just like get_object, we can upload to S3 using put_bucket. The following code uploads the cleaned csv file to cleaned-xl-sheet bucket that we created.

s3_client.put_object(Bucket="cleaned-xl-sheet", Key=f"cleaned_{input_file_key}", Body=output_buffer)

Final code

The final code of app.py will look like:

from chalice import Chalice

import boto3

import pandas as pd

import io

import os

app = Chalice(app_name="data-cleaning-app")

# Step 1: Create a S3 client

s3_client = boto3.client("s3")

# Step 3: Writing this function to clean the values in the Data Frame

def clean_values(x):

if isinstance(x, str):

x = x.strip().lower()

# Normalize numbers (e.g., "9,000" -> 9000)

if x.replace(",", "").isdigit():

return int(x.replace(",", ""))

try:

x = pd.to_datetime(x, errors="coerce", dayfirst=True).strftime("%Y-%m-%d")

except ValueError:

pass

return x if pd.notna(x) else "NA"

# Step 4: Clean the dataframe. This function removes duplicates, transforms headers

def clean_dataframe(df):

df.columns = [col.strip().replace(' ', '_').upper() for col in df.columns]

df = df.drop_duplicates()

df = df.apply(lambda col: col.map(clean_values))

return df

@app.on_s3_event(bucket="raw-xl-sheet", events=["s3:ObjectCreated:*"])

def index(event):

source_bucket = event.bucket

input_file_key = event.key

# Step 2: Download the CSV file from the source S3 bucket

response = s3_client. get_object(Bucket=source_bucket, Key=input_file_key)

df = pd.read_csv(io.BytesIO(response["Body"].read()))

df = clean_dataframe(df)

output_buffer = io.BytesIO()

df.to_csv(output_buffer, index=False)

output_buffer.seek(0)

s3_client.put_object(

Bucket="cleaned-xl-sheet",

Key=f"cleaned_{input_file_key}",

Body=output_buffer

)

print(

f"Processed {input_file_key} from {source_bucket} and saved first row to {target_bucket}/{output_file_key}"

)

Deploy the application

Before deploying, update config.json with the following JSON:

{

"version": "2.0",

"app_name": "data-cleaning-app",

"stages": {

"dev": {

"api_gateway_stage": "api",

"manage_iam_role": true,

"autogen_policy": true,

"lambda_memory_size": 320,

"lambda_timeout": 300,

"automatic_layer": true

}

}

}

Deploy using command:

chalice deploy

If everything goes right, you will see the similar output in your console:

Creating shared layer deployment package.

Creating app deployment package.

Creating lambda layer: data-cleaning-app-dev-managed-layer

Updating policy for IAM role: data-cleaning-app-dev

Updating lambda function: data-cleaning-app-dev-index

Configuring S3 events in bucket raw-xl-sheet to function data-cleaning-app-dev-index

Resources deployed:

- Lambda Layer ARN: arn:aws:lambda:XXXXXX:XXXXXXXXX:layer:data-cleaning-app-dev-managed-layer:1

- Lambda ARN: arn:aws:lambda:XXXXXX:XXXXXXXXX:function:data-cleaning-app-dev-index

Chalice will automatically create all the dependencies. Now simply upload your raw file in the bucket and see the magic!

aws s3 cp raw_files/sample.csv s3://raw-xl-sheet/sample.csv

Go to your S3 bucket, you should be able to see the cleaned_sample.csv file.

Conclusion

We just touched the tip of the iceberg! There is so much to do when it comes to data cleaning. However, I tried to show case how simple and scalable it can be! With more power to lambda function, you can easily clean your excel or csv file of size up to 1 GB in just minutes!

Subscribe to my newsletter

Read articles from Abhishek Soni directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by

Abhishek Soni

Abhishek Soni

🚀 Full-Stack Developer | Aspiring Data Engineer | Tech Enthusiast I build scalable, high-performance applications and am transitioning into Data Engineering to work with large-scale data pipelines and distributed systems. With 3.5+ years of experience, I specialize in MERN, Next.js, NestJS, Python, and AWS, solving real-world problems through code. 💡 What I Do: Full-Stack Development – Scalable, high-performing web apps. Backend Engineering – API design, database architecture, and system optimization. Cloud & Automation – AWS-powered solutions for modern applications. Data Engineering (Learning) – Building pipelines, working with data lakes, and optimizing ETL processes. 💻 Tech I Love: JavaScript, TypeScript, Python, React, Tailwind CSS, Celery, AWS Lambda, ECS, S3. 🎯 Currently Learning: Data Engineering concepts, ETL pipelines, Apache Airflow, Spark, and Data Warehousing. 📢 Writing About: Full-stack development, system design, scaling applications, and my journey into Data Engineering.