Canary Deployments Using Argo Rollouts and Istio Service-mesh

George Ezejiofor

Introduction 🚀

🌟 In today’s cloud-native environments, Canary Deployment stands out as a powerful technique for achieving zero-downtime releases. By incrementally rolling out new application versions, canary deployments reduce risk and ensure a seamless user experience. When combined with the advanced rollout strategies of Argo Rollouts and the fine-grained traffic management of Istio Service Mesh, you gain precise control over traffic shifts, enabling smooth transitions between versions. This setup also rolls back automatically to the stable version when the canary version fails its analysis.

🎯 This guide will show you how to implement Canary Deployment using Argo Rollouts’ intelligent strategies alongside Istio’s traffic-splitting capabilities. You’ll learn how to gradually shift traffic between application versions while maintaining full observability and control. By the end of this guide, you’ll have a robust, production-ready setup that deploys new features seamlessly—without impacting your end users.

✅ Prerequisites 🛠️

To successfully implement Zero Downtime Canary Deployment with Argo-Rollouts and Istio Service-Mesh, ensure you have the following:

🐳 Kubernetes Cluster: A working Kubernetes cluster set up with kubeadm on bare metal, with MetalLB configured for LoadBalancer functionality.

💻 kubectl: Install and configure the Kubernetes command-line tool to interact with your cluster.

🧩 Helm: The Kubernetes package manager for simplified application deployment and configuration.

🔒 Cert-Manager (optional): Installed in the cluster for automated TLS certificate management.

🌐 Istio Ingress Controller: Deploy the Istio Ingress Gateway to handle HTTP(S) traffic routing effectively.

📂 Namespace Configuration: Create distinct namespaces or use labels to separate stable and canary deployments for clear isolation.

🌐 Domain Name: Set up a domain (e.g., terranetes.co.uk) or a subdomain pointing to your LoadBalancer IP address. You can manage DNS using providers like Cloudflare.

📧 Let's Encrypt Account: Ready with a valid email address for certificate issuance to enable HTTPS.

📡 MetalLB: Configured for bare-metal Kubernetes clusters to manage LoadBalancer services.

📈 Kiali: Installed for monitoring Istio's traffic flow and gaining visibility into service dependencies and metrics.

📦 Argo Rollouts: Installed to handle advanced Canary Deployment strategies. Use the following commands:

kubectl create namespace argo-rollouts
kubectl apply -n argo-rollouts -f https://github.com/argoproj/argo-rollouts/releases/latest/download/install.yaml

📡 Basic Networking Knowledge: Familiarity with Kubernetes networking concepts like Ingress, Services, and LoadBalancer mechanisms.
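Before going further, it can help to confirm that the command-line tools used in this guide are on your PATH. The sketch below is only a convenience check: `missing_tools` is a hypothetical helper name, and the tool list is an assumption based on the commands that appear later in this guide (including `hey` and the `kubectl argo rollouts` plugin).

```shell
# Print each named command that cannot be found on PATH.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || echo "$tool"
  done
}

# kubectl plugins are plain executables named kubectl-<plugin>, so the
# Argo Rollouts plugin shows up as kubectl-argo-rollouts.
missing_tools kubectl helm hey kubectl-argo-rollouts
```

Anything printed is missing and should be installed first; no output means all four tools were found.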

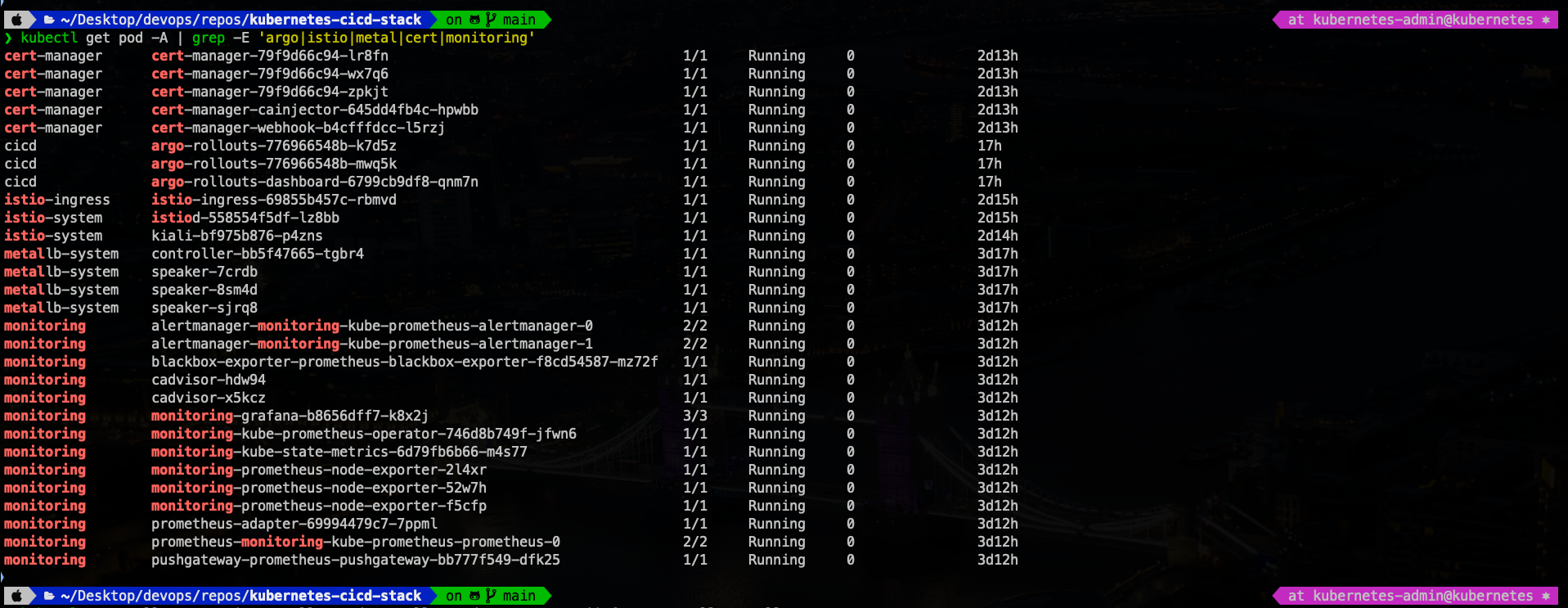

kubectl get pod -A | grep -E 'argo|istio|metal|cert|monitoring'

With these prerequisites ready, you're equipped to dive into setting up Canary Deployments! 🚀

Architecture 📈

Deployments 🚀

The certificate deployment steps are covered in my Blue-Green deployment article.

Argo-rollouts deployment 💻

Deploy the ClusterIssuer using the same method as in the Blue-Green deployment article.
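The ClusterIssuer manifest itself is not reproduced in this article. As a rough sketch only, assuming Let's Encrypt DNS-01 validation through Cloudflare, it might look like the following. The issuer name matches the `issuerRef` used in the Certificate in this guide; the email argument, the account-key secret name, and the `cloudflare-api-token-secret` Secret are placeholders that are not from the original setup.

```shell
# Print a ClusterIssuer manifest for Let's Encrypt DNS-01 validation via Cloudflare.
# Hypothetical placeholders: the email argument, the account key secret name, and
# the Secret cloudflare-api-token-secret (key: api-token).
render_clusterissuer() {
  email=$1
  cat <<EOF
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: letsencrypt-dns01-istio
spec:
  acme:
    server: https://acme-v02.api.letsencrypt.org/directory
    email: ${email}
    privateKeySecretRef:
      name: letsencrypt-dns01-istio-account-key
    solvers:
    - dns01:
        cloudflare:
          apiTokenSecretRef:
            name: cloudflare-api-token-secret
            key: api-token
EOF
}
```

To apply it, pipe the output to kubectl, for example `render_clusterissuer you@example.com | kubectl apply -f -`. For a ClusterIssuer, the API token Secret is expected in the namespace where cert-manager itself runs.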

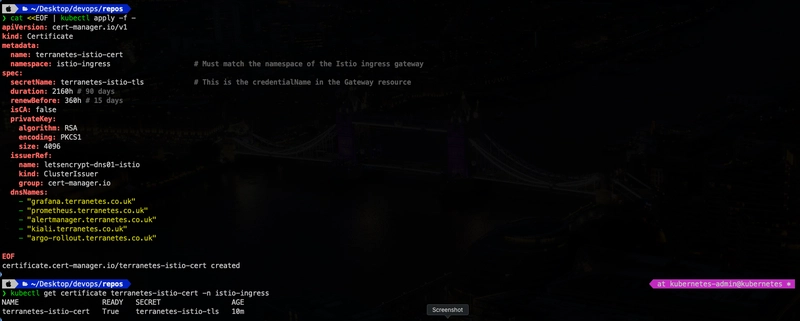

Deploy certificate for istio-ingress

cat <<EOF | kubectl apply -f -
apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  name: terranetes-istio-cert
  namespace: istio-ingress # Must match the namespace of the Istio ingress gateway
spec:
  secretName: terranetes-istio-tls # This is the credentialName in the Gateway resource
  duration: 2160h # 90 days
  renewBefore: 360h # 15 days
  isCA: false
  privateKey:
    algorithm: RSA
    encoding: PKCS1
    size: 4096
  issuerRef:
    name: letsencrypt-dns01-istio
    kind: ClusterIssuer
    group: cert-manager.io
  dnsNames:
  - "grafana.terranetes.co.uk"
  - "prometheus.terranetes.co.uk"
  - "alertmanager.terranetes.co.uk"
  - "kiali.terranetes.co.uk"
  - "argo-rollout.terranetes.co.uk"
EOF

Certificate Issued ✅

Deploy argo rollout namespace (canary)🏠

cat << EOF | kubectl apply -f -
apiVersion: v1
kind: Namespace
metadata:
  name: canary
  labels:
    istio-injection: enabled
EOF

Deploy argo rollout Gateway

cat << EOF | kubectl apply -f -
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: terranates-app-gateway
  namespace: istio-ingress
spec:
  selector:
    istio: ingress # use istio default controller
  servers:
  - port:
      number: 80
      name: http
      protocol: HTTP
    hosts:
    - "*"
    tls:
      httpsRedirect: true # Redirect HTTP to HTTPS
  - port:
      number: 443
      name: https
      protocol: HTTPS
    tls:
      mode: SIMPLE
      credentialName: terranetes-istio-tls # Reference the TLS secret
    hosts:
    - "argo-rollout.terranetes.co.uk"
EOF

Deploy argo rollout Services

cat << EOF | kubectl apply -f -
apiVersion: v1
kind: Service
metadata:
  name: terranates-app-canary
  namespace: canary
  labels:
    app: terranates-app # Add this label
spec:
  ports:
  - port: 80
    targetPort: http
    protocol: TCP
    name: http
  selector:
    app: terranates-app
    # This selector will be updated with the pod-template-hash of the canary ReplicaSet. e.g.:
    # rollouts-pod-template-hash: 7bf84f9696
---
apiVersion: v1
kind: Service
metadata:
  name: terranates-app-stable
  namespace: canary
  labels:
    app: terranates-app # Add this label
spec:
  ports:
  - port: 80
    targetPort: http
    protocol: TCP
    name: http
  selector:
    app: terranates-app
    # This selector will be updated with the pod-template-hash of the stable ReplicaSet. e.g.:
    # rollouts-pod-template-hash: 789746c88d
EOF

Deploy argo rollout VirtualServices

cat << EOF | kubectl apply -f -
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: terranates-app-vs1
  namespace: canary
spec:
  gateways:
  - istio-ingress/terranates-app-gateway
  hosts:
  - "argo-rollout.terranetes.co.uk"
  http:
  - name: route-one
    route:
    - destination:
        host: terranates-app-stable
        port:
          number: 80
      weight: 100
    - destination:
        host: terranates-app-canary
        port:
          number: 80
      weight: 0
EOF
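During a rollout, Argo Rollouts rewrites the two `weight` fields of `route-one` in this VirtualService. A small sketch for inspecting them is shown below; `route_weights` and `weights_total` are hypothetical helper names, and the query assumes `route-one` is the first entry under `spec.http`, as in the manifest above.

```shell
# Print "host=weight" for each destination of the first HTTP route.
route_weights() {
  kubectl get virtualservice terranates-app-vs1 -n canary \
    -o jsonpath='{range .spec.http[0].route[*]}{.destination.host}{"="}{.weight}{"\n"}{end}'
}

# Sum the weights read from stdin; a missing weight counts as 0.
weights_total() {
  awk -F= '{ total += $2 } END { print total + 0 }'
}
```

Running `route_weights` should list the stable and canary hosts with their current weights, and `route_weights | weights_total` should print 100 at every step of the rollout.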

Deploy argo rollout Terranetes webapp

cat << EOF | kubectl apply -f -
apiVersion: argoproj.io/v1alpha1
kind: Rollout
metadata:
  name: terranates-app
  namespace: canary
spec:
  replicas: 10
  strategy:
    canary:
      canaryService: terranates-app-canary
      stableService: terranates-app-stable
      analysis:
        startingStep: 2
        templates:
        - templateName: istio-success-rate
        args:
        - name: service
          value: terranates-app-canary # ✅ Canary service name
        - name: namespace
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
      trafficRouting:
        istio:
          virtualServices:
          - name: terranates-app-vs1
            routes:
            - route-one
      steps:
      - setWeight: 10
      - pause: {}
      - setWeight: 20
      - pause: {duration: 30s}
      - setWeight: 30
      - pause: {duration: 30s}
      - setWeight: 40
      - pause: {duration: 30s}
      - setWeight: 50
      - pause: {duration: 30s}
      - setWeight: 60
      - pause: {duration: 30s}
      - setWeight: 70
      - pause: {duration: 30s}
      - setWeight: 80
      - pause: {duration: 30s}
      - setWeight: 90
      - pause: {duration: 30s}
      - setWeight: 100
  selector:
    matchLabels:
      app: terranates-app
  template:
    metadata:
      labels:
        app: terranates-app
    spec:
      containers:
      - name: terranates-app
        image: georgeezejiofor/argo-rollout:yellow
        ports:
        - name: http
          containerPort: 8080
EOF
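To make the step list in this Rollout easier to reason about, here is a small sketch that prints the same schedule and adds up the timed pauses. It is only an illustration: `canary_schedule` is a hypothetical helper, and the weights and 30-second pauses are hard-coded to mirror the manifest above rather than read from the cluster.

```shell
# Print the canary weight schedule and the minimum time spent in timed pauses.
# Mirrors the Rollout steps: weights 10..100 in steps of 10, an indefinite
# pause after 10%, and a 30s pause after every other weight except 100%.
canary_schedule() {
  pause_seconds=30
  total=0
  weight=10
  while [ "$weight" -le 100 ]; do
    if [ "$weight" -eq 10 ]; then
      echo "weight ${weight}%: pause until promoted"
    elif [ "$weight" -lt 100 ]; then
      echo "weight ${weight}%: pause ${pause_seconds}s"
      total=$((total + pause_seconds))
    else
      echo "weight ${weight}%: rollout complete"
    fi
    weight=$((weight + 10))
  done
  echo "minimum timed pause total: ${total}s"
}

canary_schedule
```

The first pause (`pause: {}`) has no duration, so the rollout waits at 10% until it is promoted manually. With `startingStep: 2`, the background analysis begins at the third step (`setWeight: 20`), since steps are counted from zero.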

Deploy argo rollout AnalysisTemplate

cat << EOF | kubectl apply -f -
apiVersion: argoproj.io/v1alpha1
kind: AnalysisTemplate
metadata:
  name: istio-success-rate
  namespace: canary
spec:
  args:
  - name: service
  - name: namespace
  metrics:
  - name: success-rate
    interval: 10s
    # result[0] is the canary error ratio (share of non-2xx responses).
    # An empty result means low or no traffic and is treated as a pass.
    successCondition: len(result) == 0 || result[0] < 0.2 # ✅ Handle low traffic
    failureCondition: len(result) > 0 && result[0] >= 0.2
    failureLimit: 3
    provider:
      prometheus:
        address: http://monitoring-kube-prometheus-prometheus.monitoring.svc.cluster.local:9090
        # A PromQL query is a single expression, so the request volume cannot be
        # returned as a second comma-separated value. Instead, the error ratio is
        # only returned when the request rate is at least 10 per second.
        query: |
          (
            sum(irate(istio_requests_total{
              reporter="source",
              destination_service=~"{{args.service}}.{{args.namespace}}.svc.cluster.local",
              response_code!~"2.*"}[2m]))
            /
            sum(irate(istio_requests_total{
              reporter="source",
              destination_service=~"{{args.service}}.{{args.namespace}}.svc.cluster.local"}[2m]))
          )
          and
          (
            sum(irate(istio_requests_total{
              reporter="source",
              destination_service=~"{{args.service}}.{{args.namespace}}.svc.cluster.local"}[2m])) >= 10
          )
EOF
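The interaction between the 0.2 error-ratio threshold and `failureLimit: 3` can be sketched as a small simulation. This is not how Argo Rollouts is implemented; `analysis_verdict` is a hypothetical helper, and it assumes a metric is marked Failed once the number of failed measurements exceeds `failureLimit`, which is worth confirming against the Argo Rollouts documentation for your version.

```shell
# Simulate a series of error-ratio measurements against a threshold.
# Usage: analysis_verdict THRESHOLD FAILURE_LIMIT RATIO...
# Assumption: the metric fails once failed measurements exceed FAILURE_LIMIT.
analysis_verdict() {
  threshold=$1
  limit=$2
  shift 2
  failed=0
  for ratio in "$@"; do
    # awk handles the floating-point comparison; exit status 0 means ratio >= threshold.
    if awk -v r="$ratio" -v t="$threshold" 'BEGIN { exit !(r >= t) }'; then
      failed=$((failed + 1))
    fi
    if [ "$failed" -gt "$limit" ]; then
      echo "Failed after $failed failed measurements"
      return 0
    fi
  done
  echo "Running with $failed failed measurements"
}
```

For example, `analysis_verdict 0.2 3 0.3 0.3 0.3 0.3` reports a failure on the fourth bad measurement. With a 10-second interval, a consistently failing canary would under this assumption be aborted and traffic returned to the stable version within roughly 40 seconds of the analysis starting.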

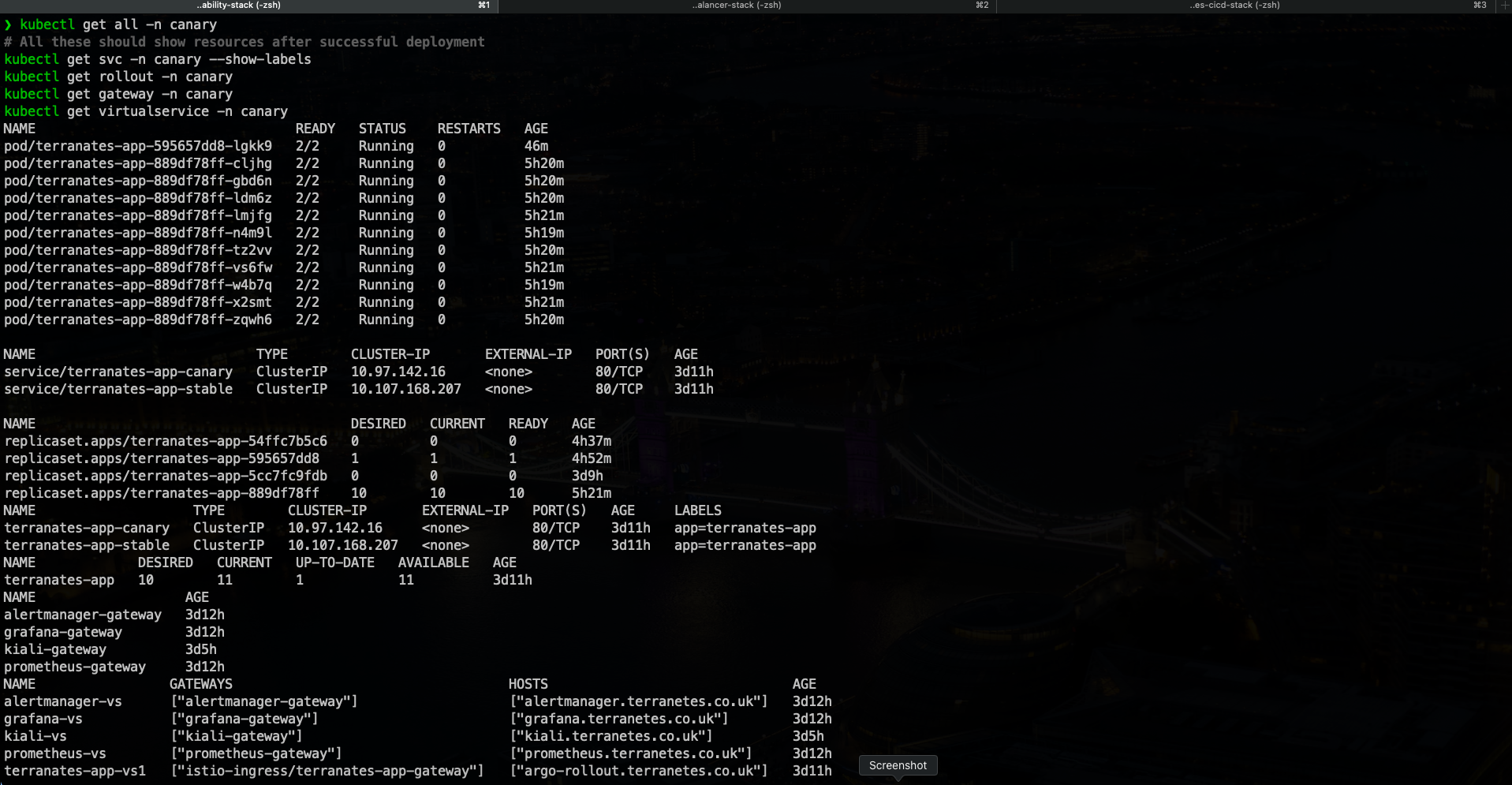

Validate deployments in “canary” namespace

kubectl get all -n canary

# All of these should list resources after a successful deployment
kubectl get svc -n canary --show-labels
kubectl get rollout -n canary
kubectl get virtualservice -n canary

# The Gateway was created in the istio-ingress namespace, not in canary
kubectl get gateway -n istio-ingress

Generate Traffic (Essential!): the analysis needs request metrics to evaluate. This example uses the hey command, as run on macOS:

hey -z 5m -q 10 https://argo-rollout.terranetes.co.uk
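For readers without hey, a rough alternative is a plain curl loop. The sketch below is far slower than hey because it sends requests one at a time, so it may not generate enough traffic for the analysis on its own; `generate_traffic` is a hypothetical helper name.

```shell
# Send COUNT sequential requests to URL and print a count per HTTP status code.
# Usage: generate_traffic URL COUNT
generate_traffic() {
  url=$1
  count=$2
  i=0
  while [ "$i" -lt "$count" ]; do
    # -s silences progress output, -o discards the body, -w prints the status code.
    curl -s -o /dev/null -w '%{http_code}\n' "$url"
    i=$((i + 1))
  done | sort | uniq -c
}
```

For example, `generate_traffic https://argo-rollout.terranetes.co.uk 200` prints one line per status code seen, which also gives a quick view of how many non-2xx responses the canary is returning.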

Set new Green image for argo rollout

kubectl argo rollouts set image terranates-app terranates-app=georgeezejiofor/argo-rollout:green -n canary

Set new Red image for argo rollout

kubectl argo rollouts set image terranates-app terranates-app=georgeezejiofor/argo-rollout:red -n canary

Set new Blue image for argo rollout

kubectl argo rollouts set image terranates-app terranates-app=georgeezejiofor/argo-rollout:blue -n canary

Set new Yellow image for argo rollout

kubectl argo rollouts set image terranates-app terranates-app=georgeezejiofor/argo-rollout:yellow -n canary

Set new Purple image for argo rollout

kubectl argo rollouts set image terranates-app terranates-app=georgeezejiofor/argo-rollout:purple -n canary

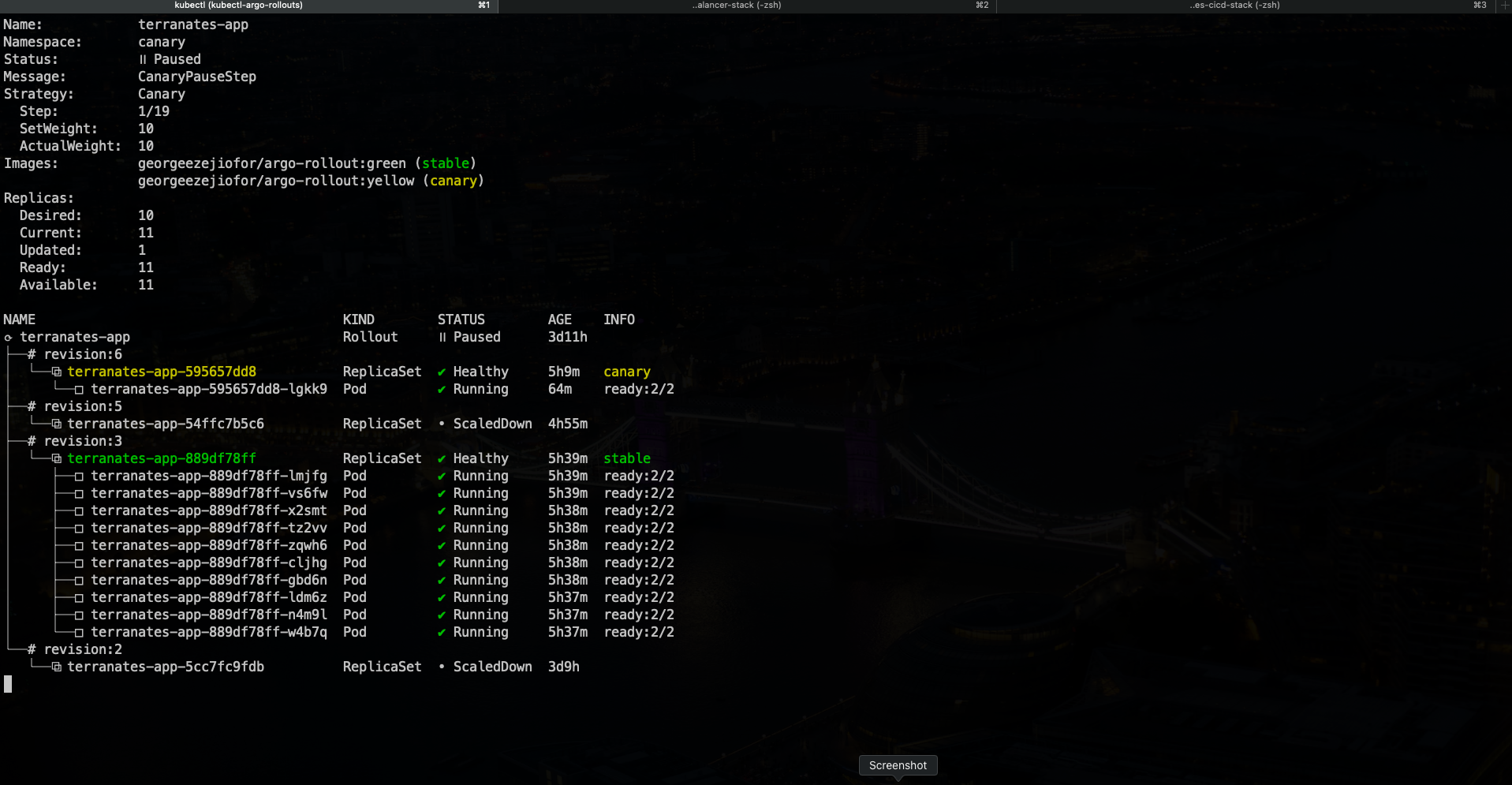

Watch argo-rollouts

kubectl argo rollouts get rollout terranates-app -n canary -w
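Because the second step of the Rollout is `pause: {}`, the rollout waits at 10% until it is promoted by hand. The wrappers below sketch the plugin commands typically used to drive a rollout from the terminal; the function names are hypothetical, and the rollout name and namespace are the ones used throughout this guide.

```shell
ROLLOUT=terranates-app
NAMESPACE=canary

# Continue past the current pause step (needed for the indefinite pause at 10%).
promote_rollout() {
  kubectl argo rollouts promote "$ROLLOUT" -n "$NAMESPACE"
}

# Stop the rollout and shift all traffic back to the stable version.
abort_rollout() {
  kubectl argo rollouts abort "$ROLLOUT" -n "$NAMESPACE"
}

# Roll the Rollout spec back to the previous revision.
undo_rollout() {
  kubectl argo rollouts undo "$ROLLOUT" -n "$NAMESPACE"
}
```

A typical manual flow would be to set a new image, watch the rollout reach the 10% pause, then run `promote_rollout` and let the timed steps and analysis take over.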

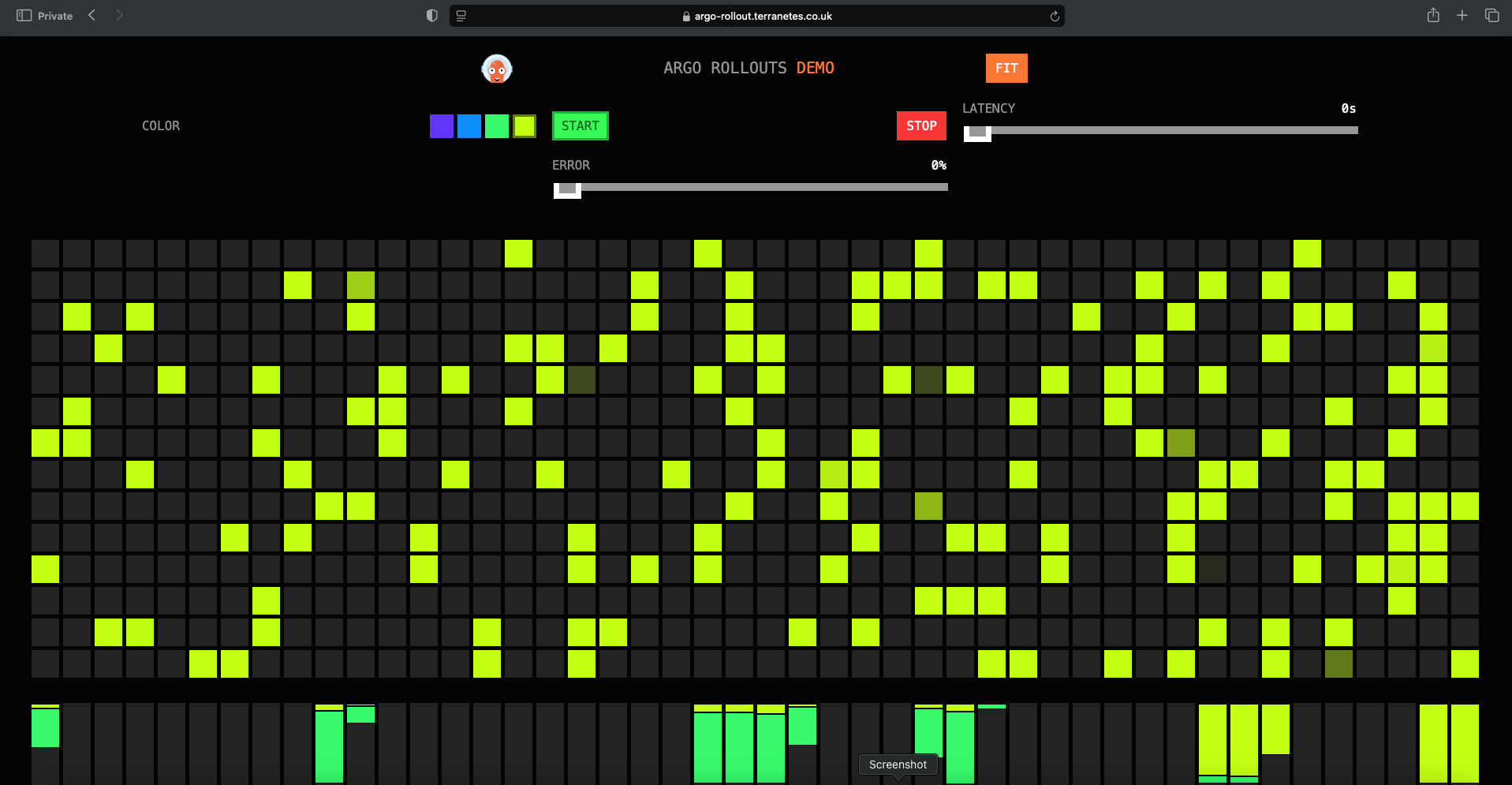

Visual Testing for Rollouts and Automatic Rollback 😊

Conclusion 🎉

Congratulations! You’ve just unlocked the power of zero-downtime deployments with Argo Rollouts and Istio! 🚀 By combining Argo Rollouts’ intelligent canary strategies with Istio’s granular traffic management, you’ve built a robust system that:

Reduces Risk 😌: Gradually shift traffic to new versions while monitoring real-time metrics.

Ensures Smooth User Experience 🌟: No downtime, no disruptions—just seamless updates.

Automates Rollbacks 🛡️: Detect issues early and revert to stable versions effortlessly.

Optimizes Traffic Control 🎛️: Istio’s dynamic routing ensures precise traffic splitting.

With this setup, you’re not just deploying code—you’re delivering confidence. 💪 Whether you’re rolling out mission-critical features or experimenting with new updates, this integration empowers you to innovate fearlessly.

Next Project: Observability Stacks 📈

Now that you've mastered canary deployments, it's time to build a powerful observability stack for deeper insights into your applications! 🚀 In this next project, we'll explore tools that provide real-time monitoring, centralized logging, and distributed tracing to help you maintain a reliable and performant system.

Observability Tools We’ll Cover 🛠️

Dive into building a powerful observability stack for deeper insights! We'll explore tools like Prometheus, Grafana, Loki, Jaeger, OpenTelemetry, Kiali, and Promtail for real-time monitoring, logging, and tracing. 🛠️

This stack will help you monitor, troubleshoot, and optimize your applications with full visibility into system behavior.

Stay tuned as we explore hands-on implementations and best practices! 🎯

Follow me on LinkedIn (George Ezejiofor) to stay updated on cloud-native observability insights! 😊

Happy deploying! 🚀🎉

Written by

George Ezejiofor

As a Senior DevSecOps Engineer, I’m dedicated to building secure, resilient, and scalable cloud-native infrastructures tailored for modern applications. With a strong focus on microservices architecture, I design solutions that empower development teams to deliver and scale applications swiftly and securely. I’m skilled in breaking down monolithic systems into agile, containerised microservices that are easy to deploy, manage, and monitor. Leveraging a suite of DevOps and DevSecOps tools—including Kubernetes, Docker, Helm, Terraform, and Jenkins—I implement CI/CD pipelines that support seamless deployments and automated testing. My expertise extends to security tools and practices that integrate vulnerability scanning, automated policy enforcement, and compliance checks directly into the SDLC, ensuring that security is built into every stage of the development process. Proficient in multi-cloud environments like AWS, Azure, and GCP, I work with tools such as Prometheus, Grafana, and ELK Stack to provide robust monitoring and logging for observability. I prioritise automation, using Ansible, GitOps workflows with ArgoCD, and IaC to streamline operations, enhance collaboration, and reduce human error. Beyond my technical work, I’m passionate about sharing knowledge through blogging, community engagement, and mentoring. I aim to help organisations realize the full potential of DevSecOps—delivering faster, more secure applications while cultivating a culture of continuous improvement and security awareness.