Nginx: The Web Server Powering the Modern Internet

Yash Patil


Introduction

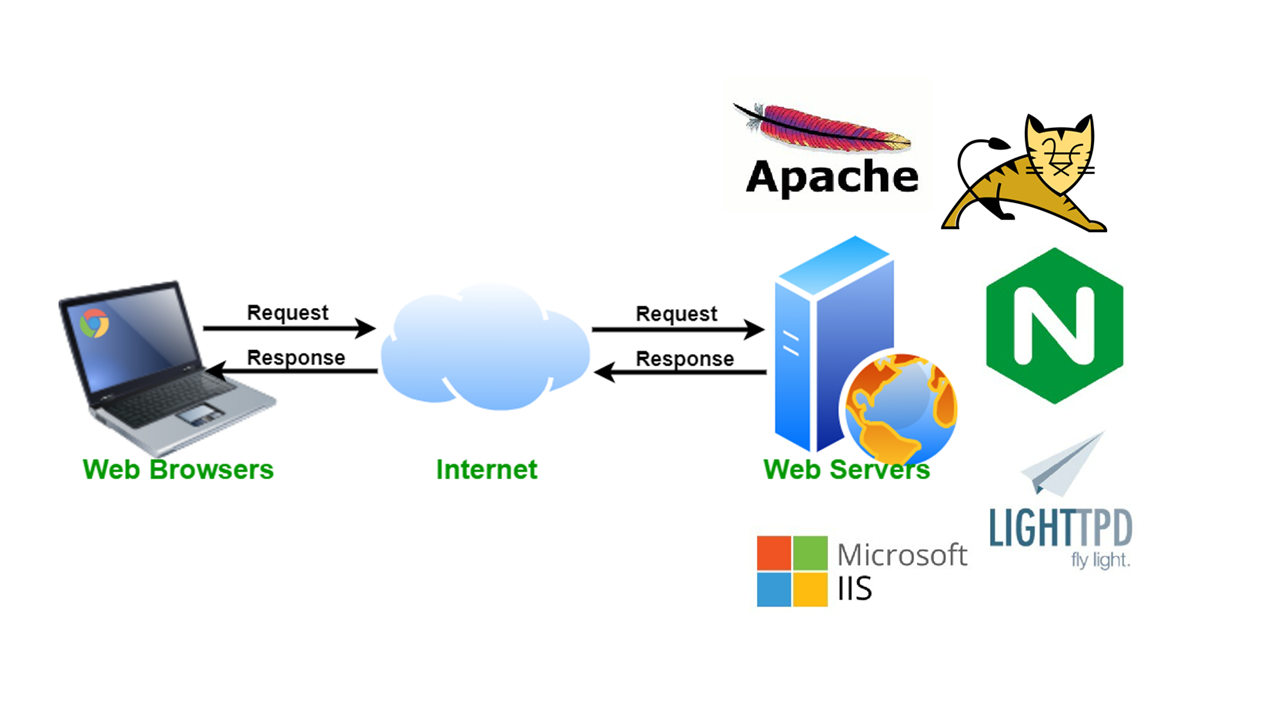

Web servers are the backbone of the internet, responsible for delivering web pages, handling user requests, and managing website traffic. Over the years, web servers have evolved to meet the demands of high-traffic applications. In this post, we’ll explore the history and significance of web servers, the rise of Nginx, its key applications and features, and why many have shifted from Apache to Nginx. Finally, we’ll conclude with a practical demonstration of setting up Nginx.

Introduction to Web Servers

Web servers enable communication between users and websites, ensuring data is processed and delivered efficiently. Since the early days of the internet, web servers have transitioned from simple static content delivery to dynamic, scalable solutions.

A Brief History

The first web server, CERN HTTPd, was created in 1990 by Tim Berners-Lee.

Apache HTTP Server emerged in 1995 and dominated for years.

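As websites grew in traffic and complexity, Apache’s model of dedicating a process or thread to each connection struggled with very large numbers of concurrent connections, which led to the public release of Nginx in 2004.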

Why Do We Need Web Servers?

Web servers play a crucial role in:

Hosting websites and applications

Handling HTTP/HTTPS requests

Load balancing and traffic management

Acting as reverse proxies for backend services

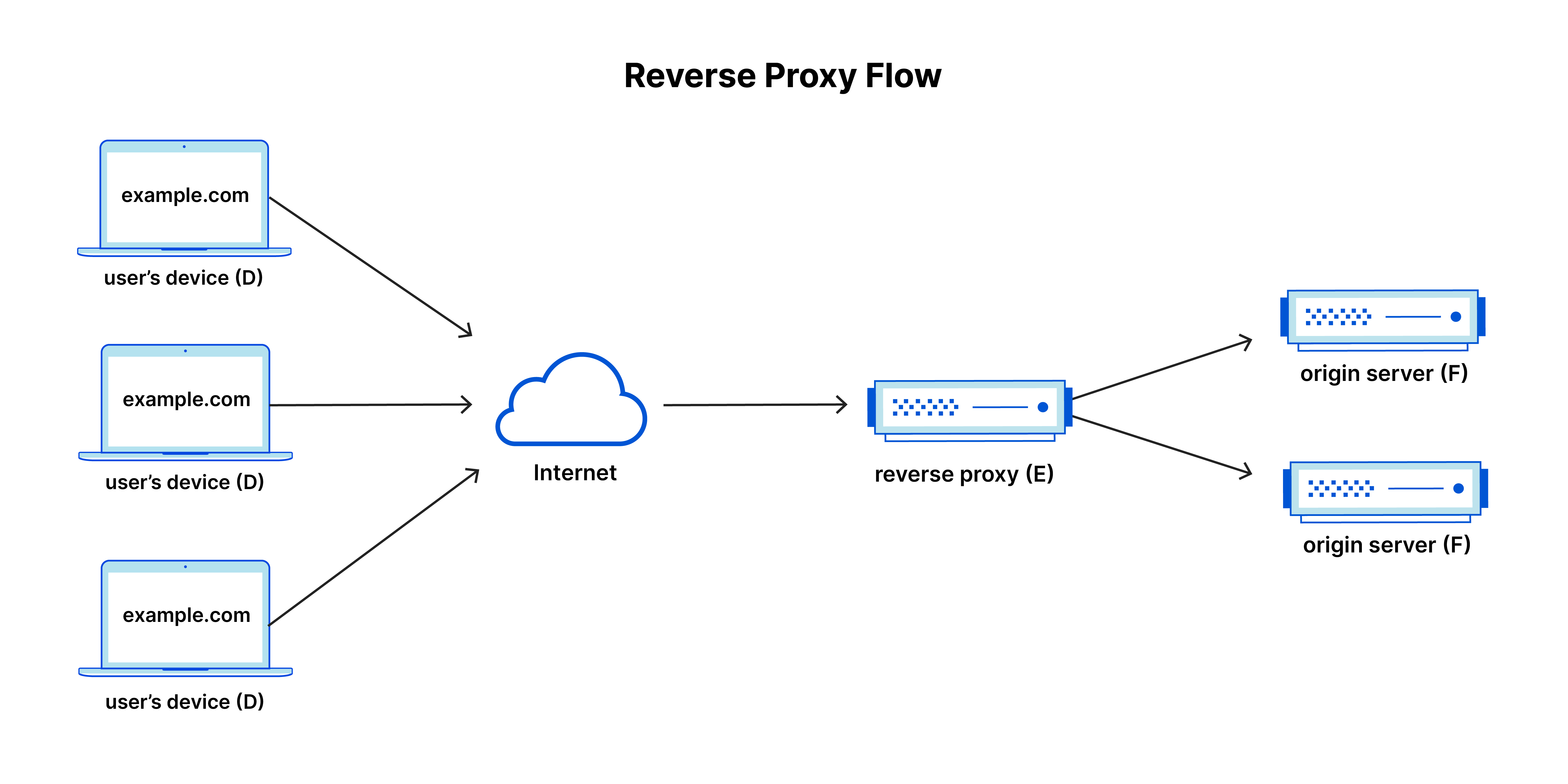

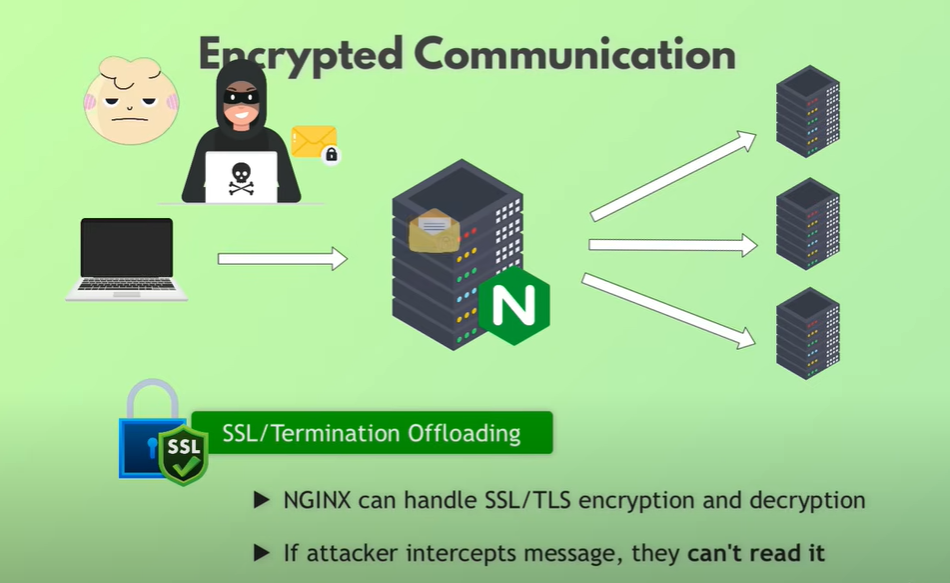

What is a Reverse Proxy?

A reverse proxy is a server that sits between clients (users) and backend servers, forwarding requests while providing additional features like load balancing, security filtering, and caching. Unlike a traditional forward proxy (which helps clients access external resources), a reverse proxy protects and optimizes traffic for backend servers.

Why Use a Reverse Proxy?

Hides backend server details, improving security.

Distributes requests to multiple servers, reducing load.

Caches content to serve users faster.

Handles SSL termination, offloading encryption overhead.

This is one of the primary reasons Nginx is widely used in modern architectures—it seamlessly acts as a reverse proxy for web applications, APIs, and microservices.

The Rise of Nginx

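Nginx was created by Igor Sysoev, who began work on it in 2002 and released it publicly in 2004, to address the C10k problem: serving ten thousand concurrent connections from a single machine. It uses an event-driven, asynchronous architecture in which a small number of worker processes each handle many connections, which typically makes it more memory-efficient under high concurrency than Apache’s traditional process- or thread-per-connection model.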

Key Applications of Nginx

Nginx is more than just a web server—it’s a powerful tool used in modern infrastructure for various critical roles.

1. Web Server

Nginx is widely used as a web server to efficiently serve static content such as HTML, CSS, JavaScript, and images.

Unlike traditional web servers, Nginx uses an event-driven architecture, making it highly efficient in handling thousands of concurrent connections.

It consumes fewer system resources, making it ideal for hosting high-traffic websites.

Many large-scale companies use Nginx as their primary web server for speed and reliability.

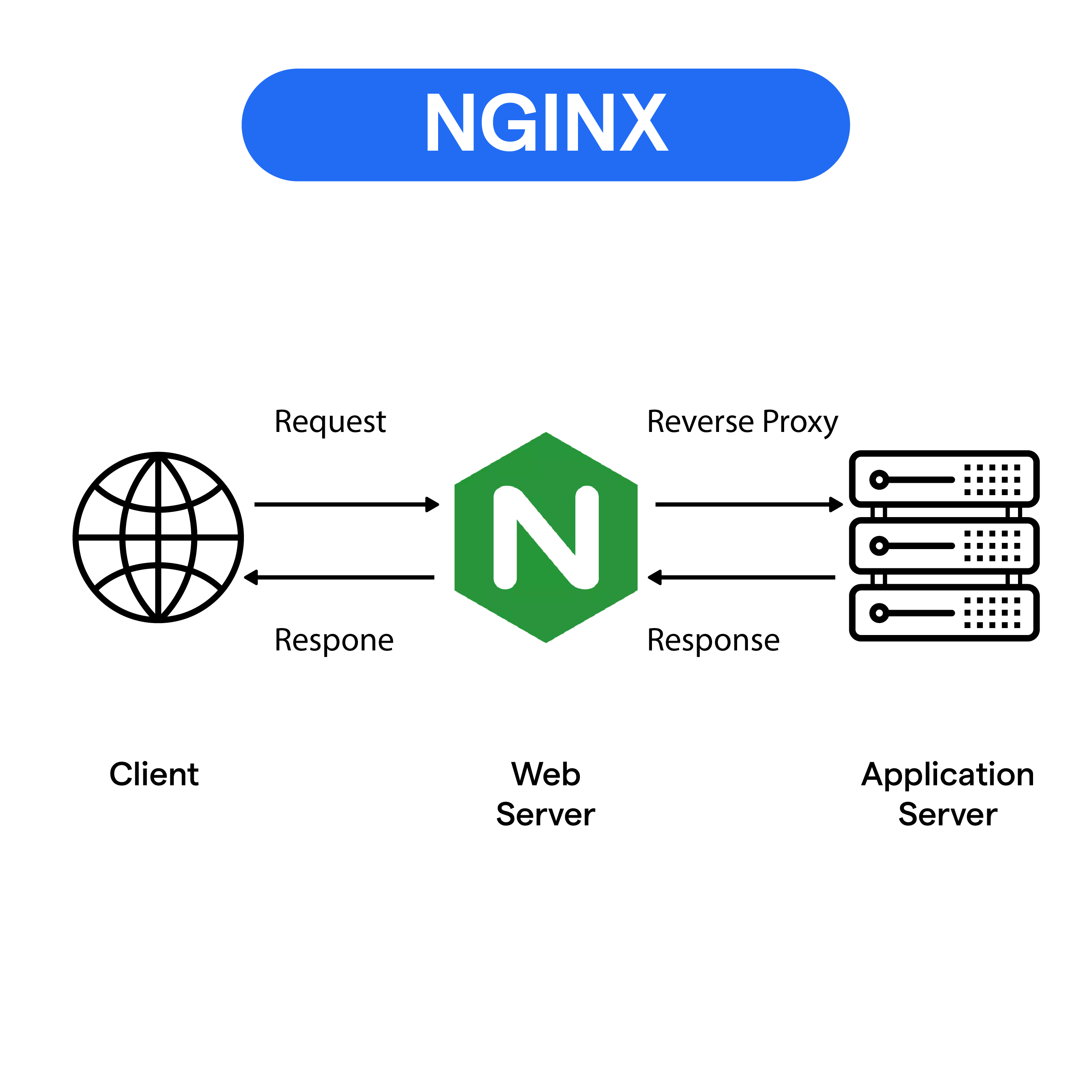

2. Reverse Proxy

A reverse proxy sits between the client (user) and backend servers, forwarding requests while providing additional benefits:

Performance Optimization – Caches static files and compresses content to reduce server load.

Security Enhancement – Hides backend server IPs, protecting against direct attacks.

SSL Termination – Offloads SSL encryption to reduce the processing burden on application servers.

Traffic Control – Filters and blocks malicious requests, which helps mitigate DDoS attacks.

Example: A company with multiple backend servers running Node.js, Python, or Java apps can use Nginx as a reverse proxy to efficiently route traffic and improve security.
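A minimal reverse-proxy sketch along these lines is shown here. The backend address 127.0.0.1:3000 and the domain example.com are placeholders standing in for any Node.js, Python, or Java app.

```nginx
events {}

http {
    server {
        listen 80;
        server_name example.com;

        location / {
            # Forward every request to the backend application.
            proxy_pass http://127.0.0.1:3000;

            # Pass the original host and client address along, since the
            # backend otherwise only sees Nginx as its client.
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_set_header X-Forwarded-Proto $scheme;
        }
    }
}
```

Clients only ever talk to port 80 on the Nginx host, so the backend’s address and port stay hidden from them.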

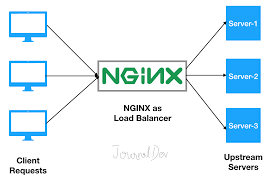

3. Load Balancer

Load balancing ensures high availability and reliability by distributing incoming requests across multiple servers.

Round Robin – Requests are distributed evenly to all available servers.

Least Connections – Directs traffic to the server with the lowest number of active connections.

IP Hashing – Ensures a client always connects to the same backend server for session persistence.

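Health Checks – Monitors server health and routes traffic away from failed servers. Open-source Nginx does this passively, by noticing failed requests to a backend; active, probe-based health checks are a feature of the commercial NGINX Plus.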

Example: Large services such as Netflix and Airbnb are reported to use Nginx in their stacks to handle very large numbers of concurrent users.
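The balancing methods listed above map onto the upstream block roughly as in this sketch. The pool name app_backend and the three local addresses are hypothetical.

```nginx
events {}

http {
    upstream app_backend {
        # Round robin is the default when no method is named.
        # Uncomment exactly one of these to switch methods:
        # least_conn;
        # ip_hash;

        # Passive health checking: after 3 failed attempts within 30s,
        # the server is skipped for the next 30s.
        server 127.0.0.1:3001 max_fails=3 fail_timeout=30s;
        server 127.0.0.1:3002 max_fails=3 fail_timeout=30s;
        server 127.0.0.1:3003 max_fails=3 fail_timeout=30s;
    }

    server {
        listen 80;

        location / {
            # Requests are spread across the servers in the pool.
            proxy_pass http://app_backend;
        }
    }
}
```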

4. Security & Caching

Nginx enhances security and improves performance through caching mechanisms:

DDoS Protection – Filters out bad requests and limits excessive traffic from single IPs.

Web Application Firewall (WAF) – With an add-on module such as ModSecurity, Nginx can help protect against SQL injection, XSS, and other common attacks.

Content Caching – Stores frequently accessed content, reducing backend server load and speeding up response times.

Rate Limiting – Prevents brute-force attacks and abuse by limiting the number of requests per user.

Example: E-commerce websites like Amazon and Flipkart use caching to deliver pages faster and prevent overload during peak traffic.
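Rate limiting and content caching might be combined as in the following sketch. The zone names, cache path, limits, and backend address are all illustrative assumptions rather than recommended values.

```nginx
events {}

http {
    # Track clients by IP; allow an average of 10 requests per second each.
    limit_req_zone $binary_remote_addr zone=per_ip:10m rate=10r/s;

    # On-disk response cache; the path and zone name are placeholders.
    proxy_cache_path /var/cache/nginx/demo keys_zone=demo_cache:10m max_size=100m inactive=60m;

    server {
        listen 80;

        location / {
            # Permit bursts of up to 20 extra requests; anything beyond
            # that is rejected (with status 503 by default).
            limit_req zone=per_ip burst=20 nodelay;

            # Serve repeat requests from the cache when possible.
            proxy_cache demo_cache;
            # Keep successful (200) responses for 10 minutes.
            proxy_cache_valid 200 10m;

            proxy_pass http://127.0.0.1:3000;
        }
    }
}
```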

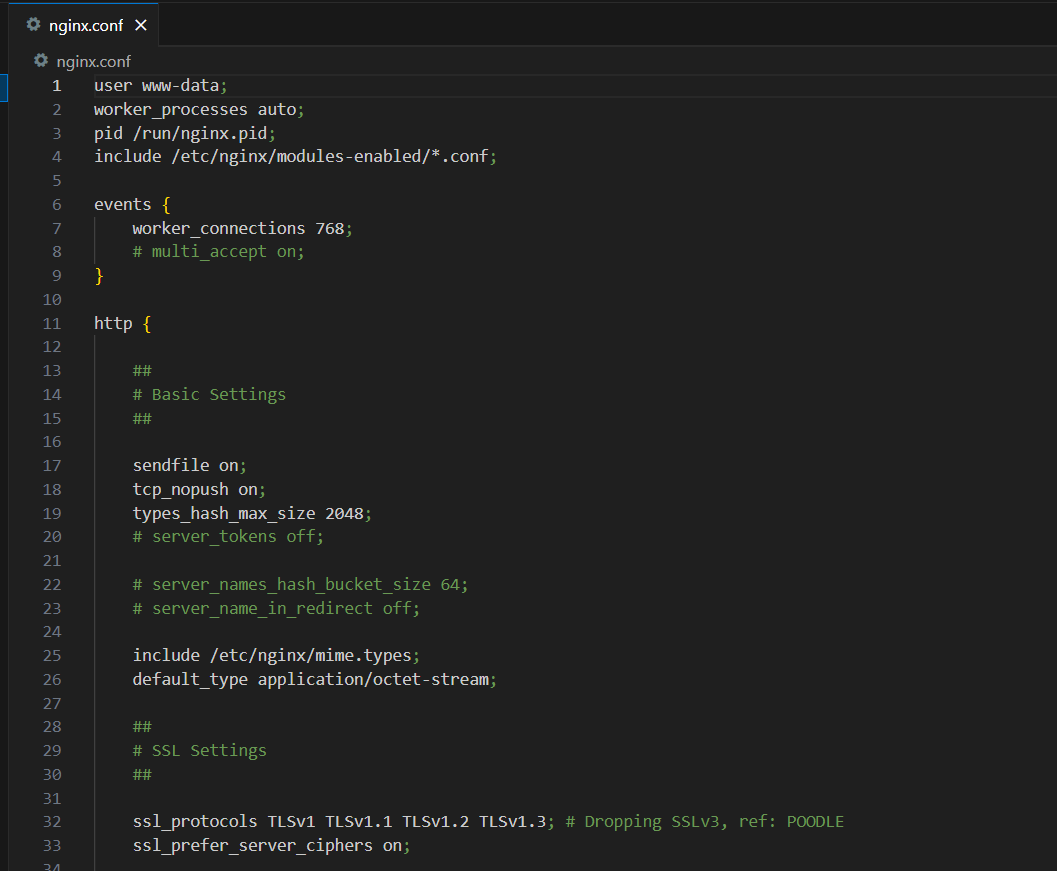

How does Nginx manage all this, and how do you configure it?

You might be wondering how you make Nginx do all these things. How do you tell it whether to act as a web server or a proxy server, and how do you configure caching, SSL traffic, and everything else?

That’s where the Nginx configuration file comes in. It lets you define all of this with so-called directives. Whether Nginx acts as a web server or a proxy server simply depends on whether you configure it to handle requests itself or to forward them to other servers.

The main config file is typically named “nginx.conf” and is located in /etc/nginx.

Let’s install Nginx and check out its default configuration file!

sudo apt update

sudo apt install nginx

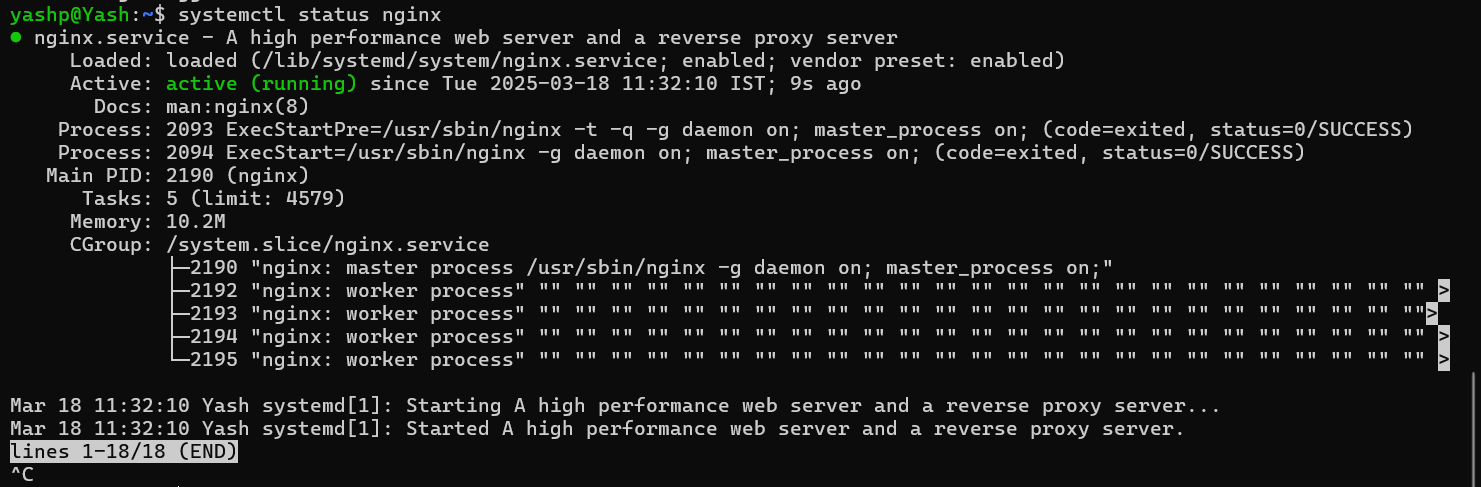

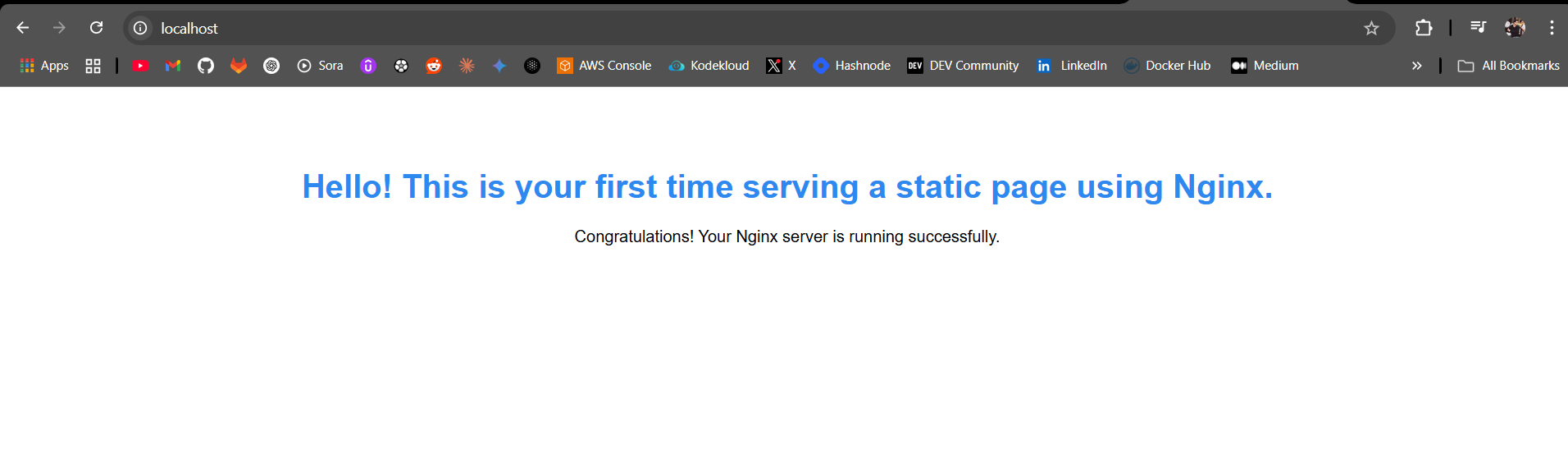

As you can see, our Nginx server is up and running, so let’s head over to the browser and see what the default configuration serves!

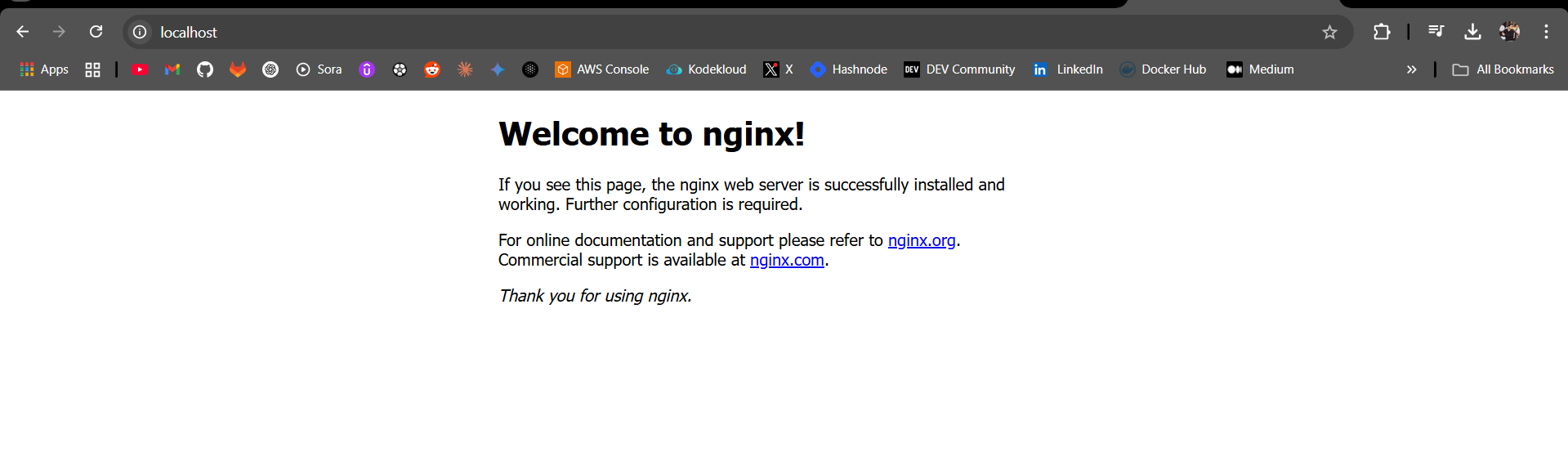

The good old “Welcome to nginx!” message, of course. Now let’s use Nginx to showcase its applications as a web server, a proxy, and a load balancer.

Let’s write an Nginx configuration file from scratch!

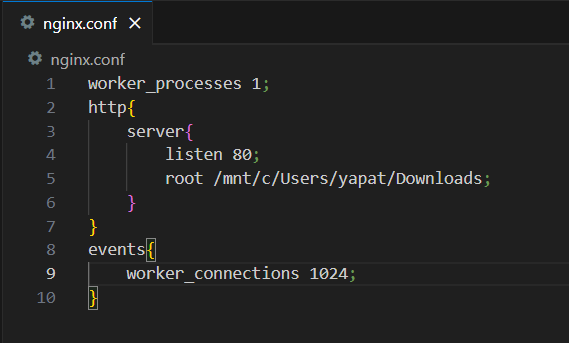

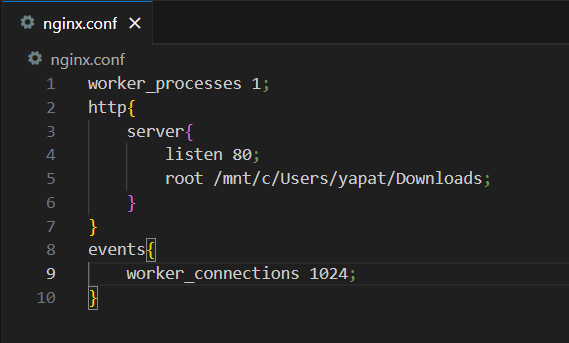

Here’s what the default Nginx configuration file looks like:

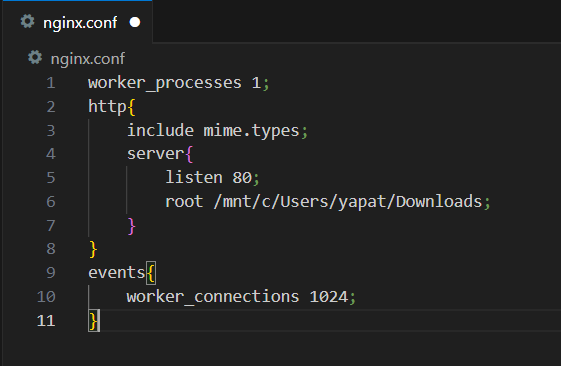

Here is a very basic configuration file I made to demonstrate Nginx serving a static page:

Make sure you reload Nginx so the new configuration takes effect:
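The listing itself is not reproduced in this text, so the following is only a minimal sketch of what such a file might look like, pieced together from the directives explained later in this post. The root path /var/www/demo is a placeholder and not necessarily the author's actual path.

```nginx
# Assumed reconstruction for illustration, not the author's exact file.
worker_processes 1;

events {
    worker_connections 1024;
}

http {
    server {
        # Answer requests arriving at localhost:80.
        listen 80;
        server_name localhost;

        # Placeholder path: point this at the directory that holds index.html.
        root /var/www/demo;
    }
}
```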

nginx -s reload

And there you go, you have just served your first static web page using Nginx locally!

Let us understand what each field actually means in that configuration file.

- worker_processes

This is a directive. It sets the number of worker processes Nginx starts.

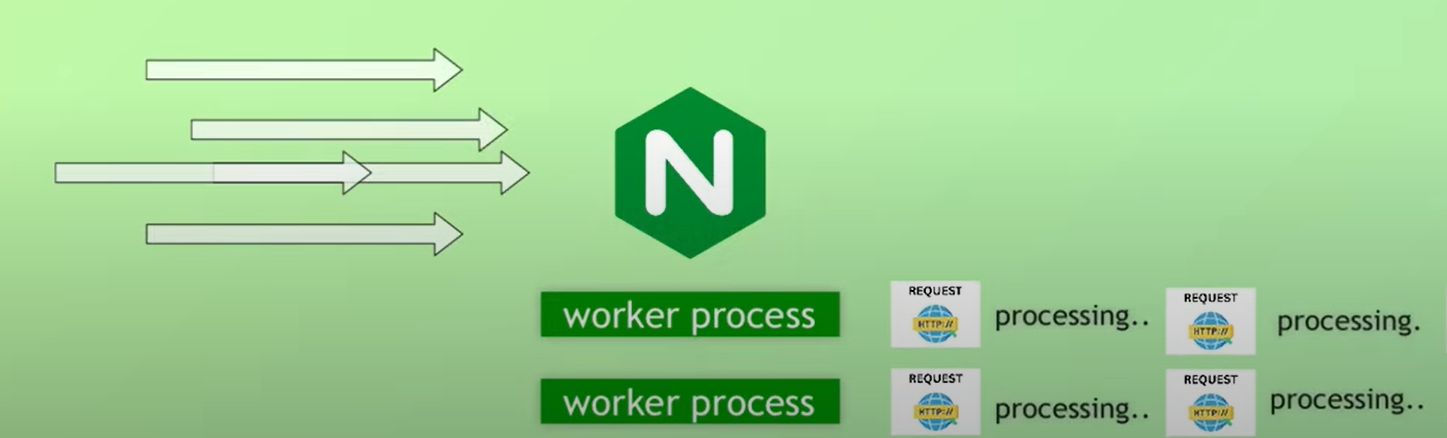

What is a Worker Process?

Worker processes are the ones doing the work of receiving and processing requests from clients and browsers.

The number of worker processes directly influences how well Nginx handles heavy traffic. Adding workers helps up to the number of CPU cores; beyond that, extra workers mostly compete for the same cores.

This should be tuned according to the server’s hardware (CPU cores) and the expected traffic load. In production, it is common practice to set the number of worker processes equal to the number of CPU cores, to take advantage of parallel processing and handle traffic efficiently.

If we set it to auto, Nginx automatically detects the number of CPU cores available on the server and starts the corresponding number of worker processes.

- worker_connections

Defined in the events block, this directive sets how many simultaneous connections each worker process may have open. For example, worker_connections 1024; means the single worker process we defined can hold up to 1024 connections at once. Note that this count includes connections to proxied backends, not only connections from clients.

These two directives act as a kind of meta configuration for our Nginx server: how it should start and how it should handle connections in general.
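The tuning advice above might translate into something like the following for the top of nginx.conf. This is a sketch under the assumption of a typical multi-core production server; the value 1024 is just the example figure used above.

```nginx
# Start one worker per CPU core, detected when Nginx starts.
worker_processes auto;

events {
    # Upper bound on simultaneous connections per worker
    # (client connections and connections to backends both count).
    worker_connections 1024;
}
```

As a rough rule, the total number of connections Nginx can hold is worker_processes multiplied by worker_connections.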

- http{}

The core logic of the Nginx server goes into the http block. This is where we define how to handle HTTP requests. Inside http, the main configuration of a site lives in the server{} block.

The server block defines which port Nginx listens on for client requests, which domain, subdomain, or IP address the configuration applies to, and how incoming requests are routed.
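To make the role of the server block concrete, here is a small sketch with two server blocks on the same port, selected by domain name. The domains and paths are placeholders chosen for illustration.

```nginx
events {}

http {
    # Requests whose Host header is example.com or www.example.com land here.
    server {
        listen 80;
        server_name example.com www.example.com;
        root /var/www/example;
    }

    # A subdomain gets its own server block and its own content.
    server {
        listen 80;
        server_name blog.example.com;
        root /var/www/blog;
    }
}
```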

- root

This directive is the file path of the static content to serve when a request arrives on the port given in the listen directive.

In the context of our configuration file, a request to localhost:80 is served with the content found under the path given in the root directive:

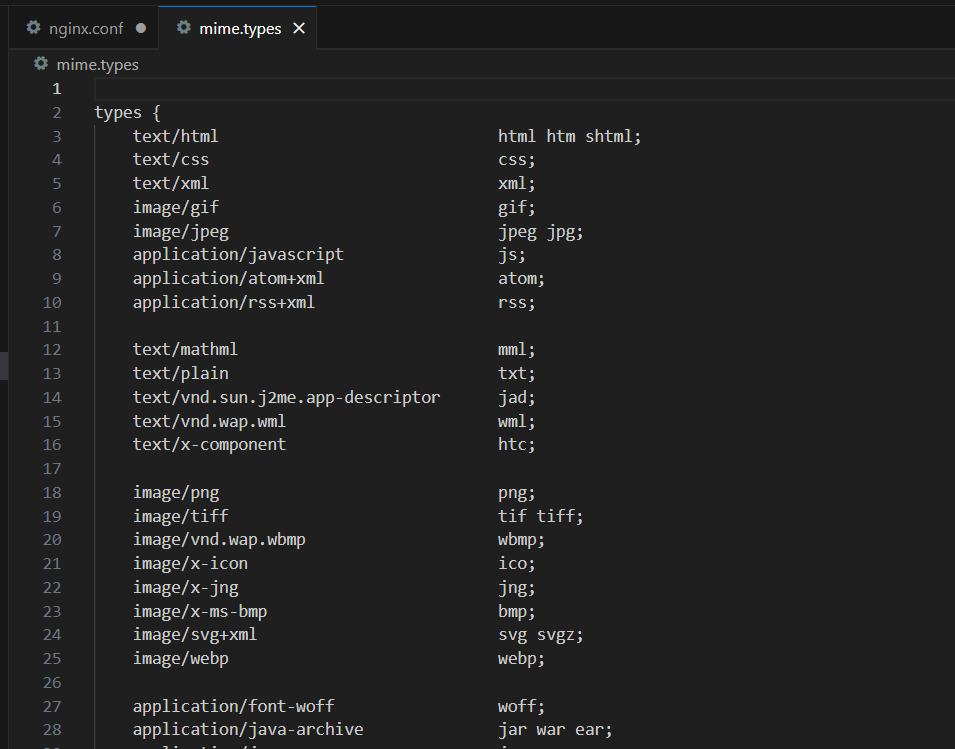

Another important piece of configuration is telling the client what type of file it is receiving. When Nginx returns a response, whether it is HTML, a stylesheet, or an image, it should include the file’s type in the response as well. This helps the client or browser render and process the file correctly.

To do so, we add another directive, include mime.types;, inside the http{} block. The mime.types file maps file extensions to MIME types, which Nginx then sends in the “Content-Type” header when returning a file as a response.
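A minimal sketch of where the include goes, assuming the standard mime.types file that ships alongside nginx.conf. The default_type line is an optional addition not mentioned above, and the root path is a placeholder.

```nginx
events {}

http {
    # A relative path is resolved against the configuration directory,
    # for example /etc/nginx/mime.types.
    include mime.types;

    # Fallback for file extensions that mime.types does not list.
    default_type application/octet-stream;

    server {
        listen 80;
        root /var/www/demo;
    }
}
```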

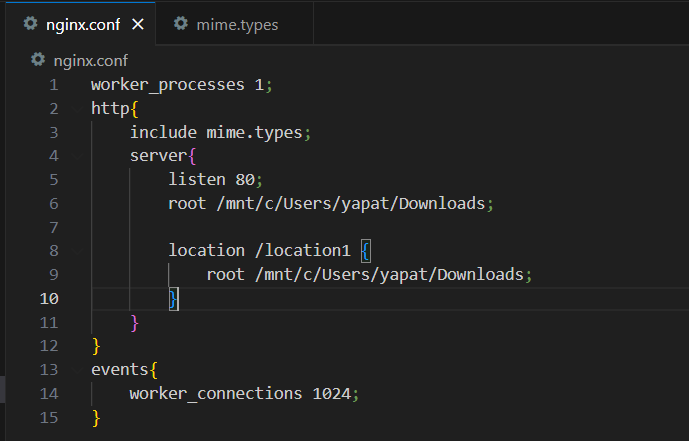

- location{} block

This is a very important concept in Nginx because it lets us define separate endpoints and serve different content for each.

Let’s add another endpoint to our web server and serve another HTML page.

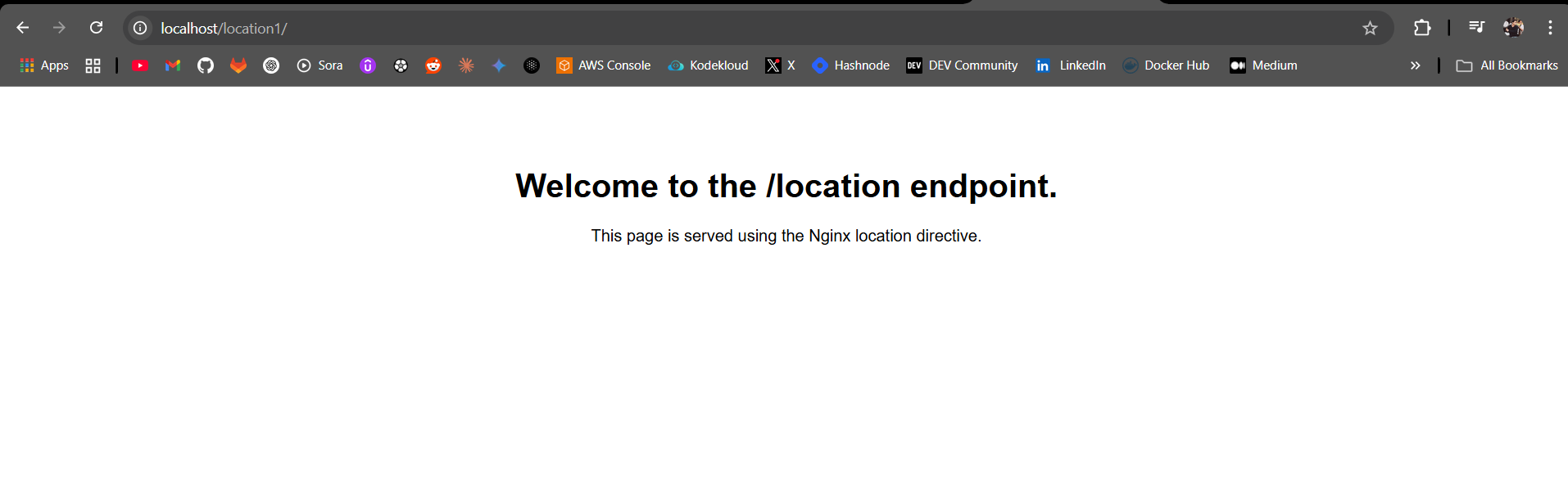

The location{} block above defines an endpoint, /location1. Nginx will look for a location1 folder inside the root path and serve its index.html page. And once you hit the localhost/location1/ path, voilà!
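In text form, such a location block might look like the sketch below. The root path is a placeholder, and the index line only restates the Nginx default for clarity.

```nginx
events {}

http {
    include mime.types;

    server {
        listen 80;
        root /var/www/demo;

        # A request for /location1/ is mapped to <root>/location1/index.html.
        location /location1 {
            # index.html is already the default; shown here for clarity.
            index index.html;
        }
    }
}
```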

Keep in mind, though, that Nginx always looks for an index.html page unless told otherwise. Not flexible, huh? Let’s configure that:

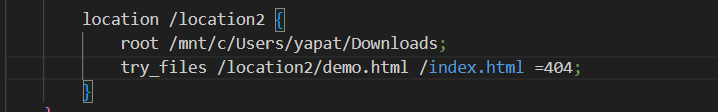

Let’s add another location{} block and create another HTML page, but this time with a different name instead of index.html:

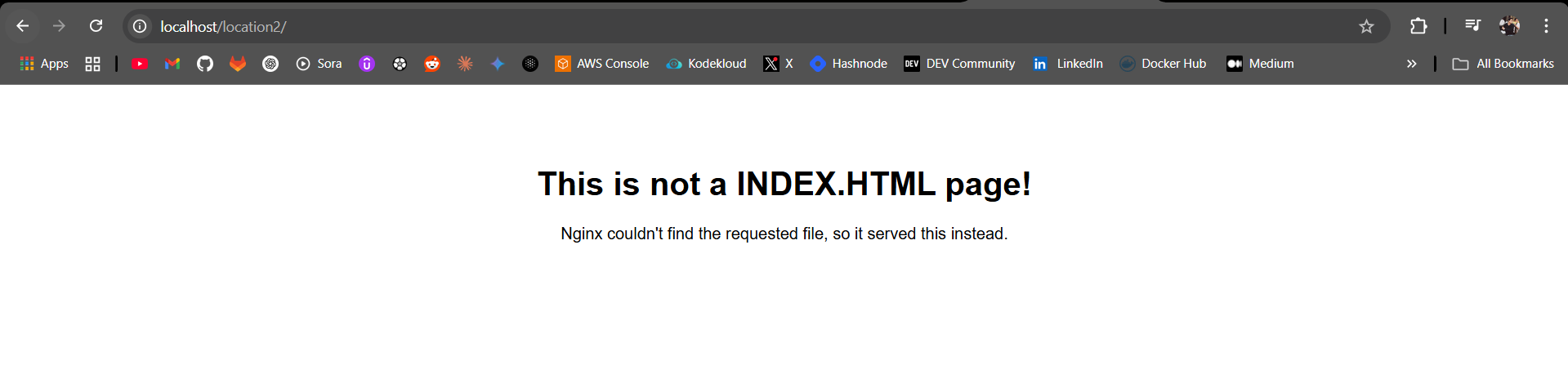

Normally, a request to the /location2 endpoint would make Nginx look for an index.html file. Because we have included the try_files directive, Nginx instead serves the demo.html file under ${root}/location2/. If that file doesn’t exist, it serves the index.html file under the root path, and if neither exists it returns a 404 error!
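The fallback chain described above can be sketched as follows. The root path is a placeholder; the file names follow the description in this post.

```nginx
events {}

http {
    include mime.types;

    server {
        listen 80;
        root /var/www/demo;

        location /location2 {
            # Checked in order, relative to root:
            #   1. <root>/location2/demo.html
            #   2. <root>/index.html
            # If neither file exists, respond with 404.
            try_files /location2/demo.html /index.html =404;
        }
    }
}
```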

- Redirection

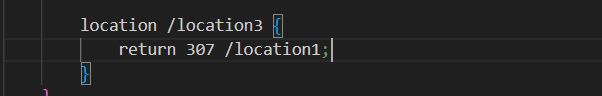

In this scenario, when a user hits a particular endpoint, we redirect them to an existing endpoint.

Hence, any time a user requests the /location3 endpoint, Nginx returns a 307 (Temporary Redirect) response pointing to the /location1 endpoint.
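As a text sketch, the redirect can be written with the return directive. The surrounding server settings and root path are placeholders.

```nginx
events {}

http {
    server {
        listen 80;
        root /var/www/demo;

        location /location3 {
            # 307 = Temporary Redirect; the browser is sent to /location1.
            return 307 /location1;
        }
    }
}
```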

Nginx Load Balancing and Reverse Proxy Application

To demonstrate Nginx’s load balancing and reverse proxy functions, I have already built a project in which Nginx acts as a load balancer, distributing traffic across three upstream Docker containers running a Node.js application. The architecture ensures efficient traffic handling, scalability, and fault tolerance.

Do check out its GitHub repository and blog post.

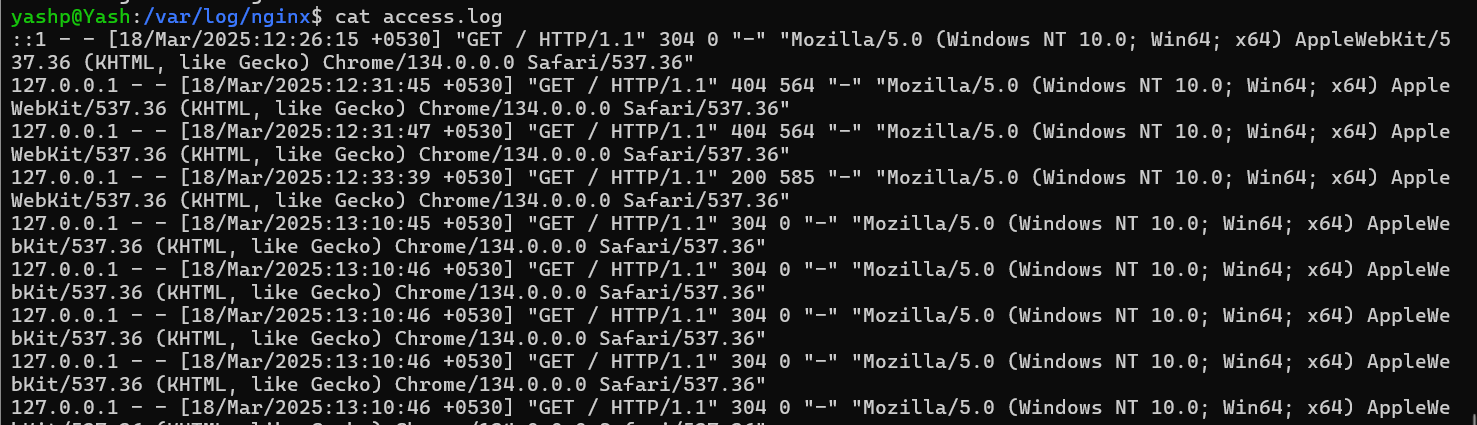

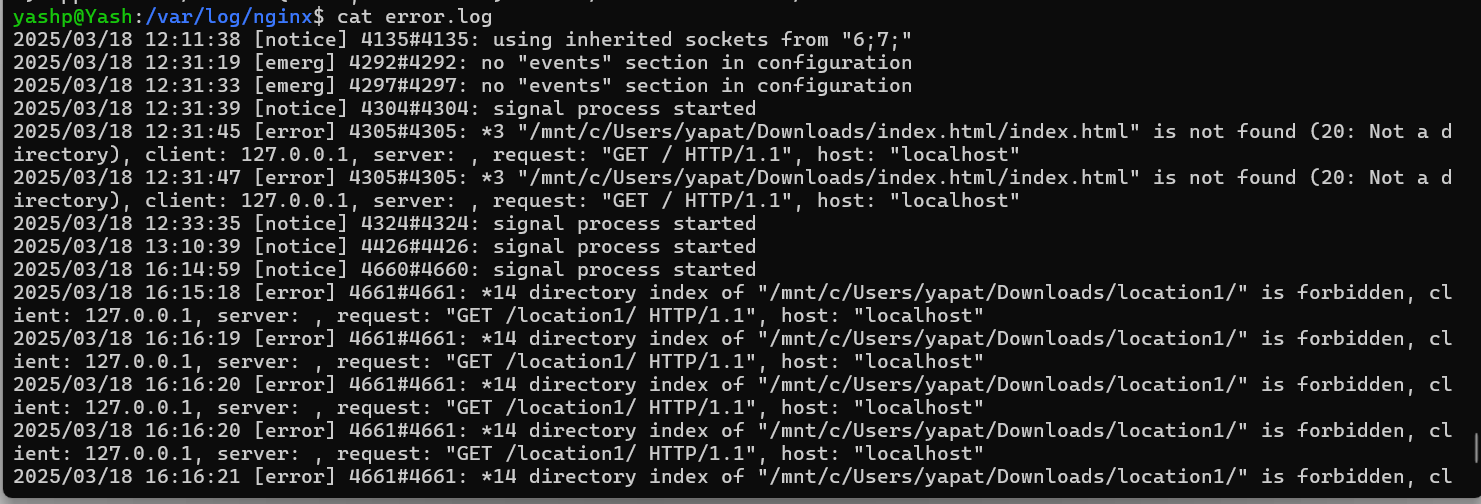

Nginx Access and Error Logs

Nginx’s access log can be used to inspect the requests coming into the server. It records the type of HTTP request (GET, POST, DELETE, etc.), the endpoint that was requested, the client’s IP address, and more. Exactly which details are recorded depends on the configured log format; some of them, such as the user agent, are taken from the request’s headers.

Nginx Server’s Error Logs can be used to assess what errors were encountered while serving a request to the client.
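Both logs are controlled by directives in the configuration file. The sketch below assumes the usual /var/log/nginx location, and the format name demo is made up for this example.

```nginx
events {}

http {
    # Custom format: client IP, time, request line, status,
    # response size, and the User-Agent request header.
    log_format demo '$remote_addr [$time_local] "$request" '
                    '$status $body_bytes_sent "$http_user_agent"';

    # Write one line per request using the format above.
    access_log /var/log/nginx/access.log demo;

    # Record problems at severity "warn" and above.
    error_log /var/log/nginx/error.log warn;

    server {
        listen 80;
        root /var/www/demo;
    }
}
```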

Conclusion

Nginx has revolutionized web serving by offering a high-performance, lightweight, and scalable alternative to traditional web servers like Apache. Its versatility as a reverse proxy, load balancer, and caching layer makes it a critical component in modern web architectures. The shift from Apache to Nginx highlights the growing demand for speed, efficiency, and better resource management.

By understanding how Nginx works and setting up a practical demo, you now have a strong foundation to explore its advanced features, optimize configurations, and use it in real-world projects. Whether you're deploying static sites, hosting APIs, or managing traffic at scale, Nginx remains a powerful tool for developers and DevOps engineers alike. 🚀
