Understanding Knowledge Graphs and Graph RAG with TypeScript, Neo4j, and Embeddings

Rodolfo Yabut

What Are Knowledge Graphs?

A knowledge graph (KG) is a structured representation of information that organizes entities (e.g., people, places, or concepts) and their relationships in a graph format. Unlike traditional databases, knowledge graphs excel at modeling interconnected, complex data with a focus on relationships, making them invaluable for semantic reasoning and efficient information retrieval.

At its core, a knowledge graph consists of:

Nodes: Represent entities like articles, people, or concepts.

Edges: Represent relationships between entities, such as "authored by" or "cites."

Properties/Attributes: Metadata associated with nodes or edges, such as publication dates or confidence scores.

Key Features of Knowledge Graphs:

Semantic Understanding: Ontologies or schemas define relationships and entity types, enabling machines to interpret the meaning of data.

Flexible Schema: Unlike rigid relational databases, knowledge graphs adapt dynamically as new relationships or entities emerge.

Graph Traversal: Queries can traverse relationships to uncover indirect or multi-hop connections.
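To make nodes, edges, properties, and multi-hop traversal a bit more concrete, here is a minimal in-memory sketch. The `GraphNode` and `GraphEdge` types, the sample data, and the `reachableWithinHops` helper are all hypothetical names made up for illustration; a real knowledge graph would live in a graph database rather than in arrays.

```typescript
// Hypothetical in-memory model, for illustration only.
type GraphNode = { id: string; label: string; properties: Record<string, string> };
type GraphEdge = { from: string; to: string; type: string };

const nodes: GraphNode[] = [
  { id: "a1", label: "Article", properties: { title: "Intro to Graphs" } },
  { id: "p1", label: "Person", properties: { fullName: "Ada" } },
  { id: "a2", label: "Article", properties: { title: "Graph Traversal" } },
];

const edges: GraphEdge[] = [
  { from: "a1", to: "p1", type: "AUTHORED_BY" },
  { from: "a2", to: "a1", type: "CITES" },
];

// Breadth-first traversal that follows edges in their stored direction and
// returns the ids reachable within `maxHops` hops of `startId`.
function reachableWithinHops(startId: string, maxHops: number, allEdges: GraphEdge[]): string[] {
  const visited: Record<string, boolean> = {};
  visited[startId] = true;
  let frontier: string[] = [startId];
  const reached: string[] = [];
  for (let hop = 0; hop < maxHops; hop++) {
    const next: string[] = [];
    for (const current of frontier) {
      for (const edge of allEdges) {
        if (edge.from === current && !visited[edge.to]) {
          visited[edge.to] = true;
          reached.push(edge.to);
          next.push(edge.to);
        }
      }
    }
    frontier = next;
  }
  return reached;
}

const oneHop = reachableWithinHops("a2", 1, edges); // the cited article only
const twoHops = reachableWithinHops("a2", 2, edges); // cited article plus its author
const twoHopLabels = twoHops.map((id) => nodes.filter((n) => n.id === id)[0].label);
```

The second call is the "indirect connection" case: starting from one article, it reaches the author of the article it cites.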

What Is Retrieval-Augmented Generation (RAG)?

Retrieval-Augmented Generation (RAG) combines traditional information retrieval with generative AI models (e.g., OpenAI’s GPT) to produce accurate, contextually grounded responses. The process enhances the quality of generated content by grounding it in external, factual data.

How RAG Works:

Retrieval: A query triggers a search across a knowledge base, document store, or vector database to retrieve relevant data (e.g., text chunks, documents, or graph nodes).

Augmentation: The retrieved information is passed to a generative model as additional context.

Generation: The model generates a response, incorporating both the retrieved data and its internal knowledge.
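The three steps above can be sketched as a toy pipeline. Everything here is a stand-in I made up for illustration: `retrieve` uses naive keyword matching instead of a vector or graph search, and `generate` is a placeholder where a real system would call an LLM.

```typescript
// Toy retrieve -> augment -> generate pipeline (all names are hypothetical).
type Doc = { id: string; text: string };

const knowledgeBase: Doc[] = [
  { id: "d1", text: "Neo4j is a graph database queried with Cypher." },
  { id: "d2", text: "Embeddings map text to vectors." },
  { id: "d3", text: "Cypher uses MATCH to find graph patterns." },
];

// Retrieval: count how many query terms appear in each document.
function retrieve(query: string, docs: Doc[], topK: number): Doc[] {
  const terms = query.toLowerCase().split(/\W+/).filter((t) => t.length > 0);
  return docs
    .map((doc) => ({
      doc,
      score: terms.filter((t) => doc.text.toLowerCase().indexOf(t) !== -1).length,
    }))
    .filter((scored) => scored.score > 0)
    .sort((a, b) => b.score - a.score)
    .slice(0, topK)
    .map((scored) => scored.doc);
}

// Augmentation: place the retrieved text in front of the question.
function augment(query: string, retrieved: Doc[]): string {
  const context = retrieved.map((d) => `[${d.id}] ${d.text}`).join("\n");
  return `Context:\n${context}\n\nQuestion: ${query}`;
}

// Generation: placeholder for the model call.
function generate(augmentedPrompt: string): string {
  return `(model output for a prompt of ${augmentedPrompt.length} characters)`;
}

const question = "What is Cypher?";
const retrievedDocs = retrieve(question, knowledgeBase, 2);
const augmentedPrompt = augment(question, retrievedDocs);
const modelAnswer = generate(augmentedPrompt);
```

The point of the sketch is the shape of the flow: the model only ever sees the question plus whatever the retrieval step chose to hand it.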

Why do we use RAG?

Improved Accuracy: Grounding responses in external data reduces reliance on potentially outdated or incomplete internal model knowledge.

Dynamic Updates: Retrieval allows the system to incorporate new information without retraining the model.

Why use knowledge graphs + RAG? (Graph RAG)

Integrating knowledge graphs with RAG creates a powerful paradigm: Graph RAG. This approach leverages the graph structure to enhance retrieval and reasoning capabilities in several ways:

Contextual Retrieval: Knowledge graphs enable multi-hop reasoning, retrieving not only directly relevant nodes but also related entities through graph traversal. For example, a query about a medical condition might retrieve related symptoms, treatments, and case studies.

Semantic Enrichment: Graph-based relationships provide richer context, leading to more coherent and accurate responses.

Dynamic Updates: Knowledge graphs can continuously evolve with new entities and relationships, ensuring the RAG system remains up-to-date.

Enhanced Explainability: Graph-based retrieval offers a clear, interpretable path of how results were derived, critical in high-stakes domains like healthcare or finance.

High-Level Example Structure of a Knowledge Graph

Below is a high-level diagram illustrating the structure of a knowledge graph. Don’t worry too much about understanding the data model here. It’s just an illustration.

graph TD
    P[Project] -->|Has Task| T1[Task 1]
    P -->|Has Task| T2[Task 2]
    T1 -->|Assigned To| U1[User A]
    T2 -->|Assigned To| U2[User B]
    U1 -->|Member Of| G1[Team X]
    U2 -->|Member Of| G2[Team Y]
    T1 -->|Depends On| T2
    P -->|Uses| R[Resource]
    R -->|Provided By| V[Vendor]

This structure supports queries such as:

Find all tasks assigned to users in a specific team

Retrieve dependencies between tasks in a project

Identify vendors supplying resources to a project
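To show what two of these queries look like as traversals, here is a small sketch that copies the diagram's edges into an array and walks them by hand. The `Edge` type, the upper-case relationship names, and both helper functions are my own illustrative choices; a graph database would express the same thing as a pattern-matching query.

```typescript
// In-memory copy of the diagram's edges (names are illustrative).
type Edge = { from: string; type: string; to: string };

const projectEdges: Edge[] = [
  { from: "Project", type: "HAS_TASK", to: "Task 1" },
  { from: "Project", type: "HAS_TASK", to: "Task 2" },
  { from: "Task 1", type: "ASSIGNED_TO", to: "User A" },
  { from: "Task 2", type: "ASSIGNED_TO", to: "User B" },
  { from: "User A", type: "MEMBER_OF", to: "Team X" },
  { from: "User B", type: "MEMBER_OF", to: "Team Y" },
  { from: "Task 1", type: "DEPENDS_ON", to: "Task 2" },
  { from: "Project", type: "USES", to: "Resource" },
  { from: "Resource", type: "PROVIDED_BY", to: "Vendor" },
];

// Two-hop query: Task -ASSIGNED_TO-> User -MEMBER_OF-> team.
function tasksForTeam(team: string, all: Edge[]): string[] {
  const members = all
    .filter((e) => e.type === "MEMBER_OF" && e.to === team)
    .map((e) => e.from);
  return all
    .filter((e) => e.type === "ASSIGNED_TO" && members.indexOf(e.to) !== -1)
    .map((e) => e.from);
}

// Two-hop query: Project -USES-> Resource -PROVIDED_BY-> Vendor.
function vendorsForProject(project: string, all: Edge[]): string[] {
  const resources = all
    .filter((e) => e.type === "USES" && e.from === project)
    .map((e) => e.to);
  return all
    .filter((e) => e.type === "PROVIDED_BY" && resources.indexOf(e.from) !== -1)
    .map((e) => e.to);
}

const teamXTasks = tasksForTeam("Team X", projectEdges);
const projectVendors = vendorsForProject("Project", projectEdges);
```

Both helpers hop across two relationships, which is exactly the kind of question that gets awkward with joins and stays readable in a graph.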

Building a Knowledge Graph with TypeScript and Neo4j

This section explores how to construct a knowledge graph using Neo4j as the graph database and TypeScript for implementation. The focus will be on linking articles, their constituent text chunks, and metadata (e.g., sources) into a graph structure. We'll also integrate vector embeddings for semantic search, enabling similarity-based queries that go beyond traditional keyword matching.

In the example repo, the data is scraped from a popular RPG news site: https://www.rpgfan.com/

Key Concepts

1. Data

- You need to prepare your data! I’ve provided pre-chunked example data in /data

2. Graph Database Structure

A graph database like Neo4j organizes information into:

Nodes: Represent entities (e.g., articles, text chunks, sources).

Relationships: Capture connections between nodes (e.g., "belongs_to", "is_chunk_of").

Properties: Store metadata about nodes or relationships (e.g., publication date, embedding vectors).

Neo4j's query language is called Cypher.

3. Node Relationships

The relationships between nodes define the graph's topology. For our use case:

Articles are connected to their source (e.g., a publisher or website).

Articles are broken into text chunks, linked sequentially to preserve document order.

Metadata and embeddings are attached to nodes as properties, enabling both semantic and metadata-based queries.
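Here is a rough sketch of the "linked sequentially" idea: given an article and its chunks in reading order, produce the relationships that tie them together. The `HAS_CHUNK` and `NEXT` relationship names and the `linkChunks` helper are assumptions for illustration and may not match the names used in the example repo.

```typescript
// Hypothetical relationship record; the repo's actual schema may differ.
type Relationship = { from: string; type: string; to: string };

// Turns an ordered list of chunk ids into article -> chunk links plus a
// chunk -> chunk chain that preserves reading order.
function linkChunks(articleId: string, chunkIds: string[]): Relationship[] {
  const rels: Relationship[] = [];
  chunkIds.forEach((chunkId, index) => {
    rels.push({ from: articleId, type: "HAS_CHUNK", to: chunkId });
    if (index > 0) {
      // Link the previous chunk to this one so order survives in the graph.
      rels.push({ from: chunkIds[index - 1], type: "NEXT", to: chunkId });
    }
  });
  return rels;
}

const chunkRels = linkChunks("article-1", ["c0", "c1", "c2"]);
const nextLinks = chunkRels.filter((r) => r.type === "NEXT");
```

With three chunks you get three article-to-chunk links and two `NEXT` links, so the first chunk has no predecessor and the last has no successor.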

4. Semantic Search with Embeddings

Vector embeddings are high-dimensional representations of text that capture semantic meaning. By embedding both articles and their chunks, we can:

Perform similarity-based search that identifies related content even when exact keywords differ.

Match queries to nodes using cosine similarity in the vector space.

For the example codebase, we are using OpenAI embeddings.
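For intuition, cosine similarity and a top-k ranking fit in a few lines. This is a sketch with tiny hand-written vectors standing in for real embeddings, which have hundreds or thousands of dimensions; `cosineSimilarity` and `topKBySimilarity` are illustrative helpers, not functions from the repo or from Neo4j.

```typescript
// Cosine similarity: dot product divided by the product of the vector norms.
function cosineSimilarity(a: number[], b: number[]): number {
  if (a.length !== b.length) {
    throw new Error("Vectors must have the same length");
  }
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  // Treat a zero vector as having no similarity to anything.
  if (normA === 0 || normB === 0) return 0;
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

type Embedded = { id: string; embedding: number[] };

// Scores every stored item against the query and keeps the k best matches.
function topKBySimilarity(query: number[], items: Embedded[], k: number) {
  return items
    .map((item) => ({ id: item.id, score: cosineSimilarity(query, item.embedding) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k);
}

const stored: Embedded[] = [
  { id: "chunk-a", embedding: [1, 0, 0] },
  { id: "chunk-b", embedding: [0.9, 0.1, 0] },
  { id: "chunk-c", embedding: [0, 1, 0] },
];

const ranked = topKBySimilarity([1, 0, 0], stored, 2);
```

In practice the database does this comparison for you through an index, so you would not scan every vector like this; the sketch only shows what "closest in the vector space" means.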

Core Implementation

The provided repo focuses on creating graph nodes, establishing relationships, integrating similarity search via embeddings, and finally, the steps to run an actual similarity search. Don’t worry too much about the schema itself. What we’re focusing on for this post is the RAG implementation, but in order to explain that, we do need to go over ingestion and embeddings.

https://github.com/rodocite/chat-your-articles

Article Ingestion

This “pipeline” processes article data and structures it within a Neo4j knowledge graph, optimizing it for semantic search using vector embeddings.

import { createGraphConstraints } from "./services/createGraphConstraints";
import { createVectorIndexes } from "./services/createVectorIndexes";
import {
  createArticleGraphNodes,
  addEmbeddingsToArticles,
  addEmbeddingsToChunks,
} from "./services/graphOperations";
// getArticles and driver are defined elsewhere in the repo; their imports are omitted here.

async function main() {
  try {
    const articles = await getArticles(); // Load articles from a JSON file
    await createGraphConstraints(); // Ensure Neo4j constraints are in place
    await createArticleGraphNodes(articles); // Create nodes and relationships
    await addEmbeddingsToArticles(); // Generate and attach embeddings to articles
    await addEmbeddingsToChunks(); // Generate and attach embeddings to chunks
    await createVectorIndexes(); // Create vector indexes for similarity search
  } catch (error) {
    console.error("Failed to process data:", error);
  } finally {
    // Always release the Neo4j connection, even if ingestion failed
    await driver.close();
  }
}

await main();

Ingestion Steps

Load Articles and Set Up the Database

- Load article data into memory from data/articles.json, then set up the database constraints with createGraphConstraints().

Ingest Articles into the Graph

- Use createArticleGraphNodes(articles) to populate Neo4j with nodes representing articles, their metadata, and their text chunks.

- Establish relationships between articles, sources, and content fragments.

Generate and Attach Embeddings to Nodes you want to include in similarity searches

- We generate the embeddings using OpenAI’s embeddings model.

- addEmbeddingsToArticles(): Convert full articles into vector embeddings, capturing their semantic meaning.

- addEmbeddingsToChunks(): Generate embeddings for individual chunks, enabling finer-grained semantic search.

Create the vector indexes

- Run createVectorIndexes() to index embeddings, enabling semantic search for retrieving relevant articles based on meaning rather than keywords.
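The constraint and index steps above boil down to a handful of Cypher statements. Below is a sketch of what those statements might look like, built as plain strings. The `Article` and `Chunk` labels, the `id` property, and the index names are my assumptions; `textEmbedding` is the property name mentioned in this post; and 1536 is the vector size of several OpenAI embedding models, so check it against the model you actually use. The repo's own createGraphConstraints() and createVectorIndexes() may be written differently, and the CREATE VECTOR INDEX form needs a recent Neo4j 5 release.

```typescript
// Builds an idempotent uniqueness constraint statement (Neo4j 5 syntax).
function uniqueConstraintCypher(label: string, property: string): string {
  const constraintName = label.toLowerCase() + "_" + property + "_unique";
  return (
    "CREATE CONSTRAINT " + constraintName + " IF NOT EXISTS " +
    "FOR (n:" + label + ") REQUIRE n." + property + " IS UNIQUE"
  );
}

// Builds an idempotent vector index statement using cosine similarity.
function vectorIndexCypher(
  indexName: string,
  label: string,
  property: string,
  dimensions: number
): string {
  return [
    "CREATE VECTOR INDEX " + indexName + " IF NOT EXISTS",
    "FOR (n:" + label + ") ON (n." + property + ")",
    "OPTIONS { indexConfig: {",
    "  `vector.dimensions`: " + dimensions + ",",
    "  `vector.similarity_function`: 'cosine'",
    "} }",
  ].join("\n");
}

// Assumed labels and names, for illustration only.
const setupStatements: string[] = [
  uniqueConstraintCypher("Article", "id"),
  uniqueConstraintCypher("Chunk", "id"),
  vectorIndexCypher("article_embeddings", "Article", "textEmbedding", 1536),
  vectorIndexCypher("chunk_embeddings", "Chunk", "textEmbedding", 1536),
];
```

Each statement could then be passed to `session.run(...)` one at a time. The `IF NOT EXISTS` clauses make the setup safe to re-run.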

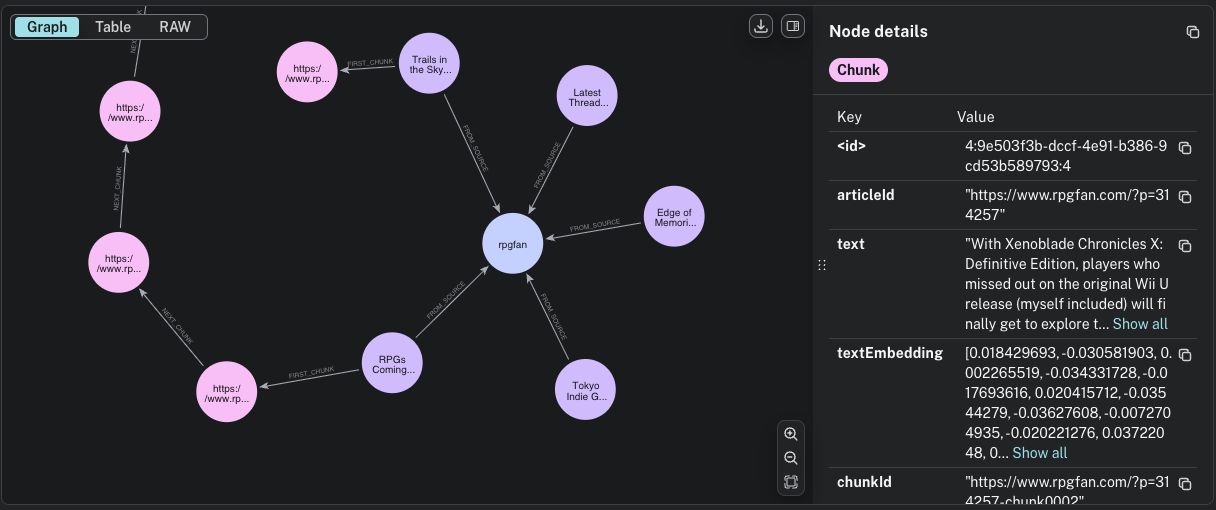

End Result

A fully structured knowledge graph where articles and text chunks are semantically indexed. The graph is queryable via vector search, allowing similarity-based retrieval beyond keyword matching. Note what textEmbedding looks like and how article chunks are in a linked-list structure.

When chunking an article or document, there is context in the order of the text. That’s why I chose to represent the chunks as a linked list.

Semantic Search Code

src/services/semanticSearch.ts

Contextual retrieval combines embedding-based similarity search with graph traversal to deliver precise and relevant results. This code is not invoked directly, but is instead used by the RAG implementation under the hood to augment the prompt with data.

// driver, embeddings, and cypherQuery are defined elsewhere in this file.
export async function searchSimilarArticles(query: string, topK: number = 5) {
  const session = driver.session();
  try {
    // Embed the query text so it can be compared with the stored embeddings
    const [queryEmbedding] = await embeddings.embedDocuments([query]);
    // Make sure the limit is a whole number before passing it to the query
    const limit = Math.floor(topK);

    const result = await session.run(cypherQuery, {
      queryEmbedding,
      topK: limit,
    });

    // Flatten each record into a plain object for the RAG chain
    return result.records.map((record) => ({
      score: record.get("score"),
      title: record.get("title"),
      link: record.get("link"),
      source: record.get("sourceName"),
      publishDate: record.get("publishDate"),
      content: record.get("relatedContent").join("\n"),
    }));
  } finally {
    await session.close();
  }
}

Semantic Search Steps

Query Embedding

A user query is converted into an embedding vector.

This step is very important because we are comparing the query, in embedding format, to the embeddings already stored in the KG. This means that we need to call the embeddings model for this step (in this case, OpenAI's). The comparison itself is done under the hood using a Neo4j plugin called GDS (Graph Data Science), which is already included in the docker-compose.yml file.

Vector Similarity Search

- The query vector is compared against stored embeddings in Neo4j using cosine similarity.

Graph-Based Enrichment

- Relevant nodes (e.g., chunks) are traced back to their parent articles or related nodes to provide enriched context.
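The enrichment step can be pictured with a small in-memory sketch: take the chunks that matched, find their parent article, and pull in the neighboring chunks so the model sees some surrounding text. The `Chunk` and `Hit` types, the `position` field, and `enrichHits` are hypothetical; in the repo this work happens inside the Cypher query, and the `relatedContent` name simply mirrors the field read by searchSimilarArticles.

```typescript
// Hypothetical shapes for illustration; the real data lives in Neo4j.
type Chunk = { id: string; articleId: string; position: number; text: string };
type Hit = { chunkId: string; score: number };
type EnrichedHit = { articleId: string; score: number; relatedContent: string[] };

// For each matched chunk, trace back to its article and collect the chunks
// within `radius` positions of it, in reading order.
function enrichHits(hits: Hit[], chunks: Chunk[], radius: number): EnrichedHit[] {
  return hits.map((hit) => {
    const matched = chunks.filter((c) => c.id === hit.chunkId)[0];
    if (!matched) {
      throw new Error("Unknown chunk: " + hit.chunkId);
    }
    const relatedContent = chunks
      .filter(
        (c) =>
          c.articleId === matched.articleId &&
          Math.abs(c.position - matched.position) <= radius
      )
      .sort((a, b) => a.position - b.position)
      .map((c) => c.text);
    return { articleId: matched.articleId, score: hit.score, relatedContent };
  });
}

const allChunks: Chunk[] = [
  { id: "a-0", articleId: "a", position: 0, text: "Intro." },
  { id: "a-1", articleId: "a", position: 1, text: "Setup." },
  { id: "a-2", articleId: "a", position: 2, text: "Combat." },
  { id: "a-3", articleId: "a", position: 3, text: "Verdict." },
  { id: "b-0", articleId: "b", position: 0, text: "Other article." },
];

const enriched = enrichHits([{ chunkId: "a-2", score: 0.91 }], allChunks, 1);
```

Here a single match on the third chunk comes back with the chunk before it and the chunk after it, and nothing from the unrelated article.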

Graph RAG

When you run this code, createArticleRetrieverChain creates the context space for the prompt and under the hood calls searchSimilarArticles to retrieve the relevant articles.

async function main() {
  try {
    const chain = await createArticleRetrieverChain();
    const answer = await chain.invoke({
      question: "Which RPGs from Nintendo do you know about?",
    });
    console.log(answer);
  } catch (error) {
    console.error("Error:", error);
  } finally {
    process.exit(0);
  }
}
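If you are wondering what "creating the context space for the prompt" might involve, here is a simplified sketch of the augmentation step. It is not the repo's createArticleRetrieverChain; `buildPrompt` and the instruction wording are made up for illustration. The `ArticleHit` fields mirror the object returned by searchSimilarArticles, with `publishDate` assumed to be a string.

```typescript
// Shape mirrors the objects returned by searchSimilarArticles.
type ArticleHit = {
  score: number;
  title: string;
  link: string;
  source: string;
  publishDate: string;
  content: string;
};

// Formats retrieved articles into a context block and appends the question.
function buildPrompt(question: string, hits: ArticleHit[]): string {
  const context = hits
    .map((hit, index) =>
      [
        `Article ${index + 1}: ${hit.title}`,
        `Source: ${hit.source}, ${hit.publishDate}`,
        `Link: ${hit.link}`,
        hit.content,
      ].join("\n")
    )
    .join("\n\n");

  return [
    "Answer the question using only the articles below.",
    "",
    context,
    "",
    `Question: ${question}`,
  ].join("\n");
}

const samplePrompt = buildPrompt("Which RPGs from Nintendo do you know about?", [
  {
    score: 0.87,
    title: "RPGs Coming This Week",
    link: "https://www.rpgfan.com/2025/03/16/rpgs-coming-this-week-3-16-25/",
    source: "RPGFan",
    publishDate: "2025-03-16",
    content: "Xenoblade Chronicles X: Definitive Edition releases this week.",
  },
]);
```

Carrying the source, date, and link into the context is what lets the model cite them back in its answer.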

Example response to running the above code with the default prompt

- Facts and Announcements:

  - Xenoblade Chronicles X: Definitive Edition is releasing on March 20th for the Nintendo Switch. This remaster includes improvements and new story content. Source: RPGFan, 2025-03-16.

- Supporting Details and Context:

  - Xenoblade Chronicles X: Definitive Edition allows players to explore the world of Mira and use mechas known as Skells. The original game was released on the Wii U, and this remaster provides an opportunity for new players to experience it.

  - The remaster is anticipated by fans who missed the original release, and it includes a praised soundtrack.

- Conflicting or Ambiguous Reports:

  - None identified.

- Summary of all retrieved information:

  The only Nintendo RPG mentioned in the provided articles is Xenoblade Chronicles X: Definitive Edition, which is set to release on March 20th for the Nintendo Switch. This remaster of the original Wii U game includes various improvements and new story content, offering both new players and veterans a chance to explore the world of Mira with its unique mechas, Skells. The game is noted for its engaging soundtrack and expansive world.

- List of sources:

  - RPGFan - 2025-03-16 - [Link](https://www.rpgfan.com/2025/03/16/rpgs-coming-this-week-3-16-25/)

This response is based on article data with a cutoff date of March 16, 2025, covering approximately the past month. It looks like the only Nintendo RPG release RPGFan wrote about was Xenoblade Chronicles X.

Try changing the prompt in the code and see what you get 🙂

What Sets Graph RAG Apart from Standard RAG?

Below is a table highlighting the key differences:

| Feature | Standard RAG | Graph RAG |
| --- | --- | --- |
| Data Structure | Unstructured text | Knowledge graph (nodes and edges with metadata) |
| Retrieval Method | Vector similarity search | Graph traversal + vector similarity |
| Contextual Awareness | Limited (based on embedding similarity) | High (explicit relationships between entities) |
| Complexity | Simpler to implement | More complex (requires graph construction & traversal) |
| Use Cases | FAQ systems, document search, simple Q&A | Semantic search, multi-hop reasoning, entity-rich domains |

I think Graph RAG is slept on a bit by many engineers developing agentic AI. Personally, I prefer greater control over the context I send to the LLM, and Graph RAG provides just that. That being said, should you use Graph RAG? Well, it depends. Do you need that control over context? Then you should at least consider using a knowledge graph and finding out what goes into designing and maintaining one.
