Fine Tuning Basics: An Introductory Guide

Vikas Srinivasa

What is Fine-Tuning?

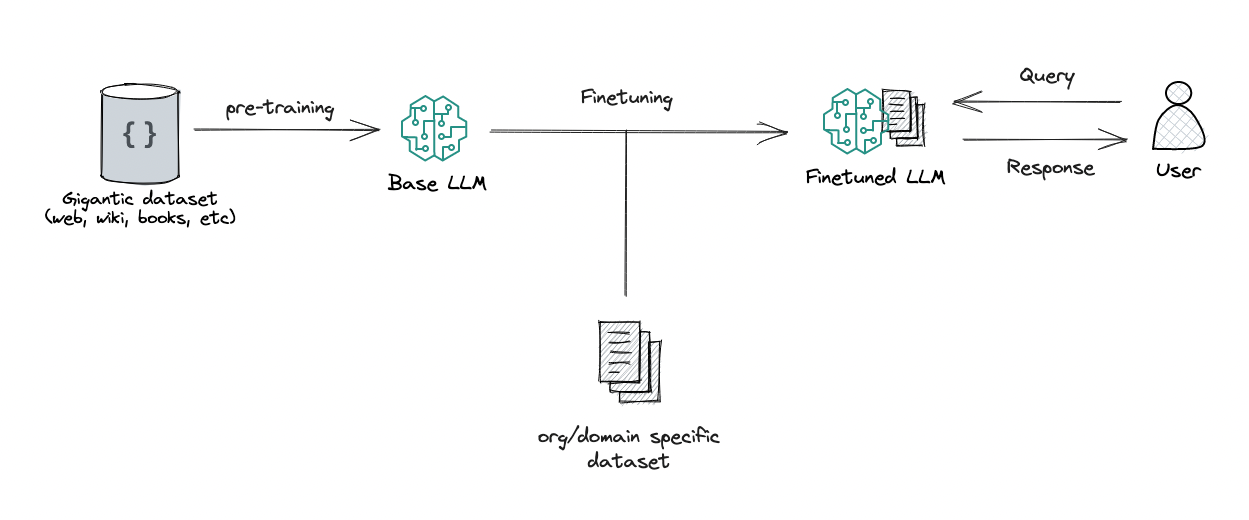

Fine-tuning is the process of further training a pretrained model on a specific dataset to adapt it for a particular task. Instead of training a model from scratch—a process that is computationally expensive and requires vast amounts of data—we specialize an already trained model (like BERT, GPT, or LLaMA) to perform better on domain-specific tasks.

Analogy: Fine-Tuning is Like Training an Athlete

Think of fine-tuning like adapting a general athlete for a specific sport:

A pretrained model (e.g., BERT, GPT-3) is like an athlete trained in general fitness (strength, endurance, agility).

Fine-tuning is like training the athlete for a specific sport (e.g., sprinting, weightlifting, or swimming), where different skill sets and muscle memory are required.

Instead of training the athlete from scratch, we leverage their existing knowledge and refine the skills needed for their specialized sport.

Similarly, a pretrained model already understands language, context, and reasoning, but fine-tuning helps it adapt to specialized domains like medical diagnosis, legal document summarization, or chatbot conversations for a specific company.

Why is Fine-Tuning Needed?

Pretrained models are incredibly powerful, but they have limitations when dealing with domain-specific or private datasets. For example:

A general-purpose LLM might not perform well when asked about proprietary data from a private company (since it was never trained on that data).

Pretrained models may hallucinate when asked a question outside their knowledge base, generating incorrect or misleading information.

This is where fine-tuning comes in—it allows us to customize a model’s knowledge for specific use cases.

But Wait… Can’t We Just Use RAG Instead of Fine-Tuning?

Retrieval-Augmented Generation (RAG) is an alternative approach that enhances an LLM by retrieving relevant information from an external knowledge base before answering a query. Essentially, RAG helps models access external documents in real time instead of relying purely on their internal knowledge.
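To make the retrieval step concrete, here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not a production setup: the `DOCUMENTS` list, the bag-of-words `embed` function, and the prompt template are all hypothetical stand-ins for a real knowledge base, a learned embedding model, and whatever prompt format your LLM expects.

```python
import math
from collections import Counter

# Hypothetical in-memory knowledge base; a real system would use a document store.
DOCUMENTS = [
    "The refund policy allows returns within 30 days of purchase.",
    "Support is available by email from Monday to Friday.",
    "Premium subscribers get priority access to new features.",
]

def embed(text):
    """Toy embedding: lowercase bag-of-words counts (stand-in for a learned encoder)."""
    words = [w.strip(".,?!").lower() for w in text.split()]
    return Counter(w for w in words if w)

def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]

def build_prompt(query, documents, k=1):
    """Place the retrieved text in front of the question before calling the LLM."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

prompt = build_prompt("What is the refund policy?", DOCUMENTS)
print(prompt)
```

The model itself is never modified here: only the prompt changes, which is why updating the knowledge base does not require retraining.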

A question that might come to mind is: "Why should I invest resources in fine-tuning when I can use Retrieval-Augmented Generation (RAG) to fetch relevant knowledge dynamically?"

To answer this, let's explore when to use Fine-Tuning, RAG, or a Hybrid approach that combines both.

Fine-tuning is most effective when a model requires deep contextual understanding of proprietary or domain-specific information. It enables the model to learn patterns, relationships, and nuances, making it more fluent and adaptable across specialized fields such as medical terminology, legal documents, and scientific research. Additionally, fine-tuned models offer lower-latency inference than retrieval-based setups, because responses are generated directly from the model's weights without an external retrieval step.

However, fine-tuning has limitations:

It requires significant computational resources.

Retraining is needed when domain knowledge evolves, and

It is inefficient for constantly changing information.

RAG, by contrast, enhances model responses by retrieving external knowledge in real time instead of relying solely on stored knowledge. It is particularly useful when factual accuracy is critical or when working with frequently updated domains like news, financial reports, and company documentation. Since RAG does not modify the model, it is a more flexible and cost-effective option when frequent updates are required.

However, it has drawbacks:

Retrieval adds latency

The model does not inherently "understand" retrieved information, and

Effectiveness depends on a well-structured knowledge base.

A hybrid approach combines the strengths of both methods. Fine-tuning equips the model with domain expertise and fluency, while RAG ensures real-time accuracy by retrieving up-to-date knowledge. This balance is particularly valuable for enterprise chatbots, medical AI assistants, and legal research tools, where both deep understanding and factual correctness are necessary for optimal performance.

Fine-Tuning in the Context of Transformers

Most modern fine-tuning is performed on transformer-based models such as BERT, GPT, T5, and LLaMA. Instead of training a model from scratch, fine-tuning leverages a pretrained transformer model, adapting it to a specific task while retaining the general knowledge acquired from large-scale pretraining.

For example:

BERT (pretrained on Wikipedia and books) → fine-tuned for legal contract classification.

GPT-3 (pretrained on internet text) → fine-tuned for a customer support chatbot.

T5 (pretrained on text-to-text tasks) → fine-tuned for medical report summarization.

Now that we understand the role of fine-tuning in transformers, let's explore how the process works.

How Does Fine-Tuning Work?

Fine-tuning involves adapting a pretrained model to a new dataset by modifying its weights. The typical process includes:

Loading a pretrained model (e.g., bert-base-uncased).

Modifying the model architecture: adjusting key components to align with the specific task. This typically includes replacing the output layer to match the required output dimensions.

Training the model on domain-specific data.

Optimizing using loss functions like cross-entropy (for classification tasks).

Evaluating performance on a validation set to ensure generalization.
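The steps above can be sketched end to end in plain Python. This is a toy illustration, not a real transformer workflow: `BACKBONE_W` is a hypothetical stand-in for a pretrained checkpoint, the dataset is synthetic, and for brevity the backbone is kept frozen so that only the new output layer is trained (full fine-tuning would update the backbone weights as well).

```python
import math
import random

random.seed(0)

# Step 1: "load" a pretrained backbone. These fixed weights are a hypothetical
# stand-in for a real checkpoint such as bert-base-uncased.
BACKBONE_W = [[1.0, -1.0], [0.5, 0.5]]

def backbone(x):
    """Frozen feature extractor: tanh(W x)."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in BACKBONE_W]

# Step 2: replace the output layer with a new head sized for the task (2 classes).
NUM_CLASSES = 2
head_w = [[0.0, 0.0] for _ in range(NUM_CLASSES)]
head_b = [0.0] * NUM_CLASSES

def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def predict_proba(x):
    """Return the backbone features and the class probabilities from the head."""
    feats = backbone(x)
    logits = [sum(w * f for w, f in zip(head_w[c], feats)) + head_b[c]
              for c in range(NUM_CLASSES)]
    return feats, softmax(logits)

def make_data(n):
    """Synthetic 'domain' data: the label is 1 when x0 > x1, else 0."""
    data = []
    for _ in range(n):
        x = [random.uniform(-1, 1), random.uniform(-1, 1)]
        data.append((x, 1 if x[0] > x[1] else 0))
    return data

train_set, val_set = make_data(200), make_data(50)

# Steps 3 and 4: train on the domain data by minimising cross-entropy with SGD.
def train(epochs=30, lr=0.1):
    for _ in range(epochs):
        for x, y in train_set:
            feats, probs = predict_proba(x)
            for c in range(NUM_CLASSES):
                grad = probs[c] - (1.0 if c == y else 0.0)  # dLoss/dlogit_c
                for j in range(len(feats)):
                    head_w[c][j] -= lr * grad * feats[j]
                head_b[c] -= lr * grad

# Step 5: evaluate on held-out validation data.
def evaluate(dataset):
    loss, correct = 0.0, 0
    for x, y in dataset:
        _, probs = predict_proba(x)
        loss += -math.log(probs[y] + 1e-12)
        correct += int(max(range(NUM_CLASSES), key=lambda c: probs[c]) == y)
    return loss / len(dataset), correct / len(dataset)

loss_before, acc_before = evaluate(val_set)
train()
loss_after, acc_after = evaluate(val_set)
print(f"validation loss {loss_before:.3f} -> {loss_after:.3f}, accuracy {acc_after:.2f}")
```

With a real model the same five steps apply, but the backbone and head would come from a library such as Hugging Face Transformers and the gradients would be computed automatically.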

Fine-Tuning Techniques

Fine-tuning can be categorized into several techniques, each suited for different scenarios:

Supervised Fine-Tuning:

Full Fine-Tuning – Updating all model weights.

Last-Layer Fine-Tuning – Freezing lower layers and training only the final layer.

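Parameter-Efficient Fine-Tuning (PEFT) – Training only a small number of existing or newly added parameters while keeping most pretrained weights frozen. LoRA, QLoRA, and prompt tuning are PEFT methods.

LoRA (Low-Rank Adaptation) – Freezes the pretrained weights and trains small low-rank matrices whose product is added to selected weight matrices.

QLoRA (Quantized LoRA) – Applies LoRA on top of a base model whose frozen weights are quantized (4-bit in the original method) to reduce memory use.

Prompt Tuning – Learns a small set of continuous prompt embeddings that are prepended to the input, while the model weights stay frozen.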
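To see why low-rank updates are cheap, here is a small sketch of the LoRA idea in plain Python. The layer sizes, the `alpha / rank` scaling, and the random weights are illustrative assumptions rather than recommended settings; a real implementation would use a library such as Hugging Face PEFT on top of PyTorch.

```python
import random

random.seed(0)

def matvec(m, v):
    """Multiply matrix m (list of rows) by vector v."""
    return [sum(mij * vj for mij, vj in zip(row, v)) for row in m]

def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m)."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

# Illustrative sizes only; real layers are far larger.
d_in, d_out, rank, alpha = 64, 64, 4, 8
scale = alpha / rank

# Frozen pretrained weight (random stand-in for a real layer).
W = [[random.gauss(0, 0.1) for _ in range(d_in)] for _ in range(d_out)]

# Trainable low-rank factors. B starts at zero, so the adapted layer
# initially behaves exactly like the pretrained one.
A = [[random.gauss(0, 0.01) for _ in range(d_in)] for _ in range(rank)]
B = [[0.0] * rank for _ in range(d_out)]

def lora_forward(x):
    """Compute W x + scale * B (A x) without forming the full update matrix."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    return [b + scale * u for b, u in zip(base, update)]

def merged_weight():
    """Fold the adapter into a single matrix W + scale * B A for inference."""
    delta = matmul(B, A)
    return [[w + scale * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(W, delta)]

full_params = d_in * d_out            # weights updated by full fine-tuning
lora_params = rank * (d_in + d_out)   # weights updated by LoRA
print(f"trainable parameters: {lora_params} of {full_params}")
```

Only `A` and `B` would receive gradient updates during training. Because the update can be merged back into `W`, the adapted layer need not add any inference cost.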

Reinforcement Learning-Based Fine-Tuning:

Direct Preference Optimization (DPO) – Fine-tunes a model directly on pairs of preferred and rejected responses, without training a separate reward model or running a reinforcement learning loop.

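Proximal Policy Optimization (PPO) – A reinforcement learning algorithm that limits how far each update can move the policy from its previous version, which keeps training stable.

Reinforcement Learning from Human Feedback (RLHF) – Trains a reward model on human preference rankings, then optimizes the model against that reward, typically with PPO.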
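The objectives behind two of these methods are short enough to write out. The sketch below computes the PPO clipped surrogate and the DPO loss for a single example, as they are commonly stated. The function names, default values for `epsilon` and `beta`, and the example numbers are assumptions for illustration; in practice the log-probabilities come from the model being trained and a frozen reference copy.

```python
import math

def softplus(z):
    """Numerically stable log(1 + exp(z))."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))

def ppo_clipped_objective(ratio, advantage, epsilon=0.2):
    """Per-sample PPO surrogate (to be maximised).

    ratio is new_policy_prob / old_policy_prob for the action taken.
    Clipping removes the incentive to move the ratio outside [1 - eps, 1 + eps].
    """
    clipped = max(1.0 - epsilon, min(1.0 + epsilon, ratio))
    return min(ratio * advantage, clipped * advantage)

def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """DPO loss for one preference pair (to be minimised).

    Inputs are sequence log-probabilities under the trained policy and a
    frozen reference model. The loss is -log(sigmoid(beta * margin)).
    """
    chosen_gain = policy_chosen_logp - ref_chosen_logp
    rejected_gain = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_gain - rejected_gain)
    return softplus(-margin)

# Hypothetical numbers: the policy has shifted toward the preferred response.
loss_improved = dpo_loss(-10.0, -14.0, -12.0, -12.0)
loss_unchanged = dpo_loss(-12.0, -12.0, -12.0, -12.0)
print(loss_improved < loss_unchanged)
```

The DPO loss falls as the policy assigns relatively more probability to the preferred response than the reference model does, which is how it learns from preferences without an explicit reward model.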

Conclusion

In the next blog, we will explore full fine-tuning, its advantages, and how to implement it using PyTorch and Hugging Face. This will provide a hands-on understanding of fine-tuning transformer models for custom applications.

Written by

Vikas Srinivasa

My journey into AI has been unconventional yet profoundly rewarding. Transitioning from a professional cricket career, a back injury reshaped my path, reigniting my passion for technology. Seven years after my initial studies, I returned to complete my Bachelor of Technology in Computer Science, where I discovered my deep fascination with Artificial Intelligence, Machine Learning, and NLP, particularly its applications in the finance sector.