FPGAs Part II - Practical Implementation

Omar Morales

Real-world applications you could replicate:

Object Detection for Autonomous Vehicles: Utilize FPGAs to accelerate image preprocessing and inference tasks, ensuring real-time performance.

AI in Medical Imaging: Implement image analysis for pathology detection using an FPGA-based pipeline.

Edge Video Analysis: Use an FPGA for low-latency analysis in smart cameras, such as real-time face detection and action recognition.

Energy-Efficient AI at Home: Run lightweight AI models on FPGA-enhanced boards to build IoT solutions, such as smart home automation.

Accelerating AI/ML Workflows with FPGAs

FPGA logic behaves like “soft silicon” that adaptively morphs

Field-Programmable Gate Arrays (FPGAs) are integrated circuits that can be reprogrammed at the hardware level, unlike fixed-function chips. In contrast to CPUs (few complex cores) and GPUs (many parallel cores), FPGAs consist of a reconfigurable fabric of logic blocks and interconnects that developers can wire to implement custom architectures. This flexibility allows an FPGA to be tailored to mimic a neural network’s structure directly in hardware – the FPGA’s interconnected logic can resemble the layered connectivity of a neural network, effectively acting like “silicon neurons”. By loading a configuration (bitstream), the FPGA’s hardware is shaped to perform specific computations (e.g. matrix multiplications for a CNN) with massive parallelism and pipelining.

— Essentially, FPGAs blur the line between hardware and software, allowing AI engineers to create custom hardware accelerators without fabricating a new chip. —

Modern FPGAs used in AI come from vendors like Intel (formerly Altera), AMD Xilinx, and Lattice Semiconductor.

High-end FPGAs (Intel Agilex, AMD Versal, etc.) are often paired with CPUs on accelerator boards for data centers, while smaller FPGAs (Lattice iCE40, ECP5) cater to ultra-low-power AI at the edge.

Compared to GPUs, which execute AI operations on a fixed array of CUDA cores, an FPGA can be configured to directly implement the datapath of a neural network.

For example, an FPGA design can instantiate an array of multiply-accumulate units mirroring a convolutional layer, achieving deterministic low latency without the overhead of instruction scheduling. This ability to rewire its logic gives the FPGA a unique role in AI: morphing to accelerate different algorithms as needed.
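To make the multiply-accumulate idea concrete, here is a minimal software sketch in plain Python (not hardware code) of a 1-D convolution written as an array of MAC steps. The names `mac` and `conv1d_mac_array` are illustrative and do not come from any vendor toolchain. On an FPGA, the MAC steps of the inner loop could be instantiated as separate hardware units working in parallel or as a pipelined chain; here they simply run one after another.

```python
def mac(acc, a, b):
    """One multiply-accumulate step: acc + a * b."""
    return acc + a * b


def conv1d_mac_array(signal, kernel):
    """Valid-mode 1-D convolution in the correlation form used by CNN layers.

    Each output position needs len(kernel) MAC operations. In hardware these
    could be laid out as dedicated units; in this sketch they are a loop.
    """
    taps = len(kernel)
    outputs = []
    for start in range(len(signal) - taps + 1):
        acc = 0
        for k in range(taps):  # conceptually: `taps` MAC units side by side
            acc = mac(acc, signal[start + k], kernel[k])
        outputs.append(acc)
    return outputs


if __name__ == "__main__":
    print(conv1d_mac_array([1, 2, 3, 4, 5], [1, 0, -1]))  # [-2, -2, -2]
```

The point of the sketch is the structure: the number of MAC units and how they are wired is a design choice on an FPGA, whereas on a CPU or GPU the same arithmetic is scheduled onto fixed execution units.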

FPGAs in AI Workflows

FPGAs can accelerate multiple stages of the AI/ML pipeline – from data preprocessing to neural network inference. Their reconfigurable parallelism is especially useful for streaming data and real-time processing tasks. For instance, FPGAs can ingest sensor or image data and perform filtering, transformations, or feature extraction on the fly, preparing batches for a model. During the inference stage (model execution), an FPGA-based accelerator can be customized for the target model’s compute pattern, whether it’s a CNN, RNN, or transformer. This leads to speed-ups in throughput and latency for inference, and even for certain training tasks (though training on FPGAs is less common). In practical terms, AI workflows often deploy FPGAs alongside CPUs/GPUs: the CPU might handle high-level application logic, while an FPGA offloads intensive kernels (e.g. matrix multiplications, convolutions, or decision tree traversals) to dedicated hardware circuits.

*Example – Autonomous Vehicles: FPGAs are used on self-driving car platforms to interface with cameras, LiDAR, and radar sensors and run DNN models with ultra-low latency. The diagram shows an Advanced Driver Assistance System (ADAS) where FPGAs could perform real-time sensor fusion and object detection, feeding results to the car’s CPU.*

Real-world applications of FPGAs in AI include:

Autonomous Vehicles & Robotics: In self-driving cars, FPGAs process raw sensor data (from LiDAR, radar, cameras) in real time, enabling tasks like object detection, lane keeping, and sensor fusion with minimal latency. Their deterministic timing is crucial for safety-critical decisions (braking or steering). Similarly in robotics, FPGAs can handle vision and control algorithms on the edge, e.g. onboard a drone or industrial robot, where a GPU’s power draw or latency might be prohibitive.

Medical AI Devices: FPGAs are powering portable and real-time medical AI systems, from ultrasound image analysis to endoscopic video enhancement. By directly interfacing with sensors and on-device memory, an FPGA can perform inference during a medical procedure with very low delay. For example, researchers demonstrated an FPGA-based neural network for cancer detection that achieved immediate feedback during surgery, outperforming a GPU by 21× in latency for that task. This enables on-the-fly diagnostics in devices like smart MRI machines or patient monitors.

Edge AI in IoT: Many IoT applications demand AI processing in the field under strict power and cost constraints – think smart cameras, voice assistants, or predictive maintenance sensors. FPGAs excel here by accelerating AI models (e.g. keyword spotting, anomaly detection) while consuming only a few milliwatts to a few watts. For instance, an FPGA-enabled security camera can run a face recognition model locally with minimal lag, or a home automation device could use an FPGA to run a tiny neural network to detect gestures, all without relying on cloud compute. The FPGA’s programmability extends device lifespan: as models improve, the hardware can be updated via a remote bitstream update instead of replacing the device.

Data Center Acceleration: In cloud and HPC environments, FPGAs serve as specialized accelerators for inference at scale. Companies like Microsoft have deployed large FPGA clusters (Project Brainwave) to accelerate search engine ranking and translation models, achieving high throughput with low latency by networking FPGAs together. In database and analytics workloads, FPGAs handle tasks like data filtering, compression, or pattern matching, working alongside CPUs. These use cases show that FPGAs are not limited to edge use – they are equally at home boosting performance in servers for AI services (often via PCIe accelerator cards).

Benefits of FPGAs for AI Engineers

— Why should AI/ML engineers care about FPGAs? —

The key benefits include low-level control over computation, potential to alleviate memory and I/O bottlenecks, improved energy efficiency, and long-term adaptability of deployments:

Custom Parallelism & Low Latency: FPGAs enable model-specific parallelism. Instead of running your neural network on a general-purpose array of cores, you can create a data path that exactly matches your model’s graph. This means, for example, an FPGA can pipeline the entire inference of a CNN – each layer’s operations start as soon as data is available, with minimal buffering. The result is often ultra-low latency. FPGAs routinely achieve deterministic response times in the order of microseconds to a few milliseconds, which is important for real-time AI (robot control, high-frequency trading, etc.). GPUs, by contrast, excel at high throughput but generally incur more latency (their SIMD architecture thrives on large batches). By cutting out instruction scheduling and using dedicated circuits, an FPGA can complete certain operations in a single clock cycle where a CPU might need dozens of instructions. This deterministic execution is valuable in systems where timing predictability equals correctness.
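The latency and throughput behaviour of a layer-by-layer pipeline can be estimated with simple arithmetic. The sketch below assumes a linear pipeline with one stage per layer and enough buffering between stages; the function name and the cycle counts in the example are hypothetical, chosen only to show the calculation.

```python
def pipeline_timing(stage_cycles, clock_mhz, num_inputs):
    """Estimate timing for a linear pipeline with one stage per layer.

    stage_cycles: clock cycles each stage needs per input (hypothetical values).
    Returns (first_result_latency_us, total_time_us, steady_state_inputs_per_s).
    """
    fill_cycles = sum(stage_cycles)   # the first input must pass every stage
    bottleneck = max(stage_cycles)    # the slowest stage sets the output rate
    total_cycles = fill_cycles + (num_inputs - 1) * bottleneck
    latency_us = fill_cycles / clock_mhz      # cycles / MHz gives microseconds
    total_us = total_cycles / clock_mhz
    throughput = clock_mhz * 1e6 / bottleneck
    return latency_us, total_us, throughput


if __name__ == "__main__":
    # Three stages at 200 MHz, ten inputs streamed back to back.
    print(pipeline_timing([100, 200, 100], clock_mhz=200, num_inputs=10))
    # (2.0, 11.0, 1000000.0)
```

In this made-up case the first result appears after 2 µs, and every later result follows 1 µs behind the previous one, because the 200-cycle stage is the bottleneck. No batching is needed to reach that rate, which is the property the paragraph above describes.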

Memory and I/O Optimization: FPGAs can be the antidote to memory bottlenecks that plague many AI systems. With a traditional CPU/GPU setup, data often has to move through multiple memory hierarchies and buses (system RAM, PCIe, etc.), incurring delays. An FPGA, however, can be placed inline with data sources (sensors, network streams) to process data as it arrives. In a medical AI context, an FPGA design was able to interface directly with sensors and on-board memory, eliminating the costly data movement overhead that the CPU/GPU incurred. Intel highlights that FPGAs are used to “accelerate data ingestion”, removing or reducing the need for intermediate buffers. For AI pipelines, this means an FPGA can stream data through a model without ever sitting idle waiting on memory. Many FPGA designs incorporate on-chip SRAM blocks as caches or FIFOs that are tailored to the access pattern of the neural network (e.g. line buffers for streaming convolution across an image). By bringing memory closer to computation and customizing how data flows, FPGAs overcome I/O bottlenecks that limit CPU/GPU performance. This is especially useful when merging data from multiple sources – e.g., an FPGA can ingest and fuse multi-sensor data (audio, video, lidar) concurrently, something that would saturate a CPU.
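The line-buffer idea mentioned above can be modelled in software. This is a minimal sketch, assuming a row-major pixel stream and a 3x3 kernel; `stream_conv3x3` is an illustrative name, and a real FPGA design would express the same structure in HLS or HDL with on-chip block RAM rather than a Python `deque`.

```python
from collections import deque


def stream_conv3x3(pixels, width, kernel):
    """Apply a 3x3 kernel to a row-major pixel stream using a line buffer.

    Only the most recent 2 * width + 3 pixels are kept, mimicking an FPGA
    design that stores two image rows plus three pixels on chip instead of
    buffering the whole frame. Yields one output per valid 3x3 window.
    """
    window_span = 2 * width + 3
    buf = deque(maxlen=window_span)
    for index, pixel in enumerate(pixels):
        buf.append(pixel)
        col = index % width
        # A window is valid once two full rows are buffered and the current
        # pixel is at least the third pixel of its row (no wrap-around).
        if len(buf) == window_span and col >= 2:
            acc = 0
            for r in range(3):
                for c in range(3):
                    acc += kernel[r][c] * buf[r * width + c]
            yield acc


if __name__ == "__main__":
    image = list(range(16))                  # a 4x4 image, values 0..15
    box = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]  # sums each 3x3 window
    print(list(stream_conv3x3(image, width=4, kernel=box)))  # [45, 54, 81, 90]
```

Each output is produced as soon as its last pixel arrives, so the consumer never waits for a full frame to be written to and read back from external memory.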

Energy Efficiency: FPGAs are often far more power-efficient for inference than their CPU/GPU counterparts. Because an FPGA implements only the logic needed for the task (with no excess overhead), it can perform more operations per watt in optimized workloads. Academic and industry studies consistently show FPGAs providing better performance-per-watt on AI inference. For example, a Microsoft research project on image recognition found that an Intel Arria 10 FPGA achieved nearly 10× lower power consumption than a GPU for similar work. Likewise, Xilinx reported their 16nm FPGA delivered about 4× the compute efficiency (GOP/s per Watt) of an NVIDIA V100 GPU on general AI tasks. This efficiency is critical for edge devices running on batteries or under tight thermal constraints. It also translates to cost savings in data centers (where power and cooling are big expenses). GPUs have made strides with tensor cores and lower precision arithmetic to improve efficiency, but an FPGA still has an edge by letting you fine-tune resource usage: you can choose 4-bit or binary precisions, use only on-chip memory, etc., to cut power usage dramatically. In one design, by minimizing redundant circuits, an FPGA used ~50% less power than a GPU for the same AI task. In short, FPGAs let you achieve more inference work under a strict power budget. This is why they’re popular for “AI at the edge” – you can deploy an FPGA-based CNN accelerator in a drone or wearable device where a GPU’s battery drain would be unacceptable.
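The efficiency figures quoted in this article use two units, GOP/s per watt and GOP/J, which are numerically the same because a watt is one joule per second. The sketch below shows the arithmetic; the device numbers are hypothetical and are not measurements of any real FPGA or GPU.

```python
def gops_per_watt(gops, watts):
    """Compute efficiency in GOP/s per watt (numerically equal to GOP/J)."""
    if watts <= 0:
        raise ValueError("watts must be positive")
    return gops / watts


def efficiency_ratio(gops_a, watts_a, gops_b, watts_b):
    """How many times more work per joule device A does than device B."""
    return gops_per_watt(gops_a, watts_a) / gops_per_watt(gops_b, watts_b)


if __name__ == "__main__":
    # Hypothetical figures chosen only to illustrate the arithmetic.
    print(gops_per_watt(1000, 25))                 # 40.0
    print(efficiency_ratio(1000, 25, 5000, 250))   # 2.0
```

Note that in this made-up example device B has five times the raw throughput yet half the efficiency, which is why raw speed and performance-per-watt comparisons can point in opposite directions.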

Deployment Flexibility & Longevity: An FPGA-based accelerator can evolve as your models and workloads do. This is a major advantage in the fast-moving AI field. Need to update your model architecture? On a GPU or ASIC, you’re limited by the fixed hardware – but on an FPGA you can recompile a new bitstream to optimally support the changes. The reconfigurability of FPGAs means one device can be reprogrammed for many different AI applications over its lifetime. Intel’s FPGA platform strategy emphasizes long product lifecycles; FPGA cards can live in deployment for years and be repurposed via reconfiguration, whereas GPUs might need to be swapped out for a newer model to support the latest networks. This makes FPGAs attractive for longevity-critical systems (industrial or aerospace) and helps avoid repeated hardware upgrade costs. Additionally, FPGAs often allow multiple functions on one chip: e.g., part of the FPGA can run AI inference while other logic on the chip handles encryption, sensor interfacing, or other tasks. This consolidation can reduce component count and cost in a device. Ultimately, for AI engineers, FPGAs offer a path to “future-proof” acceleration – the hardware adapts as the models change. In a world where new neural network architectures or layer types emerge frequently, this adaptability is invaluable.

FPGAs vs GPUs for AI/ML: A Comparison

Both FPGAs and GPUs are commonly used to accelerate deep learning, but they have different strengths. Here’s a side-by-side look at how they compare for AI workloads:

| Factor | FPGA | GPU |
| --- | --- | --- |
| Architecture & Flexibility | Reconfigurable fabric with no fixed instruction set – can be customized at the logic level for each workload. This allows implementing arbitrary dataflows and native support for novel NN operators. | Fixed architecture of thousands of cores and memory hierarchies optimized for common parallel patterns. Very efficient for standard dense tensor operations, but less adaptable to custom dataflows. |
| Parallelism & Throughput | Can exploit fine-grained and coarse-grained parallelism by creating many parallel processing units or pipelines specific to the model. Achieves high throughput when design fully utilizes FPGA logic (e.g., an FPGA with AI cores reached 24× the throughput of an NVIDIA T4 on certain real-time inference tasks). However, scaling up requires sufficient FPGA resources and careful design – peak performance is workload-dependent and not always reached if the design underutilizes the fabric. | Massive data-parallel throughput on regular workloads. GPUs excel at matrix multiplies, convolutions, and other operations that map to SIMD execution – they can reach extremely high FLOPs for well-batched computations. In practice, GPUs often still deliver higher raw speed on large neural networks, especially for training or very large batch inference. Their fixed datapath can become a limitation for irregular or memory-bound tasks, where FPGA custom pipelines might pull ahead. |
| Latency | Able to achieve ultra-low latency and deterministic response. FPGAs can be architected to process inputs with minimal buffering – ideal for batch-1 inference and streaming applications. Small models can be entirely unrolled in hardware for inference in a few microseconds. Even larger networks benefit from pipelining across layers, avoiding the batching needed on GPUs. Example: Xilinx reported 3× lower latency with their FPGA vs. a GPU on real-time inference tasks. | Typically require batching to maximize utilization, which adds latency. GPUs handle single-stream inference less efficiently (the hardware may sit partially idle). For instance, a GPU might incur tens of milliseconds latency for a batch-1 inference that an FPGA can do in a few milliseconds. That said, high-end GPUs with tensor cores have improved their low-batch performance, and optimized GPU inference engines (TensorRT, etc.) reduce overheads. But generally, if minimal latency is the priority, FPGAs have the edge. |
| Energy Efficiency | Designed for efficiency – no unnecessary work is done beyond what the algorithm requires. FPGAs often achieve more inferences per watt. Studies show 5–10× efficiency gains in certain tasks (e.g. FPGAs hitting 10–100 GOP/J, comparable or better than state-of-the-art GPUs). FPGAs can also be partially reconfigured to power-gate unused logic, and run at lower clock rates if needed to save energy. | Gains in efficiency through specialized cores (e.g. NVIDIA’s tensor cores) and optimized memory, but still power-hungry at peak performance. High-end GPUs can draw 200–300W under load. They also dissipate energy on aspects FPGAs avoid (instruction control, general caches, etc.). As a result, GPUs in edge devices often struggle with thermal limits unless underclocked. In data centers, GPU power consumption is a major factor – one reason companies explore FPGA accelerators for better performance-per-watt. |
| Developer Ecosystem & Ease of Use | Historically more challenging – designing FPGA accelerators meant learning hardware description languages (Verilog/VHDL) or high-level synthesis, which is a steep learning curve for software ML engineers. However, modern FPGA tools (OpenVINO, HLS compilers, etc.) and pre-built IP cores are greatly lowering the barrier (see next section). In deployment, FPGAs lack the large unified memory of GPUs, requiring careful memory management by the developer. | Mature and familiar ecosystem for AI developers. Programmers can leverage prevalent frameworks (TensorFlow/PyTorch) and GPU libraries (CUDA, cuDNN) without deep knowledge of GPU architecture. The tooling for profiling and optimizing GPU code is well-developed after years of refinement. On the flip side, this means the average ML engineer is far more comfortable with GPUs than FPGAs. GPU development is almost entirely software-based, whereas FPGA development straddles software and hardware considerations. |

Use Cases Where FPGAs Outperform GPUs: Given the above, FPGAs tend to shine for low-latency inference, streaming data processing, and scenarios with unusual computation patterns or strict power limits. If you need inference on a batch of 1 with a 5 ms deadline, an FPGA can likely meet that deadline where a GPU might not. For example, one FPGA design achieved 10,000+ inferences per second on a complex neural network – outperforming a GPU in throughput when latency was constrained.

FPGAs also excel when the model doesn’t fit well into a GPU’s memory hierarchy (e.g. very large sparse models or multi-tenant inference where different small networks run concurrently). In multi-sensor systems (autonomous machines, IoT gateways), an FPGA can act as a hub that pre-processes and combines data in real time, which would be inefficient on a GPU that expects large uniform workloads. Furthermore, at the extreme edge (few milliwatts power budget), GPUs simply have no presence – tiny FPGAs or microcontrollers are the only option to run AI, so any ML in that domain relies on FPGA/ASIC solutions.
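The batch-1 deadline case mentioned above can be reasoned about with a small worst-case model. This is a sketch under simplifying assumptions: requests arrive at a fixed interval, a batch is launched only once it is full, and compute time per batch is constant. All numbers in the example are hypothetical.

```python
def worst_case_latency_ms(batch_size, arrival_interval_ms, compute_ms):
    """Latency seen by the first request that joins a batch.

    It waits for (batch_size - 1) further arrivals before the batch starts,
    then for the whole batch to be computed.
    """
    wait_ms = (batch_size - 1) * arrival_interval_ms
    return wait_ms + compute_ms


def meets_deadline(batch_size, arrival_interval_ms, compute_ms, deadline_ms):
    """True if the worst-case latency fits within the deadline."""
    latency = worst_case_latency_ms(batch_size, arrival_interval_ms, compute_ms)
    return latency <= deadline_ms


if __name__ == "__main__":
    # One request per millisecond and a 5 ms deadline (made-up figures).
    print(worst_case_latency_ms(8, 1.0, 3.0))   # 10.0 -> batch of 8 misses 5 ms
    print(meets_deadline(8, 1.0, 3.0, 5.0))     # False
    print(meets_deadline(1, 1.0, 2.0, 5.0))     # True
```

The model shows why an accelerator that is efficient only at larger batch sizes can miss a tight deadline even when its per-batch compute time looks small: the time spent waiting for the batch to fill counts against the deadline too.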

Use Cases Where GPUs Outperform FPGAs:

GPUs still rule for training deep neural networks – training is computationally intensive and benefits from the thousands of math cores and high memory bandwidth of GPUs (and frameworks like PyTorch are heavily optimized for GPU training). It’s generally impractical to train a large model on FPGAs today due to tool limitations and lower precision support, though research is ongoing.

For very large-scale inference (think processing millions of requests on a server), GPUs can be easier to scale out – you can add more GPU instances and use well-tested load balancing, whereas using FPGAs at scale may require more custom infrastructure.

For algorithms that map perfectly to GPU architectures (e.g. dense matrix ops with high reuse), a single GPU might reach higher absolute performance than a single FPGA, especially given NVIDIA’s aggressive hardware advancements.

GPUs are “general-purpose accelerators” that are excellent for the average deep learning task, whereas FPGAs are “special-purpose accelerators” that win on specific metrics (latency, power, custom functions) in niche scenarios. In practice, many AI deployments mix both: e.g. use GPUs to train models and FPGAs to deploy them in the field.

Getting Started with FPGA AI Development

One of the hurdles that has historically kept AI engineers from using FPGAs is the complexity of programming them. In recent years vendors have introduced high-level tools and frameworks that make FPGA development more accessible to software and ML engineers. Here we focus on the Intel ecosystem as an example (since Intel has invested heavily in bridging AI and FPGA workflows), and we’ll briefly note comparable tools from competitors like AMD Xilinx.

Intel’s FPGA AI Toolchain:

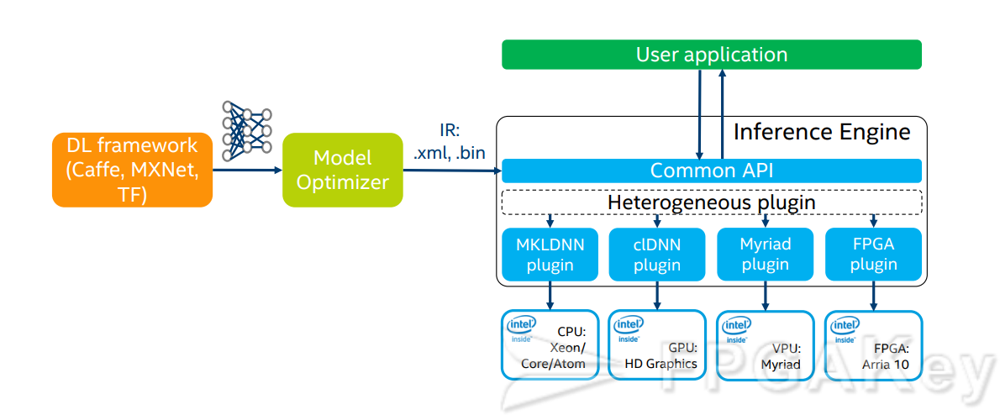

The centerpiece is the Intel Distribution of OpenVINO™ Toolkit, which is a software framework to deploy trained models on various Intel hardware targets (CPU, GPU, FPGA, VPU) with optimizations. OpenVINO provides a unified API called the Inference Engine and uses a device plugin architecture – you can load a model and run inference on a CPU, or switch to an FPGA, simply by changing the target device flag.

Under the hood, you use OpenVINO’s Model Optimizer to convert your trained model (from TensorFlow, PyTorch via ONNX, etc.) into an Intermediate Representation (IR) format that the Inference Engine can consume.

This IR is essentially a compute graph optimized for inference.

Intel FPGAs have a plugin that takes the IR and runs it on the FPGA, using libraries of optimized FPGA IP for neural network layers.

You don’t have to write HDL: use your TensorFlow model

You can take a TensorFlow model and deploy it to an FPGA through OpenVINO with minimal code changes. Intel also offers the FPGA AI Suite, which works closely with OpenVINO. This toolkit provides pre-optimized neural network IP blocks and templates for FPGAs, and helps with tasks like quantization to lower precision (since using INT8 or INT4 on FPGAs can greatly speed up inference).

The FPGA AI Suite essentially automates a lot of the FPGA-specific design, allowing engineers to focus on the model. It interfaces with OpenVINO so that your model, once optimized, can be compiled into an FPGA-friendly form and executed with the same OpenVINO runtime API.

Workflow: The Intel OpenVINO toolkit workflow for deploying a deep learning model to different hardware, including FPGAs. A trained model (from frameworks like Caffe, TensorFlow, etc.) is converted to an Intermediate Representation (IR) format by the Model Optimizer, then the Inference Engine’s FPGA plugin handles execution on an Intel FPGA (e.g., Arria 10) via a common API. This allows AI developers to use familiar frameworks and let OpenVINO orchestrate the FPGA acceleration.

Let’s illustrate:

# Traditional ML Deployment Stack

Python/PyTorch/TensorFlow

↓

CUDA/ROCm (for GPUs)

↓

Hardware

# FPGA-accelerated ML Stack

Python/PyTorch/TensorFlow

↓

OpenVINO™ Toolkit

↓

Intel FPGA AI Suite

↓

FPGA Hardware

Illustration source: FPGAKey.com

To demonstrate how one would port a model to an FPGA using these tools, consider a simple example: suppose you have a CNN trained in PyTorch and saved as an ONNX file. Using OpenVINO, you would run the Model Optimizer to convert this ONNX model to an IR (.xml and .bin files). Then, using the Python API of OpenVINO’s Inference Engine, you can load the model on an FPGA device and perform inference, as shown below:

Basic setup steps

pip install openvino-dev

pip install numpy tensorflow torch

# In a notebook, prefix each install command with an exclamation point:

# !pip install openvino-dev

# !pip install numpy tensorflow torch

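Step 1: Shell (CLI)

# Convert the model to IR (FP16 precision for FPGA) using OpenVINO Model Optimizer

# Note: depending on your OpenVINO version, the FP16 option may be spelled --compress_to_fp16 instead of --data_type FP16

mo --input_model model.onnx --data_type FP16 --output_dir model_ir/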

Step 2: Python script (.py)

# Step 2: Load the IR model on an FPGA and run inference using the OpenVINO runtime
import numpy as np
from openvino.runtime import Core

core = Core()
# The "FPGA" device name only resolves if an FPGA plugin is installed; list what is available
print("Available devices:", core.available_devices)

# Read the network and corresponding weights from IR files
model = core.read_model(model="model_ir/model.xml", weights="model_ir/model.bin")
compiled_model = core.compile_model(model=model, device_name="FPGA")  # targeting FPGA

# Prepare an input (assuming the model has a single input for an image)
input_tensor = np.load("sample_input.npy")

# Create an inference request and do inference
infer_request = compiled_model.create_infer_request()
infer_request.infer(inputs={0: input_tensor})
output = infer_request.get_output_tensor(0).data
print("Inference result:", output)

In this example, aside from the one-time model conversion, the code to run inference on an FPGA is very similar to running on a CPU or GPU – OpenVINO handles the device specifics. The compiled FPGA model will use Intel’s FPGA libraries (like an FPGA-friendly convolution implementation) under the hood. Intel’s Open FPGA Stack (OFS) can also come into play for more advanced use cases; OFS is an open-source platform that provides reusable FPGA infrastructure (interfaces, drivers) so developers can more easily build custom FPGA accelerators and integrate them with software. For instance, if you wanted to write a custom FPGA kernel (in RTL or using high-level synthesis) for a novel ML operation, OFS would provide a template to hook your IP into a standard FPGA PCIe card interface and memory controller, so it can work alongside the OpenVINO runtime or be invoked from a host program.
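Because the set of devices depends on which plugins are installed on a given machine, it can help to choose the target device at run time rather than hard-coding it. The helper below is a hypothetical sketch (`pick_device` is not part of OpenVINO); it works on a plain list of device names, such as the list returned by the `available_devices` property of `openvino.runtime.Core`.

```python
def pick_device(available, preferred=("FPGA", "GPU", "CPU")):
    """Return the first preferred device that appears in `available`.

    A device matches if its name equals the preferred name or starts with it
    followed by a dot (for example "GPU.0").
    """
    for want in preferred:
        for name in available:
            if name == want or name.startswith(want + "."):
                return name
    raise RuntimeError(f"none of {preferred} found in {list(available)}")


if __name__ == "__main__":
    # With OpenVINO installed you could pass Core().available_devices here;
    # a literal list keeps this sketch runnable without it.
    print(pick_device(["CPU", "GPU.0"]))  # GPU.0
    print(pick_device(["CPU"]))           # CPU
```

The returned string can then be passed as `device_name` to `compile_model`, so the same script falls back to a GPU or CPU on machines without an FPGA plugin.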

Competitor Toolchains:

AMD (Xilinx) offers a comparable stack with its Vitis unified software platform and Vitis AI toolkit. Vitis AI allows you to take trained deep learning models and compile them to run on Xilinx FPGAs (often using a pre-defined deep learning processing unit, DPU, which is an IP core optimized for neural networks).

Developers can quantize models to INT8 and use the Vitis AI Compiler to target devices like Xilinx Alveo accelerator cards or system-on-chip FPGAs.

The experience is analogous to OpenVINO – you work at the model level, not the RTL level.

Xilinx’s solution also integrates with frameworks: for example, you can deploy a TensorFlow model on an edge FPGA (like the Kria SOM) using Vitis AI with only minor modifications to your code. Similarly, Lattice provides its sensAI software stack for its small FPGAs, including a neural network compiler and runtime for low-power inference. These tools often come with reference designs – e.g., demo projects for object detection or keyword spotting – that you can use as a starting point.

In summary, getting started with FPGA development for AI no longer means “learn VHDL and design a processor from scratch.” Instead, you can leverage high-level workflows:

Model Conversion/Quantization: Convert or quantize your model to a format suitable for FPGA (OpenVINO’s Model Optimizer, or Xilinx’s quantizer).

Compilation to FPGA bitstream: Use a toolchain (Intel FPGA AI Suite or Xilinx Vitis AI) that takes the optimized model and generates an FPGA configuration (bitstream or firmware) or configures an existing NN accelerator IP.

Deployment & Runtime: Use a runtime API (OpenVINO Inference Engine, Xilinx’s Vitis AI Runner) in your application to load the model onto the FPGA and execute inferences, similar to how you would use TensorFlow Serving or TensorRT for GPUs.
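To give a feel for what the quantization step in the workflow above does to the numbers, here is a minimal sketch of symmetric per-tensor INT8 quantization in plain Python. It is a generic textbook scheme, not the exact algorithm used by OpenVINO or Vitis AI, and the function names are illustrative.

```python
def quantize_int8(values):
    """Symmetric per-tensor INT8 quantization of a non-empty list of floats.

    Returns (quantized_ints, scale) such that value is approximately
    q * scale, with every q in the range [-127, 127].
    """
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Map INT8 codes back to approximate float values."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.0, 1.27]
    q, scale = quantize_int8(weights)
    print(q)                      # [50, -127, 0, 127]
    print(dequantize(q, scale))   # close to the original weights
```

Each weight now fits in 8 bits instead of 32, and the rounding error per value is at most half of `scale`. Narrower values mean smaller multipliers and less on-chip memory per weight, which is where the FPGA speed and power gains from low precision come from.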

This flow is becoming increasingly streamlined. For example, there are cloud platforms and developer sandboxes (like the Intel DevCloud for FPGA) where you can upload a model and test it on real FPGA hardware through a web interface, without dealing with FPGA hardware setup locally.

Lowering the Learning Curve for ML Engineers

While the tools above make FPGA acceleration more reachable, there is still a learning curve for software engineers. Let’s address some of the challenges and resources to overcome them:

Challenges: The primary challenge is the mindset shift – FPGAs require thinking about parallelism, data movement, and resource constraints at a much lower level than typical software development. An ML engineer used to writing Python may not be comfortable with the idea of timing closure, LUT counts, or finite-state machines. Even with high-level tools, understanding what happens under the hood (e.g., how an operation gets implemented in hardware) can be important for optimizing performance. Another challenge is debugging and profiling on FPGAs – you can’t simply print intermediate tensors easily as in PyTorch; you often need to use vendor-specific debuggers or logic analyzers to peer into the hardware’s operation. Lastly, not all neural network operations or layers are supported equally on FPGA toolchains – if your model uses a very custom layer, the out-of-the-box IP might not handle it, forcing you to implement that part yourself. This fragmentation in supported ops can be frustrating.
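Since intermediate tensors are hard to inspect on hardware, one common workaround is to validate at the boundaries: run the same input through a trusted CPU reference and through the accelerator, then compare the outputs within a tolerance. The helper below is a hypothetical sketch of that comparison and assumes both outputs have already been flattened to lists of floats.

```python
def compare_outputs(reference, candidate, atol=1e-2):
    """Compare two flat sequences of floats element by element.

    Returns (max_abs_error, mismatches), where mismatches lists the indices
    whose absolute difference exceeds `atol`.
    """
    if len(reference) != len(candidate):
        raise ValueError("outputs differ in length")
    max_err = 0.0
    mismatches = []
    for i, (ref, got) in enumerate(zip(reference, candidate)):
        err = abs(ref - got)
        max_err = max(max_err, err)
        if err > atol:
            mismatches.append(i)
    return max_err, mismatches


if __name__ == "__main__":
    cpu_out = [0.10, 0.90]    # made-up reference scores
    fpga_out = [0.10, 0.80]   # made-up accelerator scores
    print(compare_outputs(cpu_out, fpga_out, atol=0.05))
```

The tolerance needs to account for quantization: an INT8 or FP16 accelerator will not match an FP32 reference bit for bit, so an exact equality check would report false failures.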

High-Level Abstractions: To mitigate these challenges, the FPGA industry is providing higher-level abstraction libraries and middleware. We’ve discussed OpenVINO and Vitis AI, which abstract at the level of whole models. Additionally, languages like OpenCL and SYCL (oneAPI) allow writing kernels for FPGAs in a C/C++ based language, which is then compiled to hardware. For example, Intel’s oneAPI DPC++ compiler can compile a parallel C++ program to run on an FPGA – you get to use a high-level language with parallel extensions, and the tool handles creating the logic. This is conceptually similar to writing CUDA C++ for GPUs. There are also domain-specific libraries: if you’re doing only linear algebra, BLAS-like libraries for FPGAs (such as the open-source FBLAS project) provide ready-made FPGA implementations of matrix operations. If you’re focused on inference, frameworks like hls4ml (an open-source project from CERN) can automatically convert small neural network models written in Python (Keras) into FPGA firmware using high-level synthesis. These abstractions mean you don’t have to design at the circuit level – you express the algorithm in a familiar form, and let compilers synthesize the hardware. The trade-off is usually some efficiency loss vs hand-tuned HDL, but for many, the convenience is worth it.

Resources and Learning: To get started, there are free training courses and community resources emerging. Intel and Xilinx both have developer hubs with tutorials – for instance, Intel’s FPGA Academic Program provides an AI Design using FPGAs course that covers the basics of OpenCL on FPGAs. Xilinx’s community forums and GitHub repositories have numerous reference designs (like how to run YOLOv3 on a Xilinx FPGA). Sites like FPGAdev.com or the r/FPGA subreddit have discussions aimed at newcomers. You’ll also find increasing content on Medium and personal blogs from ML engineers who ventured into FPGAs, sharing “How I sped up my CNN 3x with an FPGA” experiences. These can be very enlightening for practical tips. Moreover, academic collaborations are bridging the gap – for example, universities offering courses on AI hardware where students use high-level frameworks to deploy networks on real FPGA boards (often on cloud FPGA platforms so no physical device is needed).

One great way to learn is to experiment with a starter FPGA kit that supports the high-level flow. For under $200, you can get boards like the Intel OpenVINO Starter Kit or a Xilinx PYNQ board. PYNQ in particular is interesting – it allows you to program a Xilinx FPGA using Python, by abstracting the FPGA logic as callable Python functions running on an embedded ARM processor. This kind of environment can be very inviting to someone with a Python/AI background, since you can treat the FPGA as a Python accelerator library.

In short, the ecosystem is growing to “make FPGAs friendlier”. High-level synthesis, AI-specific compilers, and extensive documentation are demystifying FPGA development. As a result, we’re seeing a new generation of AI engineers who, without years of hardware design experience, can still leverage the power of FPGA acceleration. The learning curve, while still present, is continually lowering. Engineers can start by accelerating a small part of a pipeline on FPGA (e.g. offload just a convolution layer via OpenCL), and gradually learn to map more of the model as they become comfortable. With persistent community and vendor support, using FPGAs for AI may soon feel as natural as using GPUs – giving practitioners another powerful tool in their arsenal for building efficient AI systems.

Conclusion: FPGAs offer a compelling complement to CPUs and GPUs in the AI/ML workflow. They bring the customizability of hardware to the masses – allowing AI models to run on circuits tailored exactly to their needs, which can mean faster, leaner, and more power-efficient AI applications. Intel’s and AMD’s latest toolchains have made it easier than ever to integrate FPGAs into familiar ML pipelines, and real-world successes in vehicles, healthcare, and edge devices prove the technology’s value. For AI engineers with no prior FPGA experience, the key takeaway is that FPGA acceleration is approachable and worth exploring, especially when your application demands that extra bit of performance or efficiency that general-purpose hardware can’t provide. With the rich set of resources and frameworks now available, the once steep learning curve of FPGAs is flattening. That opens the coming wave of heterogeneous computing, where FPGAs play an integral role alongside GPUs and CPUs in advancing AI, to a broader community of engineers.

FPGAs Part III: Computer vision pipeline

Sources:

E. Nurvitadhi et al., “Accelerating Binarized Neural Networks: Comparison of FPGA, CPU, GPU, and ASIC,” Proc. Intl. Conf. on Field-Programmable Technology (FPT), 2016 – FPGA’s reconfigurability offers high performance and low power for binary neural nets.

S. K. Kim et al., “Real-time Data Analysis for Medical Diagnosis using FPGA-accelerated Neural Networks,” International Conference on Computational Approaches for Cancer (IEEE, 2017) – FPGA can directly interface with sensors and provide 144× speedup over CPU (21× over GPU) in a cancer detection MLP, enabling real-time analysis during procedures.

K. Guo et al., “A Survey of FPGA-Based Neural Network Inference Accelerators,” ACM TRETS, vol. 9, no. 4, 2017 – FPGAs can surpass GPUs in energy efficiency (10–100 GOP/J) but typically lag in absolute speed; lack of high-level tools was a noted challenge.

F. Yan et al., “A Survey on FPGA-based Accelerators for Machine Learning,” arXiv:2412.15666, 2024 – Highlights that ~81% of recent research focuses on inference acceleration on FPGAs, with CNNs dominating; emphasizes low-latency and efficiency as main reasons for FPGA use in ML.

Xilinx Inc., Xilinx Claims FPGA vs. GPU Lead, Oct. 2018 – Press release claiming Alveo FPGA cards deliver 4× the throughput of high-end GPUs for sub-2ms low-latency inference, and 3× lower latency in real-time AI workloads.

Intel Corp., Compare Benefits of CPUs, GPUs, and FPGAs for oneAPI Workloads, Intel.com, 2021 – Describes how FPGAs are reconfigurable hardware allowing custom data paths, and notes they can eliminate I/O bottlenecks by ingesting data directly from sources.

IBM, “FPGA vs. GPU for Deep Learning Applications,” IBM Developer Blog, 2019 – Discusses trade-offs; notes GPUs offer ease-of-use and high peak FLOPs, whereas FPGAs offer flexibility and deterministic performance, often winning on specific metrics (power, latency).

A. Boutros et al., “Beyond Peak Performance: Comparing the Real Performance of AI-Optimized FPGAs and GPUs,” Proc. IEEE FPT, 2020 – Detailed benchmark of Intel Stratix 10 NX vs. NVIDIA T4/V100 GPUs: FPGA achieved up to 24× speedup at batch-6 and maintained 2–5× at batch-32, with 10× lower latency in a streaming RNN scenario thanks to 100 Gbps network integration.

Intel OpenVINO Documentation, Intel Developer Zone, 2023 – Guide for deploying deep learning models on Intel hardware. Explains model optimizer and inference engine workflow, including FPGA plugin support for Arria 10/Agilex FPGAs.

Intel FPGA AI Suite User Guide, Intel.com, 2022 – Describes tools for accelerating AI inference on FPGAs and how it interfaces with OpenVINO (providing templates for common networks and FPGA-optimized layers).

Lattice Semiconductor, “Lattice sensAI Stack – Bringing AI to the Edge,” LatticeSemi.com, 2024 – Describes Lattice’s low-power FPGA AI solution (under 1 mW to 5 W operation) and its end-to-end stack (model compiler, IP cores, reference designs) for edge AI in IoT devices.

A. Mouri Zadeh Khaki and A. Choi, “Optimizing Deep Learning Acceleration on FPGA for Real-Time Image Classification,” Applied Sciences, vol. 15, 2025 – Presents methods to optimize VGG16/VGG19 on FPGA and achieve real-time throughput with resource-efficient design; demonstrates techniques like loop unrolling and quantization for FPGA efficiency.

Fidus Systems, “The Role of FPGAs in AI Acceleration,” Fidus Tech Blog, 2023 – Industry blog noting FPGAs can be tuned for specific workloads (e.g. tenfold performance gain on convolution vs GPU by customizing logic) and citing 50% lower power usage in certain AI tasks.

Aldec, “FPGAs vs GPUs for Machine Learning: Which is Better?,” Aldec Blog, 2020 – Summarizes research: Nvidia Tesla P40 vs Xilinx FPGA had similar compute throughput, but FPGA had far more on-chip memory, reducing external memory bottlenecks; also cites a Microsoft study where an FPGA was ~10× more power efficient than a GPU for image recognition, and flexibility of FPGAs to support arbitrary numeric precision as an advantage.

M. Vaithianathan et al., “Real-Time Object Detection and Recognition in FPGA-Based Autonomous Driving Systems,” IEEE Int. Conf. on Consumer Electronics, 2024 – Demonstrates an FPGA accelerator for YOLO object detection running in an autonomous vehicle setup, achieving real-time (30+ FPS) inference with significantly lower latency than an equivalent GPU implementation, validating FPGAs for ADAS applications.

Written by

Omar Morales

Driving AI Innovation, Cloud Observability, and Scalable Infrastructure - Omar Morales is a Machine Learning Engineer and SRE Leader with over a decade of experience bridging AI-driven automation with large-scale cloud infrastructure. His work has been instrumental in optimizing observability, predictive analytics, and system reliability across multiple industries, including logistics, geospatial intelligence, and enterprise cloud services. Omar has led ML and cloud observability initiatives at Sysco LABS, where he has integrated Datadog APM for performance monitoring and anomaly detection, cutting incident resolution times and improving SLO/SLI compliance. His work in infrastructure automation has reduced cloud provisioning time through Terraform and Kubernetes, making deployments more scalable and resilient. Beyond Sysco LABS, Omar co-founded SunCity Greens, a small and local AI-powered agriculture and supply chain analytics indoor horticulture farm that leverages predictive modeling to optimize farm-to-market logistics serving farm-to-table chefs and eateries. His AI models have successfully increased crop yield efficiency by 30%, demonstrating the real-world impact of machine learning on localized supply chains. Prior to these roles, Omar worked as a Geospatial Applications Analyst Tier 2 at TELUS International, where he developed predictive routing models using TensorFlow and Google Maps API, reducing delivery times by 20%. He also has a strong consulting background, where he has helped multiple enterprises implement AI-driven automation, real-time analytics, ETL batch processing, and big data pipelines. Omar holds multiple relevant certifications and has completed his Postgraduate Certificate (PGC) in AI & Machine Learning from the Texas McCombs School of Business. He is deeply passionate about AI innovation, system optimization, and building highly scalable architectures that drive business intelligence and automation. 
When he’s not working on AI/ML solutions, Omar enjoys virtual reality sim racing, amateur astronomy, and building custom PCs.