Understanding EMR Architecture: Key Components, Configuration Options, and Scaling Strategies

Mohammad Arsalan


Introduction to EMR Architecture

Amazon EMR (originally Elastic MapReduce) is a managed AWS (Amazon Web Services) service for processing large volumes of data quickly and cost-effectively. It distributes processing across a scalable cluster of virtual machines (EC2 instances), which makes it a widely used tool for big data processing, data analysis, and machine learning tasks.

Key Purposes and Use Cases of Amazon EMR:

Big Data Processing:

EMR is designed to run distributed data processing frameworks such as Apache Hadoop and Apache Spark. These frameworks split work into tasks that run in parallel across many EC2 instances, which lets them process datasets up to petabyte scale.

Common use cases include batch processing, data transformation, and machine learning tasks at scale.

Data Storage and Analysis with S3 and HDFS:

EMR can leverage Amazon S3 as a storage system for input and output data. This integration makes it easy to manage large datasets stored in S3 while processing them using distributed computing on EMR.

It also supports HDFS (Hadoop Distributed File System) if you want to store data locally within the EMR cluster.

Cost Optimization with On-Demand and Spot Instances:

EMR allows you to scale your clusters up or down based on demand. You can provision clusters with a mix of On-Demand and Spot Instances, balancing cost against the risk of interruption.

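Spot Instances let you use unused EC2 capacity at a lower price, which can significantly reduce costs for data processing jobs. The trade-off is that AWS can reclaim Spot capacity with a two-minute warning, so Spot Instances suit work that tolerates interruption.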
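As a sketch of how such a mix might be expressed, the function below builds an `InstanceGroups` list in the shape that boto3's EMR `run_job_flow` call accepts inside its `Instances` parameter. It keeps the primary and core nodes On-Demand and places task nodes on Spot. The function name and the `m5.xlarge` default are illustrative assumptions, and the code only assembles the request; it does not call AWS.

```python
def build_instance_groups(core_count, task_count, instance_type="m5.xlarge"):
    """Return an InstanceGroups list for the Instances parameter of run_job_flow.

    instance_type is an illustrative default, not a recommendation.
    """
    groups = [
        # One On-Demand primary node: in a single-primary cluster, losing it ends the cluster.
        {"Name": "Primary", "InstanceRole": "MASTER", "Market": "ON_DEMAND",
         "InstanceType": instance_type, "InstanceCount": 1},
        # Core nodes hold HDFS blocks, so they stay On-Demand as well.
        {"Name": "Core", "InstanceRole": "CORE", "Market": "ON_DEMAND",
         "InstanceType": instance_type, "InstanceCount": core_count},
    ]
    if task_count > 0:
        # Task nodes store no HDFS data, so a Spot interruption mainly costs
        # recomputation, which makes them the natural place for Spot capacity.
        groups.append({"Name": "Task", "InstanceRole": "TASK", "Market": "SPOT",
                       "InstanceType": instance_type, "InstanceCount": task_count})
    return groups


if __name__ == "__main__":
    for group in build_instance_groups(core_count=2, task_count=4):
        print(group["InstanceRole"], group["Market"], group["InstanceCount"])
```

Passing the result as `Instances={"InstanceGroups": ...}` (along with the other required settings) to `boto3.client("emr").run_job_flow(...)` would request such a cluster. Keeping Spot on task nodes only means an interruption can slow a job down but does not take HDFS data with it.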

Real-time Stream Processing:

With Spark Streaming (or Spark Structured Streaming) consuming data from sources such as Apache Kafka, EMR can process data in near real time, making it suitable for use cases like log analysis, clickstream analysis, and IoT data processing, where timely insights are critical.

Machine Learning at Scale:

EMR supports frameworks like Apache Spark MLlib, TensorFlow, and other machine learning libraries to process large datasets and build machine learning models in a distributed environment.

Using EMR for machine learning allows businesses to handle large volumes of data and perform model training and inference at scale.

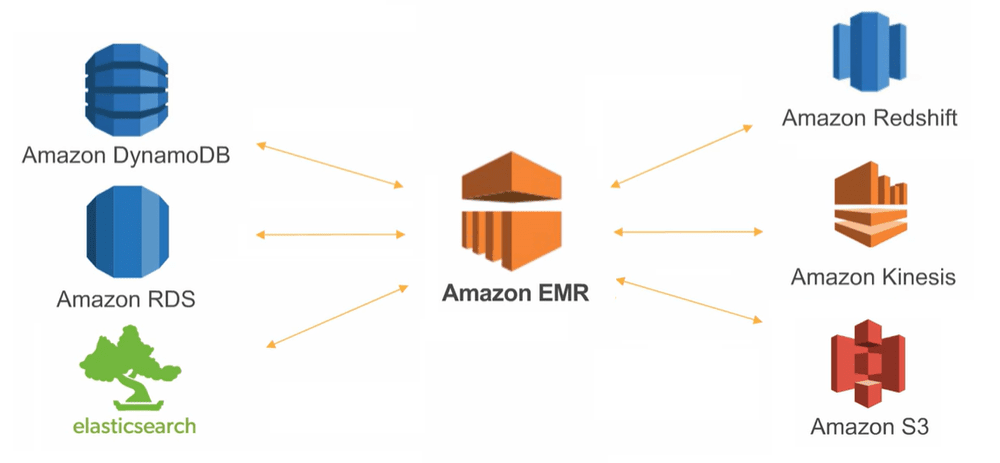

Easy Integration with AWS Services:

EMR integrates with other AWS services, such as AWS Lambda, AWS Glue, Amazon RDS, and Amazon Redshift, making it easier to orchestrate end-to-end data processing pipelines.

It also integrates with Amazon CloudWatch for monitoring and AWS IAM for access control and security.

Fault Tolerance and Scalability:

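EMR is built for fault tolerance. When a task fails, the processing framework (for example YARN or Spark) retries it, often on a different instance, and EMR monitors node health and can replace failed instances. By default a cluster has a single primary node, which is a single point of failure; for high availability, EMR can launch clusters with three primary nodes.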

The service is also scalable, allowing users to increase or decrease the number of instances in the cluster as per their workload requirements.
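The task-retry behaviour described above is easiest to see in a toy model. The scheduler below is hypothetical and is not EMR, YARN, or Spark code: it assigns tasks to workers round-robin and, when the chosen worker is in the `failed_workers` set, retries the task on the next worker, giving up after `max_attempts`. In a real cluster the limit comes from framework settings such as Spark's `spark.task.maxFailures` (default 4).

```python
import itertools


def run_with_retries(tasks, workers, failed_workers, max_attempts=4):
    """Toy scheduler: place each task on the next worker in round-robin order.

    If the chosen worker is in failed_workers (standing in for a lost instance),
    the attempt fails and the task is retried on the next worker, up to
    max_attempts attempts. Returns {task: (worker, attempt_number)}.
    """
    worker_cycle = itertools.cycle(workers)
    placement = {}
    for task in tasks:
        for attempt in range(1, max_attempts + 1):
            worker = next(worker_cycle)
            if worker not in failed_workers:
                placement[task] = (worker, attempt)
                break
        else:
            # Every attempt landed on a failed worker, so the job would fail here.
            raise RuntimeError(f"{task} failed after {max_attempts} attempts")
    return placement


if __name__ == "__main__":
    result = run_with_retries(["t0", "t1", "t2"], ["core-1", "core-2", "task-1"], {"core-2"})
    print(result)  # t1 first lands on core-2, then succeeds on task-1 at attempt 2
```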

Simplified Cluster Management:

EMR removes most of the manual infrastructure work: it provisions the EC2 instances and installs and configures the applications you select (such as Hadoop and Spark), letting users focus on their data processing and analysis tasks.

It supports automatic scaling (EMR managed scaling, or custom automatic scaling policies for instance groups), so clusters can expand or shrink based on workload demands.
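With EMR managed scaling, the limits are expressed as a `ComputeLimits` structure passed to boto3's `put_managed_scaling_policy`. The builder below is a minimal sketch that only assembles and sanity-checks that dictionary. The validation rules are assumed sanity checks, not a copy of the service's own validation, and the function name is illustrative.

```python
def managed_scaling_policy(min_units, max_units, max_on_demand=None,
                           max_core=None, unit_type="Instances"):
    """Build the ManagedScalingPolicy argument for EMR's put_managed_scaling_policy.

    unit_type is one of "Instances", "InstanceFleetUnits", or "VCPU".
    """
    # Assumed sanity checks: a positive minimum that does not exceed the maximum.
    if min_units < 1 or min_units > max_units:
        raise ValueError("need 1 <= min_units <= max_units")
    limits = {
        "UnitType": unit_type,
        "MinimumCapacityUnits": min_units,
        "MaximumCapacityUnits": max_units,
    }
    # Optional caps on how much of the maximum may be On-Demand or core capacity.
    for key, value in (("MaximumOnDemandCapacityUnits", max_on_demand),
                       ("MaximumCoreCapacityUnits", max_core)):
        if value is not None:
            if value > max_units:
                raise ValueError(f"{key} cannot exceed MaximumCapacityUnits")
            limits[key] = value
    return {"ComputeLimits": limits}


if __name__ == "__main__":
    print(managed_scaling_policy(2, 10, max_on_demand=4, max_core=3))
```

Usage would look like `boto3.client("emr").put_managed_scaling_policy(ClusterId=cluster_id, ManagedScalingPolicy=managed_scaling_policy(2, 10, max_on_demand=4))`, where `cluster_id` is a placeholder for your cluster's ID. Capping On-Demand units below the maximum leaves the rest of the scale-out range to Spot capacity.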

Key integration options that EMR offers:

Amazon S3 (Simple Storage Service)

Amazon RDS (Relational Database Service)

Amazon Redshift

Amazon DynamoDB

AWS Lambda

Amazon CloudWatch

AWS Glue

Amazon Kinesis

Amazon OpenSearch Service (formerly Amazon Elasticsearch Service)

Apache HBase (runs on the EMR cluster itself)

Amazon SageMaker

Apache Kafka

Apache Hive and Apache HCatalog

AWS IAM (Identity and Access Management)

AWS Data Pipeline

AWS Step Functions

Third-Party Tools (e.g., Jupyter Notebooks, Apache Zeppelin)

The Building Blocks: Primary, Core, and Task Nodes

The building blocks of Amazon EMR (Elastic MapReduce) represent the key components that make up an EMR cluster. These components work together to process large-scale data efficiently and cost-effectively.

Let's dive deeper into each of these building blocks:

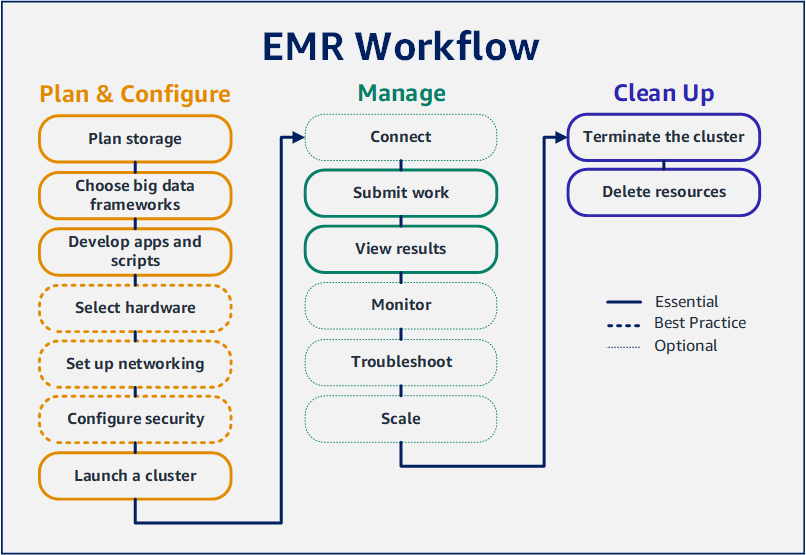

Cluster

Definition: An EMR cluster is a collection of Amazon EC2 instances that work together to process and analyze large datasets. A cluster can consist of different types of nodes (primary, core, task nodes) that serve various purposes.

Cluster Setup: When creating an EMR cluster, you choose the number and type of EC2 instances for each node type, configure software (like Hadoop or Spark), and select additional options such as storage and scaling methods.

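Cluster Lifecycle: You can launch a cluster whenever you need one and control its whole lifecycle, including scaling, termination, and instance configuration. A transient cluster runs a set of steps and then terminates automatically, while a long-running cluster stays up for interactive or recurring work.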
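To make the lifecycle choice concrete, the sketch below assembles keyword arguments for boto3's EMR `run_job_flow` call. Setting `KeepJobFlowAliveWhenNoSteps` to `False` gives a transient cluster that terminates after its steps, while `True` keeps a long-running cluster up. The cluster name, release label, instance type, and script path are placeholders, and the role names assume the defaults created by `aws emr create-default-roles`.

```python
def build_cluster_request(name, release_label, applications, core_count,
                          transient, step_script=None, instance_type="m5.xlarge"):
    """Assemble keyword arguments for boto3's EMR run_job_flow call (placeholder values)."""
    request = {
        "Name": name,
        "ReleaseLabel": release_label,  # an "emr-x.y.z" label from the EMR release guide
        "Applications": [{"Name": app} for app in applications],
        "Instances": {
            "MasterInstanceType": instance_type,  # primary node
            "SlaveInstanceType": instance_type,   # core nodes
            "InstanceCount": 1 + core_count,      # one primary node plus the core nodes
            # False: terminate once all steps finish (transient cluster).
            # True: stay up and wait for more work (long-running cluster).
            "KeepJobFlowAliveWhenNoSteps": not transient,
        },
        "JobFlowRole": "EMR_EC2_DefaultRole",  # instance profile used by the EC2 nodes
        "ServiceRole": "EMR_DefaultRole",      # role EMR assumes to manage the cluster
    }
    if step_script is not None:
        request["Steps"] = [{
            "Name": "spark-step",
            # A transient cluster has nothing else to do if its only step fails.
            "ActionOnFailure": "TERMINATE_CLUSTER" if transient else "CONTINUE",
            "HadoopJarStep": {
                "Jar": "command-runner.jar",
                "Args": ["spark-submit", step_script],
            },
        }]
    return request
```

Calling `boto3.client("emr").run_job_flow(**build_cluster_request(...))` would submit the request and return a response containing the new cluster's `JobFlowId`. Keeping the request as a plain dictionary makes it easy to inspect or test before anything is launched.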

Nodes

The fundamental unit of an EMR cluster is the node: an EC2 instance that plays a specific role in the cluster. There are three types of nodes in an EMR cluster:

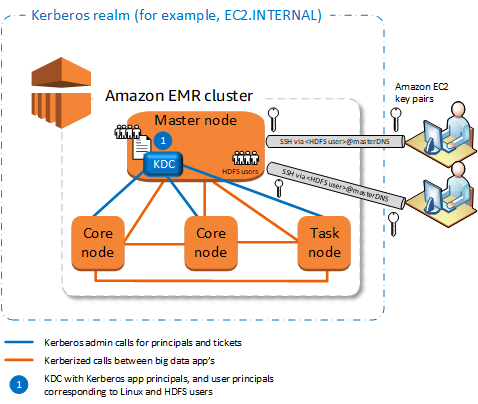

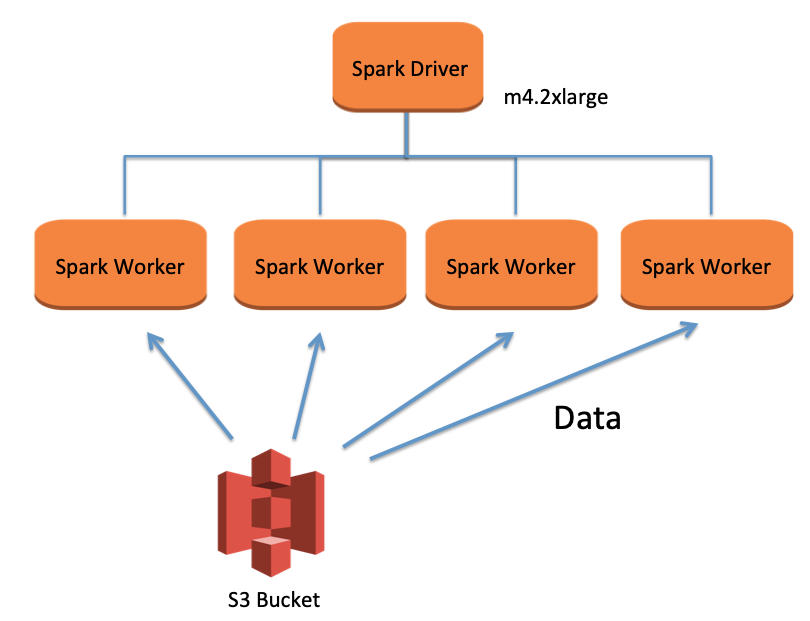

Primary Node (Master Node):

Role: The primary node is responsible for managing the cluster's overall operation. It runs coordinating services such as the YARN ResourceManager and the HDFS NameNode, which decide where work runs and where data is stored across the cluster. When a Spark application runs in client mode, its driver also runs here.

Responsibilities: It tracks the health of the other nodes, schedules jobs, and monitors their execution across the cluster. It also holds the cluster configuration and the HDFS metadata that records which data blocks live on which core nodes.

Core Nodes:

Role: Core nodes perform the actual data processing. They run the worker tasks (such as mappers and reducers in Hadoop) and store the data in the cluster's HDFS (Hadoop Distributed File System).

Responsibilities: These nodes handle both computation and data storage, and every multi-node cluster has at least one core node. Because core nodes hold HDFS blocks, losing one reduces capacity and can lose any HDFS data that was not replicated to another node.

Task Nodes (Optional):

Role: Task nodes are optional nodes that provide additional computational resources for running jobs such as MapReduce or Spark. They don't run an HDFS DataNode, so they store no HDFS data and act purely as extra compute capacity.

Responsibilities: Task nodes only run computations and are transient, meaning they can be added or removed dynamically from the cluster to handle fluctuations in workload or processing capacity.

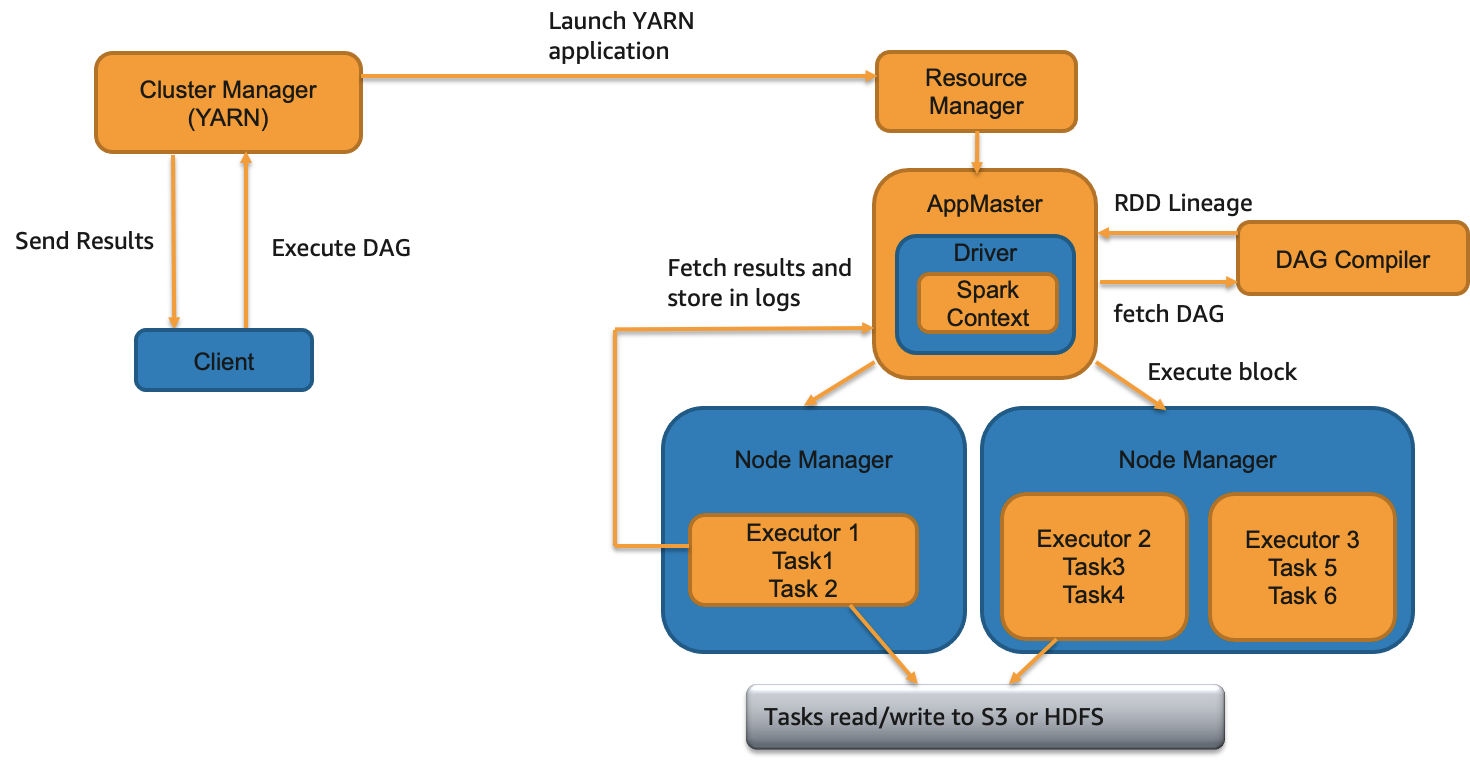
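The split of responsibilities above follows from which Hadoop daemons each node type runs. The mapping below is a simplified model of a multi-node cluster (a single-node cluster runs everything on the primary node), and the helper names are illustrative.

```python
# Simplified: which Hadoop daemons run on each node type in a multi-node EMR cluster.
NODE_SERVICES = {
    "primary": {"YARN ResourceManager", "HDFS NameNode"},
    "core": {"YARN NodeManager", "HDFS DataNode"},
    "task": {"YARN NodeManager"},
}


def stores_hdfs_data(node_type):
    """A node holds HDFS blocks only if it runs an HDFS DataNode."""
    return "HDFS DataNode" in NODE_SERVICES[node_type]


def runs_containers(node_type):
    """A node hosts YARN containers (e.g. Spark executors) only if it runs a NodeManager."""
    return "YARN NodeManager" in NODE_SERVICES[node_type]


if __name__ == "__main__":
    for node_type in NODE_SERVICES:
        print(node_type, "stores HDFS data:", stores_hdfs_data(node_type),
              "| runs containers:", runs_containers(node_type))
```

Read this way, task nodes are safe to add and remove because they carry a NodeManager (compute) but no DataNode (storage).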

Executors

When you run a Spark job on Amazon EMR, its executors run in YARN containers on the core nodes (and on task nodes, if present). Here's how it fits into the EMR ecosystem:

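The Primary node (Master node) runs the YARN ResourceManager, which grants the containers that executors run in. The Spark driver, which runs on the primary node in client mode or inside a YARN container in cluster mode, assigns tasks to those executors.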

The Core nodes run executors, which execute the tasks that make up the Spark job.

If Task nodes are used, they also run executors to provide additional computational capacity when needed.
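How many executors a core or task node can host depends on the memory and vcores YARN offers on that node. The estimate below is a sketch that uses Spark's default executor memory overhead on YARN (10% of executor memory, at least 384 MiB). The node capacities in the example are hypothetical, the code ignores YARN's rounding of requests up to its minimum allocation, and the vcore limit only binds when the YARN scheduler accounts for CPU (for example with `DominantResourceCalculator`).

```python
def executors_per_node(yarn_memory_mb, yarn_vcores, executor_memory_mb, executor_cores):
    """Estimate how many Spark executors fit on one core or task node."""
    # Spark's default overhead on YARN: 10% of executor memory, minimum 384 MiB.
    overhead_mb = max(384, int(0.10 * executor_memory_mb))
    container_mb = executor_memory_mb + overhead_mb  # what each executor asks YARN for
    by_memory = yarn_memory_mb // container_mb
    by_cores = yarn_vcores // executor_cores  # only binding if YARN schedules on CPU too
    # The tighter of the two limits decides how many executors fit.
    return min(by_memory, by_cores)


if __name__ == "__main__":
    # Hypothetical node: 24 GiB and 8 vcores available to YARN; 4 GiB, 2-core executors.
    print(executors_per_node(24576, 8, 4096, 2))  # 4: vcores allow 4, memory alone allows 5
```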

HDFS (Hadoop Distributed File System)

Definition: HDFS is Hadoop's distributed file system for storing large datasets across multiple nodes; Spark and other frameworks on the cluster can read and write it as well.

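Role in EMR: EMR runs HDFS on the core nodes so that data can be processed in parallel close to where it is stored; Amazon S3 (accessed through EMRFS) is the usual alternative. HDFS is designed for high throughput and fault tolerance: each block can be replicated to several core nodes, although EMR uses a lower default replication factor on small clusters. HDFS storage is ephemeral, so its data is lost when the cluster terminates.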
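To see what replication means for capacity, the sketch below encodes the default `dfs.replication` values described in the EMR documentation (1 for fewer than four core nodes, 2 for fewer than ten, 3 otherwise; worth re-checking for your release) and divides raw disk by that factor. Disk sizes are hypothetical, and non-HDFS disk use is ignored.

```python
def default_replication(core_nodes):
    """Default dfs.replication on EMR by core-node count (verify for your release)."""
    if core_nodes < 4:
        return 1  # no HDFS redundancy on small clusters
    if core_nodes < 10:
        return 2
    return 3


def usable_hdfs_gb(core_nodes, disk_gb_per_node):
    """Raw HDFS disk across all core nodes divided by the replication factor."""
    return core_nodes * disk_gb_per_node / default_replication(core_nodes)


if __name__ == "__main__":
    for n in (3, 6, 12):
        print(n, "core nodes ->", usable_hdfs_gb(n, 500), "GB usable")
```

With 500 GB per node, three core nodes and six core nodes both yield about 1,500 GB of usable HDFS, because the replication factor doubles at four nodes: the extra nodes buy redundancy rather than space.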

Amazon S3 (Simple Storage Service)

Definition: While HDFS is typically used for data storage within an EMR cluster, Amazon S3 is commonly used for long-term storage of input/output data.

Integration with EMR: You can use Amazon S3 to store data that is used for batch processing or streaming, as well as for storing the results of data processing jobs. It's a highly scalable and durable storage system, and EMR clusters can be configured to read and write data directly to/from S3.

Storage Flexibility: Compared with HDFS, S3 is more flexible and cost-efficient for large volumes of data: there is no local storage to manage, and the data remains available after the cluster terminates.

Resource Manager

Definition: The Resource Manager is the component of the cluster responsible for managing resources across the nodes. It allocates resources to the various tasks that need to run in the cluster.

Examples:

YARN (Yet Another Resource Negotiator): For managing resources in a Hadoop ecosystem, YARN is responsible for resource management and job scheduling.

Spark’s Driver Program: On EMR, Spark runs on YARN. The driver does not manage cluster resources itself; it requests executors from YARN and then schedules the application's tasks on them.
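The resource manager's core job, matching container requests against the free memory and vcores on each node, can be illustrated with a simplified first-fit allocator. This is not YARN's scheduling algorithm (YARN's schedulers also handle queues, priorities, and data locality), and the node names and sizes are hypothetical. Only core and task nodes appear as candidates, because in a multi-node EMR cluster the primary node runs no NodeManager and hosts no containers.

```python
def allocate_containers(requests, nodes):
    """Simplified first-fit placement of container requests onto nodes.

    requests: list of (name, memory_mb, vcores) tuples, in arrival order.
    nodes: dict mapping node name -> (free_memory_mb, free_vcores).
    Returns {request name: node name}, or None for a request that must wait
    until resources free up.
    """
    free = {node: list(capacity) for node, capacity in nodes.items()}  # work on a copy
    placement = {}
    for name, memory_mb, vcores in requests:
        placement[name] = None
        for node, (mem_left, cores_left) in free.items():
            if mem_left >= memory_mb and cores_left >= vcores:
                free[node][0] -= memory_mb
                free[node][1] -= vcores
                placement[name] = node
                break
    return placement


if __name__ == "__main__":
    nodes = {"core-1": (8192, 4), "task-1": (8192, 4)}
    requests = [("app-master", 1024, 1)] + [(f"exec-{i}", 4096, 2) for i in range(1, 5)]
    print(allocate_containers(requests, nodes))  # exec-4 stays pending (None)
```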

Use cases for each node type

Primary Node (Master Node): The Primary Node is the central coordination unit of the EMR cluster. It stores no HDFS data blocks; instead, it manages the cluster's lifecycle, runs the resource management services, and schedules jobs and distributes tasks across the other nodes.

Core Node: Core Nodes handle both data storage and data processing, which makes them the heart of the EMR cluster. Each core node stores part of the data being processed in HDFS (Hadoop Distributed File System), and HDFS provides redundancy and fault tolerance by replicating data blocks to other core nodes when the replication factor is greater than 1.

Task Node: Task Nodes are optional nodes that add computational resources for running tasks without storing data. They are typically used to scale the cluster to computational needs and are usually added when the workload grows beyond what the core nodes can handle.

| Node Type | Role | Use Case | Storage | Compute |
| --- | --- | --- | --- | --- |
| Primary Node | Master node responsible for coordination, management, and resource allocation | Manages job scheduling and resource allocation; tracks cluster health; stores HDFS metadata | No HDFS data | Coordination only (manages resources) |
| Core Nodes | Responsible for both data processing and storage | Runs data processing tasks (MapReduce/Spark); stores data in HDFS | Yes (HDFS) | Yes (data processing) |
| Task Nodes | Provide additional compute capacity for processing tasks without storing data | Adds more processing power when the workload demands scaling; handles computation tasks only | No | Yes (data processing) |
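The rule of thumb in this section (add core nodes when you need more HDFS storage, task nodes when you only need more compute) can be written as a small planning helper. It is hypothetical and deliberately simple: `extra_hdfs_gb` is assumed to already include replication, every node is assumed to have the same disk and vcores, and real sizing would also weigh memory and Spot availability.

```python
import math


def plan_scale_out(extra_hdfs_gb, extra_vcores, node_disk_gb, node_vcores):
    """Hypothetical planner returning (core_nodes_to_add, task_nodes_to_add).

    Storage shortfalls need core nodes, because only core nodes hold HDFS data.
    Any compute still missing after that is covered with task nodes.
    """
    core = math.ceil(extra_hdfs_gb / node_disk_gb) if extra_hdfs_gb > 0 else 0
    # New core nodes bring vcores too, so count them before adding task nodes.
    remaining_vcores = max(0, extra_vcores - core * node_vcores)
    task = math.ceil(remaining_vcores / node_vcores)
    return core, task


if __name__ == "__main__":
    # 400 GB more HDFS and 30 more vcores, on nodes with 500 GB and 8 vcores each.
    print(plan_scale_out(400, 30, 500, 8))  # (1, 3)
```

For a purely compute-bound backlog, the helper adds only task nodes, which matches their role as removable, storage-free capacity.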


Written by

Mohammad Arsalan


I am a Computer Science graduate and web developer.