Observability with OpenTelemetry and Grafana stack Part 4: Setting up metrics monitoring with Prometheus, Mimir, Grafana, and OpenTelemetry Collector

Driptaroop Das


Now that we have log aggregation and visualization in place, the rest of the observability stack is fairly easy to set up. In this part, we will set up metrics monitoring with Prometheus, Mimir, Grafana, and the OpenTelemetry Collector.

Prometheus

Prometheus is an open-source monitoring and alerting toolkit designed for reliability, scalability, and simplicity. It works by pulling ("scraping") metrics from instrumented services at regular intervals, storing them in a time-series database, and providing a query language (PromQL) to analyze the data.
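Here is a minimal sketch of a standalone Prometheus configuration that shows the pull model in practice. It is not part of this setup. The job name and the target `my-app:8080` are hypothetical. With this config, Prometheus would poll that target's `/metrics` path every 15 seconds and store each scrape as time-series samples.

```yaml
# Illustrative standalone prometheus.yml (not used in this setup).
global:
  scrape_interval: 15s               # how often each target is polled by default

scrape_configs:
  - job_name: 'my-app'               # hypothetical job name
    metrics_path: '/metrics'         # the default path, shown explicitly
    static_configs:
      - targets: ['my-app:8080']     # hypothetical host:port of an instrumented service
```

The OpenTelemetry Collector's Prometheus receiver, described below, accepts this same `scrape_configs` structure nested under its own `config` key.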

Prometheus is a popular choice for monitoring cloud-native applications and microservices due to its support for multi-dimensional data collection, powerful query language, and extensive ecosystem of exporters and integrations.

However, Prometheus is not optimally designed for long-term storage, because its local time-series database is limited to a single node. For these use cases, Prometheus can be paired with a long-term storage solution like Thanos, Cortex, or Mimir. In this guide, we will use Grafana Mimir. Mimir is an open-source, horizontally scalable long-term store for Prometheus metrics, and it can also run as a single monolithic process, which is how we run it here.
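If you did run a Prometheus server, pairing it with Mimir usually means adding a `remote_write` section that pushes every scraped sample to Mimir's push endpoint. The sketch below assumes Mimir is reachable at `mimir:9009`, as in the compose setup later in this post.

```yaml
# Illustrative only: how a Prometheus server would forward samples to Mimir.
remote_write:
  - url: http://mimir:9009/api/v1/push   # Mimir's Prometheus remote-write endpoint
```

This post does not use that path. The collector exports to Mimir over OTLP instead.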

To scrape the metrics from the services, we will use the OpenTelemetry Collector. Its Prometheus receiver accepts standard Prometheus scrape_configs, so it can act as a drop-in replacement for Prometheus when it comes to scraping your services.

In fact, we will not run a Prometheus server at all. The OpenTelemetry Collector scrapes the metrics from the services and exports them to Mimir for storage, and Grafana then visualizes them.

Now, the system should look like this (apologies for the horrible component diagram, but plantuml is failing me):

Setting up OpenTelemetry Collector for metrics

We will be using the Prometheus receiver in the OpenTelemetry Collector to scrape the metrics from the services and export/store them to Mimir.

To do that, we need to update the otel collector config file. We add the Prometheus receiver, an OTLP/HTTP exporter that points at Mimir, and a metrics pipeline that connects them.

NOTE: the Prometheus metrics in the services are exposed at the /actuator/prometheus endpoint. Spring Boot Actuator and Micrometer already take care of this.
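If a service does not expose this endpoint yet, a typical Spring Boot setup needs two things: the `micrometer-registry-prometheus` dependency on the classpath, and an actuator exposure setting. Below is a minimal `application.yaml` sketch. The exact list of exposed endpoints is an assumption, so adjust it to what you want to expose.

```yaml
# Sketch of the actuator settings that typically expose /actuator/prometheus.
management:
  endpoints:
    web:
      exposure:
        include: health,prometheus   # exposes /actuator/health and /actuator/prometheus
```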

```yaml
receivers:
  otlp:
    protocols:
      http:
        endpoint: "0.0.0.0:4318"
  prometheus: # new receiver for scraping metrics from the services
    config:
      scrape_configs:
        - job_name: 'mimir'
          scrape_interval: 5s
          static_configs:
            - targets: ['mimir:9009']
              labels:
                group: 'infrastructure'
                service: 'mimir'
        - job_name: 'user-service'
          scrape_interval: 5s
          metrics_path: '/actuator/prometheus'
          static_configs:
            - targets: ['user-service:8080']
              labels:
                group: 'service'
                service: 'user-service'
        - job_name: 'account-service'
          scrape_interval: 5s
          metrics_path: '/actuator/prometheus'
          static_configs:
            - targets: [ 'account-service:8080' ]
              labels:
                group: 'service'
                service: 'account-service'
        - job_name: 'transaction-service'
          scrape_interval: 5s
          metrics_path: '/actuator/prometheus'
          static_configs:
            - targets: [ 'transaction-service:8080' ]
              labels:
                group: 'service'
                service: 'transaction-service'
        - job_name: 'notification-service'
          scrape_interval: 5s
          metrics_path: '/actuator/prometheus'
          static_configs:
            - targets: [ 'notification-service:8080' ]
              labels:
                group: 'service'
                service: 'notification-service'
        - job_name: 'auth-server'
          scrape_interval: 5s
          metrics_path: '/actuator/prometheus'
          static_configs:
            - targets: [ 'auth-server:9090' ]
              labels:
                group: 'service'
                service: 'auth-server'
        - job_name: 'grafana'
          static_configs:
            - targets: [ 'grafana:3000' ]
              labels:
                service: 'grafana'
                group: 'infrastructure'

processors:
  batch:

exporters:
  otlphttp/mimir:
    endpoint: http://mimir:9009/otlp
  otlphttp/loki:
    endpoint: http://loki:3100/otlp

service:
  pipelines:
    metrics: # new pipeline for metrics
      receivers: [otlp, prometheus]
      processors: [batch]
      exporters: [otlphttp/mimir]
    logs:
      receivers: [otlp]
      processors: [batch]
      exporters: [otlphttp/loki]
```

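This config defines the otel collector pipelines. Until now we only had the logs pipeline. The new metrics pipeline takes metrics from two sources: the OTLP receiver (pushed by the services) and the Prometheus receiver (scraped from the services). It batches them and exports them to Mimir over OTLP/HTTP.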
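While wiring this up, it can help to temporarily send the metrics pipeline to a second exporter so you can see what the collector actually receives. The sketch below assumes a collector version that ships the `debug` exporter; older releases called it `logging`. Only the keys that change are shown. They are meant to be merged into the existing `exporters` and `service` sections, not to replace them.

```yaml
# Sketch: add a debug exporter alongside Mimir (merge into the existing config).
exporters:
  debug:
    verbosity: detailed   # print received metrics to the collector's own stdout

service:
  pipelines:
    metrics:
      receivers: [otlp, prometheus]
      processors: [batch]
      exporters: [otlphttp/mimir, debug]   # each listed exporter receives the data
```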

Setting up Mimir

Mimir is the time-series database that will store our metrics long term. Setting it up is fairly easy: we just need to create a Mimir config file and add the Mimir service to the compose file.

Let's create the Mimir config file in a new directory called config/mimir and name it mimir.yaml.

```yaml
# For more information on this configuration, see the complete reference guide at
# https://grafana.com/docs/mimir/latest/references/configuration-parameters/

# Disable multi-tenancy and restrict to single tenant.
multitenancy_enabled: false

# The block storage configuration determines where the metrics TSDB data is stored.
blocks_storage:
  # Use the local filesystem for block storage.
  # Note: It is highly recommended not to use local filesystem for production data.
  backend: filesystem
  # Directory in which to store synchronised TSDB index headers.
  bucket_store:
    sync_dir: /tmp/mimir/tsdb-sync
  # Directory in which to store configuration for object storage.
  filesystem:
    dir: /tmp/mimir/data/tsdb
  # Directory in which to store TSDB WAL data.
  tsdb:
    dir: /tmp/mimir/tsdb

# The compactor block configures the compactor responsible for compacting TSDB blocks.
compactor:
  # Directory to temporarily store blocks undergoing compaction.
  data_dir: /tmp/mimir/compactor
  # The sharding ring type used to share the hashed ring for the compactor.
  sharding_ring:
    # Use memberlist backend store (the default).
    kvstore:
      store: memberlist

# The distributor receives incoming metrics data for the system.
distributor:
  # The ring to share hash ring data across instances.
  ring:
    # The address advertised in the ring. Localhost.
    instance_addr: 127.0.0.1
    # Use memberlist backend store (the default).
    kvstore:
      store: memberlist

# The ingester receives data from the distributor and processes it into indices and blocks.
ingester:
  # The ring to share hash ring data across instances.
  ring:
    # The address advertised in the ring. Localhost.
    instance_addr: 127.0.0.1
    # Use memberlist backend store (the default).
    kvstore:
      store: memberlist
    # Only run one instance of the ingesters.
    # Note: It is highly recommended to run more than one ingester in production, the default is an RF of 3.
    replication_factor: 1

# The ruler storage block configures ruler storage settings.
ruler_storage:
  # Use the local filesystem for block storage.
  # Note: It is highly recommended not to use local filesystem for production data.
  backend: filesystem
  filesystem:
    # The directory in which to store rules.
    dir: /tmp/mimir/rules

# The server block configures the Mimir server.
server:
  # Listen on port 9009 for all incoming requests.
  http_listen_port: 9009
  # Log messages at info level.
  log_level: info

# The store gateway block configures gateway storage.
store_gateway:
  # Configuration for the hash ring.
  sharding_ring:
    # Only run a single instance. In production setups, the replication factor must
    # be set on the querier and ruler as well.
    replication_factor: 1

# Global limits configuration.
limits:
  # A maximum of 100000 exemplars in memory at any one time.
  # This setting enables exemplar processing and storage.
  max_global_exemplars_per_user: 100000
  # Per-tenant ingestion rate limit, in samples per second.
  ingestion_rate: 30000
```

We can now add the Mimir service to the compose file.

```yaml
mimir:
  image: grafana/mimir:2.15.0
  command: [ "-ingester.native-histograms-ingestion-enabled=true", "-config.file=/etc/mimir.yaml" ]
  volumes:
    - "./config/mimir/mimir.yaml:/etc/mimir.yaml"
  profiles:
    - grafana
```
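With this config, Mimir writes all its data under `/tmp/mimir` in the container's own filesystem. That data does not survive the container being recreated, for example by the `--force-recreate` flag used when starting the stack. If you want metrics to persist across restarts, one option is a named volume. In the sketch below, the volume name `mimir-data` is hypothetical.

```yaml
# Sketch: persist Mimir's data directory in a named volume.
services:
  mimir:
    # image, command, and profiles stay as shown above
    volumes:
      - "./config/mimir/mimir.yaml:/etc/mimir.yaml"
      - "mimir-data:/tmp/mimir"   # blocks, WAL, compactor and rules directories

volumes:
  mimir-data:   # hypothetical named volume managed by Docker
```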

Setting up Grafana for metrics

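We just need to add the Mimir datasource to Grafana to visualize the metrics. Let's update the Grafana datasource config file in config/grafana and add the Mimir datasource. Mimir serves its Prometheus-compatible query API under the /prometheus prefix, which is why the datasource URL ends in /prometheus.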

```yaml
apiVersion: 1

datasources:
  - name: Mimir
    type: prometheus
    uid: mimir
    access: proxy
    orgId: 1
    url: http://mimir:9009/prometheus
    basicAuth: false
    isDefault: true
    version: 1
    editable: false
    jsonData:
      httpMethod: POST
      prometheusType: "Mimir"
      tlsSkipVerify: true
  - name: Loki
    type: loki
    uid: loki
    access: proxy
    url: http://loki:3100
    basicAuth: false
    isDefault: false
    version: 1
    editable: false
```

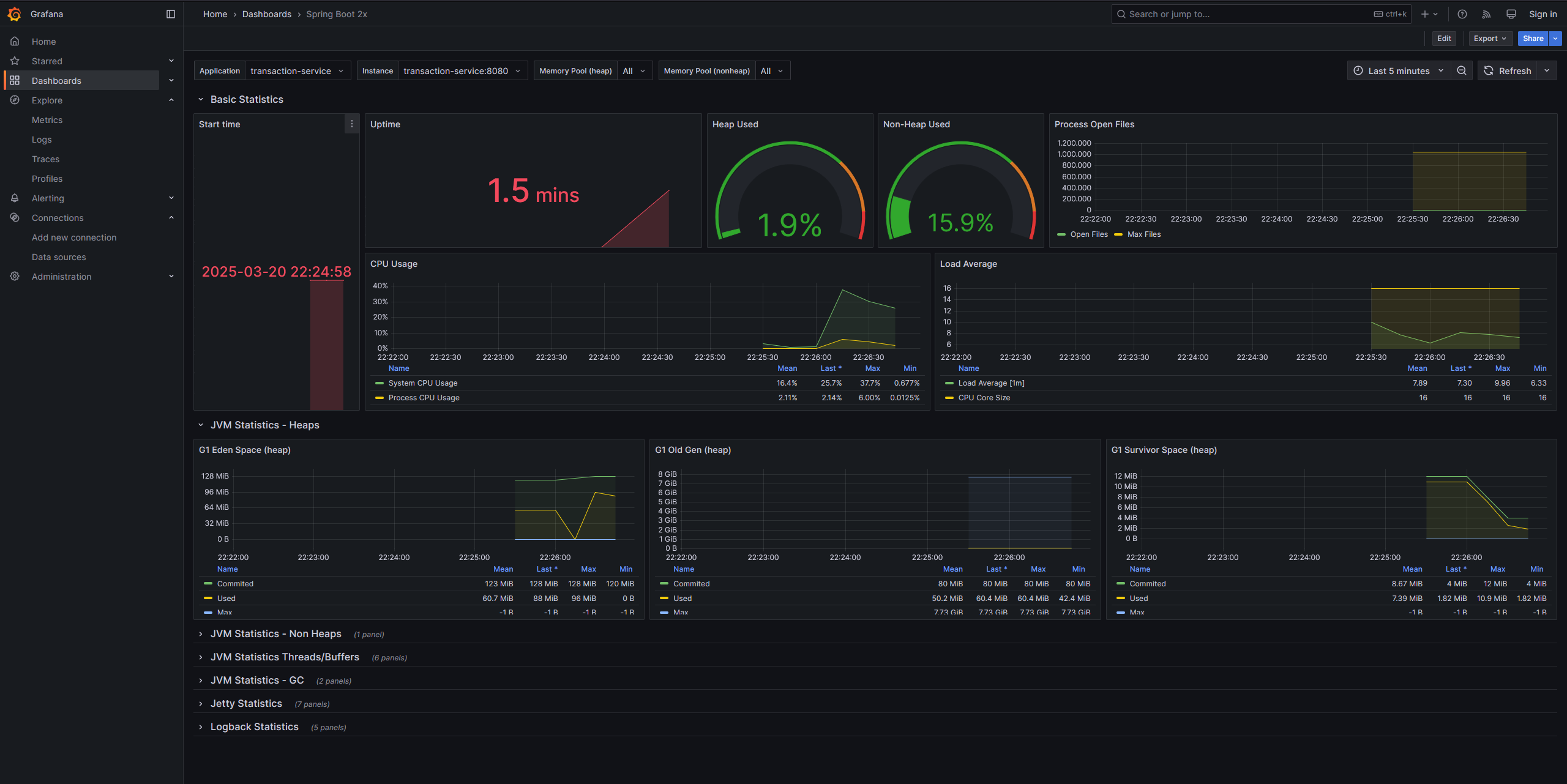

Adding a dashboard for the services in Grafana

We can also add a dashboard for the services in Grafana. Many pre-built dashboards are available for Grafana; you can use them or create your own. Once you have downloaded the dashboard JSON (either from the internet or my sample from here), mount it into the Grafana container and add a provisioning config so that Grafana loads it.

```yaml
grafana:
  image: grafana/grafana:11.5.2
  volumes:
    - "./config/grafana/provisioning:/etc/grafana/provisioning"
    - "./config/grafana/dashboard-definitions:/var/lib/grafana/dashboards"
    - "./ca.crt:/etc/ssl/certs/ca-certificates.crt"
  ports:
    - "3000:3000"
  environment:
    - GF_FEATURE_TOGGLES_ENABLE=flameGraph traceqlSearch correlations traceQLStreaming metricsSummary traceqlEditor traceToMetrics traceToProfiles datatrails
    - GF_INSTALL_PLUGINS=grafana-lokiexplore-app,grafana-exploretraces-app,grafana-pyroscope-app
    - GF_AUTH_ANONYMOUS_ENABLED=true
    - GF_AUTH_ANONYMOUS_ORG_ROLE=Admin
    - GF_AUTH_DISABLE_LOGIN_FORM=true
  depends_on:
    - loki
    - mimir
    - tempo
  profiles:
    - grafana
```

Add the Grafana dashboard definitions to the config/grafana/dashboard-definitions directory. Also add a dashboard provider config under config/grafana/provisioning/dashboards so that Grafana reads the dashboards from the mounted path.

./config/grafana/provisioning/dashboards/otel.yaml

```yaml
apiVersion: 1

providers:
  - name: 'otel'
    orgId: 1
    type: file
    disableDeletion: false
    editable: false
    updateIntervalSeconds: 10
    options:
      path: /var/lib/grafana/dashboards/
```

Test the setup

The only thing left to do is to change the Java agent configuration to export the metrics to the OpenTelemetry Collector. We can do that by adding the OTEL_METRICS_EXPORTER environment variable to the x-common-env-services anchored extension section in the compose file.

```yaml
x-common-env-services: &common-env-services
  SPRING_DATASOURCE_URL: jdbc:postgresql://db-postgres:5432/postgres
  SPRING_DATASOURCE_USERNAME: postgres
  SPRING_DATASOURCE_PASSWORD: password
  OTEL_EXPORTER_OTLP_ENDPOINT: "http://otel-collector:4318"
  OTEL_EXPORTER_PROMETHEUS_HOST: "0.0.0.0"
  OTEL_METRICS_EXPORTER: otlp # set the metrics exporter to otlp
  OTEL_LOGS_EXPORTER: otlp
```
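The Java agent pushes OTLP metrics on a fixed interval. The OpenTelemetry SDK default is 60 seconds. For a local demo, a shorter interval lets the dashboards update sooner. The sketch below adds one entry to the anchored section. The 10-second value is an arbitrary choice, not something this setup requires.

```yaml
x-common-env-services: &common-env-services
  # keep the existing entries shown above, then add:
  OTEL_METRIC_EXPORT_INTERVAL: "10000"   # milliseconds between metric exports
```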

Now, you can start the services and the auth server along with the OpenTelemetry Collector, Loki, Grafana, and Mimir.

```shell
docker compose --profile "*" up --force-recreate --build
```

If everything is running fine, you can access Grafana at http://localhost:3000. Navigate to the added dashboard. You will be able to see the metrics from the services by selecting the Mimir datasource. You may have to filter by the service name to see the metrics from a particular service.

Now we are more than halfway through the observability stack. In the next part, we will set up distributed tracing with Tempo, Grafana, and the OpenTelemetry Collector.
