Supercharging Cursor IDE: How the dbt Power User Extension’s Embedded MCP Server Unlocks AI-Driven dbt Development

Anand Gupta


Introduction

We’re excited to announce a major new capability in the dbt Power User VS Code extension: an embedded Model Context Protocol (MCP) server. The MCP server is built directly into the extension and acts as a bridge between AI-powered development tools and your dbt project. The motivation behind embedding an MCP server is to enable seamless communication between AI assistants (like the one in Cursor IDE) and dbt, eliminating the friction of context switching. In practical terms, your AI coding assistant can query your dbt project’s schema, compile models, run tests, and more through a standardized protocol, leading to faster development workflows and greater efficiency for dbt developers.

By leveraging the MCP server, dbt Power User can expose rich project context and operations to AI agents in real-time. This empowers data engineers to automate repetitive tasks (like looking up column definitions or running model builds) and accelerates development. The embedded MCP server unlocks new AI-driven workflows for dbt, from intelligent autocompletion to on-demand documentation generation, all while keeping the interaction localized and secure within your development environment.

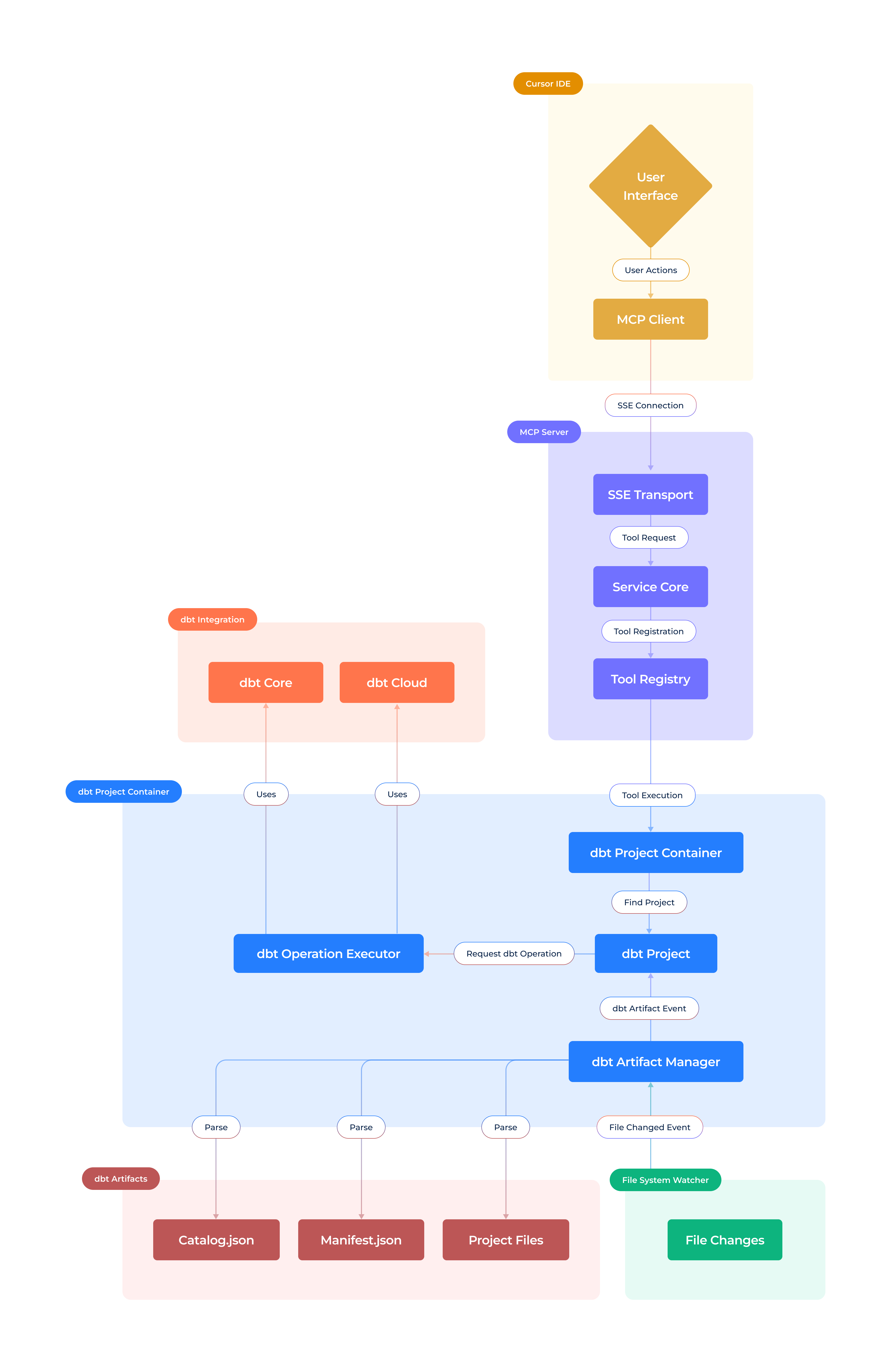

Technical Architecture and Cursor IDE Integration

At a high level, the MCP server runs as a lightweight web service within the dbt Power User extension environment. The server spins up automatically when you open a dbt project (if you have enabled the MCP feature) and listens on a local port. It selects an available port dynamically at launch to avoid conflicts, and registers itself with Cursor by updating Cursor’s MCP configuration. Once running, the Cursor IDE detects the MCP server and establishes a connection so its AI assistant can invoke dbt-related “tools” exposed by the server.
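Registering with Cursor amounts to writing the server's URL into Cursor's MCP configuration. The sketch below assumes Cursor's JSON layout (an mcpServers object whose entries carry a url for SSE servers) and an /sse endpoint path; the entry name dbtPowerUser and the helper withDbtServerEntry are hypothetical and not taken from the extension's source.

```typescript
// Assumed shape of Cursor's MCP config (e.g. .cursor/mcp.json); only SSE entries are modeled.
interface McpServerEntry {
  url: string;
}

interface CursorMcpConfig {
  mcpServers: Record<string, McpServerEntry>;
}

// Hypothetical helper: returns a new config whose dbt entry points at the freshly chosen port.
// Entries for other MCP servers the user configured are copied over unchanged.
function withDbtServerEntry(
  config: CursorMcpConfig | undefined,
  port: number,
  serverName: string = "dbtPowerUser", // hypothetical entry name
): CursorMcpConfig {
  return {
    mcpServers: {
      ...(config?.mcpServers ?? {}),
      [serverName]: { url: `http://localhost:${port}/sse` },
    },
  };
}

// Example: a config that already lists another server, updated for port 53124.
const before: CursorMcpConfig = {
  mcpServers: { other: { url: "http://localhost:9000/sse" } },
};
const after = withDbtServerEntry(before, 53124);
console.log(JSON.stringify(after, null, 2));
```

Because the port can change on every launch, an entry like this would have to be rewritten each time the server starts rather than configured once by hand.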

Server Components and Design: The MCP server architecture is composed of a few key components working in tandem:

Server Core: the core HTTP server (built with the MCP SDK) that handles incoming requests and responses. It defines the MCP endpoints and protocol handling (e.g. registering available tools, managing sessions, etc.). The server core orchestrates requests from Cursor’s AI and ensures each is routed to the appropriate handler in the extension.

Tool Registry: a registry of tools – individual operations or actions related to dbt. Each tool has a name and a function implementing its behavior. When the server starts, it registers a suite of tools (detailed in the next section) that allow the AI to perform various dbt tasks. The Tool Registry essentially maps MCP requests to the extension’s internal methods or dbt CLI calls. It also defines metadata for each tool (like input parameters and descriptions) that Cursor’s AI can utilize to formulate requests.
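The registry described above can be sketched as a map from tool name to a handler plus its metadata. This is a hypothetical illustration, not the extension's code: the class and field names are assumptions, modeled loosely on MCP tool discovery, which advertises each tool's name, description, and JSON-Schema inputSchema. The table parameter mirrors the get_children_models example named in this article.

```typescript
// Simplified JSON-Schema description of a tool's inputs.
interface InputSchema {
  type: "object";
  properties: Record<string, { type: string; description?: string }>;
  required: string[];
}

type ToolHandler = (args: Record<string, unknown>) => Promise<string>;

interface ToolDefinition {
  name: string;
  description: string;
  inputSchema: InputSchema;
  handler: ToolHandler;
}

type ToolMetadata = Omit<ToolDefinition, "handler">;

// Hypothetical registry: tool name -> definition.
class ToolRegistry {
  private readonly tools = new Map<string, ToolDefinition>();

  register(tool: ToolDefinition): void {
    if (this.tools.has(tool.name)) {
      throw new Error(`Tool already registered: ${tool.name}`);
    }
    this.tools.set(tool.name, tool);
  }

  // Looked up by the server core when a call for `name` arrives.
  get(name: string): ToolDefinition | undefined {
    return this.tools.get(name);
  }

  // What the client sees during discovery: names, descriptions, schemas, never the handlers.
  list(): ToolMetadata[] {
    return Array.from(this.tools.values()).map(({ name, description, inputSchema }) => ({
      name,
      description,
      inputSchema,
    }));
  }
}

const registry = new ToolRegistry();
registry.register({
  name: "get_children_models",
  description: "Return the models directly downstream of the given model.",
  inputSchema: {
    type: "object",
    properties: { table: { type: "string", description: "Name of the dbt model" } },
    required: ["table"],
  },
  // Placeholder handler; a real one would read the in-memory manifest.
  handler: async (args) => JSON.stringify({ model: args.table, children: [] }),
});

console.log(JSON.stringify(registry.list(), null, 2));
```

Keeping handlers out of list() separates what the AI is allowed to see (names and schemas) from what the server executes.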

SSE Transport: the server uses Server-Sent Events (SSE) for real-time communication back to the client. After Cursor connects to the MCP server, it maintains an open SSE channel. When a tool is executed, results (and any interim output or logs) are sent as a stream of events over this channel to Cursor. This means the AI can receive data or status updates from long-running tasks in real time, without polling. For example, if you execute a model run via the AI, the MCP server can stream back logs or a success/failure message as soon as it’s available. Using SSE for output ensures a responsive, event-driven interaction between the extension and the AI assistant.
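On the wire, SSE is plain text: each event is a few field lines terminated by a blank line, and a multi-line payload needs one data: line per line of text. The formatter below is a minimal sketch of that framing. The event names and log text in the example (log, message) are illustrative assumptions, not necessarily what the extension emits.

```typescript
// Formats one Server-Sent Events frame. Each payload line gets its own
// "data:" field, and the trailing blank line tells the client the event is complete.
function formatSseEvent(eventName: string, data: string): string {
  const dataLines = data
    .split(/\r\n|\r|\n/)
    .map((line) => `data: ${line}`)
    .join("\n");
  return `event: ${eventName}\n${dataLines}\n\n`;
}

// Example: interim log output from a long-running command, then the final result.
const frames = [
  formatSseEvent("log", "compiling model orders\nmodel orders: OK"),
  formatSseEvent("message", JSON.stringify({ status: "success" })),
];
console.log(frames.join(""));
```

A client reading the stream splits on blank lines, which is what lets progress from a long dbt run surface as it happens instead of after the command exits.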

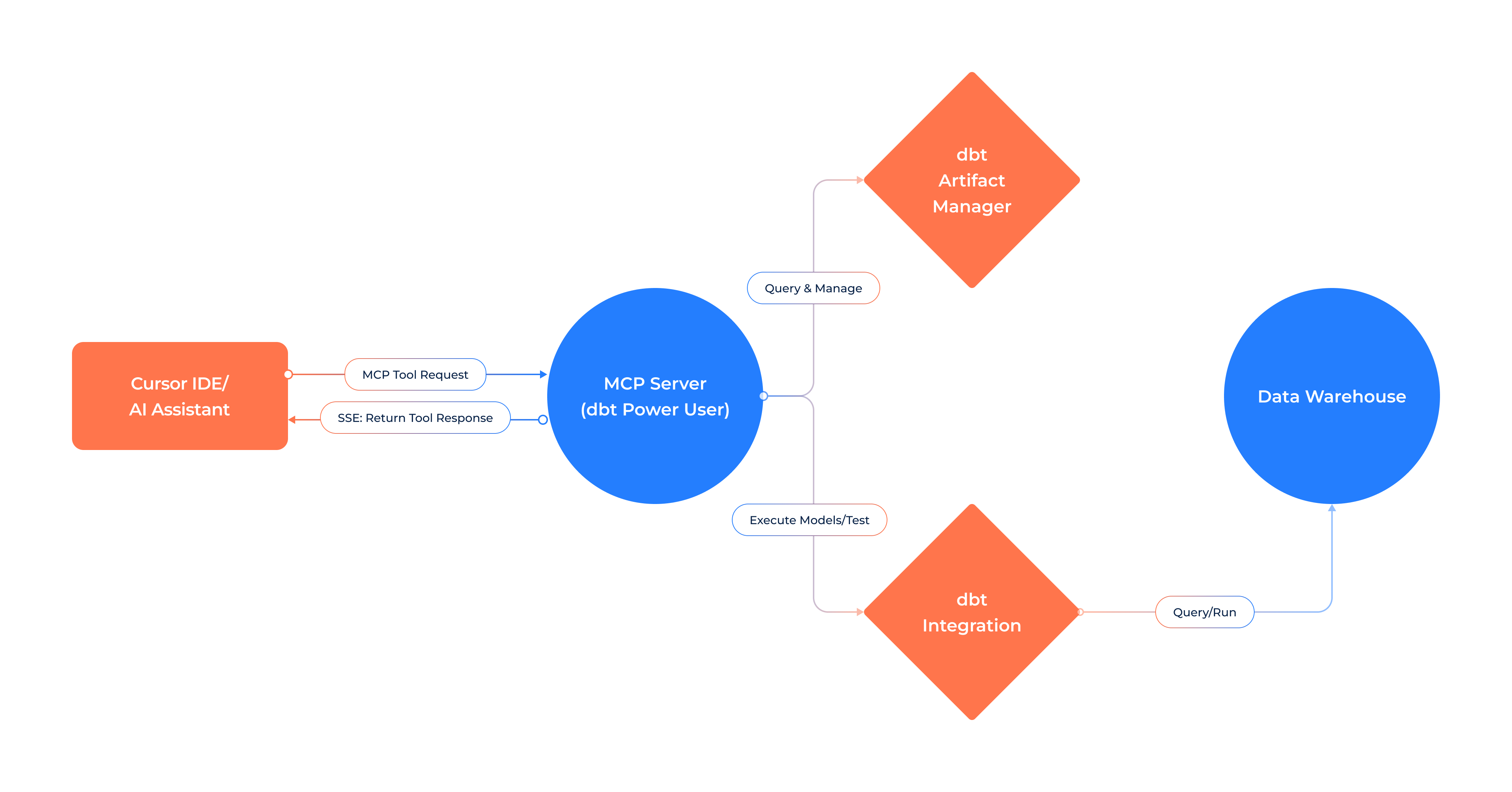

Architecture of the MCP Server integration with Cursor.

The diagram shows the Cursor IDE’s AI assistant interacting with the MCP server via tool requests. The MCP server (within the dbt Power User extension) processes these requests using its registered tools. It interacts with in-memory dbt project artifacts for quick context and, when needed, invokes the dbt Integration module (which in turn may query the data warehouse) to fulfill the request. Results and logs are returned back to the AI client in real-time via SSE.

Communication Flow: The end-to-end flow of a typical interaction involves several steps that connect the AI to your dbt project:

AI Initiates a Tool Request: Within Cursor, the AI assistant decides to call a tool (for example, “get the SQL definition of model X” or “run tests for model Y”) and sends a request through the MCP protocol.

MCP Server Receives the Request: The server core matches the incoming request to one of the registered tools in its registry and hands off execution to that tool’s handler.

Tool Execution: The tool’s handler runs inside the extension process. It may fetch information from the in-memory dbt artifacts (e.g. to get a model’s definition or lineage) or execute a dbt operation. The handler leverages the extension’s existing capabilities – for example, calling a function in the extension that wraps dbt operations to perform the task.

In-Memory Artifact Access: If the tool needs to read project structure (models, sources, tests, etc.), it queries the up-to-date in-memory representation of the dbt project (more on this in the next section). This avoids expensive disk or CLI operations for retrieving metadata. If the tool needs to run SQL or dbt operations, it might invoke the dbt integration module and use the database connections configured in the dbt profile.
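The flow above can be condensed into a small dispatcher. MCP messages are JSON-RPC 2.0: a tool invocation uses the tools/call method with params.name and params.arguments, and a successful result carries a content array. The tool name get_model_sql and the Map standing in for the in-memory manifest are hypothetical.

```typescript
// Minimal JSON-RPC 2.0 message shapes, as used by MCP for tool calls.
interface JsonRpcRequest {
  jsonrpc: "2.0";
  id: number | string;
  method: string;
  params?: { name?: string; arguments?: Record<string, unknown> };
}

interface ToolResult {
  content: Array<{ type: "text"; text: string }>;
}

type JsonRpcResponse =
  | { jsonrpc: "2.0"; id: number | string; result: ToolResult }
  | { jsonrpc: "2.0"; id: number | string; error: { code: number; message: string } };

type Handler = (args: Record<string, unknown>) => Promise<string>;

// Routes an incoming request to the matching tool handler.
async function dispatch(req: JsonRpcRequest, handlers: Map<string, Handler>): Promise<JsonRpcResponse> {
  if (req.method !== "tools/call") {
    // -32601 is JSON-RPC's standard "Method not found" code.
    return { jsonrpc: "2.0", id: req.id, error: { code: -32601, message: `Unsupported method: ${req.method}` } };
  }
  const name = req.params?.name;
  const handler = name === undefined ? undefined : handlers.get(name);
  if (!handler) {
    // -32602 is JSON-RPC's standard "Invalid params" code.
    return { jsonrpc: "2.0", id: req.id, error: { code: -32602, message: `Unknown tool: ${String(name)}` } };
  }
  const text = await handler(req.params?.arguments ?? {});
  return { jsonrpc: "2.0", id: req.id, result: { content: [{ type: "text", text }] } };
}

// Hypothetical stand-in for the in-memory manifest: model name -> raw SQL.
const modelSql = new Map<string, string>([["orders", "select * from {{ ref('stg_orders') }}"]]);

const handlers = new Map<string, Handler>([
  ["get_model_sql", async (args) => modelSql.get(String(args.model)) ?? `No model named ${String(args.model)}`],
]);
```

Note that the lookup touches only memory; a handler for something like a model run would instead hand off to the dbt integration layer.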

In essence, the MCP server turns the dbt extension into a real-time API for your dbt project. Cursor’s AI no longer operates in a vacuum – it can actively query your project’s state and perform operations, treating the dbt project as an interactive context it can reason about. All of this happens locally within your development environment, leveraging the work the extension is already doing to maintain dbt context.

In-Memory dbt Artifacts Lifecycle and Performance Optimization

One of the standout benefits of the MCP server is that it can serve data to the AI assistant directly from memory, without constantly hitting your filesystem or database. The dbt Power User extension maintains an in-memory representation of your project’s artifacts as you work. This includes the parsed project manifest (models, sources, tests, exposures, etc. along with their relationships), and metadata like the active target and project name. By tapping into these in-memory artifacts, the MCP server can answer many questions instantly and run operations more efficiently.

Creation and Initialization: When you open a dbt project in VS Code, the extension immediately initializes and parses the project. It loads the manifest (by invoking dbt’s parse operation under the hood and translating the result into its own in-memory representation) to build a graph of models and references in memory. This means the extension knows about all your model definitions, sources, tests, and their lineage as soon as the project is ready. When the MCP server starts (which typically coincides with project load or with enabling the feature), it attaches to this ready-to-use project context. The server core may wait until the dbt project is fully initialized before marking its tools as available to the AI. In effect, the server boots after the dbt context is prepared, ensuring the AI always sees a consistent project state.

Live Updates: As you modify files or the project state changes, the in-memory artifacts are updated incrementally. The extension listens to file system events and dbt execution outcomes – for example, if you edit a model file, it can re-parse that model and update the manifest graph in memory. Likewise, running dbt deps will refresh the list of installed packages in memory. The MCP server subscribes to these internal events. If the manifest is updated or a new run is completed, the server can invalidate or refresh relevant cached data. This way, tools like “get children models of X” will always use the latest information. The lifecycle is managed such that any time the dbt project changes, the in-memory state and the MCP server’s view of it remain in sync. This is much faster than re-running dbt commands for each query and ensures the AI doesn’t get stale data.
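The subscribe-and-invalidate pattern described above can be sketched as follows. ProjectState and ChildrenIndex are hypothetical names, the manifest is reduced to a map of model to parent models, and invalidation is all-or-nothing for simplicity; the extension may refresh its data more selectively.

```typescript
type Listener = () => void;

// Hypothetical stand-in for the extension's project tracker: holds the parsed
// manifest (here just model -> parent models) and announces every re-parse.
class ProjectState {
  readonly parents = new Map<string, string[]>();
  private listeners: Listener[] = [];

  onManifestChanged(listener: Listener): () => void {
    this.listeners.push(listener);
    // The returned function unsubscribes; a dispose() method would call it.
    return () => {
      this.listeners = this.listeners.filter((l) => l !== listener);
    };
  }

  // Called after an edited model file is re-parsed; notifies subscribers synchronously.
  updateModel(name: string, parentModels: string[]): void {
    this.parents.set(name, parentModels);
    this.listeners.forEach((l) => l());
  }
}

// A derived view (model -> children) cached on the MCP side and dropped on every change.
class ChildrenIndex {
  private cache: Map<string, string[]> | undefined;
  private readonly state: ProjectState;
  readonly dispose: () => void;

  constructor(state: ProjectState) {
    this.state = state;
    this.dispose = state.onManifestChanged(() => {
      this.cache = undefined; // invalidate; rebuilt lazily on the next query
    });
  }

  childrenOf(model: string): string[] {
    const index = this.cache ?? (this.cache = this.build());
    return index.get(model) ?? [];
  }

  private build(): Map<string, string[]> {
    const index = new Map<string, string[]>();
    this.state.parents.forEach((parentModels, child) => {
      for (const parent of parentModels) {
        index.set(parent, [...(index.get(parent) ?? []), child]);
      }
    });
    return index;
  }
}
```

Rebuilding lazily means a burst of file saves costs one rebuild at the next AI query rather than one per save.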

Efficient Querying: Because the data is in memory, tools that retrieve info (e.g. listing models, showing a model’s SQL, computing lineage) execute extremely quickly. There’s no need to spawn a subprocess or read from disk for these – the extension can simply look up the Python/TypeScript object representing that model. Even complex lineage traversals or dependency graphs can be resolved by the extension’s code using pre-computed relationships. This optimization is critical for performance: it allows the AI to ask a series of detailed questions about your project without incurring heavy overhead. For example, an AI reasoning about why a model’s test failed can rapidly pull the model’s SQL, its parent models, and the test definition via multiple MCP tool calls in seconds, whereas doing this via CLI repeatedly would be far slower.

Cleanup and Lifecycle End: The MCP server’s lifetime is tied to your VS Code session and project. If you close the project or disable the extension, the server is stopped and the in-memory artifacts are freed. The implementation ensures that sockets are closed and event listeners are disposed to avoid resource leaks. Notably, the server core exposes a clean dispose() method that the extension calls on shutdown, which shuts down the SSE stream and prevents further tool calls.

Overall, leveraging in-memory artifacts lets the MCP server provide fast, rich context to the AI. It capitalizes on work already being done by the dbt Power User extension’s internal dbt parser and project tracker. This not only speeds up AI interactions but also avoids unnecessary load on your data warehouse: the AI can get a lot of information without executing a single query unless the task requires one.

Capabilities Unlocked: Tools Provided by the MCP Server

The MCP server exposes a robust set of tools that the AI assistant can use. These tools cover most day-to-day dbt tasks and are grouped into a few categories for clarity. Below is a breakdown of major capabilities and examples of tools in each category:

Project & Environment Management Tools

- Project Information: Typically, the AI first fetches all dbt projects and their root paths to decide which project to work on, then passes the project root as a parameter to the other tools. For instance, GET_PROJECT_NAME returns the dbt project name for a given root, and GET_SELECTED_TARGET returns which target/profile is active. This helps the AI understand which environment it is working with (production or development).
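A minimal sketch of the root-as-parameter convention, assuming the extension keeps one context object per open project. The roots, names, and targets are made up, and the functions only mirror the roles of the project-listing tool, GET_PROJECT_NAME, and GET_SELECTED_TARGET; they are not their real signatures.

```typescript
// Hypothetical per-project context kept in memory, keyed by project root.
interface ProjectContext {
  projectName: string;
  selectedTarget: string;
}

const projects = new Map<string, ProjectContext>([
  ["/work/analytics", { projectName: "analytics", selectedTarget: "dev" }],
  ["/work/marketing", { projectName: "marketing", selectedTarget: "prod" }],
]);

// Every root-scoped tool resolves its projectRoot argument the same way.
function requireProject(projectRoot: string): ProjectContext {
  const ctx = projects.get(projectRoot);
  if (!ctx) throw new Error(`No dbt project is open at root: ${projectRoot}`);
  return ctx;
}

const getProjects = (): string[] => Array.from(projects.keys());
const getProjectName = (projectRoot: string): string => requireProject(projectRoot).projectName;
const getSelectedTarget = (projectRoot: string): string => requireProject(projectRoot).selectedTarget;

// Typical agent sequence: list roots, pick one, then pass it to other tools.
const root = getProjects()[0];
console.log(getProjectName(root), getSelectedTarget(root));
```

Failing loudly on an unknown root keeps the AI from silently operating on the wrong project in a multi-project workspace.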

Model and Source Exploration Tools

Model SQL and Schema: Tools in this category allow the AI to fetch the content or properties of dbt models and sources. For example, a COMPILE_QUERY tool can return the raw SQL of a model file, and a GET_COLUMNS_OF_SOURCE tool could return details about a source (like database/schema and columns as defined in YAML). With these, the AI can, say, read the logic of a model to help debug or suggest changes.

Lineage and Dependency Queries: We provide tools like GET_CHILDREN_MODELS and GET_PARENT_MODELS, which return the immediate downstream and upstream models of a given model. The AI can use these to understand the dependency graph, for instance finding what will be impacted if a model is changed or which upstream data sources feed into it. Internally, these tools leverage the manifest graph in memory to quickly compute the relationships. Additionally, there are count-based queries (like a tool to count the number of models depending on a source) to aid impact analysis.

Column Lineage and Documentation: (Upcoming) The extension already has features for column-level lineage and documentation. Through MCP, we intend to expose tools so the AI can answer questions like “which models use column X from source Y” or even retrieve documentation strings for a model or column. This will bridge the gap between documentation and code by letting the AI pull in documented context as needed.
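Because the manifest already records each node's parents, children can be precomputed once and looked up directly. The sketch below uses a hypothetical four-model graph. getParentModels and getChildrenModels mirror the immediate-neighbor semantics of GET_PARENT_MODELS and GET_CHILDREN_MODELS, while countDownstream assumes the count-based impact query is transitive, which the article does not specify.

```typescript
// Hypothetical edges: node -> its direct parents (what it selects from).
const parentsOf = new Map<string, string[]>([
  ["stg_orders", ["source.shop.orders"]],
  ["stg_customers", ["source.shop.customers"]],
  ["orders", ["stg_orders", "stg_customers"]],
  ["revenue", ["orders"]],
]);

// Precompute the reverse edges once, so children lookups are a single map access.
const childrenOf = new Map<string, string[]>();
parentsOf.forEach((parents, child) => {
  for (const parent of parents) {
    const list = childrenOf.get(parent) ?? [];
    list.push(child);
    childrenOf.set(parent, list);
  }
});

const getParentModels = (node: string): string[] => parentsOf.get(node) ?? [];
const getChildrenModels = (node: string): string[] => childrenOf.get(node) ?? [];

// Breadth-first walk: counts every node that depends, directly or indirectly, on `node`.
function countDownstream(node: string): number {
  const seen = new Set<string>();
  const queue = [...getChildrenModels(node)];
  while (queue.length > 0) {
    const current = queue.shift()!;
    if (seen.has(current)) continue;
    seen.add(current);
    queue.push(...getChildrenModels(current));
  }
  return seen.size;
}

console.log(countDownstream("source.shop.orders")); // 3: stg_orders, orders, revenue
```

The seen set matters because dbt graphs are DAGs with shared descendants: without it, a model reachable through two paths would be counted twice.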

SQL Compilation & Execution Tools

Compile SQL: The AI might want to see how dbt renders a model’s SQL with Jinja templating. The COMPILE_QUERY tool takes a query (and optionally the model it is derived from) and returns the compiled SQL, exactly as dbt would generate it. This is extremely useful for checking logic or debugging macros: the AI can get the SQL that would run on the warehouse without actually running it. It uses dbt’s compilation engine (via the extension’s interface to dbt Core) under the hood.

Execute Arbitrary SQL: Sometimes the AI will need to run a query to answer a question (for example, “preview the first 10 rows of model X”). The EXECUTE_SQL tool (with a configurable row limit) runs a SQL query against the project’s data warehouse connection and returns the results, with the limit ensuring we don’t pull massive datasets by accident. The AI could use this to validate assumptions or provide sample data to the user. Users must explicitly permit this feature, as executing SQL will share data with the AI.

Run dbt Models: For full pipeline execution, we offer tools to run or build models. The RUN_MODEL tool triggers dbt run for a specific model (and optionally its dependencies), while BUILD_MODEL can run the model and its tests (equivalent to dbt build for that model). There’s also a BUILD_PROJECT tool for running the entire project (dbt build). These commands execute in the background through the extension’s job runner. The AI can thus initiate a model run or a full project build from within the conversation – for example, “Re-run the revenue model to see if the issue is fixed.”
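The mapping from these tools to dbt commands can be sketched with dbt's node selection syntax, where +model also selects a model's upstream parents and model+ its downstream children. The request shape and the includeParents/includeChildren flags are hypothetical, and the article does not say whether the job runner shells out to the dbt CLI or calls dbt programmatically, so only the argument lists are shown.

```typescript
// Hypothetical request shapes for the run/build tools named in this article.
type RunRequest =
  | { tool: "RUN_MODEL"; model: string; includeParents?: boolean; includeChildren?: boolean }
  | { tool: "BUILD_MODEL"; model: string }
  | { tool: "BUILD_PROJECT" };

// Maps a tool request onto dbt arguments using node selection syntax:
// "+model" adds upstream parents, "model+" adds downstream children.
function toDbtArgs(req: RunRequest): string[] {
  switch (req.tool) {
    case "RUN_MODEL": {
      const selector = `${req.includeParents ? "+" : ""}${req.model}${req.includeChildren ? "+" : ""}`;
      return ["run", "--select", selector];
    }
    case "BUILD_MODEL":
      // dbt build runs the model and then its tests.
      return ["build", "--select", req.model];
    case "BUILD_PROJECT":
      return ["build"];
    default: {
      // Compile-time exhaustiveness check: every tool must be handled above.
      const unreachable: never = req;
      throw new Error(`Unsupported request: ${JSON.stringify(unreachable)}`);
    }
  }
}

console.log(["dbt", ...toDbtArgs({ tool: "RUN_MODEL", model: "revenue", includeParents: true })].join(" "));
// dbt run --select +revenue
```

Building an argument array rather than a shell string avoids quoting problems if a model name ever contains unusual characters.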

Testing and Validation Tools

Execute Tests: The server provides a RUN_TEST tool to execute a specific dbt test by name, as well as RUN_MODEL_TEST to run all tests associated with a particular model. This allows the AI to verify data quality or detect whether recent changes broke any tests. For instance, after modifying a model, the AI might call RUN_MODEL_TEST to ensure all tests still pass and then report the results. Test results or failures are returned via the event stream.

SQL Validation: (Planned) Even without running on the warehouse, the extension can do a lightweight SQL parse/validation. A planned tool will expose this to the AI, validating a SQL query (checking for syntax errors, missing refs, etc.) without executing it. This is similar to the extension’s existing SQL Lint feature. It can prevent the AI from running a bad query by validating it first and catching errors.
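The RUN_TEST and RUN_MODEL_TEST tools described above could condense dbt's output before returning it. dbt writes a run_results.json artifact (under target/ by default) whose results entries include unique_id, status, and failures; the summarizer below reads a subset of that file. Using run_results.json, the summarizeTests helper, and the report format are all assumptions for illustration, not how the extension is known to report results.

```typescript
// Subset of dbt's run_results.json artifact relevant to a test summary.
interface RunResult {
  unique_id: string;
  status: string; // for tests: "pass", "fail", "warn", "error", or "skipped"
  failures: number | null;
}

interface RunResults {
  results: RunResult[];
}

// Hypothetical helper: turns run results into a short report the AI can relay.
function summarizeTests(runResults: RunResults): string {
  const counts = new Map<string, number>();
  const problems: string[] = [];
  for (const r of runResults.results) {
    counts.set(r.status, (counts.get(r.status) ?? 0) + 1);
    if (r.status === "fail" || r.status === "error") {
      const detail = r.failures ? ` (${r.failures} failing rows)` : "";
      problems.push(`${r.status.toUpperCase()}: ${r.unique_id}${detail}`);
    }
  }
  const totals = Array.from(counts.entries())
    .map(([status, n]) => `${status}=${n}`)
    .join(", ");
  return [`Totals: ${totals}`, ...problems].join("\n");
}

// Made-up sample for illustration.
const sample: RunResults = {
  results: [
    { unique_id: "test.shop.not_null_orders_order_id", status: "pass", failures: 0 },
    { unique_id: "test.shop.unique_orders_order_id", status: "fail", failures: 2 },
  ],
};
console.log(summarizeTests(sample));
// Totals: pass=1, fail=1
// FAIL: test.shop.unique_orders_order_id (2 failing rows)
```

Listing only failures and errors keeps the tool response small, which matters when it is fed back into the AI's context.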

Performance Analysis: (Planned) We aim to add tools that use dbt’s compilation stats or query planner insights to help the AI assist in performance tuning. For example, a tool to show the compiled query’s explain plan (if the warehouse supports EXPLAIN) or to estimate the cost of a query. Such tools would let the AI caution the user if a query might be expensive or if a model lacks proper pruning filters, etc.

Package Management and Repository Tools

Install Packages: Managing dbt packages is made easier with tools like INSTALL_DEPS, which runs dbt deps to install the packages listed in packages.yml. There’s also an ADD_DBT_PACKAGES tool that can add new packages on the fly. For example, if the AI suggests using a package (like dbt-utils), it could call ADD_DBT_PACKAGES with the package name and version – the extension will add it to packages.yml and run dbt deps internally to install it, returning the result. This showcases agentic behavior: the AI can not only suggest but also take action to modify your project structure (with your permission).

Upgrade dbt Version: Although not explicitly a tool in this release, the infrastructure could allow the AI to assist in upgrading the dbt version or dependencies, for example by reading requirements.txt or checking the installed dbt version, and then running pip installs. This would likely be added as a safe tool in the future, once we ensure such actions are gated behind user approval.

Git Operations: While primarily focused on dbt tasks, the larger vision includes enabling some project-level Git interactions via MCP (for example, checking out a new branch for a fix, or opening a pull request). The current server does not directly include Git tools, but it lays the groundwork for integrating such capabilities alongside dbt tasks, so the AI could orchestrate end-to-end workflows (code change -> run model -> test -> commit).
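ADD_DBT_PACKAGES boils down to editing the packages list before running dbt deps. Hub packages in packages.yml are entries with package and version keys, while git and local entries use other keys. The sketch below works on an already-parsed object, so YAML reading and writing are left out; addDbtPackages is a hypothetical name and the version numbers are placeholders.

```typescript
// Parsed form of packages.yml. Hub packages have package/version; git or local entries are kept as-is.
interface HubPackage {
  package: string;
  version: string;
}
type PackageEntry = HubPackage | Record<string, unknown>;

interface PackagesFile {
  packages: PackageEntry[];
}

function isHubPackage(entry: PackageEntry): entry is HubPackage {
  return typeof (entry as HubPackage).package === "string";
}

// Hypothetical helper: adds new hub packages or bumps the version of existing ones.
// Returns a new object so the caller can diff it before writing and running dbt deps.
function addDbtPackages(file: PackagesFile, toAdd: HubPackage[]): PackagesFile {
  const packages: PackageEntry[] = file.packages.map((e) => ({ ...e }));
  for (const pkg of toAdd) {
    const existing = packages.find((e): e is HubPackage => isHubPackage(e) && e.package === pkg.package);
    if (existing) {
      existing.version = pkg.version;
    } else {
      packages.push({ ...pkg });
    }
  }
  return { packages };
}

const original: PackagesFile = {
  packages: [
    { package: "dbt-labs/codegen", version: "0.12.1" },
    { git: "https://github.com/example/internal_macros.git", revision: "main" },
  ],
};
console.log(JSON.stringify(addDbtPackages(original, [{ package: "dbt-labs/dbt_utils", version: "1.1.1" }]), null, 2));
```

Updating an existing entry instead of appending a duplicate keeps dbt deps from seeing two conflicting requirements for the same package.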

All these tools are defined in the extension and registered with the MCP server at startup. When Cursor’s AI is formulating a plan to help you, it queries the MCP server for an index of available tools (the MCP protocol allows the client to discover tools and their input schema). The AI sees something like “I have a tool called get_children_models that needs a table name” and it can then decide to use it if the user asks a question about model dependencies.

Under the Hood: Port Management, Error Handling, and Security

Building an embedded server inside an IDE extension comes with its own engineering challenges. We put significant effort into making sure the MCP server runs smoothly without disrupting your workflow.

Port Management: Since the MCP server is local, it needs to choose a port to listen on. We default to letting the OS assign an open port (by binding to port 0, which yields an ephemeral port). Once the server is listening, the extension logs the chosen port. If for some reason the port is not reachable or Cursor cannot connect, the extension will surface an error message. We also handle the case where the server might need to restart (for example, if you re-initialize the project) by cleaning up the old server and starting a new one potentially on a new port. The extension ensures there is only one MCP server instance per workspace to avoid port conflicts. In the future, we may allow a fixed port configuration if users want to expose the server to other tools, but in this beta it’s fully managed and isolated.
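A minimal sketch of the port-0 and single-instance behavior using Node's built-in http module. McpServerManager is a hypothetical name and the request handler is a placeholder rather than the MCP SDK's transport. server.closeAllConnections() (available since Node 18.2) is used because open SSE streams would otherwise keep close() waiting indefinitely.

```typescript
import * as http from "node:http";
import type { AddressInfo } from "node:net";

// Hypothetical manager that keeps at most one server per workspace.
class McpServerManager {
  private server: http.Server | undefined;

  // Port 0 asks the OS for any free ephemeral port; 127.0.0.1 keeps it local-only.
  async start(handler: http.RequestListener): Promise<number> {
    await this.stop(); // a restart (e.g. project re-initialization) replaces the old instance
    const server = http.createServer(handler);
    await new Promise<void>((resolve, reject) => {
      server.once("error", reject);
      server.listen(0, "127.0.0.1", () => resolve());
    });
    this.server = server;
    const { port } = server.address() as AddressInfo;
    return port; // the caller would log this and write it into the client's config
  }

  async stop(): Promise<void> {
    const server = this.server;
    this.server = undefined;
    if (server === undefined) return;
    await new Promise<void>((resolve) => {
      server.close(() => resolve());
      server.closeAllConnections(); // drop long-lived SSE streams so close() can complete
    });
  }
}
```

Because start() always stops any previous instance first, a re-initialized project gets a fresh server (possibly on a new port) instead of a second one competing with the first.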

Error Handling and Resilience: The MCP server includes robust error handling so that failures in tools do not crash the server or hang the AI. Each tool execution is wrapped in try/catch logic. If a tool throws an exception (say, dbt fails to compile a query due to a syntax error), the error is caught and sent back as an error event to the AI, and the server remains up for subsequent requests. We’ve instrumented comprehensive logging via the extension’s output channel (the “dbt Power User” output panel in VS Code) to record any exceptions or problematic requests. This makes it easier to debug if something goes wrong – both for us as developers and for users who can check the logs. Overall, the server is designed to be long-running and robust, recovering gracefully from errors. In our tests, issues like missing profiles or syntax errors are caught and reported back to the AI without terminating the server.
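The per-tool try/catch can be sketched as a wrapper applied to every handler at registration time. This follows MCP's convention of reporting tool failures inside the result with isError: true, which is an assumption about how the article's "error event" is encoded. withErrorHandling, the compile_query name, and the log callback (standing in for the output channel) are hypothetical.

```typescript
// MCP-style tool result: text content plus an isError flag for failures.
interface ToolResult {
  content: Array<{ type: "text"; text: string }>;
  isError?: boolean;
}

type Handler = (args: Record<string, unknown>) => Promise<string>;
type Logger = (message: string) => void;

// Hypothetical wrapper: any exception becomes an error result, so one failing
// tool call neither crashes the server nor leaves the client waiting.
function withErrorHandling(toolName: string, handler: Handler, log: Logger) {
  return async (args: Record<string, unknown>): Promise<ToolResult> => {
    try {
      const text = await handler(args);
      return { content: [{ type: "text", text }] };
    } catch (err) {
      const message = err instanceof Error ? err.message : String(err);
      log(`[${toolName}] ${message}`); // e.g. written to the extension's output channel
      return { content: [{ type: "text", text: `${toolName} failed: ${message}` }], isError: true };
    }
  };
}

// Example: a compile that fails on a SQL typo is reported, not thrown.
const logs: string[] = [];
const compileQuery = withErrorHandling(
  "compile_query",
  async () => {
    throw new Error('syntax error at or near "form"');
  },
  (m) => logs.push(m),
);
compileQuery({ query: "select * form orders" }).then((result) => console.log(result));
```

Returning the error text to the AI, rather than only logging it, gives the assistant a chance to fix the query and retry.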

Security Measures: Since the MCP server allows automated actions on your project, we have restricted its accessibility to ensure safety. The server binds only to localhost and is not accessible from external machines. This means only processes on your computer (like the Cursor IDE) can communicate with it, mitigating risk of any outside interference. Additionally, the server enforces that requests conform to the expected MCP schema – it will reject any malformed requests or unknown tool calls rather than executing them arbitrarily. We also incorporated a simple authentication check in this beta: the MCP server feature will only start if certain preconditions are met (for instance, verifying that you have a valid Altimate API key configured, which is used to enable AI features in the extension). This is mainly to gate the feature during beta and ensure usage can be monitored and supported. In the future, we plan to add more sophisticated security, such as an authentication token for the MCP connection or user consent prompts in VS Code before executing potentially destructive actions (like modifying files or running DDL statements). It’s important to note that the AI agent in Cursor will only use tools in ways you allow – you’re always in control and can disable or limit certain tools if desired. We recommend keeping the extension and Cursor up-to-date to get the latest security improvements. As the community and usage of MCP servers grow, we’ll continue to harden the server (for example, adding rate limiting or sandboxing if the scenario requires it).
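Two of the checks described above can be sketched in a few lines: confirming the peer is a loopback address (defense in depth on top of binding to localhost) and rejecting malformed or unknown tool calls before any handler runs. In practice the MCP SDK performs schema validation of its own; isLoopback, validateToolCall, and the knownTools map are hypothetical helpers, not the extension's code.

```typescript
// Loopback forms Node may report as a socket's remoteAddress (IPv4, IPv6, IPv4-mapped IPv6).
function isLoopback(remoteAddress: string | undefined): boolean {
  if (remoteAddress === undefined) return false;
  return remoteAddress === "::1" || remoteAddress.startsWith("127.") || remoteAddress.startsWith("::ffff:127.");
}

interface ToolCall {
  name: string;
  arguments: Record<string, unknown>;
}

// Rejects anything that is not a well-formed call to a known tool with its required arguments.
function validateToolCall(params: unknown, knownTools: Map<string, { required: string[] }>): ToolCall {
  if (typeof params !== "object" || params === null) throw new Error("Malformed request");
  const { name, arguments: args } = params as { name?: unknown; arguments?: unknown };
  if (typeof name !== "string") throw new Error("Missing tool name");
  const spec = knownTools.get(name);
  if (spec === undefined) throw new Error(`Unknown tool: ${name}`);
  if (args !== undefined && (typeof args !== "object" || args === null || Array.isArray(args))) {
    throw new Error("Tool arguments must be an object");
  }
  const record = (args ?? {}) as Record<string, unknown>;
  const missing = spec.required.filter((key) => !(key in record));
  if (missing.length > 0) throw new Error(`Missing required arguments: ${missing.join(", ")}`);
  return { name, arguments: record };
}

// Hypothetical tool table: name -> required argument names.
const knownTools = new Map([["run_model", { required: ["model"] }]]);
console.log(validateToolCall({ name: "run_model", arguments: { model: "orders" } }, knownTools));
```

Validating before dispatch means an unknown or malformed call fails fast with a clear message and never reaches code that touches the project or the warehouse.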

Resource Usage: Running an MCP server is lightweight. It piggybacks on the extension’s process and uses minimal additional memory (mostly just for the HTTP server overhead). The in-memory artifact store is already in place for the extension’s normal operation. We’ve observed negligible impact on VS Code’s performance when the server is idle. During heavy use (like a lot of model runs triggered by AI), there is some CPU and memory use, but it’s equivalent to you running those dbt commands manually. When idle, the server simply holds open a socket for Cursor. If needed, you can always stop the server by disabling the feature in settings, which immediately frees the port and associated resources.

Future Enhancements: In upcoming releases, we plan to enhance both the capabilities and the internals of the MCP server. On the capability side, we’re looking at adding more tools (as mentioned, possibly Git operations, documentation queries, etc.) and integrating more deeply with other systems (imagine the AI triggering an Airflow DAG after a successful dbt run, via a future Airflow MCP server). We’re also considering a plugin mechanism where users could write custom MCP tools for their own macros or operations and have the server load them. Finally, as the MCP protocol evolves (it’s an open standard in active development), we will adapt our server to remain compliant and take advantage of new features. For example, Anthropic, which introduced MCP, supports it natively in Claude, and we expect more AI providers to follow. Our goal is to have the dbt MCP server work with any AI agent that speaks MCP, not just Cursor’s.

Conclusion: Our Vision Toward an AI-Integrated Data Platform

The introduction of the MCP server in the dbt Power User extension is a significant step toward an AI-integrated data development platform. We’ve turned the VS Code + dbt environment into an AI-accessible service, enabling smarter assistance and automation. This capability is not an isolated feature – it’s part of our broader vision to integrate AI with all facets of the modern data stack. In the near future, you can expect similar integrations with Snowflake and Postgres (for example, an AI agent that can profile or manipulate your warehouse data), with Airflow (an AI that can inspect DAGs or trigger workflows), and more. Our DataPilot platform is evolving into an ecosystem of AI “teammates” that collaborate across tools. The dbt MCP server is one of these teammates, focused on analytics engineering, and it will collaborate with others (imagine an AI assistant that understands your data warehouse, your pipelines, and your dbt models collectively).

We invite you to try out the MCP server. Since it’s a beta release, we greatly value your feedback. Join our community Slack channel (#tools-dbt-power-user on dbt Slack) to share your experiences or ask questions. If you need help setting it up or want to explore how this could fit into your team’s workflow, please reach out to us. We’re also eager to collaborate with teams interested in pushing the boundaries of AI in data engineering. Whether you have ideas for new MCP tools or want to integrate our platform with your own, let’s start a conversation. Together, let’s redefine what a “power user” can do by combining the strengths of dbt with the intelligence of AI. This launch is just the beginning, and we’re excited to build a more connected, intelligent data platform with your input.


Written by

Anand Gupta


I am a co-founder of Altimate AI, a startup that is shaking up the status quo with its cutting-edge solution for preventing data problems before they occur, from the moment data is generated to its use in analytics and machine learning. With over 12 years of experience in building and leading engineering teams, I am passionate about solving complex and impactful problems with AI and ML.