MCP - Model Context Protocol

Jan Malčák

High-Level Overview

Remember the heads in jars from Futurama? That’s an LLM. It may hold a lot of knowledge, but it cannot do much with it. It is just a head sitting in a jar.

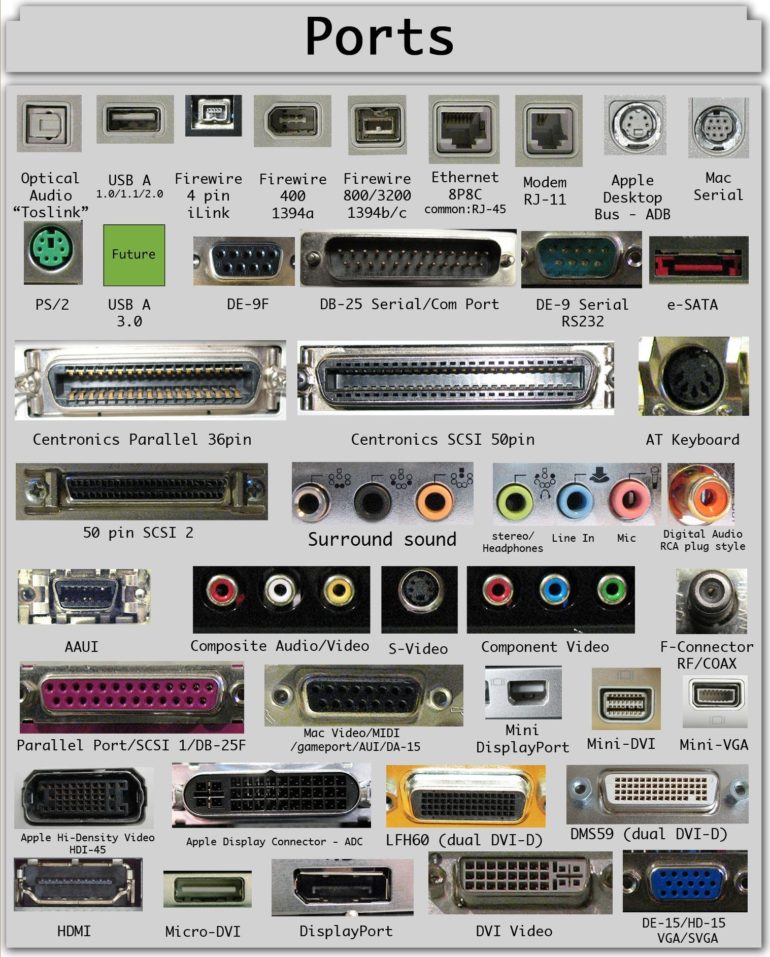

What if we could give them hands? They could move around and press buttons for us! They would not be just smart oracles answering questions anymore. That’s easier said than done. There are many manufacturers producing hands, and each one decided to use a different port to connect.

Do you support all of them, in case you want a specific hand in the future? Congrats, the head now looks like Frankenstein’s monster, full of ports. Do you pick just one? Then you are limiting the possibilities and increasing your reliance on the selected manufacturer.

Bit Lower Level Overview

Let’s speak LLMs. There are multiple ways to let an LLM interact with the outside world. One of them is function-calling.

It’s a way to translate user prompts into “actions”. For example, you ask:

What’s the weather in New York?

The LLM doesn’t know the current weather. It was trained months ago. So it looks at the available functions, finds one that matches the desired use case, and generates a function call. The format of that function call differs from LLM vendor to vendor. For OpenAI, for example, it looks like this:

    {
        "id": "call_12345xyz",
        "type": "function",
        "function": {
            "name": "get_weather",
            "arguments": "{\"location\":\"New York, USA\"}"
        }
    }

So it’s just another type of output. The LLM does not “call” anything. It still does not have access to the internet, nor does it know how to use it.

The application using the LLM (be it a chat app, an IDE, or something else) has to pick up those “instructions”, find the correct function to run, run it, and pass the result back to the LLM. The LLM can then answer:

Hey, thanks to the data you found for me, I know that it’s 5 degrees Celsius in New York.
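To make the application's part concrete, here is a minimal sketch of that loop in Python. It assumes the OpenAI-style function call shown above; `get_weather`, `AVAILABLE_FUNCTIONS`, and `run_function_call` are names I made up for illustration, and the weather data is hard-coded instead of coming from a real API.

```python
import json


def get_weather(location: str) -> dict:
    """Hypothetical stand-in for a real weather API call (returns fixed data)."""
    return {"location": location, "temperature_c": 5}


# The application, not the LLM, owns the mapping from names to real functions.
AVAILABLE_FUNCTIONS = {"get_weather": get_weather}


def run_function_call(call: dict) -> str:
    """Run one OpenAI-style function call and return the result as JSON text."""
    name = call["function"]["name"]
    if name not in AVAILABLE_FUNCTIONS:
        raise ValueError(f"LLM asked for an unknown function: {name}")
    # The arguments arrive as a JSON string, so they have to be parsed first.
    arguments = json.loads(call["function"]["arguments"])
    result = AVAILABLE_FUNCTIONS[name](**arguments)
    return json.dumps(result)


# The function call generated by the LLM (copied from the example above).
llm_output = {
    "id": "call_12345xyz",
    "type": "function",
    "function": {
        "name": "get_weather",
        "arguments": "{\"location\":\"New York, USA\"}",
    },
}

# This string is what the application passes back to the LLM as the result.
tool_result = run_function_call(llm_output)
print(tool_result)
```

Note that everything that touches the outside world happens in `run_function_call`, which is application code. The LLM only produced the dictionary.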

We gave the LLM “access” to a very limited way of interacting with the outside world. It comes with some limitations, however:

The developer has to tell the LLM which functions are available and how to use them.

The developer is responsible for writing those functions.

The developer has to take care of passing the result back to the LLM.

The LLM is free to call any function it knows. The end user (someone using the chat app, for example) is not in control. This opens up potential security issues.

There is no standard for function calls across LLM vendors.

We are in the same situation as in the opening example. We can provide many functions, but it’s daunting to write them all, and if we want to cover more than one LLM vendor, it means duplicating the work.
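The duplication is easy to see once you describe the same function for two vendors. The sketch below is only an illustration: `WEATHER_TOOL` is my own vendor-neutral structure, and the two converters follow the tool definition shapes of the OpenAI Chat Completions API and the Anthropic Messages API as I understand them at the time of writing. Check the vendor docs before relying on them.

```python
# A vendor-neutral description of one function (hypothetical structure).
WEATHER_TOOL = {
    "name": "get_weather",
    "description": "Get the current weather for a location.",
    "parameters": {
        "type": "object",
        "properties": {"location": {"type": "string"}},
        "required": ["location"],
    },
}


def to_openai(tool: dict) -> dict:
    """Wrap the definition the way OpenAI-style `tools` entries expect it."""
    return {
        "type": "function",
        "function": {
            "name": tool["name"],
            "description": tool["description"],
            "parameters": tool["parameters"],
        },
    }


def to_anthropic(tool: dict) -> dict:
    """Same information, but the JSON Schema lives under `input_schema`."""
    return {
        "name": tool["name"],
        "description": tool["description"],
        "input_schema": tool["parameters"],
    }


openai_tool = to_openai(WEATHER_TOOL)
anthropic_tool = to_anthropic(WEATHER_TOOL)
```

The content is identical, only the envelope differs. Now repeat that for every function, and for parsing every vendor's function call output as well.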

MCP?

Anthropic released MCP in November 2024. It took some time to gain traction. Now almost everyone is talking about it. Why?

MCP builds on the principle from above. However, it adds clear rules for the LLM to discover, connect to, and execute external tools. How?

It consists of three parts:

Host - An app that interacts with the LLM. This may be Cursor or Claude Desktop.

Client - Maintains a 1:1 connection with the Server and takes care of the communication, either via stdio (when the server runs locally) or HTTP with SSE.

Server - Maybe the most important part of the setup. It has three main capabilities and, based on the request, uses them to return the required information/data to the Client.

Tools - Tools are “functions” that the server provides.

Resources - List of files or data that may be shared with the Client.

Prompts - Reusable prompt templates.

Let’s start from the beginning. You start Claude Desktop with a weather MCP server installed. Then you ask:

What’s the weather in New York?

As in the previous case with function-calling, the LLM does not know the weather. So it looks at the tools offered by the available MCP servers. The developer is no longer responsible for writing that list by hand. The servers describe their own capabilities, and MCP defines how the Client asks for them.
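Discovery is itself just a request. Here is a rough Python sketch of it, based on the `tools/list` method from the MCP specification. The sample response is something I wrote by hand for a hypothetical weather server, and `build_list_tools_request` and `tools_for_llm` are illustrative helper names, not part of any SDK.

```python
import json


def build_list_tools_request(request_id: int) -> dict:
    """JSON-RPC request the Client sends to ask a Server for its tools."""
    return {"jsonrpc": "2.0", "id": request_id, "method": "tools/list"}


# Hand-written example of what a weather server might answer.
sample_response = json.loads("""
{
  "jsonrpc": "2.0",
  "id": 1,
  "result": {
    "tools": [
      {
        "name": "get_weather",
        "description": "Get the current weather for a location.",
        "inputSchema": {
          "type": "object",
          "properties": {"location": {"type": "string"}},
          "required": ["location"]
        }
      }
    ]
  }
}
""")


def tools_for_llm(response: dict) -> list:
    """Extract name, description and schema so the Host can show them to the LLM."""
    return [
        {
            "name": tool["name"],
            "description": tool.get("description", ""),
            "schema": tool["inputSchema"],
        }
        for tool in response["result"]["tools"]
    ]


print(json.dumps(build_list_tools_request(1)))
print([tool["name"] for tool in tools_for_llm(sample_response)])
```

The Host then translates these descriptions into whatever tool format its LLM vendor expects, so the server author writes them only once.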

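The weather MCP server is chosen as the right one, and the LLM’s tool call is sent to it as a JSON-RPC request. For a tool named get_weather, it looks roughly like this:

    {
        "jsonrpc": "2.0",
        "id": "call_abc123",
        "method": "tools/call",
        "params": {
            "name": "get_weather",
            "arguments": {
                "location": "New York, USA"
            }
        }
    }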

This message format is standardized, so it looks the same no matter which LLM the Host uses. What happens behind the scenes?

The Host picks up the LLM’s tool call and hands it to the correct MCP Client.

The Client sends the request to its Server.

The Server runs the requested tool and gets the data about the current weather.

The data is returned to the Client.

The Client ensures correct formatting, handles potential errors, and passes the data back to the Host application, which hands it to the LLM.
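The steps above can be sketched in a few lines of Python. This is a toy version under several assumptions: there is no real transport (the "client" calls the "server" function directly instead of using stdio or HTTP), the weather is hard-coded, and `server_handle` and `client_call_tool` are names I invented. The `tools/call` method and the `content` list in the result follow the MCP specification as I understand it.

```python
import json


def get_weather(location: str) -> str:
    """Hypothetical tool implementation with hard-coded data."""
    return f"5 degrees Celsius in {location}"


TOOLS = {"get_weather": get_weather}


def server_handle(raw_request: str) -> str:
    """Server side: run the requested tool and wrap the output in a response."""
    request = json.loads(raw_request)
    reply = {"jsonrpc": "2.0", "id": request.get("id")}
    if request.get("method") != "tools/call":
        reply["error"] = {"code": -32601, "message": "Method not found"}
        return json.dumps(reply)
    params = request["params"]
    if params["name"] not in TOOLS:
        reply["error"] = {"code": -32602, "message": "Unknown tool"}
        return json.dumps(reply)
    text = TOOLS[params["name"]](**params["arguments"])
    reply["result"] = {"content": [{"type": "text", "text": text}], "isError": False}
    return json.dumps(reply)


def client_call_tool(name: str, arguments: dict, request_id: int = 1) -> str:
    """Client side: build the request, send it, and unpack the response."""
    request = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }
    response = json.loads(server_handle(json.dumps(request)))
    if "error" in response:
        raise RuntimeError(response["error"]["message"])
    return response["result"]["content"][0]["text"]


# The Host would pass this text back to the LLM as the tool result.
answer = client_call_tool("get_weather", {"location": "New York, USA"})
print(answer)
```

In a real setup you would not write this plumbing yourself. The official SDKs handle the transport, the message framing, and the error handling.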

And voila, you have your answer.

Hmm, so we added a lot of complexity. What for? Let’s return to the “ports” example I opened this article with. Instead of using tens of different ports to connect to different services, we can now use just one: USB. Thanks to the standardization, any application that implements MCP can connect its LLM to any tool.

MCP also takes care of the tool discovery, so you just have to register the server.
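What does registering look like? For Claude Desktop, it means adding an entry under `mcpServers` in its JSON config file. The Python sketch below only builds and prints such an entry. The server name, the `uvx` command, the `weather-mcp-server` package, and the `WEATHER_API_KEY` variable are all placeholders I made up, so replace them with whatever your server's README says.

```python
import json

# Hypothetical entry for a weather server: how to start it and what it needs.
weather_server = {
    "command": "uvx",                       # placeholder launcher command
    "args": ["weather-mcp-server"],         # placeholder package name
    "env": {"WEATHER_API_KEY": "<your-api-key>"},
}

# Claude Desktop reads its servers from the "mcpServers" key of its config.
config = {"mcpServers": {"weather": weather_server}}

config_text = json.dumps(config, indent=2)
print(config_text)
```

After a restart, the Host starts the server with the given command and discovers its tools on its own.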

Sounds good? Let’s also mention some limitations to balance it out. :)

Limitations

Stateful and long-lived connection

The connection between the MCP Server and the MCP Client is stateful and long-lived. This allows the server to send notifications about resource/capabilities changes or to initiate sampling. Theoretically, it enables better agentic workflows.
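Because the connection stays open, the Client has to be ready for messages it did not ask for. A small sketch of how it could tell them apart, using plain JSON-RPC 2.0 rules: a message with a method and an id is a request, a message with a method but no id is a notification, and a message with a result or an error is a response. The `classify` helper is my own, and the sampling params are abbreviated and only illustrative.

```python
def classify(message: dict) -> str:
    """Classify a JSON-RPC 2.0 message received over the open connection."""
    if "method" in message:
        # Requests expect an answer and carry an id, notifications do not.
        return "request" if "id" in message else "notification"
    if "result" in message or "error" in message:
        return "response"
    return "invalid"


incoming = [
    # The Server tells the Client that its list of tools has changed.
    {"jsonrpc": "2.0", "method": "notifications/tools/list_changed"},
    # The Server asks the Client to run an LLM completion for it (sampling).
    {
        "jsonrpc": "2.0",
        "id": 7,
        "method": "sampling/createMessage",
        "params": {
            "messages": [
                {"role": "user", "content": {"type": "text", "text": "Summarize the forecast."}}
            ],
            "maxTokens": 100,
        },
    },
    # A regular answer to something the Client sent earlier.
    {"jsonrpc": "2.0", "id": 3, "result": {"tools": []}},
]

kinds = [classify(message) for message in incoming]
print(kinds)
```

The first two message types are the reason the connection has to stay open: the Server can only start them while it still has a channel to the Client.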

The initial specification was intended for local use. When you try deploying a server to a serverless platform, the long-lived connection becomes a problem.

This is especially frustrating because most MCP servers are just thin wrappers around existing APIs. They take the request, pass it to the API, and return the response to the MCP client. Luckily, there is already a proposed change to the standard: https://github.com/modelcontextprotocol/specification/pull/206

Too many choices get the LLM confused

This is nothing new. When presented with too many choices (tools, functions to call, etc.), LLMs get confused. MCP addresses this with structured tool descriptions and specifications. However, the effect still depends on the quality of those descriptions.

Authentication

At the moment, there is no authentication implemented directly in the standard. Each MCP server can implement it in its own way. Here too, the team has a draft with a proposed change: https://github.com/modelcontextprotocol/specification/blob/6828f3ef6300b25dd2aaff2a2e5e81188bdbd22e/docs/specification/draft/basic/authorization.md

Tedious Installation

Unless you use some third-party service, you are responsible for installing, setting up, and running the MCP servers. Very often, this means downloading Docker images, getting access tokens, and editing .env/conf files. Not friendly at all.

Closing words

Ending with limitations may have left you a bit confused. On the one hand, we have an amazing thing that simplifies providing access to external data or tools. On the other hand, it’s limited and still harder to set up than it needs to be. What to take from it? MCP is great. Go and play with it. Install some servers and build your own. It’s fun. But keep in mind, it’s not a silver bullet that fixes everything.

Links

Official Documentation - https://modelcontextprotocol.io/introduction

Why MCP Won - https://www.latent.space/p/why-mcp-won

MCP directory - https://cursor.directory/mcp
