Amazon S3 Tables

Utkarsh Rastogi

Utkarsh Rastogi

Introduction

With tools to continuously enhance query performance and lower table storage costs, Amazon S3 Tables offers S3 storage that is tailored for analytics workloads. S3 Tables are specifically designed to hold tabular data, like ad impressions, streaming sensor data, and daily purchase transactions. Like a database table, tabular data is data organized into rows and columns.

The data in S3 Tables is stored in a new bucket type: a table bucket, which stores tables as sub resources. Apache Iceberg format tables can be stored in table buckets. You can use query engines that support Iceberg, such Apache Spark, Amazon Redshift, and Amazon Athena, to query your tables using conventional SQL commands.

Implementation

Default Region: us-east-1

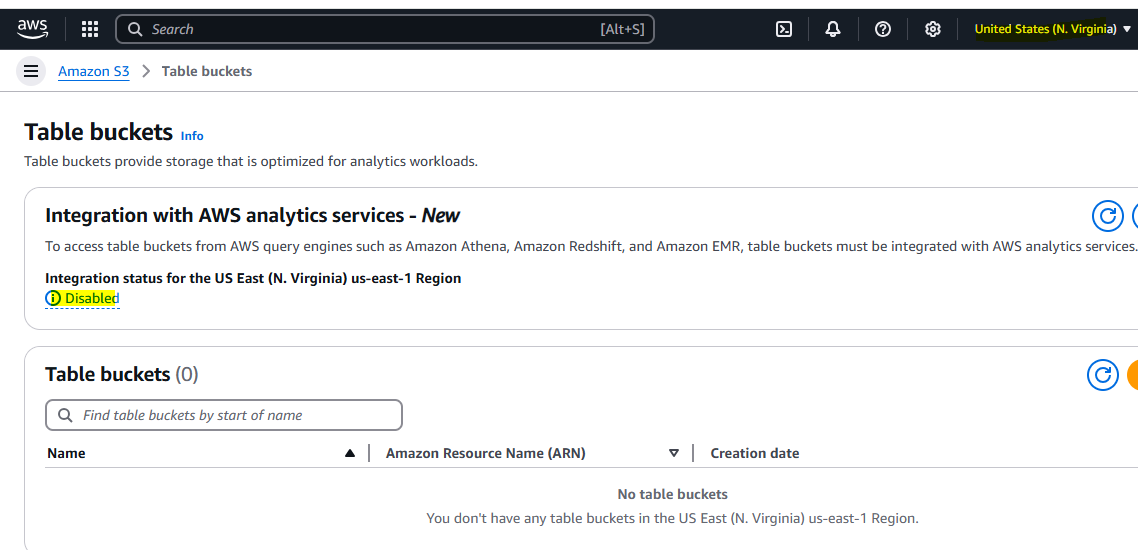

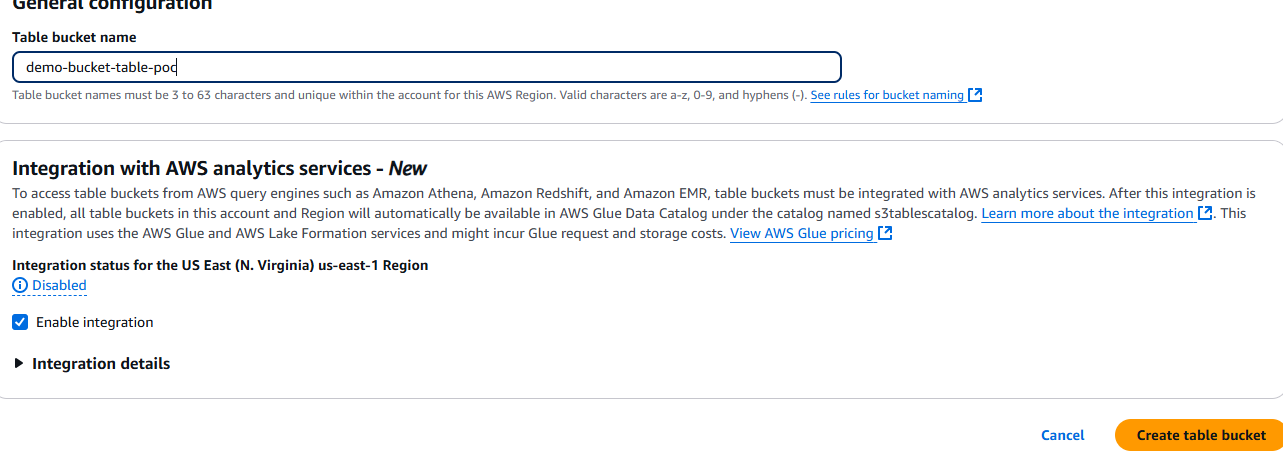

- Creating Table Bucket and enabling its integration

- After Creation of Table Bucket

Creating IAM Role

We need to create iam role with permissions as shown below and trust relationship policy as

{ "Version": "2012-10-17", "Statement": [ { "Effect": "Allow", "Principal": { "Service": "glue.amazonaws.com" }, "Action": "sts:AssumeRole" } ] }

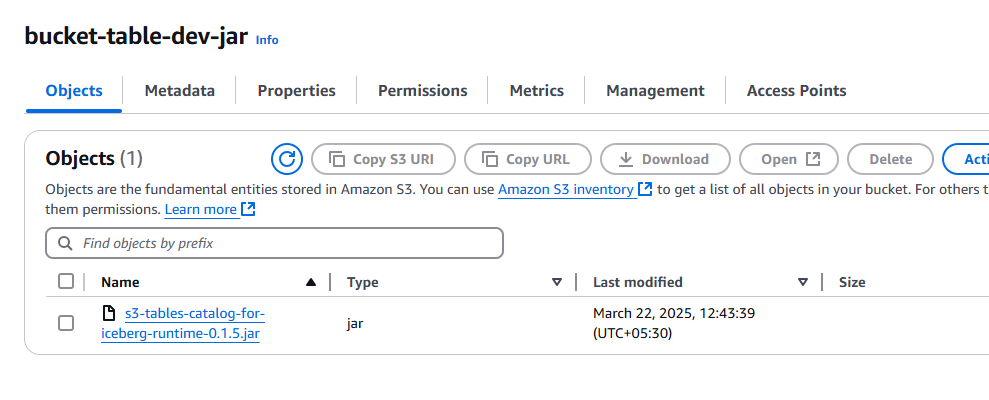

Downloading and Storing Jar Needed

Apache Iceberg Runtime Jar Download and storing it in s3 bucket

https://mvnrepository.com/artifact/software.amazon.s3tables/s3-tables-catalog-for-iceberg-runtime

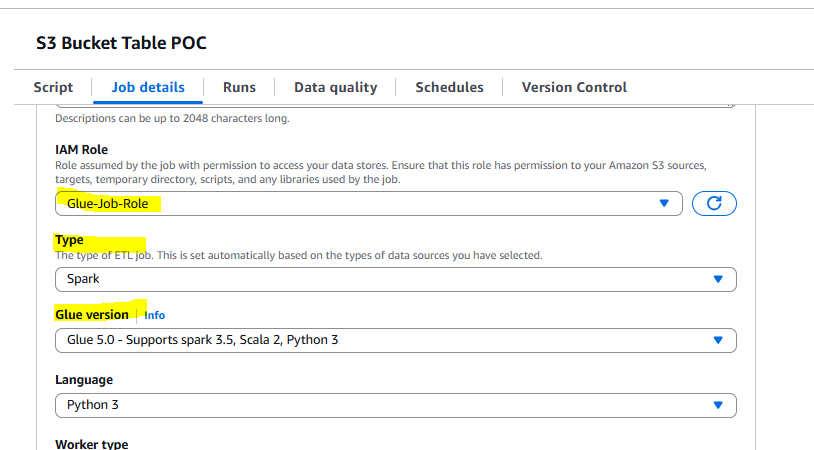

Creating Glue Job

Providing IAM Role which we have created in above mentioned step. Job type should be Spark and S3 Table Only Supports Glue Version 5.0

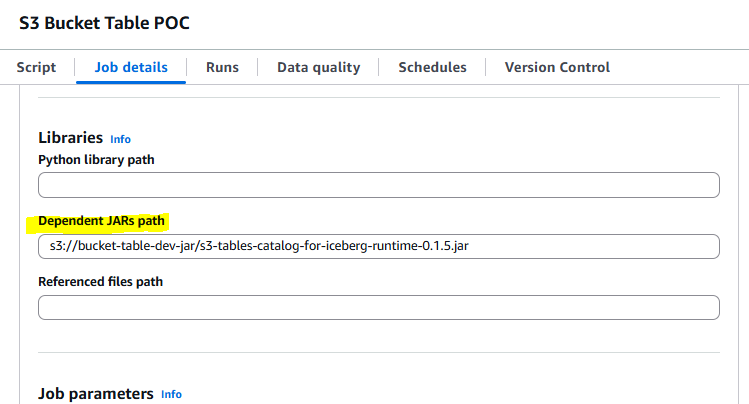

Adding Dependent jar path which we have stored in S3 Bucket.

Glue Script Code

Replace ACCOUNT_NO with actual account id in script.

import sys

from awsglue.transforms import *

from awsglue.utils import getResolvedOptions

from pyspark.context import SparkContext

from awsglue.context import GlueContext

from awsglue.job import Job

from pyspark.sql import SparkSession

# Configure Spark session for Iceberg

spark_conf = SparkSession.builder.appName("GlueJob") \

.config("spark.sql.catalog.s3tablesbucket", "org.apache.iceberg.spark.SparkCatalog") \

.config("spark.sql.catalog.s3tablesbucket.catalog-impl", "software.amazon.s3tables.iceberg.S3TablesCatalog") \

.config("spark.sql.catalog.s3tablesbucket.warehouse", "arn:aws:s3tables:us-east-1:ACCOUNT_NO:bucket/demo-bucket-table-poc") \

.config("spark.sql.defaultCatalog", "s3tablesbucket") \

.config("spark.sql.extensions", "org.apache.iceberg.spark.extensions.IcebergSparkSessionExtensions") \

.config("spark.sql.catalog.s3tablesbucket.cache-enabled", "false")

# Initialize Glue context with custom Spark configuration

sc = SparkContext.getOrCreate(conf=spark_conf.getOrCreate().sparkContext.getConf())

glueContext = GlueContext(sc)

spark = glueContext.spark_session

## @params: [JOB_NAME]

args = getResolvedOptions(sys.argv, ['JOB_NAME'])

job = Job(glueContext)

job.init(args['JOB_NAME'], args)

namespace = "demo"

table = "student_table"

def run_sql(query):

try:

result = spark.sql(query)

result.show()

return result

except Exception as e:

print(f"Error executing query '{query}': {str(e)}")

return None

def main():

try:

# Create a new namespace if it doesn't exist

print("CREATE NAMESPACE")

run_sql(f"CREATE NAMESPACE IF NOT EXISTS {namespace}")

# Show all namespaces

print("SHOW NAMESPACES")

run_sql("SHOW NAMESPACES")

# Describe a specific namespace

print("DESCRIBE NAMESPACE")

run_sql(f"DESCRIBE NAMESPACE {namespace}")

# Create table in the namespace

print("CREATE TABLE")

create_table_query = f"""

CREATE TABLE IF NOT EXISTS {namespace}.{table} (

rollno INT,

name STRING,

marks INT

)

"""

run_sql(create_table_query)

# Insert data into table

print("INSERT INTO")

insert_query = f"""

INSERT INTO {namespace}.{table}

VALUES

(1, 'ABC', 100),

(2, 'XYZ', 200)

"""

run_sql(insert_query)

# Show tables in the namespace

print("SHOW TABLES")

run_sql(f"SHOW TABLES IN {namespace}")

# Select all from a specific table

print("SELECT FROM TABLE")

run_sql(f"SELECT * FROM {namespace}.{table} LIMIT 20")

except Exception as e:

print(f"An error occurred in main execution: {str(e)}")

raise # Re-raise the exception for Glue to handle

finally:

job.commit()

if __name__ == "__main__":

try:

main()

except Exception as e:

print(f"Job failed with error: {str(e)}")

sys.exit(1)

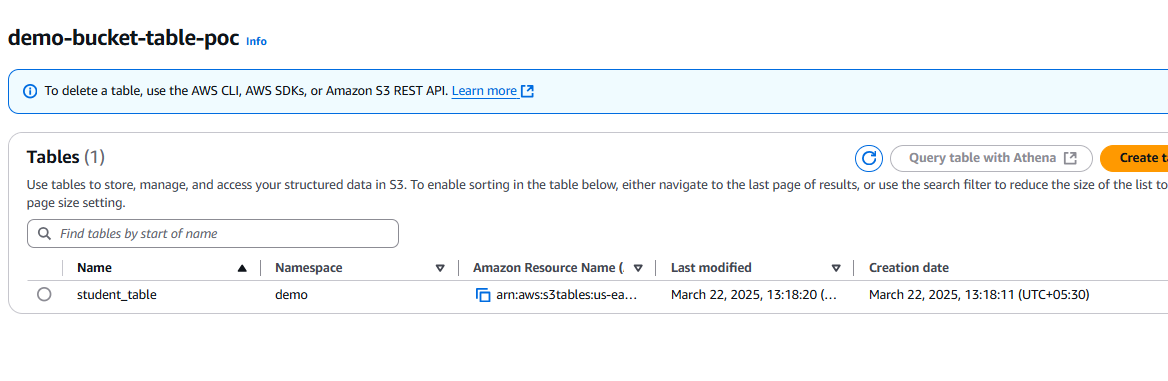

After Glue Job Execution you can able to see namespace and table details under s3 table.

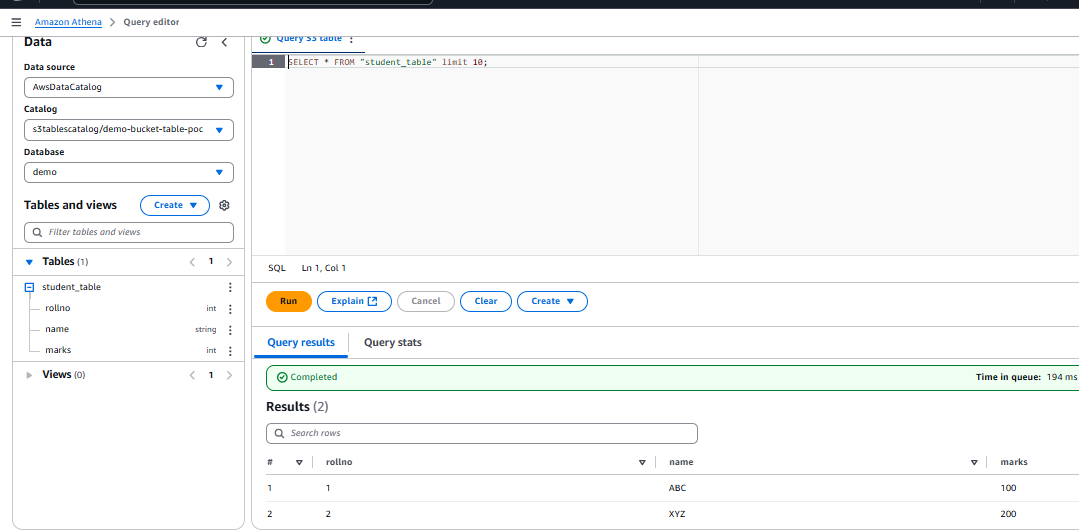

Querying data in athena

Athena Query: SELECT * FROM "student_table" limit 10;

References & Additional Resources

https://docs.aws.amazon.com/AmazonS3/latest/userguide/s3-tables.html

"Thank you for reading! If you found this blog helpful, don't forget to subscribe and follow for more insightful content. Your support keeps me motivated to bring you valuable insights. Stay updated and never miss out on our latest posts. Feel free to leave comments or suggestions for future topics. Happy learning!"

https://awslearner.hashnode.dev/amazon-web-services-via-category

https://awslearner.hashnode.dev/aws-beginner-level-project-ideas

Subscribe to my newsletter

Read articles from Utkarsh Rastogi directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by

Utkarsh Rastogi

Utkarsh Rastogi

👨💻 AWS Cloud Engineer | Around 6 years of Corporate Experience | Driving Innovation in Cloud Solutions 🔧 Day-to-Day Tasks: Specialize in creating AWS infrastructure for Migration Projects. Leveraging services such as S3, SNS, SQS, IAM, Lambda, System Manager, Kinesis, OpenSearch, Cognito, Storage Gateway, Cloud Watch, API Gateway, AWS Event Scheduler, Secret Manager, ECS, Application Load Balancer, VPC among others. Additionally, I excel in crafting Splunk Dashboards and implementing alerting mechanisms for Cloud Watch logs to monitor failures. My approach involves constructing AWS infrastructure using the Serverless framework and Cloud Formation templates, while automating tasks through Boto3 (Python Scripting) Lambdas. 🎯 Passion: I am deeply passionate about continuously learning new technologies and eagerly anticipate the transformative impact of cloud computing on the tech landscape. 📧 Connect: Feel free to reach out to me at awslearningoals@gmail.com. Let's connect and explore potential collaborations! https://www.linkedin.com/in/rastogiutkarsh/