Text Summarizer - using LangChain

Harshita Sharma

I recently started exploring Generative AI through the LangChain series by CampusX. LangChain offers a variety of components, such as models, prompts, memory, indexes, and agents, to build powerful LLM-powered applications. So far, I’ve dived into models, prompts, output parsers, and a bit of chains.

The best way to retain and reinforce new technical concepts is by building something practical. To solidify my understanding of LangChain’s prompts and output parsers, I decided to create a mini project—a text summarizer that extracts key information from user input and generates a structured summary.

What does my project do?

When summarizing large text or creating structured outputs, it’s helpful to extract key details like:

Role: Who the content is for.

Topic: What the content is about.

Lines: The number of lines for the summary.

My project takes a natural language input and extracts these three key details. For instance, given the sentence:

"You are a maths teacher. Explain dot product in 5 lines."

The summarizer extracts:

{
  "role": "maths teacher",
  "topic": "dot product",
  "lines": 5
}

But what if the user is lazy and doesn’t specify all the details? It still works!

For example:

"Explain dot product"

The system intelligently assigns default values for missing keys:

{
  "role": "Explainer",
  "topic": "dot product",
  "lines": 1
}
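In this project the model itself chooses these fallback values. To make the idea concrete, here is a minimal plain-Python sketch of the same behaviour. The `DEFAULTS` dictionary and the `fill_defaults` helper are hypothetical names used only for illustration; they are not part of the project or of LangChain.

```python
# Hypothetical defaults, taken from the example output above.
DEFAULTS = {"role": "Explainer", "lines": 1}

def fill_defaults(extracted: dict) -> dict:
    """Return a copy of `extracted` with missing or None keys replaced by defaults."""
    present = {key: value for key, value in extracted.items() if value is not None}
    return {**DEFAULTS, **present}

filled = fill_defaults({"topic": "dot product"})
print(filled)
```

Values the user did specify win over the defaults, because `present` is unpacked last.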

Project Evolution: Three Versions with Different Parsers

To explore LangChain’s output parsers, I built three versions of the summarizer, each using a different parser:

Version 1: JsonOutputParser → Basic parsing into JSON format.

Version 2: StructuredOutputParser → Allows defining a schema structure for improved consistency.

Version 3: PydanticOutputParser → Enables strict validation, constraints, and default values.

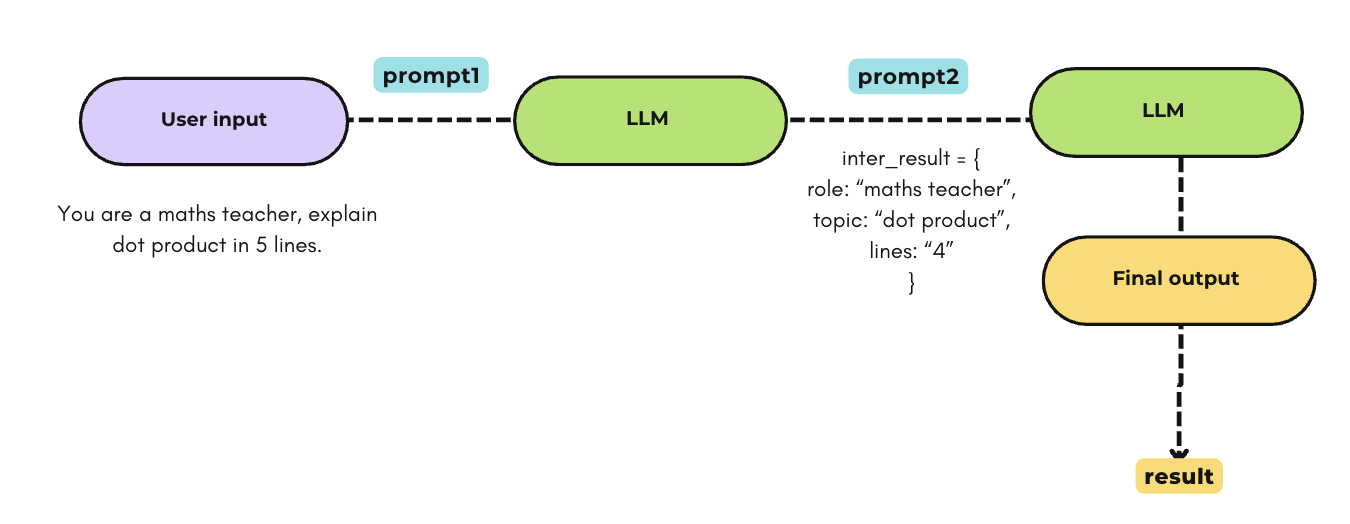

Project Pipeline

The summarizer works in two stages:

Extracting Key Information:

- The model first extracts the role, topic, and lines from the user input.

Generating the Summary:

- It then uses the extracted details to generate a structured summary.

The LLM is therefore called twice: once to extract the key information, and once to generate the final output from the extracted details.
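The two-stage flow can be sketched in plain Python without any LLM at all. Everything here is an assumption made for illustration: `fake_llm` is a stand-in that returns canned text so the example runs offline, and `summarize` is a hypothetical helper, not the project's actual code.

```python
import json

def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call; returns canned text so the sketch runs offline."""
    if prompt.startswith("Extract"):
        return '{"role": "maths teacher", "topic": "dot product", "lines": 5}'
    return f"[model answer to: {prompt}]"

def summarize(user_input: str) -> str:
    # Stage 1: ask the model for the key details and parse the reply as JSON.
    details = json.loads(fake_llm(f"Extract role, topic and lines from: {user_input}"))
    # Stage 2: build the second prompt from the extracted details.
    prompt = (
        f"You are a helpful {details['role']}. "
        f"Explain {details['topic']} in {details['lines']} lines."
    )
    return fake_llm(prompt)

result = summarize("You are a maths teacher. Explain dot product in 5 lines.")
print(result)
```

The point of the sketch is only the shape of the pipeline: the output of the first call becomes the input of the second.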

Version 1: JsonOutputParser

I started by using JsonOutputParser() to extract structured data because the expected format resembles JSON.

from langchain_core.prompts import PromptTemplate
from langchain_core.output_parsers import JsonOutputParser, StrOutputParser

parser = JsonOutputParser()

# Prompt 1: extract role, topic, and lines from the raw user input
template1 = PromptTemplate(
    template="""Take the user input: {user_input} and extract the role you have to play,
the topic to explain, and the number of lines.\n {format_instruction}""",
    input_variables=['user_input'],
    partial_variables={'format_instruction': parser.get_format_instructions()}
)

# Prompt 2: generate the final answer from the extracted values
template2 = PromptTemplate(
    template="""You are a helpful {role}. Answer the user question:
{user_question} in {lines} lines.""",
    input_variables=['role', 'user_question', 'lines']
)

Workflow:

Prompt 1: Instructions for the LLM to extract key details (role, topic, lines) from user input.

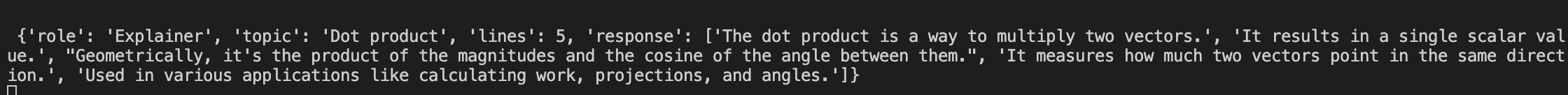

Intermediate Result: JSON output from the first model call, stored in inter_result.

Prompt 2: Instructions for the LLM to use the extracted values to generate the final summary.

Final Output: Display the summarized content in plain text using StrOutputParser.

The implementation of this workflow is given below.

I created two separate chains, one for each task. Although it is possible to do this with a single chain, I wanted to see what the intermediate output looks like, so I kept them separate:

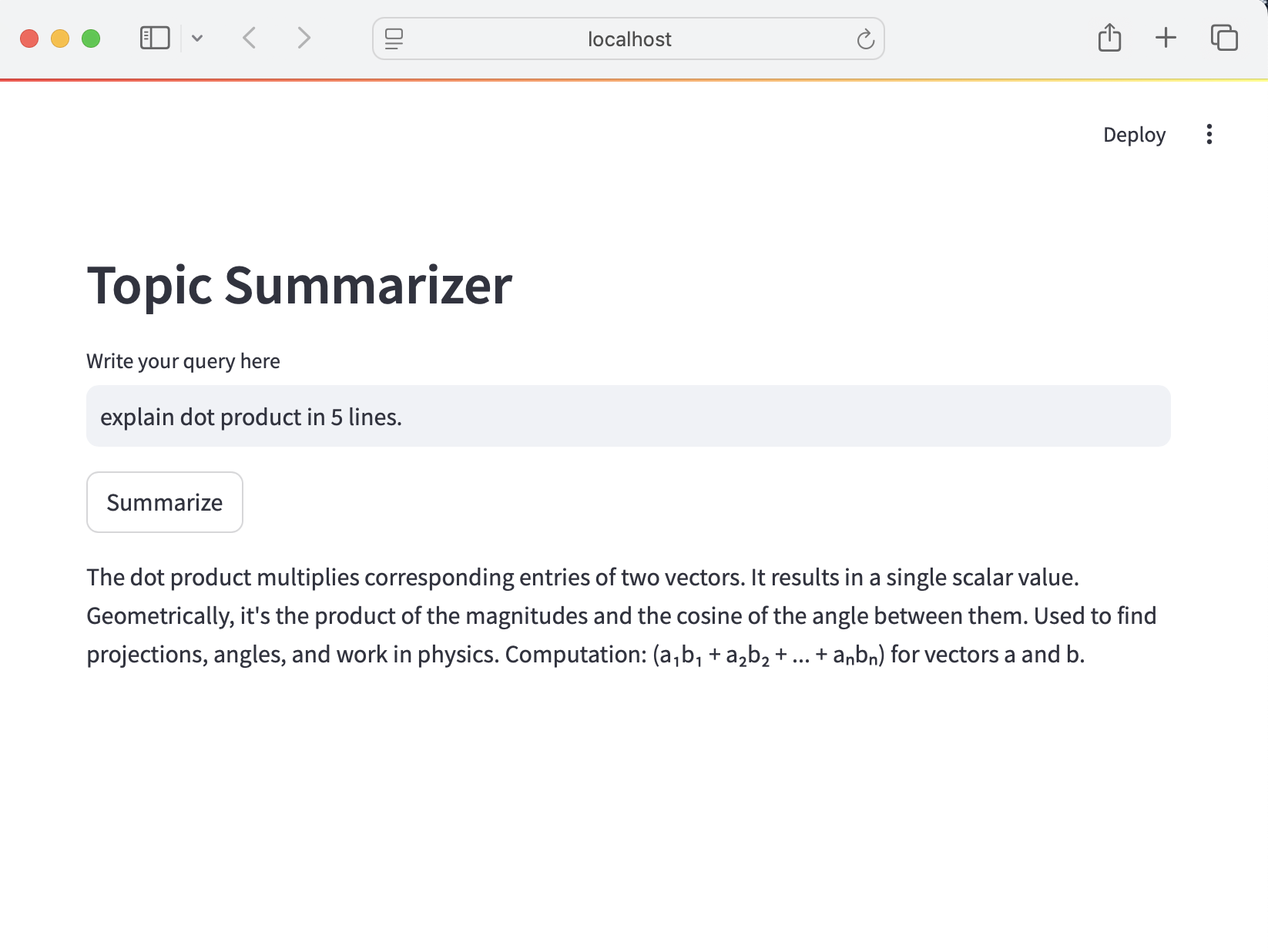

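import streamlit as st

# `model` is assumed to be a chat model instance defined elsewhere in the project

# Streamlit text box for the user's query
user_input = st.text_input('Write your query here')

if st.button('Summarize'):
    # Chain 1: extract role, topic, and lines as a dictionary
    chain1 = template1 | model | parser
    inter_result = chain1.invoke({'user_input': user_input})
    print(f"\n\n {inter_result}")  # inspect the intermediate result in the console

    # Chain 2: generate the final answer as plain text
    chain2 = template2 | model | StrOutputParser()
    result = chain2.invoke({
        'role': inter_result['role'],
        'user_question': inter_result['topic'],
        'lines': inter_result['lines']
    })
    st.write(result)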

As the snapshot below shows, it worked fine even when the question was asked in this format:

Why JsonOutputParser?

Since I needed the extracted data in a JSON-like format, JsonOutputParser() was an obvious first choice.

It worked well even with incomplete queries by assigning default values.

Problem with JsonOutputParser:

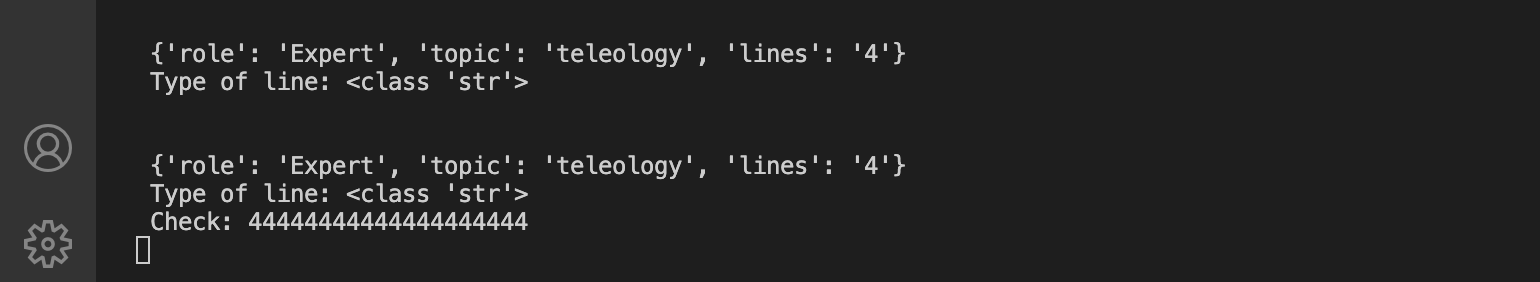

If I want the default role to be, say, “Expert” rather than “Explainer”, and the default number of lines to be 4 rather than 1, there is no option to define this as part of a schema. Of course, you can always state it explicitly in the instruction to the LLM, but there should be a better way to handle it, one where the structure of the schema is defined explicitly.

There is no way to validate the data generated by the LLM. For example, if the lines value is expected to be an integer but comes back as a string, it can cause issues.

Key Learning:

JsonOutputParser works but lacks strict validation and schema enforcement, which can lead to unreliable outputs.
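To illustrate the validation gap, here is a small sketch using only the standard `json` module. The `raw_reply` string is a made-up model reply and `check_extraction` is a hypothetical helper; the sketch shows the kind of manual check you would have to write yourself when the parser only turns text into a dictionary.

```python
import json

# Hypothetical raw model reply in which "lines" came back as a string.
raw_reply = '{"role": "maths teacher", "topic": "dot product", "lines": "5"}'

# Parsing succeeds: valid JSON says nothing about the types we expected.
parsed = json.loads(raw_reply)

def check_extraction(data: dict) -> list[str]:
    """Return a list of problems found in the extracted dictionary."""
    problems = []
    for key in ("role", "topic", "lines"):
        if key not in data:
            problems.append(f"missing key: {key}")
    if "lines" in data and not isinstance(data["lines"], int):
        problems.append(f"lines should be int, got {type(data['lines']).__name__}")
    return problems

problems = check_extraction(parsed)
print(problems)
```

Hand-written checks like this grow quickly as the schema grows, which is the motivation for moving to a parser that knows about the schema.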

Version 2: StructuredOutputParser

To solve the lack of schema enforcement, I moved on to StructuredOutputParser, which allows defining the structure of the output.

Workflow Changes:

Instead of relying on the LLM’s interpretation of the instruction given to it, I explicitly defined the schema using ResponseSchema, with one ResponseSchema object for each piece of key information expected.

This ensured the model followed a consistent output format.

# importing required libraries to define schema
from langchain.output_parsers import StructuredOutputParser, ResponseSchema

schema = [
    ResponseSchema(name="role", description="Extract the role from the user question. If no role is mentioned take 'Expert' as default."),
    ResponseSchema(name="topic", description="extract the topic to explain from the user question"),
    ResponseSchema(name="lines", description="Extract number of lines the user want the answer in. If nothing is given take 4 as default number of lines.")
]

parser = StructuredOutputParser.from_response_schemas(schema)

Here the instructions about default values are placed inside each key’s description, to check whether that approach works. In my tests it worked fine.

Why StructuredOutputParser?

It allows defining the schema beforehand, making the output more reliable.

Improves consistency by clearly specifying what to extract.

Problems with StructuredOutputParser:

Giving default values in the form of an instruction is still not reliable: if the instruction is not clear enough, the LLM might not behave as expected.

It still lacks data validation and doesn’t guarantee that lines is always an integer. For example, have a look at the snapshot below. Here the type of lines is str, so a mathematical operation on it (lines * 20) produces an unexpected result: instead of multiplying, it prints “4”, the number of lines, 20 times!

No built-in default values. If the model leaves a field out or returns null for it, the parser has no default to fall back on.
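The (lines * 20) surprise is ordinary Python behaviour and can be reproduced without LangChain. A minimal sketch, assuming the parser handed back the string "4":

```python
lines = "4"  # assumed parser output: a string, not a number

# With a str on the left, * repeats the string instead of multiplying.
repeated = lines * 20
print(repeated)

# Converting to int first gives the arithmetic result.
product = int(lines) * 20
print(product)
```

Nothing raises an error here, which is what makes the bug easy to miss: the wrong type flows through silently until the output looks odd.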

Key Learning:

- StructuredOutputParser ensures consistent formatting but doesn’t offer strict type validation or defaults.

Version 3: PydanticOutputParser

Finally, I switched to PydanticOutputParser(), which combines schema definition with strict validation, constraints, and default values.

# importing libraries
from langchain_core.output_parsers import PydanticOutputParser
from pydantic import BaseModel, Field

class Summarize(BaseModel):
    role: str = Field(default='Expert', description="Extract the role from the user question.")
    topic: str = Field(description="extract the topic to explain from the user question")
    lines: int = Field(gt=1, default=4, description="Extract number of lines the user want the answer in.")

parser = PydanticOutputParser(pydantic_object=Summarize)

Workflow Changes:

I created a schema class called Summarize, extending BaseModel from Pydantic.

Used Field() to:

Define default values.

Add constraints (e.g., lines must be an integer greater than 1).

Provide clear descriptions for each field.

Why PydanticOutputParser?

Adds strict validation, ensuring the lines value is always an integer.

Allows defining default values, so no need to rely on ambiguous LLM instructions.

Provides error handling, catches schema violations early.
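These three behaviours can be checked on the Pydantic model directly, without calling an LLM. The sketch below assumes only that `pydantic` is installed; it instantiates a `Summarize` model like the one above by hand (with shortened descriptions) rather than going through PydanticOutputParser, so it shows what the schema enforces, not the full parsing path.

```python
from pydantic import BaseModel, Field, ValidationError

class Summarize(BaseModel):
    role: str = Field(default="Expert", description="Role to answer as.")
    topic: str = Field(description="Topic to explain.")
    lines: int = Field(default=4, gt=1, description="Number of lines in the answer.")

# Missing fields fall back to the declared defaults.
partial = Summarize(topic="dot product")
print(partial.role, partial.lines)

# A numeric string is converted to int by Pydantic's default validation mode.
coerced = Summarize(topic="dot product", lines="5")
print(type(coerced.lines).__name__)

# A value that violates the gt=1 constraint is rejected with a ValidationError.
try:
    Summarize(topic="dot product", lines=1)
    rejected = False
except ValidationError:
    rejected = True
print(rejected)
```

Note that with gt=1 a request for a one-line answer is rejected; ge=1 would be the constraint to use if single-line summaries should be allowed.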

Problems with PydanticOutputParser:

Slightly more complex setup due to schema definition.

Adds a bit of overhead but significantly improves reliability.

Key Learning: PydanticOutputParser offers the best reliability, validation, and maintainability by enforcing schema and type validation.

Overall, PydanticOutputParser works best in this scenario. However, JsonOutputParser is still useful for quick prototyping, and StructuredOutputParser offers flexibility when validation isn’t necessary.

Conclusion

This mini project was a practical way to explore LangChain parsers and understand their strengths and limitations. By iterating through three versions, I learned the importance of schema enforcement, validation, and data consistency—crucial aspects when building LLM-powered applications.

If you’re exploring LangChain, I highly recommend experimenting with different parsers and building your own mini projects to solidify your understanding.

You can check out the full project code here: Text Summarizer

I hope you enjoyed this breakdown of my thought process.

Let me know if you have any questions or suggestions! ✨
