Building a RAG-Powered Chatbot with Streamlit, OpenAI, and Google Cloud

Govinda Bobade

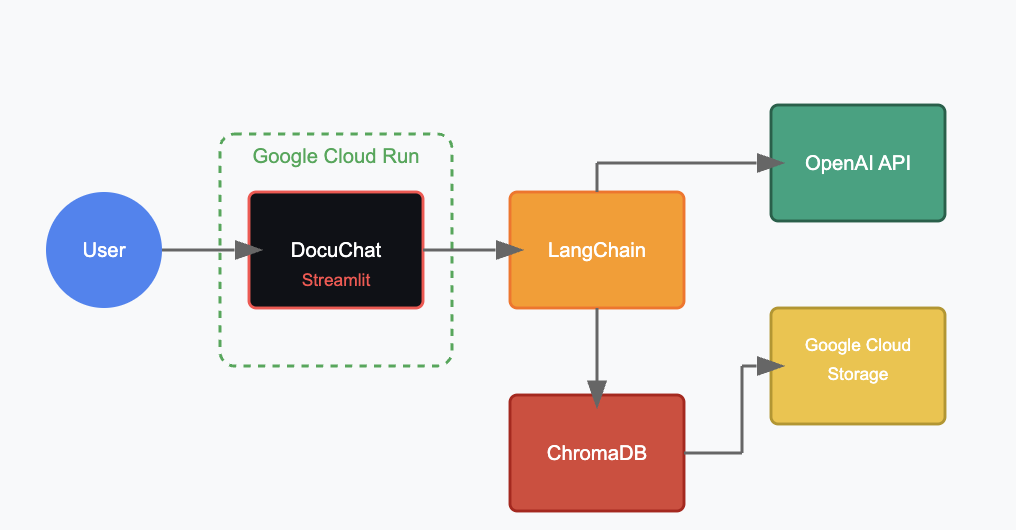

Deploying a Retrieval-Augmented Generation (RAG) application to the cloud is challenging but rewarding. In this blog post, I'll walk you through creating and deploying a document-based chatbot that lets users upload documents, processes them, and then lets users chat with the content using OpenAI's large language models.

The Challenge of RAG Applications in the Cloud

Building a RAG application that runs smoothly in the cloud presents several unique challenges:

Persistence across deployments: How do you ensure your vector database persists when containers restart?

Stateless environments: Cloud Run containers are ephemeral and stateless by design

File handling: Temporary file storage and processing in containerized environments

Environment configuration: Setting up credentials and configuration securely

Resource optimization: Balancing performance with cost

After weeks of trial and error and many failed deployments, I arrived at a solution that addresses these challenges using Google Cloud's infrastructure.

Let's dive into how we can build this application.

Project Overview

I have built a Streamlit application called "DocuChat" that:

Allows users to upload PDF or text documents

Processes documents using LangChain and OpenAI embeddings

Stores document embeddings in ChromaDB

Persists the vector store in Google Cloud Storage

Provides a chat interface for querying document content

Setting Up the Project Structure

First, let's set up our project structure. The code is available at:

https://github.com/data-vishwa/GCP_RAG_Cloudrun

    docuchat/
    ├── app.py               # Main Streamlit application
    ├── Dockerfile           # Container configuration
    ├── requirements.txt     # Python dependencies
    ├── deploy-commands.sh   # Deployment script
    └── utils/               # Utility modules
        ├── __init__.py
        ├── document_processor.py
        ├── gcs_utils.py
        └── chat_engine.py

Understanding RAG: How It All Works

Let's break down how our Retrieval-Augmented Generation (RAG) application works:

Document Processing Flow

Document Upload: Users upload PDF or text documents through the Streamlit interface.

Text Extraction: The application uses PyPDFLoader or TextLoader to extract text from the documents.

Text Chunking: The extracted text is split into smaller chunks (1000 characters with 100-character overlap).

Embedding Generation: OpenAI's embedding model converts these text chunks into vector embeddings.

Vector Storage: The embeddings are stored in ChromaDB, a vector database.

Persistence: The vector database is persisted both locally and in Google Cloud Storage.
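To make the chunking step concrete, here is a minimal plain-Python sketch of fixed-size chunking with overlap, using the 1000/100 values mentioned above. The post does not show which splitter the app uses, so the function name `split_text` and its exact boundary behavior are assumptions; a LangChain text splitter would typically also try to break on paragraph or sentence boundaries, which this stand-in does not.

```python
def split_text(text: str, chunk_size: int = 1000, chunk_overlap: int = 100) -> list[str]:
    """Split text into character windows of chunk_size that overlap by chunk_overlap.

    Hypothetical helper for illustration; not the app's actual splitter.
    """
    if chunk_size < 1:
        raise ValueError("chunk_size must be at least 1")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")

    step = chunk_size - chunk_overlap  # how far each window advances
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once a window has reached the end of the text.
        if start + chunk_size >= len(text):
            break
    return chunks
```

The overlap means the last 100 characters of one chunk reappear at the start of the next, so a sentence cut by a chunk boundary is still seen whole in at least one chunk.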

Query Flow

User Question: The user types a question about their documents.

Similarity Search: The question is converted to an embedding and used to search ChromaDB for similar text chunks.

Context Assembly: The most relevant chunks are assembled into context.

LLM Response Generation: The context and question are sent to the OpenAI LLM.

Response Display: The LLM's response is displayed to the user.
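The query flow above can be sketched end to end without any external services. This is a toy illustration only: `embed` uses word counts as a stand-in for OpenAI embeddings, the sorted list stands in for ChromaDB's similarity search, and the prompt wording in `build_prompt` is an assumption rather than the app's actual prompt.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(question: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the question."""
    query_vector = embed(question)
    ranked = sorted(
        chunks,
        key=lambda chunk: cosine_similarity(query_vector, embed(chunk)),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question: str, context_chunks: list[str]) -> str:
    """Assemble retrieved chunks and the question into one prompt string."""
    context = "\n\n".join(context_chunks)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )
```

In the real application, the string returned by `build_prompt` would be what gets sent to the OpenAI model, and the model's reply is what the chat interface displays.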

Common Challenges and Solutions

During development, I encountered several challenges that might trip up others:

1. Persistence in Cloud Run

Challenge: Cloud Run containers are ephemeral, meaning any local file changes are lost when the container is restarted.

Solution: We persist the vector database to Google Cloud Storage. On startup, the application checks if a vector database exists in GCS and downloads it if found.
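A minimal sketch of that sync logic follows. The helper names `upload_directory` and `download_directory`, the `chroma_db` prefix, and the bucket name in the comments are all hypothetical; the repository's `gcs_utils.py` may be organized differently. The functions only rely on `bucket.blob(...)`, `bucket.list_blobs(prefix=...)`, `blob.upload_from_filename(...)`, and `blob.download_to_filename(...)`, which are methods of the `google-cloud-storage` client library's `Bucket` and `Blob` classes.

```python
import os


def upload_directory(bucket, local_dir: str, prefix: str) -> int:
    """Upload every file under local_dir to the bucket beneath prefix.

    Returns the number of files uploaded.
    """
    count = 0
    for root, _dirs, files in os.walk(local_dir):
        for name in files:
            path = os.path.join(root, name)
            # Object names always use forward slashes, whatever the OS.
            relative = os.path.relpath(path, local_dir).replace(os.sep, "/")
            bucket.blob(f"{prefix}/{relative}").upload_from_filename(path)
            count += 1
    return count


def download_directory(bucket, prefix: str, local_dir: str) -> int:
    """Download every object beneath prefix into local_dir.

    Returns the number of files downloaded; 0 means no saved database exists.
    """
    count = 0
    for blob in bucket.list_blobs(prefix=f"{prefix}/"):
        relative = blob.name[len(prefix) + 1:]
        if not relative or relative.endswith("/"):
            continue  # skip folder placeholder objects
        target = os.path.join(local_dir, *relative.split("/"))
        os.makedirs(os.path.dirname(target), exist_ok=True)
        blob.download_to_filename(target)
        count += 1
    return count


# Assumed usage with the real client (names are placeholders):
#   from google.cloud import storage
#   bucket = storage.Client().bucket("my-docuchat-bucket")
#   if download_directory(bucket, "chroma_db", "./chroma_db") == 0:
#       ...  # no saved vector store yet, start with an empty one
#   upload_directory(bucket, "./chroma_db", "chroma_db")  # after indexing
```

Passing the bucket in as an argument keeps the helpers easy to test with a fake bucket, and keeps credentials handling in one place at startup.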

2. Cold Start Performance

Challenge: Loading large databases during cold starts can cause timeouts.

Solution:

Minimize the container size

Use min instances > 0 to avoid cold starts

Break large documents into smaller pieces

3. Memory Limitations

Challenge: Processing large documents can exceed memory limits.

Solution:

Allocate more memory to the Cloud Run service (2Gi or more)

Process documents in batches

Optimize chunking parameters
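As an illustration of batch processing, the sketch below feeds chunks to an indexing callback a fixed number at a time, so only one batch of embeddings needs to be in flight at once. The names `batched` and `index_in_batches`, the `add_batch` callback, and the default batch size of 100 are assumptions for this example, not values taken from the app.

```python
from typing import Callable, Iterator, Sequence


def batched(items: Sequence[str], batch_size: int) -> Iterator[Sequence[str]]:
    """Yield consecutive slices of items, each at most batch_size long."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


def index_in_batches(
    chunks: Sequence[str],
    add_batch: Callable[[Sequence[str]], None],
    batch_size: int = 100,
) -> int:
    """Pass chunks to add_batch one batch at a time; return how many were indexed."""
    total = 0
    for batch in batched(chunks, batch_size):
        add_batch(batch)  # e.g. embed the batch and add it to the vector store
        total += len(batch)
    return total
```

In practice `add_batch` would wrap whatever call embeds the texts and writes them to the vector store; a smaller batch size lowers peak memory at the cost of more API round trips.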

4. OpenAI API Keys

Challenge: Securely managing API keys.

Solution: Use environment variables in Cloud Run. Never hardcode keys in your codebase.
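A small sketch of reading the key at startup and failing fast when it is missing. The helper name `get_required_env` is hypothetical, and `OPENAI_API_KEY` is assumed here as the variable name because it is the one the OpenAI Python client reads by default.

```python
import os


def get_required_env(name: str) -> str:
    """Return the value of an environment variable, or raise if it is unset or blank."""
    value = os.environ.get(name, "").strip()
    if not value:
        raise RuntimeError(f"Environment variable {name} is not set")
    return value


# Assumed usage at application startup:
#   openai_api_key = get_required_env("OPENAI_API_KEY")
```

Failing at startup surfaces a misconfigured deployment immediately in the Cloud Run logs, rather than on the first user question.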

Cost Optimization

To keep costs under control while running this application:

Cloud Run Budget:

Use min instances = 0 for development environments

For production, balance between availability and cost

OpenAI API Usage:

Monitor token usage

Use cheaper models for initial testing

Implement caching for frequent queries

Storage Optimization:

Regularly clean up unused vector stores

Compress data where possible
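The caching suggestion under OpenAI API Usage could look something like the sketch below: an in-memory cache keyed on the normalized question, so repeated questions do not trigger another API call. The `QueryCache` class, its `answer_fn` callback, and the 256-entry limit are assumptions for illustration. Because Cloud Run instances are ephemeral, such a cache only lives as long as the instance does, and it should be cleared whenever the indexed documents change.

```python
from typing import Callable, Dict


class QueryCache:
    """Cache answers by normalized question text (hypothetical helper)."""

    def __init__(self, answer_fn: Callable[[str], str], max_entries: int = 256):
        self._answer_fn = answer_fn
        self._max_entries = max_entries
        self._cache: Dict[str, str] = {}

    @staticmethod
    def _normalize(question: str) -> str:
        # Ignore case and extra whitespace so trivial variations share a key.
        return " ".join(question.lower().split())

    def ask(self, question: str) -> str:
        key = self._normalize(question)
        if key in self._cache:
            return self._cache[key]
        answer = self._answer_fn(question)
        if len(self._cache) >= self._max_entries:
            # Evict the oldest inserted entry (dicts preserve insertion order).
            self._cache.pop(next(iter(self._cache)))
        self._cache[key] = answer
        return answer

    def clear(self) -> None:
        """Drop all cached answers, e.g. after new documents are uploaded."""
        self._cache.clear()
```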

Conclusion

Building a RAG-powered chatbot that works reliably on Google Cloud Run requires addressing specific challenges related to persistence, state management, and resource optimization. By using the modular approach outlined in this post, you can create a robust, scalable application that allows users to chat with their documents.

The key takeaways:

Always consider persistence in stateless environments

Structure your code modularly for better maintenance

Use Google Cloud Storage for persistent state

Carefully manage API keys and secrets

Monitor and optimize resource usage

With these considerations in mind, you can build powerful AI applications that leverage the latest RAG techniques while running reliably in the cloud.

Happy building!
