Understanding the Model Context Protocol (MCP) by Anthropic

Nagen K

Introduction

The Model Context Protocol (MCP) is an open standard introduced by Anthropic that lets AI applications connect to external tools and data in a consistent, secure, and extensible way. Anthropic's documentation compares MCP to a USB-C port for AI applications: one standard interface for connecting LLMs to files, databases, APIs, and more.

MCP bridges the gap between large language models and the real-world systems they need to reach, such as file systems, REST APIs, cloud tools, or custom business logic. It does this by defining a universal protocol for discovery, interaction, and contextual grounding. Instead of hardcoding prompts or writing model-specific plugins, developers can build reusable, composable MCP servers that any MCP-compatible application can use, regardless of the underlying model.

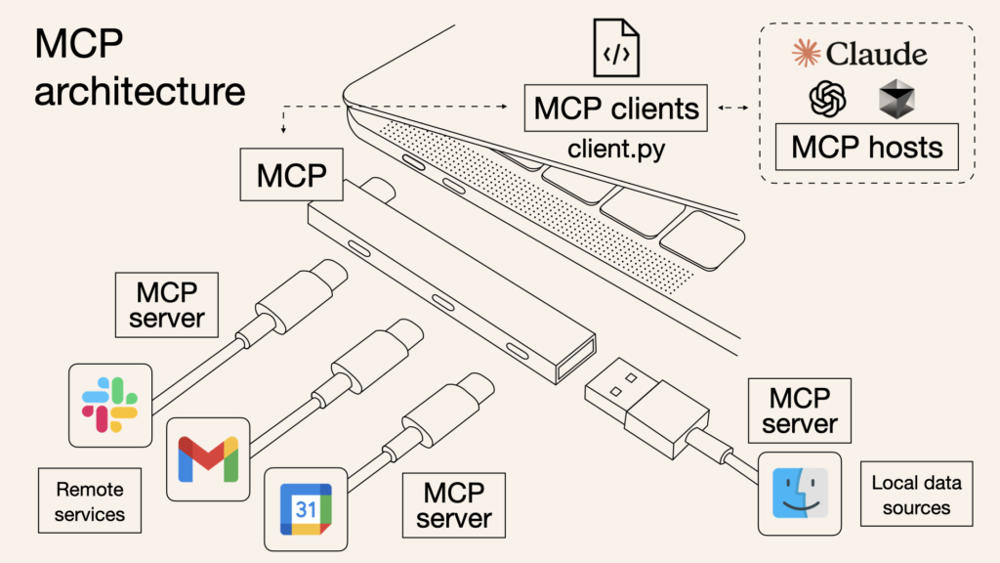

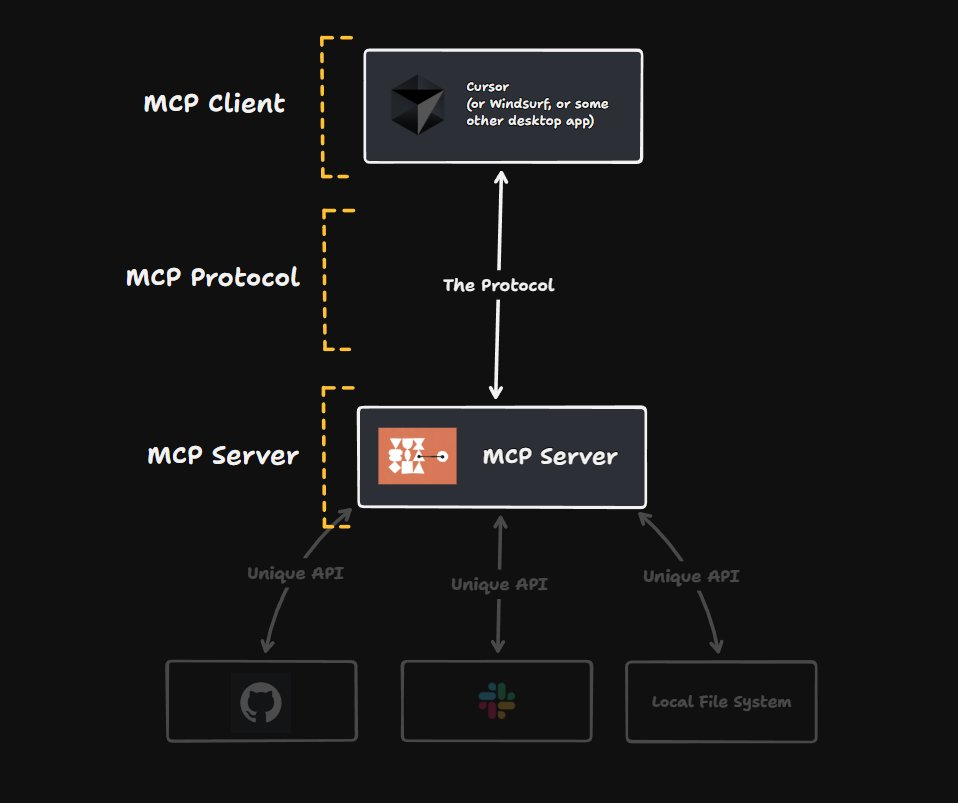

At its core, MCP uses a client-server model: the AI application (the host) runs one MCP client per connection, and each client talks to one MCP-compliant server. Each server can expose "resources" (read-only data), "tools" (executable functions), and "prompts" (reusable templates), so the AI can retrieve relevant context or perform actions without knowing the backend specifics.

Why MCP Matters

Before MCP, integrating tools or data with AI models was fragmented and inconsistent. MCP solves this by:

Providing a standard protocol for accessing data (resources) and invoking actions (tools).

Supporting cross-application interoperability.

Making it possible to carry context across sessions (for example, through a server that stores and serves memories).

Supporting security and approval flows for sensitive actions (the spec calls on hosts to obtain explicit user consent before invoking tools).

Core Architecture

Components:

Host: AI-powered app (e.g., Claude, IDE, chatbot)

MCP Client: Embedded in host; communicates with servers.

MCP Server: Exposes resources/tools from external systems.

How It Works

Communication Protocol:

Based on JSON-RPC 2.0

Transports:

stdio for local servers, HTTP+SSE for remote servers (newer spec revisions replace HTTP+SSE with Streamable HTTP)
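For the stdio transport, the client launches the server as a subprocess and the two exchange JSON-RPC messages over stdin/stdout, one message per line with no embedded newlines. The sketch below shows only that framing, using the standard library; `encode_message` and `decode_messages` are hypothetical helper names, not part of any MCP SDK.

```python
import json
from typing import Any, Iterable, Iterator


def encode_message(message: dict[str, Any]) -> bytes:
    """Serialize one JSON-RPC message as a single newline-terminated line."""
    # json.dumps escapes newlines inside strings, so the only raw newline
    # in the output is the delimiter appended here.
    return (json.dumps(message, separators=(",", ":")) + "\n").encode("utf-8")


def decode_messages(lines: Iterable[bytes]) -> Iterator[dict[str, Any]]:
    """Parse newline-delimited JSON-RPC messages, skipping blank lines."""
    for raw in lines:
        line = raw.strip()
        if line:
            yield json.loads(line)


if __name__ == "__main__":
    wire = encode_message({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
    print(wire)
    for msg in decode_messages(wire.splitlines(keepends=True)):
        print(msg["method"])
```

In a real client, `encode_message` output is written to the server's stdin and `decode_messages` reads from its stdout.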

Initialization Flow:

initialize → notifications/initialized → ready to exchange resources/*, tools/*, etc.
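A minimal sketch of the messages in that handshake, built as plain Python dicts. It assumes protocol revision `2024-11-05` (one published revision; a client should send the newest one it supports); the client name and the helper functions are hypothetical.

```python
import json
from typing import Any

# Assumed spec revision; real clients send the newest revision they support.
PROTOCOL_VERSION = "2024-11-05"


def initialize_request(request_id: int) -> dict[str, Any]:
    """Client -> server: the first request of every session."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "initialize",
        "params": {
            "protocolVersion": PROTOCOL_VERSION,
            # Client capabilities this sketch claims to support.
            "capabilities": {"roots": {"listChanged": True}, "sampling": {}},
            "clientInfo": {"name": "example-client", "version": "0.1.0"},  # hypothetical
        },
    }


def initialized_notification() -> dict[str, Any]:
    """Client -> server once the initialize response arrives; notifications have no id."""
    return {"jsonrpc": "2.0", "method": "notifications/initialized"}


def initialize_succeeded(response: dict[str, Any], request_id: int) -> bool:
    """True if `response` answers our initialize request with a result, not an error."""
    return response.get("id") == request_id and "result" in response and "error" not in response


if __name__ == "__main__":
    print(json.dumps(initialize_request(1), indent=2))
    print(json.dumps(initialized_notification()))
```

Once `initialize_succeeded` returns True, the client sends the notification and can start using whatever features both sides advertised in their capabilities.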

MCP Endpoints and Capabilities

Initialization & Lifecycle

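initialize: Request from the client that starts the handshake; client and server exchange protocol versions and capabilities.

notifications/initialized: Notification from the client confirming that initialization is complete and normal operation can begin.

Shutdown: MCP defines no shutdown method; the client ends the session by closing the transport (for stdio, by closing the server's stdin and letting the process exit).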

Resources (Read-Only Data)

resources/list: Lists available resources.

resources/templates/list: Lists parameterized resource templates (for example, file:///{path}).

resources/read: Reads the contents of a resource by URI.

resources/subscribe: Subscribes to changes on a specific resource (resources/unsubscribe cancels the subscription).

notifications/resources/updated: Notification that a subscribed resource has changed.

notifications/resources/list_changed: Notification that the list of resources has changed.
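To make the resource methods concrete, here is a minimal in-memory sketch of how a server might build the `result` objects for `resources/list` and `resources/read`. The store, the README contents, and `handle_resources` are hypothetical; an SDK would normally handle the JSON-RPC envelope and error responses for you.

```python
from typing import Any

# Hypothetical in-memory store: URI -> (display name, MIME type, text content).
RESOURCES: dict[str, tuple[str, str, str]] = {
    "file:///README.md": ("README", "text/markdown", "# Demo project\n"),
}


def handle_resources(method: str, params: dict[str, Any]) -> dict[str, Any]:
    """Return the JSON-RPC `result` for resources/list or resources/read."""
    if method == "resources/list":
        return {
            "resources": [
                {"uri": uri, "name": name, "mimeType": mime}
                for uri, (name, mime, _text) in RESOURCES.items()
            ]
        }
    if method == "resources/read":
        uri = params["uri"]
        if uri not in RESOURCES:
            # A real server would answer with a JSON-RPC error response instead.
            raise KeyError(f"unknown resource: {uri}")
        _name, mime, text = RESOURCES[uri]
        # One read can return several content items; this store has one per URI.
        return {"contents": [{"uri": uri, "mimeType": mime, "text": text}]}
    raise ValueError(f"unsupported method: {method}")
```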

Tools (Executable Functions)

tools/list: Lists all available tools exposed by the server.

tools/call: Executes a specific tool with the provided arguments.

notifications/tools/list_changed: Notification that the list of tools has been updated.
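The same pattern for tools, again as a hypothetical stdlib-only sketch: `tools/list` advertises each tool with a JSON Schema `inputSchema`, and `tools/call` returns content blocks. Following the spec, a failure inside the tool is reported in the result with `isError: true` rather than as a JSON-RPC error, so the model can see what went wrong.

```python
from typing import Any, Callable


def add(a: int, b: int) -> int:
    return a + b


# Hypothetical registry: tool name -> (description, input JSON Schema, implementation).
TOOLS: dict[str, tuple[str, dict[str, Any], Callable[..., Any]]] = {
    "add": (
        "Add two integers",
        {
            "type": "object",
            "properties": {"a": {"type": "integer"}, "b": {"type": "integer"}},
            "required": ["a", "b"],
        },
        add,
    ),
}


def handle_tools(method: str, params: dict[str, Any]) -> dict[str, Any]:
    """Return the JSON-RPC `result` for tools/list or tools/call."""
    if method == "tools/list":
        return {
            "tools": [
                {"name": name, "description": desc, "inputSchema": schema}
                for name, (desc, schema, _func) in TOOLS.items()
            ]
        }
    if method == "tools/call":
        name = params["name"]
        if name not in TOOLS:
            raise KeyError(f"unknown tool: {name}")  # a real server sends a JSON-RPC error
        _desc, _schema, func = TOOLS[name]
        try:
            value = func(**params.get("arguments", {}))
        except Exception as exc:  # tool failures go into the result, not the protocol
            return {"content": [{"type": "text", "text": str(exc)}], "isError": True}
        return {"content": [{"type": "text", "text": str(value)}], "isError": False}
    raise ValueError(f"unsupported method: {method}")
```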

Prompts (Prompt Templates)

prompts/list: Lists available prompt templates with optional arguments.

prompts/get: Retrieves a specific prompt with its rendered message content.
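A hypothetical sketch of how a server might render a template for `prompts/get`. The `generate-summary` prompt, its `text` argument, and the template wording are assumptions for illustration; the result shape (a `messages` list of role/content pairs) follows the spec.

```python
from string import Template
from typing import Any

# Hypothetical catalogue: prompt name -> (description, argument names, template text).
PROMPTS: dict[str, tuple[str, list[str], str]] = {
    "generate-summary": (
        "Summarize a piece of text",
        ["text"],
        "Summarize the following in three bullet points:\n\n$text",
    ),
}


def handle_prompts(method: str, params: dict[str, Any]) -> dict[str, Any]:
    """Return the JSON-RPC `result` for prompts/list or prompts/get."""
    if method == "prompts/list":
        return {
            "prompts": [
                {
                    "name": name,
                    "description": desc,
                    "arguments": [{"name": arg, "required": True} for arg in args],
                }
                for name, (desc, args, _tmpl) in PROMPTS.items()
            ]
        }
    if method == "prompts/get":
        desc, args, template = PROMPTS[params["name"]]
        values = params.get("arguments", {})
        missing = [arg for arg in args if arg not in values]
        if missing:
            raise ValueError(f"missing prompt arguments: {missing}")
        # Fill the placeholders; the rendered text becomes one user message.
        text = Template(template).substitute(values)
        return {
            "description": desc,
            "messages": [{"role": "user", "content": {"type": "text", "text": text}}],
        }
    raise ValueError(f"unsupported method: {method}")
```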

Roots (Scope Management)

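roots/list: Sent by the server to the client to ask which root URIs (for example, project directories) it should operate within; roots are a client capability declared during initialization.

notifications/roots/list_changed: Sent by the client when its set of roots changes.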
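Roots are advisory: they tell the server which locations the client considers in scope, and the server is expected to respect them. Below is a sketch under that assumption: a helper that builds the `roots/list` request and a check a server could apply before touching a `file://` URI. The helper names are hypothetical, and the check collapses `..` segments but does not resolve symlinks.

```python
import posixpath
from pathlib import PurePosixPath
from typing import Any
from urllib.parse import unquote, urlparse


def roots_list_request(request_id: int) -> dict[str, Any]:
    """Server -> client: ask which roots are in scope."""
    return {"jsonrpc": "2.0", "id": request_id, "method": "roots/list"}


def _file_uri_to_path(uri: str) -> PurePosixPath:
    """Convert a file:// URI to a normalized POSIX path ('..' segments collapsed)."""
    parsed = urlparse(uri)
    if parsed.scheme != "file":
        raise ValueError(f"not a file URI: {uri}")
    return PurePosixPath(posixpath.normpath(unquote(parsed.path)))


def is_within_roots(uri: str, roots: list[dict[str, Any]]) -> bool:
    """True if `uri` equals one of the roots or lies underneath one of them."""
    target = _file_uri_to_path(uri)
    for root in roots:
        base = _file_uri_to_path(root["uri"])
        if target == base or base in target.parents:
            return True
    return False
```

For example, with roots `[{"uri": "file:///project", "name": "project"}]`, `file:///project/src/main.py` passes while `file:///project/../etc/passwd` is rejected.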

Sampling (Server → Model Calls)

sampling/createMessage: Allows the server to ask the client to run a model completion using specified messages (a client capability that not every host supports).
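A sketch of the sampling request a server might send, plus a helper that pulls the text out of the client's reply. The prompt and system prompt are hypothetical; `maxTokens` is required by the spec, the client decides which model actually runs, and the spec recommends keeping a human in the loop who can deny the request.

```python
from typing import Any


def create_message_request(request_id: int, prompt: str, max_tokens: int = 200) -> dict[str, Any]:
    """Server -> client: ask the host's model for a completion."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "sampling/createMessage",
        "params": {
            "messages": [{"role": "user", "content": {"type": "text", "text": prompt}}],
            "systemPrompt": "You are a concise assistant.",  # hypothetical wording
            "maxTokens": max_tokens,  # required by the spec
        },
    }


def sampled_text(response: dict[str, Any]) -> str:
    """Return the text of a sampling result, or '' for non-text content (e.g. images)."""
    content = response["result"]["content"]
    return content.get("text", "") if content.get("type") == "text" else ""
```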

Key MCP Capabilities

1. Resources (Read-Only Context)

```json
{
  "jsonrpc": "2.0",
  "id": 1,
  "method": "resources/read",
  "params": { "uri": "file:///README.md" }
}
```

Use for:

File contents

KB articles

Structured data (CSV, JSON)

2. Tools (Function Calls)

```json
{
  "jsonrpc": "2.0",
  "id": 2,
  "method": "tools/call",
  "params": { "name": "fetch_url", "arguments": { "url": "https://..." } }
}
```

Use for:

Fetching data

Updating records

Running computations

3. Prompts (Prompt Templates)

```json
{
  "jsonrpc": "2.0",
  "id": 3,
  "method": "prompts/get",
  "params": { "name": "generate-summary" }
}
```

Use for:

Standardized prompt workflows

Reusable prompt injections

4. Roots (Scope Control)

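```json
{
  "jsonrpc": "2.0",
  "id": 4,
  "method": "roots/list"
}
```

Unlike the other examples, this request goes from the server to the client, which replies with its roots, for example { "roots": [ { "uri": "file:///project", "name": "project" } ] }.

Use for:

Scoping resources/tools to a directory or project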

Practical Example: Summarize a Web Page

Goal: AI summarizes content from a live URL

Steps:

User asks: "Summarize https://example.com/blog/post"

The AI decides to call the fetch_url tool via MCP

The MCP server fetches the page and returns its text

The AI summarizes the fetched content

Request:

```json
{
  "jsonrpc": "2.0",
  "id": 5,
  "method": "tools/call",
  "params": {
    "name": "fetch_url",
    "arguments": { "url": "https://example.com/blog/post" }
  }
}
```
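On the server side, a `fetch_url` tool could look roughly like the sketch below: fetch the page with `urllib`, strip the markup with `html.parser`, and return the text as MCP content. The function and its parameters are hypothetical; the scheme allow-list and the character cap are assumptions meant to keep the tool from reading local files or flooding the model's context.

```python
from html.parser import HTMLParser
from typing import Any
from urllib.parse import urlparse
from urllib.request import urlopen


class _TextExtractor(HTMLParser):
    """Collects visible text, skipping the contents of <script> and <style>."""

    def __init__(self) -> None:
        super().__init__()
        self.parts: list[str] = []
        self._skip_depth = 0

    def handle_starttag(self, tag: str, attrs: list) -> None:
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag: str) -> None:
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data: str) -> None:
        if not self._skip_depth and data.strip():
            self.parts.append(data.strip())


def html_to_text(html: str) -> str:
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.parts)


def fetch_url(url: str, *, allowed_schemes: tuple[str, ...] = ("http", "https"),
              timeout: float = 10.0, max_chars: int = 20_000) -> dict[str, Any]:
    """Hypothetical tools/call handler: fetch a page and return its text as MCP content."""
    if urlparse(url).scheme not in allowed_schemes:
        return {"content": [{"type": "text", "text": f"scheme not allowed: {url}"}], "isError": True}
    try:
        with urlopen(url, timeout=timeout) as resp:
            charset = resp.headers.get_content_charset() or "utf-8"
            html = resp.read().decode(charset, errors="replace")
    except (OSError, LookupError) as exc:  # network errors (URLError is an OSError) or unknown charset
        return {"content": [{"type": "text", "text": f"fetch failed: {exc}"}], "isError": True}
    text = html_to_text(html)[:max_chars]  # cap output to limit token usage
    return {"content": [{"type": "text", "text": text}], "isError": False}
```

The host passes the returned text to the model, which then writes the summary.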

Real-World Walkthrough: Local MCP Agent Example

Objective

Build a local MCP server with one tool and one resource that Claude Desktop can interact with.

1. Install MCP Python SDK

Use pip (the cli extra installs the mcp command-line tool):

```shell
pip install "mcp[cli]"
```

2. Write the Server

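Create a file server.py with:

```python
from mcp.server.fastmcp import FastMCP

app = FastMCP("demo")  # server name shown to clients

@app.tool()
async def add(a: int, b: int) -> int:
    """Add two integers."""
    return a + b

@app.resource("greeting://{name}")
async def get_greeting(name: str) -> str:
    """Return a personalized greeting."""
    return f"Hello, {name}!"

if __name__ == "__main__":
    app.run()  # defaults to the stdio transport
```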

3. Run the Server

Test your server interactively with the MCP Inspector:

```shell
mcp dev server.py
```

To register it with Claude Desktop:

```shell
mcp install server.py
```

4. Configure Claude Desktop (macOS example)

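If you skip mcp install, edit claude_desktop_config.json in ~/Library/Application Support/Claude/ yourself. Use an absolute path to the script, and make sure command points to a Python interpreter that has mcp installed:

```json
{
  "mcpServers": {
    "myserver": {
      "command": "python",
      "args": ["/absolute/path/to/server.py"]
    }
  }
}
```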
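To script this step instead of editing the file by hand, the hypothetical helper below merges an entry into an existing config using only `json` and `pathlib`, keeping any servers already listed and writing an absolute script path. It assumes the macOS location above and uses the current interpreter (`sys.executable`) as the command by default.

```python
import json
import sys
from pathlib import Path
from typing import Any


def add_server_entry(config_path: Path, name: str, script: Path,
                     python: str = sys.executable) -> dict[str, Any]:
    """Add or replace one mcpServers entry, preserving the rest of the config."""
    if config_path.exists():
        config = json.loads(config_path.read_text(encoding="utf-8"))
    else:
        config = {}
    servers = config.setdefault("mcpServers", {})
    # Claude Desktop does not start servers from your project directory,
    # so store an absolute path to the script.
    servers[name] = {"command": python, "args": [str(script.resolve())]}
    config_path.parent.mkdir(parents=True, exist_ok=True)
    config_path.write_text(json.dumps(config, indent=2) + "\n", encoding="utf-8")
    return config
```

For example, call `add_server_entry(Path.home() / "Library/Application Support/Claude/claude_desktop_config.json", "myserver", Path("server.py"))`, then restart Claude Desktop so it picks up the change.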

5. Try It in Claude

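Ask: “What is 5 + 8?” → Claude should call the add tool (after you approve the call).

Ask: “Greet Alice” → this does not invoke get_greeting automatically. Resources are application-controlled rather than model-controlled, so the greeting is only used when the client reads the resource and attaches it as context.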

Best Practices & Tips

✅ Use precise input schemas and enums for tools

✅ Separate tools (actions) from resources (read-only)

✅ Return large results as resource URIs to avoid token bloat

✅ Gate sensitive tools with user approval

✅ Use prompts/get to keep logic modular and reusable
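The schema and approval tips can be sketched together: a tool whose `inputSchema` uses an `enum`, a small argument check, and an approval gate before execution. Everything here (`deploy_service`, the validator, the `approve` and `run` callbacks) is hypothetical; in practice the host owns the approval UI, and a full JSON Schema validator would replace the hand-rolled check, which only handles `required`, `enum`, and `additionalProperties: false`.

```python
from typing import Any, Callable

# Hypothetical tool with a tight schema: the enum restricts `environment`.
DEPLOY_TOOL: dict[str, Any] = {
    "name": "deploy_service",
    "description": "Deploy a service to one environment",
    "inputSchema": {
        "type": "object",
        "properties": {
            "service": {"type": "string"},
            "environment": {"type": "string", "enum": ["staging", "production"]},
        },
        "required": ["service", "environment"],
        "additionalProperties": False,
    },
}


def validate_arguments(schema: dict[str, Any], args: dict[str, Any]) -> list[str]:
    """Check required keys, unknown keys, and enums (not a full JSON Schema validator)."""
    errors: list[str] = []
    props = schema.get("properties", {})
    for key in schema.get("required", []):
        if key not in args:
            errors.append(f"missing required argument: {key}")
    for key, value in args.items():
        if key not in props:
            if schema.get("additionalProperties") is False:
                errors.append(f"unexpected argument: {key}")
            continue
        allowed = props[key].get("enum")
        if allowed is not None and value not in allowed:
            errors.append(f"{key} must be one of {allowed}")
    return errors


def call_with_approval(tool: dict[str, Any], args: dict[str, Any],
                       approve: Callable[[str, dict[str, Any]], bool],
                       run: Callable[[dict[str, Any]], str]) -> dict[str, Any]:
    """Validate, ask the user, then run; every outcome is a tools/call-style result."""
    errors = validate_arguments(tool["inputSchema"], args)
    if errors:
        return {"content": [{"type": "text", "text": "; ".join(errors)}], "isError": True}
    if not approve(tool["name"], args):
        return {"content": [{"type": "text", "text": "call declined by user"}], "isError": True}
    return {"content": [{"type": "text", "text": run(args)}], "isError": False}
```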

Diagrams & Mindmaps

MCP Flow:

[User] --> [AI Host] --> [MCP Client] --> [MCP Server] --> [Backend/API]

Tool Usage Pattern:

[AI decides to use tool] → MCP Client → tools/call → Server → Result → Back to AI

Conclusion

MCP gives LLM applications one standard way to discover and use external data, tools, and prompts, with room for user approval of sensitive actions. By replacing one-off integrations with reusable servers, it simplifies the orchestration of multi-step AI workflows and makes agentic applications easier to build.

References

Official site: https://modelcontextprotocol.io

GitHub (specification and SDKs): https://github.com/modelcontextprotocol

Anthropic blog: https://www.anthropic.com/news/model-context-protocol
