Code: Convolutional Neural Network

Retzam Tarle


Aloha 🕶️,

In this chapter, we’ll put our knowledge of CNNs into practice. We’ll train a Convolutional Neural Network (CNN) to predict human emotions from images 👽. We learned about CNNs in Chapter 22.

Problem

Train a CNN model to predict human emotions from images. There are 7 emotions to predict:

Anger

Sadness

Disgust

Fear

Contempt

Happy

Surprise

Dataset

We found two suitable datasets on Kaggle. Both the training and test datasets are CK+ datasets. CK+ is the Extended Cohn-Kanade Dataset, a complete dataset for action unit and emotion-specified expression.

Training dataset: https://www.kaggle.com/datasets/shawon10/ckplus

Test dataset: https://www.kaggle.com/datasets/shuvoalok/ck-dataset

Solution

We’ll be using Google Colab; you can create a new notebook here. Any other notebook environment, such as Jupyter, works too.

You can find a PDF of the complete code, with the outputs of each step, here.

Data preprocessing

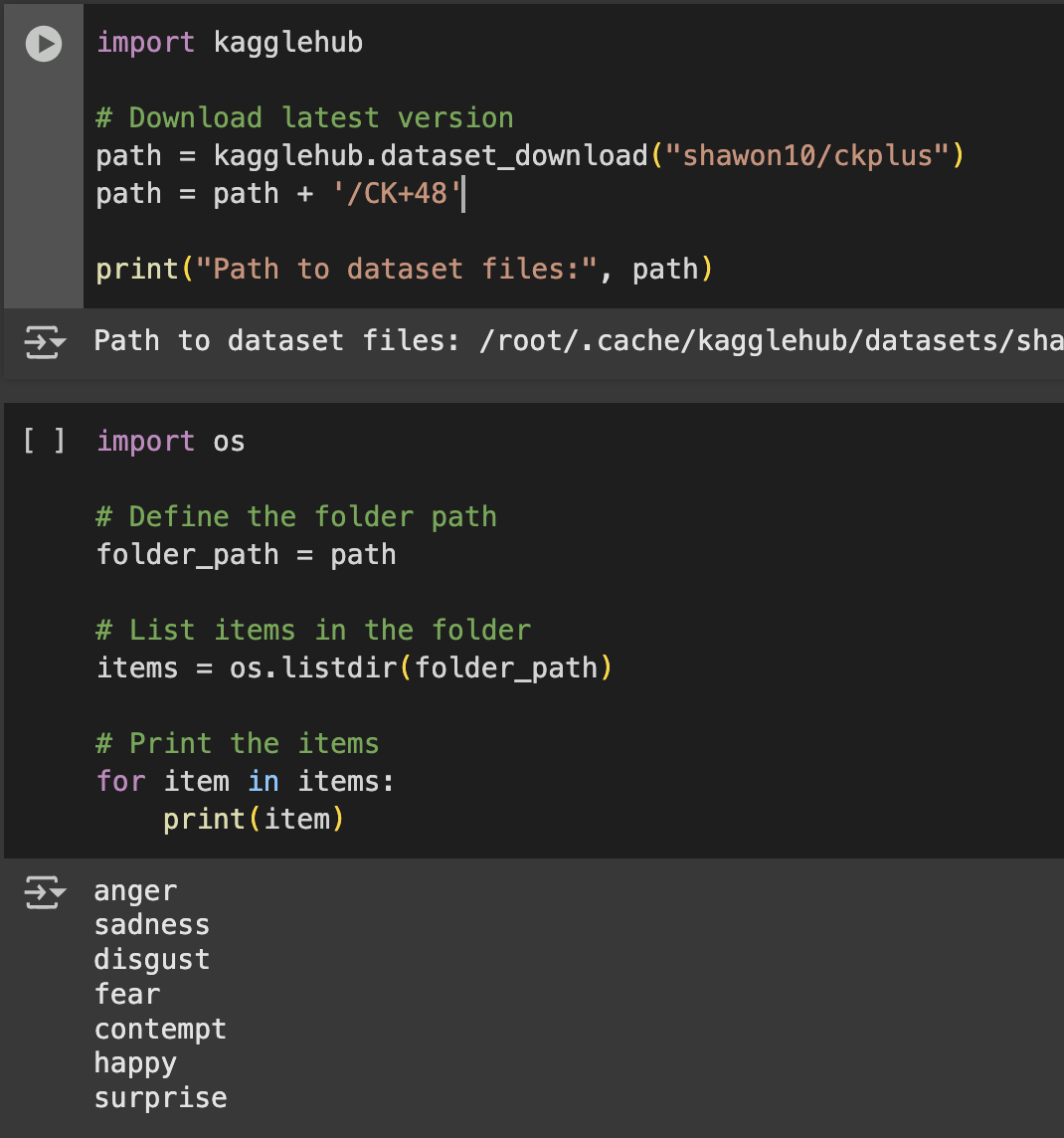

a. Load dataset: Here, we download the training dataset from Kaggle.

We append CK+48 to the path because that’s the directory where the image folders are.

When we check the folder, we can see that it consists of 7 subfolders, each named after one of the 7 emotions we want to predict. Each folder contains images of that specific emotion, so the anger folder contains images of angry people across different age groups, races, genders, etc.
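Keras’s directory-based loaders treat each subfolder as one class and, by default, assign label indices in alphanumeric order of the folder names. Here is a minimal stdlib sketch of that mapping, plus a per-class image count. It assumes the CK+48 subfolders are named with lowercase emotion names such as anger and happy (check your own download); the helper names class_index_from_folders and count_images are hypothetical.

```python
import os

def class_index_from_folders(root):
    """Map each subfolder of `root` to an integer label, in sorted (alphanumeric) order."""
    classes = sorted(
        name for name in os.listdir(root)
        if os.path.isdir(os.path.join(root, name))
    )
    return {name: index for index, name in enumerate(classes)}

def count_images(root, extensions=(".png", ".jpg", ".jpeg")):
    """Count the image files inside each class subfolder (other files are ignored)."""
    counts = {}
    for name in class_index_from_folders(root):
        files = os.listdir(os.path.join(root, name))
        counts[name] = sum(f.lower().endswith(extensions) for f in files)
    return counts

# Usage (the path is an assumption: wherever the Kaggle download landed):
# print(class_index_from_folders("/content/ckplus/CK+48"))
# print(count_images("/content/ckplus/CK+48"))
```

Checking the counts is a quick way to spot class imbalance before training.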

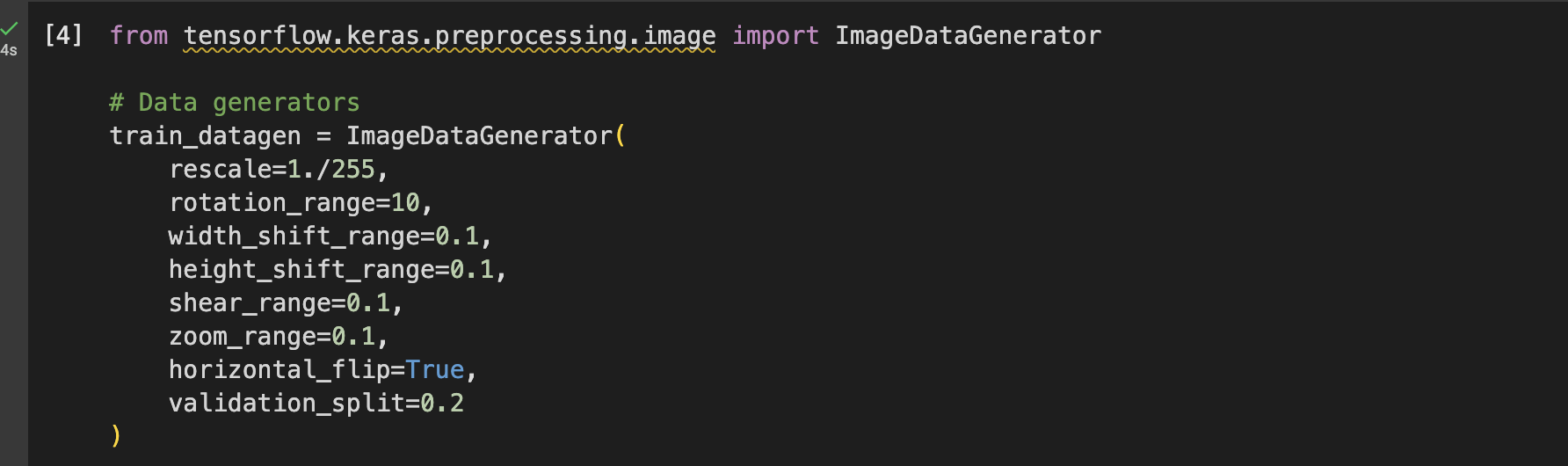

b. Preprocess image data: Here we prepare the images using an image data generator we’ll call train_datagen.

train_datagen handles several tasks:

Normalization: it rescales pixel values from 0-255 to 0-1.

Validation split: it sets aside 20% of the dataset for validation.

Data augmentation: it randomly zooms, rotates, and otherwise transforms the images, creating varied versions of them so the model generalizes better.
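The rescaling and the validation split are simple arithmetic, so here is a stdlib sketch of both, assuming rescale=1/255 and validation_split=0.2. The split below takes the first 20% of one class’s sorted file list for validation and keeps the rest for training; treat it as a simplified illustration, not a copy of Keras internals. The augmentations (zoom, rotation) are left out, and the function names are hypothetical.

```python
def rescale(pixels, factor=1 / 255):
    """Map 0-255 pixel intensities to the 0-1 range, like rescale=1./255."""
    return [p * factor for p in pixels]

def split_files(filenames, validation_split=0.2):
    """Split one class's files into (training, validation) lists."""
    files = sorted(filenames)
    n_val = int(validation_split * len(files))
    return files[n_val:], files[:n_val]

print(rescale([0, 128, 255]))  # every value now lies in the 0-1 range
train, val = split_files([f"img_{i:02d}.png" for i in range(10)])
print(len(train), len(val))  # 8 2
```

Splitting per class keeps every emotion represented in both the training and validation sets.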

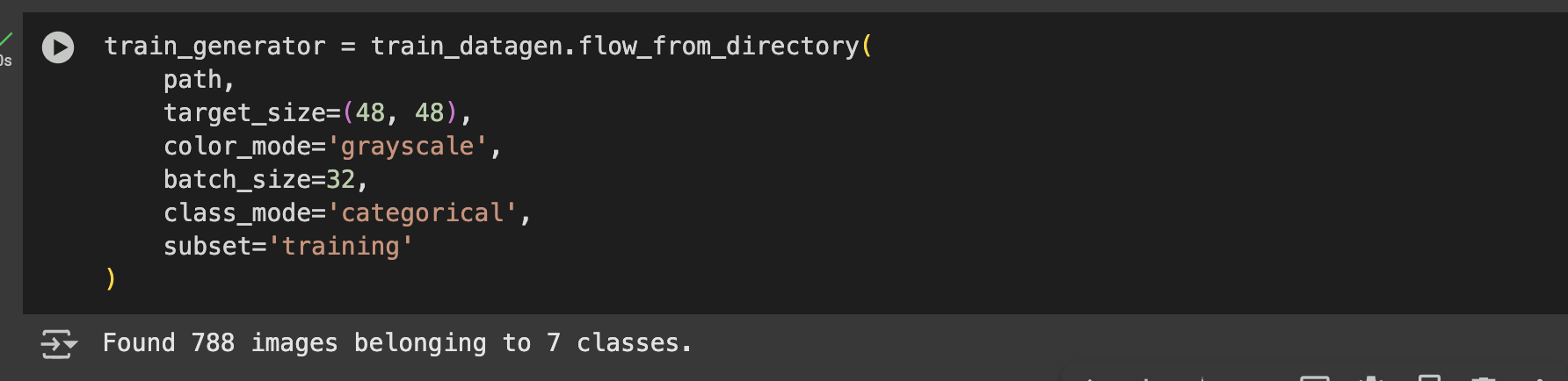

We then use train_datagen to create a train_generator and a validation_generator, both with a batch size of 32. This means that during training, the model receives the images in batches of 32 rather than all at once, which keeps memory usage low.
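To see what a batch size of 32 means in practice, here is a stdlib sketch of a batch iterator. batches and steps_per_epoch are hypothetical helpers, not the Keras generator API. When the image count isn’t a multiple of 32, the last batch is simply smaller, and the number of batches per epoch is the image count divided by 32, rounded up.

```python
import math

def batches(items, batch_size=32):
    """Yield consecutive slices of at most batch_size items."""
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]

def steps_per_epoch(num_items, batch_size=32):
    """Number of batches needed to see every item once."""
    return math.ceil(num_items / batch_size)

images = list(range(100))                  # stand-ins for 100 image arrays
print([len(b) for b in batches(images)])   # [32, 32, 32, 4]
print(steps_per_epoch(100))                # 4
```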

Build a Convolutional Neural Network

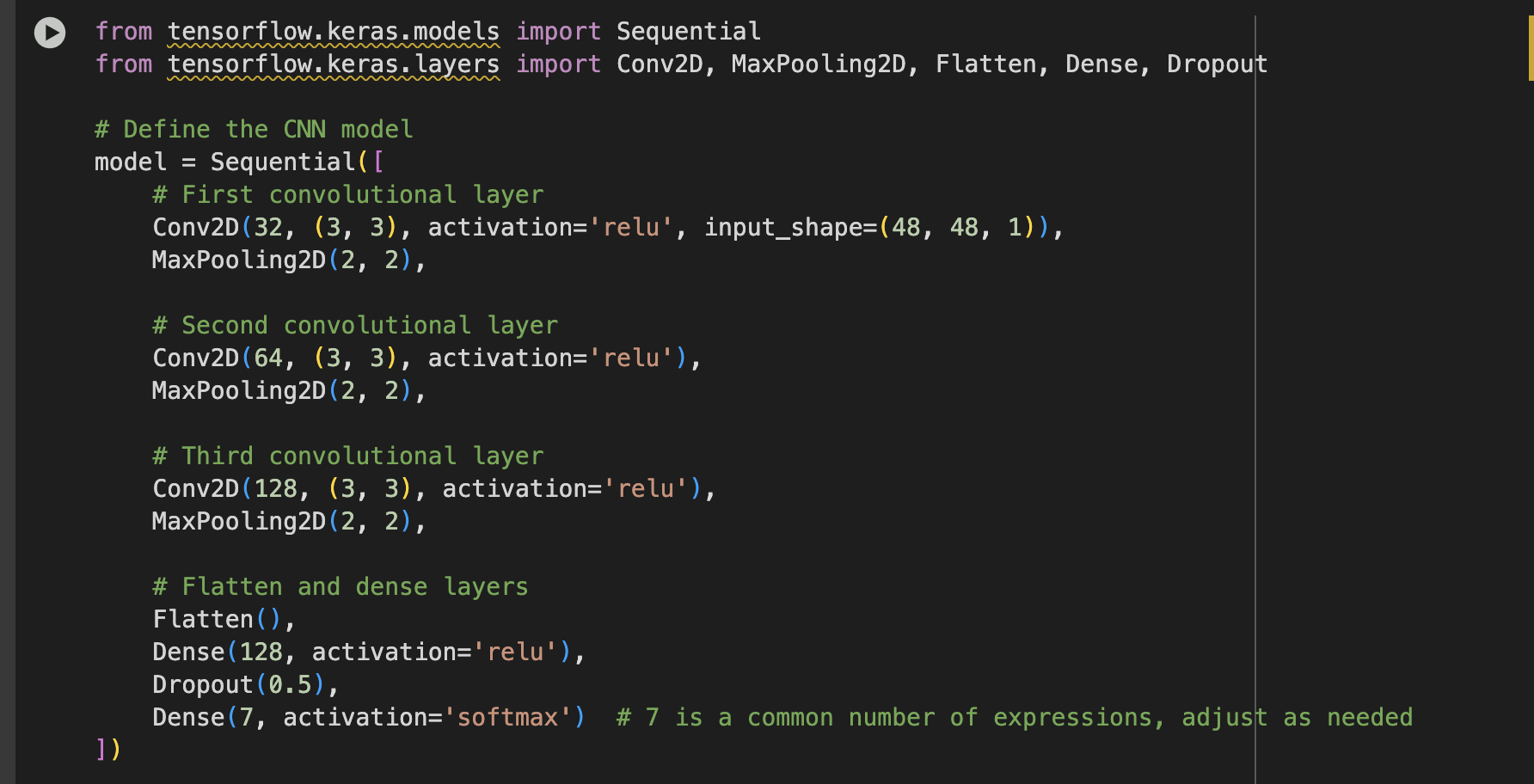

a. Build CNN: As we know by now, we don’t need to create our model from scratch. We’ll build it with TensorFlow’s Keras API, stacking ready-made layers such as Conv2D, MaxPooling2D, Flatten, Dropout, and Dense.

Like other neural networks, a CNN has input, hidden, and output layers.

The first convolutional layer is our input layer. Here, we specify the model’s input shape of 48×48 pixels and use 32 filters. CNNs use filters to detect features in images; each of our filters is a 3×3 grid, and we use relu as the activation function.

The second and third convolutional layers are the hidden layers. They extract more features, as we can see from their 64 and 128 filters, respectively. MaxPooling2D reduces the size of the feature maps, in our case by half with a (2, 2) pool, keeping the maximum value of each 2×2 window: a 24×24 feature map becomes 12×12.
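The shapes above follow from two small formulas. This stdlib sketch traces a feature map through a stack of 3×3 convolutions, each followed by (2, 2) max pooling, and counts each layer’s trainable parameters. The exact stack is an assumption: a grayscale 48×48×1 input, Keras’s default padding="valid", a pooling layer after every convolution, and Dense(7) directly after Flatten. The real notebook may differ. With padding="same", each pooling step exactly halves the side length, as in the 24×24 to 12×12 example. conv_out, pool_out, and trace are hypothetical names.

```python
def conv_out(size, kernel=3, padding="valid"):
    """Output side length of a stride-1 convolution."""
    return size if padding == "same" else size - kernel + 1

def pool_out(size, pool=2):
    """Output side length of max pooling whose stride equals its pool size."""
    return size // pool

def trace(input_size=48, in_channels=1, filters=(32, 64, 128), padding="valid", classes=7):
    """Print the shape after each conv+pool block and return (flattened size, params)."""
    size, channels, params = input_size, in_channels, []
    for f in filters:
        size = conv_out(size, 3, padding)
        params.append((3 * 3 * channels + 1) * f)  # 3x3 weights per input channel + 1 bias, per filter
        channels = f
        size = pool_out(size)
        print(f"Conv2D({f}) + MaxPooling2D -> {size}x{size}x{channels}")
    flat = size * size * channels
    params.append((flat + 1) * classes)  # Dense(7) right after Flatten (assumed); Dropout adds no params
    print(f"Flatten -> {flat} values, Dense({classes})")
    return flat, params

flat, params = trace()
print(params)  # [320, 18496, 73856, 14343]
```

Comparing these numbers with model.summary() is a quick way to check that the layers are wired the way you expect.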

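Flatten converts the multi-dimensional feature maps into a 1D array so they can be fed into the Dense layer. Dropout randomly drops 50% of the neurons during training (hopefully you remember why) so that the model does not depend too much on any specific neuron, which helps reduce overfitting.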
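Both layers are simple to spell out. In the sketch below, flatten turns a height × width × channels nested list into one flat list. dropout implements inverted dropout, the variant the Keras Dropout layer uses: during training, each value is zeroed with probability rate and the survivors are scaled by 1 / (1 - rate), so the expected sum stays the same. At inference, the input passes through unchanged. Both function names are illustrative, not Keras APIs.

```python
import random

def flatten(feature_maps):
    """Flatten a [height][width][channels] nested list into a 1D list."""
    return [value for row in feature_maps for pixel in row for value in pixel]

def dropout(values, rate=0.5, training=True, rng=random):
    """Inverted dropout: zero each value with probability `rate`, scale the survivors."""
    if not training:
        return list(values)
    scale = 1 / (1 - rate)
    return [0.0 if rng.random() < rate else v * scale for v in values]

maps = [[[1, 2], [3, 4]], [[5, 6], [7, 8]]]   # a 2x2 feature map with 2 channels
flat = flatten(maps)
print(flat)                                   # [1, 2, 3, 4, 5, 6, 7, 8]
print(dropout(flat, rng=random.Random(0)))    # each value is either 0.0 or doubled
print(dropout(flat, training=False))          # unchanged at inference
```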

Finally, the last Dense layer has 7 units, one per emotion, and makes a prediction from these 7 options.
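A 7-unit output layer for classification is usually paired with a softmax activation, which turns the 7 raw scores into probabilities that sum to 1. The predicted emotion is the one with the highest probability. This stdlib sketch assumes a softmax on the last layer and labels in the alphabetical folder order Keras assigns. Both are assumptions, and the scores are made-up inputs.

```python
import math

EMOTIONS = ["anger", "contempt", "disgust", "fear", "happy", "sadness", "surprise"]

def softmax(scores):
    """Convert raw scores (logits) into probabilities that sum to 1."""
    top = max(scores)                          # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def predict_label(scores, labels=EMOTIONS):
    """Return the most probable label and its probability."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs[best]

label, confidence = predict_label([0.1, -1.2, 0.3, 0.0, 2.5, -0.4, 1.1])
print(label, round(confidence, 2))  # happy, followed by its probability
```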

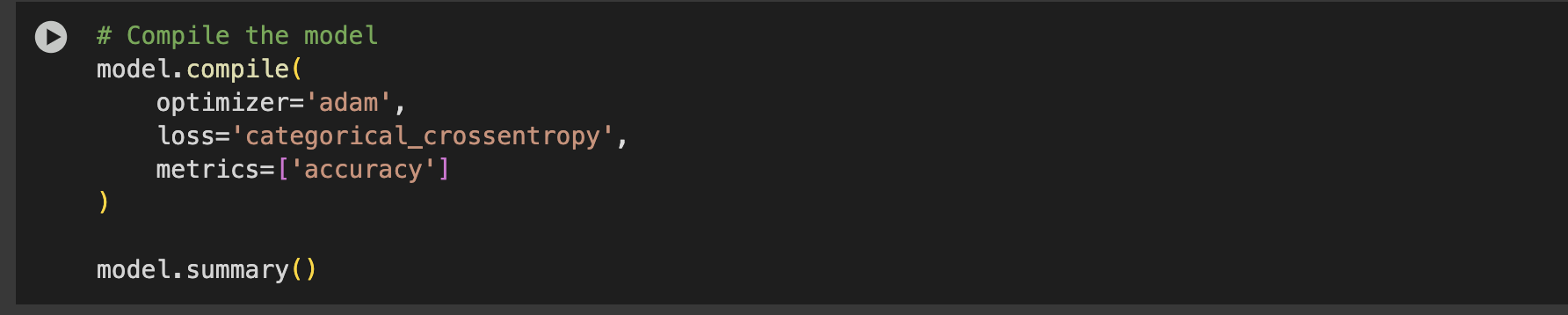

b. Compile model:

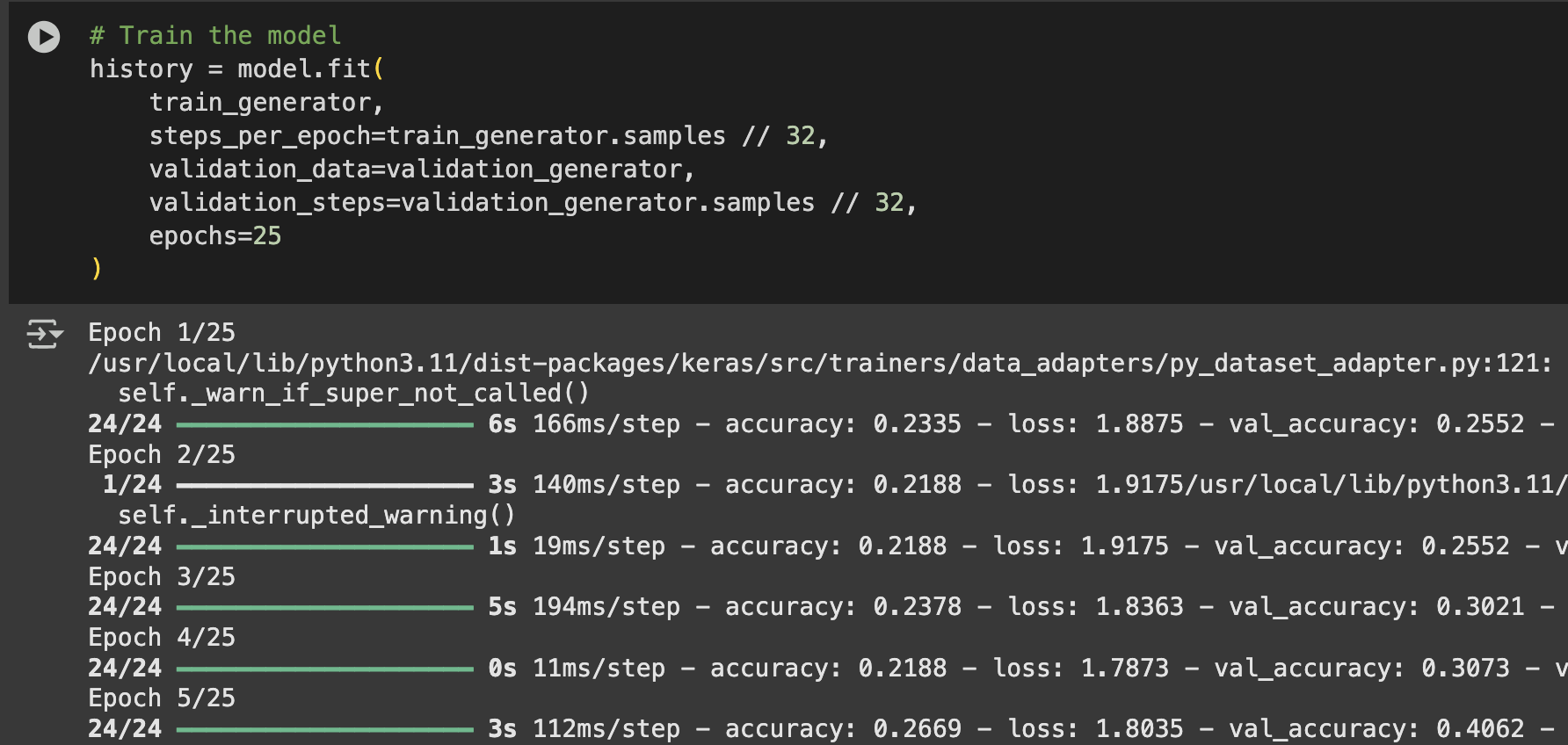
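A multi-class model like this one is commonly compiled with an optimizer, the categorical cross-entropy loss, and accuracy as the metric. This loss matches the one-hot labels that Keras’s flow_from_directory produces by default (class_mode="categorical"). Whether the notebook uses exactly these settings is an assumption here. As an illustration, this stdlib sketch computes that loss for a single image. Probabilities are clipped away from 0 and 1 to avoid log(0); the function name is hypothetical.

```python
import math

def categorical_crossentropy(one_hot, probs, eps=1e-7):
    """Loss for one sample: -sum(y_true * log(y_pred)), with clipped probabilities."""
    return -sum(t * math.log(min(max(p, eps), 1 - eps)) for t, p in zip(one_hot, probs))

y_true = [0, 0, 0, 0, 1, 0, 0]                        # "happy" as a one-hot vector
confident = [0.02, 0.02, 0.02, 0.02, 0.86, 0.03, 0.03]
unsure = [0.15, 0.15, 0.15, 0.15, 0.10, 0.15, 0.15]
print(categorical_crossentropy(y_true, confident))   # about 0.151
print(categorical_crossentropy(y_true, unsure))      # about 2.303
```

Only the probability given to the true class matters, so the loss falls as the model becomes more confident in the right emotion. This is the quantity training pushes down.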

Train the CNN model: Here we train the model on our training data, as shown in the image below.

a. Train:

Training takes a few minutes. We used 25 epochs, which means the model goes through the entire training dataset 25 times.
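To make “epoch” concrete, here is a toy stdlib sketch that has nothing to do with images: mini-batch gradient descent fitting a straight line y = w·x + b. Each epoch shuffles the data and walks through it once in batches of 32, so 25 epochs over 100 samples means 25 × 4 = 100 weight updates. The model, data, and learning rate are illustrative assumptions. For our CNN, Keras’s model.fit runs the same kind of epoch-and-batch loop, with backpropagation computing the gradients.

```python
import random

def train_toy_model(xs, ys, epochs=25, batch_size=32, lr=0.1, seed=0):
    """Mini-batch gradient descent on y = w*x + b, recording the loss after each epoch."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    indices = list(range(len(xs)))
    history, steps = [], 0                     # history: mean squared error per epoch
    for _ in range(epochs):
        rng.shuffle(indices)                   # a new sample order every epoch
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            # gradients of the batch's mean squared error with respect to w and b
            grad_w = sum(2 * (w * xs[i] + b - ys[i]) * xs[i] for i in batch) / len(batch)
            grad_b = sum(2 * (w * xs[i] + b - ys[i]) for i in batch) / len(batch)
            w -= lr * grad_w
            b -= lr * grad_b
            steps += 1
        history.append(sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs))
    return w, b, history, steps

xs = [i / 100 for i in range(100)]
ys = [3 * x + 1 for x in xs]
w, b, history, steps = train_toy_model(xs, ys)
print(steps)                                   # 100
print(history[0], history[-1])                 # the last epoch's loss is lower than the first
```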

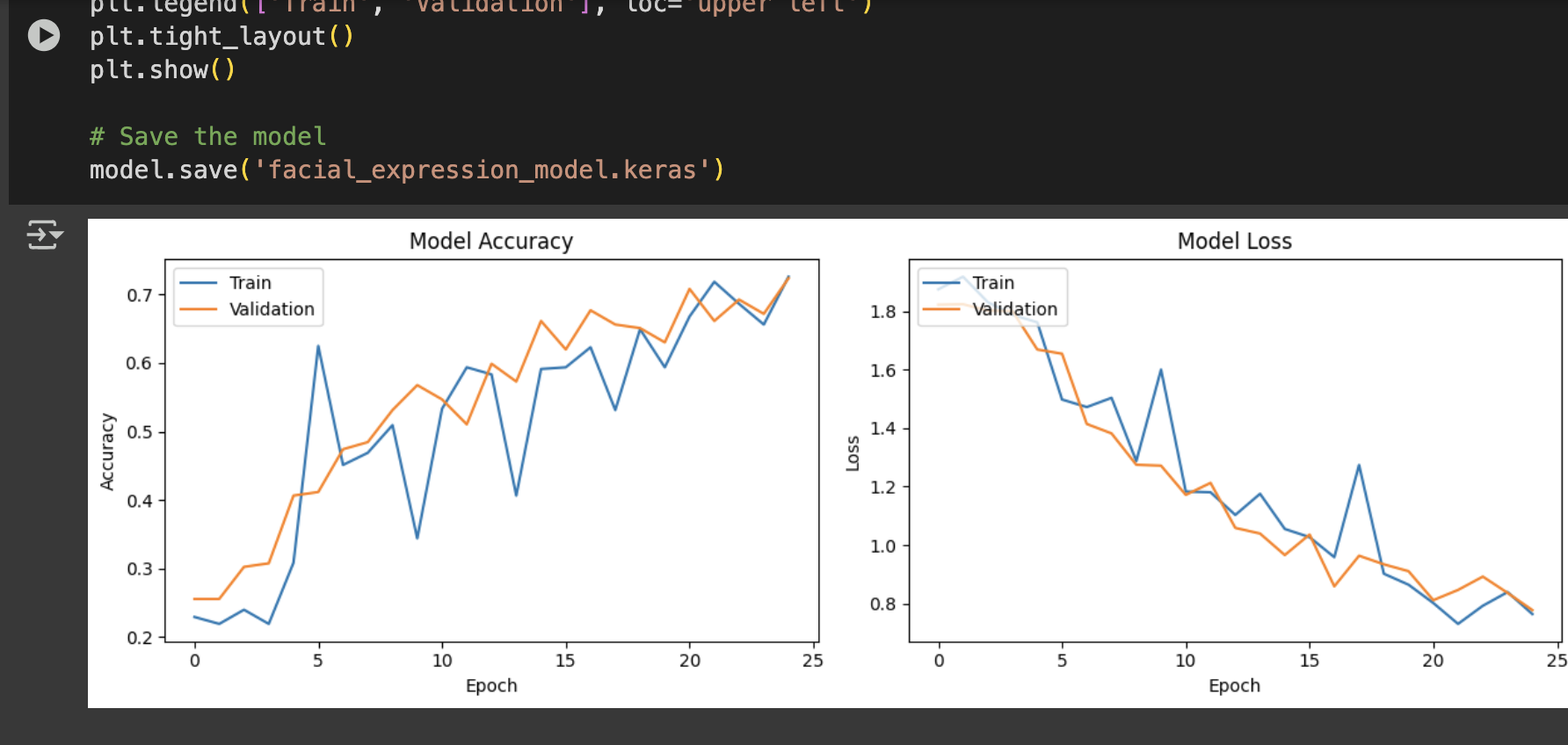

b. Evaluate model: We use Matplotlib to plot the model’s accuracy and loss for each epoch. We can see that the model is doing well: the accuracy increases and the loss decreases with each epoch.

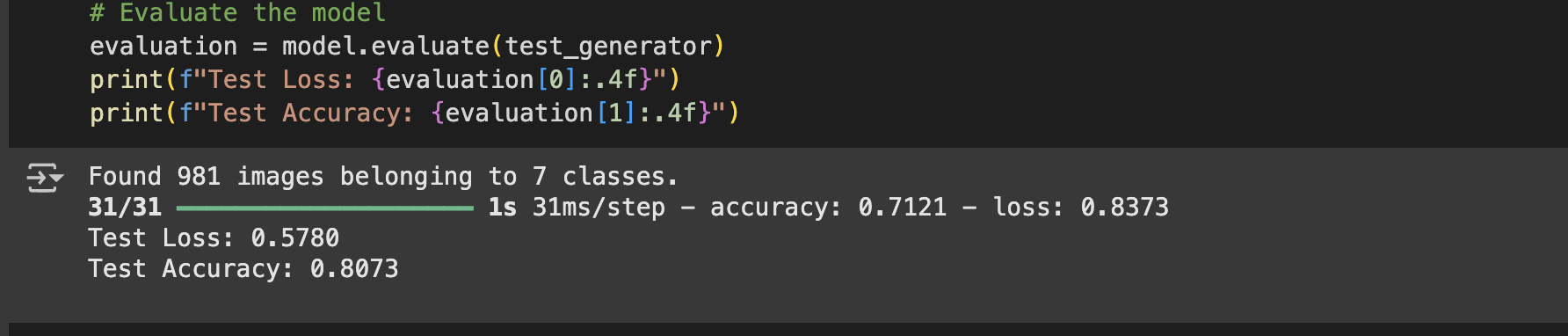

For a more reliable measurement, we download the separate test set and evaluate the model’s accuracy and loss on it.

The accuracy is 80%, which is not great, but not bad for our simple solution 🕶️.
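Keras’s model.evaluate reports the loss and accuracy for us. To show what the accuracy number means, this stdlib sketch compares true and predicted label indices. It also builds a confusion matrix, which shows which emotions get mixed up with each other, a detail a single accuracy figure hides. The labels below are made-up inputs, not our results, and both function names are hypothetical.

```python
def accuracy(y_true, y_pred):
    """Share of predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def confusion_matrix(y_true, y_pred, num_classes=7):
    """matrix[t][p] counts images of true class t predicted as class p."""
    matrix = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        matrix[t][p] += 1
    return matrix

y_true = [0, 0, 4, 4, 6, 5, 3, 2, 1, 4]
y_pred = [0, 2, 4, 4, 6, 5, 6, 2, 3, 4]
print(accuracy(y_true, y_pred))   # 0.7
cm = confusion_matrix(y_true, y_pred)
print(cm[0][2], cm[3][6])         # 1 1
```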

Deploy model: We use our test set to make predictions with the model and see how well it predicts human emotions from pictures 😺.

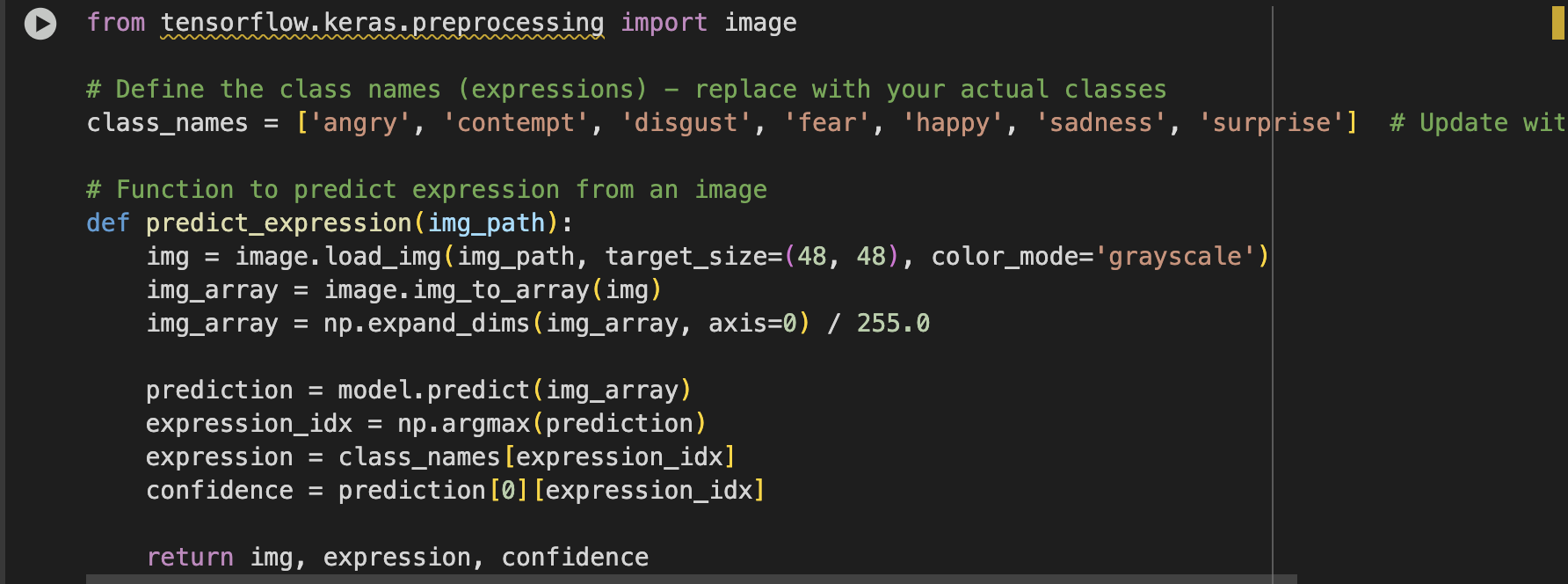

We use the predict_expression method above to make predictions on the test images. It preprocesses each image (converting it to grayscale, resizing it to 48×48 pixels, etc.) and then makes a prediction with the model.
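The screenshot shows the actual predict_expression. Here is a stdlib sketch of just the preprocessing idea. It takes an RGB image as a nested list of (r, g, b) tuples, converts it to grayscale with the ITU-R BT.601 luma weights (the weights Pillow documents for convert("L")), resizes it to 48×48 with nearest-neighbour sampling, and rescales to 0-1 to match training. The function names and the nearest-neighbour choice are ours; a real pipeline would typically use an image library for these steps.

```python
def to_grayscale(rgb_image):
    """ITU-R BT.601 luma: 0.299 R + 0.587 G + 0.114 B for every pixel."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row] for row in rgb_image]

def resize_nearest(image, height=48, width=48):
    """Nearest-neighbour resize of a 2D list to height x width."""
    src_h, src_w = len(image), len(image[0])
    return [
        [image[i * src_h // height][j * src_w // width] for j in range(width)]
        for i in range(height)
    ]

def preprocess(rgb_image, size=48):
    """Grayscale, then 48x48, then the 0-1 range, mirroring the training preprocessing."""
    gray = resize_nearest(to_grayscale(rgb_image), size, size)
    return [[value / 255 for value in row] for row in gray]

white_photo = [[(255, 255, 255)] * 96 for _ in range(96)]   # a 96x96 all-white image
x = preprocess(white_photo)
print(len(x), len(x[0]))   # 48 48
```

Before calling the model, the 48×48 result still needs to be reshaped into a batch of one single-channel image.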

The screenshot below shows our model’s predictions. Quite spot on, right? 😁

How did this go? I hope it went great. Still as intense as the FNN model, right? 😁

We have successfully built and deployed a CNN model that predicts human emotions from facial images.

In our next chapter, we’ll train a Recurrent Neural Network model.

Aloha 🕶️


Written by

Retzam Tarle


I am a software engineer.