Multi-Tenant Call Transcoding and Analytics System

Ravinder Singh

Introduction

With the rise of customer-centric businesses, enterprises are increasingly relying on call analytics to gain deeper insights into customer interactions. Call centers and support teams generate millions of recorded calls daily, and organizations seek efficient ways to transcribe, analyze, and extract meaningful data from these interactions.

However, building a multi-tenant call transcoding and analytics system is no trivial task. It demands high scalability, real-time processing, flexible configurations, and robust storage to ensure seamless operation across different businesses.

In this blog, we’ll break down the key requirements and design decisions that shape the architecture of such a system.

Requirements

A robust call transcoding and analytics system must address several fundamental challenges:

1. Multi-Tenancy Support

Modern enterprises need a multi-tenant architecture where multiple organizations (tenants) can access and analyze call data through a single, scalable platform. This means:

Each tenant should have isolated access to their data.

Data access must be controlled through strict authentication and authorization mechanisms.

Tenants should have customizable configurations to tailor the analytics to their specific needs.

2. Configurable Business Rules

Every organization has different call processing and analytical requirements. A flexible rule engine is essential to:

Enable businesses to define custom transcoding parameters (e.g., convert only calls above a certain duration).

Allow dynamic categorization of calls based on metadata (e.g., flagging customer complaints, identifying sales calls).

Apply custom survey and feedback mechanisms post-call processing.

3. Metadata Extraction and Analytics

To derive actionable insights, the system must extract metadata from calls, such as:

Caller details (number, location, agent handling the call).

Call sentiment analysis (happy, neutral, frustrated).

Keywords and topics discussed (product inquiries, complaints, feedback).

This metadata powers advanced analytics, dashboards, and reports for business leaders to make informed decisions.
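As a rough illustration of the metadata described above, the sketch below models one call's record and tags topics with a naive keyword match. The `CallMetadata` fields, the `TOPIC_KEYWORDS` vocabulary, and `tag_topics` are all hypothetical names chosen for this example; a production system would more likely rely on a trained NLP model for sentiment and topics.

```python
from dataclasses import dataclass, field

# Hypothetical topic vocabulary, for illustration only.
TOPIC_KEYWORDS = {
    "complaint": {"refund", "broken", "cancel"},
    "product_inquiry": {"price", "pricing", "feature"},
}


@dataclass
class CallMetadata:
    tenant_id: str
    caller_number: str
    agent_id: str
    sentiment: str = "neutral"  # e.g. happy, neutral, frustrated
    topics: list[str] = field(default_factory=list)


def tag_topics(transcript: str) -> list[str]:
    """Return the topics whose keywords appear in the transcript."""
    words = {w.strip(".,!?").lower() for w in transcript.split()}
    return sorted(t for t, kws in TOPIC_KEYWORDS.items() if words & kws)


meta = CallMetadata(tenant_id="acme", caller_number="+15550100", agent_id="agent-7")
meta.topics = tag_topics("I want a refund, the device is broken!")
```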

4. Handling High Call Volumes

Call centers operate at scale, often handling millions of calls daily. The system must be capable of:

Efficient parallel processing of call recordings.

Real-time ingestion of metadata for instant analytics.

Optimized storage and retrieval mechanisms to reduce costs and ensure fast response times.
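The parallel-processing requirement can be sketched with Python's standard thread pool. This is a minimal single-machine illustration: `process_recording` is a hypothetical placeholder for the real transcoding and extraction work, and at the volumes described here the same fan-out would more plausibly be spread across many worker containers.

```python
from concurrent.futures import ThreadPoolExecutor


def process_recording(recording_id: str) -> dict:
    # Placeholder for transcoding plus metadata extraction (assumed work).
    return {"recording_id": recording_id, "status": "processed"}


def process_batch(recording_ids: list[str], max_workers: int = 8) -> list[dict]:
    """Process recordings concurrently; results keep the input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(process_recording, recording_ids))


results = process_batch([f"rec-{i}" for i in range(5)])
```

Threads suit I/O-bound steps such as downloading recordings; CPU-bound transcoding would typically run in separate processes or dedicated workers instead.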

5. Cost-Optimized Transcoding

Call transcoding (converting audio formats for analysis) is CPU-intensive and can be expensive. To optimize costs:

The system should transcode only the calls that need it instead of processing everything.

Businesses should have configurable transcoding options based on call importance.

Cloud-based serverless or containerized transcoding can be leveraged to scale dynamically.
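One way to picture selective transcoding is a per-tenant policy checked before any CPU is spent. The sketch below is an assumption-laden example: `TranscodePolicy`, its fields, and the priority labels are hypothetical and not part of any specific product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TranscodePolicy:
    min_duration_seconds: int       # skip very short calls
    required_priorities: frozenset  # transcode only these importance levels


def should_transcode(duration_seconds: int, priority: str,
                     policy: TranscodePolicy) -> bool:
    """Return True only when the call meets the tenant's policy."""
    return (duration_seconds >= policy.min_duration_seconds
            and priority in policy.required_priorities)


policy = TranscodePolicy(min_duration_seconds=60,
                         required_priorities=frozenset({"high", "medium"}))
decision = should_transcode(duration_seconds=180, priority="high", policy=policy)
```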

System Design

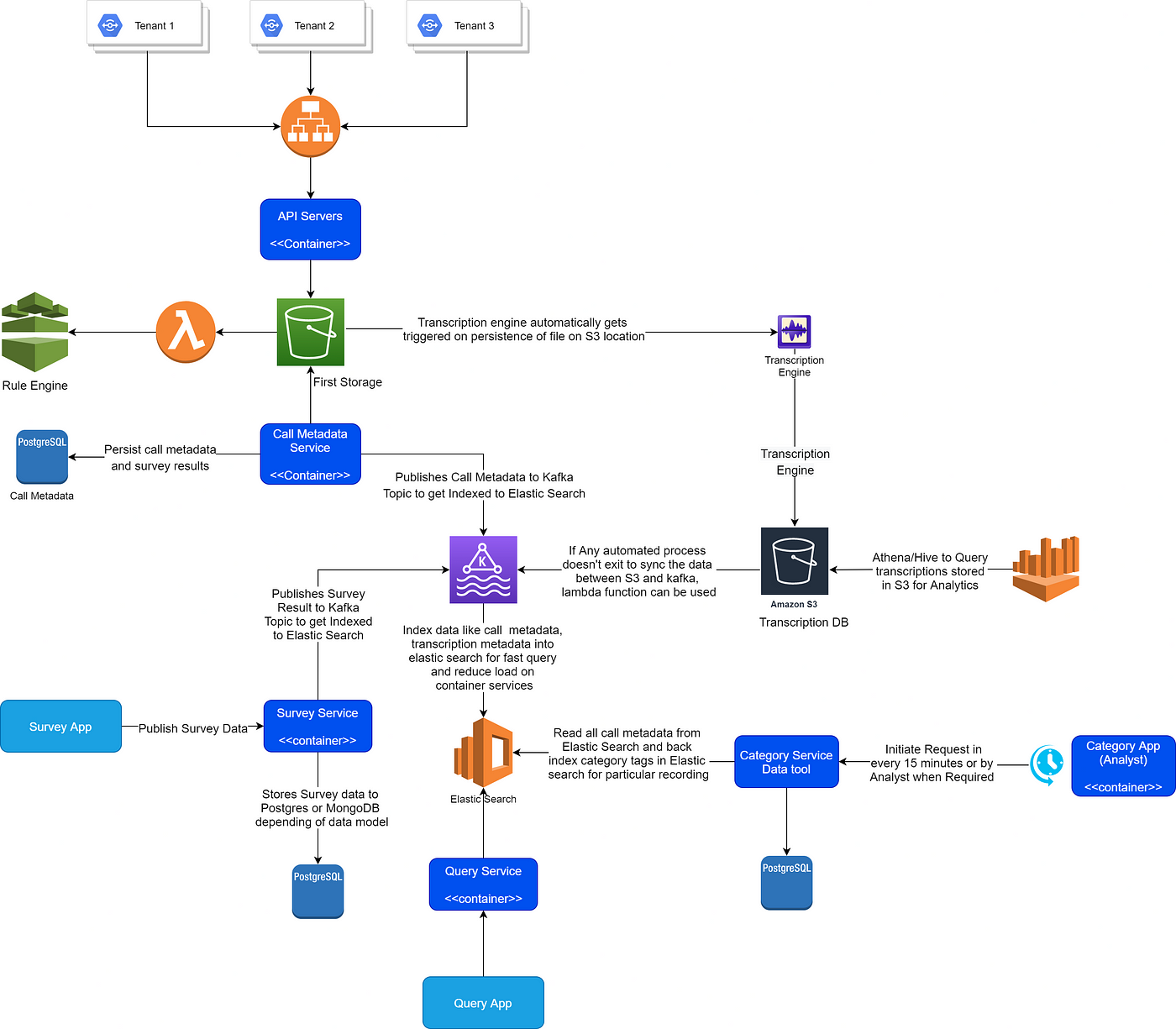

Multi Tenant Call Transcoding and Analytics System

Key Design Decisions

Building an efficient call analytics system requires a strategic blend of technologies and architectural patterns. Here’s how we can achieve it:

1. Implementing a Rule Engine for Custom Business Logic

A rule engine enables businesses to dynamically define and update call processing logic without modifying the core system.

Example: A telecom company may configure rules to analyze only customer complaints, while a sales company may focus on lead qualification calls.

Apache Drools, Camunda, or a custom-built microservice can handle such dynamic rules.
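To make the idea concrete, here is a minimal sketch of the custom-built option: rules are stored as data per tenant and evaluated against each call, so changing behavior means changing the rule list rather than the processing code. The tenant IDs, call fields, and `Rule` structure are hypothetical; this is not how Drools or Camunda express rules.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Rule:
    name: str
    condition: Callable[[dict], bool]  # predicate over a call record
    action: str                        # label applied when the rule matches


# Hypothetical per-tenant rule sets mirroring the example above.
TENANT_RULES = {
    "telecom-co": [
        Rule("complaints-only", lambda c: "complaint" in c["topics"], "analyze"),
    ],
    "sales-co": [
        Rule("lead-qualification", lambda c: c["call_type"] == "lead", "analyze"),
    ],
}


def evaluate(tenant_id: str, call: dict) -> list[str]:
    """Return the actions of every rule that matches the call."""
    return [r.action for r in TENANT_RULES.get(tenant_id, []) if r.condition(call)]


call = {"topics": ["complaint"], "call_type": "support"}
telecom_actions = evaluate("telecom-co", call)
sales_actions = evaluate("sales-co", call)
```

The same call yields different outcomes per tenant, which is the property the rule engine is meant to provide.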

2. Real-Time Analytics with Elasticsearch

For fast retrieval of call metadata and transcripts, Elasticsearch is a powerful choice.

It provides low-latency search capabilities, enabling instant query responses.

Metadata such as call duration, customer sentiment, and topic categories can be indexed for quick lookup.

Integrated dashboards (e.g., Kibana or Grafana) allow businesses to visualize call trends in real time.
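A search over indexed call metadata might look like the query body built below. This sketch only constructs the JSON using Elasticsearch's `bool`, `term`, and `range` query clauses; the field names (`tenant_id`, `sentiment`, `duration_seconds`) are assumptions about the index mapping, and the `term` filters presume those fields are mapped as keywords. Sending the body to a cluster is left out.

```python
import json


def build_search_query(tenant_id: str, sentiment: str,
                       min_duration_seconds: int) -> dict:
    """Build a filter-only query scoped to a single tenant."""
    return {
        "query": {
            "bool": {
                "filter": [
                    {"term": {"tenant_id": tenant_id}},  # tenant isolation
                    {"term": {"sentiment": sentiment}},
                    {"range": {"duration_seconds": {"gte": min_duration_seconds}}},
                ]
            }
        }
    }


query = build_search_query("acme", "frustrated", 120)
body = json.dumps(query)
```

Always including the tenant filter in the query builder, rather than leaving it to callers, is one way to keep tenants from seeing each other's calls in a shared index.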

3. Scalable and Resilient Architecture Using Containers

To handle unpredictable workloads, the system should be containerized using Docker and Kubernetes.

Microservices-based architecture allows individual components (e.g., transcoding, metadata extraction, analytics) to scale independently.

Auto-scaling with Kubernetes ensures cost-efficient resource allocation.

Fault tolerance and high availability prevent service disruptions.
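For intuition on how auto-scaling reacts to load, the Kubernetes Horizontal Pod Autoscaler documents its core calculation as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The sketch below reproduces only that formula with min/max clamping; it ignores the tolerance band and stabilization behavior of the real controller, and the default bounds are arbitrary assumptions.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Apply the documented HPA ratio formula, clamped to the allowed range."""
    raw = math.ceil(current_replicas * (current_metric / target_metric))
    return max(min_replicas, min(max_replicas, raw))


# Four transcoding pods at 90% average CPU against a 60% target.
scaled_up = desired_replicas(current_replicas=4, current_metric=90, target_metric=60)
```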

4. Event-Driven Processing with Kafka or AWS Kinesis

Since call analytics involves continuous data ingestion, a pub-sub messaging architecture helps ensure smooth processing.

Kafka or AWS Kinesis can be used for real-time streaming of call events.

Events such as "call received", "transcript generated", and "analytics ready" can be published and consumed asynchronously.

This approach decouples services, ensuring scalability and reliability even during traffic spikes.
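The decoupling can be illustrated with a tiny in-memory publish/subscribe bus. This is a stand-in for Kafka or Kinesis, not their client APIs: delivery here is synchronous and in-process, and the topic names and handlers are hypothetical. The point is that each stage knows only the topics it reads and writes, not the other stages.

```python
from collections import defaultdict
from typing import Callable


class EventBus:
    """Minimal in-memory pub/sub; a real broker would deliver asynchronously."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[dict], None]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> None:
        for handler in self._subscribers[topic]:
            handler(event)


bus = EventBus()
log: list[str] = []


def on_call_received(event: dict) -> None:
    log.append(f"transcribing {event['call_id']}")
    bus.publish("transcript.generated", {**event, "transcript": "..."})


def on_transcript_generated(event: dict) -> None:
    log.append(f"analyzing {event['call_id']}")
    bus.publish("analytics.ready", event)


bus.subscribe("call.received", on_call_received)
bus.subscribe("transcript.generated", on_transcript_generated)
bus.subscribe("analytics.ready", lambda e: log.append(f"ready {e['call_id']}"))

bus.publish("call.received", {"tenant_id": "acme", "call_id": "c-1"})
```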

5. Storing Transcription Data in Cost-Effective Blob Storage

Transcriptions and audio files consume significant storage. To optimize costs:

Store transcripts in Amazon S3, Google Cloud Storage, or Azure Blob Storage.

Use compression techniques to reduce storage costs.

Implement tiered storage strategies where older transcripts move to low-cost archival storage.

Query historical transcriptions efficiently using Hive, Athena, or BigQuery.
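The compression and tiering ideas above can be sketched without committing to a cloud provider. The key layout, the 90-day threshold, and the tier names below are assumptions made for this example; in practice tiering is usually configured through the storage service's lifecycle rules rather than application code.

```python
import gzip
from datetime import date


def object_key(tenant_id: str, call_id: str, call_date: date) -> str:
    """Tenant-prefixed, date-partitioned key (hypothetical layout)."""
    return f"{tenant_id}/{call_date:%Y/%m/%d}/{call_id}.txt.gz"


def compress_transcript(text: str) -> bytes:
    """Gzip the transcript before upload to reduce stored bytes."""
    return gzip.compress(text.encode("utf-8"))


def storage_tier(call_date: date, today: date, archive_after_days: int = 90) -> str:
    """Pick a tier by age; older transcripts go to archival storage."""
    age_days = (today - call_date).days
    return "archive" if age_days > archive_after_days else "standard"


key = object_key("acme", "c-1", date(2024, 1, 5))
blob = compress_transcript("Agent: Hello, how can I help you today?")
tier = storage_tier(date(2024, 1, 5), today=date(2024, 6, 1))
```

A date-partitioned prefix like this also tends to help engines such as Hive or Athena prune the data they scan.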

6. Managing Structured Data with PostgreSQL

While full transcripts and searchable metadata benefit from flexible storage, a relational database remains essential for transactional data and serves as the system of record.

Use PostgreSQL to store tenant details, call metadata, and survey results.

Ensure data consistency and relationships using normalized schemas.

Use partitioning and indexing to optimize performance on large datasets.
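A normalized schema with a tenant-scoped index might look like the sketch below. To stay runnable without a database server it uses Python's built-in SQLite as a stand-in, so this is not PostgreSQL-specific DDL: table and column names are hypothetical, and PostgreSQL features such as declarative partitioning are not shown.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE tenants (
    tenant_id TEXT PRIMARY KEY,
    name      TEXT NOT NULL
);
CREATE TABLE calls (
    call_id          TEXT PRIMARY KEY,
    tenant_id        TEXT NOT NULL REFERENCES tenants(tenant_id),
    started_at       TEXT NOT NULL,
    duration_seconds INTEGER NOT NULL
);
-- Composite index so per-tenant, time-ordered queries avoid full scans.
CREATE INDEX idx_calls_tenant_started ON calls (tenant_id, started_at);
""")

conn.execute("INSERT INTO tenants VALUES (?, ?)", ("acme", "Acme Corp"))
conn.execute("INSERT INTO calls VALUES (?, ?, ?, ?)",
             ("c-1", "acme", "2024-01-05T10:00:00", 240))

rows = conn.execute(
    "SELECT call_id, duration_seconds FROM calls "
    "WHERE tenant_id = ? ORDER BY started_at",
    ("acme",),
).fetchall()
```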

7. Choosing the Right Multi-Tenancy Model

Different organizations may have varying data isolation and security requirements. The multi-tenancy model should be carefully chosen:

Shared Application, Shared Database (Most Cost-Effective)

A single application and database serve multiple tenants.

Best for: Businesses that don’t require strict data isolation.

Shared Application, Dedicated Database (Balanced Approach)

Each tenant gets a separate database but uses the same application instance.

Best for: Mid-sized enterprises needing some data isolation.

Dedicated Application, Dedicated Database (Most Secure, Expensive)

Every tenant gets a completely separate deployment.

Best for: Financial institutions or organizations with strict compliance needs.
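The three models differ mainly in where a tenant's requests and data are routed. The sketch below shows one hypothetical way to resolve that at request time; the tenant IDs and the naming scheme for application instances and databases are invented for illustration.

```python
from enum import Enum


class TenancyModel(Enum):
    SHARED_DB = "shared application, shared database"
    DEDICATED_DB = "shared application, dedicated database"
    DEDICATED_DEPLOYMENT = "dedicated application, dedicated database"


# Hypothetical tenant registry; in practice this would live in a config store.
TENANT_MODELS = {
    "small-shop": TenancyModel.SHARED_DB,
    "mid-corp": TenancyModel.DEDICATED_DB,
    "big-bank": TenancyModel.DEDICATED_DEPLOYMENT,
}


def resolve_target(tenant_id: str) -> tuple[str, str]:
    """Return (application instance, database) for a tenant."""
    model = TENANT_MODELS[tenant_id]
    if model is TenancyModel.SHARED_DB:
        # Rows are separated only by a tenant_id column.
        return ("shared-app", "shared_db")
    if model is TenancyModel.DEDICATED_DB:
        return ("shared-app", f"db_{tenant_id}")
    return (f"app-{tenant_id}", f"db_{tenant_id}")


targets = {t: resolve_target(t) for t in TENANT_MODELS}
```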

8. API-First Approach for Seamless Integration

Since businesses might want to integrate analytics with their existing CRM, support tools, or AI models:

Expose RESTful APIs for seamless access to transcoded data.

Provide WebSockets or GraphQL subscriptions for real-time analytics consumption.

Secure API access with OAuth 2.0 and JWT-based access tokens.

Conclusion

Building a multi-tenant call transcoding and analytics system requires careful architectural choices to balance scalability, cost-efficiency, and configurability.

By leveraging modern cloud-native technologies such as Kafka, Elasticsearch, PostgreSQL, Kubernetes, and AI-driven transcription, organizations can gain deeper insights into customer conversations while ensuring high performance and reliability.

With the right multi-tenancy model, event-driven architecture, and API-first approach, this system can empower businesses with actionable insights, improve customer engagement, and optimize operational efficiency.

Written by

Ravinder Singh

Senior Architect with 15 years of experience in the development, design, analysis, and implementation of large-scale distributed systems using Java, microservices, open source, messaging, streaming, and cloud technologies. Experienced in core technology and deployment strategy, system design, architecture, and technology consulting for transformation, migration, and greenfield projects.