Data Engineering Pipeline: Microsoft Azure Managed Services

Ravinder Singh

Introduction: The Rise of Data-Driven Decision Making

In today’s digital era, data is one of the most valuable assets for any organization. Businesses across industries are collecting massive amounts of data from various customer touchpoints, operational systems, social media, IoT devices, and third-party integrations. However, raw data alone holds little value—it needs to be processed, transformed, and analyzed to drive insights, innovation, and revenue generation.

To achieve this, companies must build scalable, efficient, and cost-effective data pipelines that can seamlessly ingest, process, and analyze large volumes of structured and unstructured data.

In this blog, we will explore how Microsoft Azure’s managed services can be leveraged to design a highly scalable and efficient data engineering pipeline while minimizing operational overhead and ensuring cost efficiency.

A Business Use Case: Data Pipeline for an Insurance Firm

To illustrate a real-world implementation, let’s consider an insurance company that collects data from various sources:

🔹 User registration details – Personal, demographic, and contact information.

🔹 Claims data – Information about insurance claims, including nature, amount, and filing date.

🔹 Social media & analytics – Sentiment analysis and user behavior insights.

🔹 External government & demographic data – Crime rates, weather conditions, or regional statistics.

🔹 User’s financial & medical history – Credit score, income category, past claims, and lifestyle habits.

🔹 Agent-customer interactions – Recorded conversations and chat logs.

🔹 Call center & support interactions – Customer service logs and queries.

By aggregating and analyzing this data, the insurance company can:

✅ Detect fraud and mitigate risks

✅ Optimize policy pricing and underwriting decisions

✅ Enhance customer segmentation for personalized offers

✅ Identify new markets and customer trends

✅ Improve call center and customer support efficiency

Key Concepts: Understanding Data Engineering and Its Components

Before diving into the design architecture, let’s clarify the key components of data engineering and how they fit into the system:

1️⃣ Data Science

Data Science is about understanding, processing, and modeling raw data.

It uses statistical methods and machine learning algorithms to generate insights.

Tools like Power BI, Azure ML, and Cognitive Services help visualize and interpret this data.

2️⃣ Machine Learning & AI

Machine Learning (ML) helps refine predictions and correlations using trained models.

AI-enhanced ML models continuously improve insights by learning from new data.

Azure Machine Learning provides MLaaS (Machine Learning as a Service) and supports frameworks like TensorFlow, PyTorch, scikit-learn, and Keras.

3️⃣ Data Engineering

Data Engineering is responsible for building the infrastructure and pipelines that move, clean, and transform data.

It ensures data flows from multiple sources to storage, processing, and analytics layers.

Azure Data Factory, Databricks, Synapse Analytics, and Event Hubs are key services here.

4️⃣ Data Pipelines

A data pipeline is a set of automated processes that move data from sources to final storage and analytics.

It includes collection, ingestion, transformation, storage, and consumption stages.
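To make the five stages concrete, here is a minimal, plain-Python sketch of a pipeline as a chain of functions. It does not use any Azure SDK; the `Claim` type, the field names, and the sample records are assumptions made up for illustration, and each function only stands in for the managed service that would do the real work.

```python
from dataclasses import dataclass


# Hypothetical record type; the field names are assumptions for this sketch.
@dataclass
class Claim:
    claim_id: str
    amount: float
    region: str


def collect() -> list:
    """Collection: stand-in for reading from apps, APIs, or file drops."""
    return [
        {"claim_id": "C1", "amount": "1200.50", "region": "north"},
        {"claim_id": "C2", "amount": "not-a-number", "region": "south"},
        {"claim_id": "C3", "amount": "300", "region": "north"},
    ]


def ingest(raw: list) -> list:
    """Ingestion: keep only records that carry all required keys."""
    required = {"claim_id", "amount", "region"}
    return [r for r in raw if required <= r.keys()]


def transform(rows: list) -> list:
    """Transformation: parse amounts, dropping rows that fail to parse."""
    claims = []
    for r in rows:
        try:
            claims.append(Claim(r["claim_id"], float(r["amount"]), r["region"]))
        except ValueError:
            continue  # a real pipeline would route this to a quarantine area
    return claims


def store(claims: list) -> dict:
    """Storage: group by region, standing in for a partitioned table."""
    table = {}
    for c in claims:
        table.setdefault(c.region, []).append(c)
    return table


def consume(table: dict) -> dict:
    """Consumption: total claim amount per region, as a report might show."""
    return {region: sum(c.amount for c in cs) for region, cs in table.items()}


def run_pipeline() -> dict:
    return consume(store(transform(ingest(collect()))))
```

Calling `run_pipeline()` on the sample data drops the unparseable `C2` record and returns the per-region totals. In a real deployment each function would be replaced by a service call, but the shape of the flow stays the same.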

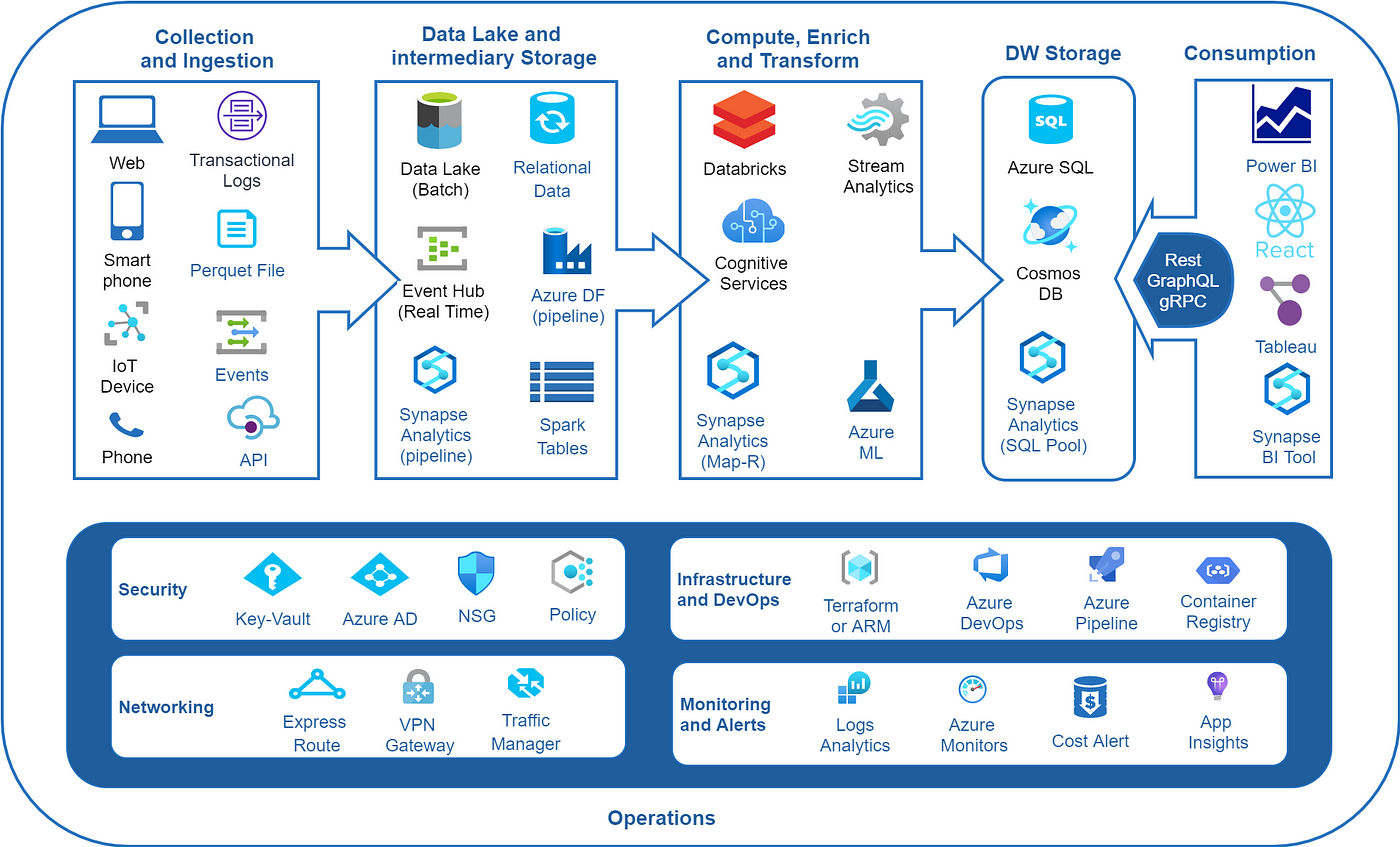

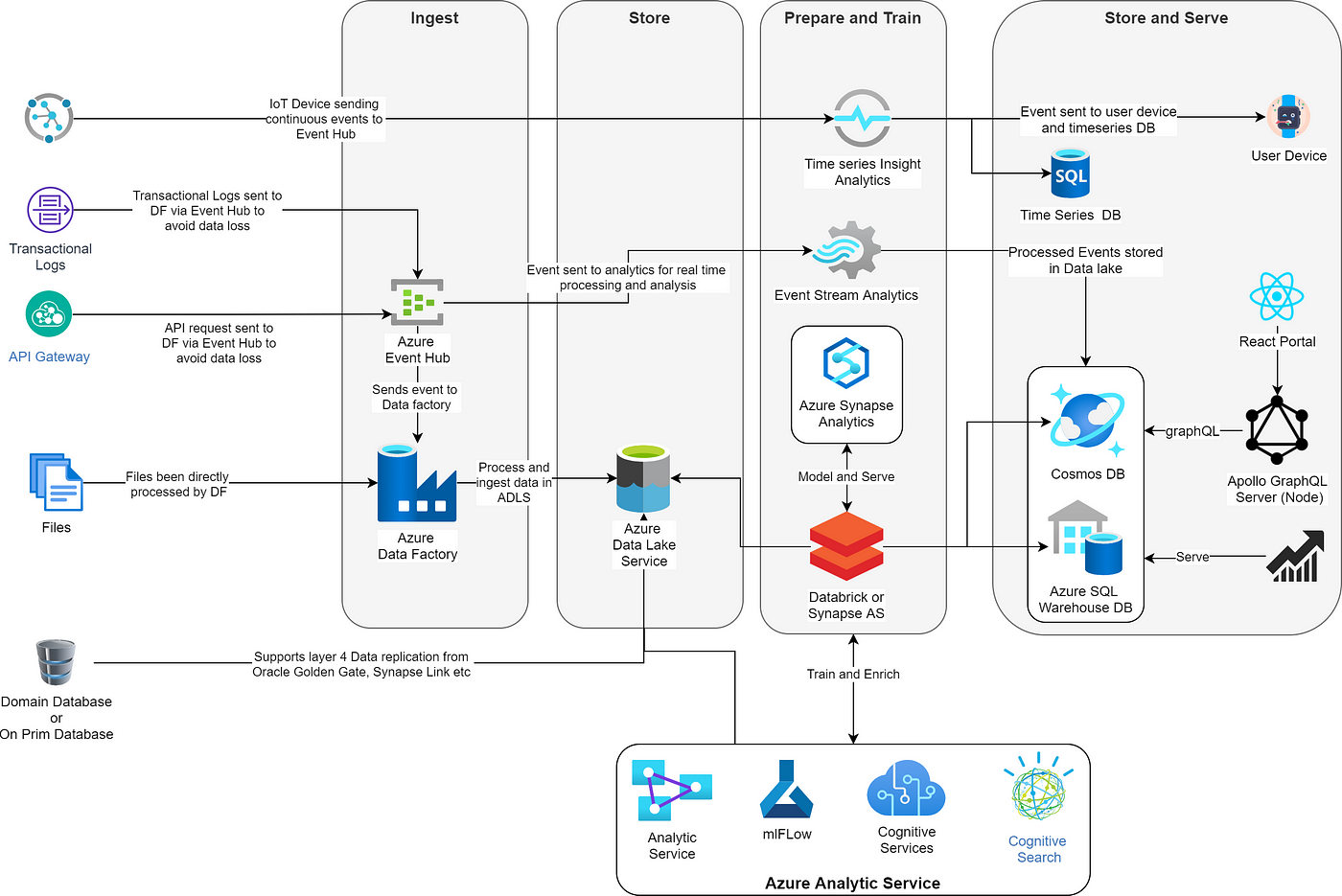

Designing a Scalable Data Pipeline with Azure Managed Services

A well-architected data pipeline consists of multiple stages to process and analyze data efficiently. Microsoft Azure provides a fully managed ecosystem to support each stage while reducing maintenance overhead.

🔹 Stage 1: Data Collection & Ingestion

What happens in this stage?

Collect data from multiple sources such as mobile apps, websites, IoT devices, APIs, and batch uploads.

Ingest the data into Azure Data Lake Storage (ADLS), Azure SQL, or Cosmos DB for further processing.

Choose between real-time ingestion (Event Hubs, IoT Hub, API Management) or batch ingestion (Blob Storage, Azure Data Factory).

Azure Services Used:

✅ Azure Event Hubs / IoT Hub → Handles real-time event streaming from applications and IoT devices.

✅ Azure API Management (APIM) → Centralized gateway for external and internal APIs.

✅ Azure Data Factory (ADF) → Managed ETL (Extract, Transform, Load) service for batch data movement.

✅ Azure Blob Storage / ADLS → Cost-effective storage for raw data.
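The choice between real-time and batch ingestion can be sketched as a simple routing rule. This is an illustrative heuristic, not an Azure feature: the `Source` type, the 60-second threshold, and the example sources are all assumptions chosen to show the idea.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Source:
    name: str
    max_latency_seconds: int  # how stale the data may be when it lands
    continuous: bool          # True if events arrive continuously


# Hypothetical cut-off: sub-minute freshness requirements go to streaming.
STREAMING_THRESHOLD_SECONDS = 60


def choose_ingestion(source: Source) -> str:
    """Return 'streaming' or 'batch' for a source (illustrative rule only)."""
    if source.continuous and source.max_latency_seconds <= STREAMING_THRESHOLD_SECONDS:
        return "streaming"  # e.g. Event Hubs or IoT Hub
    return "batch"          # e.g. Azure Data Factory copying from Blob Storage


sources = [
    Source("call_center_events", max_latency_seconds=5, continuous=True),
    Source("government_crime_stats", max_latency_seconds=86_400, continuous=False),
    Source("social_media_feed", max_latency_seconds=3_600, continuous=True),
]
plan = {s.name: choose_ingestion(s) for s in sources}
```

Here only the call center events are routed to streaming; the social media feed is continuous but tolerates an hour of delay, so a cheaper batch load is enough under this rule.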

🔹 Stage 2: Data Storage & Intermediate Processing

What happens in this stage?

Store raw and semi-processed data in a data lake or operational database.

Decide the best storage format based on access frequency and query performance.

Some datasets can be pushed directly to analytics platforms without transformations.

Azure Services Used:

✅ Azure Data Lake Storage (ADLS) → Stores structured & unstructured data.

✅ Azure SQL Database / Cosmos DB → Stores operational and transactional data.

✅ Azure Synapse Analytics (SQL Pools) → Data warehousing for high-performance queries.
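How files are laid out in the data lake affects both access cost and query performance. One common convention, which is an assumption here rather than something Azure prescribes, is to separate zones (raw vs. curated) and partition each dataset by date. The zone names and the `lake_path` helper below are hypothetical.

```python
from datetime import datetime, timezone
from pathlib import PurePosixPath

# Hypothetical zone names; teams often use other labels.
ZONES = ("raw", "curated")


def lake_path(zone: str, dataset: str, event_time: datetime, filename: str) -> str:
    """Build a date-partitioned path such as raw/claims/year=2024/month=05/day=17/f.json."""
    if zone not in ZONES:
        raise ValueError(f"unknown zone: {zone!r}")
    if event_time.tzinfo is None:
        raise ValueError("event_time must be timezone-aware")
    t = event_time.astimezone(timezone.utc)  # partition on UTC to avoid ambiguity
    return str(
        PurePosixPath(
            zone, dataset, f"year={t:%Y}", f"month={t:%m}", f"day={t:%d}", filename
        )
    )


path = lake_path(
    "raw",
    "claims",
    datetime(2024, 5, 17, 23, 30, tzinfo=timezone.utc),
    "part-0001.json",
)
```

With this layout, a query engine that understands `key=value` folder names can skip whole days or months of data when a filter on the date is present.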

🔹 Stage 3: Data Processing, Transformation & Enrichment

What happens in this stage?

Transform and enrich raw data using distributed processing frameworks.

Combine streaming and structured data for deeper insights.

Use machine learning models to enhance predictions.

Azure Services Used:

✅ Azure Databricks (Apache Spark-based) → Cleans, transforms, and enriches data at scale.

✅ Azure Synapse Analytics → Runs large-scale analytics queries.

✅ Azure Machine Learning → Integrates ML models into pipelines for predictive analysis.
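The cleaning and enrichment step can be sketched in plain Python. On Azure Databricks this logic would normally be written against Spark DataFrames; the version below is a single-machine stand-in, and the field names, the validation rules, and the `crime_rate` value are made-up assumptions for illustration.

```python
from typing import Optional


def clean_claim(raw: dict) -> Optional[dict]:
    """Normalise one raw claim; return None if it cannot be used."""
    try:
        amount = float(raw["amount"])
    except (KeyError, TypeError, ValueError):
        return None
    if amount < 0:
        return None  # negative claim amounts are treated as invalid here
    region = str(raw.get("region", "")).strip().lower()
    if not region:
        return None
    return {"claim_id": raw.get("claim_id"), "amount": round(amount, 2), "region": region}


def enrich(claims: list, region_stats: dict) -> list:
    """Left-join claims with regional statistics keyed by region name."""
    enriched = []
    for c in claims:
        stats = region_stats.get(c["region"], {})
        enriched.append({**c, "crime_rate": stats.get("crime_rate")})
    return enriched


raw_claims = [
    {"claim_id": "C1", "amount": "1200.50", "region": " North "},
    {"claim_id": "C2", "amount": "-5", "region": "south"},
    {"claim_id": "C3", "amount": 300, "region": "West"},
]
region_stats = {"north": {"crime_rate": 0.12}}  # illustrative value, not real data

cleaned = [c for c in map(clean_claim, raw_claims) if c is not None]
result = enrich(cleaned, region_stats)
```

The invalid `C2` record is dropped, `C1` picks up the regional statistic, and `C3` keeps a `crime_rate` of `None` because no external data exists for its region. Keeping unmatched rows (a left join) is a design choice: it avoids silently losing claims when the external dataset is incomplete.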

🔹 Stage 4: Data Warehousing & Storage for Analytics

What happens in this stage?

Store processed data in data warehouses for fast querying and analytics.

Optimize database schema for high-throughput querying.

Use appropriate storage format (columnar, document-based, or relational).

Azure Services Used:

✅ Azure Synapse Analytics / Azure SQL → Data warehouse for structured data.

✅ Cosmos DB (Document, Graph, Column-family models) → NoSQL database for flexible querying.

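✅ ClickHouse / Azure Database for PostgreSQL → High-performance query engines for analytical workloads (ClickHouse is not an Azure-native service and would be self-hosted or run as a third-party offering).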
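Optimizing the schema for analytics usually means separating facts (events with measures) from dimensions (descriptive attributes). The sketch below uses Python's built-in `sqlite3` as a stand-in for a warehouse such as Synapse; the table names, columns, and rows are hypothetical and only illustrate the fact/dimension pattern.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # in-memory stand-in for a warehouse
conn.executescript(
    """
    CREATE TABLE dim_customer (
        customer_id INTEGER PRIMARY KEY,
        segment     TEXT NOT NULL
    );
    CREATE TABLE fact_claim (
        claim_id    INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES dim_customer(customer_id),
        filed_on    TEXT NOT NULL,
        amount      REAL NOT NULL
    );
    -- Index the join key so the aggregate query below avoids a full scan.
    CREATE INDEX idx_fact_claim_customer ON fact_claim(customer_id);
    """
)
conn.executemany(
    "INSERT INTO dim_customer VALUES (?, ?)",
    [(1, "retail"), (2, "corporate")],
)
conn.executemany(
    "INSERT INTO fact_claim VALUES (?, ?, ?, ?)",
    [
        (10, 1, "2024-01-05", 500.0),
        (11, 1, "2024-02-11", 250.0),
        (12, 2, "2024-02-20", 4000.0),
    ],
)

# Claims count and total amount per customer segment.
rows = conn.execute(
    """
    SELECT d.segment, COUNT(*) AS claims, SUM(f.amount) AS total
    FROM fact_claim AS f
    JOIN dim_customer AS d USING (customer_id)
    GROUP BY d.segment
    ORDER BY d.segment
    """
).fetchall()
```

The query returns one row per segment with the claim count and total amount. The same fact/dimension split carries over to a real warehouse, where distribution and partitioning settings would replace the simple index used here.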

🔹 Stage 5: Data Consumption & Visualization

What happens in this stage?

Generate insights using dashboards and analytics tools.

Create self-service reports for stakeholders, enriched with ML model outputs where useful.

Build AI-powered predictions and visualizations.

Azure Services Used:

✅ Power BI / Tableau → Business Intelligence dashboards.

✅ Azure Analysis Services → Scalable data modeling and reporting.

✅ Azure Cognitive Services (now part of Azure AI services) → AI-driven analytics and predictions.

Leveraging Azure Synapse for End-to-End Analytics

Microsoft Azure Synapse Analytics offers a fully integrated platform that combines:

✅ Data ingestion (Synapse pipelines, built on Data Factory capabilities)

✅ Data lake storage (ADLS)

✅ Processing & analytics (Spark, SQL pools)

✅ BI & ML integration

💡 Azure Synapse enables organizations to build a scalable, high-performance data ecosystem, and its serverless options let them pay only for what they use.

Conclusion: The Future of Scalable Data Engineering

A well-architected data pipeline enables organizations to harness data effectively, drive business insights, and optimize decision-making.

💡 By leveraging Azure’s fully managed services, companies can:

✅ Reduce maintenance costs 🚀

✅ Scale effortlessly with demand 📈

✅ Optimize costs with serverless & pay-as-you-go models 💰

🔹 Want to explore more? Let’s discuss in the comments! 💬

Written by

Ravinder Singh

Senior Architect with 15 years of experience in the development, design, analysis, and implementation of large-scale distributed systems using Java, microservices, open source, messaging, streaming, and cloud technologies. Experienced in core technology and deployment strategy, system design, architecture, and technology consulting for transformation, migration, and greenfield projects.