Beyond Keywords: AI-Powered Customer Support with Semantic Search

Wakeel Kehinde

Semantic search is a search technique that improves accuracy by understanding the intent and contextual meaning of a query rather than relying only on exact keyword matching.

Picture this: A customer types “can’t access my account” - but your help article is titled “How to recover your password.” A traditional system might miss the connection. But with semantic search, your system recognizes the intent and surfaces the right content.

A semantic search engine becomes the first line of defense - giving instant, meaningful, and context-aware results from your knowledge base. Google and other search engines use semantic search to provide users with more accurate and relevant search results.

Semantic search works by converting your sample tickets, FAQs, troubleshooting guides and user queries into vector embeddings and comparing these vectors in a multi-dimensional space to find articles that truly match the customer’s need.

Why this matters:

- Faster answers, fewer tickets - customers find what they need on their own

- Better support experience - even vague queries lead to helpful results

- Smarter knowledge delivery - your system grows more helpful over time

In this guide, we’ll explore how to build a smart, AI-powered support search system using FastAPI and Weaviate, a powerful vector database built to understand meaning, not just keywords.

Vector Database

At the heart of semantic search systems are vector databases and embeddings. Embeddings are numerical representations of data (like words or documents) that capture semantic relationships in a multi-dimensional space, which allows a system to understand context and intent beyond simple keyword matching. Vector databases store these embeddings as high-dimensional vectors, enabling efficient and accurate similarity searches. Popular vector stores and libraries include Weaviate, Chroma, Pinecone, Milvus, Faiss, Qdrant and PGVector.

Why Weaviate?

Weaviate is an open-source vector database. It allows you to store data objects and vector embeddings from your favorite ML models and scale seamlessly into billions of data objects. Some of the key features of Weaviate are:

- Weaviate can quickly search the nearest neighbors from millions of objects in just a few milliseconds.

- With Weaviate, you can either vectorize data during import or upload your own vectors, leveraging modules that integrate with platforms like OpenAI, Cohere, HuggingFace, and more.

- From prototypes to large-scale production, Weaviate emphasizes scalability, replication, and security.

- Apart from fast vector searches, Weaviate offers recommendations, summarizations, and neural search framework integrations.

While all of the vector databases mentioned above have their strengths and weaknesses, Weaviate is relatively new and is focused specifically on semantic search. It excels at understanding the meaning behind search queries, going beyond simple keyword matching. It exposes a GraphQL API for querying, making it flexible and powerful.

Tech Stack Overview

FastAPI - The lightning-fast, easy-to-use API framework.

Weaviate - The engine that powers semantic matching through vector search

Sentence Transformers - Generates meaningful embeddings from text data

Docker - Makes it easy to run and manage our environment

Setting up FastAPI

Before diving into coding, let’s prepare our development environment. We’ll use FastAPI as our web framework and keep things tidy by isolating dependencies in a virtual environment.

1. Set Up a Virtual Environment

Open your terminal and navigate to the folder where you want to create the project. Then, create a virtual environment:

Mac/Linux:

python3 -m venv env

Windows:

python -m venv env

Now, activate the virtual environment:

Mac/Linux:

source env/bin/activate

Windows:

.\env\Scripts\activate

Once activated, your terminal should show (env) - this means you’re now working inside the virtual environment.

2. Install FastAPI

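With your virtual environment active, install FastAPI and the other dependencies listed in your requirements.txt file (for this guide it needs at least fastapi[standard], weaviate-client, and sentence-transformers):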

pip install -r requirements.txt

3. Confirm the Installation

To make sure everything installed properly, run:

pip freeze

You should see fastapi and other related packages listed in the output.

4. Build a Simple FastAPI App

Let’s create a basic app to test our setup.

In your project folder, create a file named main.py and add the following code:

from fastapi import FastAPI

app = FastAPI()


@app.get("/")
async def root():
    """Root endpoint returning API information."""
    return {"message": "Welcome to the Semantic Search Engine API!"}

This simple app returns a message when you visit the root URL.

5. Run the FastAPI Application

Start your FastAPI server with the command below:

fastapi dev main.py --port 9000

fastapi dev runs the app in development mode. --port 9000 specifies the port (the default is 8000; you can change it if needed).

Once the server is running, open your browser and visit http://localhost:9000 (adjust the port if you changed it).

You’ll see the message: {"message": "Welcome to the Semantic Search Engine API!"}

Bonus: Explore the auto-generated API documentation at http://localhost:9000/docs.

Running Weaviate Locally via Docker

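Let’s start by running the Weaviate vector database in a Docker container using the command below. Port 8080 serves the HTTP API and port 50051 serves gRPC, which the Python client also uses. This command does not mount a volume, so add one if you need the data to persist after the container is removed.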

docker run -p 8080:8080 -p 50051:50051 cr.weaviate.io/semitechnologies/weaviate:1.29.1

The next step is loading data into the vector database.

Loading the Customer Support Dataset

With your FastAPI application up and running, the next step is to load the dataset that will power your semantic search engine.

Start by placing your dataset file in the same directory as main.py.
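The loader shown later in this guide reads a file named customer_support_data.json and expects each record to carry the keys ticket_id, category, customer_issue, and resolution_response. If you do not have a dataset yet, a minimal sketch like the following writes a two-record sample. The ticket IDs and the `write_sample_dataset` helper are made up for illustration; the issue and resolution text is borrowed from the example response near the end of this guide.

```python
import json
from pathlib import Path

# Keys the loader reads from every record.
REQUIRED_KEYS = {"ticket_id", "category", "customer_issue", "resolution_response"}

# Two illustrative records; the ticket IDs are hypothetical placeholders.
SAMPLE_TICKETS = [
    {
        "ticket_id": "T-0001",
        "category": "Account Access",
        "customer_issue": "My account was locked after multiple login attempts.",
        "resolution_response": (
            "For security reasons, your account is temporarily locked. "
            "Please try again in 30 minutes or reset your password."
        ),
    },
    {
        "ticket_id": "T-0002",
        "category": "Account Access",
        "customer_issue": "I forgot my password and can't reset it.",
        "resolution_response": (
            "You can reset your password using the 'Forgot Password' link. "
            "If you need further assistance, contact support."
        ),
    },
]


def write_sample_dataset(path="customer_support_data.json"):
    """Write the sample records as a JSON array and return the file path."""
    target = Path(path)
    target.write_text(json.dumps(SAMPLE_TICKETS, indent=2), encoding="utf-8")
    return target
```

Calling `write_sample_dataset()` from the project folder places the file next to main.py, which is where the loader looks for it.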

Now that our FastAPI app and dataset are in place, the next building block is embeddings - the secret sauce behind semantic search.

What are Embeddings?

Embeddings are numerical representations of words, sentences, or documents in a high-dimensional space. Think of them as a way for machines to “understand” the meaning of language. By converting both your articles and your users’ queries into embeddings, you allow your system to compare meanings — not just words.
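To make "comparing meanings" concrete: closeness between two embeddings is commonly measured with cosine similarity. The sketch below uses tiny hand-made 3-dimensional vectors as stand-ins for real embeddings (real models produce hundreds of dimensions), so the vectors and the article labels are illustrative assumptions, not model output.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy vectors standing in for embeddings (values are invented).
query = [0.9, 0.1, 0.0]            # "can't access my account"
articles = {
    "How to recover your password": [0.8, 0.2, 0.1],
    "Update your billing address": [0.0, 0.1, 0.9],
}

# Rank articles from most to least similar to the query.
ranked = sorted(
    articles,
    key=lambda title: cosine_similarity(query, articles[title]),
    reverse=True,
)
print(ranked[0])
```

With these toy values the password article ranks first even though it shares no words with the query, which is the behavior a semantic search relies on.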

We’ll use SentenceTransformers to generate our embeddings. It’s powerful, flexible, and works well with most vector databases.

Define your Weaviate collection and initialize the embedding model:

from sentence_transformers import SentenceTransformer

# Define Weaviate collection name
collection_name = "CustomerSupport"

# Initialize the SentenceTransformer model
model = SentenceTransformer("all-MiniLM-L6-v2")

Next: To ensure the dataset is loaded seamlessly when the application starts, we’ll leverage FastAPI’s lifespan context manager. This allows us to connect to Weaviate, load the dataset, and store the client instance for reuse across the application:

from contextlib import asynccontextmanager

from fastapi import FastAPI
import weaviate


@asynccontextmanager
async def lifespan(app: FastAPI):
    client = weaviate.use_async_with_local(host="localhost", port=8080)
    await client.connect()

    # Load dataset into Weaviate
    await load_data(client, collection_name, model)

    # Store client in the app state for reuse
    app.state.weaviate_client = client

    yield

    await client.close()

Explanation of the Code:

Imports:

- asynccontextmanager: Used to create an asynchronous context manager that handles startup and shutdown events in FastAPI.

- FastAPI: The main FastAPI application class used to build the API.

- weaviate: A module or library used to initialize and manage an asynchronous connection to a local Weaviate vector database instance.

Creating the Lifespan context:

- weaviate.use_async_with_local(host="localhost", port=8080): Initializes the Weaviate async client to connect with a locally running instance on port 8080.

- await client.connect(): Establishes a connection to the Weaviate instance.

- await load_data(client, collection_name, model): Loads a dataset into Weaviate using a specified collection name and embedding model.

- app.state.weaviate_client = client: Stores the connected Weaviate client in the app’s global state so it can be accessed within route handlers or other modules.

- yield: Signals the point where the application runs. Startup logic runs before this, and shutdown logic after it.

- await client.close(): Closes the connection to the Weaviate client when the application is shutting down.

Function lifespan(app: FastAPI):

- Connects to Weaviate asynchronously, loads the dataset into the collection, and cleans up resources when the app stops.
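The before-yield and after-yield ordering can be seen in isolation with nothing but the standard library. This is a minimal sketch, not the application code: the `events` list and the stand-in `main` coroutine are assumptions used to record the order of steps, and no FastAPI or Weaviate objects are involved.

```python
import asyncio
from contextlib import asynccontextmanager

events = []  # records the order in which each phase runs


@asynccontextmanager
async def lifespan(app):
    # Everything before `yield` plays the role of startup.
    events.append("startup: connect and load data")
    try:
        yield
    finally:
        # Everything after `yield` plays the role of shutdown.
        events.append("shutdown: close client")


async def main():
    # Stand-in for the framework entering and leaving the lifespan.
    async with lifespan(app=None):
        events.append("serving requests")


asyncio.run(main())
print(events)
```

Wrapping the `yield` in try/finally is a design choice in this sketch: it lets the cleanup step run even when the body of the `async with` raises.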

Initializing the FastAPI App:

# Initialize FastAPI with the lifespan context

app = FastAPI(lifespan=lifespan)

- Initializes the FastAPI application and binds the lifespan context so the startup and shutdown events are automatically handled.

- Ensures the Weaviate client is ready for use during API requests and properly released when the server stops.

Next: Define a dependency to safely retrieve the Weaviate client from the app state. This ensures your routes only proceed when the client is fully ready:

# Dependency to access the Weaviate client
async def get_weaviate_client():
    if not await app.state.weaviate_client.is_ready():
        raise HTTPException(status_code=503, detail="Weaviate is not ready")
    return app.state.weaviate_client

Finally, create a load_data.py file in the same directory as main.py. This file handles the creation of the Weaviate collection, vectorization of customer issues using a local embedding model, and bulk insertion of support data in batches.

import weaviate.classes.config as wc
import json
from weaviate.classes.data import DataObject


async def load_data(client, collection_name, model):
    with open('customer_support_data.json', 'r') as f:
        data = json.load(f)

    # Delete collection if it already exists
    if await client.collections.exists(collection_name):
        await client.collections.delete(collection_name)

    # Create collection
    await client.collections.create(
        name=collection_name,
        description="Customer support issues and resolutions",
        vectorizer_config=None,  # Since we're providing vectors manually
        properties=[
            wc.Property(name="ticketId", data_type=wc.DataType.TEXT),
            wc.Property(name="category", data_type=wc.DataType.TEXT),
            wc.Property(name="customerIssue", data_type=wc.DataType.TEXT),
            wc.Property(name="resolutionResponse", data_type=wc.DataType.TEXT),
        ]
    )

    collection = client.collections.get(collection_name)

    objects = []
    for entry in data:
        vector = model.encode(entry["customer_issue"]).tolist()
        data_object = DataObject(
            properties={
                "ticketId": entry["ticket_id"],
                "category": entry["category"],
                "customerIssue": entry["customer_issue"],
                "resolutionResponse": entry["resolution_response"]
            },
            vector=vector
        )
        objects.append(data_object)

    # Load data in batches
    batch_size = 100
    for i in range(0, len(objects), batch_size):
        batch = objects[i:i+batch_size]
        result = await collection.data.insert_many(batch)
        if hasattr(result, 'errors') and result.errors:
            print(f"Errors in batch {i//batch_size}: {result.errors}")

    print(f"Data successfully loaded into Weaviate ✅, Total tickets: {len(data)}")

With this setup, your FastAPI app will automatically load and embed your customer support dataset into Weaviate during startup, making it ready for lightning-fast semantic search queries.

Explanation of the Code:

Imports:

weaviate.classes.config as wc: Provides property and data type configuration classes for defining the collection schema in Weaviate.

json: Used to read and parse the local customer_support_data.json file.

DataObject from weaviate.classes.data: Represents a single object (with properties and optional vector) to be inserted into a Weaviate collection.

Deleting Existing Collection:

Checks if the specified collection already exists in Weaviate. If it does, the collection is deleted to ensure a clean setup before inserting new data.

Creating the Collection:

A new collection is created with clearly defined properties: ticketId, category, customerIssue, and resolutionResponse.

The vectorizer_config=None setting tells Weaviate that vector embeddings will be manually provided (not auto-generated).

Encoding Customer Issues:

Each customer issue text (customer_issue) is encoded into a vector using the provided model. This transforms textual information into a numerical format suitable for semantic search.

Preparing DataObjects:

For every record, a DataObject is created. Each object contains both the structured information and its corresponding vector embedding, ready to be inserted into the collection.

Uploading in Batches:

All DataObject items are uploaded to Weaviate in batches of 100. Batching keeps each insert request to a manageable size and avoids sending one oversized request when the dataset is large.

Any errors during batch insertion are printed for easier debugging.
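The slicing pattern behind the batching step can be tried on its own. The following is a small sketch with a hypothetical `chunked` helper (not part of the Weaviate client) that splits any list into consecutive batches, with the final batch holding whatever is left over.

```python
def chunked(items, batch_size):
    """Yield consecutive slices of at most `batch_size` items."""
    if batch_size <= 0:
        raise ValueError("batch_size must be a positive integer")
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


# 250 placeholder records split into batches of 100.
records = list(range(250))
batches = list(chunked(records, 100))
sizes = [len(batch) for batch in batches]
print(sizes)
```

Here the 250 placeholder records produce two full batches and one partial batch, the same shape the loader sends to `insert_many`.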

Function load_data(client, collection_name, model):

Reads customer support data and encodes them into vectors, prepares data objects and inserts them in batches into Weaviate.

🔍 Create the Semantic Search API

Now that your dataset is loaded into Weaviate, it’s time to expose a semantic search endpoint through your FastAPI application.

In your main.py, define the search route with the code below:

from fastapi import Depends, FastAPI, Query
from search_engine import SemanticSearchEngine


@app.get("/search")
async def search(query: str = Query(..., description="Enter search query"),
                 top_k: int = 5, client = Depends(get_weaviate_client)):
    """
    Perform semantic search using the search engine.

    :param query: The user query
    :param top_k: Number of results to return (default: 5)
    :param client: Weaviate client
    :return: List of relevant customer support issues
    """
    search_engine = SemanticSearchEngine(client, collection_name, model)
    results = await search_engine.search(query, top_k)
    return {"query": query, "results": results}

Explanation of the Code

Let’s break down the code:

Imports:

- SemanticSearchEngine from search_engine: The custom class responsible for performing semantic search over the Weaviate collection.

Defining the Search Endpoint /search:

Creates a GET endpoint /search that accepts user queries for performing semantic search.

Query Parameters:

- query: The user’s search input provided via URL parameters.

- top_k: Optional parameter to specify how many top results to return (defaults to 5).

- client: Injected via Depends(get_weaviate_client), this provides the Weaviate client instance from application state.

Initializing the SemanticSearchEngine

- search_engine = SemanticSearchEngine(client, collection_name, model): Initializes the semantic search engine with the Weaviate client, collection name, and embedding model.

- results = await search_engine.search(query, top_k): Executes the semantic search and retrieves the top-k most relevant results based on the user’s query.

The search logic as shown is encapsulated in the SemanticSearchEngine class defined in search_engine.py. This class handles encoding queries into dense vectors and performing hybrid searches against the Weaviate collection:

search_engine.py

from fastapi import HTTPException


class SemanticSearchEngine:
    def __init__(self, client, collection_name, model):
        self.client = client
        self.collection_name = collection_name
        self.model = model

    async def search(self, query, top_k=5):
        try:
            # Encode the query into a vector
            query_vector = self.model.encode(query).tolist()

            # Perform hybrid search (keyword match on `query` plus vector similarity)
            response = await self.client.collections.get(self.collection_name).query.hybrid(
                query=query,
                vector=query_vector,
                limit=top_k,
                return_properties=["customerIssue", "category", "resolutionResponse"]
            )

            # Map the stored property names to the response field names
            return [
                {
                    "Customer Issue": item.properties["customerIssue"],
                    "Category": item.properties["category"],
                    "Resolution Response": item.properties["resolutionResponse"]
                }
                for item in response.objects
            ]
        except Exception as e:
            raise HTTPException(status_code=500, detail=str(e))

Explanation of the Code

Let’s break down the code:

Imports:

fastapi.HTTPException: Enables raising standardized HTTP error responses within FastAPI endpoints

Performing the semantic search:

model.encode(): Transforms the user’s query text into a dense numerical vector that captures the semantic context, enabling meaningful similarity comparisons.

Hybrid Query:

The hybrid() method combines traditional keyword search with semantic vector search to improve result relevance.

Why Hybrid Search?:

Hybrid search enhances accuracy by accounting for both exact keyword matches and contextual meaning, making it ideal for understanding varied user queries in customer support use cases.
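To build intuition for how a keyword ranking and a vector ranking can be blended, here is a toy sketch of score fusion: each result gets a keyword score and a vector score, both are min-max normalized, and an `alpha` weight mixes them (1.0 means vector only, 0.0 means keyword only). This is a simplified illustration and not Weaviate's internal implementation; the function names, document IDs, and raw scores are all invented.

```python
def min_max(scores):
    """Rescale a {doc_id: score} mapping to the 0..1 range."""
    if not scores:
        return {}
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        return {doc: 1.0 for doc in scores}
    return {doc: (value - lo) / (hi - lo) for doc, value in scores.items()}


def fuse(keyword_scores, vector_scores, alpha=0.5):
    """Blend normalized keyword and vector scores; higher alpha favors vectors."""
    kw = min_max(keyword_scores)
    vec = min_max(vector_scores)
    fused = {
        doc: alpha * vec.get(doc, 0.0) + (1 - alpha) * kw.get(doc, 0.0)
        for doc in set(kw) | set(vec)
    }
    return sorted(fused.items(), key=lambda pair: pair[1], reverse=True)


# Invented scores for three hypothetical articles.
keyword_scores = {"locked-account": 2.0, "reset-password": 0.5, "billing": 0.0}
vector_scores = {"locked-account": 0.91, "reset-password": 0.84, "billing": 0.12}

ranking = fuse(keyword_scores, vector_scores, alpha=0.5)
print([doc for doc, _ in ranking])
```

In this toy data the password article has a weak keyword score but a strong vector score, so it still lands in second place rather than being dropped.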

Alternative: near_vector Search

Instead of hybrid search, you can use near_vector() to perform pure semantic search, relying entirely on vector similarity without considering keywords. This approach is useful when semantic meaning matters more than exact keywords, such as in natural language queries with synonyms or paraphrased expressions.
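A pure vector query might look like the sketch below. `near_vector_search` is a hypothetical helper name, and the call assumes the v4 Python client's `query.near_vector(near_vector=..., limit=..., return_properties=...)` on an async collection. The fake model and fake collection exist only so the snippet runs without a Weaviate server or a downloaded embedding model; in the real app you would pass `client.collections.get(collection_name)` and the SentenceTransformer model instead.

```python
import asyncio
from types import SimpleNamespace


async def near_vector_search(collection, model, query, top_k=5):
    """Rank stored objects by vector similarity only (no keyword matching)."""
    query_vector = model.encode(query).tolist()
    response = await collection.query.near_vector(
        near_vector=query_vector,
        limit=top_k,
        return_properties=["customerIssue", "category", "resolutionResponse"],
    )
    return [dict(item.properties) for item in response.objects]


# --- Stand-ins so the sketch runs offline (not part of any library) ---
class FakeModel:
    def encode(self, text):
        # Mimics an array-like object that exposes .tolist()
        return SimpleNamespace(tolist=lambda: [0.1, 0.2, 0.3])


class FakeQuery:
    async def near_vector(self, near_vector, limit, return_properties):
        hit = SimpleNamespace(properties={
            "customerIssue": "My account was locked after multiple login attempts.",
            "category": "Account Access",
            "resolutionResponse": "Please try again in 30 minutes or reset your password.",
        })
        return SimpleNamespace(objects=[hit][:limit])


fake_collection = SimpleNamespace(query=FakeQuery())
results = asyncio.run(
    near_vector_search(fake_collection, FakeModel(), "my account is locked", top_k=1)
)
print(results[0]["category"])
```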

Everything put together

from fastapi import FastAPI, Query, HTTPException, Depends
from search_engine import SemanticSearchEngine
from sentence_transformers import SentenceTransformer
from load_data import load_data
import weaviate
from contextlib import asynccontextmanager

# Define Weaviate collection name
collection_name = "CustomerSupport"

# Initialize Sentence Transformer model
model = SentenceTransformer("all-MiniLM-L6-v2")


@asynccontextmanager
async def lifespan(app: FastAPI):
    client = weaviate.use_async_with_local(host="localhost", port=8080)
    await client.connect()

    # Load data into Weaviate
    await load_data(client, collection_name, model)

    # Store the client in the app state
    app.state.weaviate_client = client

    yield

    await client.close()


app = FastAPI(lifespan=lifespan)


# Dependency to get the Weaviate client
async def get_weaviate_client():
    if not await app.state.weaviate_client.is_ready():
        raise HTTPException(status_code=503, detail="Weaviate is not ready")
    return app.state.weaviate_client


@app.get("/")
async def root():
    """Root endpoint returning API information."""
    return {"message": "Welcome to the Semantic Search Engine API!"}


@app.get("/search")
async def search(query: str = Query(..., description="Enter search query"),
                 top_k: int = 5, client = Depends(get_weaviate_client)):
    """
    Perform semantic search using the search engine.

    :param query: The user query
    :param top_k: Number of results to return (default: 5)
    :param client: Weaviate client
    :return: List of relevant customer support issues
    """
    search_engine = SemanticSearchEngine(client, collection_name, model)
    results = await search_engine.search(query, top_k)
    return {"query": query, "results": results}

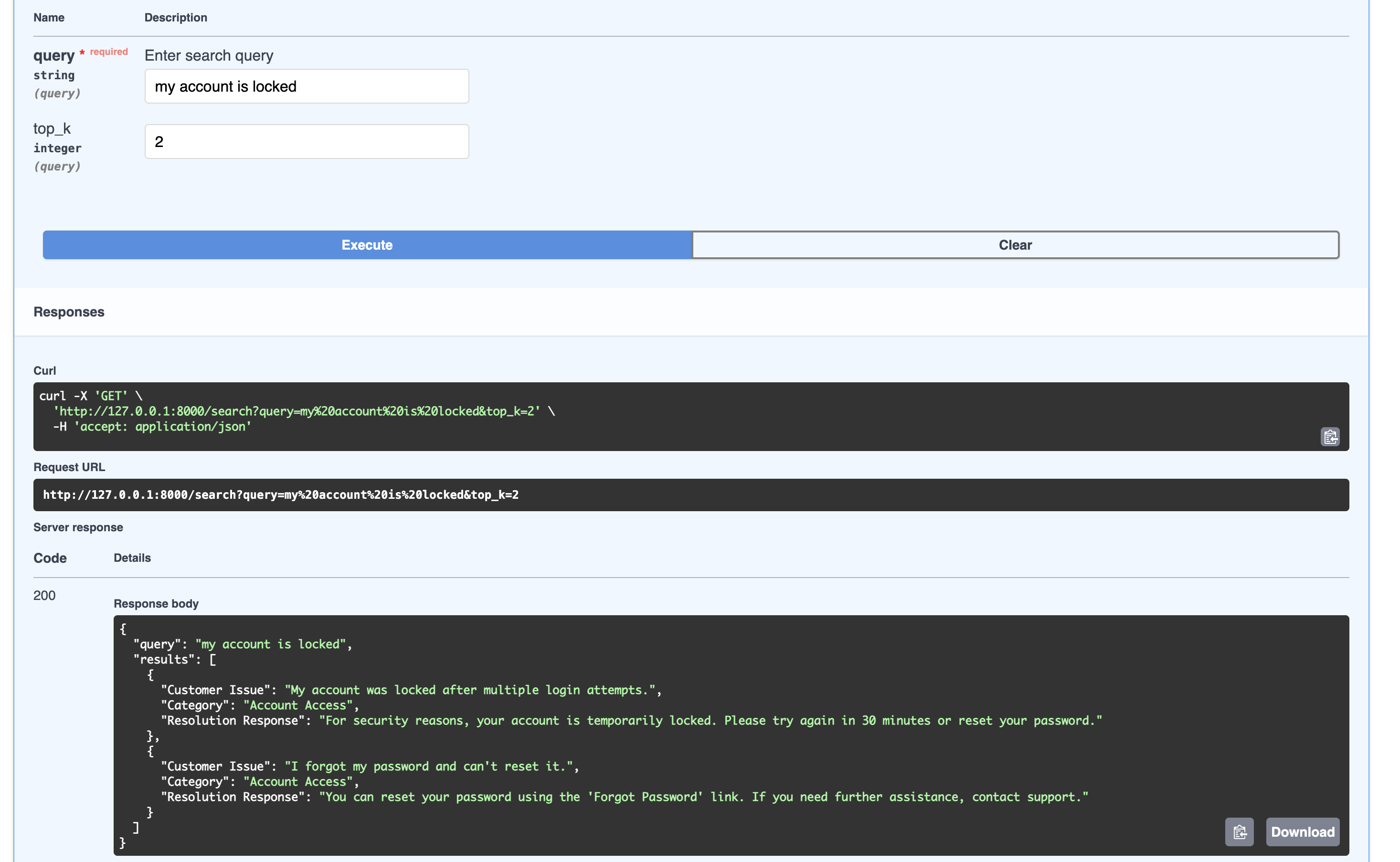

You’re All Set — Time to Search!

Your semantic search engine is now fully functional! 🎉

Let’s put it to the test by asking a common support question:

"My account is locked"

Here’s an example response from the search engine:

{
  "query": "my account is locked",
  "results": [
    {
      "Customer Issue": "My account was locked after multiple login attempts.",
      "Category": "Account Access",
      "Resolution Response": "For security reasons, your account is temporarily locked. Please try again in 30 minutes or reset your password."
    },
    {
      "Customer Issue": "I forgot my password and can't reset it.",
      "Category": "Account Access",
      "Resolution Response": "You can reset your password using the 'Forgot Password' link. If you need further assistance, contact support."
    }
  ]
}

As you can see, the system retrieves highly relevant entries, even if they don’t exactly match the query keywords - thanks to the power of semantic search. Feel free to try out more queries and tailor the system further to fit your own use case.
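If you would rather script your test queries than type them into a browser, a small standard-library sketch like this builds the request URL for the /search endpoint. The base URL assumes the server was started locally with --port 9000 as earlier in this guide, and `build_search_url` and `fetch_results` are hypothetical helper names. `fetch_results` is defined but not called here, since it needs the server to be running.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumption: the API runs locally on the port used earlier in this guide.
BASE_URL = "http://localhost:9000"


def build_search_url(query, top_k=5, base_url=BASE_URL):
    """Return the /search URL with the query string safely encoded."""
    params = urlencode({"query": query, "top_k": top_k})
    return f"{base_url}/search?{params}"


def fetch_results(url):
    """Send the GET request and decode the JSON body (requires a running server)."""
    with urlopen(url) as response:
        return json.load(response)


url = build_search_url("my account is locked", top_k=3)
print(url)
```

Using `urlencode` rather than string concatenation means spaces and punctuation in a customer's question are escaped correctly.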

You can access the full code for this project on GitHub at the following link: Semantic Search.

In Conclusion

And there you have it - you’ve just built a semantic search system that goes far beyond simple keyword matching. With FastAPI, Weaviate, and a touch of AI magic, you now have a semantic search API that truly understands what users are trying to say - not just what they say.

Thank you for following along. I hope you enjoyed building this as much as I enjoyed walking you through it. Whether you’re helping users find answers faster, improving support experiences, or just exploring the beauty of modern search technology, I reckon this project is a great starting point. ✨
