MCP Server

hardik bharti

ChatGPT and Gemini have caused a sea change in the way humans interact with technology, from solving complicated problems and writing code to conducting research. The most crippling limitation of these models, however, is that they are trained on static data: they have no built-in way to access constantly refreshed information, such as the internet or various APIs, in real time. The Model Context Protocol (MCP) addresses this problem by letting AI models interact with real-world data sources.

What is the Model Context Protocol (MCP)?

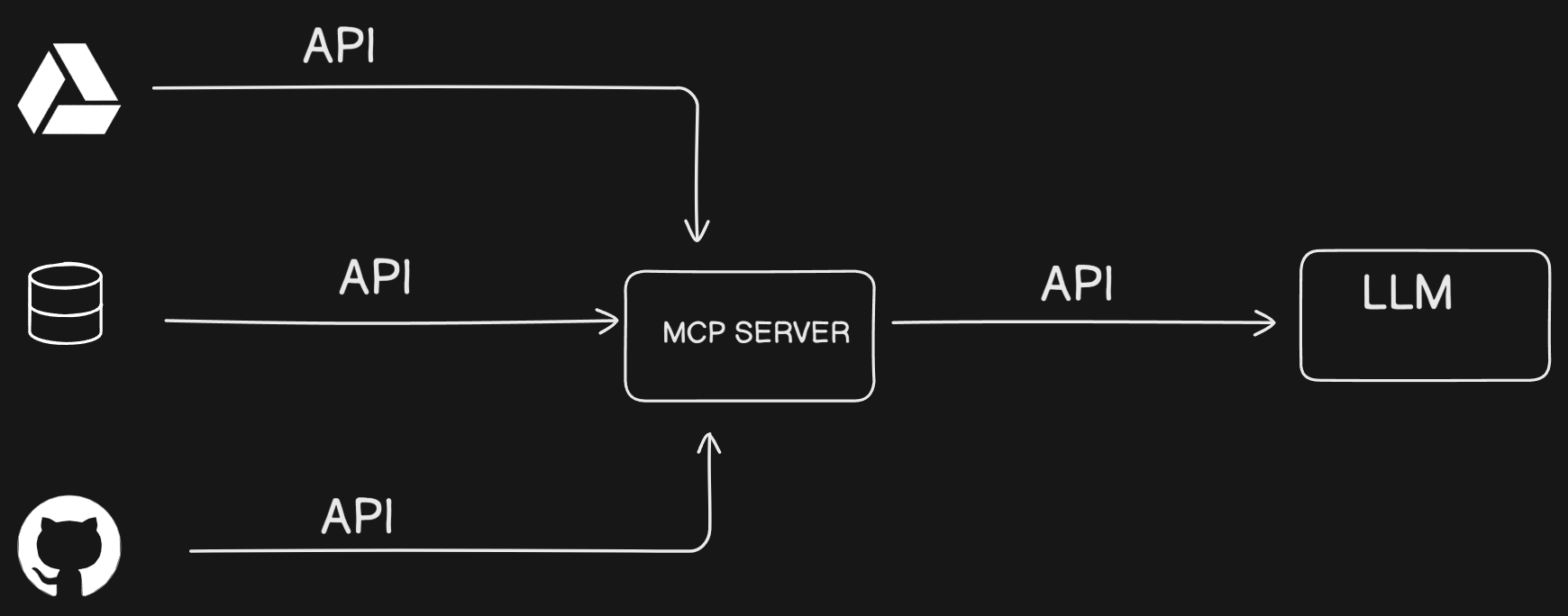

The Model Context Protocol (MCP) addresses the challenge of integrating large language models (LLMs) with external data sources and tools. As an open, publicly available set of rules, MCP enables two-way communication between AI applications and data sources.

The MCP server acts as a bridge between AI tools and data sources. It exposes your data to any AI application that connects through an MCP client, allowing dynamic, real-time data access. Developers no longer need to build a separate connector for every pairing of AI tool and data source. Instead, they can use the Model Context Protocol as a single, standard connection, which makes development more efficient and reduces the complexity of integrating disparate systems.

Embracing the Future with MCP

The Model Context Protocol (MCP) is a major milestone in the evolution of AI integration. It tackles the NxM problem, in which N AI applications and M data sources would otherwise need a custom integration for every pair. In doing so, it not only simplifies development but also opens up new ways of interacting with AI.

Better User Experience

End users will feel the benefits of MCP immediately. For instance, they will no longer have to perform the excruciating "copy and paste tango" to give the AI relevant, current information. Instead, thanks to MCP, AI applications integrate smoothly with real-time data sources to deliver accurate, context-aware responses. Interaction with the AI becomes easier, and users can focus on deriving insight rather than managing the flow of data.

Simple Development

MCP should also simplify life for developers and enterprises. A standardized approach cuts out redundant integration work, so teams can spend their time innovating rather than reinventing the wheel each time. The result is shorter development cycles and more robust, maintainable systems.

Less fragmentation across implementations also means developers can build more consistent and predictable integrations. Consistent behavior of an AI application across platforms and tools matters a great deal for user trust and satisfaction.

Future-Proofing AI Integrations

Perhaps the most convincing advantage of MCP is its ability to future-proof AI integrations. Tools, models, and APIs will keep advancing, and MCP provides a stable foundation that can adapt to those changes. This adaptability helps integrations stay functional and relevant as the technology evolves.

This means companies can adopt cutting-edge AI capabilities without the nagging fear of obsolescence. It also supports continuous improvement and iteration, which drives innovation and competitive advantage.

Call to Action

MCP has a promising future, but its ecosystem will thrive only through adoption and community involvement. As an open-source protocol, it invites collaboration and contributions from developers around the world. Let's adopt MCP and push the boundaries of what AI can do, toward smarter, better-integrated, and more user-friendly applications.

Whether you are a developer, a business leader, or an AI enthusiast, now is the time to discover what MCP can do to transform your applications and workflows. Together, we can get the most out of AI and create a future where technology works for us and not against us.

MCP Architecture with Core Components

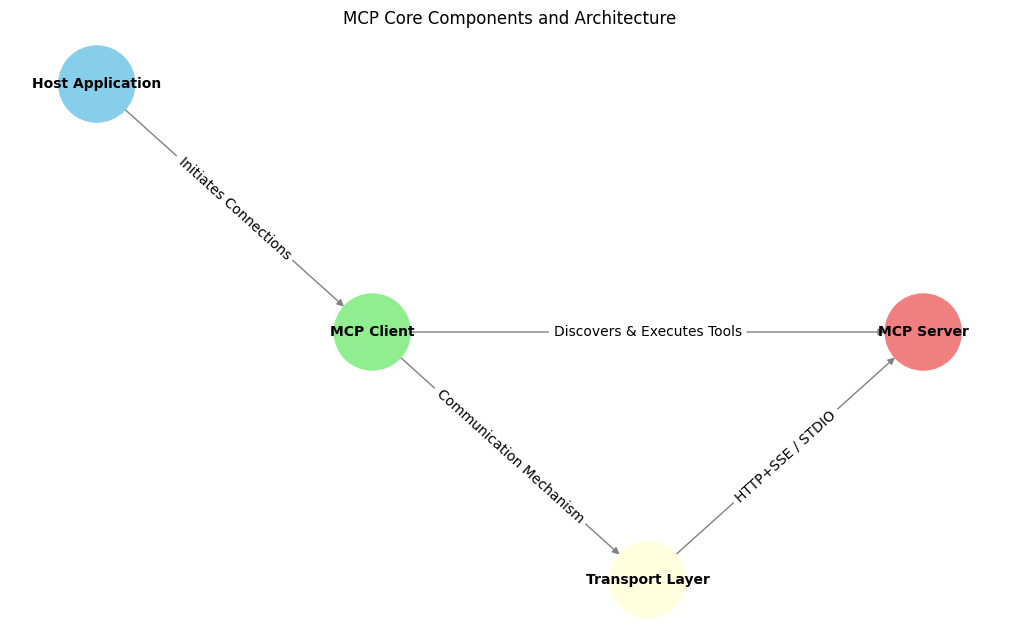

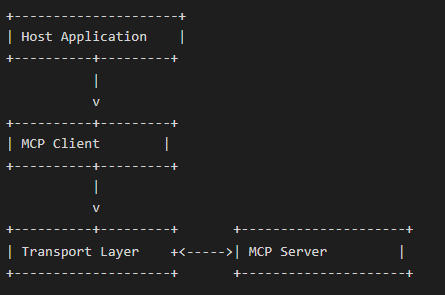

MCP follows a client-server architecture, much like the Language Server Protocol (LSP), which connects programming languages to their development tools. It aims to standardize how AI applications exchange context with external systems.

Core Components of MCP

Host Application: The LLM application that users interact with and that initiates connections. Examples include Claude Desktop, AI-enhanced IDEs like Cursor, and web-based LLM chat interfaces.

MCP Client: Built into the host application, it manages connections to MCP servers and translates between what the host application needs and what the Model Context Protocol provides.

MCP Server: Provides additional context and capabilities, exposing specific functionality to AI apps through MCP. Each server is usually tailored to a single integration point, such as GitHub for repository access or PostgreSQL for database queries.

Transport Layer: Handles communication between clients and servers. The main transport types supported by MCP are:

STDIO (Standard Input/Output): Typically used for local integrations in which the server runs in the same environment as the client.

HTTP+SSE (Server-Sent Events): Used for remote connections. The client sends its requests over HTTP, and the server delivers responses and events over SSE.

All communication in MCP uses JSON-RPC 2.0 as the underlying message format, which defines the structure of requests, responses, and notifications.
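As a rough illustration of the three JSON-RPC 2.0 message kinds, the sketch below builds a request, a notification, and a response using only Python's standard library. The helper names (`make_request`, `make_notification`, `make_response`, `encode_line`, `decode_line`) are made up for this example and are not part of any MCP SDK. The one-message-per-line encoding is an assumption about how a STDIO transport would frame messages.

```python
import json


def make_request(request_id, method, params=None):
    """A request carries an id so the reply can be matched to it."""
    message = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return message


def make_notification(method, params=None):
    """A notification has no id, so the receiver must not reply to it."""
    message = {"jsonrpc": "2.0", "method": method}
    if params is not None:
        message["params"] = params
    return message


def make_response(request_id, result=None, error=None):
    """A response echoes the request id and holds either a result or an error."""
    if (result is None) == (error is None):
        raise ValueError("provide exactly one of result or error")
    message = {"jsonrpc": "2.0", "id": request_id}
    if error is not None:
        message["error"] = error
    else:
        message["result"] = result
    return message


def encode_line(message):
    """Serialize to a single line; json.dumps escapes any embedded newlines."""
    return json.dumps(message, separators=(",", ":")) + "\n"


def decode_line(line):
    """Parse one line back into a message dictionary."""
    return json.loads(line)


if __name__ == "__main__":
    print(encode_line(make_request(1, "tools/list")), end="")
    print(encode_line(make_notification("notifications/initialized")), end="")
    print(encode_line(make_response(1, result={"tools": []})), end="")
```

Keeping the `id` out of notifications is the only thing that distinguishes them from requests on the wire, which is why the two builders are kept separate here.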

How MCP Operates

Once a host application supports MCP, several steps take place behind the scenes so that the AI and the external system can communicate meaningfully. Here is what happens when a person asks Claude Desktop to perform a task that requires external tools:

Protocol Handshake:

Initial Connection: The MCP client connects to the MCP servers configured on the user's device.

Capability Discovery: The client queries each server for its available tools, resources, and prompts.

Registration: The client registers these capabilities, making them available to the AI for use within the conversation.
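The three handshake steps can be sketched in a few lines of Python. This is a simplified, in-process illustration, not a real client: `toy_server` is a hypothetical stand-in for an MCP server, `handshake` is a made-up helper, and the protocol version string and the `get_weather` tool are assumptions chosen for the example. The method names (`initialize`, `notifications/initialized`, `tools/list`, `resources/list`, `prompts/list`) follow the MCP specification as I understand it.

```python
PROTOCOL_VERSION = "2024-11-05"  # assumed version string for this sketch


def toy_server(message):
    """Hypothetical in-process stand-in for an MCP server."""
    if "id" not in message:
        return None  # notifications never get a reply
    method = message["method"]
    if method == "initialize":
        result = {
            "protocolVersion": PROTOCOL_VERSION,
            "capabilities": {"tools": {}},  # this toy server only offers tools
            "serverInfo": {"name": "toy-weather", "version": "0.1.0"},
        }
    elif method == "tools/list":
        result = {"tools": [{
            "name": "get_weather",
            "description": "Look up the weather for a city.",
            "inputSchema": {
                "type": "object",
                "properties": {"city": {"type": "string"}},
                "required": ["city"],
            },
        }]}
    else:
        error = {"code": -32601, "message": "Method not found"}
        return {"jsonrpc": "2.0", "id": message["id"], "error": error}
    return {"jsonrpc": "2.0", "id": message["id"], "result": result}


def handshake(send):
    """Run connection, discovery, and registration against `send`."""
    # 1. Initial connection: exchange versions and capabilities.
    init_reply = send({
        "jsonrpc": "2.0", "id": 1, "method": "initialize",
        "params": {
            "protocolVersion": PROTOCOL_VERSION,
            "capabilities": {},
            "clientInfo": {"name": "toy-client", "version": "0.1.0"},
        },
    })
    send({"jsonrpc": "2.0", "method": "notifications/initialized"})

    # 2. Capability discovery: only list what the server advertised.
    advertised = init_reply["result"]["capabilities"]
    registry = {"tools": {}, "resources": {}, "prompts": {}}
    next_id = 2
    for kind, key in (("tools", "name"), ("resources", "uri"), ("prompts", "name")):
        if kind not in advertised:
            continue
        reply = send({"jsonrpc": "2.0", "id": next_id, "method": f"{kind}/list"})
        next_id += 1
        # 3. Registration: index each capability so the host can offer it to the AI.
        for item in reply["result"][kind]:
            registry[kind][item[key]] = item
    return registry


if __name__ == "__main__":
    print(sorted(handshake(toy_server)["tools"]))
```

In a real host, `send` would write to a transport and wait for the matching reply. Passing it in as a function keeps the handshake logic independent of how messages travel.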

From User Request to External Data:

Tool or Resource Selection: The AI identifies relevant MCP capabilities for fulfilling the request.

Permission Request: The client prompts the user to allow access to the external tool or resource.

Information Exchange: Once consent has been given, the client sends a request to the appropriate MCP server using the standardized protocol format.

External Processing: The MCP server processes that request, performing actions like querying a weather service or accessing a database.

Return of Results: The server returns the requested information to the client in a standardized format.

Context Integration: The AI receives this information and incorporates it into the context of the conversation.

Response Generation: The AI generates a response that includes the external information, providing the user with an answer based on current data.
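The request flow above, from permission prompt to returned result, might look roughly like this in Python. Everything here is illustrative: `toy_weather_server` is a hypothetical server returning canned data, `run_tool_request` and the `ask_user` callback are made-up names, and the `tools/call` message shape reflects my reading of the MCP specification, not a tested client.

```python
def toy_weather_server(message):
    """Hypothetical server that answers tools/call for one canned tool."""
    request_id = message["id"]
    if message["method"] != "tools/call":
        error = {"code": -32601, "message": "Method not found"}
        return {"jsonrpc": "2.0", "id": request_id, "error": error}
    params = message.get("params", {})
    if params.get("name") != "get_weather":
        error = {"code": -32602, "message": "Unknown tool"}
        return {"jsonrpc": "2.0", "id": request_id, "error": error}
    # External processing: a real server would query a weather service here.
    city = params["arguments"]["city"]
    text = f"Weather in {city}: 18 C, cloudy (canned data)"
    result = {"content": [{"type": "text", "text": text}], "isError": False}
    return {"jsonrpc": "2.0", "id": request_id, "result": result}


def run_tool_request(send, ask_user, tool_name, arguments):
    """Ask for consent, call the tool, and return text for the AI's context."""
    # Permission request: nothing is sent unless the user agrees.
    if not ask_user(tool_name, arguments):
        return None

    # Information exchange: one standardized request to the chosen server.
    reply = send({
        "jsonrpc": "2.0", "id": 1, "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    })
    if "error" in reply:
        raise RuntimeError(reply["error"]["message"])

    # Return of results: keep the text parts for context integration.
    parts = reply["result"]["content"]
    return "\n".join(part["text"] for part in parts if part["type"] == "text")


if __name__ == "__main__":
    def always_allow(tool_name, arguments):
        return True

    print(run_tool_request(toy_weather_server, always_allow,
                           "get_weather", {"city": "Pune"}))
```

The returned string is what the host would place into the model's context before it generates the final response. Returning `None` on a declined prompt lets the host tell the model that the tool was not run.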

MCP Client and Server Ecosystem

Since its launch in late 2024, MCP has been adopted quickly across platforms, producing a broad ecosystem of clients and servers that connect LLMs with external tools.

Examples of MCP Clients

MCP clients are diverse, ranging from desktop applications to IDEs. Claude Desktop, developed by Anthropic, provides full support for MCP. Code editors and IDEs such as Zed, Cursor, Continue, and Sourcegraph Cody have also implemented MCP support. Framework-level integrations include Firebase Genkit, LangChain adapters, and Superinterface.

Examples of MCP Servers

The MCP ecosystem contains reference servers, official integrations, and community servers. Reference servers, maintained by MCP project contributors, provide core functionality and serve as examples for development. Official integrations, including Stripe, JetBrains, and Apify, are certified production-ready connectors. Community servers, maintained by enthusiasts, cover a wider range of needs, including integrations with Discord, Docker, and HubSpot.

Security Considerations for MCP Servers

MCP servers that use OAuth over the HTTP+SSE transport carry a risk profile similar to that of standard OAuth flows. Developers must stay alert: protect against open redirect vulnerabilities, store tokens securely, and implement PKCE on all authorization code flows. The human-in-the-loop design protects the user by having the client explicitly ask for permission before accessing tools or resources. Server developers should apply the principle of least privilege and never ask for more access than an action requires.
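For readers unfamiliar with PKCE, here is a minimal sketch of the S256 method from RFC 7636, using only Python's standard library. The function names (`make_code_verifier`, `code_challenge_for`, `verifier_matches`) are invented for this example. A production OAuth flow should rely on a maintained OAuth library and not on hand-rolled code like this.

```python
import base64
import hashlib
import secrets


def _b64url(data):
    """Base64url-encode bytes without '=' padding."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def make_code_verifier():
    """Client side: a random secret kept until the token exchange.

    32 random bytes encode to 43 characters, the minimum length RFC 7636 allows.
    """
    return _b64url(secrets.token_bytes(32))


def code_challenge_for(verifier):
    """Client side: the S256 challenge sent with the authorization request."""
    digest = hashlib.sha256(verifier.encode("ascii")).digest()
    return _b64url(digest)


def verifier_matches(verifier, stored_challenge):
    """Server side: check the verifier presented at the token endpoint."""
    candidate = code_challenge_for(verifier)
    return secrets.compare_digest(candidate, stored_challenge)


if __name__ == "__main__":
    verifier = make_code_verifier()
    challenge = code_challenge_for(verifier)
    print(verifier_matches(verifier, challenge))
```

Because only the hash travels with the authorization request, an attacker who intercepts the authorization code still cannot redeem it without the original verifier.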

Conclusion

The Model Context Protocol marks a significant improvement in connecting LLMs to external systems, standardizing a fragmented ecosystem and potentially resolving the NxM problem. By providing a universal way for AI applications to converse with tools and data sources, MCP reduces developer overhead and fosters an interoperable ecosystem in which innovation benefits the entire community.

As MCP evolves, more is on the way: an MCP registry, sampling features, and a finalized authorization specification. These should further cement MCP's role in accelerating AI development and set the stage for both large companies and individual contributors to build solutions that make life better for everyone.

As adoption grows, AI assistants can be expected to become truly helpful, not only in understanding but also in managing and navigating an outside digital world that has become exceedingly complex.
