A Starter Guide to Data Encoding

Josep


Data encoding is a fundamental concept in computer science: it is how we represent and share information in digital form, and every software developer benefits from understanding the basics. This guide is a starting point. It covers bits and bytes, the numeral systems used to display them, and the formats used to encode numbers, text, and media, and it explains why the same bytes can be decoded in different ways. Along the way, it points to additional resources for further exploration.

Computers work with bits

Computers work with bits. A bit is the smallest unit of data a computer can handle. Simply put, a bit is a piece of information that can have only two values.

For example, traditional hard drives are physical devices with spinning platters covered in tiny magnetic regions, each of which stores one bit. A read/write head moves over these regions and magnetizes each one with either a North or a South orientation. To read the bits, the head hovers over them and senses their magnetic orientation. Don’t miss this live demo!

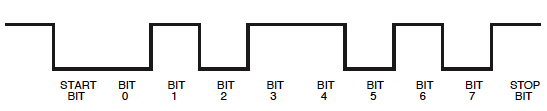

As a second example, consider a microcontroller in a keyboard that needs to send key presses to a computer over an old-school PS/2 cable. To achieve this, the keyboard sends voltage pulses at a specific frequency. For this to work, both the keyboard's microcontroller and the computer must agree on the voltage and the frequency. During each time interval, the device either sends a voltage pulse through the cable or waits until the next interval.

Different patterns correspond to different key presses. Oscilloscopes let you see these voltage pulses. Check out this live demo!

Notice that the physical form of a bit changes depending on the context. In the first example, a bit was a magnetic orientation, while in the second, it was the presence or absence of a voltage pulse. Generally, we refer to the two bit states as 0 (zero) and 1 (one), but in some cases it is easier to call them true and false.

As a side note, don't miss this inspiring interview where Professor Brailsford explains why it's more practical for computers to work with bits that have two values, rather than bits with three, four, or even more values.

Representing Bits with Numeral Systems

Modern processors are designed to work with groups of 8 bits, called bytes. The 8-bit byte is the norm today, but some older machines used bytes of other sizes, such as 6 bits!

As we'll see shortly, all kinds of information are encoded using bits and bytes. Regardless of what the bytes encode, most software that shows raw bytes, such as IDEs and hex editors, displays them in decimal, hexadecimal, or octal rather than as raw binary, because these numeral systems are more compact and easier for humans to read. The table below shows the same ten bytes (the ASCII encoding of the text helloworld) in binary and in each of these systems.

| Bytes represented with the … | Bytes |
|---|---|
| … binary numeral system | 01101000 01100101 01101100 01101100 01101111 01110111 01101111 01110010 01101100 01100100 |
| … decimal numeral system | 104 101 108 108 111 119 111 114 108 100 |
| … hexadecimal numeral system | 68 65 6c 6c 6f 77 6f 72 6c 64 |
| … octal numeral system | 150 145 154 154 157 167 157 162 154 144 |
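The table can be reproduced with a few lines of Python. This is a minimal sketch that relies only on built-in format specifiers (`08b` for eight binary digits, `d`, `o`) and `bytes.hex(" ")` (Python 3.8+); the variable names are illustrative.

```python
data = "helloworld".encode("ascii")  # the ten bytes shown in the table

binary = " ".join(f"{b:08b}" for b in data)  # 8 binary digits per byte
decimal = " ".join(f"{b:d}" for b in data)   # base 10
hexadecimal = data.hex(" ")                  # 2 lowercase hex digits per byte
octal = " ".join(f"{b:o}" for b in data)     # base 8

for name, row in [("binary", binary), ("decimal", decimal),
                  ("hexadecimal", hexadecimal), ("octal", octal)]:
    print(f"{name:>11}: {row}")
```

Note how hexadecimal always needs exactly two digits per byte, which is one reason hex editors favor it.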

If you want to explore the math behind these numeral systems, Sparkfun offers excellent introductory tutorials on the binary and hexadecimal numeral systems. You'll learn about notation, mathematical operations like addition, subtraction, multiplication, division, and bitwise operations, as well as how to convert numbers between decimal, binary, and hexadecimal systems in any order. With this knowledge, you will understand the pros and cons of each numeral system.
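As a taste of the conversion techniques those tutorials cover, here is a sketch of the classic repeated-division method. The helper names `to_base` and `from_base` are hypothetical, and they are limited to bases 2 through 16; Python already offers `int(text, base)`, `bin`, `oct`, and `hex` for real use.

```python
DIGITS = "0123456789abcdef"


def to_base(n: int, base: int) -> str:
    """Convert a non-negative integer to a digit string in `base` (2..16).

    Repeated division: each remainder is the next digit, produced right to left.
    """
    if not 2 <= base <= 16:
        raise ValueError("base must be between 2 and 16")
    if n < 0:
        raise ValueError("n must be non-negative")
    if n == 0:
        return "0"
    digits = []
    while n > 0:
        n, remainder = divmod(n, base)
        digits.append(DIGITS[remainder])
    return "".join(reversed(digits))


def from_base(text: str, base: int) -> int:
    """Convert a digit string in `base` back to an integer (value = value * base + digit)."""
    value = 0
    for ch in text.lower():
        digit = DIGITS.index(ch)  # raises ValueError for characters outside 0-9a-f
        if digit >= base:
            raise ValueError(f"digit {ch!r} is not valid in base {base}")
        value = value * base + digit
    return value


print(to_base(104, 2), to_base(104, 8), to_base(104, 16))  # 1101000 150 68
print(from_base("6c", 16))                                 # 108
```

The binary result has no leading zero; `to_base(104, 2).zfill(8)` pads it to the 8-digit form used in the table.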

Understanding Data Encoding with Bits

Virtually any information can be encoded into bytes. Let's quickly go over some of the key formats that software developers should know when working with bytes.

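Encoding boolean values using bits

As mentioned earlier, a boolean value needs just one bit: True is typically represented by 1, and False by 0. That's all there is to it. In practice, because memory is addressed in whole bytes, many programming languages store a single boolean in a full byte, but several booleans can be packed into one byte as bit flags.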
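Since each boolean needs only one bit, up to eight of them can share a single byte. This minimal sketch uses hypothetical helpers `pack_bools` and `unpack_bools`, and stores the first flag in the left-most bit; that ordering is a choice made for this example, not a standard.

```python
def pack_bools(flags: list[bool]) -> int:
    """Pack up to 8 booleans into one byte; flags[0] goes into the left-most bit."""
    if len(flags) > 8:
        raise ValueError("one byte holds at most 8 flags")
    byte = 0
    for i, flag in enumerate(flags):
        if flag:
            byte |= 1 << (7 - i)  # set bit number (7 - i), counting from the right
    return byte


def unpack_bools(byte: int, count: int = 8) -> list[bool]:
    """Read `count` flags back out of a byte, left-most bit first."""
    return [bool((byte >> (7 - i)) & 1) for i in range(count)]


packed = pack_bools([True, True, True, True, False, False, False, False])
print(f"{packed:08b}")       # 11110000
print(unpack_bools(packed))  # [True, True, True, True, False, False, False, False]
```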

Encoding numbers with bits

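Integers are encoded as sequences of bits using the binary (base-2) numeral system. Unsigned (non-negative) integers use all the bits in the sequence for the value, while signed integers use the left-most (most significant) bit to show whether the integer is negative. Most modern hardware uses the two's complement representation for signed integers, in which a leading 1 marks a negative number but the remaining bits are not simply its magnitude.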

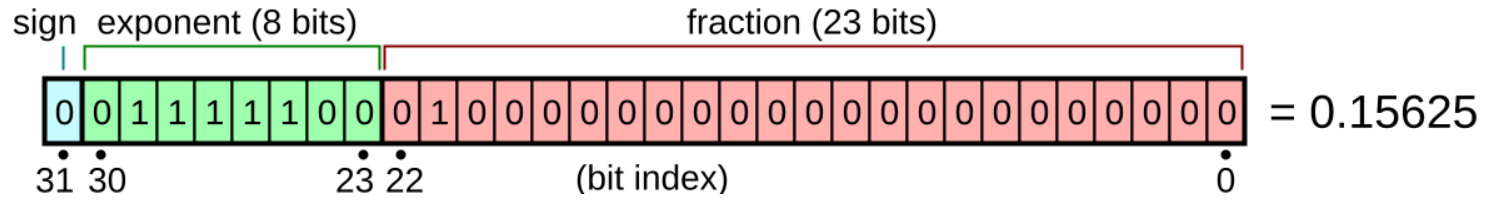
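To see the difference between unsigned and signed (two's complement) integers, the sketch below reads the same 4 bytes both ways with Python's standard `struct` module (`">I"` is big-endian unsigned 32-bit, `">i"` big-endian signed 32-bit). The 32-bit width and big-endian byte order are assumptions for this example; `twos_complement` is a hypothetical helper that does the same conversion by hand.

```python
import struct


def as_unsigned(data: bytes) -> int:
    """Interpret 4 bytes (most significant first) as an unsigned 32-bit integer."""
    return struct.unpack(">I", data)[0]


def as_signed(data: bytes) -> int:
    """Interpret the same 4 bytes as a signed 32-bit two's complement integer."""
    return struct.unpack(">i", data)[0]


def twos_complement(value: int, bits: int = 32) -> int:
    """Manual version: if the left-most (sign) bit is set, subtract 2**bits."""
    if value & (1 << (bits - 1)):
        return value - (1 << bits)
    return value


minus_five = struct.pack(">i", -5)
print(minus_five.hex(" "))                       # ff ff ff fb
print(as_unsigned(minus_five))                   # 4294967291
print(as_signed(minus_five))                     # -5
print(twos_complement(as_unsigned(minus_five)))  # -5
```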

Similarly, real numbers (numbers with a fractional part) are encoded into sequences of bits using the binary numeral system. For example, the single-precision and double-precision floating-point formats represent them in binary scientific notation with 32 bits and 64 bits, respectively. In the single-precision format, the left-most bit indicates the sign, the next 8 bits store the exponent, and the remaining 23 bits store the fraction. Wikipedia's article on the single-precision floating-point format walks through a worked example.
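The sketch below splits a number's single-precision encoding into its sign, exponent, and fraction fields and then rebuilds the value from them. It uses Python's `struct` module to obtain the 32-bit IEEE 754 encoding; `float32_fields` and `rebuild` are hypothetical helpers, and `rebuild` only handles normal numbers (not zero, subnormals, infinities, or NaN).

```python
import struct


def float32_fields(x: float) -> tuple[int, int, int]:
    """Split the single-precision encoding of x into (sign, exponent, fraction)."""
    (raw,) = struct.unpack(">I", struct.pack(">f", x))  # the same 32 bits, read as an integer
    sign = raw >> 31               # 1 bit
    exponent = (raw >> 23) & 0xFF  # 8 bits, stored with a bias of 127
    fraction = raw & 0x7FFFFF      # 23 bits following the implicit leading 1
    return sign, exponent, fraction


def rebuild(sign: int, exponent: int, fraction: int) -> float:
    """Value of a normal number: (-1)**sign * 1.fraction * 2**(exponent - 127)."""
    return (-1) ** sign * (1 + fraction / 2**23) * 2.0 ** (exponent - 127)


fields = float32_fields(-6.25)  # -6.25 = -1.5625 * 2**2
print(fields)                   # (1, 129, 4718592)
print(rebuild(*fields))         # -6.25
```

The exponent 129 is the true exponent 2 plus the bias of 127, and the fraction 4718592 is 0.5625 × 2^23.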

Encoding text with bytes using character encoding maps

Text is encoded into bytes using a character encoding map that assigns each character a sequence of bytes. Over the years, many companies developed different encodings for different languages. For example, the byte 11010100 (D4 in hex) represents the Greek character Τ (Tau) in the Windows-1253 encoding, while in the Windows-1256 encoding it represents the Arabic character ش (Shin).
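Python ships codecs for both Windows code pages (`cp1253` and `cp1256`), so decoding the single byte D4 with each one shows the ambiguity directly. In `cp1253` this byte maps to Τ (Greek capital Tau); Σ (Sigma) sits one position earlier, at D3.

```python
import unicodedata

byte = bytes([0b11010100])  # 0xD4

greek = byte.decode("cp1253")   # Windows-1253 (Greek)
arabic = byte.decode("cp1256")  # Windows-1256 (Arabic)

print(unicodedata.name(greek))   # GREEK CAPITAL LETTER TAU
print(unicodedata.name(arabic))  # ARABIC LETTER SHEEN
```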

The existence of many encodings makes sharing information challenging because everyone involved needs to know the character encoding of the information being shared. Imagine an employee in Athens trying to print a file in Greek encoded with Windows-1253, but the printer outputs characters in Arabic!

One of the first major efforts to create a standard encoding shared by all computers was the American Standard Code for Information Interchange (ASCII; the name US-ASCII is often preferred). Here are a few examples:

| Binary | Hexadecimal | Symbol | Description |
|---|---|---|---|
| 0000 1010 | 0A | (no printable representation) | Line feed |
| 0010 0100 | 24 | $ | Dollar sign |
| 0011 0100 | 34 | 4 | Digit 4 |
| 0100 0001 | 41 | A | Uppercase A |
| 0110 0001 | 61 | a | Lowercase a |

Notice that the digit 4 as a character is represented in binary as 00110100, while the integer 4 as a number is represented as 00000100.
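The difference between the character 4 and the integer 4 is easy to check in Python. The sketch also shows a common trick that follows from the ASCII layout: because the digits 0 to 9 occupy consecutive codes (30 to 39 in hex), subtracting the code of the character 0 yields the digit's numeric value.

```python
char_four = "4".encode("ascii")  # the character '4' as ASCII bytes
int_four = bytes([4])            # the integer 4 stored in a single byte

print(f"{char_four[0]:08b}")  # 00110100
print(f"{int_four[0]:08b}")   # 00000100

# ASCII places the digits '0'..'9' at consecutive codes 0x30..0x39, so
# subtracting the code of '0' converts a digit character to its value.
digit_value = ord("4") - ord("0")
print(digit_value)            # 4
```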

Check out the Sparkfun ASCII tutorial for a quick introduction to ASCII. You'll get a bit of history and learn how to convert bytes in binary, hexadecimal, decimal, and octal numeral systems into ASCII characters.

US-ASCII (and many of its variants) is compact and efficient for the English language, but with only 128 characters (codes 0 to 127) it is not practical for other languages that use different alphabets and characters, such as Arabic, Chinese, French, Greek, Japanese, etc.
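A quick way to see this limit is to try encoding text as ASCII. This sketch uses a hypothetical helper, `fits_in_ascii`, which relies on Python raising `UnicodeEncodeError` for characters outside codes 0 to 127; the built-in `str.isascii()` (Python 3.7+) answers the same question.

```python
def fits_in_ascii(text: str) -> bool:
    """True if every character of `text` has a US-ASCII code (0..127)."""
    try:
        text.encode("ascii")
    except UnicodeEncodeError:
        return False
    return True


for sample in ("hello", "café", "Σίγμα"):
    print(sample, fits_in_ascii(sample), sample.isascii())
# hello True True
# café False False
# Σίγμα False False
```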

Eventually, a universal encoding system was developed to solve this problem: The Unicode Standard.

The Unicode Standard

The Unicode Standard (or Unicode) is a universal character encoding system designed to support all languages and writing systems. It is maintained by the Unicode Consortium. The Unicode Standard defines three encodings: UTF-8, UTF-16, and UTF-32, with UTF-8 being the most widely used.

You can explore the Unicode character set at codepoints.net. A codepoint is the number that Unicode assigns to a character (or other text element), written as U+ followed by hexadecimal digits. For example, the codepoint for ℒ (Script Capital L) is U+2112. Each encoding turns this codepoint into different bytes: E2 84 92 in UTF-8, 21 12 in UTF-16, and 00 00 21 12 in UTF-32 (in hex, with the most significant byte first).
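The sketch below encodes ℒ with Python's built-in codecs, producing E2 84 92 (UTF-8), 21 12 (UTF-16), and 00 00 21 12 (UTF-32). It uses the big-endian `utf-16-be` and `utf-32-be` codecs because Python's plain `utf-16` and `utf-32` codecs prepend a byte order mark. The hypothetical `utf8_encode` helper then shows how UTF-8 spreads a codepoint's bits over one to four bytes; it is for illustration only and skips the validity checks a real encoder performs.

```python
script_l = "\u2112"  # ℒ SCRIPT CAPITAL L, codepoint U+2112

# The big-endian ("-be") codecs are used so Python does not prepend a byte order mark.
for codec in ("utf-8", "utf-16-be", "utf-32-be"):
    print(f"{codec:>9}: {script_l.encode(codec).hex(' ').upper()}")
#     utf-8: E2 84 92
# utf-16-be: 21 12
# utf-32-be: 00 00 21 12


def utf8_encode(cp: int) -> bytes:
    """Hand-written UTF-8 encoder for one codepoint (illustration only: it does not
    reject surrogates or values above U+10FFFF)."""
    if cp < 0x80:
        return bytes([cp])                                    # 0xxxxxxx
    if cp < 0x800:
        return bytes([0xC0 | (cp >> 6), 0x80 | (cp & 0x3F)])  # 110xxxxx 10xxxxxx
    if cp < 0x10000:
        return bytes([0xE0 | (cp >> 12),                      # 1110xxxx + 2 continuation bytes
                      0x80 | ((cp >> 6) & 0x3F),
                      0x80 | (cp & 0x3F)])
    return bytes([0xF0 | (cp >> 18),                          # 11110xxx + 3 continuation bytes
                  0x80 | ((cp >> 12) & 0x3F),
                  0x80 | ((cp >> 6) & 0x3F),
                  0x80 | (cp & 0x3F)])


print(utf8_encode(0x2112) == script_l.encode("utf-8"))  # True
```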

In this section, we used the term character in a general way, without giving a strict definition. The truth is, encoding writing systems involves more specific concepts with precise definitions. Joel Spolsky introduces these concepts well in this article.

The Strings section in the Rust book gives a clear and concise explanation, with examples, of three ways to look at text: as bytes, as Unicode scalar values, and as grapheme clusters. If you're still curious, this comment explains the meanings of character, code points, code units, graphemes, and glyphs.
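One concrete case where "character" gets slippery: é can be stored as a single codepoint (U+00E9) or as the letter e followed by a combining acute accent (U+0301). Both usually render as the same single grapheme, yet they differ in codepoints and bytes. This sketch uses Python's standard `unicodedata.normalize` to convert the decomposed form into the composed one (NFC); note that Python's standard library does not itself segment text into grapheme clusters.

```python
import unicodedata

composed = "\u00e9"     # é as one codepoint: LATIN SMALL LETTER E WITH ACUTE
decomposed = "e\u0301"  # e followed by COMBINING ACUTE ACCENT

for label, text in (("composed", composed), ("decomposed", decomposed)):
    print(label, len(text), "codepoint(s),", len(text.encode("utf-8")), "UTF-8 byte(s)")
# composed 1 codepoint(s), 2 UTF-8 byte(s)
# decomposed 2 codepoint(s), 3 UTF-8 byte(s)

print(composed == decomposed)                                # False
print(unicodedata.normalize("NFC", decomposed) == composed)  # True
```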

Encoding media with bytes

We can encode colors and images into bytes too!

Colors of light can be created by mixing different intensities of red, green, and blue. The absence of red light is represented by the byte 00000000 (0 in decimal), and the maximum intensity of red light by the byte 11111111 (255 in decimal). Green and blue are encoded in the same way, so each color takes three bytes. For example, the bytes 244 250 130 (or f4 fa 82 in hex) encode a light yellow color, made with a high intensity of red and green and a medium intensity of blue.
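Writing the three bytes in hex gives the familiar `#rrggbb` notation used by CSS and HTML. A minimal sketch with two hypothetical helpers, `rgb_to_hex` and `hex_to_rgb`, that round-trip the light yellow example:

```python
def rgb_to_hex(r: int, g: int, b: int) -> str:
    """Encode an RGB color, one byte per channel, as a '#rrggbb' string."""
    for channel in (r, g, b):
        if not 0 <= channel <= 255:
            raise ValueError("each channel must fit in one byte (0..255)")
    return "#" + bytes([r, g, b]).hex()


def hex_to_rgb(code: str) -> tuple[int, int, int]:
    """Decode a '#rrggbb' string back into its three channel values."""
    r, g, b = bytes.fromhex(code.lstrip("#"))
    return r, g, b


print(rgb_to_hex(244, 250, 130))  # #f4fa82
print(hex_to_rgb("#f4fa82"))      # (244, 250, 130)
```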

We just described the RGB (Red Green Blue) color model, and there are many more, such as CMYK (cyan, magenta, yellow, and black), which is ideal for printers that work with ink rather than light.

Now, take your favorite image and draw a grid made of very small cells on top of it, then encode the color contained in each cell. If a cell has more than one color, just pick a color that roughly represents the many colors in that cell. You’ve just encoded your first image!
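The grid exercise can be sketched in a few lines. The hypothetical `encode_image` below assumes the image is already a list of rows of `(red, green, blue)` pixels, "picks" each cell's color by averaging, and writes the averaged colors row by row as 3 bytes per cell. Real formats such as PNG also store the image's width and height (a decoder needs them to rebuild the grid) and usually compress the data; this sketch does neither.

```python
Pixel = tuple[int, int, int]  # (red, green, blue), each 0..255


def encode_image(pixels: list[list[Pixel]], cell: int) -> bytes:
    """Cover the image with cell x cell squares, average the colors inside each
    square, and emit the averaged colors row by row, 3 bytes per square."""
    height, width = len(pixels), len(pixels[0])
    out = bytearray()
    for top in range(0, height, cell):
        for left in range(0, width, cell):
            # All pixels inside this square (squares at the edges may be smaller).
            block = [pixels[y][x]
                     for y in range(top, min(top + cell, height))
                     for x in range(left, min(left + cell, width))]
            for channel in range(3):
                average = sum(p[channel] for p in block) / len(block)
                out.append(round(average))
    return bytes(out)


# A hypothetical 2 x 4 image: a square of grays next to a pure red square.
image = [
    [(0, 0, 0), (100, 100, 100), (255, 0, 0), (255, 0, 0)],
    [(100, 100, 100), (200, 200, 200), (255, 0, 0), (255, 0, 0)],
]
print(encode_image(image, 2).hex(" "))  # 64 64 64 ff 00 00
```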

And here's a challenge for you: How are sounds (and eventually music) encoded into bytes?

Decoding Bytes: Interpreting Bytes Correctly

We’ve just seen various ways to encode booleans, integers, real numbers, text, colors, and images into bits. But there is still a problem: different kinds of data can end up with the same byte representation. For example, the bytes 11110000 10011111 10000000 10111101 (F0 9F 80 BD in hex) can be decoded in many different ways:

| Interpret the bytes as… | Decoded value |
|---|---|
| … an array of boolean values (one per bit) | True, True, True, True, False, False, False, False, … |
| … an unsigned integer encoded with 4 bytes in the binary numeral system (most significant byte first) | 4036985021 |
| … a signed (two's complement) integer encoded with 4 bytes in the binary numeral system (most significant byte first) | -257982275 |
| … a real number encoded in the single-precision floating-point format | -3.9491E+29 |
| … a Unicode codepoint encoded in UTF-8 | Codepoint U+1F03D, Domino Tile Horizontal-01-05 |
| … colors in the RGB color model | The first three bytes represent a light salmon color, but the last byte on its own is not a valid color in this format. |
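Each interpretation in the table can be reproduced with Python's standard library. This sketch assumes big-endian byte order for the numeric readings (`struct` format codes `">I"`, `">i"`, `">f"`); reading the same bytes as little-endian (`"<I"`) would give yet another value, which is one more thing producers and consumers must agree on.

```python
import struct

data = bytes.fromhex("F0 9F 80 BD")  # spaces between byte pairs are ignored

bits = [bool((data[0] >> (7 - i)) & 1) for i in range(8)]  # first byte, left-most bit first
unsigned = struct.unpack(">I", data)[0]  # big-endian unsigned 32-bit
signed = struct.unpack(">i", data)[0]    # big-endian two's complement
real = struct.unpack(">f", data)[0]      # IEEE 754 single precision
text = data.decode("utf-8")              # one 4-byte UTF-8 sequence
rgb = tuple(data[:3])                    # first three bytes as (r, g, b)

print(bits)                  # [True, True, True, True, False, False, False, False]
print(unsigned)              # 4036985021
print(signed)                # -257982275
print(f"{real:.4e}")         # -3.9491e+29
print(f"U+{ord(text):04X}")  # U+1F03D
print(rgb)                   # (240, 159, 128)
```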

If producers and consumers of bits want to communicate information successfully, they need to know in advance what type of information those bits represent.

For example, file extensions help software and users understand how to interpret a file's contents. A file ending with .txt suggests that the bytes encode text (likely in UTF-8, since it is the most common text encoding today, though this is not guaranteed), while a file ending with .png indicates that the bytes encode an image.
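An extension is only a hint, so many formats also begin with a fixed byte sequence, often called a signature or magic number. The PNG specification requires every PNG file to start with the eight bytes 89 50 4E 47 0D 0A 1A 0A. Here is a minimal sketch of checking for it; `looks_like_png` is a hypothetical helper.

```python
PNG_SIGNATURE = bytes([0x89, 0x50, 0x4E, 0x47, 0x0D, 0x0A, 0x1A, 0x0A])  # \x89 P N G \r \n \x1a \n


def looks_like_png(header: bytes) -> bool:
    """True if `header` (the first bytes of a file) starts with the PNG signature."""
    return header.startswith(PNG_SIGNATURE)


print(looks_like_png(PNG_SIGNATURE + b"rest of the file"))  # True
print(looks_like_png("hello".encode("utf-8")))              # False
```

With a real file (say a hypothetical `photo.png`), read the first bytes in binary mode: `open("photo.png", "rb").read(8)`, ideally inside a `with` block, and pass them to `looks_like_png`.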

Conclusion

By understanding the basics of bits and bytes, numeral systems, and different encoding formats, developers can handle data effectively across a wide range of applications. This guide has given an overview of important encoding techniques for numbers, text, and media, and of why the same bytes can be decoded in different ways. Mastering these concepts will strengthen your technical skills and help you build more efficient and reliable software.
