Kubernetes Guide #1: Autoscaling: EKS, Cluster Autoscaler and HPA Explained

lokeshmatetidevops1

In this article, we’ll explore how autoscaling works in Kubernetes, focusing on Amazon EKS, Cluster Autoscaler, and Horizontal Pod Autoscaler (HPA). We’ll also compare autoscaling in cloud vs on-prem setups and discuss when to use Cluster Autoscaler vs HPA.

What is Autoscaling in Kubernetes?

Autoscaling in Kubernetes allows the system to automatically adjust the number of pods or nodes based on demand. There are two main components that help Kubernetes scale:

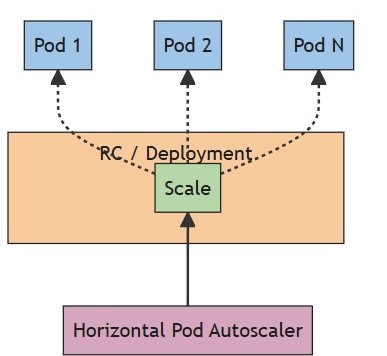

Horizontal Pod Autoscaler (HPA): This scales the number of pods based on CPU, memory, or custom metrics.

Cluster Autoscaler: This scales the number of nodes in your cluster when pods cannot be scheduled due to resource constraints.

Let’s dive into each autoscaling component, explaining how they work and how you can configure them in your Kubernetes cluster.

1. Horizontal Pod Autoscaler (HPA)

What is HPA?

The Horizontal Pod Autoscaler (HPA) automatically adjusts the number of replicas (pods) of a workload such as a Deployment or StatefulSet, based on observed CPU or memory usage or on custom metrics.

How HPA Works:

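Scaling Pods: The HPA controller checks the pods' metrics periodically (every 15 seconds by default), usually reading CPU and memory from the Metrics Server, which you may need to install in the cluster yourself. For CPU and memory, utilization is expressed as a percentage of the containers' resource requests, so the pods must declare requests. If average utilization is above the target, HPA increases the number of replicas; if it is below, HPA reduces them, waiting out a stabilization window (5 minutes by default) before scaling down so that short dips don't cause flapping.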
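The Kubernetes documentation describes the core HPA rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The sketch below is a simplified Python model of that rule, assuming the default 10% tolerance and ignoring details the real controller handles (missing metrics, unready pods, and the scale-down stabilization window). The function name and parameters are illustrative, not a Kubernetes API.

```python
import math


def desired_replicas(current_replicas: int,
                     current_utilization: float,
                     target_utilization: float,
                     min_replicas: int,
                     max_replicas: int,
                     tolerance: float = 0.1) -> int:
    """Simplified model of the HPA scaling rule (illustrative only)."""
    ratio = current_utilization / target_utilization
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: leave the replica count unchanged.
        desired = current_replicas
    else:
        # desiredReplicas = ceil(currentReplicas * current / target)
        desired = math.ceil(current_replicas * ratio)
    # The result is always clamped to minReplicas..maxReplicas.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 3 pods averaging 90% CPU against a 60% target -> ceil(3 * 1.5) = 5
    print(desired_replicas(3, 90, 60, min_replicas=3, max_replicas=10))
```

With 3 pods averaging 90% against a 60% target, the ratio is 1.5, so this model asks for ceil(4.5) = 5 replicas. At 65% the ratio (about 1.08) falls inside the tolerance band and the count stays at 3.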

For example, let’s say you have a Deployment that should scale based on CPU usage. Here’s a manifest for the Deployment plus an HPA that targets an average CPU utilization of 60% of the requested CPU:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app
spec:
  replicas: 3
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
    spec:
      containers:
        - name: my-app-container
          image: my-app:latest
          resources:
            requests:
              cpu: "500m"       # HPA CPU utilization is measured against this request
              memory: "128Mi"
            limits:
              cpu: "1000m"      # Set CPU limit
              memory: "256Mi"
---
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: my-app-hpa
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: my-app
  minReplicas: 3
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          # Percentage targets use type Utilization; AverageValue expects a quantity such as "300m"
          type: Utilization
          averageUtilization: 60   # keep average CPU usage near 60% of the request (300m per pod)
```

In the above example:

- The Deployment starts with 3 replicas, and each pod requests 500m CPU (half of a CPU core).
- The HorizontalPodAutoscaler keeps the Deployment between 3 and 10 replicas. It targets an average CPU utilization of 60% of the request, which is 300m per pod: when average usage rises above that, HPA adds pods, and when it falls well below, HPA removes them, never going under 3.
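Because utilization is measured against requests, the same absolute CPU usage can mean very different things to HPA. This hypothetical helper shows the arithmetic for the example above: it sums usage and requests across pods (in millicores) and expresses the total as an integer percentage, a simplified version of how the HPA controller averages resource utilization over a target's pods.

```python
def cpu_utilization_percent(usage_millicores: list[int],
                            request_millicores: list[int]) -> int:
    """Average CPU utilization of a set of pods, as a percentage of their requests.

    Simplified sketch: total usage divided by total requests, truncated to an
    integer percentage. Pods without a CPU request cannot be measured this way.
    """
    if len(usage_millicores) != len(request_millicores):
        raise ValueError("need one usage and one request value per pod")
    total_request = sum(request_millicores)
    if total_request == 0:
        raise ValueError("CPU utilization targets require CPU requests")
    return sum(usage_millicores) * 100 // total_request


if __name__ == "__main__":
    requests = [500, 500, 500]  # 500m per pod, as in the Deployment above
    print(cpu_utilization_percent([450, 300, 150], requests))  # 900m of 1500m -> 60
    print(cpu_utilization_percent([600, 550, 500], requests))  # 1650m of 1500m -> 110
```

Utilization can exceed 100% because pods may use more CPU than they request, up to their 1000m limit. At 60% this HPA is on target; at 110% it would scale out.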

When to Use HPA:

HPA is useful when your application can handle more load by running more identical replicas, as stateless web services and API backends typically can. It's often used to follow traffic that rises and falls over the day without keeping peak capacity running all the time.

2. Cluster Autoscaler

What is Cluster Autoscaler?

The Cluster Autoscaler automatically adjusts the number of nodes in your Kubernetes cluster. When pods cannot be scheduled due to insufficient resources (e.g., CPU, memory), Cluster Autoscaler can add more nodes. Conversely, if nodes are underutilized, it can remove them to save resources.

How Cluster Autoscaler Works:

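Scale Up: When pods stay Pending because the scheduler cannot place them on any existing node (typically because no node has enough unrequested CPU or memory), Cluster Autoscaler simulates whether a new node from one of its node groups would let them run. If it would, the autoscaler increases that node group's size, for example the desired capacity of an AWS Auto Scaling group, without exceeding the group's maximum.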
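To decide how many nodes to add, Cluster Autoscaler simulates packing the pending pods onto new nodes built from a node group's template. The sketch below is a deliberately simplified stand-in for that estimator, not its actual implementation: it looks only at CPU requests, uses first-fit decreasing bin packing, and caps the result at the node group's remaining headroom. The function name and the 1900m allocatable figure are hypothetical.

```python
def nodes_needed(pending_cpu_requests_m: list[int],
                 node_allocatable_m: int,
                 current_nodes: int,
                 max_nodes: int) -> int:
    """Estimate how many nodes to add for pending pods (CPU only, illustrative).

    First-fit decreasing: place the largest pods first, each into the first
    new node with enough free CPU, opening another node when none fits.
    """
    free_per_node: list[int] = []  # remaining allocatable CPU on each new node
    for request in sorted(pending_cpu_requests_m, reverse=True):
        if request > node_allocatable_m:
            continue  # can never fit on this node type, so it cannot trigger a scale-up
        for i, free in enumerate(free_per_node):
            if request <= free:
                free_per_node[i] -= request
                break
        else:
            free_per_node.append(node_allocatable_m - request)
    # Never grow the node group beyond its configured maximum size.
    headroom = max(0, max_nodes - current_nodes)
    return min(len(free_per_node), headroom)


if __name__ == "__main__":
    # Five pending pods requesting 500m each; 3 pods fit per 1900m node.
    print(nodes_needed([500] * 5, node_allocatable_m=1900, current_nodes=3, max_nodes=5))
```

Here the five pods need two new nodes, and the group has exactly two nodes of headroom, so the estimate is 2. With `max_nodes=4`, only one node would be added and some pods would remain Pending.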

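Scale Down: A node becomes a candidate for removal when the CPU and memory requested by its pods are both below a utilization threshold (50% of the node's allocatable capacity by default) and those pods can be rescheduled onto other nodes, respecting PodDisruptionBudgets. If the node stays unneeded for a while (10 minutes by default), Cluster Autoscaler drains it and removes it from its node group, saving cost.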
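The scale-down test can be sketched as a small predicate. This is a simplified model under stated assumptions: the `NodeUsage` type and function name are hypothetical, the "can the pods move" simulation is reduced to a boolean input, and the 10-minute waiting period is left out. The threshold default of 0.5 mirrors Cluster Autoscaler's default utilization threshold.

```python
from dataclasses import dataclass


@dataclass
class NodeUsage:
    """Requested vs. allocatable resources on one node (hypothetical model)."""
    cpu_requested_m: int
    cpu_allocatable_m: int
    mem_requested_mi: int
    mem_allocatable_mi: int


def is_scale_down_candidate(node: NodeUsage,
                            pods_can_move: bool,
                            threshold: float = 0.5) -> bool:
    """Simplified Cluster Autoscaler scale-down check (illustrative only).

    A node qualifies when both its CPU and memory request ratios are below the
    threshold and its pods could be rescheduled onto other nodes.
    """
    cpu_ratio = node.cpu_requested_m / node.cpu_allocatable_m
    mem_ratio = node.mem_requested_mi / node.mem_allocatable_mi
    # Taking the larger ratio means both resources must be under the threshold.
    utilization = max(cpu_ratio, mem_ratio)
    return utilization < threshold and pods_can_move


if __name__ == "__main__":
    lightly_used = NodeUsage(600, 1900, 1000, 3300)
    memory_heavy = NodeUsage(600, 1900, 2000, 3300)
    print(is_scale_down_candidate(lightly_used, pods_can_move=True))
    print(is_scale_down_candidate(memory_heavy, pods_can_move=True))
```

The first node (about 32% CPU and 30% memory requested) qualifies; the second does not, because its memory requests are above half of its allocatable memory even though its CPU requests are low.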

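On AWS, one way to install Cluster Autoscaler is to start from the auto-discovery example manifest in the project's repository, edit it, and apply it:

```shell
curl -O https://raw.githubusercontent.com/kubernetes/autoscaler/master/cluster-autoscaler/cloudprovider/aws/examples/cluster-autoscaler-autodiscover.yaml
kubectl apply -f cluster-autoscaler-autodiscover.yaml
```

Before applying the file, replace the cluster-name placeholder in its `--node-group-auto-discovery` flag with your cluster's name, and set the image tag to a Cluster Autoscaler release that matches your cluster's Kubernetes minor version. The autoscaler also needs IAM permissions to describe and resize Auto Scaling groups, typically granted through IAM Roles for Service Accounts (IRSA). If you're running Kubernetes in other environments, you'll need a different `--cloud-provider` configuration.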

When to Use Cluster Autoscaler:

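Cluster Autoscaler is useful when the total resources your workloads request change over time, so that a fixed number of nodes would either leave pods Pending or sit partly idle. It adds nodes when pods can't be scheduled and removes underutilized nodes to save on costs. Keep in mind that it works from pod resource requests and scheduling failures, not from live CPU or memory usage: a busy node whose pods request little may still be removed, and a quiet node whose pods request a lot will be kept.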

Differences Between HPA and Cluster Autoscaler

| Feature | HPA (Horizontal Pod Autoscaler) | Cluster Autoscaler |
|---|---|---|
| What It Scales | Scales pods in a deployment or statefulset. | Scales nodes in the Kubernetes cluster. |
| When It Scales | Based on CPU, memory, or custom metrics. | Based on pending pods and underutilized nodes. |
| Where It Works | At the application level (pods). | At the infrastructure level (nodes in the cluster). |
| Primary Function | Adjust the number of application instances (pods). | Adjust the number of compute resources (nodes). |
| Scale Direction | Scales pods up or down based on resource usage. | Scales nodes up or down based on pod scheduling. |
| Use Case | Useful when application traffic fluctuates. | Useful when resource capacity is insufficient to run pods. |

When Should You Use HPA vs Cluster Autoscaler?

Use HPA if you want to scale the number of pods in response to the load on your application (CPU, memory, or custom metrics). HPA is great when the problem is more about handling fluctuating application traffic.

Use Cluster Autoscaler if you need to scale nodes in your cluster to ensure there are enough resources for pods to run, whether the cluster is cloud-based or on-prem. In practice, the two work together: when HPA adds replicas that don't fit on the existing nodes, those pods stay Pending and Cluster Autoscaler responds by adding nodes; when HPA later removes replicas, Cluster Autoscaler can remove the nodes that become underutilized.
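The hand-off between the two autoscalers can be sketched as simple arithmetic. The model below rests on hypothetical simplifications: identical pods, a single node type, CPU as the only constraint, and no other workloads on the nodes. HPA picks a replica count, and Cluster Autoscaler must then supply enough nodes to hold those replicas, within the node group's bounds (such as the 1 to 5 range used in the Terraform example later in this article). The function name and the 1900m allocatable figure are illustrative.

```python
import math


def nodes_for_replicas(replicas: int,
                       pod_cpu_request_m: int,
                       node_allocatable_m: int,
                       min_nodes: int,
                       max_nodes: int) -> tuple[int, int]:
    """Return (nodes, pending_pods) for identical pods on identical nodes.

    Illustrative only: real scheduling also considers memory, DaemonSets,
    affinity rules and other workloads already on the nodes.
    """
    pods_per_node = node_allocatable_m // pod_cpu_request_m
    if pods_per_node == 0:
        raise ValueError("a single pod does not fit on this node type")
    wanted = math.ceil(replicas / pods_per_node)
    nodes = max(min_nodes, min(max_nodes, wanted))
    pending = max(0, replicas - nodes * pods_per_node)
    return nodes, pending


if __name__ == "__main__":
    # HPA scales a 500m pod from 3 to 10 replicas; each node fits 3 such pods.
    for replicas in (3, 10):
        print(replicas, nodes_for_replicas(replicas, 500, 1900, min_nodes=1, max_nodes=5))
```

With a node-group maximum of 5, ten replicas fit on four nodes. If the maximum were 3, the call would return (3, 1): one replica would stay Pending however HPA behaves, which is why an HPA's maxReplicas and the node group's maximum size are worth sizing together.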

Autoscaling in Cloud vs On-Prem Kubernetes

Autoscaling in Cloud (Amazon EKS)

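In Amazon EKS, AWS runs the control plane, but a standard EKS cluster does not scale worker nodes by itself. Worker nodes run in EC2 Auto Scaling groups (for managed node groups, EKS creates and manages those groups), and an autoscaler inside the cluster has to change their size as demand changes. You install that autoscaler yourself: typically Cluster Autoscaler, configured with IAM permissions to resize the Auto Scaling groups, or an alternative such as Karpenter.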

For example, the Terraform configuration below defines an EKS managed node group, which EKS backs with an EC2 Auto Scaling group. Its `scaling_config` block sets the starting size and the bounds within which an autoscaler may resize the group:

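```hcl
resource "aws_eks_node_group" "example" {
  cluster_name    = aws_eks_cluster.example.name
  node_group_name = "example-node-group"
  node_role_arn   = aws_iam_role.example.arn
  subnet_ids      = aws_subnet.example[*].id

  scaling_config {
    min_size     = 1
    max_size     = 5
    desired_size = 3 # starting size; Cluster Autoscaler changes it at runtime
  }

  instance_types = ["t3.medium"]
  disk_size      = 20

  # Let Cluster Autoscaler change desired_size without Terraform reverting it
  lifecycle {
    ignore_changes = [scaling_config[0].desired_size]
  }
}
```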

In this example:

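- The node group starts with 3 t3.medium nodes and may range from 1 to 5. EKS does not change the desired size on its own; Cluster Autoscaler raises or lowers it within those bounds as pods go Pending or nodes become underutilized. EKS tags managed node groups so that Cluster Autoscaler can find them through auto-discovery.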

Autoscaling in On-Prem Kubernetes

In an on-prem Kubernetes setup, the scaling process is more complex. There is no cloud API that creates a VM on request unless you provide one, so Cluster Autoscaler needs an integration that can provision and decommission machines, such as Cluster API on VMware vSphere or OpenStack, or your own bare-metal automation.

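For instance, Cluster Autoscaler has no dedicated VMware provider, so on vSphere it is usually paired with Cluster API, with the Cluster API Provider vSphere (CAPV) creating and deleting the VMs. Here is a minimal Deployment for that setup (the ServiceAccount and RBAC rules it needs are omitted):

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: cluster-autoscaler
  namespace: kube-system
spec:
  replicas: 1
  selector:
    matchLabels:
      app: cluster-autoscaler
  template:
    metadata:
      labels:
        app: cluster-autoscaler
    spec:
      serviceAccountName: cluster-autoscaler  # needs RBAC for nodes, pods and Cluster API objects
      containers:
        - name: cluster-autoscaler
          # Use the release that matches your cluster's Kubernetes minor version
          image: registry.k8s.io/autoscaling/cluster-autoscaler:v1.21.0
          command:
            - ./cluster-autoscaler
            - --v=4
            # There is no "vmware" provider; vSphere is reached through Cluster API
            - --cloud-provider=clusterapi
            # Node group bounds come from annotations on each MachineDeployment:
            #   cluster.x-k8s.io/cluster-api-autoscaler-node-group-min-size: "1"
            #   cluster.x-k8s.io/cluster-api-autoscaler-node-group-max-size: "10"
```

In this setup:

- Cluster Autoscaler still reacts to Pending pods and underutilized nodes, but it scales by changing the replica count of each MachineDeployment that carries the min/max size annotations; Cluster API and CAPV then create or delete the matching vSphere VMs.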

Cluster Autoscaler in Cloud vs On-Prem

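| Feature | Cloud (EKS/Managed Kubernetes) | On-Prem Kubernetes |
|---|---|---|
| Cluster Autoscaler Setup | Integrates with the provider's node-group APIs (e.g., AWS Auto Scaling groups). GKE and AKS offer it as a built-in option; on EKS you install and configure it yourself. | Requires manual configuration and an integration that can provision machines, such as Cluster API. |
| Scaling Nodes | The cloud provider launches and terminates VMs when the autoscaler changes a node group's size. | Requires additional setup, e.g., Cluster API with VMware vSphere or OpenStack. |
| Scaling Pods (via HPA) | Native support for HPA with resource metrics; external metrics such as CloudWatch need a metrics adapter. | Native support for HPA, but the node capacity those pods need must come from your own node-scaling setup. |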

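In cloud environments, the provider's APIs make adding and removing nodes straightforward, and some managed services can run the cluster autoscaler for you, although on EKS you install it yourself. In on-prem setups, you'll have to manage more aspects of the scaling process, especially for nodes, by integrating Cluster Autoscaler with infrastructure that can provision machines.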

Conclusion

In Kubernetes, autoscaling is essential for keeping your application responsive to changing workloads. The Horizontal Pod Autoscaler (HPA) scales the pods based on CPU, memory, or custom metrics, while the Cluster Autoscaler scales the nodes to ensure there are enough resources for your workloads.

In cloud environments like EKS, the provider's APIs make node provisioning easy to automate, although you still install and configure Cluster Autoscaler. For on-prem Kubernetes setups, you need to do more of this work yourself, especially for nodes, by integrating Cluster Autoscaler with your infrastructure.

Whether you are working with cloud or on-prem Kubernetes, combining HPA and Cluster Autoscaler helps your applications stay available and handle varying loads efficiently: HPA adds pods as demand grows, and Cluster Autoscaler adds the nodes those pods need.


Written by

lokeshmatetidevops1


I am a DevOps Specialist with 15+ years of experience in CI/CD, automation, cloud infrastructure, and microservices deployment. Proficient in tools like Jenkins, GitLab CI, ArgoCD, Docker, Kubernetes (EKS), Helm, Terraform, and AWS. Skilled in scripting with Python, Shell, and Perl to streamline processes and enhance productivity. Experienced in monitoring and optimizing Kubernetes clusters using Prometheus and Grafana. Passionate about continuous learning, mentoring teams, and sharing insights on DevOps best practices.