Oracle APEX and Oracle AI Vector Search - Ask from your own PDF documents

Pekka Kanerva

Pekka KanervaThere are several public AI services into which you can load your own documents and ask questions about their contents. But what if the documents are confidential and should not be sent outside the company? And what if there are tens or hundreds of documents, making it impossible to ask questions from all of them at the same time using the public services?

This solution shows how you can store Embedding Models in ONNX format into Oracle Database 23ai, then vectorize the contents of the documents using the models, and store the vectors in the new vector datatype column in Oracle Database 23ai. End users can then ask questions using natural language with the Retrieval Augmented Generation (RAG) technique. The relevant parts of the document text are queried from the stored vectors using Oracle AI Vector Search. Then the retrieved document chunks and the question are sent to Oracle Generative AI Service, which generates the answer to the question using LLM inside the GenAI service. All this happens securely inside the Oracle Database and Oracle Cloud Infrastructure without any data or document text ever leaving the customer’s own tenancy.

Both the processing of the documents and the question chatbot application are built with Oracle's low code tool, Oracle APEX. So there is no need to, for example, install any Python libraries and manage complex infrastructure. Instead, everything happens inside the Oracle-managed Oracle Autonomous Database using the declarative low code tool APEX and a little bit of PL/SQL.

Loading the embedding model into database

You can convert any embedding model to ONNX format. In this solution, we are using the model “all-MiniLM-L6-v2.onnx” supplied by Oracle, which is already in the correct format. The model is loaded into Oracle Autonomous Database in three phases:

Load the model into the OCI Bucket (in this example, "models").

Copy the model into the database directory. You can use the already existing DATA_PUMP_DIR or create your own directory.

BEGIN

DBMS_CLOUD.GET_OBJECT(

credential_name => 'OCI$RESOURCE_PRINCIPAL',

object_uri => 'https://objectstorage.<region>.oraclecloud.com/n/<namespace>/b/models/o/all-MiniLM-L6-v2.onnx',

directory_name => 'DATA_PUMP_DIR');

END;

/

- Load the model into the database

BEGIN

DBMS_VECTOR.LOAD_ONNX_MODEL(

'DATA_PUMP_DIR',

'all-MiniLM-L6-v2.onnx',

'all_MiniLM_L6_v2',

JSON('{"function" : "embedding", "embeddingOutput" : "embedding", "input": {"input":["DATA"]}}'));

END;

/

APEX Application

In the APEX application, there are two pages:

Embed your PDFs to Vectors

On this page, admin users process the documents they want to store in the document knowledge base in Oracle Database.Ask from your own PDFs

On this page, end users ask their questions about the documents in the knowledge base.

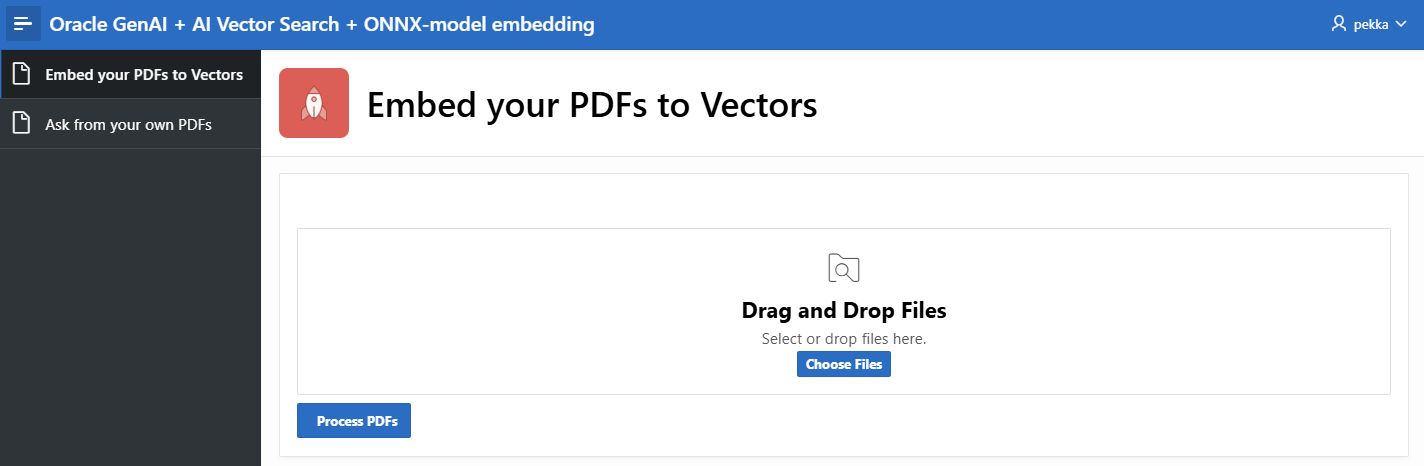

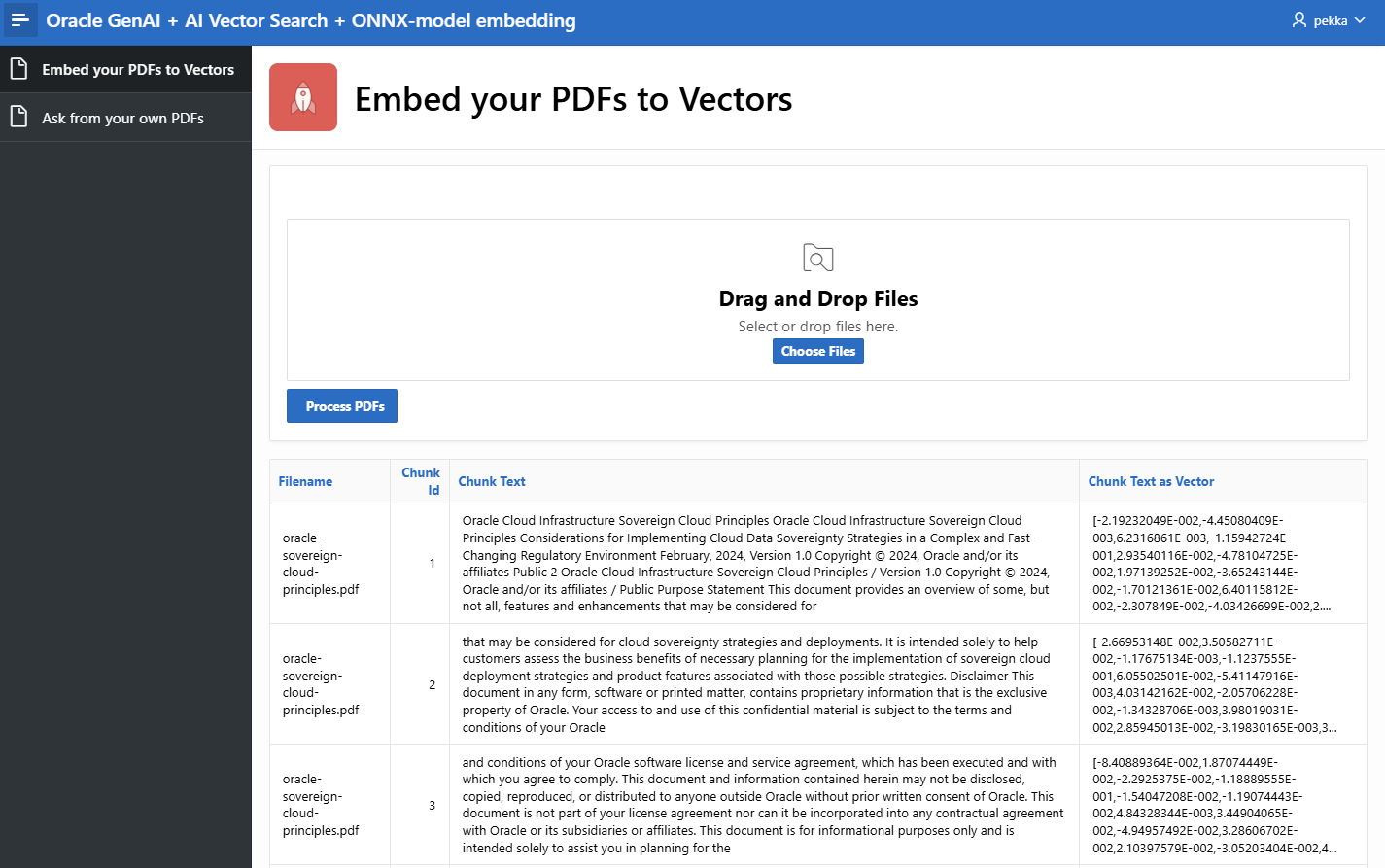

Page 1: Embed your PDFs to Vectors

The user interface is as follows:

The user selects or drags and drops the documents into APEX’s built-in file selector item and presses the Process PDFs button. Here, I have used two Oracle documents:

The button submits the page and starts the processing, after which the results become visible on the same page. The user can check the results and optionally fine-tune or ask the developer to fine-tune the parameters that define how the documents are split into chunks. Vectors are shown here just for demonstration purposes.

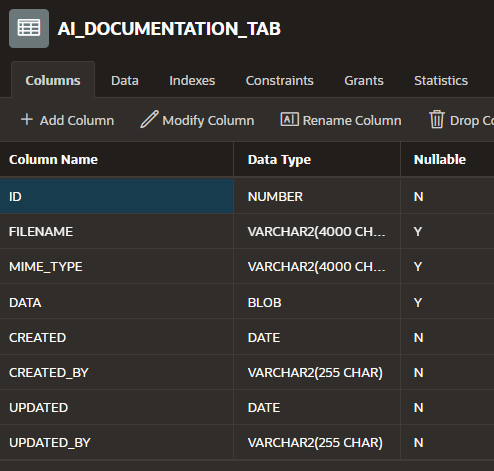

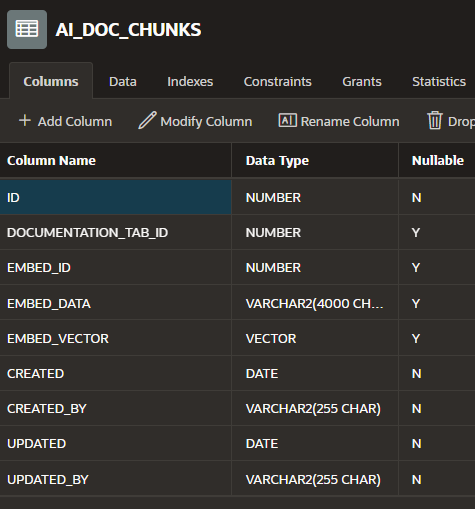

The documents and vectors are stored in two database tables:

Note the new datatype VECTOR of the column EMBED_VECTOR.

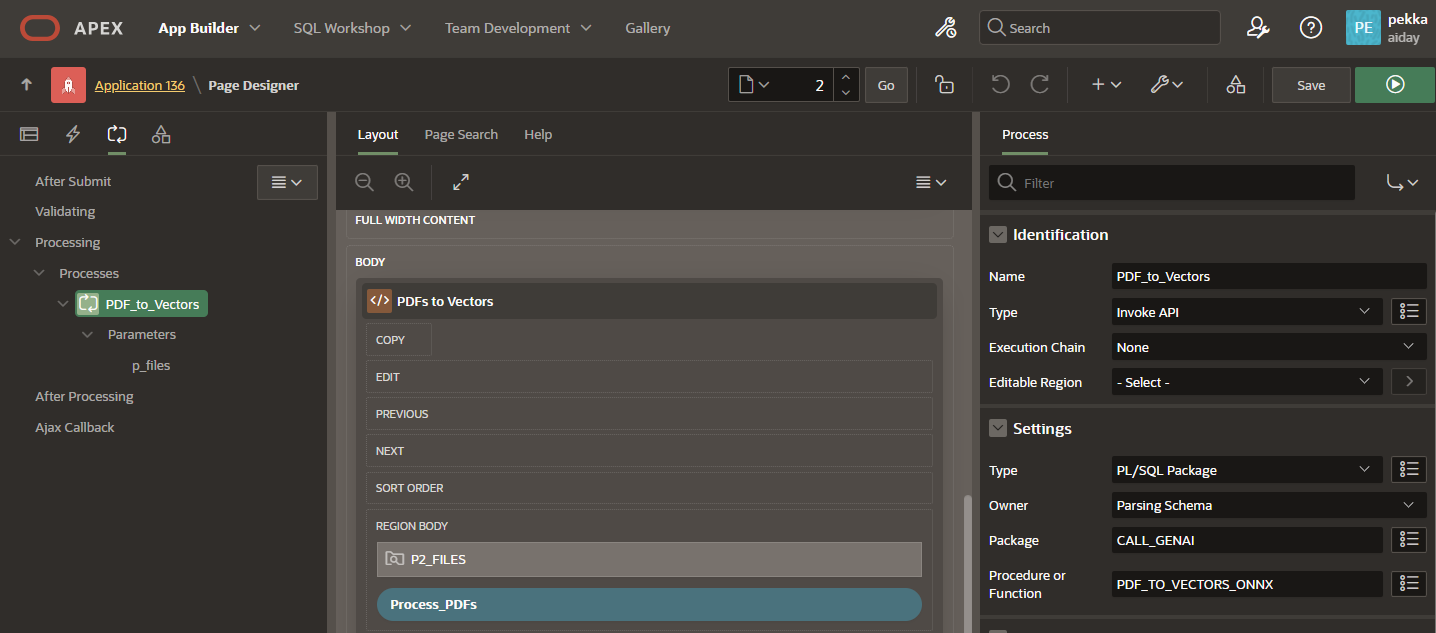

The processing button calls the PL/SQL procedure CALL_GENAI.PDF_TO_VECTORS_ONNX stored in the database.

The package first stores the documents in the database and then performs text extraction, chunking, and embedding all in just one insert statement, using the new dbms_vector_chain package:

insert into ai_doc_chunks

( documentation_tab_id

, embed_id

, embed_data

, embed_vector

)

select

dt.id

, et.embed_id

, et.embed_data

, to_vector(et.embed_vector)

from ai_documentation_tab dt

, dbms_vector_chain.utl_to_embeddings(

dbms_vector_chain.utl_to_chunks(

dbms_vector_chain.utl_to_text(dt.data)

,json('{"by":"words"

,"max":"100"

,"overlap":"5"

,"split by":"recursively"

,"normalize":"all"

}')

)

, json('{"provider":"database", "model":"all_MiniLM_L6_v2"}')

) t

, JSON_TABLE(t.column_value, '$[*]'

COLUMNS ( embed_id NUMBER PATH '$.embed_id'

, embed_data VARCHAR2(4000) PATH '$.embed_data'

, embed_vector CLOB PATH '$.embed_vector'

)

) et;

Page 2: Ask from your own PDFs

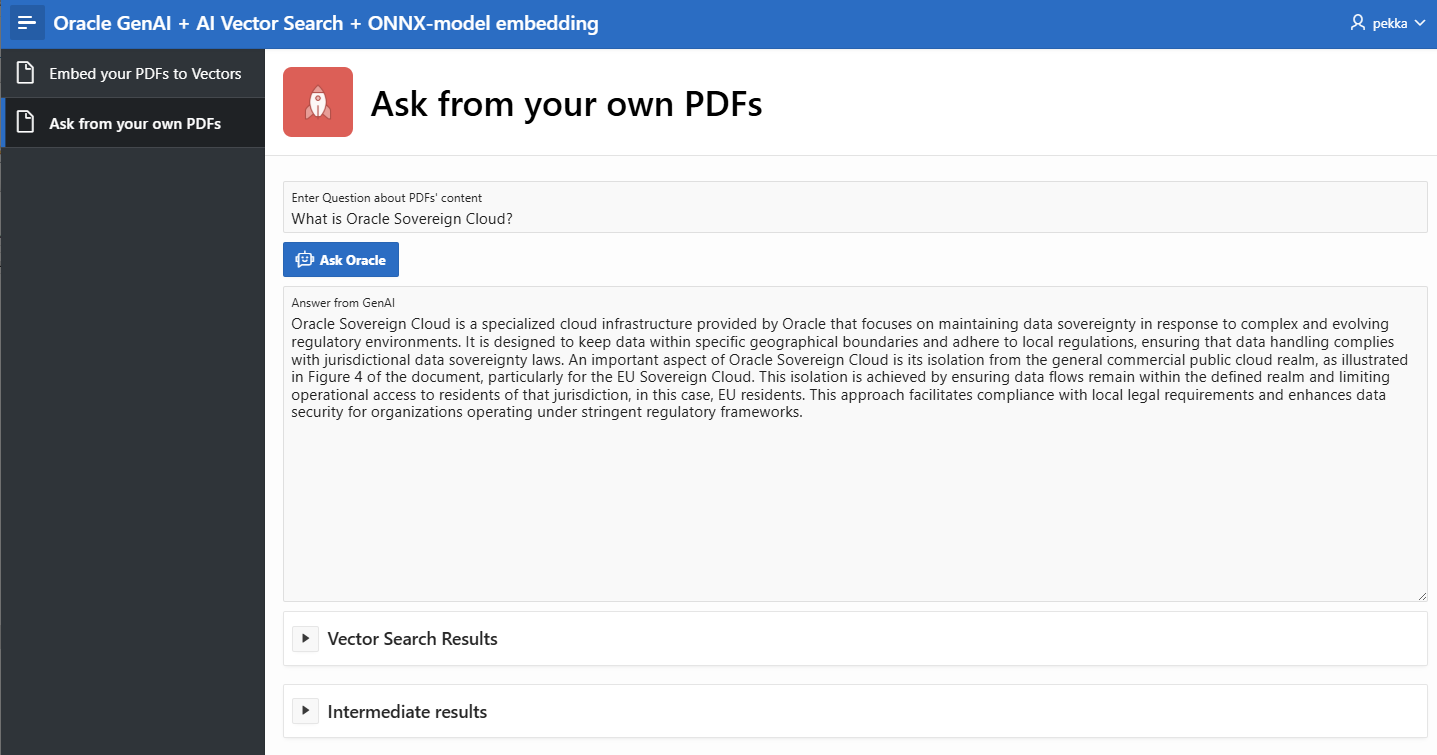

This page is the actual chatbot application page for end users. The user enters the question, presses the Ask Oracle button, and gets the answer:

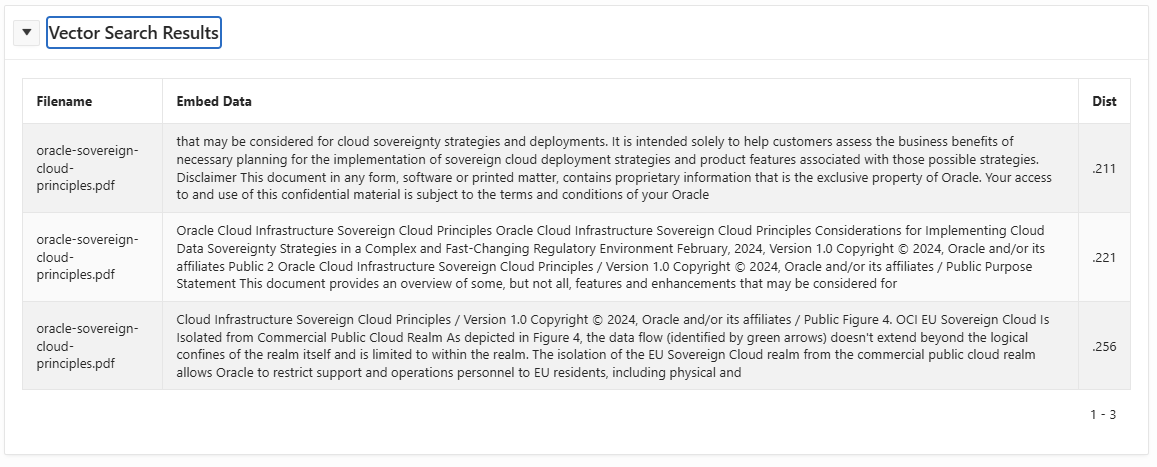

The collapsible regions are just for demonstration purposes. From the Vector Search Results region, the user can check which text chunks AI Vector Search found:

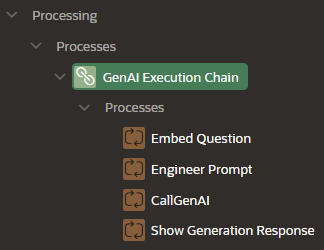

Under the hood, the Ask Oracle button starts an execution chain:

Embed Question-process, as the name implies, vectorizes the user’s question.

Engineer Prompt-process executes the AI Vector Search using the VECTOR_DISTANCE-function:

select

c.embed_data

, round( VECTOR_DISTANCE( c.embed_vector

, to_vector(:P4_PARSED_QUESTION_EMBED_RESPONSE)

, COSINE

), 3) as dist

from ai_doc_chunks c

order by 2

fetch first 3 rows only;

And then, after that, it builds the final prompt that will be sent to the GenAI Service.

The CallGenAI process sends the question and text chunks to the Oracle GenAI service using the apex_ai package:

:P4_GENAI_RESPONSE := apex_ai.chat(

p_prompt => :P4_PROMPT,

p_system_prompt => l_system_prompt,

p_messages => l_messages);

And finally, the Show Generation Response process displays the response in the Answer page item.

This application demonstrates the power of Oracle APEX. It shows how easy it is to build the user interface and integrate with the Oracle Cloud Infrastructure AI services.

Subscribe to my newsletter

Read articles from Pekka Kanerva directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by