How To Use Vision Hand Pose in SwiftUI (Updated)

Carlos Mbendera

Back in the Olden Days, also known as 2023, I wrote an article on Medium documenting how a developer can set up the Vision framework in their iOS app and how I implemented it in Rhythm Snap, my winning submission for the Apple WWDC23 Swift Student Challenge. Since posting that article, a lot has happened in the SwiftUI world: we now have visionOS, Swift 6 and so much more. Likewise, I’ve also grown as a developer and can’t help but cringe at my older article. Thus, I have decided to update it.

Feel free to share some tips and advice in the comments. I’m always happy to learn something new.

What Is The Vision Framework?

Simply put, Apple’s Vision framework allows developers to access common Computer Vision tools in their Swift projects. It’s similar to how you can use Apple Maps with MapKit in your SwiftUI apps, except now we’re doing cool Computer Vision things.

Hand Pose is a subset of the Vision framework that focuses on, well… hands. With it, you can get detailed information on what hands are doing within some form of visual content, whether it be a video, a photo, a live stream from the Camera and so on. In this article, I’ll be focusing on setting up Hand Pose, but here’s a list of other interesting things you can do with the Vision framework:

Face Detection

Text Recognition

Tracking human and animal body poses

Image Analytics

Trajectory, contour, and horizon detection

More…

For the full list of capabilities, visit the Apple Vision documentation.

Sounds Cool. Now Show Me The Code

Before we write a single line of code, we need to decide what type of content we’re working with. If we’re working with frames being streamed from the Camera, then we need to write some logic that connects the Camera to the Vision Framework. On the other hand, if we’re working with local files, we don’t need to write the Camera logic and our main focus is on the Vision side.

This article focuses on the Camera approach. However, I recently wrote an implementation of Face Detection using a saved .mp4 file for another project. It should be a pretty good reference. I might write a follow-up article for that project sooner or later, depending on whether or not there’s demand for it.
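For comparison, here is a minimal sketch of the local-file path, where no Camera logic is needed. It assumes iOS 15 or later (so that `results` is already typed) and that you have loaded a `CGImage` yourself. The function name `detectHands(in:)` is hypothetical and not part of Vision.

```swift
import CoreGraphics
import Vision

// Hypothetical helper: runs a hand pose request on a single still image.
// Assumes iOS 15+, where `results` is typed as [VNHumanHandPoseObservation]?.
func detectHands(in image: CGImage) throws -> [VNHumanHandPoseObservation] {
    let request = VNDetectHumanHandPoseRequest()
    request.maximumHandCount = 1

    // The handler wraps the image; no AVFoundation session is involved.
    let handler = VNImageRequestHandler(cgImage: image, options: [:])
    try handler.perform([request])

    // An empty array means no hand was detected.
    return request.results ?? []
}
```

The request and handler are the same types used for the Camera approach later on. Only the source of the pixels changes.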

Setting Up The Camera

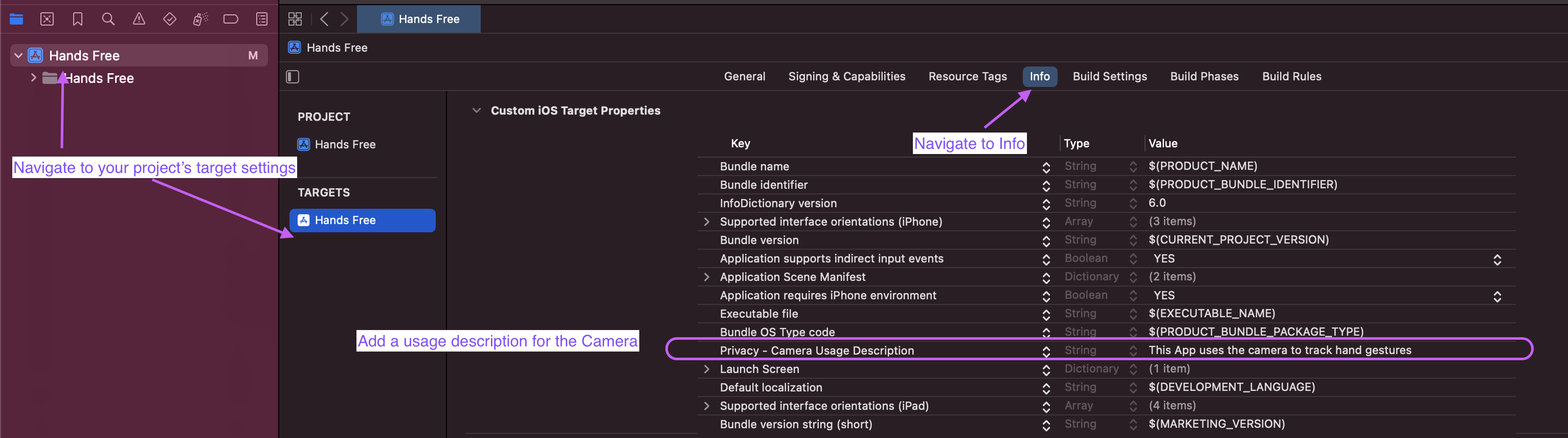

Firstly, to set up the Camera, we need the user’s permission to access it. Thus, we need to tell Xcode what message to present to the user when we ask to use their camera.

Here’s Apple’s guide on doing that. For our use case, you’ll want to:

Navigate to your Project’s target

Go to the Target’s Info

Add Privacy - Camera Usage Description and write a description for it
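If you want to double check that the entry made it into your build, a small debug-time check like the sketch below can help. `NSCameraUsageDescription` is the raw key behind Xcode’s Privacy - Camera Usage Description label. Both function names are hypothetical helpers, not Apple API.

```swift
import Foundation

// Hypothetical helper: reads the camera usage description from Info.plist.
// "NSCameraUsageDescription" is the raw key for
// "Privacy - Camera Usage Description".
func cameraUsageDescription(in bundle: Bundle = .main) -> String? {
    bundle.object(forInfoDictionaryKey: "NSCameraUsageDescription") as? String
}

// Hypothetical debug-only check, e.g. called once when the app launches.
// `assert` is compiled out of release builds.
func assertCameraUsageDescriptionExists() {
    assert(
        cameraUsageDescription()?.isEmpty == false,
        "Add Privacy - Camera Usage Description to the target's Info"
    )
}
```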

At this point, we need to write some code to create a viewfinder and access the Camera. Since our main focus is on Vision, I will reuse and refactor most of my code from the first article. However, if you want a detailed breakdown of what’s going on, this article is rather thorough.

We Need 2 Files:

CameraView

CameraViewController

We’re essentially wrapping a UIKit View Controller for use in SwiftUI. This approach is based on the Apple Documentation for AVFoundation but stripped down a fair amount.

CameraView.swift

    import SwiftUI

    // A SwiftUI view that represents a `CameraViewController`.
    struct CameraView: UIViewControllerRepresentable {
        // A closure that processes an array of CGPoint values.
        var handPointsProcessor: (([CGPoint]) -> Void)

        // Initializer that accepts a closure.
        init(_ processor: @escaping ([CGPoint]) -> Void) {
            self.handPointsProcessor = processor
        }

        // Create the associated `UIViewController` for this SwiftUI view.
        func makeUIViewController(context: Context) -> CameraViewController {
            let camViewController = CameraViewController()
            camViewController.handPointsHandler = handPointsProcessor
            return camViewController
        }

        // Update the associated `UIViewController` for this SwiftUI view.
        // Currently not implemented as we don't need it for this app.
        func updateUIViewController(_ uiViewController: CameraViewController, context: Context) { }
    }

CameraViewController.swift

    import AVFoundation
    import UIKit
    import Vision

    enum CameraErrors: Error {
        case unauthorized, setupError, visionError
    }

    final class CameraViewController: UIViewController {
        // Queue for processing video data.
        private let videoDataOutputQueue = DispatchQueue(label: "CameraFeedOutput", qos: .userInteractive)

        private var cameraFeedSession: AVCaptureSession?

        // Vision callback, used later when processing frames.
        var handPointsHandler: (([CGPoint]) -> Void)?

        // On loading, start the camera feed.
        override func viewDidLoad() {
            super.viewDidLoad()
            do {
                if cameraFeedSession == nil {
                    try setupAVSession()
                }
                // Important: startRunning() blocks, so call it from a background queue rather than the main thread.
                DispatchQueue.global(qos: .userInteractive).async {
                    self.cameraFeedSession?.startRunning()
                }
            } catch {
                print(error.localizedDescription)
            }
        }

        // On disappearing, stop the camera feed.
        override func viewDidDisappear(_ animated: Bool) {
            cameraFeedSession?.stopRunning()
            super.viewDidDisappear(animated)
        }

        // Setting up the AV session.
        private func setupAVSession() throws {
            // Ask for camera permission; the app crashes on purpose if the user denies it.
            if AVCaptureDevice.authorizationStatus(for: .video) != .authorized {
                AVCaptureDevice.requestAccess(for: .video) { authorized in
                    if !authorized {
                        fatalError("Camera access is required")
                    }
                }
            }

            guard let videoDevice = AVCaptureDevice.default(.builtInWideAngleCamera, for: .video, position: .front) else {
                throw CameraErrors.setupError
            }

            guard let deviceInput = try? AVCaptureDeviceInput(device: videoDevice) else {
                throw CameraErrors.setupError
            }

            let session = AVCaptureSession()
            session.beginConfiguration()
            session.sessionPreset = .high

            guard session.canAddInput(deviceInput) else {
                throw CameraErrors.setupError
            }
            session.addInput(deviceInput)

            let dataOutput = AVCaptureVideoDataOutput()
            if session.canAddOutput(dataOutput) {
                session.addOutput(dataOutput)
                dataOutput.alwaysDiscardsLateVideoFrames = true
                dataOutput.setSampleBufferDelegate(self, queue: videoDataOutputQueue)
            } else {
                throw CameraErrors.setupError
            }

            // Show the camera feed as a full-size viewfinder.
            let previewLayer = AVCaptureVideoPreviewLayer(session: session)
            previewLayer.videoGravity = .resizeAspectFill
            view.layer.addSublayer(previewLayer)
            previewLayer.frame = view.bounds

            session.commitConfiguration()
            cameraFeedSession = session
        }
    }
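One thing to be aware of: `requestAccess(for:)` calls its completion handler asynchronously, so `setupAVSession()` keeps configuring the session before the user has answered the prompt. As a rough sketch of an alternative, assuming you are comfortable with Swift concurrency, you could resolve the permission first and only then set up the session. `resolveCameraPermission()` is a hypothetical name, not part of AVFoundation.

```swift
import AVFoundation

// Hypothetical helper: returns true once camera access is available.
// Uses the async variant of AVCaptureDevice.requestAccess(for:).
func resolveCameraPermission() async -> Bool {
    switch AVCaptureDevice.authorizationStatus(for: .video) {
    case .authorized:
        return true
    case .notDetermined:
        // Suspends until the user responds to the system prompt.
        return await AVCaptureDevice.requestAccess(for: .video)
    case .denied, .restricted:
        return false
    @unknown default:
        return false
    }
}
```

You could call this from a `Task` in `viewDidLoad` and run `setupAVSession()` only when it returns `true`, showing a message to the user instead of crashing when it returns `false`.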

Awesome! Now that we have the camera set up, you can add a CameraView to your ContentView.

An alternative and exciting approach for your camera setup is to use Combine. Here’s a Kodeco article if you want to try that out. You will have to write some form of Frame Manager class to handle your Vision calls.

The Vision Stuff

To perform a VNDetectHumanHandPoseRequest, we’re going to make an extension for the View Controller. You can place this code anywhere you want, really. I put it under the View Controller.

    // Extension to handle video data output and process it using Vision.
    extension CameraViewController: AVCaptureVideoDataOutputSampleBufferDelegate {
        func captureOutput(_ output: AVCaptureOutput, didOutput sampleBuffer: CMSampleBuffer, from connection: AVCaptureConnection) {
            // Vision request to detect human hand poses.
            let handPoseRequest = VNDetectHumanHandPoseRequest()
            // Using one hand to make debugging easier. Change this value if you'd like to monitor more than one hand.
            handPoseRequest.maximumHandCount = 1

            let handler = VNImageRequestHandler(cmSampleBuffer: sampleBuffer, orientation: .up, options: [:])

            do {
                try handler.perform([handPoseRequest])

                // Process the first detected hand; report an empty array when no hand is found.
                guard let observation = handPoseRequest.results?.first else {
                    DispatchQueue.main.async {
                        self.handPointsHandler?([])
                    }
                    return
                }

                // Get all hand points.
                let points = try observation.recognizedPoints(.all)

                // Keep points with good confidence and flip the y-axis
                // (Vision's origin is bottom-left; UIKit and SwiftUI use top-left).
                let validPoints = points
                    .filter { $0.value.confidence > 0.9 }
                    .map { CGPoint(x: $0.value.location.x, y: 1 - $0.value.location.y) }

                DispatchQueue.main.async {
                    self.handPointsHandler?(validPoints)
                }
            } catch {
                // Stop the camera feed if the Vision request fails.
                cameraFeedSession?.stopRunning()
            }
        }
    }
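Note that `recognizedPoints(.all)` returns every joint of the hand, not just the tips of the fingers. If you only want the five fingertips, something along these lines should work. This is a sketch, not the code from my project: `fingertipLocations(in:minimumConfidence:)` and `fingertipJoints` are hypothetical names, while the joint names themselves come from Vision.

```swift
import CoreGraphics
import Vision

// The five fingertip joints defined by Vision.
let fingertipJoints: [VNHumanHandPoseObservation.JointName] = [
    .thumbTip, .indexTip, .middleTip, .ringTip, .littleTip
]

// Hypothetical helper: returns only confident fingertip locations,
// with the y-axis flipped to match a top-left origin.
func fingertipLocations(
    in observation: VNHumanHandPoseObservation,
    minimumConfidence: VNConfidence = 0.9
) throws -> [CGPoint] {
    let points = try observation.recognizedPoints(.all)
    return fingertipJoints.compactMap { joint in
        // Skip joints that are missing or below the confidence threshold.
        guard let point = points[joint], point.confidence > minimumConfidence else {
            return nil
        }
        return CGPoint(x: point.location.x, y: 1 - point.location.y)
    }
}
```

Inside `captureOutput`, you could pass the result of this function to `handPointsHandler` instead of the filtered list of all points.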

ContentView.swift

This Content View does not have an overlay or anything; it just has the viewfinder. You can use this as a checkpoint to make sure you’ve got everything set up.

Please note that it is in fact making calls to the Hand Pose API, so you can add some print statements if you’d like, or branch off here and add your own logic.

    import SwiftUI

    struct ContentView: View {
        @State private var fingerTips: [CGPoint] = []

        var body: some View {
            ZStack {
                CameraView { points in
                    fingerTips = points
                }
            }
            .edgesIgnoringSafeArea(.all)
        }
    }

    #Preview {
        ContentView()
    }

Finger Tip Overlay ContentView.swift

To have an overlay, we need to convert the coordinates of the Finger Tips from their position in the video to a relative position in the viewfinder. This makes sense if you think of all the different screen shapes that exist across iPhones, Macs and iPads.

    import SwiftUI
    import Vision

    struct ContentView: View {
        @State private var fingerTips: [CGPoint] = []
        @State private var viewSize: CGSize = .zero

        // This points view is based on code that @LeeShinwon wrote. I thought it was more elegant than the overlay from my last article.
        private var pointsView: some View {
            ForEach(fingerTips.indices, id: \.self) { index in
                let pointWork = fingerTips[index]
                let screenSize = UIScreen.main.bounds.size
                // Scale the normalized point to the screen; x and y are swapped on purpose.
                let point = CGPoint(x: pointWork.y * screenSize.width, y: pointWork.x * screenSize.height)
                Circle()
                    .fill(.orange)
                    .frame(width: 15)
                    .position(x: point.x, y: point.y)
            }
        }

        var body: some View {
            GeometryReader { geometry in
                ZStack {
                    CameraView { points in
                        fingerTips = points
                    }
                    pointsView
                }
            }
            .edgesIgnoringSafeArea(.all)
        }
    }
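The conversion inside `pointsView` can also be pulled out into a small pure function, which makes it easier to reason about and to test. The sketch below mirrors the same math, including the swapped axes. `viewPosition(for:in:)` is a hypothetical name of my own.

```swift
import Foundation

// Hypothetical helper: maps a normalized point (0...1 on both axes) to a
// position inside a view of the given size. The axes are swapped to mirror
// the conversion used in `pointsView`.
func viewPosition(for normalizedPoint: CGPoint, in size: CGSize) -> CGPoint {
    CGPoint(
        x: normalizedPoint.y * size.width,
        y: normalizedPoint.x * size.height
    )
}
```

With this in place, you could pass `geometry.size` from the `GeometryReader` instead of reading `UIScreen.main.bounds.size`, assuming the camera view fills the whole reader.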

Additional Readings: Sample Projects And Helpful Articles

If you’d like to experiment with text and face detection, there is a pretty good and brief article from HackerNoon.

Kodeco provides a very strong introduction to the Hand Pose Framework in this article.

Detailed breakdown of setting up the Camera from CreateWithSwift

CreateWithSwift’s similar take on this topic: Detecting hand pose with the Vision framework

Rhythm Snap - App that taught users how to have better rhythm by using an iPad’s camera to monitor their finger taps in real time

Open Mouth Detection With Vision - Basically checks if any of the faces detected have their mouth open.

A Starter Project by Apple WWDC24 Swift Student Challenge Winner, Shinwon Lee
