Kubernetes Tutorial for Beginners: Mastering the Basics in 1 Hour (Hindi)

MANISH KUMAR

Introduction

Welcome to our complete Kubernetes tutorial for absolute beginners! In this one-hour journey, we will cover the core concepts and main components of Kubernetes that you need to work efficiently with this powerful container orchestration platform. By the end of the tutorial, you will know how to set up a local Kubernetes cluster, understand the syntax of Kubernetes configuration files, and deploy a web application together with its database to a local Kubernetes cluster.

What is Kubernetes?

First, let's understand what Kubernetes is. Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. It makes managing containerized applications much easier for both developers and operators.

Kubernetes does this by providing a robust, scalable platform for deploying, scaling, and managing containerized applications. It abstracts away the underlying infrastructure and provides a consistent API for interacting with the cluster. This abstraction lets developers focus on their application logic instead of the complexities of infrastructure management.

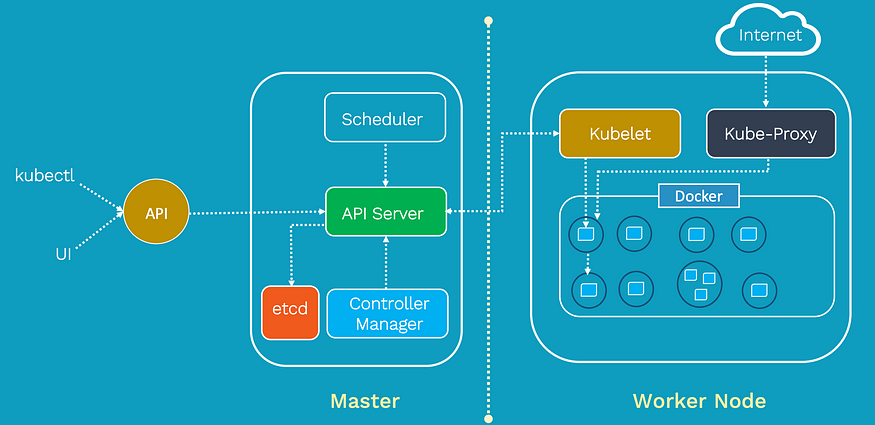

Kubernetes Architecture

Next, let's look at the Kubernetes architecture. Understanding it helps you see how Kubernetes manages containerized applications efficiently.

Master Node

The heart of a Kubernetes cluster is the Master Node (called the control plane node in current Kubernetes documentation). It runs the cluster's control plane and manages the overall state of the entire cluster. The Master Node makes global decisions, such as scheduling new pods, monitoring the health of nodes and pods, and scaling applications according to demand.

Key components of the Master Node:

API Server: The central management point of the cluster. It exposes the Kubernetes API, through which users and other components interact with the cluster.

etcd: A distributed key-value store that holds the cluster's configuration data. All information about the cluster's state is stored here.

Controller Manager: Runs a set of controllers that watch the cluster state through the API Server and take corrective action to maintain the desired state. For example, the ReplicaSet controller ensures that the specified number of pod replicas is running.

Scheduler: Assigns new pods to nodes based on the pods' resource requirements and the resources available on each node. This helps distribute the workload across the cluster.
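To make the Controller Manager and Scheduler roles more concrete, here is a minimal ReplicaSet manifest. The names `demo-rs` and `app: demo` and the resource values are hypothetical. The ReplicaSet controller keeps three pods that match the selector running. The Scheduler places each pod only on a node with enough unreserved CPU and memory to cover the pod's `requests`.

```yaml
# Hypothetical example: names and resource values are illustrative only.
apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: demo-rs
spec:
  replicas: 3                # the ReplicaSet controller keeps 3 matching pods running
  selector:
    matchLabels:
      app: demo
  template:
    metadata:
      labels:
        app: demo            # must match the selector above
    spec:
      containers:
        - name: web
          image: nginx:latest
          resources:
            requests:        # the Scheduler uses requests when choosing a node
              cpu: "100m"
              memory: "64Mi"
```

In practice you rarely create ReplicaSets directly. The Deployment covered later creates and manages one for you.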

Worker Nodes

Worker Nodes are the machines on which containers (grouped into pods) are scheduled and run. They form the cluster's data plane and execute the actual workloads. Every Worker Node runs a few key components:

Kubelet: An agent that runs on every Worker Node and communicates with the control plane through the API Server. It ensures that the containers described in pod specifications are running and healthy.

Container Runtime: Kubernetes supports multiple container runtimes through the Container Runtime Interface (CRI), such as containerd and CRI-O. Kubernetes 1.24 removed dockershim, so Docker Engine can now be used only through the cri-dockerd adapter. The runtime pulls container images and runs the containers on the Worker Node.

Kube Proxy: Manages network communication inside the cluster. It maintains the network rules on each node that route and load-balance traffic for Services.
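You can configure the Kubelet's health checking per container. The following is a minimal sketch that assumes an nginx container serving HTTP on port 80; the pod name `probe-demo` is hypothetical. The Kubelet sends an HTTP GET to `/` every 10 seconds. It restarts the container after three consecutive failures, which is the default `failureThreshold`.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: probe-demo            # hypothetical name
spec:
  containers:
    - name: web
      image: nginx:latest
      ports:
        - containerPort: 80
      livenessProbe:          # executed by the Kubelet on the node running the pod
        httpGet:
          path: /
          port: 80
        initialDelaySeconds: 5   # wait before the first probe
        periodSeconds: 10        # probe every 10 seconds
```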

How do the components interact?

The Master Node and the Worker Nodes communicate through the Kubernetes API Server. Users and other components also interact with the cluster through the API Server.

When a user deploys a new application, the configuration is sent to the API Server, which stores it in etcd.

The Controller Manager continuously watches the cluster state through the API Server. If the actual state deviates from the desired state, it takes corrective action. For example, it acts when a pod that should be running is not.

When a new pod needs to be scheduled, the Scheduler selects a suitable Worker Node based on resource availability and other constraints.

The Kubelet on the selected Worker Node starts the pod's containers. It then reports the pod's status back to the control plane through the API Server.

Main Kubernetes Components: Nodes and Pods

Now let's look at the fundamental building blocks of Kubernetes: nodes and pods.

Nodes:

In Kubernetes, a Node is a worker machine on which containers are deployed and run. It can be a physical or a virtual machine.

Nodes run the cluster's workloads and provide the compute resources those workloads need.

Example:

Suppose a Kubernetes cluster has three Worker Nodes:

- Node A
- Node B
- Node C

Pods:

A Pod is the smallest deployable unit in Kubernetes. It represents one or more tightly coupled containers.

Containers in the same pod share a network namespace and can reach each other on localhost.
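A quick way to see the shared network namespace is a pod whose second container reaches nginx on `localhost`. This is a minimal sketch. The pod name `localhost-demo` and the `busybox` helper container are assumptions for illustration.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: localhost-demo          # hypothetical name
spec:
  containers:
    - name: web
      image: nginx:latest
      ports:
        - containerPort: 80
    - name: client
      image: busybox:latest     # hypothetical helper container
      # Same network namespace as "web", so nginx is reachable on localhost:80.
      # Fetch the page every 10 seconds and print its first 4 lines.
      command: ["sh", "-c", "while true; do wget -q -O- http://localhost:80 | head -n 4; sleep 10; done"]
```

After applying it, `kubectl logs localhost-demo -c client` should show the beginning of nginx's default HTML page. The first attempt may fail if the client starts before nginx is ready.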

Example:

Let's deploy a simple web application that uses an application server and a database. For simplicity, both can be packaged in a single pod. In practice, a database usually runs in its own pod so that it can be managed and scaled independently of the web tier.

- Pod 1: (Web App + Database)

The following sections explore Pods, Services, Deployments, and other fundamental Kubernetes concepts. Together, these will help you orchestrate your containerized applications effectively.

Below is a sample Kubernetes YAML configuration file that deploys a simple web application. It places an application server (nginx) and a database server (MongoDB) in a single pod:

YAML Configuration File (webapp-with-db-pod.yaml):

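```yaml
apiVersion: v1
kind: Pod
metadata:
  name: webapp-with-db
  labels:
    app: my-webapp            # label that Services can select on
spec:
  containers:
    - name: webapp
      image: nginx:latest     # web server on port 80
      ports:
        - containerPort: 80
    - name: database
      image: mongo:latest     # MongoDB, listens on 27017 by default
```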

Explanation:

- Pod name: `webapp-with-db`.
- Containers: the pod runs two containers:
  - `webapp` container: uses the `nginx:latest` image, a popular web server and reverse proxy. It declares container port 80.
  - `database` container: uses the `mongo:latest` image, a widely used NoSQL database.
- Shared network namespace: both containers share the same network namespace and can reach each other via `localhost`.

Why Pods instead of individual containers?

Grouping containers: Pods group logically related containers, which simplifies scheduling and management.

Shared resources: Containers in a pod share resources such as the network and volumes.

Atomic unit: A pod is an atomic deployment unit, which makes scaling and management easier.

Scheduling & Affinity: Kubernetes schedules pods onto nodes, not individual containers, so all containers of a pod always run on the same node.
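To illustrate the shared-volume point, here is a minimal sketch in which one container writes a file into an `emptyDir` volume and nginx serves that file from the same volume. All names are hypothetical. An `emptyDir` volume exists only as long as the pod does.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: shared-volume-demo       # hypothetical name
spec:
  volumes:
    - name: shared-html
      emptyDir: {}               # scratch volume, deleted together with the pod
  containers:
    - name: web
      image: nginx:latest
      volumeMounts:
        - name: shared-html
          mountPath: /usr/share/nginx/html   # nginx's default document root
    - name: writer
      image: busybox:latest
      volumeMounts:
        - name: shared-html
          mountPath: /html                   # same volume, different path
      # Overwrite index.html with the current date every 5 seconds;
      # nginx serves the same file from its document root.
      command: ["sh", "-c", "while true; do date > /html/index.html; sleep 5; done"]
```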

Updated YAML Configuration with Service (webapp-with-db-pod-and-service.yaml):

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: webapp-with-db
  labels:
    app: my-webapp
spec:
  containers:
    - name: webapp
      image: nginx:latest
      ports:
        - containerPort: 80
    - name: database
      image: mongo:latest
---
apiVersion: v1
kind: Service
metadata:
  name: webapp-service
spec:
  selector:
    app: my-webapp        # routes traffic to pods carrying this label
  ports:
    - protocol: TCP
      port: 80            # port exposed by the Service
      targetPort: 80      # port of the webapp container
```

Service Explanation:

- Service name: `webapp-service`.
- Selector: targets pods that carry the label `app: my-webapp`.
- Port mapping:
  - `port`: the port the Service exposes inside the cluster (80).
  - `targetPort`: the port of the webapp container inside the pod (80).

Service Use Case:

Other pods in the cluster can now reach the web application through the Service name `webapp-service`. The Service load-balances traffic across all pods that match its selector and gives them a stable address. This helps keep the application available as pods are replaced or scaled. The Service itself does not scale pods; resources such as Deployments handle scaling.

In the next sections we will explore Deployments, scaling, and other Kubernetes components so that you can manage your containerized applications effectively.

Ingress:

A Service enables internal communication between pods inside the cluster. A Kubernetes Ingress, by contrast, exposes your Services to clients outside the cluster. It acts as an external entry point for HTTP(S) traffic and lets you configure routing rules and load balancing for incoming requests.

YAML Configuration File (webapp-with-db-pod-service-and-ingress.yaml):

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: webapp-ingress
spec:
  rules:
    - host: mywebapp.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: webapp-service
                port:
                  number: 80
```

Explanation:

- Ingress name: `webapp-ingress`.
- Host field: defines the domain name through which the web application can be reached from outside the cluster. Replace `mywebapp.example.com` with your own domain name. The `host` field accepts DNS names, not IP addresses.
- Paths section: defines the routing rules. In this example, the `/` prefix with `pathType: Prefix` matches every path, so all requests for this host are forwarded to `webapp-service`.
- Backend: defines the target Service that receives the traffic, here `webapp-service` on port 80.

Note: The Ingress resource only works if an Ingress controller is deployed in your Kubernetes cluster. The controller implements the Ingress rules and manages external traffic to your Services.
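On Minikube, you can install the NGINX Ingress controller with `minikube addons enable ingress`. An Ingress can then name its controller explicitly through `spec.ingressClassName`. The sketch below assumes the controller registered an IngressClass called `nginx`, which is the name the Minikube addon typically uses. You can check the available classes with `kubectl get ingressclass`.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: webapp-ingress
spec:
  ingressClassName: nginx        # assumption: IngressClass created by the controller
  rules:
    - host: mywebapp.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: webapp-service
                port:
                  number: 80
```

To reach `mywebapp.example.com` locally, that name still has to resolve to the cluster. One option is a hosts-file entry for the address printed by `minikube ip`. The exact steps depend on the Minikube driver.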

ConfigMap:

A Kubernetes ConfigMap stores non-confidential configuration data. Pods can consume this data as environment variables or mount it as configuration files. Because configuration is kept separate from the container image, you can update it without rebuilding the image.
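Besides environment variables, a ConfigMap can be mounted as files. In the minimal sketch below, the pod name and the mount path `/etc/webapp-config` are hypothetical. Each key in the `webapp-config` ConfigMap (defined in the YAML below) becomes a file, so `/etc/webapp-config/WEBAPP_ENV` contains `production`.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: configmap-volume-demo      # hypothetical name
spec:
  volumes:
    - name: config
      configMap:
        name: webapp-config        # one file per key in the ConfigMap
  containers:
    - name: app
      image: busybox:latest
      # List the files (one per key), print WEBAPP_ENV's content,
      # then stay alive for an hour so the pod can be inspected.
      command: ["sh", "-c", "ls /etc/webapp-config && cat /etc/webapp-config/WEBAPP_ENV && sleep 3600"]
      volumeMounts:
        - name: config
          mountPath: /etc/webapp-config   # hypothetical path
          readOnly: true
```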

Updated YAML Configuration File (webapp-with-db-pod-service-ingress-configmap.yaml):

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: webapp-with-db
  labels:
    app: my-webapp
spec:
  containers:
    - name: webapp
      image: nginx:latest
      ports:
        - containerPort: 80
      envFrom:
        - configMapRef:
            name: webapp-config   # every key becomes an environment variable
    - name: database
      image: mongo:latest
---
apiVersion: v1
kind: Service
metadata:
  name: webapp-service
spec:
  selector:
    app: my-webapp
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: webapp-config
data:
  WEBAPP_ENV: "production"
  DATABASE_URL: "mongodb://database-service:27017/mydb"
```

Explanation:

- ConfigMap name: `webapp-config`.
- Data section: contains key-value pairs that represent the web application's configuration data.
- `envFrom` in the Pod definition: a reference to the `webapp-config` ConfigMap was added to the `webapp` container. It injects the ConfigMap's key-value pairs into the container as environment variables.

Result: The webapp container can read the environment variables `WEBAPP_ENV` and `DATABASE_URL` and use them to configure the application. The stock `nginx` image ignores these variables; a real application image would read them at startup.
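`DATABASE_URL` points at a host called `database-service`, but none of the manifests here defines a Service with that name, so the host would not resolve. The minimal sketch below adds one. It assumes MongoDB keeps running in the `my-webapp` pod on its default port 27017.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: database-service      # must match the host in DATABASE_URL
spec:
  selector:
    app: my-webapp            # the pod that runs the database container
  ports:
    - protocol: TCP
      port: 27017             # port used in DATABASE_URL
      targetPort: 27017       # MongoDB's default listening port
```

Because the webapp container shares the pod's network namespace with MongoDB, it could also use `localhost:27017`. The Service becomes necessary once the database moves into its own pod.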

Secret:

Kubernetes Secrets store sensitive information, such as passwords, API keys, or TLS certificates, separately from ordinary configuration. Values in a Secret's `data` field are base64-encoded. Base64 is an encoding, not encryption, so anyone who can read the Secret can decode it. Secrets are also stored unencrypted in etcd unless encryption at rest is configured. Secrets can be mounted into pods as files or used as environment variables.

Updated YAML Configuration File (webapp-with-db-pod-service-ingress-configmap-secret.yaml):

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: webapp-with-db
  labels:
    app: my-webapp
spec:
  containers:
    - name: webapp
      image: nginx:latest
      ports:
        - containerPort: 80
      envFrom:
        - configMapRef:
            name: webapp-config
    - name: database
      image: mongo:latest
      env:
        - name: MONGO_INITDB_ROOT_USERNAME
          valueFrom:
            secretKeyRef:           # read the value from the db-credentials Secret
              name: db-credentials
              key: username
        - name: MONGO_INITDB_ROOT_PASSWORD
          valueFrom:
            secretKeyRef:
              name: db-credentials
              key: password
---
apiVersion: v1
kind: Service
metadata:
  name: webapp-service
spec:
  selector:
    app: my-webapp
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: webapp-config
data:
  WEBAPP_ENV: "production"
  DATABASE_URL: "mongodb://database-service:27017/mydb"
---
apiVersion: v1
kind: Secret
metadata:
  name: db-credentials
type: Opaque
data:
  username: <base64-encoded-username>   # placeholder: replace before applying
  password: <base64-encoded-password>   # placeholder: replace before applying
```

Explanation:

- Secret name: `db-credentials`.
- Data section: stores the base64-encoded values for the database username and password. Replace `<base64-encoded-username>` and `<base64-encoded-password>` with actual base64-encoded values.
- Secret references in the Pod definition: the database container defines the environment variables `MONGO_INITDB_ROOT_USERNAME` and `MONGO_INITDB_ROOT_PASSWORD`, and both reference the `db-credentials` Secret.

Result: Sensitive data is managed through Secrets, so it does not have to be written directly into configuration files or container images.
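Instead of encoding values by hand (for example, `echo -n admin | base64` prints `YWRtaW4=`), a Secret can use the `stringData` field. You write plain-text values there, and the API server stores them base64-encoded under `data`. This is a sketch with placeholder credentials; do not commit real values to version control.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: db-credentials
type: Opaque
stringData:                   # plain-text input; stored base64-encoded in data
  username: admin             # placeholder value
  password: change-me         # placeholder value
```

The equivalent imperative command is `kubectl create secret generic db-credentials --from-literal=username=admin --from-literal=password=change-me`.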

We now have a fully configured web application, with both non-sensitive and sensitive configuration, ready to be deployed.

Let's build on the previous example and use a Kubernetes Volume to provide persistent storage for our database. Volumes keep data across container restarts and give the containers in a pod a way to share data.

Volume: A Kubernetes Volume is a directory that is accessible to the containers in a pod that mount it. It decouples storage from the container's own filesystem, so data survives container restarts. A volume backed by a PersistentVolume also outlives the pod itself, so the data is still available when the pod is deleted and recreated.

In this example, we use a PersistentVolumeClaim (PVC) to dynamically provision a PersistentVolume (PV) and attach it to our database container.

Updated YAML File with Volume:

```yaml
# webapp-with-db-pod-service-ingress-configmap-secret-volume.yaml
apiVersion: v1
kind: Pod
metadata:
  name: webapp-with-db
  labels:
    app: my-webapp
spec:
  containers:
    - name: webapp
      image: nginx:latest
      ports:
        - containerPort: 80
      envFrom:
        - configMapRef:
            name: webapp-config
    - name: database
      image: mongo:latest
      env:
        - name: MONGO_INITDB_ROOT_USERNAME
          valueFrom:
            secretKeyRef:
              name: db-credentials
              key: username
        - name: MONGO_INITDB_ROOT_PASSWORD
          valueFrom:
            secretKeyRef:
              name: db-credentials
              key: password
      volumeMounts:
        - name: db-data
          mountPath: /data/db       # MongoDB's default data directory
  volumes:
    - name: db-data
      persistentVolumeClaim:
        claimName: database-pvc     # PVC defined at the end of this file
---
apiVersion: v1
kind: Service
metadata:
  name: webapp-service
spec:
  selector:
    app: my-webapp
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: webapp-config
data:
  WEBAPP_ENV: "production"
  DATABASE_URL: "mongodb://database-service:27017/mydb"
---
apiVersion: v1
kind: Secret
metadata:
  name: db-credentials
type: Opaque
data:
  username: <base64-encoded-username>
  password: <base64-encoded-password>
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: database-pvc
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
```

Explanation:

- PersistentVolumeClaim (PVC): we added a PVC named `database-pvc` that requests 1Gi of storage.
- Volume definition: the pod defines a volume named `db-data` that is bound to `database-pvc`.
- Volume mount: the volume is mounted at `/data/db` in the database container. Any data written to this path is stored in the `db-data` volume.

With this setup, MongoDB's data persists and remains available even if the database container restarts or the pod is recreated.
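The PVC above relies on dynamic provisioning, so the cluster needs a default StorageClass; Minikube ships one named `standard`. The sketch below names the class explicitly. Check which classes your cluster offers with `kubectl get storageclass` and adjust the name to match.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: database-pvc
spec:
  storageClassName: standard   # assumption: Minikube's default StorageClass
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
```

Once a volume has been provisioned, `kubectl get pvc database-pvc` should report the status `Bound`.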

Deployment:

A Deployment is a higher-level abstraction that manages a set of identical pods through a ReplicaSet that it creates. It is ideal for stateless applications, where individual pods are interchangeable.

Updated YAML File for Deployment:

```yaml
# webapp-with-db-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: webapp-deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      app: my-webapp
  template:
    metadata:
      labels:
        app: my-webapp
    spec:
      containers:
        - name: webapp
          image: nginx:latest
          ports:
            - containerPort: 80
          envFrom:
            - configMapRef:
                name: webapp-config
        - name: database
          image: mongo:latest
          env:
            - name: MONGO_INITDB_ROOT_USERNAME
              valueFrom:
                secretKeyRef:
                  name: db-credentials
                  key: username
            - name: MONGO_INITDB_ROOT_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: db-credentials
                  key: password
          volumeMounts:
            - name: db-data
              mountPath: /data/db
      volumes:
        - name: db-data
          persistentVolumeClaim:
            claimName: database-pvc
---
apiVersion: v1
kind: Service
metadata:
  name: webapp-service
spec:
  selector:
    app: my-webapp
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: webapp-config
data:
  WEBAPP_ENV: "production"
  DATABASE_URL: "mongodb://database-service:27017/mydb"
---
apiVersion: v1
kind: Secret
metadata:
  name: db-credentials
type: Opaque
data:
  username: <base64-encoded-username>
  password: <base64-encoded-password>
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: database-pvc
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
```

Explanation:

- Deployment resource: we replaced the Pod resource with a Deployment resource and set `replicas: 3`, so the Deployment keeps three pod instances running.
- High availability: if a pod fails, the Deployment (through its ReplicaSet) creates a new pod to replace it.

This deploys our application with persistent storage and multiple replicas.
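One caveat applies to this Deployment. Every replica runs its own MongoDB container, and all three claim the same `ReadWriteOnce` PVC. Such a volume can be mounted read-write by only one node, and several MongoDB processes pointed at one data directory would conflict. Deployments suit stateless workloads, so a safer sketch keeps only the web tier in the Deployment and moves the database into the StatefulSet described next:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: webapp-deployment
spec:
  replicas: 3                     # safe to scale: the web tier holds no state
  selector:
    matchLabels:
      app: my-webapp
  template:
    metadata:
      labels:
        app: my-webapp
    spec:
      containers:
        - name: webapp            # stateless web tier only; no database container
          image: nginx:latest
          ports:
            - containerPort: 80
          envFrom:
            - configMapRef:
                name: webapp-config
```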

StatefulSet:

A Kubernetes StatefulSet is used for stateful applications, where each pod has a unique identity and its own persistent storage. StatefulSets provide stable network identities. They suit databases, key-value stores, and other applications that need unique persistent storage and ordered deployment and scaling.

Updated YAML file using StatefulSet:

```yaml
# webapp-with-db-deployment-and-statefulset.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: webapp-deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      app: my-webapp
  template:
    metadata:
      labels:
        app: my-webapp
    spec:
      # ... (same as the previous Deployment config)
---
apiVersion: v1
kind: Service
metadata:
  name: webapp-service
spec:
  selector:
    app: my-webapp
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: webapp-config
data:
  WEBAPP_ENV: "production"
  DATABASE_URL: "mongodb://database-service:27017/mydb"
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: database-statefulset
spec:
  serviceName: database          # name of the governing (headless) Service
  replicas: 1
  selector:
    matchLabels:
      app: database
  template:
    metadata:
      labels:
        app: database
    spec:
      containers:
        - name: database
          image: mongo:latest
          env:
            - name: MONGO_INITDB_ROOT_USERNAME
              valueFrom:
                secretKeyRef:
                  name: db-credentials
                  key: username
            - name: MONGO_INITDB_ROOT_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: db-credentials
                  key: password
          volumeMounts:
            - name: database-data    # per-pod PVC created from volumeClaimTemplates
              mountPath: /data/db
  volumeClaimTemplates:
    - metadata:
        name: database-data
      spec:
        accessModes: [ "ReadWriteOnce" ]
        resources:
          requests:
            storage: 1Gi
```

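Explanation:

- A StatefulSet named `database-statefulset` was added to the YAML file to manage the database.
- `replicas: 1` runs a single database instance. A StatefulSet can be scaled by changing `replicas`, but each new pod gets its own empty volume. Running MongoDB with several replicas therefore also requires configuring a MongoDB replica set.
- The StatefulSet gives each pod a stable hostname and identity (here `database-statefulset-0`). Its `volumeClaimTemplates` section creates a separate PVC for each pod (here `database-data-database-statefulset-0`).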
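The StatefulSet's `serviceName: database` refers to a governing Service that the file above does not define. The minimal sketch below supplies it as a headless Service (`clusterIP: None`), assuming MongoDB's default port 27017. With this Service in place, the first pod is reachable at the DNS name `database-statefulset-0.database` from within the same namespace. To use it, the webapp's `DATABASE_URL` would need that host, for example `mongodb://database-statefulset-0.database:27017/mydb`, instead of `database-service`.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: database              # must equal the StatefulSet's serviceName
spec:
  clusterIP: None             # headless: DNS returns the pod addresses directly
  selector:
    app: database             # matches the StatefulSet's pod labels
  ports:
    - name: mongodb
      port: 27017
      targetPort: 27017
```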

Deployment Configuration Example:

```yaml
# webapp-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: webapp-deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      app: my-webapp
  template:
    metadata:
      labels:
        app: my-webapp
    spec:
      containers:
        - name: webapp
          image: nginx:latest
          ports:
            - containerPort: 80
          envFrom:
            - configMapRef:
                name: webapp-config
        - name: database
          image: mongo:latest
          env:
            - name: MONGO_INITDB_ROOT_USERNAME
              valueFrom:
                secretKeyRef:
                  name: db-credentials
                  key: username
            - name: MONGO_INITDB_ROOT_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: db-credentials
                  key: password
          volumeMounts:
            - name: db-data
              mountPath: /data/db
      volumes:
        - name: db-data
          persistentVolumeClaim:
            claimName: database-pvc
```

Commands to Apply Configuration:

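```shell
# Deployment
kubectl apply -f webapp-deployment.yaml
# Service
kubectl apply -f webapp-service.yaml
# ConfigMap
kubectl apply -f webapp-config.yaml
# Secret
kubectl apply -f db-credentials-secret.yaml
# StatefulSet
kubectl apply -f database-statefulset.yaml
```

These commands assume each resource is saved in its own file under the name shown. If several resources live in one file separated by `---`, as in the examples above, a single `kubectl apply -f <filename>` applies all of them.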

Set Up a K8s Cluster Locally with Minikube and kubectl:

Install Minikube:

Minikube needs a driver to run the cluster. The driver can be a container engine such as Docker or a hypervisor such as VirtualBox.

For installation instructions, see the official Minikube website.

After installing, verify the installation:

```shell
minikube version
```

Start the cluster:

```shell
minikube start
```

View the cluster information:

```shell
kubectl cluster-info
```

Check the nodes:

```shell
kubectl get nodes
```

Deploy a test application:

Create a YAML file named `test-app.yaml` with the following content:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: test-app-pod
spec:
  containers:
    - name: test-app-container
      image: nginx:latest
      ports:
        - containerPort: 80
```

Deploy it:

```shell
kubectl apply -f test-app.yaml
```

Verify that the pod is running:

```shell
kubectl get pods
```

Access the application:

```shell
kubectl port-forward test-app-pod 8080:80
```

Then open this URL in your browser: http://localhost:8080

Interacting with a Kubernetes Cluster

This section shows how to interact with your Kubernetes cluster using kubectl commands. You will learn how to inspect and manage your applications, pods, and other resources in the cluster.

Most interaction with a Kubernetes cluster happens through the kubectl command-line tool. kubectl lets you inspect and manage the Kubernetes resources in the cluster, such as pods, deployments, and services. Below are some common kubectl commands to get you started:

Inspecting Cluster Information:

To show the addresses of the control plane and cluster services:

```shell
kubectl cluster-info
```

To list the nodes in your cluster:

```shell
kubectl get nodes
```

Working with Resources:

To list resources (pods, services, deployments, etc.) in a namespace:

```shell
kubectl get <resource>
```

Replace `<resource>` with the type of resource you want to list, for example `pods`, `services`, or `deployments`.

To see detailed information about a specific resource:

```shell
kubectl describe <resource> <resource_name>
```

Replace `<resource>` with the resource type and `<resource_name>` with the name of the specific resource instance.

Managing Resources:

To create or update Kubernetes resources from a YAML configuration file:

```shell
kubectl apply -f <filename>
```

Replace `<filename>` with the name of your YAML configuration file.

To delete a resource:

```shell
kubectl delete <resource> <resource_name>
```

Replace `<resource>` with the resource type and `<resource_name>` with the name of the specific resource instance.

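Interacting with Pods:

To list the pods in a namespace:

```shell
kubectl get pods
```

To view the logs of a specific pod:

```shell
kubectl logs <pod_name>
```

For a pod with several containers, such as `webapp-with-db`, add `-c <container_name>` to choose the container.

To open a shell inside a pod:

```shell
kubectl exec -it <pod_name> -- /bin/bash
```

This opens an interactive shell inside the specified pod. If the image does not include bash, use `/bin/sh` instead.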

Interacting with Services:

To list the services in a namespace:

```shell
kubectl get services
```

To access a service from your local machine:

```shell
kubectl port-forward service/<service_name> <local_port>:<service_port>
```

Replace `<service_name>` with the name of the Service, `<local_port>` with a port on your local machine, and `<service_port>` with the Service port you want to reach. The `service/` prefix is required; without it, kubectl treats the name as a pod.

These basic kubectl commands will help you interact with your Kubernetes cluster. As you become more familiar with Kubernetes, you can explore additional commands and options to manage and monitor your applications effectively. For detailed information, refer to the Kubernetes documentation.

Conclusion

Congratulations! You have completed our Kubernetes tutorial for beginners. You have covered everything from the core concepts of Kubernetes to a practical deployment in a local cluster. You are now ready to dive deeper into Kubernetes and to deploy and manage your containerized applications at scale. Happy container orchestration! 🎉


Written by

MANISH KUMAR


Hey, My name is Manish Maurya and I am a DevOps⚙️ Engineer who is deeply committed to improving the efficiency and effectiveness of software development and deployment processes. My extensive knowledge of cloud computing, containerization, and automation enables me to drive innovation and deliver high-quality results. I remain dedicated to continuously expanding my skills by staying up-to-date with the latest DevOps tools and methodologies, and I'm always looking for new ways to optimize workflows and improve software delivery.🤖 :)