Beyond Chat: Leveraging LLMs to Automate Dataset Creation

Shlok Anand

Let’s go beyond chat and into the world of smart dataset creation!

If you’ve ever worked on a machine learning project, you probably know the pain of finding the right dataset. High-quality labeled data is the lifeblood of any model, but getting your hands on it isn’t always easy. Sometimes it’s locked behind privacy restrictions; other times it’s too expensive or time-consuming to collect and label, or it simply doesn’t exist. That’s where synthetic data comes into play. Instead of relying on real-world examples, we can generate artificial data that looks and behaves like the real thing. It’s a clever workaround, especially when real data just isn’t practical to gather. And the really fun part? You can generate that data just by having a conversation with an AI!

Thanks to large language models like GPT-4, that’s entirely possible. With the right prompts, you can create full datasets: product reviews with sentiment labels, question-answer pairs, even structured JSON objects, with no scrapers, no spreadsheets, and no manual tagging. In this post, we’re going beyond chat. I first tried this method during a project where I needed lots of labeled data in a short time, and traditional methods just weren’t cutting it. I’ll show you how I’ve been using LLMs to automate dataset creation, the prompting techniques that worked for me, and what I’ve learned from experimenting with this process. Let’s dive in!

The Role of LLMs in Data Generation

Traditionally, generating synthetic data meant writing custom scripts, using simulation models, or manually crafting datasets, all of which take time, domain expertise, and a lot of repetitive effort. LLMs change the game.

With the right prompt, an LLM can generate structured, diverse, and labeled data across a wide range of tasks, from sentiment-labeled reviews to JSON-formatted named-entity recognition (NER) data. The beauty is that you’re not just generating words; you’re generating data that’s ready to plug into machine learning pipelines.

In my case, I didn’t just use LLMs for a few test examples; I leaned on them to produce scalable, task-specific datasets, using prompts that evolved over time. This wasn’t about copy-pasting one good output. It was about designing prompt templates, testing different variations, and handling format validation and output cleaning downstream.

For example, I started with zero-shot prompts, then experimented with few-shot formats to improve consistency. I also had the LLMs output directly in JSON and CSV-ready formats, which made it easy to feed the results into model-training scripts. This approach turns LLMs from a novelty into a real tool, one that can slot into any data-centric pipeline when traditional data collection isn’t feasible.
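To make the cleaning step concrete, here is a minimal sketch, assuming the model returns a JSON array that may be wrapped in a Markdown code fence (chat models often add one). The helper names `parse_llm_json` and `records_to_csv` are hypothetical, chosen for illustration; they are not from any library.

```python
import csv
import io
import json

FENCE = "`" * 3  # Markdown code-fence marker, built this way to keep the snippet copy-paste safe


def parse_llm_json(text):
    """Parse a JSON array from raw model output, tolerating a surrounding Markdown fence."""
    cleaned = text.strip()
    if cleaned.startswith(FENCE):
        # Drop the opening fence line (e.g. one tagged "json") and the closing fence
        cleaned = cleaned.split("\n", 1)[1] if "\n" in cleaned else ""
        if cleaned.rstrip().endswith(FENCE):
            cleaned = cleaned.rstrip()[: -len(FENCE)]
    data = json.loads(cleaned)  # raises json.JSONDecodeError on malformed output
    if not isinstance(data, list):
        raise ValueError("expected a JSON array of records")
    return data


def records_to_csv(records, fieldnames):
    """Serialize a list of dicts to CSV text, ignoring any unexpected extra keys."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, extrasaction="ignore")
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()
```

Keeping parsing and serialization separate means the same validated records can be written to JSON for one pipeline and to CSV for another.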

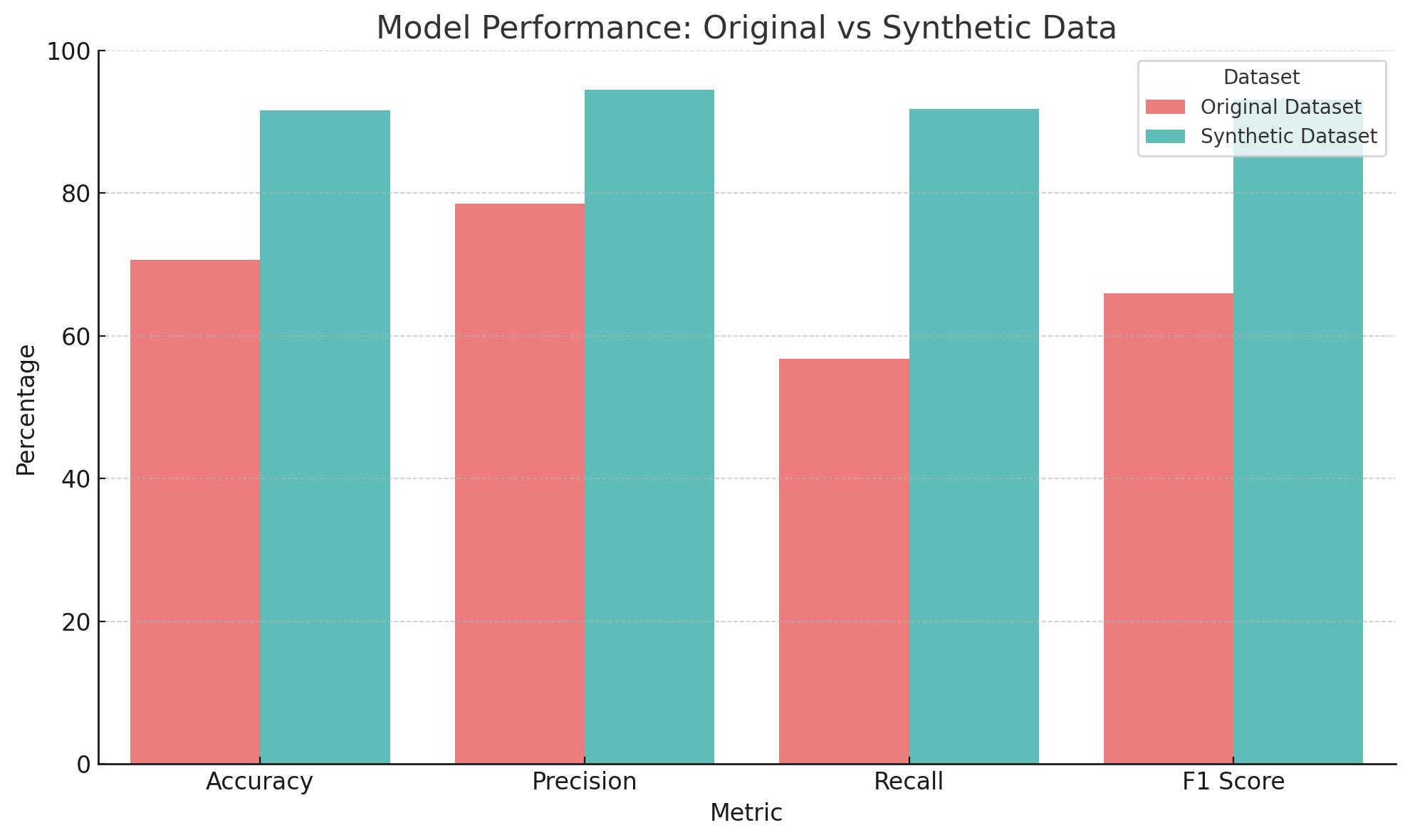

The payoff became clear when I compared models trained on the original imbalanced dataset and on the LLM-generated synthetic dataset: the difference was striking!

To really test the impact of LLM-generated synthetic data, I ran a comparison between two models:

• One trained on the original, imbalanced dataset

• Another trained on the synthetic dataset created entirely through prompting techniques

Here’s how they stack up:

The results speak for themselves: across every major performance metric, the model trained on synthetic data outperformed the model trained on the original data, and not just slightly, but significantly.
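The exact metrics aren’t listed here, so the following is only a sketch of one reasonable way to score such a comparison: run both models on the same held-out test set (ideally real examples, so the synthetic-data model isn’t graded on its own kind of data) and compare accuracy alongside per-class and macro-averaged precision, recall, and F1. Macro averaging weights every class equally, which is what exposes the effect of class imbalance. The function name `classification_scores` is hypothetical; scikit-learn’s `classification_report` produces the same numbers if you already use that library.

```python
from collections import Counter


def classification_scores(y_true, y_pred):
    """Return accuracy, per-class precision/recall/F1, and their macro averages."""
    labels = sorted(set(y_true) | set(y_pred))
    tp, fp, fn = Counter(), Counter(), Counter()
    for truth, pred in zip(y_true, y_pred):
        if truth == pred:
            tp[truth] += 1
        else:
            fp[pred] += 1   # predicted this class, but it was wrong
            fn[truth] += 1  # the true class was missed

    per_class = {}
    for label in labels:
        p_den = tp[label] + fp[label]
        r_den = tp[label] + fn[label]
        precision = tp[label] / p_den if p_den else 0.0
        recall = tp[label] / r_den if r_den else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        per_class[label] = {"precision": precision, "recall": recall, "f1": f1}

    # Unweighted mean over classes, so minority classes count as much as majority ones
    macro = {
        metric: sum(scores[metric] for scores in per_class.values()) / len(per_class)
        for metric in ("precision", "recall", "f1")
    }
    accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
    return {"accuracy": accuracy, "per_class": per_class, "macro": macro}
```

Call it once with each model’s predictions against the same `y_true` and compare the `macro` entries side by side.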

Prompting Techniques

Creating synthetic data using LLMs isn’t just about asking for examples; it’s about how you ask.

One of the most important realizations I had while working with LLMs for synthetic data was that the model only performs as well as the prompt allows it to. Prompting isn’t just an input; it’s a design decision. And small changes in how you phrase or structure your prompt can have a massive impact on the quality of your data.

The two main prompting strategies are:

1. Zero-shot prompting - no examples, just instructions

This is the simplest form of prompting: you ask the model to generate examples based purely on instructions. It’s easy to write but can produce inconsistent results, especially in formatting or label logic, and it takes a lot of manual effort to spell out every exact detail of the desired dataset.

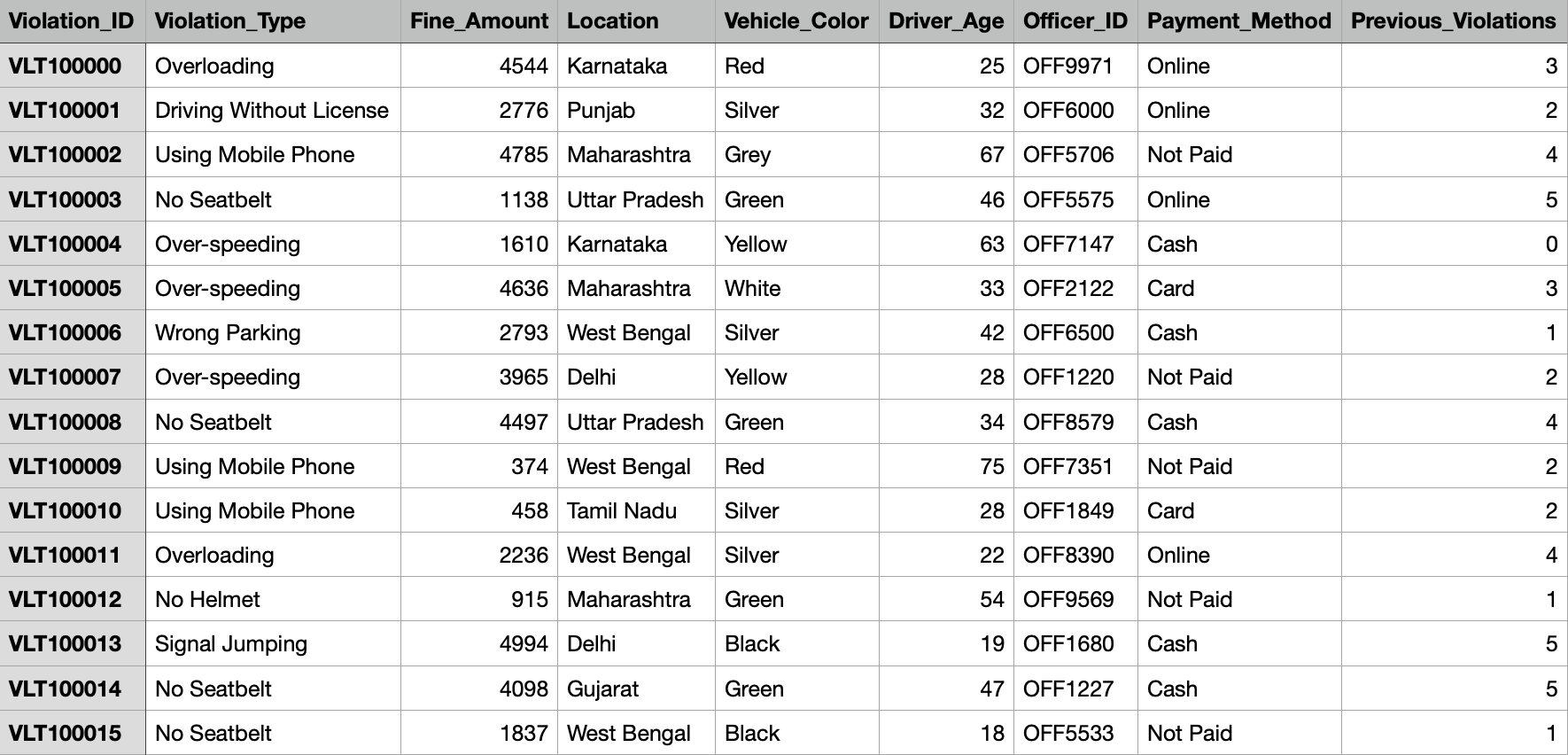

Let’s understand this process with an example. Suppose we’re working with a dataset that looks like this:

The dataset captures information about traffic violations, including:

• Violation Type (e.g., Over-speeding, No Seatbelt)

• Fine Amount

• Location

• Vehicle and Driver Details

• Officer ID

• Payment Method

• Previous Violations

Now, imagine we want to generate more synthetic data with a similar structure to train or test a model, but we don’t want to scrape or collect it manually. Let’s give this task to a language model using zero-shot prompting.

Here’s a prompt I gave the model without providing any examples, just a clear instruction of what I wanted:

“You are a data generation assistant.

Generate 5 synthetic records of Indian traffic violations. Each record should contain the following fields:

• Violation_ID: A unique alphanumeric code starting with “VLT”

• Violation_Type: Choose from [“Over-speeding”, “Wrong Parking”, “Driving Without License”, “No Seatbelt”, “Using Mobile Phone”, “Overloading”]

• Fine_Amount: An integer between 1000 and 5000

• Location: A valid Indian state

• Vehicle_Color: Any realistic color

• Driver_Age: An integer between 18 and 70

• Officer_ID: A code like “OFF1234”

• Payment_Method: One of [“Cash”, “Card”, “Online”, “Not Paid”]

• Previous_Violations: A number between 0 and 5

Return the results in CSV format, where each item is a row with the fields listed above.”
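Because a zero-shot prompt only describes the constraints, nothing guarantees the model follows them, so it’s worth checking the returned CSV before using it. The sketch below validates each row against the rules in the prompt above. It is hypothetical in two places: the state set is a deliberately short illustrative subset, and `OFF` followed by exactly four digits is an assumed interpretation of “a code like OFF1234”. `Vehicle_Color` is left unchecked since any realistic color is allowed.

```python
import csv
import io
import re

VIOLATION_TYPES = {"Over-speeding", "Wrong Parking", "Driving Without License",
                   "No Seatbelt", "Using Mobile Phone", "Overloading"}
PAYMENT_METHODS = {"Cash", "Card", "Online", "Not Paid"}
# Illustrative subset only; extend with every Indian state for real use
STATES = {"Maharashtra", "Karnataka", "Kerala", "Punjab", "Rajasthan", "Tamil Nadu"}


def int_in_range(value, low, high):
    """True if value parses as an integer within [low, high]."""
    try:
        return low <= int(value) <= high
    except (TypeError, ValueError):
        return False


def row_errors(row):
    """Return the names of fields that break the prompt's rules (empty list = valid)."""
    errors = []
    if not re.fullmatch(r"VLT[A-Za-z0-9]+", row.get("Violation_ID") or ""):
        errors.append("Violation_ID")
    if row.get("Violation_Type") not in VIOLATION_TYPES:
        errors.append("Violation_Type")
    if not int_in_range(row.get("Fine_Amount"), 1000, 5000):
        errors.append("Fine_Amount")
    if row.get("Location") not in STATES:
        errors.append("Location")
    if not int_in_range(row.get("Driver_Age"), 18, 70):
        errors.append("Driver_Age")
    if not re.fullmatch(r"OFF\d{4}", row.get("Officer_ID") or ""):
        errors.append("Officer_ID")
    if row.get("Payment_Method") not in PAYMENT_METHODS:
        errors.append("Payment_Method")
    if not int_in_range(row.get("Previous_Violations"), 0, 5):
        errors.append("Previous_Violations")
    return errors


def split_valid(csv_text):
    """Parse CSV text and separate rows that pass every rule from those that don't."""
    valid, invalid, seen_ids = [], [], set()
    reader = csv.DictReader(io.StringIO(csv_text.strip()), skipinitialspace=True)
    for row in reader:
        errors = row_errors(row)
        if row.get("Violation_ID") in seen_ids:  # the prompt asks for unique IDs
            errors.append("duplicate Violation_ID")
        seen_ids.add(row.get("Violation_ID"))
        if errors:
            invalid.append((row, errors))
        else:
            valid.append(row)
    return valid, invalid
```

Returning the failing field names, rather than just dropping rows, shows which instruction the model tends to ignore, which is a useful hint for tightening the prompt.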

Here is the link to view the LLM-generated dataset: Synthetic Generated Data Link.

Next, let’s try a simpler task, again giving the model nothing but basic instructions:

Prompt: “Generate 5 product reviews labeled as either ‘positive’ or ‘negative’. Return the output in JSON array format with two fields: text and label.”

[ {"text": "Amazing product!", "label": "positive"}, {"text": "Terrible service", "label": "negative"}, {"text": "I like it", "label": "positive"}, {"text": "Horrible", "label": "negative"}, {"text": "It's okay", "label": "neutral"} ]

It wasn’t bad, but there were clear issues:

• The reviews were too short and lacked context

• One entry had a “neutral” label, which wasn’t part of the allowed set

• The emotional tone felt robotic and inconsistent
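Issues like the stray “neutral” label are easy to catch automatically. A minimal sketch, assuming you simply drop bad records and keep a tally of why (the function name `filter_by_label` and the rejection reasons are illustrative):

```python
from collections import Counter

ALLOWED_LABELS = {"positive", "negative"}
REQUIRED_KEYS = {"text", "label"}


def filter_by_label(records, allowed=ALLOWED_LABELS, required=REQUIRED_KEYS):
    """Keep records with all required keys, an allowed label, and non-empty text."""
    kept, rejected = [], Counter()
    for record in records:
        if not isinstance(record, dict) or not required.issubset(record):
            rejected["missing_keys"] += 1
        elif record["label"] not in allowed:
            rejected[f"label:{record['label']}"] += 1
        elif not str(record["text"]).strip():
            rejected["empty_text"] += 1
        else:
            kept.append(record)
    return kept, rejected
```

Applied to the five zero-shot reviews above, this keeps four records and reports a single `label:neutral` rejection.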

2. Few-shot prompting — giving the model a few labeled examples to mimic

This is where things start to get really reliable. Instead of just telling the model what we want, we actually show it by providing a few examples first. It’s like saying, “Here’s the kind of data I’m looking for. Now give me more like this.”

By doing this, we help the LLM better understand:

• The structure we expect (e.g., JSON, tabular)

• The type of content that belongs in each field

• The tone, variety, and even the balance between different categories or values.

Few-shot prompting essentially works by setting a clear pattern and then asking the model to continue that pattern.
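Mechanically, a few-shot prompt is just the seed examples serialized in the target format, followed by an instruction to continue the pattern. Here is a minimal sketch that rebuilds the product-review prompt used in the next example from Python dicts; `build_few_shot_prompt` is a hypothetical helper name.

```python
import json


def build_few_shot_prompt(examples, n_new,
                          instruction="Continue with {n} more examples in the same format."):
    """Serialize seed examples as a JSON array and append a continuation instruction."""
    shown = json.dumps(examples, indent=2, ensure_ascii=False)
    return f"{shown}\n\n{instruction.format(n=n_new)}"


seed_reviews = [
    {"text": "The phone arrived quickly and works perfectly. I'm super happy!", "label": "positive"},
    {"text": "The battery dies too fast and it keeps freezing. Not worth it.", "label": "negative"},
]
prompt = build_few_shot_prompt(seed_reviews, 5)
```

Including one positive and one negative example also signals the label balance you want, not just the format.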

Few-shot prompting is surprisingly effective, especially when you’re generating complex, multi-field datasets like the traffic violation data we saw earlier. To see the difference directly, I reran the product-review task with a few-shot prompt, showing the model exactly what kind of output I wanted.

Example:

[ {"text": "The phone arrived quickly and works perfectly. I'm super happy!", "label": "positive"}, {"text": "The battery dies too fast and it keeps freezing. Not worth it.", "label": "negative"} ]

“Continue with 5 more examples in the same format.”

This time, the result was significantly better:

[

{"text": "The camera quality is outstanding and the design feels premium.", "label": "positive"},

{"text": "The packaging was damaged and the charger didn’t work.", "label": "negative"},

{"text": "Fast delivery and excellent customer support.", "label": "positive"},

{"text": "App crashes frequently and drains battery life.", "label": "negative"},

{"text": "It’s user-friendly and met all my expectations.", "label": "positive"}

]

The model produced longer, more meaningful reviews. Each label was accurate, the JSON structure was clean, and the language felt natural — even varied. This small experiment confirmed what I had been sensing throughout the project: few-shot prompting isn’t just more consistent — it’s often the key to getting high-quality, structured data that’s actually usable in real-world applications.
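Impressions like “longer” and “more varied” are easy to back with a few numbers before a batch goes into training. A minimal sketch (the function name `summarize` and the chosen statistics are illustrative; length here is a simple whitespace word count):

```python
from collections import Counter
from statistics import mean


def summarize(records, text_key="text", label_key="label"):
    """Report record count, label distribution, and word-count statistics for a batch."""
    lengths = [len(str(r[text_key]).split()) for r in records]
    return {
        "count": len(records),
        "labels": Counter(r[label_key] for r in records),
        "mean_words": mean(lengths) if lengths else 0,
        "min_words": min(lengths, default=0),
    }
```

On the five few-shot reviews above, this reports three positive and two negative labels and a mean of 7.8 words per review, versus 2 words for the zero-shot batch.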

If you’re working with a structured dataset (like a CSV), you can even paste a portion of it into the prompt as examples, then ask the model to continue generating rows in the same style. This approach takes you from “give me something random” to “give me exactly what I need.”
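Here is a sketch of that approach, assuming the real data is available as CSV text you can read with Python’s standard `csv` module. The function name `build_csv_continuation_prompt`, the default of three seed rows, and the exact instruction wording are illustrative assumptions.

```python
import csv
import io
from itertools import islice


def build_csv_continuation_prompt(csv_text, n_seed=3, n_new=10):
    """Use the header plus the first n_seed rows as examples and ask for n_new more rows."""
    reader = csv.reader(io.StringIO(csv_text.strip()))
    header = next(reader)
    seed_rows = list(islice(reader, n_seed))

    # Re-serialize so quoting stays valid even if fields contain commas
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(header)
    writer.writerows(seed_rows)

    return (
        "Here are example rows from a dataset in CSV format:\n\n"
        f"{buffer.getvalue()}\n"
        f"Generate {n_new} new rows in exactly the same CSV format and style. "
        "Do not repeat the header or the example rows."
    )
```

Sampling seed rows from different parts of the file, rather than always taking the first few, is a simple way to nudge the model toward more varied output.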

GPT-4 vs DeepSeek-R1: Let’s Put Them to the Test

At this point, I wanted to stir the pot a little — not just prompt one LLM and call it a day, but throw the same challenge at two different models and see who blinks first.

So, in the red corner, we have:

GPT-4 (via ChatGPT, the polished veteran)

And in the blue corner:

DeepSeek-R1 (the ambitious newcomer with something to prove)

Both are sharp, and surprisingly good at pretending to be human. To put their skills to the test and enjoy the drama, I handed them a prompt tied to a dataset that’s far more nuanced and context-heavy than something like sentiment classification or product reviews.

The goal is to generate synthetic conversations between a user and a mental health assistant. Each entry includes the user’s message, the assistant’s response, and a tag (e.g., anxiety, depression, stress, or motivation).

This is a great test because it reveals:

• How sensitive the models are to emotional tone

• Whether the responses sound robotic or human

• How well they generalize across similar user inputs

• Their ability to generate large datasets

The prompt (for both GPT-4 and DeepSeek-R1):

You are simulating a mental health assistant. Generate 3000 short conversations between a user and the assistant. Each conversation should contain:

• user_message: the user expressing a concern

• assistant_reply: a compassionate and helpful response

• tag: the emotional category of the conversation (choose from anxiety, depression, stress, motivation) give this in json format

The results were fascinating…

DeepSeek-R1 responded with a compact set of five well-structured examples. It did a decent job capturing emotional tone and followed the required format closely. However, the model also added a disclaimer:

“This is a truncated example… Generating 3000 conversations would exceed practical limits…”

This revealed one of its limitations — a conservative response length, even when explicitly asked for more. While the quality of individual entries was strong, it clearly leaned toward playing it safe in terms of quantity and verbosity.

In contrast, GPT-4 went all in. It not only generated the required examples with emotional nuance and structure, but also provided a download link for the entire dataset as a JSON file. This made it far more practical for real-world use, especially for developers or researchers looking to integrate synthetic data directly into their pipelines.

This comparison highlights a key difference between the two models:

• DeepSeek-R1 produces well-structured individual entries, but occasionally cuts off or under-produces without strong prompting.

• GPT-4 is more robust and consistent when it comes to output length, structure, and delivery — especially for nuanced use cases like mental health dialogue generation.

If your goal is to generate high-volume, emotionally aware synthetic data, GPT-4 still has a clear edge.
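One practical workaround for output limits like DeepSeek-R1’s is to avoid asking for thousands of items in a single response and instead split the target into many small requests. A minimal sketch, assuming an arbitrary batch size of 25 and an even split across the four tags (both worth tuning); `plan_batches` is a hypothetical helper:

```python
def plan_batches(total, tags, batch_size=25):
    """Split `total` examples as evenly as possible across tags, then into request-sized batches.

    Returns a list of (tag, count) pairs whose counts sum to `total`.
    """
    if not tags or batch_size < 1:
        raise ValueError("need at least one tag and a positive batch size")
    per_tag, remainder = divmod(total, len(tags))
    plan = []
    for i, tag in enumerate(tags):
        quota = per_tag + (1 if i < remainder else 0)  # spread leftovers over the first tags
        while quota > 0:
            count = min(batch_size, quota)
            plan.append((tag, count))
            quota -= count
    return plan


batches = plan_batches(3000, ["anxiety", "depression", "stress", "motivation"])
```

For 3,000 conversations across the four tags, this yields 120 requests of 25 conversations each, and each `(tag, count)` pair can be passed to a parameterized prompt template.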

Automating Dataset Generation with LLMs

Writing and refining prompts is just one part of the puzzle. When you’re aiming to create a dataset with hundreds or even thousands of high-quality samples, manually interacting with a chatbot isn’t sustainable. That’s where automation changes everything. After my initial experiments with zero-shot and few-shot prompting, I built a simple yet powerful workflow using the OpenAI API, Python, and a few handy libraries. This turned my LLM-powered data generation into a repeatable, scalable pipeline.

Once I had prompt templates working well manually, I used a loop to generate data for each category (e.g., anxiety, depression, motivation). Here’s a simplified version of the script:

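```python
import json

from openai import OpenAI  # openai>=1.0 SDK; the older openai.ChatCompletion interface was removed

client = OpenAI()  # reads the OPENAI_API_KEY environment variable


def save_output(response, tag):
    """Parse the model's JSON array and append each record to a per-tag JSON Lines file."""
    # json.loads raises if the model wraps the array in extra text, so keep the prompt strict
    data = json.loads(response.choices[0].message.content)
    # One JSON object per line stays valid even when the file is appended to across runs
    with open(f"{tag}_dataset.jsonl", "a", encoding="utf-8") as f:
        for record in data:
            f.write(json.dumps(record, ensure_ascii=False) + "\n")


tags = ["anxiety", "depression", "stress", "motivation"]

for tag in tags:
    prompt = f"""
You are generating mental health conversations.
Generate 5 labeled examples under the '{tag}' category.
Each should include a user_message, assistant_reply, and a tag field.
Return as a JSON array.
"""
    response = client.chat.completions.create(
        model="gpt-4",
        messages=[{"role": "user", "content": prompt}],
        temperature=0.7,
    )
    save_output(response, tag)
```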

This reduced hours of manual effort to just a few minutes — and gave me clean, labeled data that was ready for model training or testing.
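A script like this assumes every response parses cleanly, which won’t always hold: a model may add a friendly preamble or return a malformed array. One way to harden it is a retry wrapper. This sketch takes any zero-argument function that returns the model’s raw text, so `generate_with_retries` and its parameters are illustrative, not part of the OpenAI SDK. With the v1 client, `call_model` could be something like `lambda: client.chat.completions.create(model="gpt-4", messages=messages).choices[0].message.content`.

```python
import json
import time


def generate_with_retries(call_model, max_attempts=3, delay_seconds=1.0):
    """Call `call_model()` until its text output parses as a JSON array, up to max_attempts."""
    last_error = None
    for attempt in range(1, max_attempts + 1):
        raw = call_model()
        try:
            data = json.loads(raw)
            if isinstance(data, list):
                return data
            last_error = ValueError("response was valid JSON but not an array")
        except json.JSONDecodeError as err:
            last_error = err
        if attempt < max_attempts:
            time.sleep(delay_seconds)  # brief pause before asking again
    raise RuntimeError(f"no valid JSON array after {max_attempts} attempts") from last_error
```

Passing a callable keeps the retry logic independent of which model or SDK version produces the text.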

Prompt as a Parameterized Function

I didn’t treat prompts like static strings — I treated them like functions with parameters. This made it easy to reuse prompts across different categories, sample sizes, and models. Here’s an example:

```python
def generate_prompt(tag, count):
    """Return a generation prompt for `count` examples in category `tag`."""
    return f"""
You are simulating a dataset generator.
Generate {count} examples for category: {tag}.
Each item must contain a 'user_message', 'assistant_reply', and 'tag'.
Output format: JSON array.
"""
```

This allowed me to dynamically control content, volume, and context — while keeping my code clean and modular.

This project started out as a curiosity — “can I generate useful data using LLMs?” — and turned into a practical workflow I now use for real tasks. Along the way, I picked up more than just prompt engineering tricks. I learned how to think of LLMs as a tool in the ML pipeline, not just a chatbot.

Lessons Learned:

• Prompting is a creative and iterative design process. It’s not about guessing — it’s about shaping the model’s output by being clear, specific, and structured.

• Few-shot prompting delivers better results, especially for tasks requiring format consistency and variety.

• GPT-4 was the more consistent of the two models I tested for nuanced, high-empathy tasks like mental health data, but models like DeepSeek-R1 are catching up and can be effective with tighter prompts.

• Post-processing is still important. Even though LLMs can output structured data, adding filters, validations, and format checks ensures quality and reliability.

• Automating the generation process with APIs and scripts is a must if you’re working on large-scale datasets.
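As one concrete example of such a post-processing filter, here is a minimal sketch that removes exact duplicate conversations after normalizing case and whitespace. It’s worth running on bulk outputs in particular, where repeated entries can inflate the apparent size of a dataset. The function names are illustrative, and catching near-duplicates (paraphrases) would need a fuzzier method such as embedding similarity.

```python
import re


def normalize(text):
    """Lowercase and collapse whitespace so trivially different copies compare equal."""
    return re.sub(r"\s+", " ", text).strip().lower()


def dedupe_conversations(records, keys=("user_message", "assistant_reply")):
    """Drop records whose normalized key fields match an earlier record; keep first occurrences."""
    seen, unique = set(), []
    for record in records:
        fingerprint = tuple(normalize(str(record.get(k, ""))) for k in keys)
        if fingerprint not in seen:
            seen.add(fingerprint)
            unique.append(record)
    return unique
```

Comparing the length of the returned list with the input length gives a quick read on how much real variety a large request produced.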

If you’re working on a machine learning project and struggling with data availability or class imbalance, consider using prompting techniques to generate your own dataset. With the right structure, a few examples, and a bit of code, you can go from zero to a training-ready dataset in a matter of hours.

This approach has helped me save time, expand my training data, and experiment faster — and I hope it gives you the same edge.
