Speeding-up Event Replay from a Postgres Event Store

Sam Becker

Sam BeckerThe setup

During a "Papercut Friday" at SKUTOPIA, I wanted to optimise the startup time of an event sourced service. The setup was as follows:

In-memory projections, built every time a NodeJS container starts.

A Postgres event store with ~2.5 million events in it.

This particular service split projections into two categories: those persisted in a database and those built in-memory every deployment. Ephemeral in-memory projections have a number of advantages, such as:

Extremely fast access, no database round trip to load frequently accessed entities.

Flexible schema, schema changes require no migration.

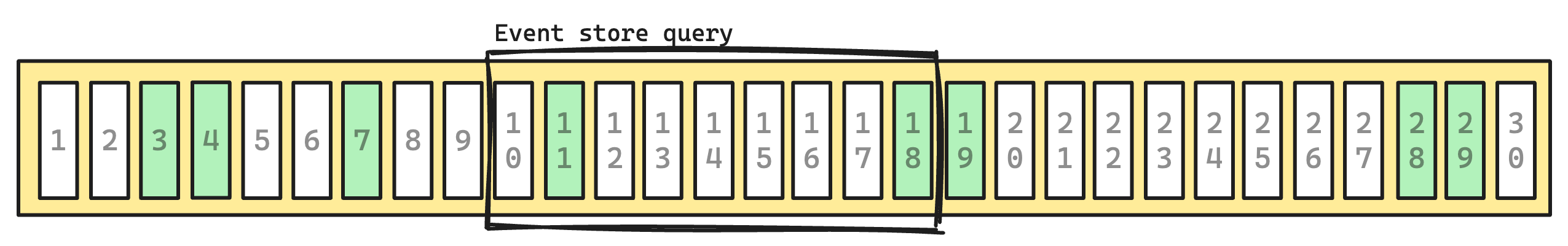

Each time a container starts, batches of events are retrieved from the event store and used for in-memory projections.

Importantly, only a small subset of events (~2%, those represented in green), were required by the in-memory projections.

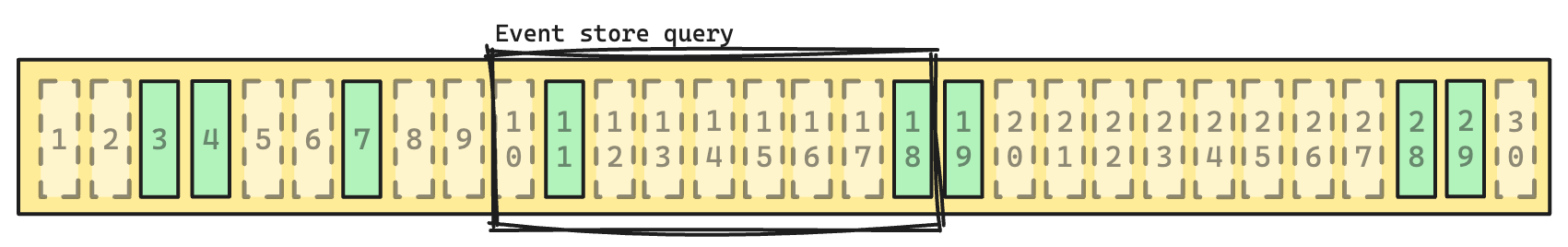

Only query relevant events

The first step was to only query for events actually used by the in-memory projections. This reduced the IO between the app and database, events that were discarded by the application no longer needed to be sent over the network during a replay.

Surprisingly, this optimisation resulted in only a marginal speed increase.

CLUSTER to the rescue

Digging into a couple of queries using EXPLAIN (ANALYZE, BUFFERS) yielded interesting results. A query for a batch range of 100,000 IDs, we'd expect on average 2,000 events to be returned:

Index Scan using event_store_in_memory_replay_idx on event_store (cost=0.29..2810.13 rows=2521 width=1069) (actual time=0.039..93.102 rows=1269 loops=1)

Index Cond: ((id > 1500000) AND (id <= 1600000))

Buffers: shared hit=673 read=476

I/O Timings: shared read=89.653

Planning Time: 0.195 ms

Execution Time: 93.339 ms

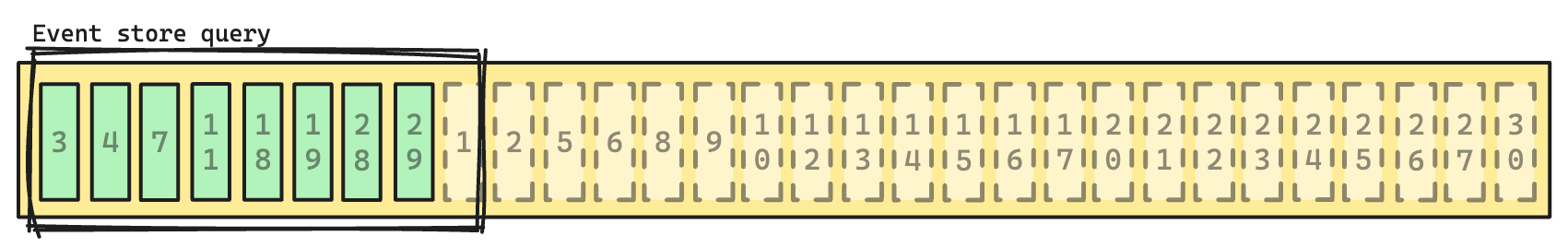

But we can observe a whopping 1,149 pages being required to satisfy the query (673 in cache, and 476 read from disk). One way to address this, would be to organize all events required for the replay onto a set of contiguous page tables. To do this required creating an index, ordered by our access pattern and then CLUSTERing the table by that index:

CREATE INDEX CONCURRENTLY

event_store_temp_cluster_idx ON public.event_store

((type IN ('EVENT_A', 'EVENT_B')), id);

CLUSTER public.event_store USING event_store_temp_cluster_idx;

This physically organises pages according to an "is a replay event" boolean as the primary criteria and "event id" as the second.

The analysis of the same query yielded much better results, 88 pages required for an ID range of 100,000 events, all in cache:

Index Scan using event_store_in_memory_replay_idx on event_store (cost=0.29..2828.18 rows=2542 width=1069) (actual time=0.040..0.473 rows=1269 loops=1)

Index Cond: ((id > 1500000) AND (id <= 1600000))

Buffers: shared hit=88

Planning Time: 0.274 ms

Execution Time: 0.578 ms

This ultimately reduced boot-up time for this service from 2 minutes, to 10 seconds.

Subscribe to my newsletter

Read articles from Sam Becker directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by