Building an Isolated Application Environment (Similar to Docker) on a Linux Server

Olusegun .O. ADELEKE


In DevOps, the term "containerization" is almost always linked to Docker. But here’s something interesting: you can achieve a similar setup without Docker or Kubernetes by building it yourself from the ground up.

Through this experiment, you’ll learn how to create an isolated system using Linux namespaces and chroot, manage resource limits with cgroups, and set up an independent networking layer using network namespaces.

What is Containerization, and Why Does It Matter?

Imagine running multiple apps on your computer, but each one thinks it's the only thing running. That’s containerization in a nutshell—it creates a self-contained environment where applications operate independently, without interfering with each other or the system.

Here’s what containerization does:

It keeps apps separate, so one app can’t break or affect another.

It protects system files, so apps don’t mess with the host machine.

It manages network traffic, giving each app its own network space.

It controls resource usage, preventing one app from hogging CPU and memory.

Without containerization, applications share the same system resources without clear boundaries. This can lead to conflicts, security risks, and performance issues.

Most people use Docker to handle all this automatically. But today, we’re taking a different approach: we’ll build a container-like setup manually using Linux namespaces, chroot, cgroups, and network isolation.

Setting Up the Base System

Before we isolate anything, we need essential tools for process separation, resource control, and filesystem isolation. We also need to create a lightweight Ubuntu system inside a dedicated directory (/custom-container).

Install Required Packages & Create a Minimal Filesystem

sudo apt update

sudo apt install -y iproute2 cgroup-tools debootstrap

sudo debootstrap --arch=amd64 jammy /custom-container http://archive.ubuntu.com/ubuntu/
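Before going further, it can help to confirm that debootstrap actually produced a usable root filesystem. The Python sketch below is an illustration and not part of the original walkthrough. The helper name `missing_rootfs_entries` and the list of expected paths are assumptions chosen for this example.

```python
from pathlib import Path

# Paths a debootstrap-created Ubuntu root filesystem is expected to contain.
# This is an assumed, non-exhaustive list chosen for the sketch.
EXPECTED = ["bin/bash", "etc/os-release", "proc", "sys", "dev", "usr/bin"]

def missing_rootfs_entries(root: str) -> list:
    """Return the expected entries that do not exist under `root`.

    Path.exists() follows symlinks. On jammy, bin -> usr/bin is a relative
    symlink, so it resolves inside the new root, not on the host.
    """
    base = Path(root)
    return [p for p in EXPECTED if not (base / p).exists()]

if __name__ == "__main__":
    missing = missing_rootfs_entries("/custom-container")
    print("rootfs looks complete" if not missing else f"missing: {missing}")
```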

At this point, we have a minimal Ubuntu root filesystem inside /custom-container. However, its /proc, /sys, and /dev directories are not yet connected to the running kernel.

Granting the Environment Access to System Files

A filesystem alone isn’t enough—it needs access to critical system directories. To ensure proper functionality, we bind-mount essential directories inside our environment.

Mount System Directories

sudo mount --bind /proc /custom-container/proc

sudo mount --bind /sys /custom-container/sys

sudo mount --bind /dev /custom-container/dev

These directories allow processes to interact with the system kernel, access device files, and retrieve system information.
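You can confirm that the three bind mounts are in place by looking them up in the kernel's mount table. This is a minimal sketch, assuming the /proc/self/mounts format, where each line reads `source mountpoint fstype options dump pass`. The helper names are hypothetical.

```python
# Mount points that the bind mounts above should have created.
TARGETS = ["/custom-container/proc", "/custom-container/sys", "/custom-container/dev"]

def mounted_paths(mounts_text: str) -> set:
    """Return the mount points (second field) in /proc/self/mounts-style text.

    Spaces inside paths are escaped as \\040 in that file; this sketch
    decodes only that one escape.
    """
    points = set()
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) >= 2:
            points.add(fields[1].replace("\\040", " "))
    return points

def missing_mounts(mounts_text: str, targets=TARGETS) -> list:
    """Return the targets that are not currently mount points."""
    present = mounted_paths(mounts_text)
    return [t for t in targets if t not in present]
```

On the host, `missing_mounts(open("/proc/self/mounts").read())` should return an empty list once all three bind mounts succeed.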

Isolating Processes

Processes running inside our environment shouldn’t see or interfere with host processes. To achieve this, we create a separate process namespace.

Run a New Process Namespace

cd /custom-container

sudo unshare --pid --fork --mount-proc /bin/bash

Now, running ps aux inside this namespace shows only bash (running as PID 1) and the ps command itself. Running it outside shows every process on the host, often hundreds.
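ps aux works by reading /proc, which contains one numeric directory per process. Because --mount-proc mounts a fresh procfs for the new PID namespace, only that namespace's processes appear there. The sketch below lists PIDs the same way. The function name `visible_pids` is a hypothetical illustration.

```python
import os

def visible_pids(proc_root: str = "/proc") -> list:
    """List the PIDs visible under a procfs mount, lowest first.

    Each running process has a numeric directory in procfs. Inside the new
    PID namespace this list should be very short and start at PID 1.
    """
    return sorted(int(name) for name in os.listdir(proc_root) if name.isdigit())

if __name__ == "__main__" and os.path.isdir("/proc"):
    pids = visible_pids()
    print(f"{len(pids)} processes visible; lowest PIDs: {pids[:5]}")
```

Running the script once inside the namespace and once on the host should show a very different count.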

Restricting Filesystem Access

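To restrict what the environment can see of the host’s filesystem, we use chroot. Keep in mind that chroot changes a process’s root directory but is not a hard security boundary on its own: a process running as root inside it can escape.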

Change Root to the Isolated Environment

sudo unshare --mount --fork chroot /custom-container

Now, /custom-container acts as the root directory (/). Apart from the directories we bind-mounted, the host filesystem is invisible to processes inside the environment.
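Inside a chroot, every absolute path is resolved relative to the new root, and `..` at the top cannot climb above it. The sketch below models that rule lexically with Python's `posixpath`. The helper `host_path` is hypothetical. It also ignores symlinks, which the kernel likewise resolves relative to the new root.

```python
import posixpath

def host_path(new_root: str, path_inside: str) -> str:
    """Map a path seen inside a chroot at `new_root` to the host path.

    Lexical model only: normpath() on an absolute path drops any '..'
    that would climb above '/', mirroring how the new root acts as '/'.
    """
    inner = posixpath.normpath(posixpath.join("/", path_inside))
    rel = inner.lstrip("/")
    return posixpath.join(new_root, rel) if rel else new_root
```

For example, `host_path("/custom-container", "/../../etc/shadow")` yields `/custom-container/etc/shadow`, never the host's /etc/shadow.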

User Separation

Next, we look at user isolation. A user namespace gives the environment its own set of user and group IDs, which correspond to host IDs only through an explicit mapping.

Test User Isolation

sudo unshare --user --fork /bin/bash

whoami

The expected output is nobody, meaning no user ID mapping exists yet. A mapping can be configured, for example with unshare’s --map-root-user option, to give processes inside the namespace proper permissions.
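A user namespace translates IDs through its uid_map file. Each line has the form `ID-inside-ns ID-outside-ns length`, as described in user_namespaces(7). An ID with no mapping is reported as the overflow UID, 65534 by default, which /etc/passwd names `nobody`. That is why whoami prints nobody above.

The sketch below models that lookup. `to_inside_uid` is a hypothetical helper. The example map `0 1000 1` is an assumption: it is the kind of map `unshare --map-root-user` writes when an unprivileged user with UID 1000 runs it.

```python
OVERFLOW_UID = 65534  # kernel default for /proc/sys/kernel/overflowuid

def parse_uid_map(text: str) -> list:
    """Parse uid_map lines of the form 'inside outside length'."""
    entries = []
    for line in text.splitlines():
        if line.strip():
            inside, outside, length = (int(x) for x in line.split())
            entries.append((inside, outside, length))
    return entries

def to_inside_uid(host_uid: int, uid_map: str) -> int:
    """Translate a host UID into the UID seen inside the namespace."""
    for inside, outside, length in parse_uid_map(uid_map):
        if outside <= host_uid < outside + length:
            return inside + (host_uid - outside)
    return OVERFLOW_UID  # unmapped IDs show up as 'nobody'
```

With an empty map, every host UID (including root's 0) comes out as 65534. With `0 1000 1`, host UID 1000 becomes root inside the namespace.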

Creating a Private Network

Even with process and filesystem isolation, our environment still shares the host’s network. To change this, we:

Set up a separate network namespace.

Connect it to the host using a virtual Ethernet (veth) pair.

Configure Network Isolation

sudo ip netns add custom-container

sudo ip link add veth-host type veth peer name veth-container

sudo ip link set veth-container netns custom-container

sudo ip addr add 192.168.1.1/24 dev veth-host

sudo ip link set veth-host up

sudo ip netns exec custom-container ip addr add 192.168.1.2/24 dev veth-container

sudo ip netns exec custom-container ip link set veth-container up

This setup gives our isolated environment its own IP address (192.168.1.2), connected over a private link to the host end of the veth pair (192.168.1.1).
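For the host and the namespace to reach each other directly, both ends of the veth pair must sit on the same subnet. That subnet should also not overlap a network the host already uses; for example, many home LANs are 192.168.1.0/24. The sketch below checks both conditions with Python's standard `ipaddress` module. `check_veth_addresses` is a hypothetical helper, and the `host_networks` values in the usage are assumptions.

```python
import ipaddress

def check_veth_addresses(host_end: str, container_end: str, host_networks: list) -> list:
    """Return a list of problems with a veth addressing plan (empty means OK)."""
    problems = []
    host_if = ipaddress.ip_interface(host_end)
    cont_if = ipaddress.ip_interface(container_end)
    if host_if.network != cont_if.network:
        problems.append("veth ends are on different subnets")
    if host_if.ip == cont_if.ip:
        problems.append("veth ends share the same address")
    # The veth subnet must not collide with networks already on the host.
    for net in host_networks:
        if host_if.network.overlaps(ipaddress.ip_network(net)):
            problems.append(f"{host_if.network} overlaps existing network {net}")
    return problems
```

`check_veth_addresses("192.168.1.1/24", "192.168.1.2/24", ["10.0.0.0/8"])` returns an empty list. Take `host_networks` from `ip -4 addr` before creating the veth pair, otherwise veth-host's own subnet will be reported as an overlap.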

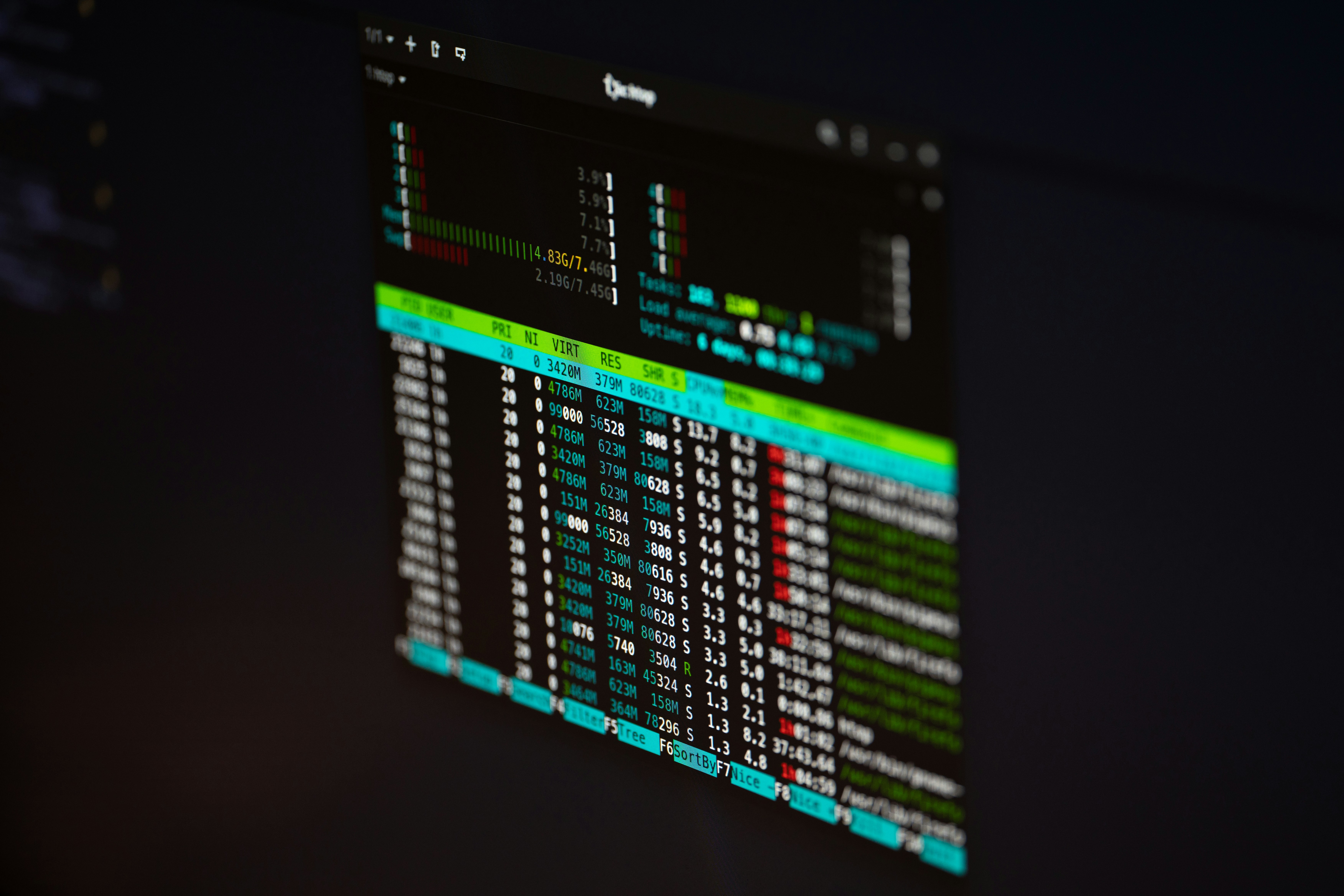

Controlling Resource Usage

To prevent excessive CPU, memory, or disk I/O usage from affecting the host, we apply resource limits using cgroups (version 2).

Set CPU, Memory, and I/O Limits

sudo mkdir /sys/fs/cgroup/custom-container

echo "+cpu +memory +io" | sudo tee /sys/fs/cgroup/cgroup.subtree_control

echo "500000 1000000" | sudo tee /sys/fs/cgroup/custom-container/cpu.max

echo "256M" | sudo tee /sys/fs/cgroup/custom-container/memory.max

echo "259:0 rbps=1048576 wbps=1048576" | sudo tee /sys/fs/cgroup/custom-container/io.max
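The values written above follow the cgroup v2 formats:

- `cpu.max` takes `QUOTA PERIOD` in microseconds.
- `memory.max` takes bytes, with K/M/G suffixes accepted.
- `io.max` takes `MAJ:MIN` followed by key=value limits such as `rbps` and `wbps` in bytes per second.

The sketch below derives the same strings from human-friendly numbers. The helper names are hypothetical, and `259:0` is only an example device number.

```python
def cpu_max(cpus: float, period_us: int = 1_000_000) -> str:
    """cpu.max value allowing `cpus` CPUs' worth of time per period."""
    return f"{round(cpus * period_us)} {period_us}"

def memory_max(mebibytes: int) -> str:
    """memory.max value in bytes for a limit given in MiB."""
    return str(mebibytes * 1024 * 1024)

def io_max(device: str, read_bps: int, write_bps: int) -> str:
    """io.max line limiting read/write bandwidth on block device MAJ:MIN."""
    return f"{device} rbps={read_bps} wbps={write_bps}"

if __name__ == "__main__":
    print(cpu_max(0.5))                               # 500000 1000000
    print(memory_max(256))                            # 268435456, same as "256M"
    print(io_max("259:0", 1024 * 1024, 1024 * 1024))  # 259:0 rbps=1048576 wbps=1048576
```

A quota larger than the period is valid in cgroup v2. For example, `cpu_max(2, 100_000)` gives `200000 100000`, which allows up to two CPUs.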

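Here is what each limit does:

cpu.max "500000 1000000" allows 500,000 microseconds of CPU time in every 1,000,000-microsecond period, which is half of one CPU.

memory.max caps memory at 256 MiB.

io.max limits reads (rbps) and writes (wbps) on block device 259:0 to 1 MiB/s each. The device number 259:0 usually belongs to the first NVMe disk; replace it with your own disk’s MAJ:MIN value as shown by lsblk.

To verify the settings, we check the applied limits: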

cat /sys/fs/cgroup/custom-container/cpu.max

cat /sys/fs/cgroup/custom-container/memory.max

cat /sys/fs/cgroup/custom-container/io.max
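Reading the files back returns the kernel's own representation of each limit:

- `memory.max` is shown in bytes, so 256M appears as 268435456.
- The value `max` means no limit.
- `io.max` lists all four keys (rbps, wbps, riops, wiops) for each device that has a limit.

The sketch below turns that output into numbers. The function names are hypothetical.

```python
from typing import Dict, Optional

def parse_limit(value: str) -> Optional[int]:
    """Convert a cgroup v2 limit token to int, or None for 'max' (unlimited)."""
    return None if value == "max" else int(value)

def parse_cpu_max(text: str) -> Optional[float]:
    """Return the CPU share allowed by cpu.max ('quota period'), None if unlimited."""
    quota, period = text.split()
    q = parse_limit(quota)
    return None if q is None else q / int(period)

def parse_io_max(text: str) -> Dict[str, Dict[str, Optional[int]]]:
    """Parse io.max lines like '259:0 rbps=1048576 wbps=1048576 riops=max wiops=max'."""
    limits = {}
    for line in text.splitlines():
        if not line.strip():
            continue
        device, *pairs = line.split()
        limits[device] = {k: parse_limit(v) for k, v in (p.split("=", 1) for p in pairs)}
    return limits
```

For instance, `parse_cpu_max("500000 1000000")` returns 0.5, confirming the half-CPU quota.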

Creating a Non-Root User

Running applications as root is a security risk, so we add a restricted user inside our environment.

Create & Test a Non-Root User

sudo chroot /custom-container

apt update

apt install -y passwd adduser

adduser --disabled-password testuser

exit

sudo unshare --mount --fork chroot /custom-container su - testuser

If your prompt changes to testuser@..., the user was created successfully. Type exit to return to the host.
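The chown step later hard-codes 1000:1000. That relies on testuser being the first regular account adduser creates inside the chroot. Reading the chroot's own /etc/passwd confirms the user exists and shows the IDs it actually received. Python's `pwd` module would read the host's user database instead, so this hypothetical helper parses the file directly.

```python
from typing import Optional, Tuple

def lookup_user(passwd_path: str, username: str) -> Optional[Tuple[int, int]]:
    """Return (uid, gid) for `username` from a passwd-format file, or None.

    passwd lines look like name:password:uid:gid:gecos:home:shell.
    """
    with open(passwd_path) as f:
        for line in f:
            fields = line.rstrip("\n").split(":")
            if len(fields) >= 4 and fields[0] == username:
                return int(fields[2]), int(fields[3])
    return None
```

On a fresh debootstrap system, `lookup_user("/custom-container/etc/passwd", "testuser")` would typically return `(1000, 1000)`. If it returns something else, use those IDs in the chown command.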

Deploying an Application Inside the Isolated Environment

Now that we have an isolated system with a dedicated user, we install Python and set up a basic web server.

Install Python

sudo chroot /custom-container

apt update

apt install -y python3

exit

Create & Copy a Simple Python Server Script

vim main.py

Paste the following Python code into main.py:

from http.server import HTTPServer, BaseHTTPRequestHandler

class Handler(BaseHTTPRequestHandler):
    # Answer every GET request with a short plain-text message.
    def do_GET(self):
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.end_headers()
        self.wfile.write(b"Isolated Environment Running Setup by Olusegun Adeleke!")

print("Starting server on port 8000...")
# "" binds to every interface in the network namespace, including veth-container.
HTTPServer(("", 8000), Handler).serve_forever()

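Then, copy it into testuser’s home directory inside the environment and give testuser ownership. adduser normally assigns UID and GID 1000 to the first regular user it creates: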

sudo cp main.py /custom-container/home/testuser/

sudo chown 1000:1000 /custom-container/home/testuser/main.py

Running & Testing the Application

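Finally, we start our web server inside the isolated environment and verify that it is reachable.

Keep in mind that the cgroup limits set earlier apply only to processes placed in that cgroup. To apply them to the server, first move your current shell into the cgroup with echo $$ | sudo tee /sys/fs/cgroup/custom-container/cgroup.procs. Everything you launch from that shell afterwards inherits the limits.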

Launch the Server

sudo ip netns exec custom-container unshare --mount --pid --fork chroot /custom-container su - testuser -c "python3 /home/testuser/main.py"
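The launch command nests four wrappers, each adding one layer of isolation:

1. `ip netns exec` enters the network namespace.
2. `unshare --mount --pid --fork` creates new mount and PID namespaces.
3. `chroot` switches the root directory.
4. `su - testuser -c` drops to the unprivileged user.

The sketch below builds the same argument vector as a Python list so each layer is explicit. `build_launch_cmd` is a hypothetical helper, and actually running the result requires root.

```python
from typing import List

def build_launch_cmd(netns: str, root: str, user: str, script: str) -> List[str]:
    """Assemble the nested launch command from the outermost layer inward."""
    return (
        ["ip", "netns", "exec", netns]                  # 1. private network stack
        + ["unshare", "--mount", "--pid", "--fork"]     # 2. new mount + PID namespaces
        + ["chroot", root]                              # 3. new root directory
        + ["su", "-", user, "-c", f"python3 {script}"]  # 4. unprivileged user
    )
```

Passing the list to `subprocess.run(["sudo", *cmd])` avoids most shell-quoting issues. Only the final string after `-c` is interpreted by a shell, the one su starts for testuser.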

Test the Server from the Host

To test it, open another terminal on the host and run the following command:

curl 192.168.1.2:8000

Expected output:

Isolated Environment Running Setup by Olusegun Adeleke!
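If curl is not available, the same check can be done from Python's standard library. This is a minimal sketch; `fetch` is a hypothetical helper. It deliberately ignores any http_proxy settings, because the veth address is only reachable directly from the host.

```python
import urllib.request

def fetch(url: str = "http://192.168.1.2:8000", timeout: float = 5.0) -> str:
    """GET `url` and return the response body as text, like curl."""
    # An empty ProxyHandler disables environment proxies for this opener.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    with opener.open(url, timeout=timeout) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset)
```

Calling `print(fetch())` from a host terminal while the server runs should print the same message as the curl command.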

To make the app reachable from the internet, you can run nginx on the host and add a proxy_pass directive that forwards requests to http://192.168.1.2:8000.

Conclusion

In this project, we successfully built an isolated environment that mimics containerization—without relying on Docker or Kubernetes. We achieved this using Linux namespaces, cgroups, and chroot, each playing a crucial role in isolation and resource management:

Linux Namespaces: Provided process, filesystem, and network isolation.

PID namespaces ensured processes inside the environment were invisible to the host.

Network namespaces created a separate networking stack for the environment.

User namespaces showed how user IDs inside the environment can be decoupled from the host’s. The application itself ran as the unprivileged testuser.

Cgroups: Capped the CPU, memory, and disk I/O available to processes in the custom-container cgroup, protecting the host system’s performance.

Chroot: Restricted access to the host’s filesystem by setting a new root directory (/custom-container), preventing unauthorized access to critical system files.

Challenge Faced & How It Was Solved

This wasn’t a straightforward journey, and one major challenge stood out: networking. Setting up network namespaces and virtual Ethernet (veth) pairs was tricky, and initially the isolated environment had no network access.

After some research, the correct veth configuration was applied. This is the setup shown above: one end of the pair moved into the namespace, and both ends given addresses on the same subnet and brought up. It enabled proper communication between the host and the isolated environment.

Final Thoughts

By manually setting up an isolated environment, we gained deep insights into how containers work under the hood. Instead of relying on Docker’s abstraction, we explored the core Linux features that make containerization possible. This hands-on approach not only strengthened our understanding of process isolation, resource management, and networking but also revealed the complexities that modern containerization tools simplify for us.
