From Building Blocks to Autonomous Agents: A Simple Guide to LLM Workflows

Vijay Sheru

Based on insights from Anthropic's article "Building Effective Agents", adapted into a simplified, beginner-friendly guide.

Introduction

If you’re building with large language models (LLMs) such as GPT or Claude, you don’t always need to jump straight into building a complex agent. Start simple, and add complexity only when it clearly improves results. This guide explains how to structure an LLM app from the ground up, in a way that’s easy to understand and build.

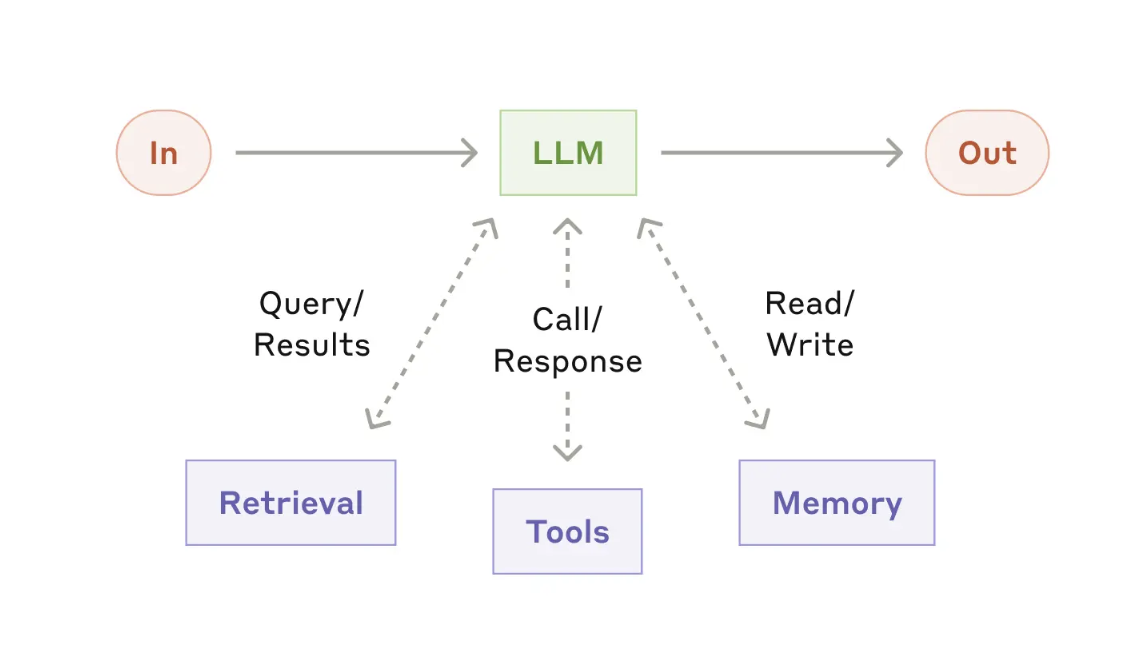

Augmented LLMs

Think of this as your smart assistant. An augmented LLM is just a language model that can:

Look up information (retrieval)

Use tools like calculators or APIs

Remember things (memory)

This makes it way more useful than just a basic chatbot.
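To make the idea concrete, here is a minimal sketch of an augmented LLM that runs offline. `fake_llm`, `retrieve`, `calculator`, and the `DOCUMENTS` data are all hypothetical stand-ins: a real app would call a model API and let the model decide when to search, call a tool, or use its memory.

```python
from dataclasses import dataclass, field

# Hypothetical mini knowledge base used for retrieval.
DOCUMENTS = {
    "refund policy": "Refunds are available within 30 days of purchase.",
    "shipping": "Orders ship within 2 business days.",
}


def retrieve(query: str) -> str:
    """Retrieval: return the first document whose key appears in the query."""
    for key, text in DOCUMENTS.items():
        if key in query.lower():
            return text
    return ""


def calculator(expression: str) -> str:
    """Tool: a deliberately tiny calculator that adds two integers like '2 + 3'."""
    left, right = expression.split("+")
    return str(int(left) + int(right))


def fake_llm(prompt: str) -> str:
    """Hypothetical model stand-in: decides which capability to use."""
    if "+" in prompt:
        return "TOOL:calculator"
    return "RETRIEVE"


@dataclass
class AugmentedLLM:
    # Memory: the running conversation history.
    memory: list[str] = field(default_factory=list)

    def ask(self, question: str) -> str:
        self.memory.append(f"user: {question}")
        decision = fake_llm(question)
        if decision == "TOOL:calculator":
            answer = calculator(question)
        else:
            answer = retrieve(question) or "I don't know."
        self.memory.append(f"assistant: {answer}")
        return answer


assistant = AugmentedLLM()
print(assistant.ask("What is your refund policy?"))  # Refunds are available within 30 days of purchase.
print(assistant.ask("2 + 3"))  # 5
print(len(assistant.memory))  # 4
```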

Common LLM Workflows

Workflows are systems where LLM calls and tools follow predefined steps that you write in code. Let’s look at each one with simple examples.

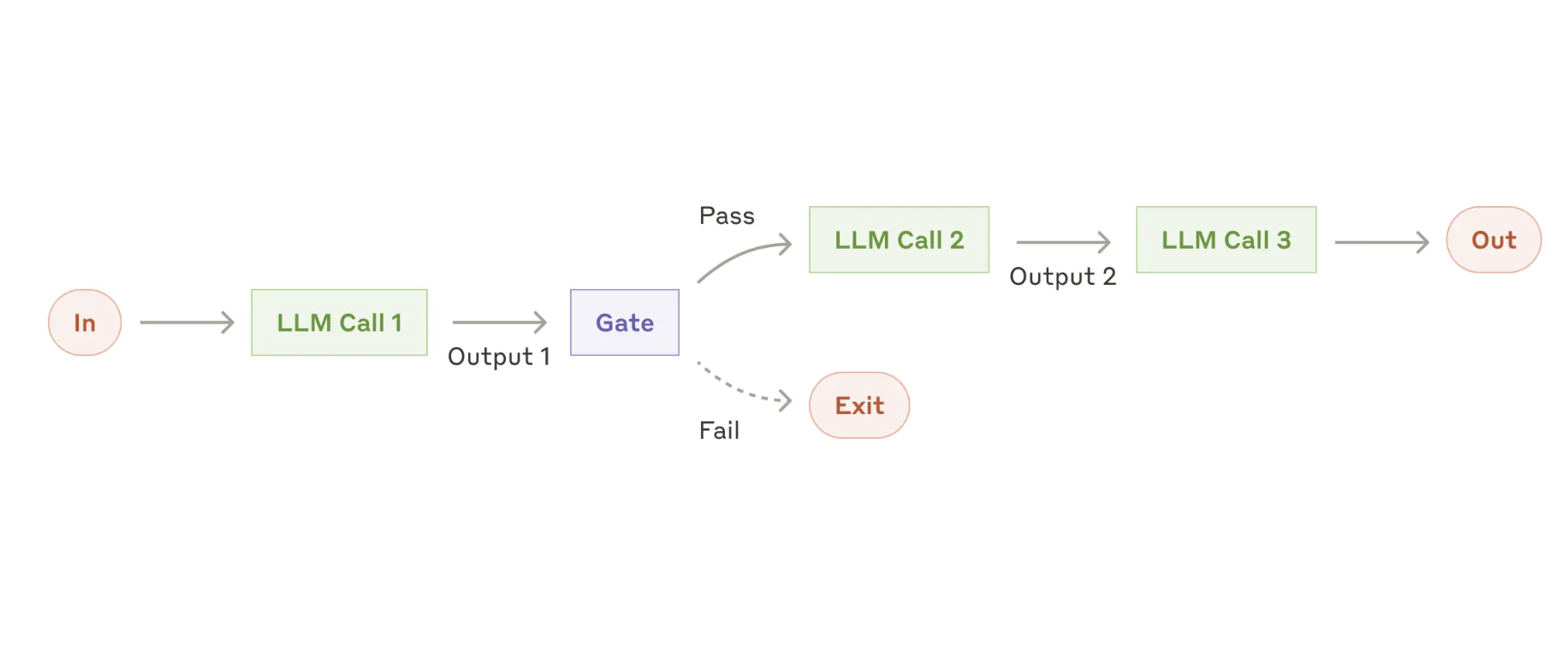

1. Prompt Chaining

What it is: One step at a time. Each step uses the result from the last.

When to use: Your task can be split into clear steps.

Example:

Step 1: Write a blog outline

Step 2: Check if the outline is good

Step 3: Write the full blog post using the outline
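The three steps above can be sketched as a small chain. `call_llm` is a hypothetical stub that returns canned text so the example runs offline. Step 2 here is a plain code check (Anthropic's article calls this kind of checkpoint a "gate"), though it could just as well be another LLM call.

```python
def call_llm(prompt: str) -> str:
    """Hypothetical stub for a model API call; returns canned text."""
    if prompt.startswith("Outline"):
        return "1. Intro\n2. Prompt chaining\n3. Conclusion"
    if prompt.startswith("Write"):
        # Echo the outline back, as if the model expanded it into a post.
        return "Full post based on:\n" + prompt.split("\n", 1)[1]
    raise ValueError("unexpected prompt")


def outline_is_good(outline: str, min_sections: int = 3) -> bool:
    """Step 2 (the gate): require a minimum number of non-empty sections."""
    sections = [line for line in outline.splitlines() if line.strip()]
    return len(sections) >= min_sections


def write_blog(topic: str) -> str:
    # Step 1: ask for an outline.
    outline = call_llm(f"Outline a blog post about {topic}.")
    # Step 2: stop early instead of wasting a call on a bad outline.
    if not outline_is_good(outline):
        raise ValueError("Outline rejected; adjust the prompt or retry.")
    # Step 3: the previous step's output becomes part of the next prompt.
    return call_llm(f"Write the full post from this outline:\n{outline}")


print(write_blog("LLM workflows"))
```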

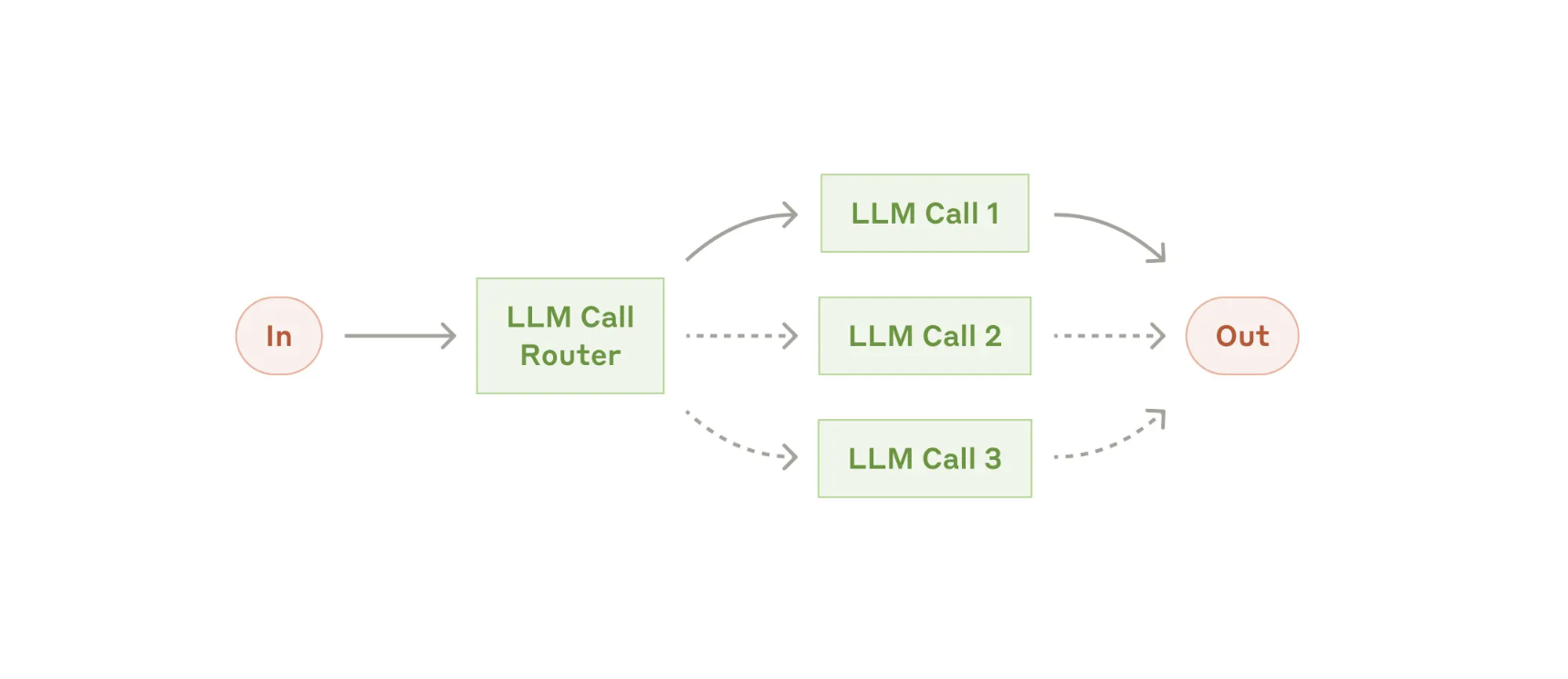

2. Routing

What it is: Choose the right path based on the input.

When to use: Your inputs fall into distinct categories that are better handled separately.

Example:

A customer service bot: if it's a refund question, send it to the refund handler; if it's a tech-support question, send it to the tech-support handler.
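The customer-service example can be sketched as "classify, then dispatch". The keyword-based `classify` function is a hypothetical stand-in for an LLM classification call, and the handler names are illustrative only.

```python
def classify(message: str) -> str:
    """Hypothetical stand-in for an LLM that labels the request type."""
    text = message.lower()
    if "refund" in text or "money back" in text:
        return "refund"
    if "error" in text or "crash" in text or "login" in text:
        return "tech_support"
    return "general"


def handle_refund(message: str) -> str:
    return "Refund handler: let me look up your order."


def handle_tech_support(message: str) -> str:
    return "Tech support: please share the exact error message."


def handle_general(message: str) -> str:
    return "General assistant: how can I help?"


# Each route gets its own handler (in a real app, its own prompt and tools).
ROUTES = {
    "refund": handle_refund,
    "tech_support": handle_tech_support,
    "general": handle_general,
}


def route(message: str) -> str:
    return ROUTES[classify(message)](message)


print(route("I want a refund for my order"))  # Refund handler: let me look up your order.
print(route("The app shows an error when I log in"))  # Tech support: please share the exact error message.
```

Keeping each handler separate means you can tune one route's prompt without affecting the others.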

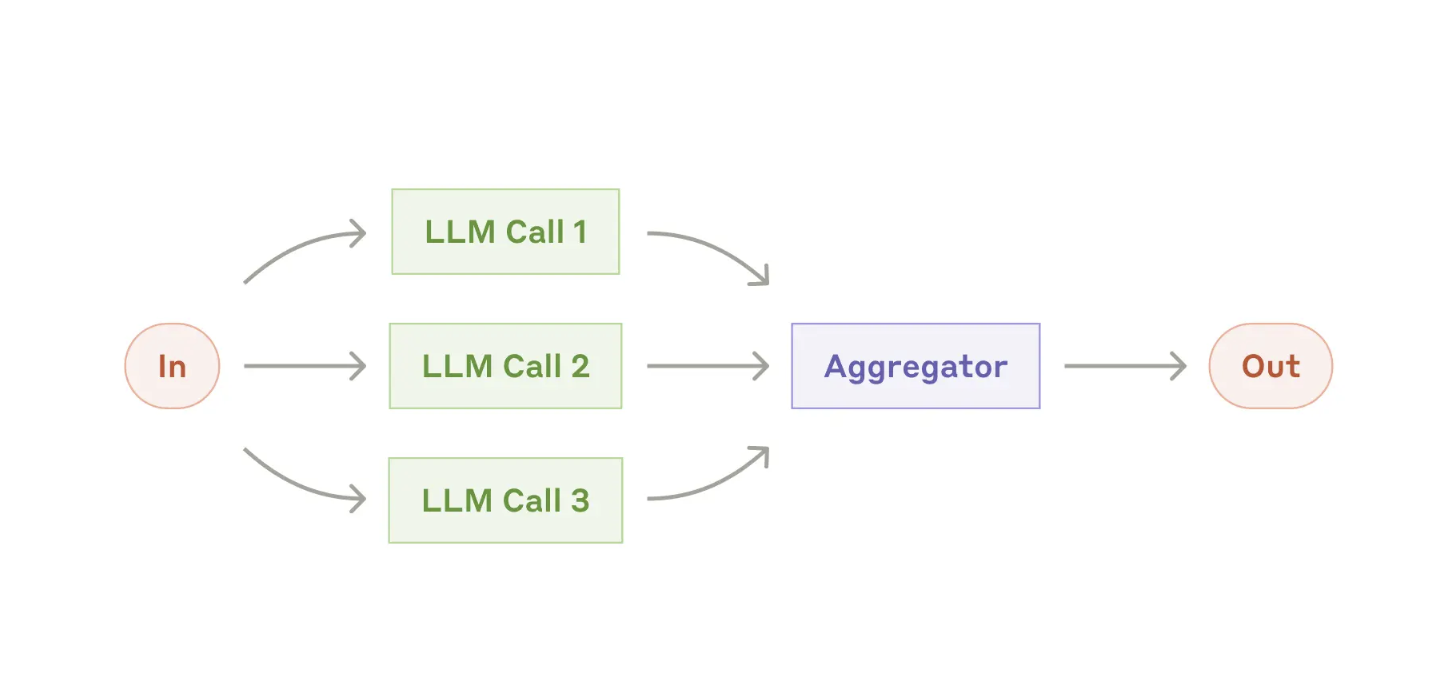

3. Parallelization

What it is: Do many things at the same time, then combine results.

When to use: You want speed (independent subtasks run at once) or multiple opinions for a more confident answer.

Example (Sectioning):

One LLM call checks whether the content is safe

Another writes the actual response at the same time

Example (Voting):

Three LLM calls answer the same question

Combine the answers, for example by majority vote or by picking the best one
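Both variants can be sketched with Python's standard `concurrent.futures` thread pool. The functions `safety_check`, `write_response`, and `answer_once` are hypothetical stubs for separate LLM calls. For voting, this sketch uses a simple majority vote, which is one common way to combine answers.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def safety_check(text: str) -> bool:
    """Hypothetical guardrail LLM call: flags obviously unsafe input."""
    return "attack" not in text.lower()


def write_response(text: str) -> str:
    """Hypothetical LLM call that drafts the actual answer."""
    return f"Here is an answer to: {text}"


def answer_once(question: str, seed: int) -> str:
    """Hypothetical LLM call; disagrees on one seed to mimic varied outputs."""
    return "Paris" if seed != 2 else "Lyon"


def sectioning(user_input: str) -> str:
    # Run the guardrail and the main task at the same time.
    with ThreadPoolExecutor() as pool:
        is_safe = pool.submit(safety_check, user_input)
        response = pool.submit(write_response, user_input)
        if not is_safe.result():
            return "Sorry, I can't help with that."
        return response.result()


def voting(question: str, n: int = 3) -> str:
    # Ask the same question n times in parallel, then keep the majority answer.
    with ThreadPoolExecutor() as pool:
        answers = list(pool.map(lambda s: answer_once(question, s), range(n)))
    return Counter(answers).most_common(1)[0][0]


print(sectioning("How do I reset my password?"))  # Here is an answer to: How do I reset my password?
print(voting("What is the capital of France?"))  # Paris
```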

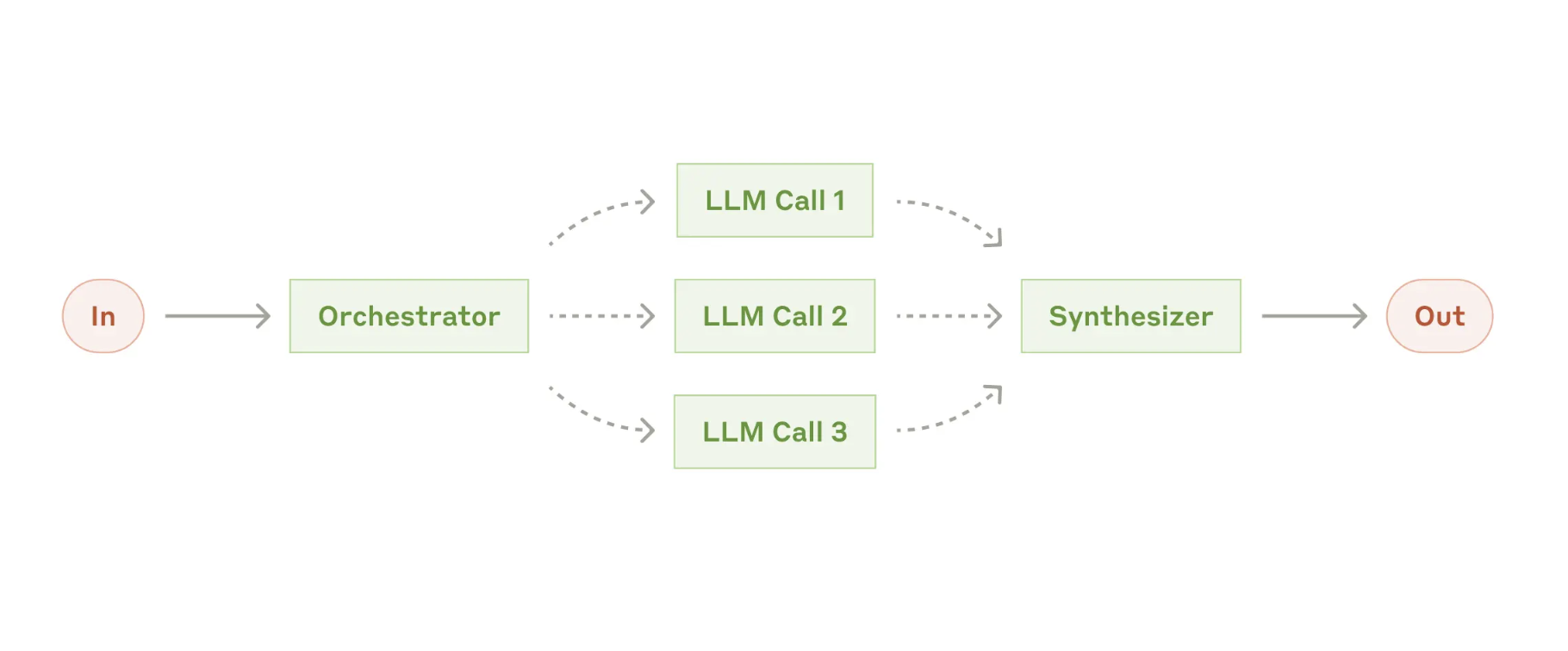

4. Orchestrator-Workers

What it is: A central "orchestrator" LLM breaks the task into subtasks, hands them to "worker" LLMs, and combines their results.

When to use: You don’t know what steps are needed until you see the task.

Example:

The main LLM sees the task: "Fix this bug."

It says: "Edit files A, B, and C."

Each worker edits one file.
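Here is a minimal sketch of that flow. The orchestrator is a hypothetical stub that pulls file names out of the bug report with a regular expression, standing in for an LLM that decides the subtasks after reading the task. `worker_edit` and `synthesize` are likewise illustrative placeholders.

```python
import re
from concurrent.futures import ThreadPoolExecutor


def orchestrator_plan(task: str) -> list[str]:
    """Hypothetical orchestrator: picks the files to change from the task text.
    The subtasks are only known once the task is seen."""
    return re.findall(r"\b\w+\.py\b", task)


def worker_edit(task: str, filename: str) -> str:
    """Hypothetical worker LLM call: edits exactly one file."""
    return f"patched {filename}"


def synthesize(results: list[str]) -> str:
    # The orchestrator combines the workers' outputs into one result.
    return "\n".join(results)


def fix_bug(task: str) -> str:
    subtasks = orchestrator_plan(task)
    # Workers run in parallel; map() keeps results in subtask order.
    with ThreadPoolExecutor() as pool:
        results = list(pool.map(lambda f: worker_edit(task, f), subtasks))
    return synthesize(results)


print(fix_bug("Fix the crash in parser.py and its test in test_parser.py"))
# patched parser.py
# patched test_parser.py
```

The key difference from parallelization is that the list of subtasks is not fixed in advance: a different bug report produces a different plan.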

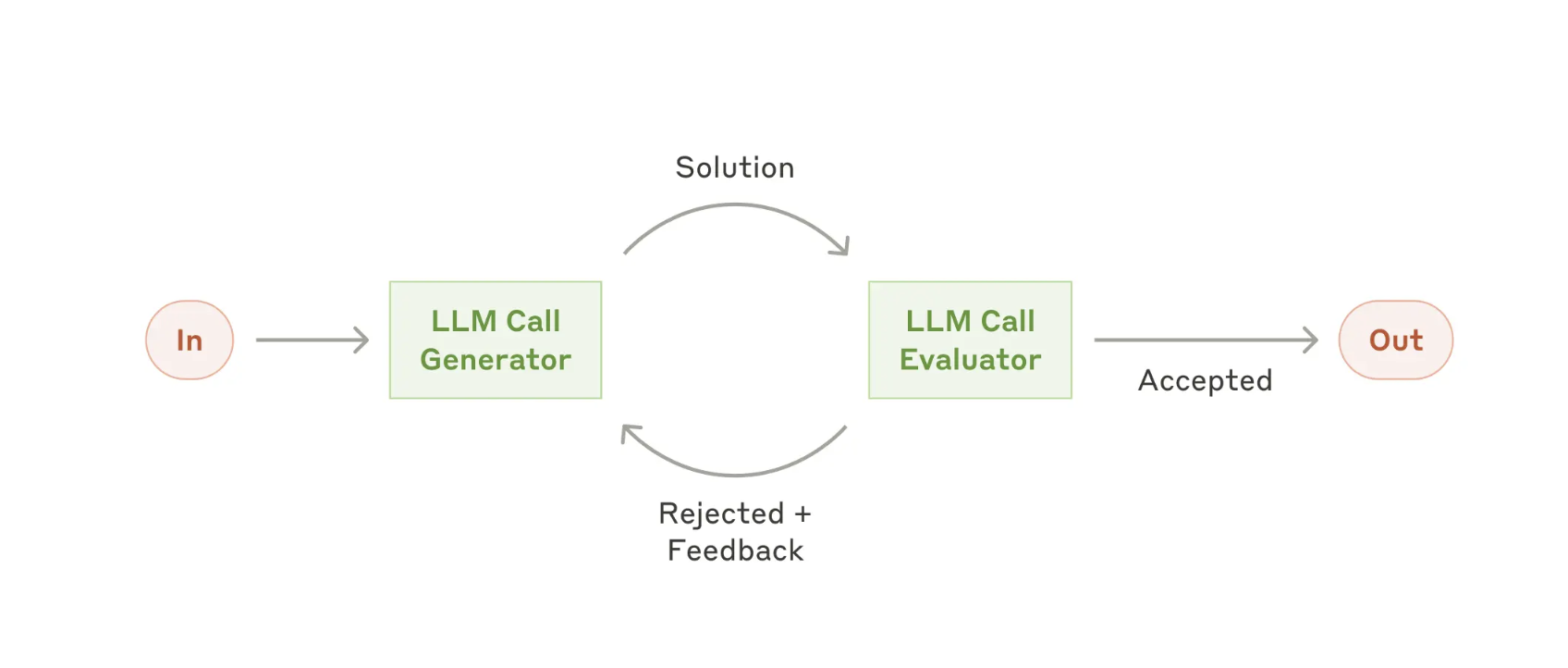

5. Evaluator-Optimizer

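What it is: One LLM writes something, another gives feedback, and the first LLM revises it. The loop repeats until the evaluator is satisfied.

When to use: You have clear evaluation criteria, and iterative feedback measurably improves the result.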

Example:

LLM writes a story

Another LLM says: "The ending is weak"

LLM rewrites the ending
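The story example can be sketched as a loop with a round limit. `generator` and `evaluator` are hypothetical stubs for two LLM calls, and the `max_rounds` cap is an assumption added so the loop always stops.

```python
from typing import Optional


def generator(task: str, feedback: Optional[str]) -> str:
    """Hypothetical writer LLM: produces a draft, improved if given feedback."""
    if feedback is None:
        return "Once upon a time, a robot learned to paint. The end."
    return ("Once upon a time, a robot learned to paint, and its first "
            "portrait was of the child who taught it.")


def evaluator(draft: str) -> tuple[bool, str]:
    """Hypothetical evaluator LLM: returns (accepted, feedback)."""
    if draft.endswith("The end."):
        return False, "The ending is weak"
    return True, "Looks good"


def evaluator_optimizer(task: str, max_rounds: int = 3) -> tuple[str, int]:
    feedback: Optional[str] = None
    draft = ""
    for round_number in range(1, max_rounds + 1):
        draft = generator(task, feedback)
        accepted, feedback = evaluator(draft)
        if accepted:
            return draft, round_number
    # Give up after max_rounds and return the last draft.
    return draft, max_rounds


story, rounds = evaluator_optimizer("Write a short story about a robot")
print(rounds)  # 2
```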

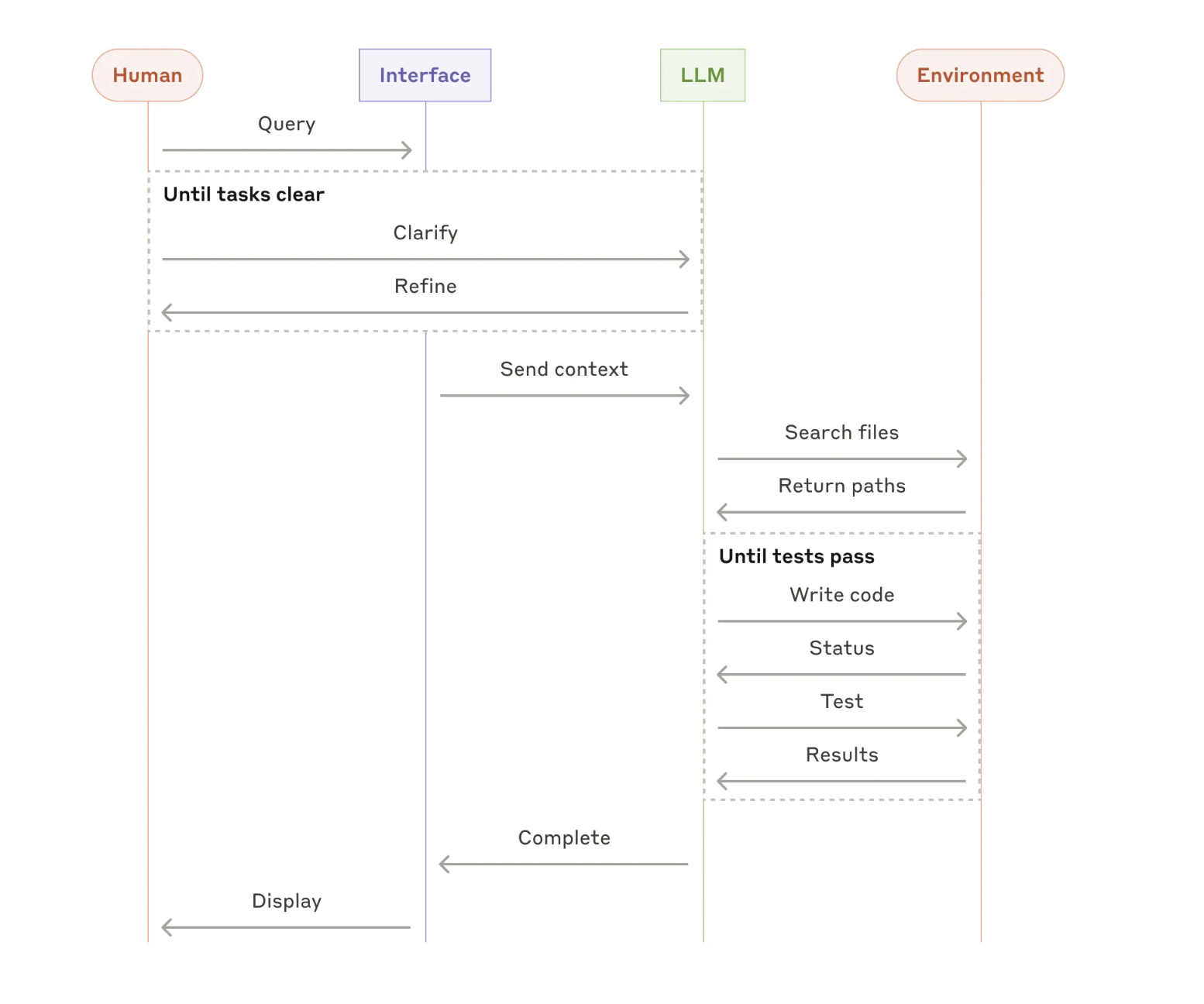

When to Use Agents

An agent is an LLM that runs on its own in a loop. It:

Plans what to do

Chooses the right tool

Changes the plan if something fails

Asks a human for help when it gets stuck
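The behaviour listed above can be sketched as a small loop. Everything here is hypothetical: `choose_tool` stands in for the LLM picking its next action, `flaky_search` simulates a tool that fails, and the step budget is an assumed stopping condition. When a tool fails, the agent records the failure and re-plans. When no options remain, it hands control back to a human.

```python
from typing import Callable, Optional


def flaky_search(query: str) -> str:
    """Hypothetical tool that always fails, to show re-planning."""
    raise TimeoutError("search service unavailable")


def cached_search(query: str) -> str:
    """Hypothetical fallback tool."""
    return f"cached results for {query!r}"


TOOLS: dict[str, Callable[[str], str]] = {
    "web_search": flaky_search,
    "cache_search": cached_search,
}


def choose_tool(goal: str, tools: dict[str, Callable[[str], str]],
                failed: set[str]) -> Optional[str]:
    """Stand-in for the LLM choosing a tool; a real model would reason about `goal`."""
    for name in tools:
        if name not in failed:
            return name
    return None


def run_agent(goal: str, tools: dict[str, Callable[[str], str]] = TOOLS,
              max_steps: int = 5) -> str:
    failed: set[str] = set()
    for _ in range(max_steps):  # step budget as a stopping condition
        tool = choose_tool(goal, tools, failed)
        if tool is None:
            return "ASK_HUMAN: every tool failed, please advise."
        try:
            return tools[tool](goal)  # success ends the loop
        except Exception as err:
            # Feedback from the environment: remember the failure and re-plan.
            print(f"{tool} failed ({err}); trying another approach")
            failed.add(tool)
    return "ASK_HUMAN: step limit reached."


print(run_agent("LLM agents"))  # cached results for 'LLM agents'
```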

Use agents when:

You don’t know how many steps it will take

You want the AI to figure things out on its own

Examples:

Code agents that read an issue and fix the right files

A research assistant that browses, reads, and summarizes

Tools & Frameworks

You can build all of this directly in code, but some frameworks can help:

LangGraph (graph-based workflows and agents, from LangChain)

Amazon Bedrock Agents (AWS service)

Rivet and Vellum (visual, GUI-based workflow builders)

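Tip: Start by calling LLM APIs directly, since many of these patterns take only a few lines of code. If you do adopt a framework, make sure you understand what it does under the hood.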

Final Tips

Start with simple LLM prompts

Use chaining or routing for structure

Move to agents only when the task is open-ended and the steps can’t be predicted in advance

Always test and monitor agents before putting them into real-world use

Build smart. Start small. Scale only when you need to.

Written by

Vijay Sheru

A Java developer looking forward to expanding my knowledge of data structures and algorithms and sharing my journey. I am passionate about growing my skills in artificial intelligence and data science. My interest in cloud computing has made me a cloud enthusiast, and I am excited to explore the intersection of AI and the cloud. Beyond my technical skills, my background as a freelance graphic designer has honed my creative problem-solving abilities. I am always eager to take on new challenges and learn new technologies. My ultimate goal is to become an expert in AI and the cloud and to use my skills and knowledge to make an impact on the industry.