MCP Servers Explained: HTTPS Protocol for LLMs

UJJWAL BALAJI

Introduction

The Model Context Protocol (MCP) is an emerging open standard designed to enhance how Large Language Models (LLMs) access and utilise external data. Introduced by Anthropic (the company behind Claude), MCP acts as a universal bridge between AI models and external tools, databases, or APIs, much like how USB provides a standardised way to connect peripherals to computers.

In this blog, we’ll dive deep into:

What MCP Servers are

How they work under the hood

Why they’re a game-changer for AI applications

What is an MCP Server?

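An MCP Server is a lightweight program that exposes data and actions to LLM applications through a standard interface, so a model can pull in structured context in real time. Instead of hard-coding data into prompts or relying only on what the model learned during training, MCP Servers allow AI models to: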

Fetch dynamic data (e.g., weather, stock prices)

Execute tools/functions (e.g., query a database, call an API)

Use pre-defined prompts for better responses

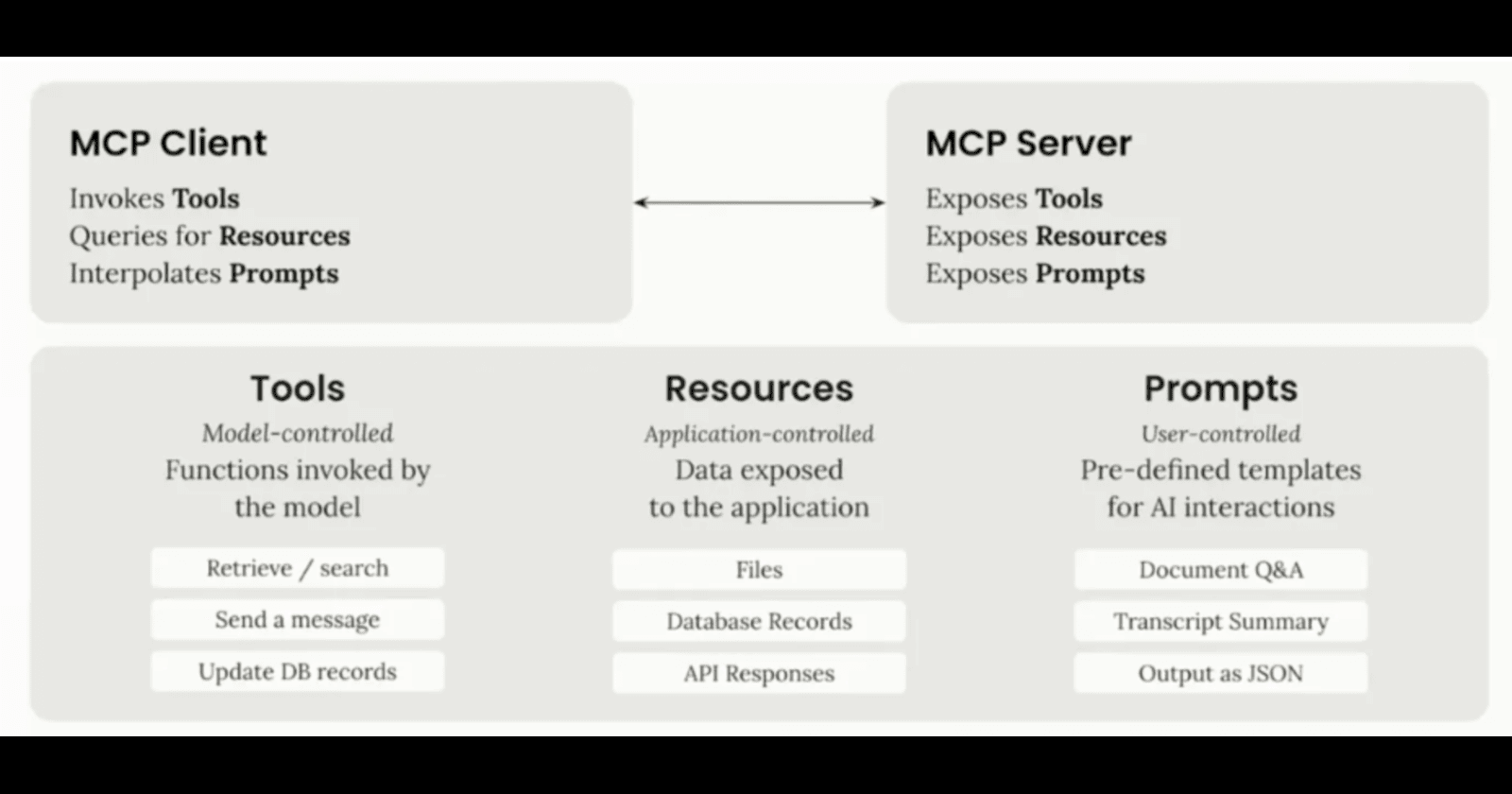

Key Components of MCP

MCP Host – The application (e.g., Cursor IDE, VS Code) that interacts with the LLM.

MCP Client – Lives inside the host and maintains a one-to-one connection with a single MCP server, handling communication between the two.

MCP Server – The backend service that retrieves data or performs actions.

Data Sources – APIs, databases, or files the server accesses.
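The division of labour above can be pictured with a toy, in-memory model. This is not the MCP SDK: the classes ToyServer, ToyClient, and ToyHost are hypothetical, and real clients talk to servers over a transport rather than by direct method calls. The sketch only illustrates the rule that each client is bound to exactly one server, while the host can hold several clients and route a tool call to whichever server offers that tool.

```javascript
// Toy, in-memory model of MCP roles (hypothetical names, not the official SDK).

// MCP Server: exposes named tools backed by some data source.
class ToyServer {
  constructor(name, tools) {
    this.name = name;
    this.tools = tools; // { toolName: async (args) => result }
  }
  listTools() {
    return Object.keys(this.tools);
  }
  async callTool(toolName, args) {
    return this.tools[toolName](args);
  }
}

// MCP Client: bound to exactly one server.
class ToyClient {
  constructor(server) {
    this.server = server;
  }
  listTools() {
    return this.server.listTools();
  }
  callTool(toolName, args) {
    return this.server.callTool(toolName, args);
  }
}

// MCP Host: the application; owns one client per server and routes calls.
class ToyHost {
  constructor(servers) {
    this.clients = servers.map((s) => new ToyClient(s));
  }
  availableTools() {
    return this.clients.flatMap((c) => c.listTools());
  }
  async callTool(toolName, args) {
    const client = this.clients.find((c) => c.listTools().includes(toolName));
    if (!client) throw new Error(`No server provides tool: ${toolName}`);
    return client.callTool(toolName, args);
  }
}

// Usage: two servers behind one host.
const weather = new ToyServer("weather", {
  get_weather_by_city: async ({ city }) => ({ city, temperature: "30°C" }),
});
const notes = new ToyServer("notes", {
  search_notes: async ({ query }) => [`note matching ${query}`],
});
const host = new ToyHost([weather, notes]);
```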

How MCP Servers Work Under the Hood

1. Communication Protocol

MCP Servers primarily use two transport methods:

a) STDIO (Standard Input/Output) Transport

Works via standard streams (stdin/stdout): the host launches the server as a local subprocess and talks to it over its input and output.

Ideal for local development (e.g., running a Python script).

Example Workflow:

The LLM's request is written to the server's stdin → MCP Server processes it → Returns output via stdout.
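On the wire, the MCP specification's stdio transport carries JSON-RPC 2.0 messages, one per line: each message is serialised to JSON and terminated by a newline, and must not contain embedded newlines. The sketch below shows only that framing logic; the helper names encodeMessage and LineDecoder are hypothetical, and the official SDK has its own implementation. The decoder buffers partial chunks because a pipe may deliver half a line at a time.

```javascript
// Sketch of newline-delimited JSON-RPC framing, as used by MCP's stdio transport.
// Helper names are hypothetical, not part of any SDK.

// Serialise one message. JSON.stringify without indentation never emits raw
// newlines (newlines inside strings are escaped), so one message = one line.
function encodeMessage(message) {
  return JSON.stringify(message) + "\n";
}

// Accumulates string chunks and returns every complete message received so far.
// With process.stdin, call process.stdin.setEncoding("utf8") to get strings.
class LineDecoder {
  constructor() {
    this.buffer = "";
  }
  push(chunk) {
    this.buffer += chunk;
    const messages = [];
    let newline;
    while ((newline = this.buffer.indexOf("\n")) !== -1) {
      const line = this.buffer.slice(0, newline).trim();
      this.buffer = this.buffer.slice(newline + 1);
      if (line) messages.push(JSON.parse(line));
    }
    return messages;
  }
}

// Usage: a request split across two chunks is reassembled.
const wire = encodeMessage({ jsonrpc: "2.0", id: 1, method: "tools/list" });
const decoder = new LineDecoder();
const first = decoder.push(wire.slice(0, 10)); // incomplete line, no messages yet
const second = decoder.push(wire.slice(10)); // completes the line
```

Keeping one message per line is what lets a server reserve stdout for protocol traffic; anything else, such as debug logging, belongs on stderr.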

b) Server-Sent Events (SSE) Transport

Uses a Server-Sent Events stream over HTTP for server-to-client messages, plus HTTP POST requests for client-to-server messages.

Allows cloud-hosted MCP Servers (e.g., on AWS, Vercel).

Example Workflow:

The client POSTs the LLM's request over HTTP → MCP Server fetches data → Streams the response back over SSE.
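The server's side of this transport is plain text in the Server-Sent Events format: "field: value" lines, with a blank line ending each event. In the original HTTP+SSE transport, the server first sends an endpoint event naming the URL the client should POST to, then delivers JSON-RPC messages as message events (later revisions of the specification replace this transport with "Streamable HTTP", which still uses SSE for streamed responses). The minimal parser below, with the hypothetical name parseSseEvents, handles only the event and data fields; a real client would normally rely on an SSE library, which also handles reconnection. The endpoint URL in the usage example is an illustrative value.

```javascript
// Minimal Server-Sent Events parser: splits a text stream into { event, data }.
// Illustrative only; handles just the "event" and "data" fields.
function parseSseEvents(text) {
  const events = [];
  // Events are separated by a blank line.
  for (const block of text.split(/\r?\n\r?\n/)) {
    let event = "message"; // SSE default event type
    const dataLines = [];
    for (const line of block.split(/\r?\n/)) {
      if (line.startsWith(":")) continue; // comment line
      const colon = line.indexOf(":");
      if (colon === -1) continue;
      const field = line.slice(0, colon);
      // A single space after the colon is not part of the value.
      let value = line.slice(colon + 1);
      if (value.startsWith(" ")) value = value.slice(1);
      if (field === "event") event = value;
      else if (field === "data") dataLines.push(value);
    }
    if (dataLines.length > 0) events.push({ event, data: dataLines.join("\n") });
  }
  return events;
}

// Usage: an endpoint announcement followed by a JSON-RPC response.
const stream =
  "event: endpoint\ndata: /messages?sessionId=abc123\n\n" +
  'event: message\ndata: {"jsonrpc":"2.0","id":1,"result":{}}\n\n';
const events = parseSseEvents(stream);
```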

2. Handling Requests

When an LLM needs context, it follows this flow:

Request Initiation

The LLM (e.g., Claude in Cursor) detects it needs external data (e.g., "Get weather in Patiala").

Checks whether a matching MCP tool is registered (e.g., get_weather_by_city).

Tool Execution

The MCP Server receives structured input (validated via Zod or similar).

Calls the required function (e.g., queries OpenWeather API).
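The validate-then-call step can be sketched without any library. MCP tools advertise their inputs as a JSON Schema (the TypeScript SDK generates it from Zod); to keep this example dependency-free, the hypothetical validateArgs below checks only two parts of such a schema, required fields and primitive types. The handler is a stub standing in for a real call to a weather API such as OpenWeather.

```javascript
// Dependency-free sketch of "validate, then execute" for a tool call.
// Real servers typically use Zod or a full JSON Schema validator instead.
const tools = {
  get_weather_by_city: {
    inputSchema: {
      type: "object",
      properties: { city: { type: "string" } },
      required: ["city"],
    },
    // Stub handler: a real server would call a weather API here.
    handler: async ({ city }) => ({ city, temperature: "30°C" }),
  },
};

// Checks "required" and primitive "type" (string, number, boolean) only.
function validateArgs(schema, args) {
  const errors = [];
  for (const key of schema.required ?? []) {
    if (!(key in args)) errors.push(`missing required field "${key}"`);
  }
  for (const [key, prop] of Object.entries(schema.properties ?? {})) {
    if (key in args && typeof args[key] !== prop.type) {
      errors.push(`field "${key}" must be of type ${prop.type}`);
    }
  }
  return errors;
}

async function executeTool(name, args) {
  const tool = tools[name];
  if (!tool) throw new Error(`Unknown tool: ${name}`);
  const input = args ?? {};
  const errors = validateArgs(tool.inputSchema, input);
  if (errors.length > 0) throw new Error(errors.join("; "));
  return tool.handler(input);
}
```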

Response Formatting

Returns data in a structured JSON format, for example:

```json
{ "temperature": "30°C", "forecast": "High chance of rain" }
```

The LLM uses this to generate a natural-language response.
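In the MCP specification itself, a tool's output is not returned as a bare object: the server answers the tools/call JSON-RPC request with a result whose content field is an array of typed items (for example a text item), plus an optional isError flag. A common pattern is to serialise the tool's data into a single text item, as in this sketch; the helper name toToolResult is hypothetical, while the field names follow the specification.

```javascript
// Wrap a tool's raw output in an MCP-style tools/call response (JSON-RPC 2.0).
// toToolResult is a hypothetical helper.
function toToolResult(requestId, data, isError = false) {
  return {
    jsonrpc: "2.0",
    id: requestId, // must echo the id of the request being answered
    result: {
      content: [{ type: "text", text: JSON.stringify(data) }],
      isError,
    },
  };
}

// Usage: the request a client sends, and the server's reply.
const request = {
  jsonrpc: "2.0",
  id: 7,
  method: "tools/call",
  params: { name: "get_weather_by_city", arguments: { city: "Patiala" } },
};
const response = toToolResult(request.id, {
  temperature: "30°C",
  forecast: "Rain expected",
});
```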

3. Types of Context MCP Servers Provide

| Type | Description | Example |
|------|-------------|---------|
| Tools | Functions the LLM can call | get_weather, search_database |
| Prompts | Pre-optimised prompt templates | Code-review guidelines |
| Resources | Data from files/APIs | Company docs, CRM records |
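Each row of the table corresponds to its own family of JSON-RPC methods in the MCP specification: tools/list and tools/call, prompts/list and prompts/get, resources/list and resources/read. The sketch below routes most of those methods to in-memory data (tools/call is left out here for brevity). The sample tool, prompt, and resource, including the docs://handbook URI, are made up for illustration, and a real server would also implement the initialize handshake and capability negotiation.

```javascript
// Sketch: route MCP method names to handlers for the three context types.
// All sample data below is illustrative.
const tools = [{
  name: "get_weather",
  description: "Fetches weather for a city.",
  inputSchema: { type: "object", properties: { city: { type: "string" } }, required: ["city"] },
}];
const prompts = [{ name: "code_review", description: "Code-review guidelines" }];
const resources = [{ uri: "docs://handbook", name: "Company handbook", mimeType: "text/plain" }];

const handlers = {
  "tools/list": () => ({ tools }),
  "prompts/list": () => ({ prompts }),
  "prompts/get": ({ name }) => ({
    messages: [{ role: "user", content: { type: "text", text: `Review this code using the ${name} checklist.` } }],
  }),
  "resources/list": () => ({ resources }),
  "resources/read": ({ uri }) => ({
    contents: [{ uri, mimeType: "text/plain", text: "Handbook contents..." }],
  }),
};

// Build a JSON-RPC 2.0 response; -32601 is JSON-RPC's "Method not found" code.
function handleRequest({ id, method, params }) {
  const handler = handlers[method];
  if (!handler) {
    return { jsonrpc: "2.0", id, error: { code: -32601, message: `Method not found: ${method}` } };
  }
  return { jsonrpc: "2.0", id, result: handler(params ?? {}) };
}
```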

Why MCP Servers Matter

1. Efficiency

Avoids overloading LLM context windows by fetching only necessary data.

Reduces API costs (no need to dump entire datasets into prompts).

2. Flexibility

Modular architecture: Swap tools without retraining models.

Enterprise-ready: Companies can host private MCP servers (e.g., Slack, GitHub integrations).

3. Future-Proofing AI Workflows

As AI agents become more autonomous, MCP enables real-time, dynamic decision-making.

Developers can monetize MCP servers (e.g., selling weather, finance, or CRM tools).

Building Your Own MCP Server (Demo Recap)

Step 1: Setup

```bash
pnpm add @modelcontextprotocol/sdk
pnpm add zod
```

Step 2: Define a Tool

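```javascript
// weather-server.js
// Uses ES module imports and top-level await, so package.json needs "type": "module".
import { McpServer } from "@modelcontextprotocol/sdk/server/mcp.js";
import { StdioServerTransport } from "@modelcontextprotocol/sdk/server/stdio.js";
import { z } from "zod";

const server = new McpServer({ name: "weather", version: "1.0.0" });

// Tool: Get weather by city. server.tool expects a plain object of Zod fields
// (not z.object); the SDK turns it into the tool's input schema and validates input.
server.tool(
  "get_weather_by_city",
  "Fetches weather data for a given city.",
  { city: z.string() },
  async ({ city }) => {
    if (city.toLowerCase() === "dwarka") {
      // Hard-coded demo data; a real server would call a weather API here.
      const weather = { temperature: "30°C", forecast: "Rain expected" };
      return { content: [{ type: "text", text: JSON.stringify(weather) }] };
    }
    // isError tells the client the tool ran but could not produce a result.
    return { content: [{ type: "text", text: "City not found" }], isError: true };
  }
);

// Start server (STDIO transport). Log to stderr only: stdout carries protocol messages.
await server.connect(new StdioServerTransport());
```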

Step 3: Integrate with an LLM

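Register the MCP Server with the host. Claude Desktop (in claude_desktop_config.json) and Cursor (in .cursor/mcp.json) both use an mcpServers object in which each entry gives the command that launches the server:

```json
{
  "mcpServers": {
    "weather": {
      "command": "node",
      "args": ["/absolute/path/to/weather-server.js"]
    }
  }
}
```

Now, asking "What's the weather in Dwarka?" lets the model call the get_weather_by_city tool.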

Conclusion

MCP Servers are the next big leap in AI infrastructure, enabling LLMs to interact with the real world dynamically. By standardising how models access external data, they solve critical limitations in context management, cost, and scalability.

Written by

UJJWAL BALAJI

I'm a 2024 graduate from SRM University, Sonepat, Delhi-NCR with a degree in Computer Science and Engineering (CSE), specializing in Artificial Intelligence and Data Science. I'm passionate about applying AI and data-driven techniques to solve real-world problems. Currently, I'm exploring opportunities in AI, NLP, and Machine Learning, while honing my skills through various full stack projects and contributions.