Configuring Fluentd to Collect Data from Azure Event Hub

Ibrahim Kabbash

This guide covers setting up Fluentd to fetch data from Azure Event Hub using the Kafka Fluentd plugin.

Prerequisites

Azure Portal account.

Docker.

Understanding Azure Event Hub

Azure Event Hub is a real-time data streaming service similar to Apache Kafka. It supports the Kafka protocol, allowing Kafka applications to send and receive messages without modification. Like Kafka, it handles high-throughput event ingestion, partitioning, and consumer groups.

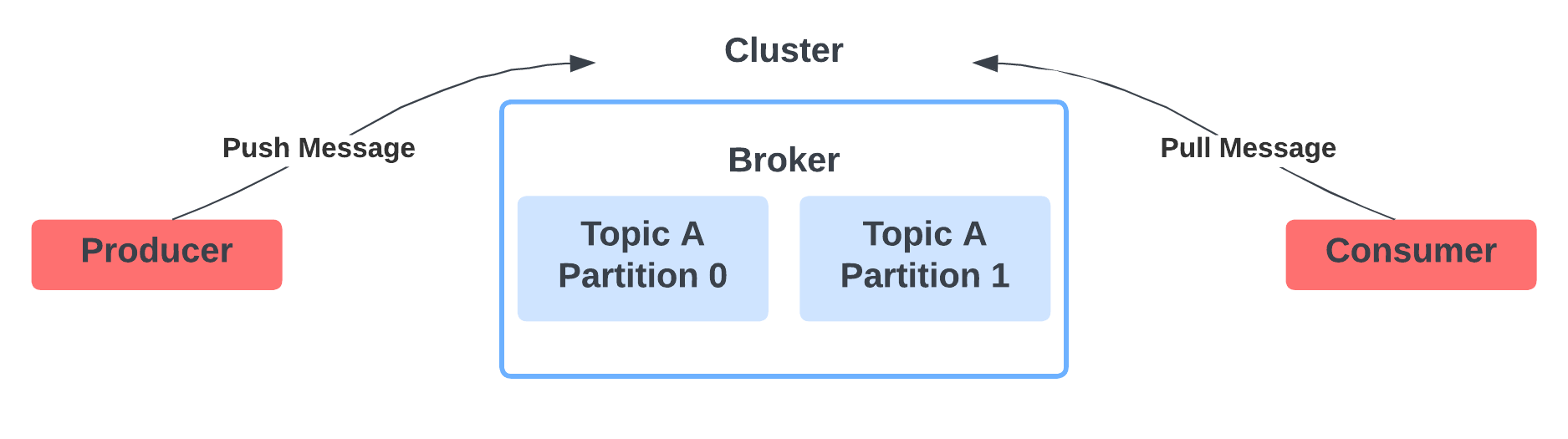

Event streaming platforms like Azure Event Hub and Kafka follow a publish-subscribe model. Producers send messages to a specific topic (or event hub), where they are stored in partitions. Consumers, which are subscribed to the topic, read messages from these partitions, processing them in real-time or batch mode. Each consumer group tracks its offset to ensure messages are processed efficiently without duplication.

TL;DR: Producers publish data to a specific topic; consumers subscribe to that topic and receive the data.
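To make the model concrete, here is a toy in-memory sketch in Python. It is not how Event Hubs or Kafka are implemented: the Topic and ConsumerGroup classes and the CRC32 key hashing are illustrative assumptions, meant only to show partitions, keyed routing, and per-group offsets.

```python
import zlib
from collections import defaultdict


class Topic:
    """A toy topic: a fixed number of append-only partitions."""

    def __init__(self, partitions: int) -> None:
        self.partitions = [[] for _ in range(partitions)]

    def publish(self, key: str, value: str) -> int:
        # Hash the key so the same key always lands in the same partition.
        index = zlib.crc32(key.encode("utf-8")) % len(self.partitions)
        self.partitions[index].append(value)
        return index


class ConsumerGroup:
    """Tracks one offset per partition for this group."""

    def __init__(self, topic: Topic) -> None:
        self.topic = topic
        self.offsets = defaultdict(int)  # partition index -> next offset to read

    def poll(self) -> list[str]:
        messages = []
        for index, partition in enumerate(self.topic.partitions):
            start = self.offsets[index]
            messages.extend(partition[start:])
            self.offsets[index] = len(partition)  # record the new position
        return messages


topic = Topic(partitions=2)
topic.publish("device-1", "temp=21")
topic.publish("device-2", "temp=19")

group = ConsumerGroup(topic)
first_poll = group.poll()   # both messages
second_poll = group.poll()  # nothing new, the offsets have moved forward
```

A second ConsumerGroup created on the same topic starts from its own offsets and reads every message again, which is why separate consumer groups can process the same stream independently.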

The following table maps concepts between Kafka and Event Hubs.

| Kafka Concept | Event Hubs Concept |
| --- | --- |
| Cluster | Namespace |
| Topic | An event hub |
| Partition | Partition |
| Consumer Group | Consumer Group |
| Offset | Offset |

Note that you’ll need at least the Standard tier, because the Basic tier does not support the Kafka endpoint.

What is Fluentd?

Fluentd is an open-source data collector used to unify logging by collecting, transforming, and routing log data across different systems. It can collect data from various sources, such as files, databases, and network protocols, and send it to a similarly wide range of destinations.

This Fluentd config sets up an HTTP input on port 9880 and writes the received events to a file as JSON. Tags route events from a source to the matching output. With the http input, the tag comes from the request path (a POST to /[tag-name] is tagged [tag-name]), so the source block itself takes no tag.

    <source>
      @type http
      port 9880
      bind 0.0.0.0
      body_size_limit 32m
      keepalive_timeout 10s
    </source>

    <match [tag-name]>
      @type file
      path /output/alert-logs
      append true
      format json
    </match>

Fluentd can also parse logs, format them, and route them to more than just files—it can send data to databases, cloud storage, or even message queues, making it great for centralized logging.
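As a rough way to exercise an HTTP input like the one above, the sketch below builds a JSON POST using only Python's standard library. The host, the alert.test tag, and the record are made-up values for illustration; the tag in the URL path has to match the pattern in your match block. Sending is left commented out so the snippet runs without a Fluentd instance.

```python
import json
import urllib.request


def build_event_request(host: str, port: int, tag: str, record: dict) -> urllib.request.Request:
    """Build a POST for Fluentd's http input; the URL path becomes the event tag."""
    return urllib.request.Request(
        url=f"http://{host}:{port}/{tag}",
        data=json.dumps(record).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_event(request: urllib.request.Request, timeout: float = 5.0) -> int:
    """Send the request and return the HTTP status code."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status


# Hypothetical tag and record, for illustration only.
request = build_event_request("localhost", 9880, "alert.test", {"message": "hello"})
# send_event(request)  # uncomment once Fluentd is listening on port 9880
```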

We’ll apply the same concept as the config above, this time with the Kafka input plugin as the source.

Setting Up Fluentd for Azure Event Hub

Creating Event Hub

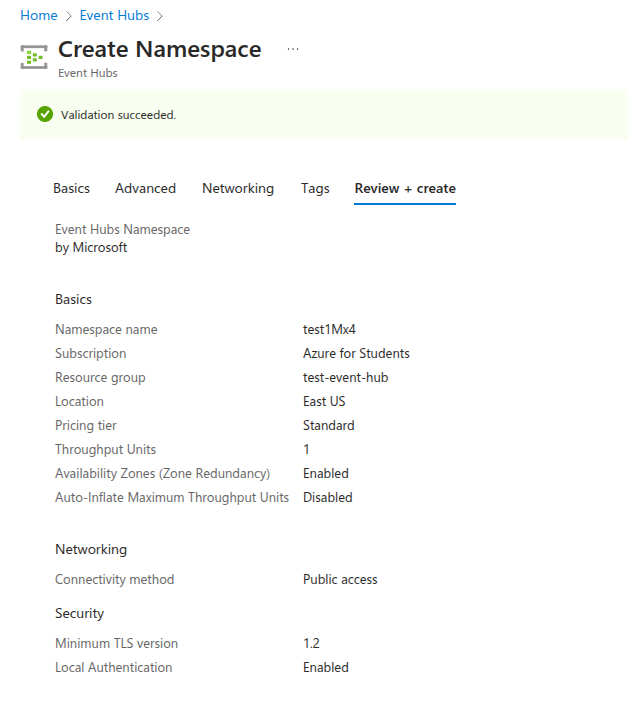

Go to your Azure portal and search for Event Hubs then click on “Create event hubs namespace.”

Pick or create a resource group.

Pick a unique namespace name.

Choose the Standard pricing tier.

Keep everything else default and click on “Review + create.”

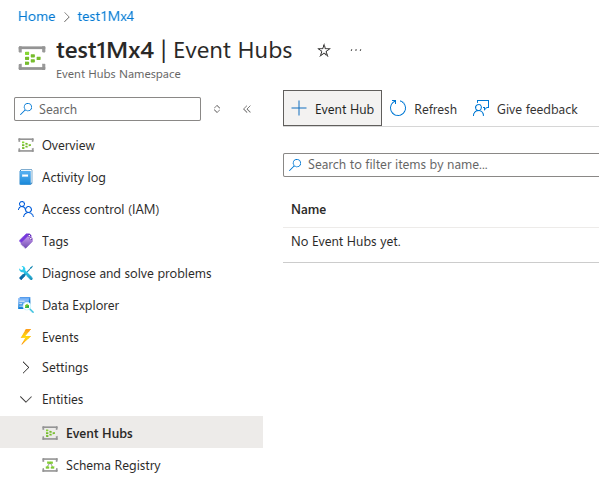

Go to the event hub namespace resource you created.

On the sidebar, click on “Event Hubs” under entities and create an Event Hub with a name (I named mine test-hub).

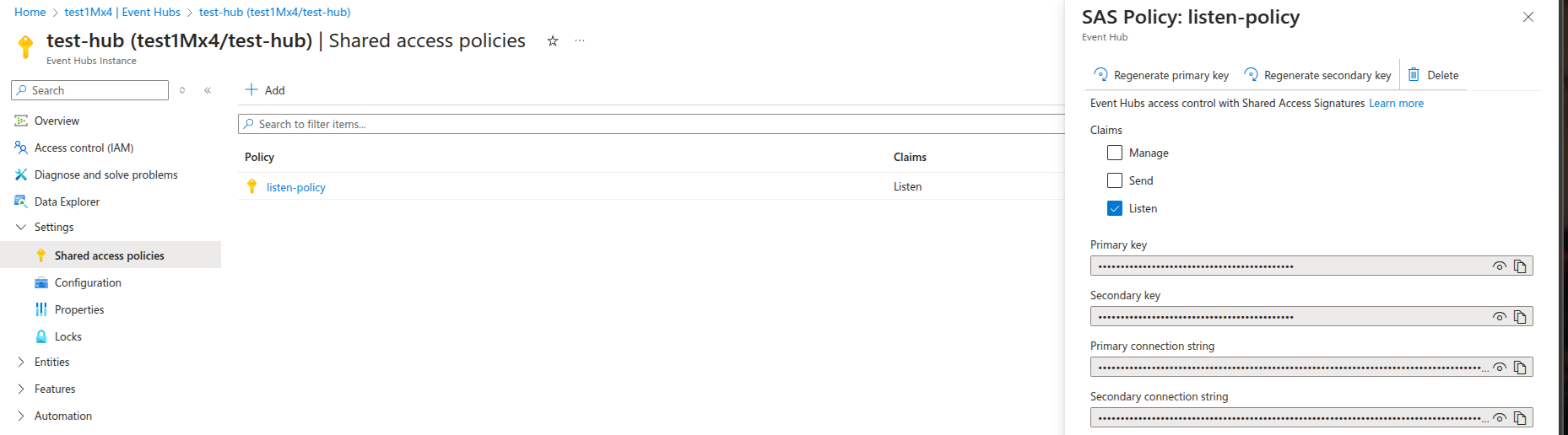

Select the event hub you just created.

On the sidebar, click on “Shared access policies” under settings and add a new policy named listen-policy with listen access. After creation, copy one of the connection strings and keep it somewhere for later steps.

- Be sure you’ve created the shared access policy in the event hub itself, not the event hub namespace.
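A quick way to sanity-check the copied connection string is to split it into its fields. The sketch below is a minimal parser, not an official Azure tool; the function names and the sample key are made up for illustration. It relies on the fact that a policy created on the event hub itself yields a connection string with an EntityPath field, while a namespace-level one has none.

```python
from urllib.parse import urlparse


def parse_connection_string(connection_string: str) -> dict[str, str]:
    """Split 'Key=Value;Key=Value' pairs into a dict."""
    fields = {}
    for segment in connection_string.strip().split(";"):
        if not segment:
            continue
        # Split on the first '=' only: base64 keys often end with '='.
        key, _, value = segment.partition("=")
        fields[key] = value
    return fields


def kafka_broker(fields: dict[str, str]) -> str:
    """Derive the Kafka endpoint (port 9093) from the Endpoint field."""
    host = urlparse(fields["Endpoint"]).hostname
    return f"{host}:9093"


def is_hub_scoped(fields: dict[str, str]) -> bool:
    """Hub-level connection strings carry an EntityPath field."""
    return "EntityPath" in fields


# Sample value with a fake key, for illustration only.
sample = (
    "Endpoint=sb://test1mx4.servicebus.windows.net/;"
    "SharedAccessKeyName=listen-policy;"
    "SharedAccessKey=not-a-real-key=;"
    "EntityPath=test-hub"
)
fields = parse_connection_string(sample)
```

If is_hub_scoped(fields) returns False, the policy was most likely created on the namespace rather than on the event hub.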

Fluentd Docker Compose Setup

You can use these files from my tutorials repo or create them as follows:

Dockerfile for Fluentd, installing the Kafka plugin.

    FROM fluent/fluentd:v1.17-debian-1

    # Install Kafka plugin
    USER root
    RUN fluent-gem install fluent-plugin-kafka
    USER fluent

Fluentd docker-compose.yaml file.

    services:
      fluentd:
        container_name: fluentd
        hostname: fluentd
        build: .
        volumes:
          - ./fluentd.conf:/fluentd/etc/fluentd.conf:ro
          - ./logs:/var/log/fluentd

Create the logs directory and change its ownership to Fluentd’s container UID.

    mkdir logs && sudo chown 999:999 logs

Using the Kafka Fluentd Plugin

The plugin can both consume and produce data. In our case, we’ll configure it to consume data from Event Hub, authenticating with Event Hub’s Shared Access Signatures (SAS), which provide delegated access to Event Hubs for Kafka resources.

Use the fluentd.conf below and update it with your Event Hub’s name, Event Hub namespace name, and connection string. In this article, the hub name is test-hub and the namespace is test1Mx4 (so the broker host is test1Mx4.servicebus.windows.net); use the connection string you copied earlier.

    <source>
      @type kafka
      brokers [EVENT_HUB_NAMESPACE].servicebus.windows.net:9093
      topics [HUB_NAME]
      username $ConnectionString
      password [EVENT_HUB_CONNECTION_STRING]
      ssl_ca_certs_from_system true
      format json
    </source>

    <match [HUB_NAME].**>
      @type file
      path /var/log/fluentd/
      append true
      <format>
        @type json
      </format>
    </match>

It should look like this after updating the fluentd.conf file.

    <source>
      @type kafka
      brokers test1Mx4.servicebus.windows.net:9093
      topics test-hub
      username $ConnectionString
      password Endpoint=sb://test1mx4.servicebus.windows.net/;SharedAccessKeyName=listen-policy;SharedAccessKey=<secret-key>=;EntityPath=test-hub
      ssl_ca_certs_from_system true
      format json
    </source>

    <match test-hub.**>
      @type file
      path /var/log/fluentd/
      append true
      <format>
        @type json
      </format>
    </match>
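Filling in the placeholders by hand is easy to get wrong, so one option is to render the file from a template. The sketch below uses Python's string.Template. The render_fluentd_conf function and the EVENT_HUB_CONNECTION_STRING environment variable are assumptions for illustration, not part of Fluentd or the Kafka plugin. Note the doubled dollar sign, which keeps the literal $ConnectionString username intact.

```python
import os
from string import Template

FLUENTD_CONF = Template("""\
<source>
  @type kafka
  brokers ${namespace}.servicebus.windows.net:9093
  topics ${hub_name}
  username $$ConnectionString
  password ${connection_string}
  ssl_ca_certs_from_system true
  format json
</source>

<match ${hub_name}.**>
  @type file
  path /var/log/fluentd/
  append true
  <format>
    @type json
  </format>
</match>
""")


def render_fluentd_conf(namespace: str, hub_name: str, connection_string: str) -> str:
    """Fill the template with the namespace, hub name, and connection string."""
    return FLUENTD_CONF.substitute(
        namespace=namespace,
        hub_name=hub_name,
        connection_string=connection_string,
    )


# Hypothetical environment variable; falls back to the placeholder if unset.
secret = os.environ.get("EVENT_HUB_CONNECTION_STRING", "[EVENT_HUB_CONNECTION_STRING]")
conf_text = render_fluentd_conf("test1Mx4", "test-hub", secret)
```

Writing conf_text to fluentd.conf keeps the secret out of anything you commit, as long as the rendered file itself is excluded from version control.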

Testing and Verifying Data Collection

After setting up the files, run docker compose up --build and confirm that the container is running.

    CONTAINER ID   IMAGE                            COMMAND                  CREATED              STATUS              PORTS                 NAMES
    73654ee6e84b   fluentd-azure-eventhub-fluentd   "tini -- /bin/entryp…"   About a minute ago   Up About a minute   5140/tcp, 24224/tcp   fluentd

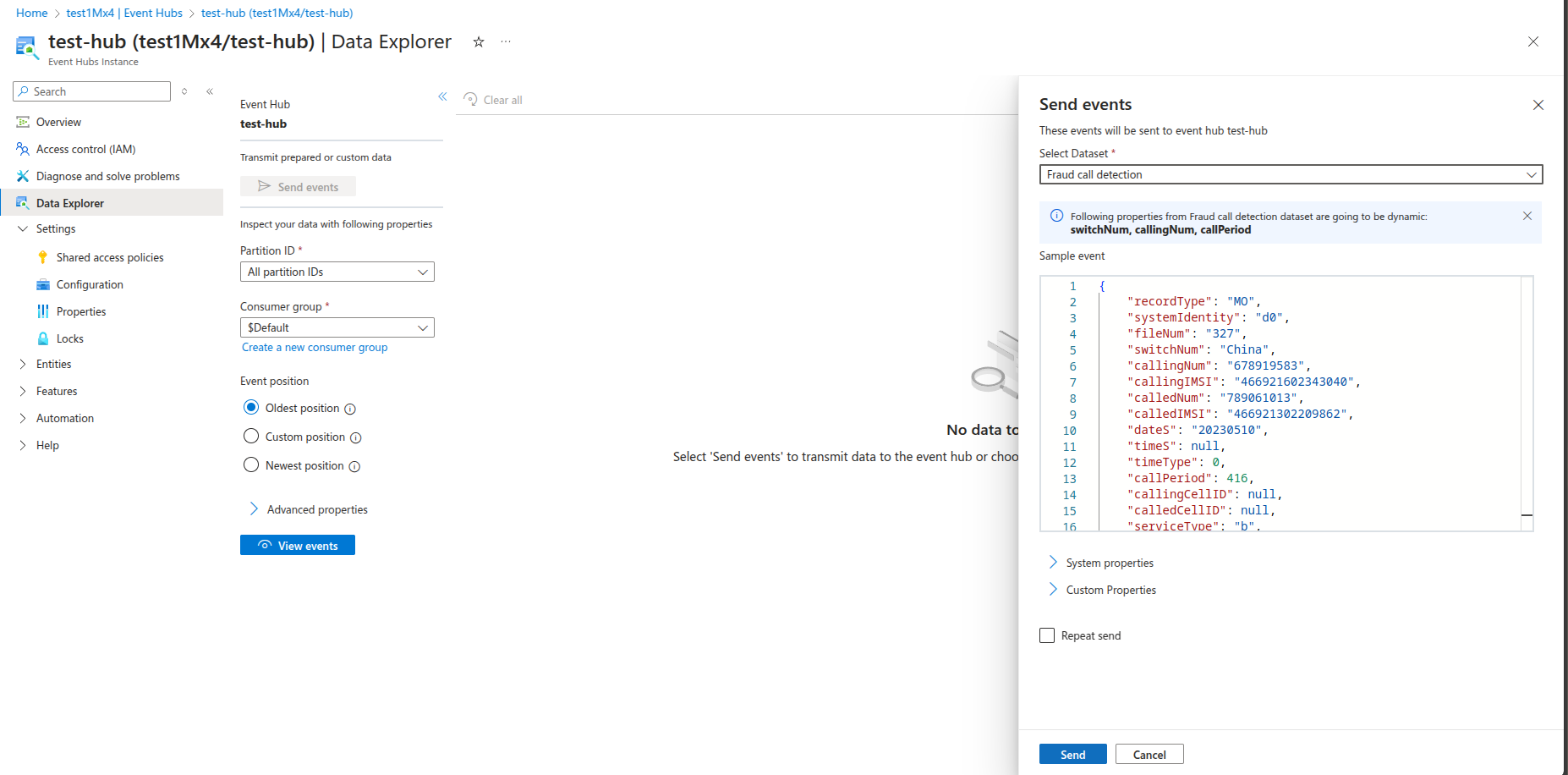

To test, open your Event Hub’s Data Explorer from the sidebar and click "Send Events." Choose a pre-canned dataset as an example, then click "Send" to produce it to the hub for Fluentd to consume.

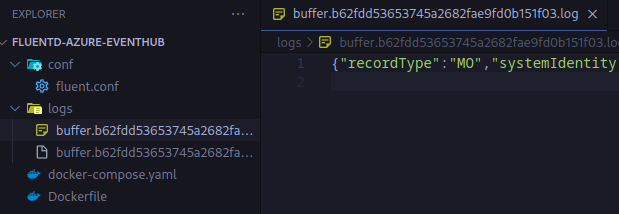

After the data is sent to Event Hub, check your Fluentd logs directory. Fluentd should have created buffer files there containing the events it consumed from Event Hub.
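To inspect what was collected without opening each file, a small script can read the JSON lines back. This is a sketch under assumptions: it expects the output and buffer files to end in .log and to hold one JSON object per line, which you should confirm against your own logs directory. The read_events name is made up for illustration.

```python
import json
from pathlib import Path


def read_events(log_dir: str) -> list[dict]:
    """Parse every JSON line found in *.log files under log_dir."""
    root = Path(log_dir)
    if not root.is_dir():
        return []
    events = []
    for path in sorted(root.rglob("*.log")):
        # errors="replace" keeps going if a file contains non-UTF-8 bytes.
        text = path.read_text(encoding="utf-8", errors="replace")
        for line in text.splitlines():
            line = line.strip()
            if not line:
                continue
            try:
                record = json.loads(line)
            except json.JSONDecodeError:
                continue  # skip partial or non-JSON lines
            if isinstance(record, dict):
                events.append(record)
    return events


events = read_events("./logs")
print(f"Parsed {len(events)} events")
```

Because the logs directory is owned by the container's UID, you may need elevated permissions to read it from the host.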

Conclusion

In this guide, we set up Fluentd to fetch data from Azure Event Hub using the Kafka Fluentd plugin. We covered the basics of Azure Event Hub, configured Fluentd with Docker, and verified data collection by producing events and checking Fluentd’s log directory for buffered data.

With this setup, you can integrate Fluentd into your logging pipeline to collect, process, and route event data efficiently. For example, you can create a playbook in Azure Sentinel to export incident data to Event Hub and Fluentd will act as a log shipper for other destinations. You could also apply this approach to Azure Log Analytics, exporting data to Event Hub for Fluentd to forward to other destinations.
