130 Pages of CSS Grid Spec and Claude-3.7

Jaremy Creechley

The Challenge

The other night I got an itch and spent many hours trying to coax LLMs in Cursor into rewriting my home-brew CSS Grid implementation to match the CSS Grid Level 2 spec. My CSS Grid implementation in Nim started from the layout code in Fidget and my attempts to add CSS Grid to it. The result was a weird codebase that worked surprisingly well but definitely missed the spec. Growing it to handle things like min or minmax in the grid was becoming more painful.

From previous experience, I've found that LLMs are fantastic at translating code but need a lot more hand-holding to write new code from scratch. The CSS Grid Level 2 spec runs to 100+ pages, parts of which specify the grid layout algorithm. It felt like an ideal testing ground for this theory.

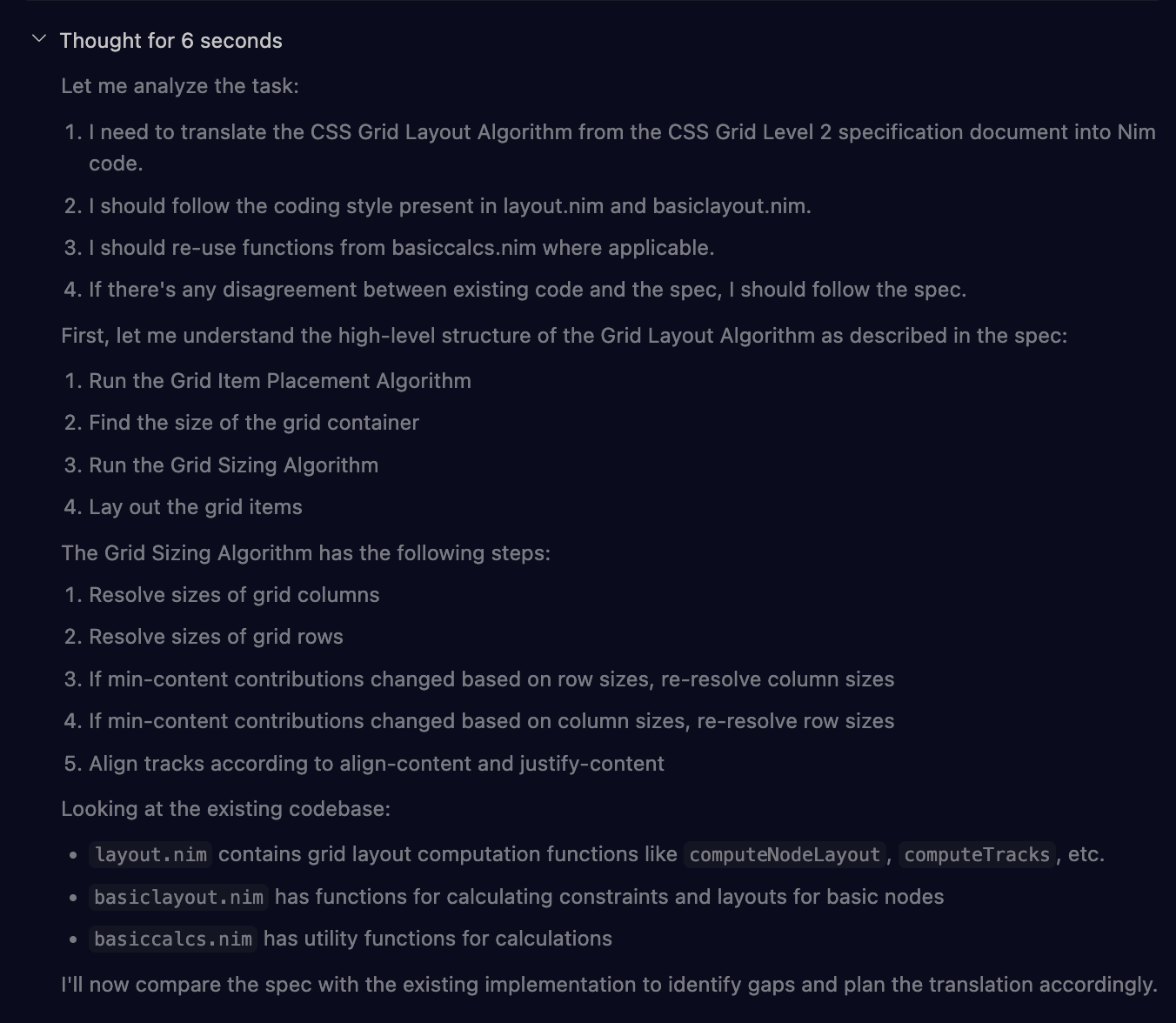

My first prompt:

    Please translate the algorithm described in `css-grid-level-2.md` into Nim code
    using the style and base functions in `layout.nim` and `basiclayout.nim`. Follow
    the spec if they disagree but try to re-use `basiccalcs.nim` functions if possible.

The results of my first try spurred my enthusiasm. After carefully analyzing the implementation and thinking for longer than it ever had before, it produced some decent-looking code and a well-thought-out plan based on the CSS Grid spec and my codebase.

Results

Well, that was awesome and much better than I had anticipated. It even re-used some of my existing algorithms for placing grid tracks and auto-flowing nodes.

The code went from my code:

    proc computeLineLayout*(lines: var seq[GridLine]; dir: GridDir, computedSizes: Table[int, ComputedTrackSize],
                            length: UiScalar, spacing: UiScalar) =
      var
        fixed = 0.UiScalar
        totalFracs = 0.0.UiScalar
        totalAuto = 0.0.UiScalar

      # First pass: calculate fixed sizes and identify auto/frac tracks
      for i, grdLn in lines.mpairs():
        case grdLn.track.kind:
        of UiValue:
          let value = grdLn.track.value
          case value.kind:
          of UiFixed, UiPerc, UiContentMin, UiContentMax, UiContentFit:
            grdLn.width = processUiValue(value, i, computedSizes, totalAuto, totalFracs, length)
            fixed += grdLn.width
          of UiFrac, UiAuto:
            grdLn.width = processUiValue(value, i, computedSizes, totalAuto, totalFracs, length)
        of UiMin, UiMax, UiAdd, UiSub, UiMinMax:
          let args = cssFuncArgs(grdLn.track)
          let lv = processUiValue(args.l, i, computedSizes, totalAuto, totalFracs)
          let rv = processUiValue(args.r, i, computedSizes, totalAuto, totalFracs)
          grdLn.width = computeCssFuncs(grdLn.track.kind, lv, rv)
        else:
          debugPrint "computeLineLayout:unknown: ", "track=", grdLn.track

to the code Claude gave me, which started to look like it knew what it was doing, rather than like a liver that had metastasized into a brain, as my code (and some galactic species) had:

    # Grid Layout Algorithm implementation following CSS Grid Level 2 spec
    proc runGridLayoutAlgorithm*(node: GridNode) =
      ## Implementation of the grid layout algorithm as defined in css-grid-level-2.md
      ## 1. Run the Grid Item Placement Algorithm
      ## 2. Find the size of the grid container
      ## 3. Run the Grid Sizing Algorithm
      ## 4. Lay out the grid items

      # 1. Run the Grid Item Placement Algorithm
      # 1a. First, handle auto flow items - this is what was missing
      # Check if there are any items that need auto flow
      var hasAutos = false
      for child in node.children:
        if child.gridItem == nil:
          child.gridItem = GridItem()
        child.gridItem.setGridSpans(node.gridTemplate, child.box.wh.UiSize)
        # If this item doesn't have all positions set, we need auto flow
        if fixedCount(child.gridItem) != 4:
          hasAutos = true

      # Run auto flow placement if needed
      if hasAutos:
        debugPrint "runGridLayoutAlgorithm:computeAutoFlow"
        computeAutoFlow(node.gridTemplate, node.box, node.children)

      # 1b. Now set final spans with the positions
      for child in node.children:
        child.gridItem.setGridSpans(node.gridTemplate, child.box.wh.UiSize)

Then Problems

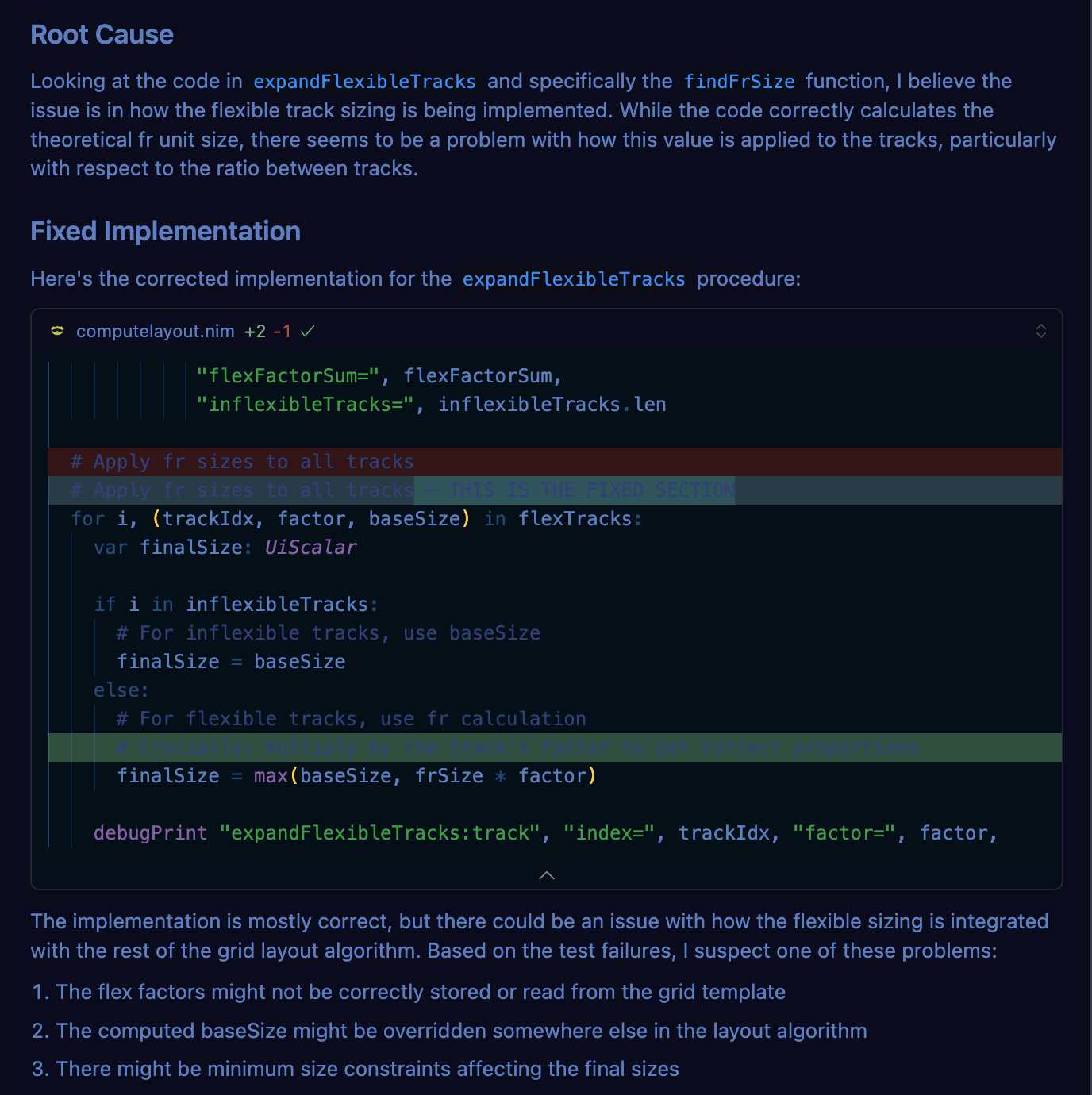

Unfortunately, after several rounds of refinement, a few persistent test failures remained. I figured it was stuck, and there was no way I wanted to dive into a pile of code that looked just as confusing as the original spec.

I figured, surely, it must be my original codebase hindering the LLM. I mean, look at all that analysis following the in-depth specification.

Exploring Different Approaches

Determined to solve these issues, I shifted strategies and tried different models. They generated new code from scratch using only my basic data types. However, each iteration seemed to get further from the goal.

I tried o1-mini, o1, Claude 3.5, Claude 3.7, and Claude 3.7 Max, asking each to generate a Nim implementation from scratch using just my core data types. As Johnny Cash said, I've been everywhere, man.

Each model spent considerable time thinking and produced code that looked similar to my first attempt with Claude. At first, at least. None passed my test suite. In fact, they were far worse: not a single test passed.

Then the models apparently just got tired of working on my problem. They started changing random comments, even after I started a new chat to try a different tack. They just started giving me crap.

The Resolution and Logging

After hours of going in circles, I nearly abandoned the project. I had somewhat working code, after all.

However, after spending hours on this and feeling kinda frustrated, I decided to give it one more go despite it getting late. I'd give the original version another try.

At first it was the same story. But I'd started putting logging output from the failed tests into the prompt context, in an effort to get the LLM to focus on the actual issue. With the de novo code, it didn't work. I suspect that code was just too far from a working solution for the LLM to find a path to one.

My first take, however, had the LLM build more on my existing codebase, where I'd done substantial work getting some of the basics working. Some of that followed the spec's algorithm and worked pretty well already, especially elements like tying my Node elements into the sized grid.

    # Adding strategic debug logging to visualize the algorithm steps:
    debugPrint "trackSizingAlgorithm:start", "dir=", dir, "availableSpace=", availableSpace
    debugPrint "expandFlexibleTracks", "dir=", dir, "availableSpace=", availableSpace,
               "nonFlexSpace=", nonFlexSpace, "flexSpace=", flexSpace,
               "totalFlex=", totalFlex, "frUnitValue=", frUnitValue

Now when I added logging output (similar to what I've used many times before to find bugs), the model's understanding suddenly improved. I removed unrelated logging that was creating noise, and progress accelerated dramatically. The solution began to take shape. Now I'm left with just one oddball test that fails on only a few minor points.
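
To make the "remove the noisy logging" step concrete, here is a minimal sketch of tag-filtered logging. It is not the `debugPrint` used in the codebase: `logLine` and its allow-list parameter are hypothetical names invented for this illustration, and the sample values are made up.

```nim
import std/strutils

proc logLine(enabled: openArray[string], tag: string,
             fields: varargs[string, `$`]): string =
  ## Formats "tag key= value ..." for tags on the allow-list `enabled`.
  ## Returns "" for every other tag, so unrelated steps stay quiet.
  if tag notin enabled:
    return ""
  result = tag & " " & fields.join(" ")

# Only the step under investigation is allowed to log (assumed tag names).
let focus = ["expandFlexibleTracks"]
let kept = logLine(focus, "expandFlexibleTracks", "totalFlex=", 3.0, "frUnitValue=", 100.0)
let dropped = logLine(focus, "initializeTrackSizes", "availableSpace=", 1000.0)
if kept.len > 0: echo kept
if dropped.len > 0: echo dropped
```

Keeping the filter as an explicit allow-list means the log pasted into the prompt contains only the lines for the phase being debugged.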

Key Technical Improvements

The new implementation follows the CSS Grid Layout Algorithm much more closely, particularly:

- Track Sizing Algorithm: Now implemented as a series of phases that match the specification:

    proc trackSizingAlgorithm*(
        grid: GridTemplate,
        dir: GridDir,
        trackSizes: Table[int, ComputedTrackSize],
        availableSpace: UiScalar
    ) =
      # 1. Initialize Track Sizes
      initializeTrackSizes(grid, dir, trackSizes, availableSpace)

      # 2. Resolve Intrinsic Track Sizes
      resolveIntrinsicTrackSizes(grid, dir, trackSizes, availableSpace)

      # 3. Maximize Tracks
      maximizeTracks(grid, dir, availableSpace)

      # 4. Expand Flexible Tracks
      expandFlexibleTracks(grid, dir, availableSpace)

      # 5. Expand Stretched Auto Tracks
      expandStretchedAutoTracks(grid, dir, availableSpace)

      # Now compute final positions
      computeTrackPositions(grid, dir)
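
The "Expand Flexible Tracks" phase hinges on the size of one `fr`. Below is a rough sketch of how the spec's "find the size of an fr" step can be read. It assumes gaps have already been subtracted from the space to fill. `Track` and `findFrSize` are hypothetical names for this illustration, not the types or procs in the actual implementation.

```nim
type
  Track = object   # hypothetical, simplified track record
    base: float    # base size resolved by the earlier phases
    flex: float    # flex factor in `fr`; 0 means the track is not flexible

proc findFrSize(tracks: seq[Track], spaceToFill: float): float =
  ## Returns the size of 1fr for the given tracks.
  var inflexible = newSeq[bool](tracks.len)
  for i, t in tracks:
    inflexible[i] = t.flex <= 0.0
  while true:
    # Leftover space is what remains after the inflexible tracks take their base size.
    var leftover = spaceToFill
    var flexSum = 0.0
    for i, t in tracks:
      if inflexible[i]: leftover -= t.base
      else: flexSum += t.flex
    flexSum = max(flexSum, 1.0)   # a flex factor sum below 1 is treated as 1
    let frSize = leftover / flexSum
    # A flexible track whose share would fall below its base size is
    # treated as inflexible, and the calculation restarts.
    var restarted = false
    for i, t in tracks:
      if not inflexible[i] and frSize * t.flex < t.base:
        inflexible[i] = true
        restarted = true
    if not restarted:
      return frSize

# One fixed 100px track plus 1fr and 2fr in 400px of space: 1fr is 100.
doAssert findFrSize(@[Track(base: 100.0, flex: 0.0),
                      Track(base: 0.0, flex: 1.0),
                      Track(base: 0.0, flex: 2.0)], 400.0) == 100.0
```

The restart matters when content forces a flexible track to be larger than its share: with two `1fr` tracks in 400px where the first already has a base size of 250, the first pass yields 200 per `fr`, the oversized track is then treated as inflexible, and the remaining track gets 150.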

- Proper Auto Placement: The implementation now correctly handles auto-flow grid items, respecting the grid's flow direction and density:

    # Auto-flow implementation that correctly places items based on direction
    if gridTemplate.autoFlow in [grRow, grRowDense]:
      (mx, my) = (dcol, drow)
    elif gridTemplate.autoFlow in [grColumn, grColumnDense]:
      (mx, my) = (drow, dcol)
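
The axis swap above is the core of direction-aware auto placement. The following is a deliberately simplified sketch of the idea, assuming every item is 1x1 and ignoring spans and dense packing. `Flow`, `Cell`, and `autoPlace` are hypothetical names for this illustration and do not mirror the real `computeAutoFlow`.

```nim
type
  Flow = enum flowRow, flowColumn
  Cell = tuple[col, row: int]

proc autoPlace(count, trackCount: int, flow: Flow,
               occupied: seq[Cell] = @[]): seq[Cell] =
  ## Places `count` 1x1 items into the first free cells.
  ## `trackCount` is the number of tracks on the axis the cursor wraps along:
  ## columns for row flow, rows for column flow.
  var cursor = 0
  for _ in 0 ..< count:
    while true:
      let minor = cursor mod trackCount   # position along the wrapping axis
      let major = cursor div trackCount   # index of the current row or column
      let cell: Cell =
        if flow == flowRow: (col: minor, row: major)
        else: (col: major, row: minor)
      inc cursor
      if cell notin occupied:   # skip cells taken by explicitly placed items
        result.add cell
        break

# Three columns, one cell already taken by a fixed item.
let taken = @[(col: 1, row: 0)]
doAssert autoPlace(4, 3, flowRow, taken) ==
  @[(col: 0, row: 0), (col: 2, row: 0), (col: 0, row: 1), (col: 1, row: 1)]
```

Switching `flow` to `flowColumn` reuses the same cursor logic and only swaps which coordinate advances first, which is the same trick as assigning `(mx, my)` from either `(dcol, drow)` or `(drow, dcol)`.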

- Calculating minimum and maximum function constraints: Proper handling of minimum and maximum "functions", where previously I'd tried to calculate them ad hoc without tracking the minimum or maximum space of each track.
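
Tracking a minimum and a maximum per track is what makes `minmax()` tractable. As a minimal sketch, assuming each track carries a base size (its minimum) and a finite growth limit (its maximum), free space can be handed out in equal shares, freezing a track once it reaches its limit. `SizedTrack` and `distributeFreeSpace` are hypothetical names for this illustration, not the implementation's own.

```nim
type
  SizedTrack = object
    base: float    # current size, starts at the track's minimum
    limit: float   # growth limit, the track's maximum (assumed finite here)

proc distributeFreeSpace(tracks: var seq[SizedTrack], freeSpace: float) =
  ## Grows tracks equally until the free space is used up or all are frozen.
  var remaining = freeSpace
  while remaining > 1e-9:
    var growable: seq[int]
    for i, t in tracks:
      if t.base < t.limit: growable.add i
    if growable.len == 0: break          # every track is at its limit
    let share = remaining / float(growable.len)
    for i in growable:
      let room = tracks[i].limit - tracks[i].base
      if share >= room:
        tracks[i].base = tracks[i].limit # frozen at its growth limit
        remaining -= room
      else:
        tracks[i].base += share
        remaining -= share

# minmax(100, 300), minmax(50, 120), and a fixed 80 track with 200 of free space.
var demo = @[SizedTrack(base: 100.0, limit: 300.0),
             SizedTrack(base: 50.0, limit: 120.0),
             SizedTrack(base: 80.0, limit: 80.0)]
distributeFreeSpace(demo, 200.0)
doAssert demo[0].base == 230.0 and demo[1].base == 120.0 and demo[2].base == 80.0
```

In the example, the second track freezes at 120 after the first round, and the 30 units it could not absorb go to the first track in the next round.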

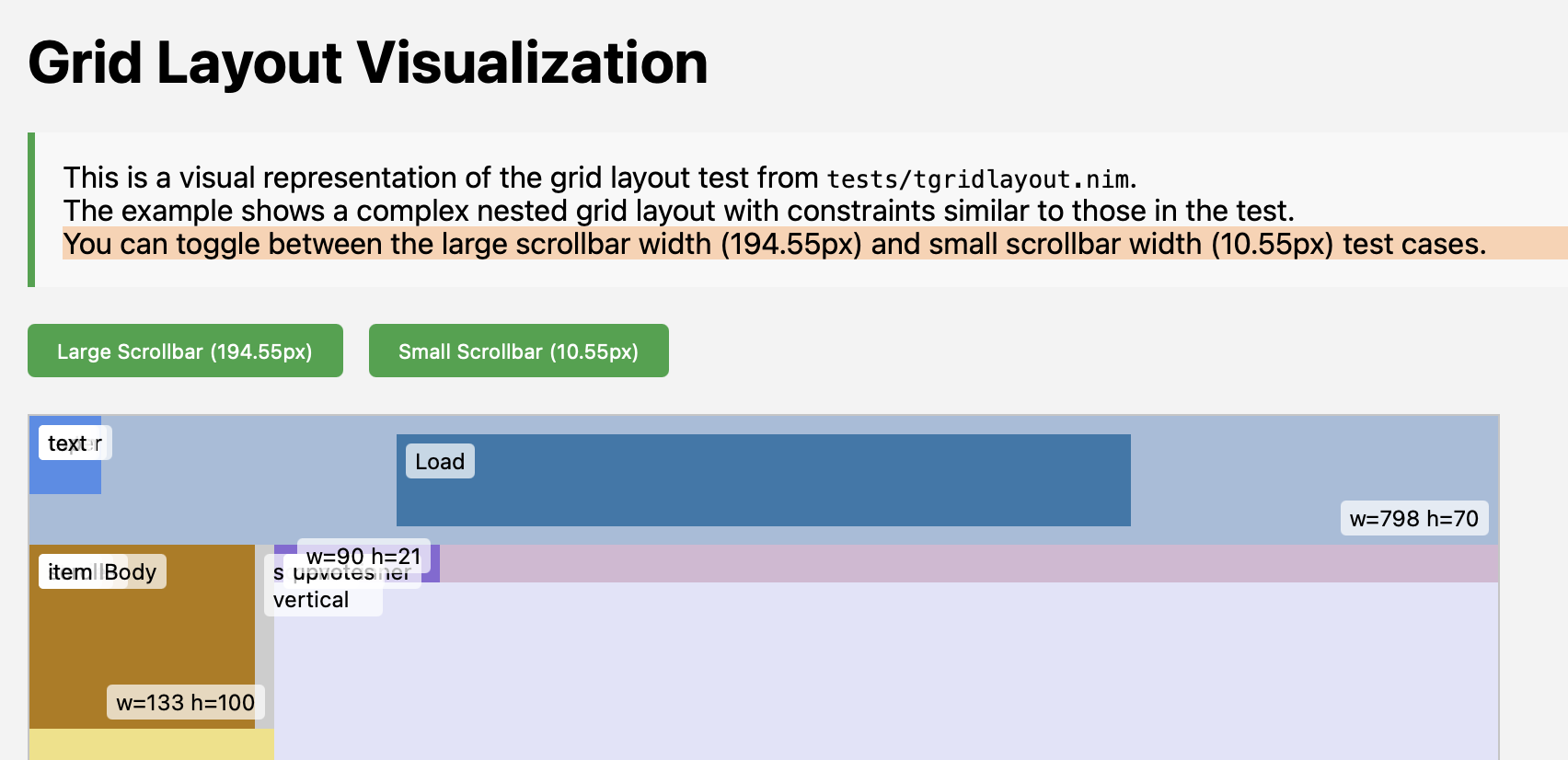

Visual Verification

To validate the implementation, I created test cases like this one, which verifies precise grid line positions:

    test "compute others":
      var gt: GridTemplate

      parseGridTemplateColumns gt, ["first"] 40'ux \
        ["second", "line2"] 50'ux \
        ["line3"] auto \
        ["col4-start"] 50'ux \
        ["five"] 40'ux ["end"]

      parseGridTemplateRows gt, ["row1-start"] 25'pp \
        ["row1-end"] 100'ux \
        ["third-line"] auto ["last-line"]

      gt.gaps[dcol] = 10.UiScalar
      gt.gaps[drow] = 10.UiScalar

      var computedSizes: array[GridDir, Table[int, ComputedTrackSize]]
      gt.computeTracks(uiBox(0, 0, 1000, 1000), computedSizes)

      # Verify precise grid line positions
      check gt.lines[dcol][0].start.float == 0.0
      check gt.lines[dcol][1].start.float == 50.0
      check gt.lines[dcol][2].start.float == 110.0 # 40 + 10(gap) + 50 + 10(gap)
      check gt.lines[dcol][3].start.float == 900.0 # auto fills remaining space
      check gt.lines[dcol][4].start.float == 960.0
      check gt.lines[dcol][5].start.float == 1000.0
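
The expected column positions in that test can be checked by hand. The sketch below redoes the arithmetic in isolation: fixed tracks keep their size, the single `auto` track takes whatever is left after fixed sizes and gaps, and each line starts where the previous track plus one gap ends. `linePositions` is a hypothetical helper written for this illustration and encodes `auto` as a negative size purely for brevity.

```nim
proc linePositions(tracks: seq[float], gap, total: float): seq[float] =
  ## `tracks` holds fixed sizes; a negative value marks an `auto` track.
  var fixedSum = 0.0
  var autoCount = 0
  for t in tracks:
    if t < 0.0: inc autoCount
    else: fixedSum += t
  let gaps = gap * float(max(tracks.len - 1, 0))
  # Auto tracks share what is left after fixed tracks and gaps.
  let autoSize =
    if autoCount > 0: max(total - fixedSum - gaps, 0.0) / float(autoCount)
    else: 0.0
  var pos = 0.0
  for i, t in tracks:
    result.add pos                       # start of track i
    pos += (if t < 0.0: autoSize else: t)
    if i < tracks.high: pos += gap       # no gap after the last track
  result.add pos                         # final line at the end of the grid

# Columns from the test: 40, 50, auto, 50, 40 with 10px gaps in 1000px.
let cols = linePositions(@[40.0, 50.0, -1.0, 50.0, 40.0], gap = 10.0, total = 1000.0)
doAssert cols == @[0.0, 50.0, 110.0, 900.0, 960.0, 1000.0]
```

Here the fixed tracks sum to 180 and the four gaps to 40, so the `auto` track is 780 wide, which is why the fourth line lands at 110 + 780 + 10 = 900.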

I've even created an HTML visualization to help me understand how these values map to a real grid.

Insights and Takeaways

What I found most fascinating was how the LLM benefited from the same debugging approach I use myself. Without the right debug logs, both the model and I struggled to locate exactly where the code was failing.

The revised implementation is much more standards-compliant and handles complex cases that my original implementation struggled with:

- Content-based sizing: Proper handling of min-content, max-content, and fit-content

- Nested and spanning items: Correct distribution of space for items that span multiple tracks

- Auto placement: Better handling of grid auto-flow direction and dense packing
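
Of the content-based sizes, `fit-content()` is the easiest to state precisely. The CSS Grid spec describes it as the formula `max(minimum, min(limit, max-content))`. A minimal sketch of that clamp follows, with `fitContent` as a hypothetical helper name and the minimum assumed to be already resolved (it is often, but not always, the min-content size).

```nim
proc fitContent(minimum, maxContent, limit: float): float =
  ## max(minimum, min(limit, max-content)), per the spec's formula.
  max(minimum, min(limit, maxContent))

doAssert fitContent(80.0, 300.0, 200.0) == 200.0   # content is capped at the limit
doAssert fitContent(80.0, 150.0, 200.0) == 150.0   # content is smaller than the limit
doAssert fitContent(250.0, 300.0, 200.0) == 250.0  # the minimum wins over the limit
```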

Like much of systems programming, CSS grid layout is deceptively complex beneath its elegant API. Implementing the spec faithfully required understanding subtle interactions between sizing algorithms, content contributions, and space distribution. Honestly, I don't care about that so much. I just want to make apps. ;)
