Code: Recurrent Neural Network

Retzam Tarle


Hello 👽,

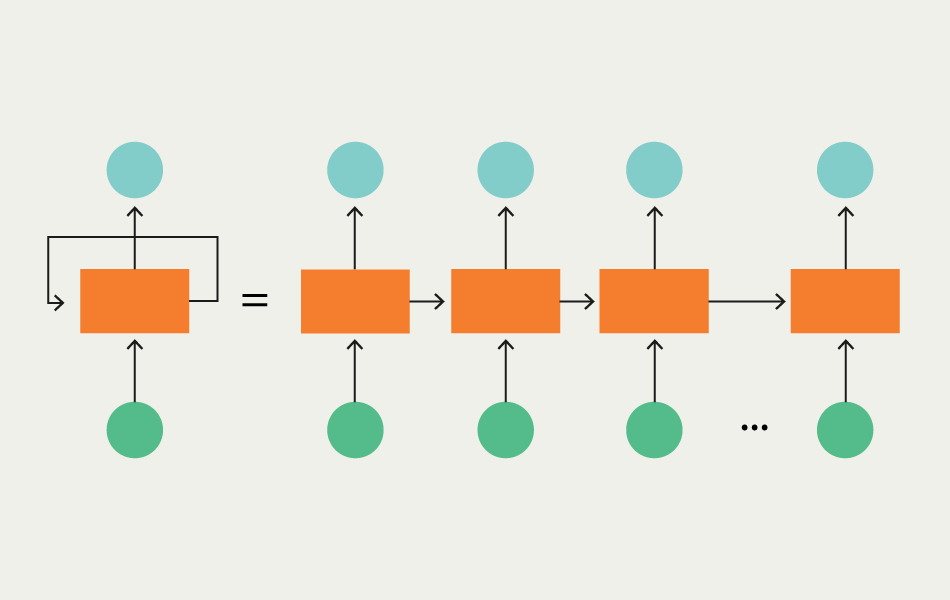

In this chapter, we'll continue with our neural network implementation phase by training a Recurrent Neural Network (RNN), which we learned about in Chapter 23.

Problem

Train a Recurrent Neural Network model to generate new song lyrics in the pattern and fashion of a popular artist.

Dataset

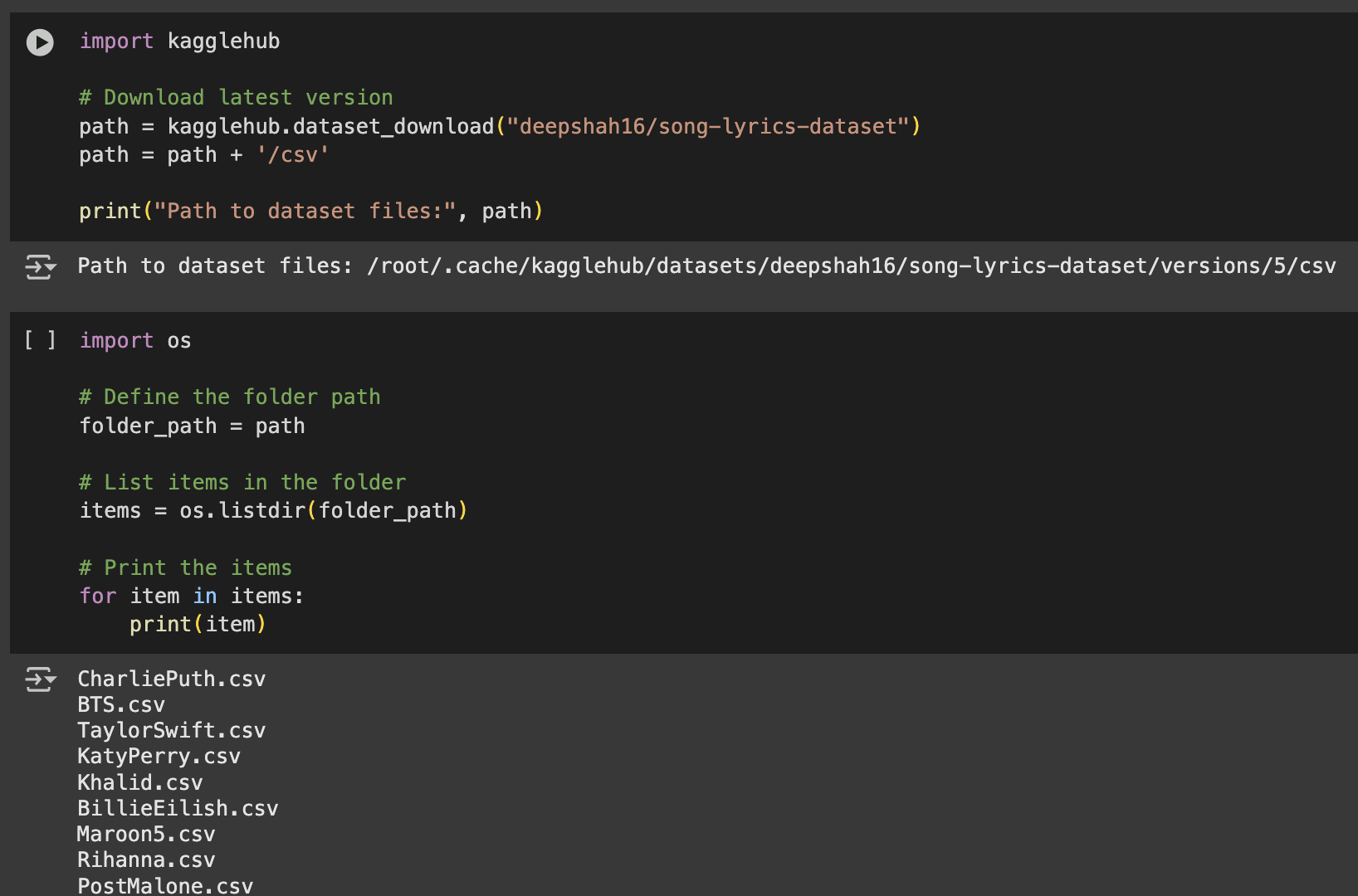

We’ll use a song lyrics dataset from Kaggle for about 20 popular artists worldwide.

Training dataset: https://www.kaggle.com/datasets/deepshah16/song-lyrics-dataset

Solution

We’ll be using Google Colab; you can create a new notebook here. You can also use any other notebook environment, such as Jupyter.

You can find the complete code PDF with the outputs of each step here.

Data preprocessing

a. Load dataset: Here, we download the training dataset from Kaggle.

We append `csv` to the path because that’s the directory where the artists’ CSV files are stored.

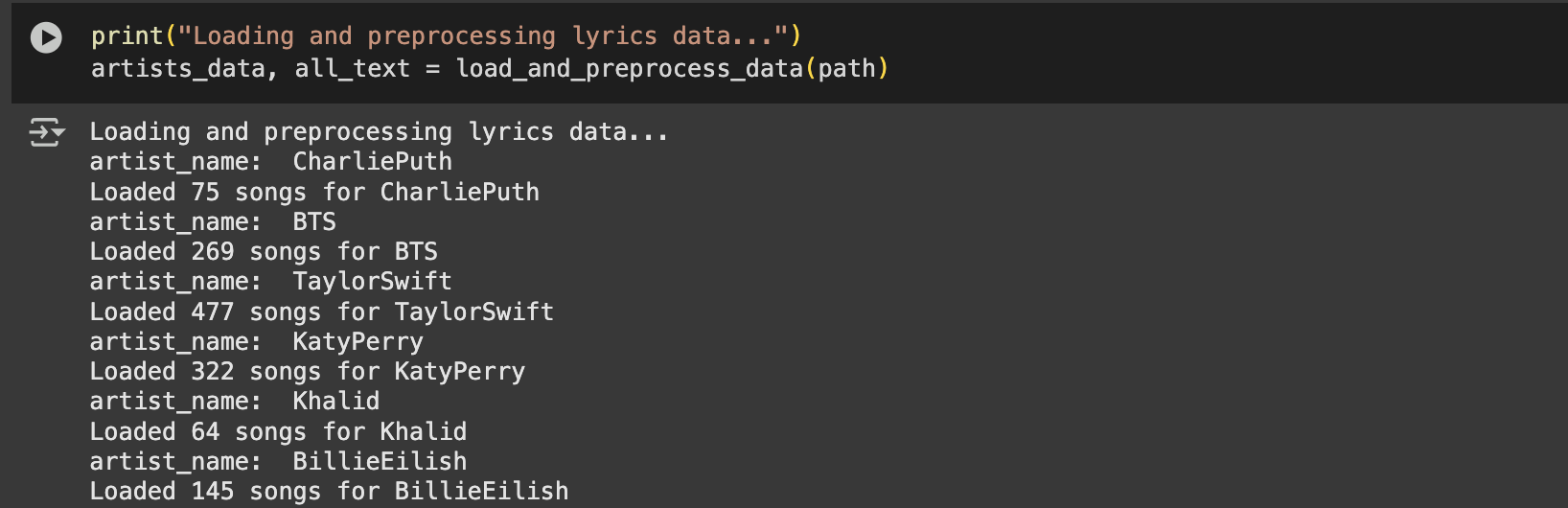
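As a rough sketch of this step, the snippet below downloads the dataset and lists the CSV files under the `csv` folder. It assumes the `kagglehub` package is installed and can reach Kaggle. The helper names `download_lyrics_dataset` and `list_artist_files` are made up for illustration and may differ from the code in the screenshot.

```python
import os
from glob import glob


def download_lyrics_dataset() -> str:
    """Download the dataset and return the path to its `csv` folder.

    Assumption: `kagglehub` is installed and can reach Kaggle.
    """
    import kagglehub  # imported lazily so the rest of the sketch runs without it

    root = kagglehub.dataset_download("deepshah16/song-lyrics-dataset")
    return os.path.join(root, "csv")  # the artists' CSV files live in this folder


def list_artist_files(csv_dir: str) -> list[str]:
    """Return the CSV files found in `csv_dir`, sorted by file name."""
    return sorted(glob(os.path.join(csv_dir, "*.csv")))
```

In a notebook, `list_artist_files(download_lyrics_dataset())` would then give one path per artist file.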

The dataset consists of 20 artists’ files in CSV format, which contain song name, title, album, lyrics, and so on. All we need are the song lyrics to train our model.

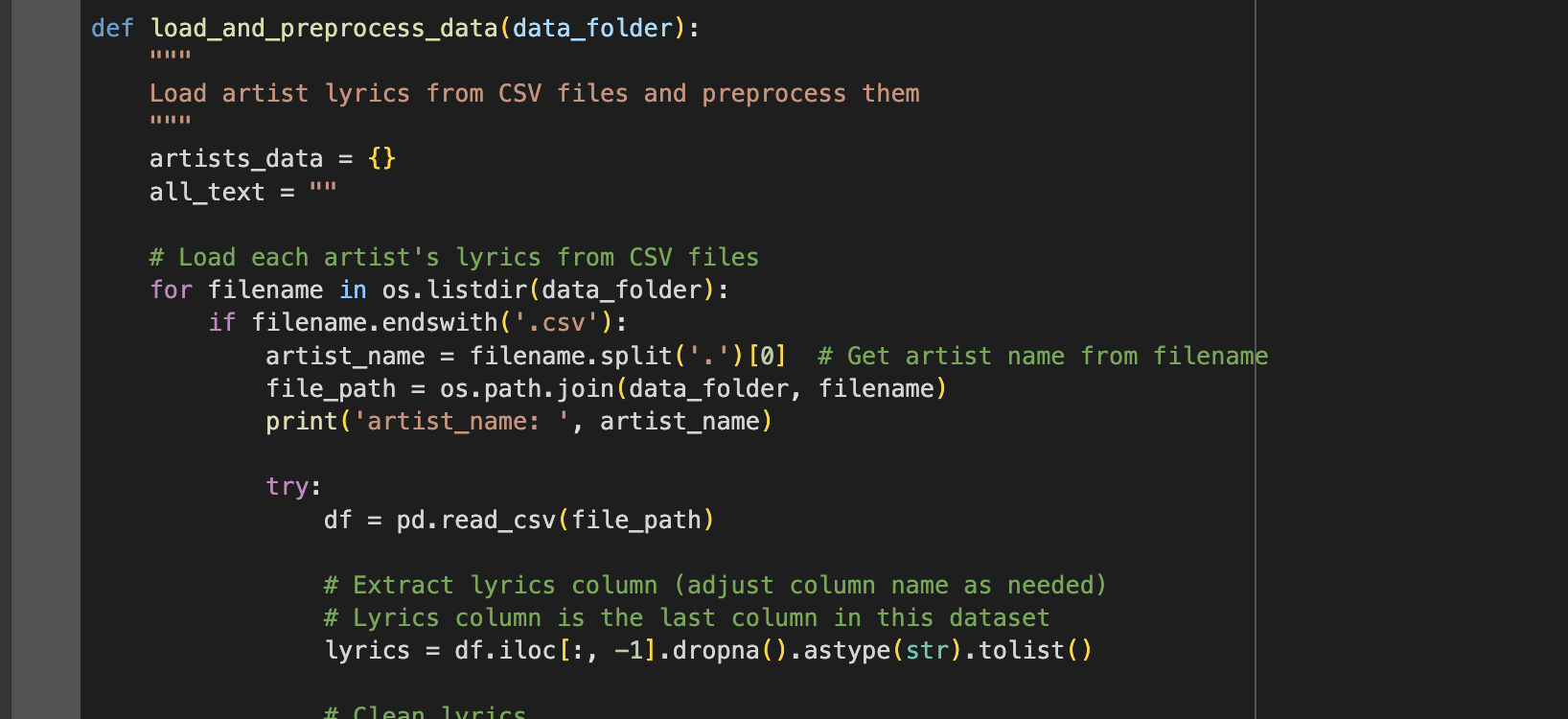

b. Preprocess lyrics data: Here, we create a function that extracts the lyrics from each artist’s file and then cleans the text.

Next, we run the method with our dataset path as input.

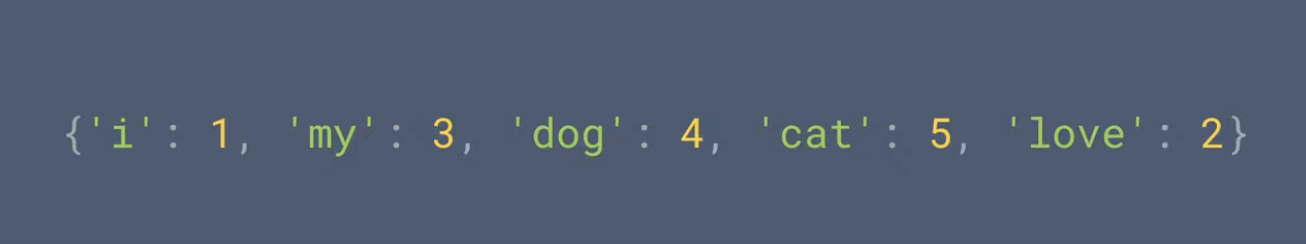
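Here is a minimal sketch of what such an extract-and-clean function could look like, using only the standard library. The column name `Lyric` is an assumption about the CSV header, so check it against your files. The cleaning rules (lowercasing, dropping bracketed tags, stripping punctuation) are one reasonable choice, not necessarily the ones used in the screenshot.

```python
import csv
import re


def clean_lyrics(text: str) -> str:
    """Lowercase, drop bracketed tags such as [Chorus], keep letters and apostrophes."""
    text = text.lower()
    text = re.sub(r"\[.*?\]", " ", text)      # section markers like [chorus]
    text = re.sub(r"[^a-z'\s]", " ", text)    # punctuation, digits, symbols
    return re.sub(r"\s+", " ", text).strip()  # collapse runs of whitespace


def load_lyrics(csv_path: str, column: str = "Lyric") -> list[str]:
    """Read one artist's CSV and return its cleaned, non-empty lyrics.

    `column` is an assumed header name; pass the real one if it differs.
    """
    lyrics = []
    with open(csv_path, newline="", encoding="utf-8") as f:
        for row in csv.DictReader(f):
            cleaned = clean_lyrics(row.get(column) or "")
            if cleaned:  # skip songs with missing or empty lyrics
                lyrics.append(cleaned)
    return lyrics
```

Calling `load_lyrics` on every artist file and concatenating the results gives the corpus used in the next steps.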

Up next is tokenization. Tokenization is the process of breaking text down into smaller units called tokens. We’ll talk about tokenization in more depth when we start learning about Large Language Models. It is an important step in Natural Language Processing because it assigns each token in a text an integer ID, and a model can only work with numbers, not raw text.

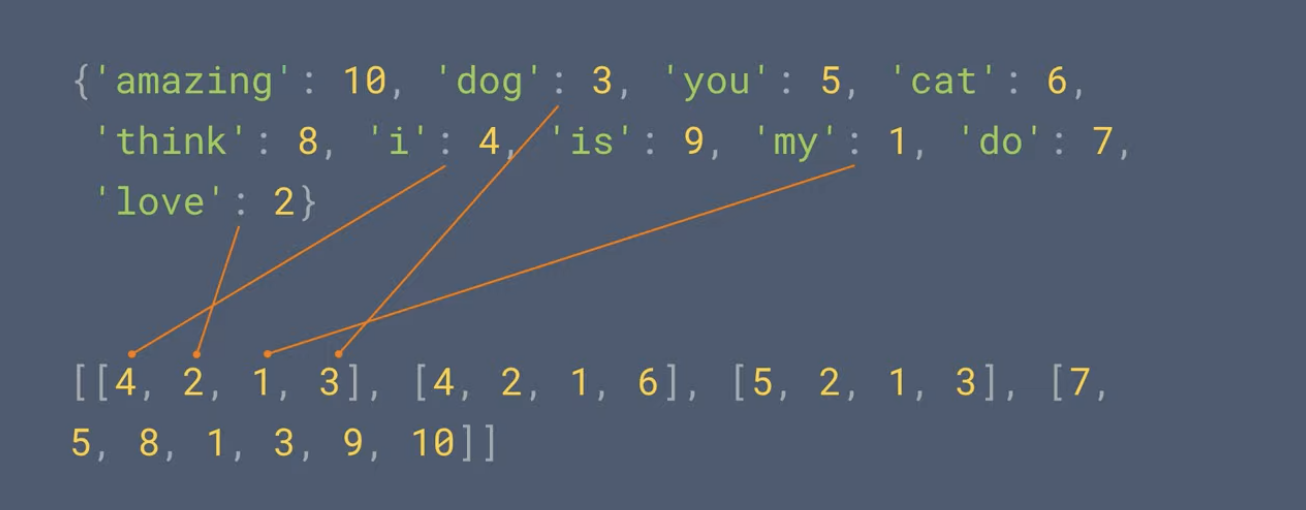
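To make the idea concrete, here is a small word-level tokenizer written in plain Python. It is a stand-in for illustration only, not the tokenizer class used in the screenshots, and the function names `build_vocab` and `encode` are hypothetical.

```python
from collections import Counter


def build_vocab(texts: list[str]) -> dict[str, int]:
    """Map each word to an integer ID; more frequent words get smaller IDs.

    ID 0 is left unused so it can serve as a padding value later.
    """
    counts = Counter(word for text in texts for word in text.split())
    # Sort by descending count, then alphabetically, so the mapping is deterministic.
    ordered = sorted(counts, key=lambda w: (-counts[w], w))
    return {word: idx for idx, word in enumerate(ordered, start=1)}


def encode(text: str, vocab: dict[str, int]) -> list[int]:
    """Convert a text to token IDs, skipping words that are not in the vocabulary."""
    return [vocab[w] for w in text.split() if w in vocab]


vocab = build_vocab(["i see fire", "fire in the sky"])
print(vocab)                            # {'fire': 1, 'i': 2, 'in': 3, 'see': 4, 'sky': 5, 'the': 6}
print(encode("i see the fire", vocab))  # [2, 4, 6, 1]
```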

Next is sequencing. Sequencing turns the tokenized text into sequences of token IDs that the model can train on. A sequence length sets the limit on how many tokens each sequence holds.

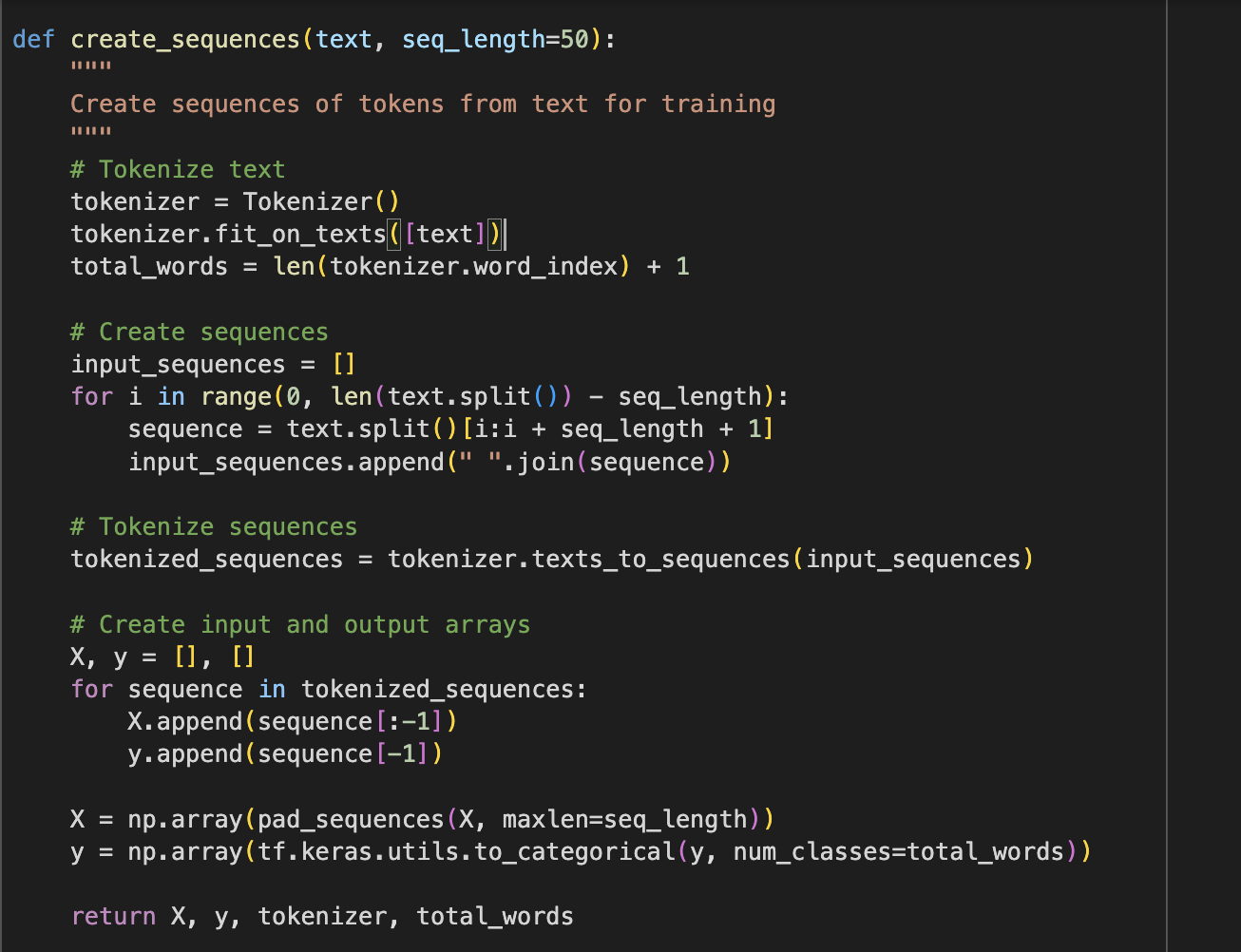
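One common way to build training sequences for next-word prediction is a sliding window: take `seq_length` token IDs as input and the ID that follows as the target. The sketch below assumes that approach; the code in the screenshots may build its sequences differently, for example with padding.

```python
def make_sequences(token_ids: list[int], seq_length: int) -> tuple[list[list[int]], list[int]]:
    """Slide a window over the IDs: `seq_length` inputs, then the next ID as the target."""
    inputs, targets = [], []
    for start in range(len(token_ids) - seq_length):
        inputs.append(token_ids[start:start + seq_length])
        targets.append(token_ids[start + seq_length])
    return inputs, targets


X, y = make_sequences([2, 4, 6, 1, 3, 5], seq_length=3)
print(X)  # [[2, 4, 6], [4, 6, 1], [6, 1, 3]]
print(y)  # [1, 3, 5]
```

A longer `seq_length` gives the model more context per example but produces fewer examples from the same text.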

Here’s the code showing both the tokenization and sequencing steps.

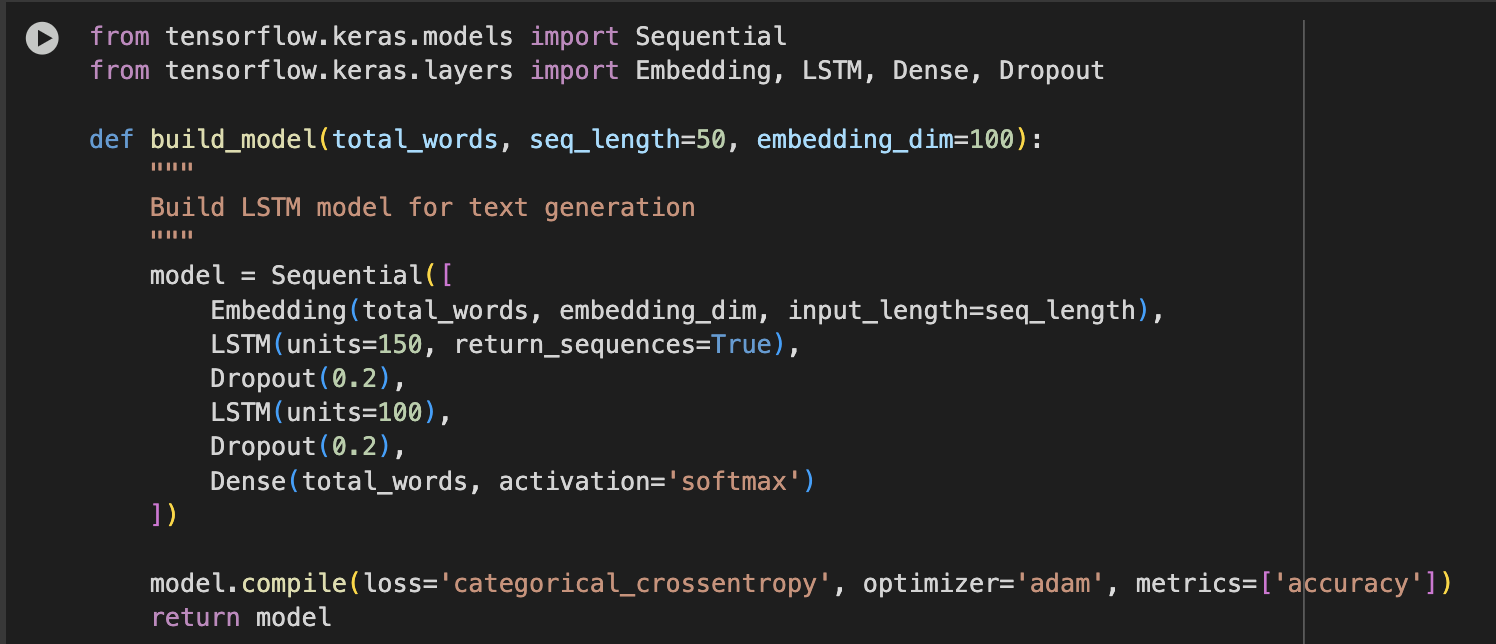

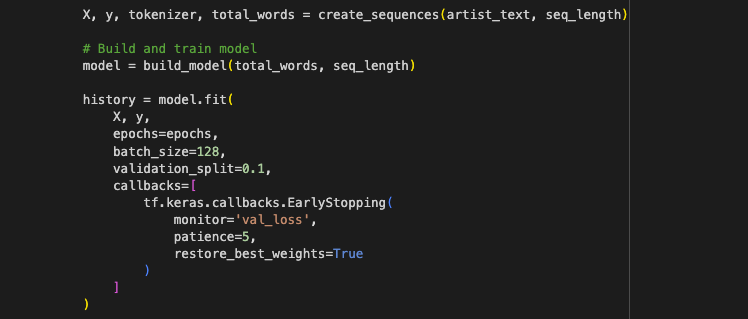

Build a Recurrent Neural Network: We’ll build an RNN model using the TensorFlow RNN model class.

We use Long Short-Term Memory (LSTM) here, a more robust variant of the RNN that handles long text sequences well. We learned about LSTM in our RNN chapter as well, and the process of building a model should already be familiar.
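For reference, a stacked LSTM for next-word prediction might be sketched with the Keras API as follows. The layer sizes of 150 and 100 units come from this chapter. The embedding size, the loss, the optimizer, and the `build_model` helper itself are assumptions made for illustration.

```python
import tensorflow as tf


def build_model(vocab_size: int, seq_length: int, embed_dim: int = 64) -> tf.keras.Model:
    """Two stacked LSTM layers followed by a softmax over the vocabulary.

    `vocab_size` should count the reserved padding ID 0 if one is used.
    `embed_dim` is an assumed value, not taken from the chapter.
    """
    model = tf.keras.Sequential([
        tf.keras.Input(shape=(seq_length,)),
        tf.keras.layers.Embedding(vocab_size, embed_dim),
        # return_sequences=True passes the full sequence on to the next LSTM
        tf.keras.layers.LSTM(150, return_sequences=True),
        tf.keras.layers.LSTM(100),
        tf.keras.layers.Dense(vocab_size, activation="softmax"),
    ])
    model.compile(
        loss="sparse_categorical_crossentropy",  # targets are integer token IDs
        optimizer="adam",
        metrics=["accuracy"],
    )
    return model
```

The final `Dense` layer outputs one probability per vocabulary entry, which is what lets the model pick the next word.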

We create an LSTM with 2 layers, 150 and 100 units each, respectively, which is the number of neurons. While

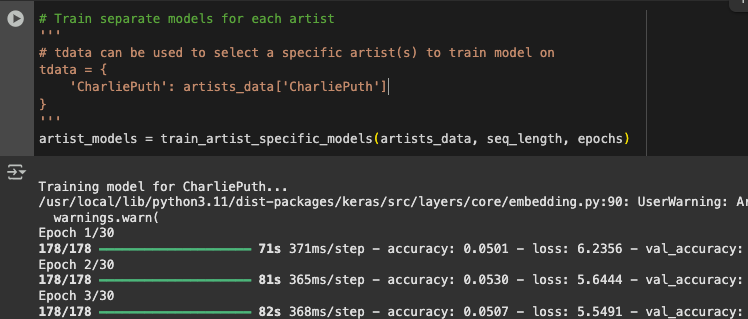

return_sequences=Trueensures that the second LSTM receives sequences instead of a single final output.Train the RNN model: We train the model now with our preprocessed data of artists’ lyrics.

When we train the model, we can see the accuracy increase and the loss decrease with each epoch.

The model’s accuracy was 0.31, which is not good, but it suffices for this demonstration. RNN models take a long time to train and need a lot of training data to reach a higher accuracy.

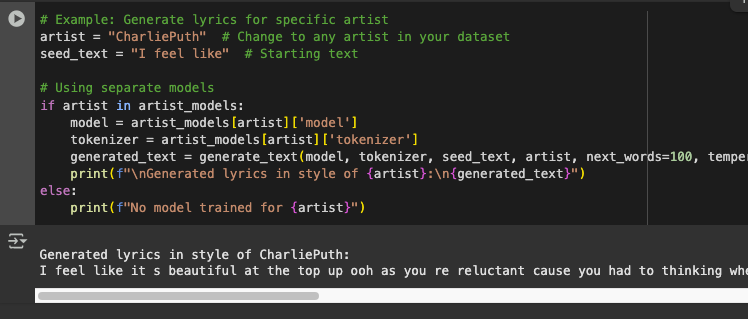
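A training run can be sketched as below. To keep the example quick and self-contained, it uses random toy data and a much smaller model than the one in this chapter, so the numbers it prints mean nothing. In the real notebook, `X` and `y` would be the sequences built from the lyrics, and the epoch count and batch size shown here are assumptions.

```python
import numpy as np
import tensorflow as tf

# Toy stand-in data (assumption): 200 random sequences of 5 token IDs each.
vocab_size, seq_length = 20, 5
rng = np.random.default_rng(0)
X = rng.integers(1, vocab_size, size=(200, seq_length))
y = rng.integers(1, vocab_size, size=(200,))

# Deliberately tiny model so the sketch runs in seconds.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(seq_length,)),
    tf.keras.layers.Embedding(vocab_size, 8),
    tf.keras.layers.LSTM(16, return_sequences=True),
    tf.keras.layers.LSTM(8),
    tf.keras.layers.Dense(vocab_size, activation="softmax"),
])
model.compile(loss="sparse_categorical_crossentropy", optimizer="adam", metrics=["accuracy"])

# fit() returns a History object with one loss/accuracy value per epoch.
history = model.fit(X, y, epochs=3, batch_size=32, verbose=0)

for epoch, (loss, acc) in enumerate(
    zip(history.history["loss"], history.history["accuracy"]), start=1
):
    print(f"epoch {epoch}: loss={loss:.3f} accuracy={acc:.3f}")
```

Watching these per-epoch values is how you see whether the loss is still falling or the model has stopped improving.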

Deploy model: Our model is ready. Let us try it out by asking it to generate some lyrics for us that sound like Ed Sheeran.
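Generating lyrics comes down to repeatedly predicting the next token and appending it to the text. The sketch below shows that loop with temperature sampling. The `generate` function and its `next_token_probs` callback are hypothetical names. With a trained Keras model, the callback could wrap `model.predict`, assuming the context is first padded to the model’s input length.

```python
import numpy as np


def generate(seed_ids, next_token_probs, num_tokens, seq_length, temperature=1.0, rng=None):
    """Extend `seed_ids` by `num_tokens` sampled token IDs.

    `next_token_probs(context)` is a hypothetical callback that returns a
    probability for every token ID, given the most recent IDs.
    """
    rng = rng or np.random.default_rng()
    ids = list(seed_ids)
    for _ in range(num_tokens):
        context = ids[-seq_length:]  # only the last `seq_length` IDs are used
        probs = np.asarray(next_token_probs(context), dtype=np.float64)
        # Lower temperature sharpens the distribution; higher flattens it.
        logits = np.log(probs + 1e-9) / temperature
        weights = np.exp(logits - logits.max())
        weights /= weights.sum()
        ids.append(int(rng.choice(len(weights), p=weights)))
    return ids
```

Mapping the returned IDs back to words with the inverse of the tokenizer’s vocabulary gives the generated lyrics. A seed taken from one artist’s songs nudges the output toward that artist’s phrasing.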

That’ll be all for Recurrent Neural Networks. As we can see, an RNN can generate text in a specific style, which was one of the applications we mentioned in our RNN chapter. Try it out on your own with other datasets, and use it to solve other problems such as sentiment analysis.

In our next chapter, we’ll train a Generative Adversarial Network (GAN).

See ya 👽

